A Systematic Survey of Prompt Engineering in LLMs: Techniques and Applications

Introduction

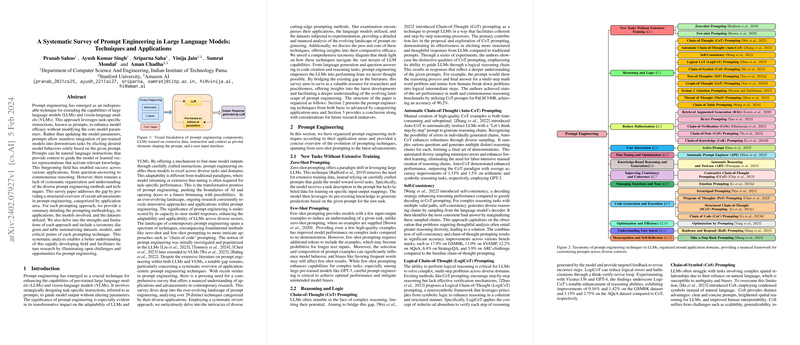

Prompt engineering has become a key technique for adapting pre-trained large language models (LLMs) and vision-language models (VLMs) to a broad range of tasks without modifying their underlying parameters. This paper surveys the field, tracing the evolution, methods, and applications of prompt engineering from zero-shot and few-shot prompting to complex reasoning methods. It responds to the need for systematic organization in a fast-growing field and aims to guide future research by identifying both current strengths and open gaps.

Overview of Prompt Engineering Techniques

The taxonomy introduced in this survey categorizes prompt engineering techniques into several critical areas, emphasizing their application domains and contributions to advancing LLM capabilities.

New Tasks Without Extensive Training

- Zero-shot prompting relies on knowledge the model already acquired during pre-training to tackle a task from an instruction alone, with no task-specific examples. Few-shot prompting adds a small number of input-output examples to the prompt. Both are foundational techniques that extend a model's applicability without significant data or computational overhead.
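The difference between the two prompt styles can be sketched in a few lines of Python. This is a minimal illustration, not a format prescribed by the survey: the function names, the `Input:`/`Output:` labels, and the sentiment task are all assumptions chosen for the example.

```python
from typing import List, Tuple


def zero_shot_prompt(instruction: str, query: str) -> str:
    """Instruction plus the input only; no worked examples."""
    return f"{instruction}\n\nInput: {query}\nOutput:"


def few_shot_prompt(instruction: str,
                    examples: List[Tuple[str, str]],
                    query: str) -> str:
    """Same instruction, preceded by a few input/output demonstrations."""
    demos = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{demos}\n\nInput: {query}\nOutput:"


if __name__ == "__main__":
    # Hypothetical task and examples, used only for illustration.
    task = "Classify the sentiment as positive or negative."
    shots = [("I loved it.", "positive"), ("Terrible service.", "negative")]
    print(zero_shot_prompt(task, "The food was great."))
    print("---")
    print(few_shot_prompt(task, shots, "The food was great."))
```

Either string would then be sent to a model unchanged. The only difference is whether demonstrations precede the query.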

Reasoning and Logic

- Advanced techniques like Chain-of-Thought (CoT) prompting guide models to generate step-by-step reasoning for complex problem-solving tasks. Automatic Chain-of-Thought (Auto-CoT) refines this idea by generating the reasoning demonstrations automatically, and Self-Consistency samples several diverse reasoning paths and selects the most consistent final answer, improving performance and reliability.
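A rough sketch of how a CoT prompt and a Self-Consistency vote might fit together is shown below. The model call itself is omitted: `sampled` is a hand-written stand-in for several completions drawn from a model at non-zero temperature, and the "The answer is N" convention used by `extract_answer` is an assumption of this example, not something the survey specifies.

```python
import re
from collections import Counter
from typing import List, Optional


def cot_prompt(question: str) -> str:
    """Zero-shot CoT: append a cue that elicits step-by-step reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final numeric answer out of one reasoning chain."""
    match = re.search(r"answer is\s*(-?\d+(?:\.\d+)?)", completion,
                      flags=re.IGNORECASE)
    return match.group(1) if match else None


def self_consistent_answer(completions: List[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled chains."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    # Hand-written stand-ins for sampled model outputs (hypothetical).
    sampled = [
        "There are 3 boxes with 4 pens each, so 3 * 4 = 12. The answer is 12.",
        "4 + 4 + 4 = 12. The answer is 12.",
        "3 + 4 = 7. The answer is 7.",
    ]
    print(cot_prompt("How many pens are in 3 boxes of 4 pens?"))
    print(self_consistent_answer(sampled))  # prints 12
```

The vote discards the one faulty chain because two of the three chains agree on the same final answer.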

Reducing Hallucination

- Techniques such as Retrieval Augmented Generation (RAG) and Chain-of-Verification (CoVe) aim to curb the issue of hallucination in outputs by augmenting prompts with retrieval mechanisms and verification steps, leading to more accurate and reliable responses.
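The retrieval half of this idea can be illustrated with a toy example. The sketch below ranks passages by simple word overlap with the question and places the top passages in the prompt. Production RAG systems typically use dense embeddings and a vector index instead. The function names, prompt wording, and sample documents are assumptions made for illustration, and the verification steps of CoVe are not covered here.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for a real text encoder."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)),
                    reverse=True)
    return ranked[:k]


def rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Augment the question with retrieved passages as grounding context."""
    passages = retrieve(query, documents, k)
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical mini-corpus, used only for illustration.
    docs = [
        "The Eiffel Tower is in Paris.",
        "Bananas are rich in potassium.",
        "Paris is the capital of France.",
    ]
    print(rag_prompt("Where is the Eiffel Tower?", docs, k=2))
```

Instructing the model to answer only from the supplied context is what ties the response to retrievable evidence rather than to the model's parametric memory.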

Code Generation and Execution

- The survey explores the domain of code generation, highlighting approaches like Scratchpad Prompting, Structured Chain-of-Thought (SCoT), and Chain-of-Code (CoC), which aim to improve precision and logical flow in code-related tasks.

Managing Emotions and Tone

- The survey also covers a newer area, managing emotion and tone through prompting, which shows that the approach extends beyond technical applications to human-like understanding and generation of content.

Theoretical and Practical Implications

This survey not only underscores the practical successes achieved across various tasks, including language generation, reasoning, and code execution, but also explores the theoretical understanding of how different prompt engineering methodologies can influence model behavior. It highlights the dual benefit of prompt engineering: enhancing model performance while providing insights into model cognition and decision-making processes.

Future Directions in Prompt Engineering

The comprehensive analysis in this survey pinpoints several future directions, including the exploration of meta-learning approaches for prompt optimization, the development of hybrid models combining different prompting techniques, and the imperative need for addressing ethical concerns. The ongoing efforts to mitigate biases, enhance factual accuracy, and improve the interpretability of models through advanced prompt engineering methodologies are critical focal points for future research.

Conclusion

This survey serves as a resource for researchers and practitioners exploring prompt engineering and lays out a roadmap for future investigations. By systematically organizing and analyzing existing techniques and their applications, it highlights the potential of prompt engineering for harnessing the capabilities of LLMs and VLMs, and it advocates a balanced approach to the field's development, with an eye toward ethical and responsible AI use.