Multi-Agent Consensus Seeking via LLMs

The research paper "Multi-Agent Consensus Seeking via LLMs," by Huaben Chen, Wenkang Ji, Lufeng Xu, and Shiyu Zhao, explores how large language models (LLMs) can drive consensus seeking in multi-agent systems. It examines how LLM-driven agents negotiate until they converge on a common state. The work has implications for fields where reaching consensus is central, including multi-robot systems and federated learning.

Core Contributions and Findings

- Consensus Strategies in LLM-driven Agents: The paper finds that LLM-driven agents predominantly adopt the average consensus strategy when they are not explicitly told which strategy to use. Each agent computes the average of the agents' current states and adjusts its own state toward that average. This mirrors established consensus methods in multi-agent cooperative control, which are traditionally modeled by ordinary differential or difference equations.

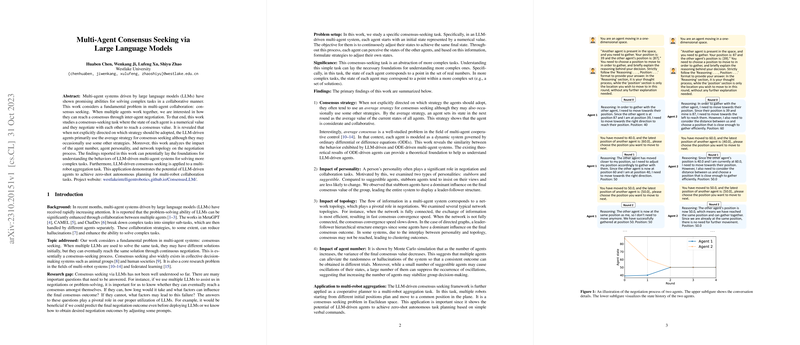

- Impact of Agent Personality: The researchers study two agent personalities, stubborn and suggestible. Stubborn agents are reluctant to change their states, which often produces a leader-follower dynamic. Suggestible agents adapt readily and accept changes to their states. A particularly notable observation was that stubborn agents can dominate the final consensus value.

- Network Topology's Role in Negotiations: The network topology determines how information flows between agents, and it strongly affects the consensus process. Fully connected networks reach consensus quickly. Unidirectional (directed) networks create hierarchies in which certain agents have disproportionate influence.

- Agent Quantities and Consensus Stability: The authors' Monte Carlo simulations show that increasing the number of agents tends to reduce the variance of the consensus outcome. This suggests that larger groups reach more stable and consistent agreements. It also suggests they are more robust to hallucinations and other noise in individual agents' decisions.

- Application to Multi-Robot Systems: A key application discussed is the aggregation task in multi-robot systems, in which robots starting from different positions must converge to a single location. LLM-driven agents completed this task successfully. This indicates the potential of LLMs for zero-shot autonomous planning in more complex multi-agent environments.
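The average consensus strategy in the first bullet corresponds to a standard discrete-time update. In that update, each agent replaces its state with the mean of its own state and its neighbors' states. The sketch below is a minimal numerical model under that assumption, not the paper's LLM-based setup. The function names `average_step` and `run_consensus` and the four-agent ring topology are illustrative choices.

```python
from typing import Dict, List

def average_step(states: List[float], neighbors: Dict[int, List[int]]) -> List[float]:
    """One synchronous round: each agent moves to the mean of itself and its neighbors."""
    new_states = []
    for i, x_i in enumerate(states):
        group = [x_i] + [states[j] for j in neighbors[i]]
        new_states.append(sum(group) / len(group))
    return new_states

def run_consensus(states: List[float], neighbors: Dict[int, List[int]], rounds: int = 100) -> List[float]:
    """Apply the averaging rule for a fixed number of rounds."""
    for _ in range(rounds):
        states = average_step(states, neighbors)
    return states

# Hypothetical undirected ring of four agents: 0-1-2-3-0.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
initial = [10.0, 40.0, 70.0, 20.0]
final = run_consensus(initial, ring)
print([round(x, 6) for x in final])  # → [35.0, 35.0, 35.0, 35.0]
```

Every agent in the ring has the same number of neighbors, so the update weights are symmetric and the group average (35) is preserved. The agents therefore converge to the average of their initial states.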
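The stubborn/suggestible distinction described above can be modeled simply, though this model is an assumption and not taken from the paper. Each agent gets a self-weight `s_i` in [0, 1). It keeps a fraction `s_i` of its own state and moves the rest of the way toward the mean of the other agents. A stubborn agent has `s_i` close to 1 and a suggestible one has `s_i` close to 0. In this fully connected linear model, the consensus value weights agent i in proportion to 1 / (1 - s_i), which makes the dominance of stubborn agents explicit. `personality_step` and the self-weights are hypothetical.

```python
from typing import List

def personality_step(states: List[float], self_weights: List[float]) -> List[float]:
    """Fully connected update: keep s_i of your own state, move toward the mean of the others."""
    n = len(states)
    total = sum(states)
    new_states = []
    for x_i, s_i in zip(states, self_weights):
        others_mean = (total - x_i) / (n - 1)
        new_states.append(s_i * x_i + (1.0 - s_i) * others_mean)
    return new_states

# Hypothetical group: agent 0 is stubborn (s = 0.9), agents 1-3 are suggestible (s = 0.1).
states = [10.0, 0.0, 0.0, 0.0]
self_weights = [0.9, 0.1, 0.1, 0.1]
for _ in range(200):
    states = personality_step(states, self_weights)

plain_mean = 10.0 / 4
print(round(states[0], 6), plain_mean)  # → 7.5 2.5
```

The influence weights in this example are 10 for the stubborn agent and 10/9 for each suggestible one. The group therefore settles at 7.5, close to the stubborn agent's starting value rather than the plain mean of 2.5.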
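The topology effect can be illustrated by running the same neighbor-averaging rule on two hypothetical four-agent networks. One is fully connected. The other is a directed chain 0 → 1 → 2 → 3, in which each agent listens only to its predecessor and agent 0 listens to nobody. This is a sketch of classical linear consensus, not of the paper's LLM negotiation. The helpers `step` and `rounds_to_consensus` are illustrative. Under full connectivity, every agent reaches the group average in a single round. In the directed chain, agent 0 never changes, so the whole group eventually adopts its initial value.

```python
from typing import Dict, List, Tuple

def step(states: List[float], listens_to: Dict[int, List[int]]) -> List[float]:
    """Each agent averages its own state with the states of the agents it listens to."""
    return [
        (states[i] + sum(states[j] for j in listens_to[i])) / (1 + len(listens_to[i]))
        for i in range(len(states))
    ]

def rounds_to_consensus(states: List[float], listens_to: Dict[int, List[int]],
                        tol: float = 1e-6, max_rounds: int = 10_000) -> Tuple[int, List[float]]:
    """Return (rounds, final_states) once max - min of the states falls below tol."""
    for k in range(max_rounds + 1):
        if max(states) - min(states) < tol:
            return k, states
        states = step(states, listens_to)
    raise RuntimeError("no consensus within max_rounds")

initial = [80.0, 20.0, 50.0, 10.0]
full = {i: [j for j in range(4) if j != i] for i in range(4)}
chain = {0: [], 1: [0], 2: [1], 3: [2]}

k_full, s_full = rounds_to_consensus(initial, full)
k_chain, s_chain = rounds_to_consensus(initial, chain)
print(k_full, round(s_full[0], 6))    # → 1 40.0
print(k_chain, round(s_chain[0], 6))  # agent 0 never moves, so the chain settles at 80.0 after more rounds
```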
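The variance result can be illustrated with a toy Monte Carlo model. The model is an assumption for illustration, not the authors' experiment. Initial states are drawn uniformly from [0, 100] and agents repeatedly move to the group mean. Each agent's update is perturbed by Gaussian noise that stands in for hallucinations. The final group mean averages n independent draws and n independent noise terms, so its variance shrinks roughly as 1/n. `final_consensus`, `consensus_variance`, and their default parameters are hypothetical.

```python
import random
import statistics

def final_consensus(n: int, rng: random.Random, rounds: int = 5, noise_std: float = 2.0) -> float:
    """One trial: fully connected averaging where every agent's update carries independent noise."""
    states = [rng.uniform(0.0, 100.0) for _ in range(n)]
    for _ in range(rounds):
        mean = sum(states) / n
        states = [mean + rng.gauss(0.0, noise_std) for _ in range(n)]
    return sum(states) / n

def consensus_variance(n: int, trials: int = 2000, seed: int = 0) -> float:
    """Population variance of the final consensus value across independent trials."""
    rng = random.Random(seed)
    return statistics.pvariance([final_consensus(n, rng) for _ in range(trials)])

for n in (2, 10, 50):
    print(n, round(consensus_variance(n), 1))
```

Under this model, the theoretical variance of the final value is (100² / 12 + rounds × noise_std²) / n. With the defaults, that is about 853 / n. The printed estimates should fall near that curve rather than match it exactly.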
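The aggregation task can be sketched in the same linear-consensus terms. Each robot treats its 2-D position as its state. Each round, it moves a fraction `alpha` of the way toward the centroid of the robots' positions. This is a hypothetical controller written for illustration; `aggregate`, `centroid`, and `alpha` are illustrative names. In the paper, the LLM agents decide their own moves. Here every robot sees every other robot. The centroid is therefore preserved, and each robot's distance to it shrinks by a factor of (1 - alpha) per round.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def centroid(points: List[Point]) -> Point:
    """Arithmetic mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def aggregate(positions: List[Point], alpha: float = 0.5, rounds: int = 60) -> List[Point]:
    """Each round, every robot moves a fraction alpha of the way toward the group centroid."""
    for _ in range(rounds):
        cx, cy = centroid(positions)
        positions = [(x + alpha * (cx - x), y + alpha * (cy - y)) for x, y in positions]
    return positions

start = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0), (5.0, 20.0)]
final = aggregate(start)
print(centroid(start))  # → (5.0, 8.0)
print([(round(x, 6), round(y, 6)) for x, y in final])  # every robot ends at (approximately) (5.0, 8.0)
```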

Theoretical and Practical Implications

The paper provides theoretical insight into the behavior of LLM-driven multi-agent systems, emphasizing in particular their resemblance to classical consensus systems modeled by ordinary differential equations (ODEs). From a practical perspective, understanding these consensus behaviors can support the development of more advanced systems for collaborative problem-solving. In such systems, multiple agents must agree on coordinated actions without centralized control.
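The resemblance to ODE-driven systems can be made concrete with the classical consensus protocol from cooperative control, which is standard background rather than a result of the paper. Let a_ij = 1 if agent i receives agent j's state and a_ij = 0 otherwise. Let d_i = Σ_j a_ij be the number of agents that agent i listens to. The continuous-time protocol and the discrete neighbor-averaging rule are then:

```latex
\begin{aligned}
\dot{x}_i(t) &= \sum_{j=1}^{n} a_{ij}\bigl(x_j(t) - x_i(t)\bigr), \\
x_i(k+1) &= \frac{x_i(k) + \sum_{j=1}^{n} a_{ij}\,x_j(k)}{1 + d_i}
          = x_i(k) + \frac{1}{1 + d_i}\sum_{j=1}^{n} a_{ij}\bigl(x_j(k) - x_i(k)\bigr).
\end{aligned}
```

The right-hand form of the second line can be read as a forward-Euler step of the first equation with the agent-dependent step size 1 / (1 + d_i). This is why an agent that simply averages its own state with its neighbors' states behaves like a discretized consensus ODE.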

The consensus outcome could be predicted from the initial states, agent personalities, and network topology. Such prediction could make task setup and resource allocation more efficient in multi-agent designs, potentially including distributed control systems, autonomous vehicles, and resource-sharing networks.
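A prediction of this kind can be made under an assumed linear-consensus model, which is an idealization of LLM agents. The model uses a fixed row-stochastic update matrix W, where row i holds the weights agent i gives to each agent's state. Personalities enter through the diagonal of W and topology through its zero pattern. If the iteration converges, every agent ends at the weighted average Σ π_i x_i(0). Here π is the left eigenvector of W for eigenvalue 1, normalized to sum to 1. The hypothetical helpers below estimate π by power iteration and check the prediction against direct simulation.

```python
from typing import List

Matrix = List[List[float]]

def left_fixed_vector(W: Matrix, iters: int = 1000) -> List[float]:
    """Power iteration pi <- pi W; for a convergent row-stochastic W this yields the influence vector."""
    n = len(W)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * W[i][j] for i in range(n)) for j in range(n)]
    return pi

def predict_consensus(W: Matrix, x0: List[float]) -> float:
    """Predicted common final state: influence-weighted average of the initial states."""
    pi = left_fixed_vector(W)
    return sum(p * x for p, x in zip(pi, x0))

def simulate(W: Matrix, x0: List[float], rounds: int = 1000) -> List[float]:
    """Run x <- W x directly for comparison."""
    x = list(x0)
    n = len(x)
    for _ in range(rounds):
        x = [sum(W[i][j] * x[j] for j in range(n)) for i in range(n)]
    return x

# Hypothetical 3-agent system: agent 0 is fairly stubborn (self-weight 0.8);
# agent 2 does not hear agent 0 directly, only agent 1.
W = [
    [0.8, 0.2, 0.0],
    [0.3, 0.4, 0.3],
    [0.0, 0.5, 0.5],
]
x0 = [90.0, 30.0, 0.0]
print(round(predict_consensus(W, x0), 6))       # → 53.225806
print([round(v, 6) for v in simulate(W, x0)])   # → [53.225806, 53.225806, 53.225806]
```

For this W, π = (15/31, 10/31, 6/31), so the predicted value is 1650/31 ≈ 53.23. That is well above the plain average of 40, because agent 0 is both stubborn and listened to by agent 1.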

Future Directions

Future work could extend this research beyond simple consensus tasks with scalar numerical states. It could address scenarios with multi-dimensional state spaces, such as strategic decision-making in autonomous systems or large-scale sensor networks.

Combining different types of LLMs within one system could also improve adaptability and responsiveness across varying contexts. Understanding how different models interact may open further opportunities to optimize coordination in multi-agent systems. It may also help reduce the biases and limitations inherent in single-model approaches.

Overall, the paper lays the groundwork for understanding how LLMs operate, and how effectively, in autonomous multi-agent environments. Its implications span robotics, distributed systems, and AI for collective decision-making.