Analysis of "ReConcile: Round-Table Conference Improves Reasoning via Consensus among Diverse LLMs"

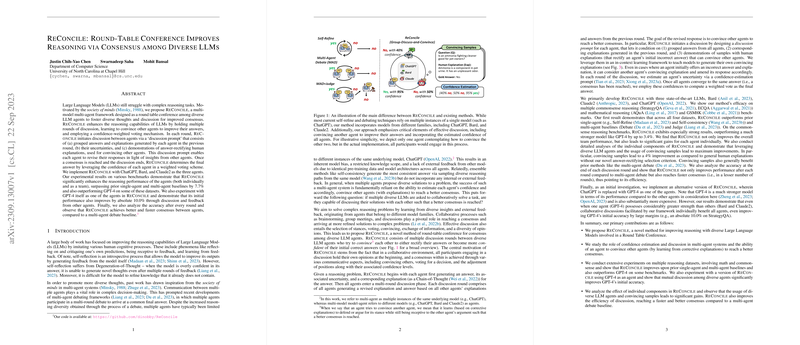

The paper presents ReConcile, a framework that aims to improve the reasoning of large language models (LLMs) through a multi-model, multi-agent consensus-building process. Drawing on the "society of minds" idea, the authors set up what amounts to a round-table conference among diverse LLMs. Over several rounds of discussion, each agent presents its answer and explanation, reads the other agents' responses, and may revise its own position, and a confidence-weighted vote decides the final answer. This design distinguishes ReConcile from prior work, which typically relies on self-refinement by a single model or on collaboration among multiple instances of the same model.
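The round-table process can be sketched as a simple control loop. This is a minimal illustration under stated assumptions, not the authors' implementation. `AgentResponse`, `run_round_table`, `has_consensus`, and the toy agents are hypothetical names. Each agent is modeled as a plain Python callable standing in for an LLM call. Every agent is assumed to see all agents' previous-round responses, and discussion is assumed to stop once all agents agree or a maximum number of rounds is reached (the default of 3 is illustrative). Aggregating the final responses into a team answer is left out here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class AgentResponse:
    answer: str
    explanation: str
    confidence: float  # self-reported confidence in [0, 1]


# Hypothetical agent interface (stands in for an LLM call): takes the
# question and the previous round's responses (None in the initial round).
Agent = Callable[[str, Optional[List[AgentResponse]]], AgentResponse]


def has_consensus(responses: List[AgentResponse]) -> bool:
    """True when every agent currently gives the same answer."""
    return len({r.answer for r in responses}) == 1


def run_round_table(agents: List[Agent], question: str,
                    max_rounds: int = 3) -> Tuple[List[AgentResponse], int]:
    """Initial responses, then discussion rounds until consensus or max_rounds.

    Returns the final responses and the number of discussion rounds run.
    """
    # Initial phase: independent responses, no peer information yet.
    responses = [agent(question, None) for agent in agents]
    rounds = 0
    # Discussion phase: every agent sees all previous-round responses
    # (an assumption of this sketch) and may revise its own answer.
    while rounds < max_rounds and not has_consensus(responses):
        previous = responses
        responses = [agent(question, previous) for agent in agents]
        rounds += 1
    return responses, rounds


# Toy agents for illustration only (hypothetical, no LLM involved).
def stubborn(answer: str, confidence: float) -> Agent:
    def agent(question, peers):
        return AgentResponse(answer, f"I am sure it is {answer}.", confidence)
    return agent


def follower(initial: str) -> Agent:
    def agent(question, peers):
        if peers is None:
            return AgentResponse(initial, "First guess.", 0.6)
        # Adopt the answer of the most confident response from last round.
        best = max(peers, key=lambda r: r.confidence)
        return AgentResponse(best.answer, "Persuaded by a peer.", best.confidence)
    return agent


final, rounds = run_round_table(
    [stubborn("yes", 0.9), follower("no"), follower("no")], "Is X true?")
print([r.answer for r in final], rounds)  # ['yes', 'yes', 'yes'] 1
```

In the toy run, the two lower-confidence agents adopt the most confident peer's answer after one round, so the loop stops early on consensus instead of running all three rounds.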

Key Components and Methodology

ReConcile proceeds in three phases: Initial Response Generation, Multi-round Discussion, and Team Answer Generation. In the Initial Response Generation phase, each LLM agent produces an answer, an explanation, and a confidence score for the given problem. In the Multi-round Discussion phase, agents read one another's explanations and revise their own responses, moving toward consensus. The framework deliberately uses diverse agents, combining models such as ChatGPT, Bard, and Claude2. Communication runs through a discussion prompt that includes the agents' past responses, their confidence estimates, and example human explanations that corrected an earlier incorrect answer. These examples act as demonstrations that help agents learn to produce convincing explanations, which in turn makes the discussion more productive. In the final phase, the individual responses are aggregated into a team answer through a weighted vote, with each agent's recalibrated confidence score serving as its weight.
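The discussion prompt and the final weighted vote described above can be sketched as follows. This is a minimal illustration, not the paper's code. `build_discussion_prompt`, `recalibrate`, and `team_answer` are hypothetical names, the prompt wording is invented, grouping responses by answer is a presentation choice of this sketch, and the recalibration thresholds are illustrative placeholders rather than the values the authors use. What it shows is the general shape: raw self-reported confidences are mapped onto a coarser scale before being used as voting weights, and the answer with the largest total weight becomes the team answer.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class AgentResponse:
    agent: str
    answer: str
    explanation: str
    confidence: float  # raw self-reported confidence in [0, 1]


def recalibrate(p: float) -> float:
    """Map a raw confidence to a voting weight.

    Illustrative step function: the thresholds are assumptions, not the
    paper's values. Small differences near the top of the scale get
    amplified into larger differences in weight.
    """
    if p >= 1.0:
        return 1.0
    if p >= 0.9:
        return 0.8
    if p >= 0.8:
        return 0.5
    if p > 0.6:
        return 0.3
    return 0.1


def team_answer(responses: List[AgentResponse]) -> Tuple[str, Dict[str, float]]:
    """Weighted vote: each answer scores the sum of its agents' weights."""
    scores: Dict[str, float] = defaultdict(float)
    for r in responses:
        scores[r.answer] += recalibrate(r.confidence)
    # max() keeps the first answer encountered when scores tie.
    best = max(scores, key=scores.get)
    return best, dict(scores)


def build_discussion_prompt(question: str, responses: List[AgentResponse],
                            convincing_samples: List[str]) -> str:
    """Assemble a discussion prompt (hypothetical wording) from the previous
    round's answers, explanations, and confidences, plus human explanations
    that corrected earlier wrong answers."""
    lines = ["Explanations that corrected a wrong answer:"]
    lines += [f"- {s}" for s in convincing_samples]
    lines += ["", f"Question: {question}"]
    grouped: Dict[str, List[AgentResponse]] = defaultdict(list)
    for r in responses:  # group agents that gave the same answer
        grouped[r.answer].append(r)
    for answer, group in grouped.items():
        lines.append(f"{len(group)} agent(s) answered '{answer}':")
        for r in group:
            lines.append(f"  [{r.agent}, confidence {r.confidence:.2f}] {r.explanation}")
    lines += ["", "Considering the responses above, give your answer, "
              "an explanation, and a confidence between 0 and 1."]
    return "\n".join(lines)


# Made-up responses for illustration.
responses = [
    AgentResponse("ChatGPT", "yes", "(explanation A)", 0.95),
    AgentResponse("Bard", "no", "(explanation B)", 0.70),
    AgentResponse("Claude2", "no", "(explanation C)", 0.75),
]
print(build_discussion_prompt("Is X true?", responses, ["(human explanation)"]))
print(team_answer(responses))  # ('yes', {'yes': 0.8, 'no': 0.6})
```

With these placeholder thresholds, one agent at 0.95 confidence outweighs two agents at 0.70 and 0.75. That is the design lever the recalibration step controls: how far a single confident agent can override a numerical majority.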

Evaluation and Results

The framework was evaluated on seven benchmarks spanning commonsense, mathematical, and logical reasoning. ReConcile outperformed existing single-agent and multi-agent baselines by up to 11.4% and even surpassed GPT-4 on some of the datasets. It is also flexible in the kinds of agents it accepts: combining API-based, open-source, and domain-specific models yielded an 8% improvement on the MATH dataset.

Implications and Future Directions

ReConcile offers a new methodological perspective: harnessing the complementary strengths of diverse LLMs for collaborative problem-solving. Its combination of confidence-weighted voting and multi-model discussion shows potential to improve the performance of the individual agents as well as the quality of the collective answer.

The findings suggest new directions for NLP and AI research, particularly where ensemble methods and collaborative learning can make systems more robust and accurate. Future work could refine the consensus mechanism and extend agent diversity to a wider range of models, which may improve robustness on domain-specific problems. The effect of using more varied kinds of explanations also merits study, particularly how they shape inter-agent communication and decision-making.

In summary, ReConcile is a promising step toward collaborative reasoning among LLMs, showing that structured discussion and consensus among diverse models can improve problem-solving.