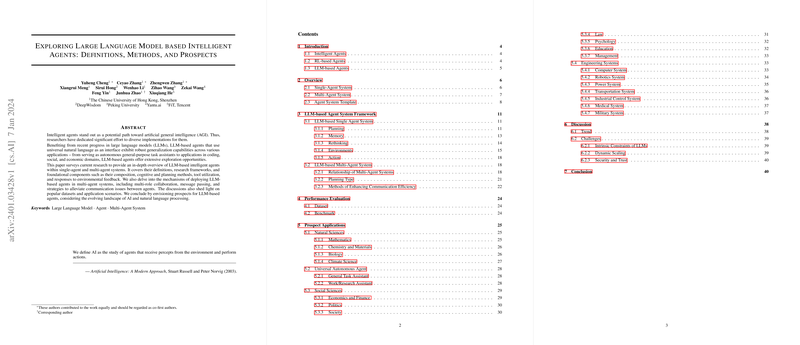

This paper provides a comprehensive survey and analysis of agents built on large language models (LLMs), covering their definitions, architecture, methodologies, evaluation protocols, applications, trends, and open challenges. Below we outline the key aspects and contributions of the work.

- Overview and Motivation: The authors motivate the paper by explaining that intelligent agents are increasingly being augmented by LLMs. These agents benefit from the natural language reasoning, planning, and generalization capabilities of LLMs, which can be leveraged in both single-agent and multi-agent settings. The paper situates this investigation within the broader trajectory from rule-based and reinforcement learning–based agents to agents that use an LLM as their “brain.”

- LLM-Based Agent System Framework: A major portion of the paper deconstructs the architecture of LLM-based agents into their constituent modules. The framework is organized into four core components:

a. Planning Capability
* The paper details how LLMs can be guided to plan and decompose tasks into actionable components.
* It reviews in-context learning techniques such as chain-of-thought (including variants like self-consistency, tree-of-thought, least-to-most, skeleton-of-thought, and graph-of-thought) that enable an LLM to generate intermediate reasoning steps.
* It also covers methods that integrate external capabilities (e.g., invoking classical planners via PDDL or using Monte Carlo methods), as well as multi-stage planning methods that divide the planning process into distinct phases.
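Self-consistency, one of the chain-of-thought variants listed above, samples several reasoning paths and keeps the final answer they most often agree on. A minimal sketch of that aggregation step, assuming the final answers have already been extracted from the sampled paths; `self_consistency` and the sample values are hypothetical, and no model API is called:

```python
from collections import Counter


def self_consistency(final_answers: list[str]) -> tuple[str, float]:
    """Majority vote over the final answers of sampled reasoning paths.

    Returns the winning answer and the fraction of paths that agreed on it.
    """
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    # Light normalization so " 42" and "42" count as the same vote.
    votes = Counter(answer.strip() for answer in final_answers)
    answer, count = votes.most_common(1)[0]
    return answer, count / len(final_answers)


# Hypothetical final answers from five sampled chain-of-thought paths.
sampled_answers = ["42", "41", "42", " 42", "40"]
print(self_consistency(sampled_answers))  # ('42', 0.6)
```

Ties resolve to the answer seen first, because `Counter.most_common` orders equal counts by first occurrence; a real system might instead draw more samples to break a tie.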

b. Memory Mechanisms
* The authors categorize memory into short-term memory (temporary context tracking) and long-term memory (persistent storage of experiences and knowledge, for example in knowledge graphs or vector databases).
* They also discuss memory retrieval techniques, that is, how agents extract relevant context or past experiences to inform current decisions, and emphasize that efficient retrieval is key to overcoming the context-length limitations of LLMs.
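The short-term/long-term split and similarity-based retrieval can be illustrated with a toy memory. This sketch assumes a bag-of-words vector in place of a learned embedding model and a plain list in place of a vector database; `AgentMemory` and its methods are hypothetical names:

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a stand-in for a learned embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term memory: a bounded buffer of recent turns, like a context window.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term memory: every entry with its vector, like a vector database.
        self.long_term: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        # Rank stored entries by cosine similarity to the query; keep the top k.
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

    def build_context(self, query: str, k: int = 1) -> list[str]:
        # Retrieved long-term entries first, then recent turns not already included.
        recalled = self.retrieve(query, k)
        return recalled + [t for t in self.short_term if t not in recalled]


memory = AgentMemory(short_term_size=2)
for note in ["user prefers metric units", "booked flight to paris",
             "weather in paris is rainy", "user asked about hotels"]:
    memory.add(note)

# The first note has fallen out of short-term memory but is still retrievable.
print(memory.build_context("which units does the user prefer"))
```

Retrieval is what lets the agent recover information that no longer fits in its bounded short-term buffer.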

c. Rethinking or Self-Reflection
* Rethinking is presented as a mechanism by which agents evaluate previous actions and outcomes, revise their plans, and improve their performance.
* The discussion spans in-context learning methods such as ReAct and Reflexion, supervised learning paradigms that use feedback on prior outputs, reinforcement learning–based methods, and modular coordination strategies for iterative self-improvement.
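Reflexion-style rethinking can be pictured as a loop: act, evaluate, turn the failure into a verbal lesson, and retry with the accumulated lessons in context. The sketch below assumes the actor and evaluator are supplied as plain functions; `reflect_and_retry`, `toy_actor`, and `toy_evaluate` are hypothetical stand-ins for LLM calls and a task-specific checker, not Reflexion's actual implementation:

```python
from typing import Callable

# Actor: (task, reflections) -> attempt. Evaluator: attempt -> (success, feedback).
Actor = Callable[[str, list[str]], str]
Evaluator = Callable[[str], tuple[bool, str]]


def reflect_and_retry(task: str, actor: Actor, evaluate: Evaluator,
                      max_trials: int = 3) -> tuple[str, list[str]]:
    reflections: list[str] = []  # verbal lessons carried into later trials
    attempt = ""
    for trial in range(1, max_trials + 1):
        attempt = actor(task, reflections)
        success, feedback = evaluate(attempt)
        if success:
            break
        # Reflexion has the LLM write this lesson; here we just store the feedback.
        reflections.append(f"Trial {trial} failed: {feedback}")
    return attempt, reflections


def toy_actor(task: str, reflections: list[str]) -> str:
    # Hypothetical actor: sorts ascending unless a past lesson mentions "descending".
    descending = any("descending" in lesson for lesson in reflections)
    return ",".join(str(n) for n in sorted([3, 1, 2], reverse=descending))


def toy_evaluate(attempt: str) -> tuple[bool, str]:
    ok = attempt == "3,2,1"
    return ok, ("" if ok else "expected descending order")


print(reflect_and_retry("sort 3,1,2 in descending order", toy_actor, toy_evaluate))
```

If every trial fails, the loop returns the last attempt together with all recorded lessons, so a caller can decide whether to escalate.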

d. Action and Environment Interaction
* The framework examines how agents interact with external environments through tool usage.
* Three aspects are discussed: (i) tool employment (leveraging external APIs, calculators, code interpreters, and similar resources), (ii) tool planning (deciding which tools to call and in what order), and (iii) tool creation (generating new tools or functionality on demand).
* The paper also surveys the environments in which these agents operate, including computer-based environments (web interaction, API calls, database queries), games, coding environments, and real-world and simulated settings.
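Tool employment and tool planning can be separated in code: a registry of callable tools, and an ordered plan that names which tool to call with which arguments. In this sketch the registry contents, the `"$i"` convention for referring to the output of step `i`, and `run_plan` are all hypothetical; tool creation is not shown:

```python
import operator
from typing import Any, Callable

# Hypothetical tool registry (tool employment). A real agent would also give the
# LLM a description and argument schema for each tool so it can choose among them.
TOOLS: dict[str, Callable[..., Any]] = {
    "add": operator.add,
    "multiply": operator.mul,
    "format_currency": lambda x: f"${x:,.2f}",
}


def resolve(arg: Any, outputs: list[Any]) -> Any:
    # "$i" refers to the output of step i; anything else is a literal argument.
    if isinstance(arg, str) and arg.startswith("$"):
        return outputs[int(arg[1:])]
    return arg


def run_plan(plan: list[tuple[str, list[Any]]]) -> list[Any]:
    """Execute an ordered tool plan, feeding earlier outputs into later steps."""
    outputs: list[Any] = []
    for name, args in plan:
        if name not in TOOLS:
            raise KeyError(f"plan uses unknown tool: {name}")
        outputs.append(TOOLS[name](*(resolve(a, outputs) for a in args)))
    return outputs


# A plan an LLM might emit for "cost of 3 items at 19.99 each plus 5 shipping".
plan = [
    ("multiply", [3, 19.99]),
    ("add", ["$0", 5]),
    ("format_currency", ["$1"]),
]
print(run_plan(plan)[-1])  # $64.97
```

A literal string argument that happens to start with `$` would be misread as a reference here; a sturdier plan format would mark references with an explicit type.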

- Multi-Agent System (MAS) Considerations: Beyond single-agent performance, the paper provides an in-depth discussion of LLM-based multi-agent systems. Key features include:

a. Inter-Agent Relationships
* Agents may interact cooperatively, competitively, in mixed modes, or in hierarchical arrangements. The survey reviews existing frameworks and taxonomies that clarify these relationships and discusses how multi-agent interaction is managed through role allocation, task decomposition, and message passing.
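A hierarchical arrangement with role allocation and message passing might look like the following sketch: a manager sends one subtask per role, and workers report back through per-agent inboxes. The roles, the fixed decomposition, and the `MessageBus` class are hypothetical; in a real system an LLM would produce the decomposition and each worker's reply:

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


class MessageBus:
    """Minimal message passing: one FIFO inbox per agent name."""

    def __init__(self) -> None:
        self.inboxes: defaultdict = defaultdict(deque)

    def send(self, msg: Message) -> None:
        self.inboxes[msg.recipient].append(msg)

    def receive(self, agent: str) -> Optional[Message]:
        inbox = self.inboxes[agent]
        return inbox.popleft() if inbox else None


def manager_assign(bus: MessageBus, subtasks: dict[str, str]) -> None:
    # Role allocation: each subtask is sent to the agent holding that role.
    for role, subtask in subtasks.items():
        bus.send(Message("manager", role, subtask))


def worker_step(bus: MessageBus, role: str) -> None:
    msg = bus.receive(role)
    if msg is not None:
        # A real worker would call an LLM here; this one just reports back.
        bus.send(Message(role, "manager", f"done: {msg.content}"))


# Hypothetical decomposition a manager LLM might produce: role -> subtask.
subtasks = {"researcher": "collect sources", "writer": "draft summary",
            "reviewer": "check claims"}
bus = MessageBus()
manager_assign(bus, subtasks)
for role in subtasks:
    worker_step(bus, role)

reports = []
while (msg := bus.receive("manager")) is not None:
    reports.append(f"{msg.sender}: {msg.content}")
print(reports)
```

Keeping communication behind a bus object makes it easy to log, filter, or rate-limit messages later without changing the agents themselves.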

b. Planning Paradigms for MAS
* Two main planning paradigms are analyzed: centralized planning with decentralized execution (a central LLM plans for all agents, which then act on their own) and decentralized planning (each agent plans independently, possibly coordinating through messages or shared memory).
* The pros and cons of each approach are discussed, along with the challenges of scaling coordination and managing communication overhead between agents.
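The trade-off between the two paradigms shows up even in a tiny example. This sketch implements decentralized planning through shared memory: each agent independently claims the first open task it can do on a hypothetical `Blackboard`. The agents, skills, and task ids are made up for illustration:

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Blackboard:
    """Hypothetical shared memory that every agent can read and write."""
    open_tasks: dict[str, str]  # task id -> required skill
    claims: dict[str, str] = field(default_factory=dict)  # task id -> agent

    def claim(self, task_id: str, agent: str) -> bool:
        if task_id in self.claims:
            return False  # another agent already committed to this task
        self.claims[task_id] = agent
        return True


def plan_locally(agent: str, skills: set[str], board: Blackboard) -> Optional[str]:
    # Decentralized planning: each agent greedily takes the first open task it can
    # do, using only its own skills and the shared board, with no central planner.
    for task_id, skill in board.open_tasks.items():
        if skill in skills and board.claim(task_id, agent):
            return task_id
    return None


board = Blackboard(open_tasks={"t1": "search", "t2": "code", "t3": "search"})
agents = {"alice": {"search"}, "bob": {"search", "code"}, "carol": {"code"}}
for name, skills in agents.items():
    plan_locally(name, skills, board)
print(board.claims)  # {'t1': 'alice', 't2': 'bob'}
```

Task `t3` is left unassigned because bob greedily took `t2`, the only task carol could do. A central planner with a global view could assign alice `t1`, bob `t3`, and carol `t2`. Centralization has its own communication cost, though: a coordinator linked to each of n agents needs n channels, whereas letting every pair of agents talk directly needs n(n - 1)/2.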

c. Enhancing Communication Efficiency
* Because effective collaboration depends on communication, the paper reviews methods for designing robust communication protocols between agents. Topics include structured messaging (drawing on agent communication languages, speech acts, and predefined protocols), mediator models that decide when agents should interact, and techniques to counteract hallucinations or inaccurate outputs during communication.
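Structured messaging can be made concrete with a small typed message format. The sketch below borrows performative names in the style of FIPA-ACL agent communication languages, but the field set, the `ALLOWED_REPLIES` protocol table, and all function names are hypothetical simplifications. It shows how a predefined protocol lets an agent reject a malformed or out-of-turn message instead of guessing at its meaning:

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum


class Performative(Enum):
    # Illustrative subset of FIPA-ACL-style speech acts.
    REQUEST = "request"
    AGREE = "agree"
    REFUSE = "refuse"
    INFORM = "inform"


# Predefined protocol: which speech acts may answer which (hypothetical subset).
ALLOWED_REPLIES = {
    Performative.REQUEST: {Performative.AGREE, Performative.REFUSE},
    Performative.AGREE: {Performative.INFORM},
}
FIELDS = {"performative", "sender", "receiver", "content", "conversation_id"}


@dataclass(frozen=True)
class AgentMessage:
    performative: Performative
    sender: str
    receiver: str
    content: str
    conversation_id: str


def encode(msg: AgentMessage) -> str:
    data = asdict(msg)
    data["performative"] = msg.performative.value
    return json.dumps(data)


def decode(raw: str) -> AgentMessage:
    # Reject malformed messages rather than letting the receiver guess.
    data = json.loads(raw)
    if set(data) != FIELDS:
        raise ValueError(f"bad fields: {sorted(set(data) ^ FIELDS)}")
    data["performative"] = Performative(data["performative"])  # ValueError if unknown
    return AgentMessage(**data)


def is_valid_reply(previous: AgentMessage, reply: AgentMessage) -> bool:
    return (reply.conversation_id == previous.conversation_id
            and reply.performative in ALLOWED_REPLIES.get(previous.performative, set()))


request = AgentMessage(Performative.REQUEST, "planner", "coder", "write unit tests", "c-1")
received = decode(encode(request))
reply = AgentMessage(Performative.INFORM, "coder", "planner", "tests written", "c-1")
print(received == request, is_valid_reply(received, reply))  # True False
```

The direct `INFORM` reply is rejected because this toy protocol requires the coder to `AGREE` (or `REFUSE`) before reporting results.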

- Performance Evaluation and Benchmarking: Because LLM-based agents seldom require further training of their underlying models, evaluation focuses on measuring competence in tool usage, planning, memory retention, and task execution across environments.

- The survey highlights various publicly available datasets and benchmarks (spanning domains such as natural language question answering, code generation, simulation, and domain-specific tasks) that researchers currently employ.

- It also emphasizes that standardized evaluation protocols remain an open need, especially for task-level assessments and domain-specific applications.
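Task-level evaluation usually comes down to aggregating per-episode records. A minimal sketch, assuming each run is logged as a hypothetical `Episode` record; the metric names are illustrative choices rather than the standardized protocol the survey calls for, and the log below is made-up data, not results from the survey:

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Episode:
    task: str
    success: bool
    steps: int        # actions taken, including tool calls
    tool_errors: int  # failed or malformed tool calls


def summarize(episodes: list[Episode]) -> dict[str, float]:
    if not episodes:
        raise ValueError("no episodes to summarize")
    wins = [e for e in episodes if e.success]
    return {
        "success_rate": len(wins) / len(episodes),
        # Efficiency is reported only over successful episodes.
        "mean_steps_on_success": mean(e.steps for e in wins) if wins else float("nan"),
        "mean_tool_errors": mean(e.tool_errors for e in episodes),
    }


def success_by_task(episodes: list[Episode]) -> dict[str, float]:
    # Task-level breakdown: task -> fraction of its episodes that succeeded.
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [successes, attempts]
    for e in episodes:
        counts[e.task][0] += int(e.success)
        counts[e.task][1] += 1
    return {task: s / n for task, (s, n) in counts.items()}


# Hypothetical evaluation log.
log = [
    Episode("web_search", True, 4, 0),
    Episode("web_search", False, 10, 2),
    Episode("sql_query", True, 6, 1),
    Episode("sql_query", True, 2, 0),
]
print(summarize(log))
print(success_by_task(log))
```

Reporting the per-task breakdown alongside the overall rate keeps one easy domain from masking failures in another.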

- Prospective Applications: The paper surveys an extensive range of potential applications, illustrating the versatility of LLM-based agents across multiple fields:

a. Natural Sciences and Mathematics
* Applications in mathematical reasoning, theorem proving, symbolic and numerical computation, and even autonomous hypothesis generation are discussed.

b. Chemistry, Materials, and Biology
* Agents that can simulate molecular reactions, automate chemical experiments, and aid in drug discovery or materials design are presented as promising directions.

c. Climate Science, Universal Autonomous Agents, and Work/Research Assistance
* The survey envisions agents that can perform complex simulations, assist in generating research and creative outputs, and interact naturally with human users.

d. Social Sciences (Economics, Politics, Law, Psychology, Education, Management)
* The potential to model social dynamics, simulate market behaviors, enhance legal decision-making, and provide adaptive educational tutoring is explored.

e. Engineering and Technical Domains
* Areas such as human–computer interaction, code generation and debugging, robotics, power system management, transportation, industrial control, and even medical and military applications are discussed.
* In each case, LLM-based reasoning combined with tool usage and memory augmentation opens avenues for both simulation and real-world control.

- Trends and Future Directions: The authors highlight several emerging trends:

- There is an increasing need for standardized benchmarks that assess not only foundational capabilities (e.g., logical reasoning, planning, memory) but also domain-specific performance.

- Continual learning, self-evaluation, and dynamic goal revision are seen as critical for agents that must perform well in ever-changing environments.

- Enhancing multimodal capabilities is another promising direction. Future agents may integrate large multimodal models that handle images, video, and speech directly, instead of first converting these inputs to text, which could improve both efficiency and task performance.

- Challenges and Open Issues: The paper does not shy away from the limitations that remain:

- Intrinsic constraints of LLMs, such as context-length limitations and the risk of hallucinations, present significant barriers to achieving consistent and reliable performance.

- Dynamic scaling in multi-agent systems poses both computational and coordination challenges, especially when the number of agents or the complexity of tasks increases.

- Security and trust issues are paramount, as agents often require permission to interact with external systems and share information. A robust permission management framework is necessary to prevent misbehavior and ensure dependable human–agent collaboration.
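A permission management layer of the kind called for above can start as a default-deny allowlist with an audit trail. This is a minimal sketch under the assumption that every tool call is routed through a single gatekeeper; `PermissionManager`, its methods, and the agent and tool names are hypothetical, and a real framework would also need authentication, scoped or time-limited grants, and human approval for sensitive actions:

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class PermissionDenied(Exception):
    """Raised when an agent calls a tool it has not been granted."""


@dataclass
class PermissionManager:
    # Default deny: an agent may use a tool only if (agent, tool) was granted.
    grants: set[tuple[str, str]] = field(default_factory=set)
    audit_log: list[str] = field(default_factory=list)

    def grant(self, agent: str, tool: str) -> None:
        self.grants.add((agent, tool))

    def revoke(self, agent: str, tool: str) -> None:
        self.grants.discard((agent, tool))

    def call(self, agent: str, tool: str, fn: Callable[..., Any], *args: Any) -> Any:
        allowed = (agent, tool) in self.grants
        # Log every attempt, allowed or not, so humans can audit agent behavior.
        self.audit_log.append(f"{'ALLOW' if allowed else 'DENY'} {agent} -> {tool}")
        if not allowed:
            raise PermissionDenied(f"{agent} may not use {tool}")
        return fn(*args)


pm = PermissionManager()
pm.grant("research_agent", "web_search")
print(pm.call("research_agent", "web_search", lambda q: f"results for {q}", "LLM agents"))
try:
    pm.call("research_agent", "send_email", lambda to: f"sent to {to}", "team@example.com")
except PermissionDenied as err:
    print("blocked:", err)
print(pm.audit_log)
```

Denying by default means a newly added tool is unusable until someone explicitly grants it, which errs on the side of safety.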

- Conclusion: The authors conclude that, while current LLM-based agents are still far from achieving full artificial general intelligence, they represent a significant step forward. By combining advanced planning, memory, and self-reflection capabilities with the natural language proficiency of LLMs, these agents open up many avenues for research and application. The survey underscores both the immense promise and the considerable hurdles that lie ahead in creating more capable, adaptive, and trustworthy intelligent agents.

Overall, this work serves as a detailed roadmap for researchers seeking to understand or contribute to the rapidly evolving field of LLM-based intelligent agents, offering insights into current methodologies, evaluation strategies, application domains, and future research directions.