- The paper presents a framework addressing the tension between creative exploration and robust safety measures in open-ended AI systems.

- It details challenges such as specification issues and the orthogonality between optimization power and intended objectives, drawing parallels with biological evolution.

- It recommends research on controllable, interpretable AI systems through simulation-based controls and evolutionary insights to ensure safe autonomous innovation.

Open-ended AI and Safety: Balancing Innovation with Control

The paper "Open Questions in Creating Safe Open-ended AI: Tensions Between Control and Creativity" explores the intricate balance between creativity and safety in the development of open-ended AI systems. As AI systems increasingly seek autonomy in their self-improvement capabilities, understanding the inherent challenges of open-ended search processes becomes crucial. This essay explores the fundamental premises of the paper, the significance of safety concerns, and the implications for AI development.

Understanding Open-ended AI

Open-ended AI refers to systems designed to explore, innovate, and evolve continuously without a predefined endpoint or goal. This paradigm contrasts sharply with traditional AI systems that operate under strict objective functions aimed at achieving specific outcomes. Inspired by biological evolution and the principles of Artificial Life (ALife), open-ended AI systems aim to discover novel solutions and generate complex behaviors through exploration and adaptation over time. The core challenge lies in harnessing this creativity while ensuring that the outcomes align with human values and safety standards.

Open-ended AI systems promise to automate and enhance processes traditionally managed by humans, such as neural architecture design and meta-learning algorithms. By embracing open-ended search, AI has the potential to surpass human capabilities and uncover solutions intractable to direct computational approaches.

Safety Concerns in Open-ended Systems

As AI systems become more autonomous, the question of safety—ensuring behaviors align with human intentions—gains prominence. Open-ended AI presents unique safety challenges due to its less predictable nature compared to directed machine learning. Problems such as specification issues, where the explicit incentives of an AI system diverge from the ideal objectives intended by its designers, become more complex. This divergence risks problematic outcomes as AI systems optimize unintended or misaligned objectives.

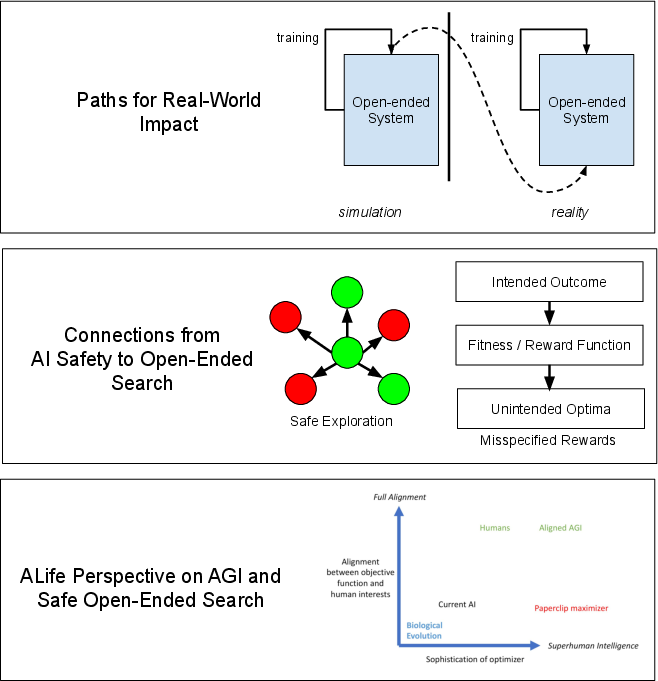

One pertinent case study is biological evolution, which, despite its blind objective of survival and reproduction, culminated in intelligent life with complex values, such as humans. This phenomenon highlights the orthogonality thesis: the ability to optimize is separate from what is being optimized. Yet, similar divergence in AI could lead to suboptimal or dangerous behaviors if not carefully managed.

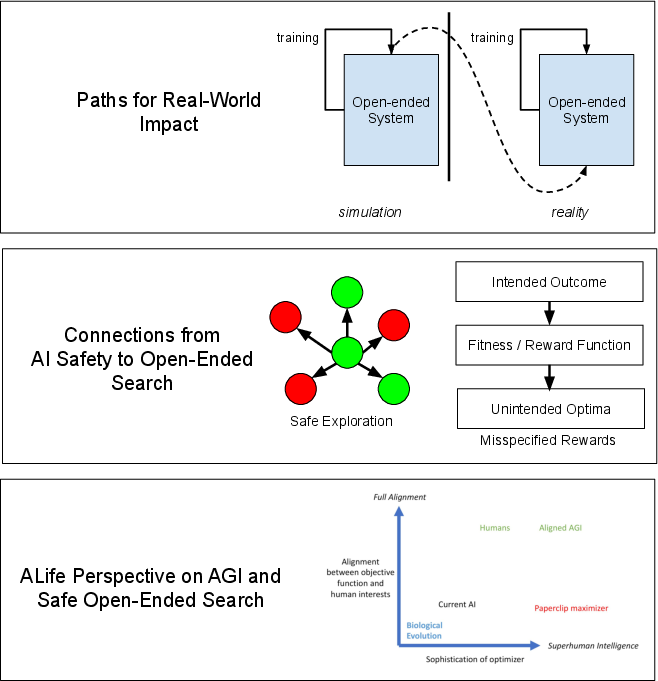

Figure 1: AI Safety for Open-ended Search. (a) To ground out concerns for safe open-ended search, first the paths for real-world impact from open-ended search are explored (most of which are expected to happen after transferring agents trained in simulation to reality). (b) With that grounding, connections are explored between open-ended search and the AI safety problems of safe exploration and misspecified objectives.

Theoretical and Practical Implications

Exploring safety in open-ended AI has profound theoretical and practical implications. Theoretically, it questions how creativity intersects with safety and how systems designed to innovate freely can be guided to avoid detrimental paths. It also challenges existing safety methodologies, often tailored to more directed forms of machine learning that presuppose clear objectives and pathways to success.

Practically, devising methods to control the outcomes of open-ended search is vital. This involves developing ways to specify goals indirectly and ensure that emergent properties align with safety constraints. Designing benchmarks that address the nuances of open-ended exploration and establishing interpretability of novel architectures are critical steps toward scalable and safe deployment of these systems.

Figure 2: Orthogonality thesis for open-ended ML. Hypothetical points are plotted for various trade-offs between sophistication of terminal goals and optimization power.

Future Directions

As the field advances, several research directions offer promise. Studying biological evolution and human cultural systems for lessons in alignment could provide insights into designing controllable open-ended systems. Moreover, computational studies focusing on predictability and controllability in simulated environments can offer safer pathways before real-world applications.

Developing automatic interpretability features for AI agents resulting from open-ended search is crucial for transparency and safety assurance. These advancements would allow AI systems to better communicate their decision-making processes and adapt their actions in alignment with human values and directives.

Conclusion

The paper underlines a significant frontier in AI research—creating safe and controllable open-ended AI systems. While offering unparalleled opportunities for innovation, these systems pose complex challenges regarding alignment and safety. Future efforts must focus on understanding and mitigating the risks associated with the freedom of open-ended exploration while leveraging its potential benefits. The discourse on safety in open-ended AI not only reflects the current technological trajectory but also shapes the ethical framework for future AI developments, underscoring the need for both creativity and caution.