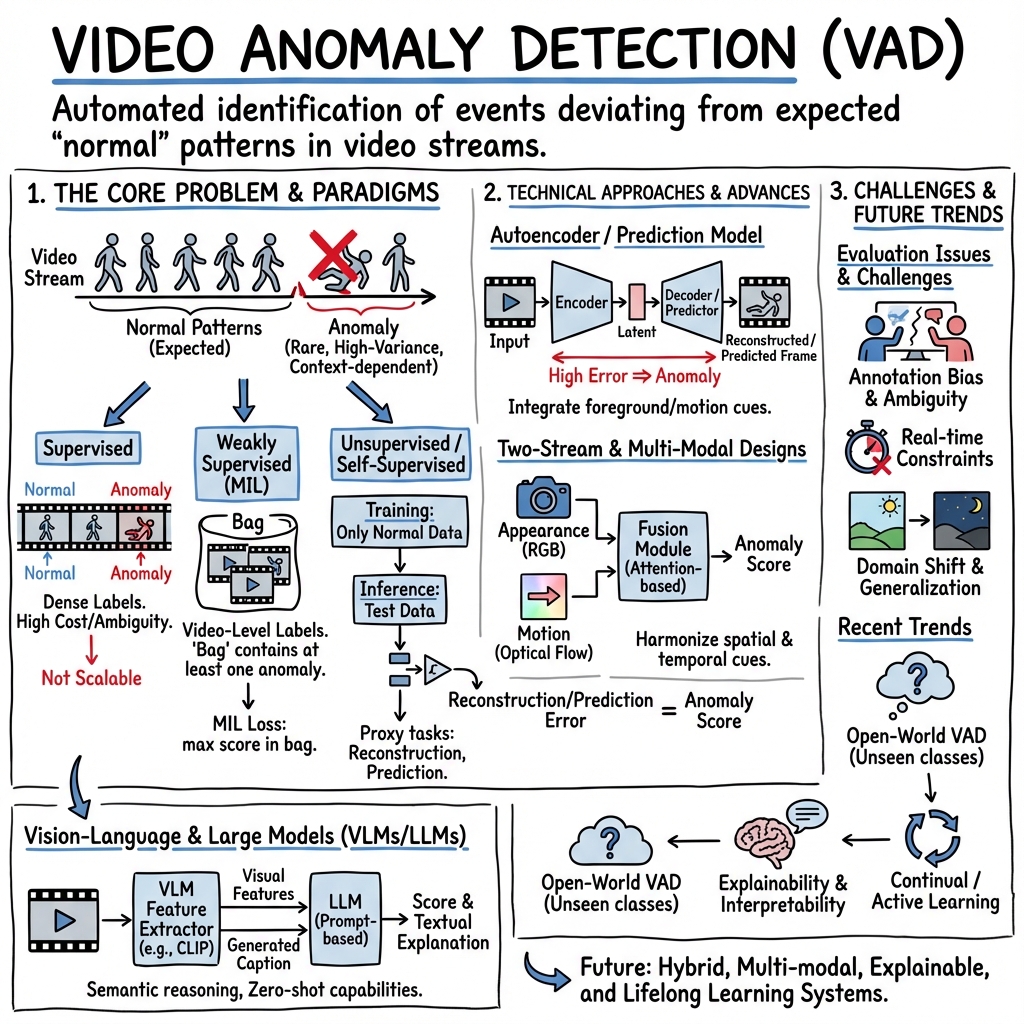

Video Anomaly Detection: Methods & Trends

- Video anomaly detection is the automated identification of deviations from normal behavior in video streams, addressing rare, high-variance events in spatial and temporal domains.

- Techniques have evolved from handcrafted features to deep learning, self-supervised, and vision–language based methods that enhance detection robustness.

- Applications span surveillance, healthcare, and environmental monitoring while focusing on real-time, explainable, and cross-domain detection challenges.

Video anomaly detection (VAD) is the automated identification of temporally or spatially localized events or behaviors in video streams that deviate from expected (“normal”) patterns. Serving as a core technology in surveillance, healthcare, environmental monitoring, and safety-critical applications, VAD addresses a fundamentally open-set recognition problem: by definition, anomalies are rare, high-variance, and context-dependent. Over the past decade, the field has moved from hand-crafted feature engineering to deep learning–based approaches, then to self-supervised and open-vocabulary methods, and most recently to the integration of vision–language models and large language models (LLMs) for enhanced robustness and interpretability.

1. Fundamental Paradigms and Supervision Schemes

The conceptual and algorithmic landscape of VAD is structured by the supervision level and the type of proxy task used during learning, reflecting the scarcity and forms of available annotations (Liu et al., 2023, Wu et al., 2024, Noghre et al., 19 Aug 2025).

Supervised Approaches rely on dense frame-level or event-level anomaly annotations. Models are typically trained to directly discriminate between normal and anomalous instances in a classification setting. Such exhaustive labeling supports high detection accuracy but is rarely feasible for large-scale or open-world deployments because of labeling costs and annotation ambiguity. The ambiguity is visible in practice: model performance and rankings shift substantially when the same dataset is scored with multi-annotator averaged metrics (Liu et al., 25 May 2025).

Weakly Supervised Approaches utilize video-level labels or coarser supervision. Multiple instance learning (MIL) is the dominant framework: a video is treated as a “bag” of segments, with the objective that at least one instance in an anomalous bag displays high anomaly confidence. MIL-style losses enforce that anomalous video instance scores are higher than those of normal videos:

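$$
\mathcal{L} = \max\Big(0,\; 1 - \max_{i} f(v_a^{i}) + \max_{i} f(v_n^{i})\Big) + \lambda_1 \sum_{i} \big(f(v_a^{i}) - f(v_a^{i+1})\big)^2 + \lambda_2 \sum_{i} f(v_a^{i}),
$$

where $f(\cdot)$ is the segment-level anomaly score, $v_a^{i}$ and $v_n^{i}$ denote the $i$-th segment of an anomalous and a normal video, and $\lambda_1$ and $\lambda_2$ are smoothness and sparsity factors (Liu et al., 2023, Wu et al., 2024).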
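As a minimal sketch of a MIL ranking objective of this kind, the function below combines a hinge term on the top-scoring segment of each bag with smoothness and sparsity penalties on the anomalous bag. The function name, the margin of 1, and the default factor values are illustrative assumptions, not values taken from the cited surveys.

```python
def mil_ranking_loss(anomalous_scores, normal_scores,
                     lambda_smooth=8e-5, lambda_sparse=8e-5):
    """MIL ranking loss for one (anomalous bag, normal bag) pair.

    Both arguments are lists of per-segment anomaly scores in [0, 1].
    The default factors are illustrative assumptions.
    """
    # Hinge term: the top anomalous segment should outscore the top
    # normal segment by a margin of 1.
    hinge = max(0.0, 1.0 - max(anomalous_scores) + max(normal_scores))
    # Smoothness: penalize abrupt score changes between adjacent segments.
    smooth = sum((a - b) ** 2
                 for a, b in zip(anomalous_scores, anomalous_scores[1:]))
    # Sparsity: anomalies should occupy only a few segments of the bag.
    sparse = sum(anomalous_scores)
    return hinge + lambda_smooth * smooth + lambda_sparse * sparse


loss = mil_ranking_loss([0.1, 0.9, 0.2], [0.1, 0.2, 0.1])
```

Only the maximum-scoring segment of each bag enters the hinge term, which is what lets video-level labels stand in for segment-level ones.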

Unsupervised and Self-Supervised Approaches assume the availability of only normal data at training. Proxy tasks, such as single-frame or multi-frame reconstruction (autoencoder or GAN-based) and future frame prediction (Schneider et al., 2022, Baradaran et al., 2023), are used to model regularities, with the main assumption that the learned model will yield high prediction error on anomalous patterns at inference:

$$
s_t = D\big(x_t, \hat{x}_t\big),
$$

with $x_t$ the observed frame, $\hat{x}_t$ its reconstruction or prediction, and $D$ a distance metric (e.g., MSE). Self-supervised pretext tasks have grown more complex, including spatio-temporal jigsaw puzzles (Wang et al., 2022), where solving patch or frame orderings encourages discriminative spatiotemporal representations.
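The scoring step can be sketched as follows, assuming frames and their reconstructions are already available as flat lists of pixel intensities. The helper names and the per-clip min-max normalization are illustrative assumptions; normalization is a common post-processing choice rather than part of the definition above.

```python
def frame_mse(frame, reconstruction):
    """Mean squared error between two equally sized flat pixel lists."""
    return sum((p - q) ** 2 for p, q in zip(frame, reconstruction)) / len(frame)


def anomaly_scores(frames, reconstructions):
    """Per-frame reconstruction error, min-max normalized to [0, 1] per clip."""
    errors = [frame_mse(f, r) for f, r in zip(frames, reconstructions)]
    lo, hi = min(errors), max(errors)
    if hi == lo:
        # Constant error across the clip: no frame stands out.
        return [0.0 for _ in errors]
    return [(e - lo) / (hi - lo) for e in errors]


frames = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
reconstructions = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
scores = anomaly_scores(frames, reconstructions)
```

The same function works for prediction-based models if `reconstructions` holds predicted next frames instead.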

Fully Unsupervised and Open-Set VAD relax constraints further, removing even the normal/abnormal distinction at training. Methods in this family use pseudo-labeling, co-training, adversarial (e.g., Generative Cooperative Learning), or clustering to estimate normal prototypes in mixed data without any reliable supervision (Liu et al., 2023).

2. Methodological Advances and Technical Frameworks

VAD methodologies reflect recurring technical motifs and increasingly sophisticated architectural design.

Autoencoder and Prediction-Based Models: Standard in unsupervised VAD, autoencoders (R-CAE, R-ViT-AE) reconstruct the input, or predictive neural networks (P-CAE, P-ViT-AE, P-ConvLSTM) synthesize the next frame from past context (Schneider et al., 2022). Integrating foreground segmentation into the loss (e.g., F-MSE and W-MSE) exploits modalities such as depth, emphasizing anomaly-prone spatiotemporal regions.
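To illustrate the general idea of foreground-prioritized losses, the sketch below weights squared errors more heavily on pixels flagged as foreground. It is a generic weighted MSE under assumed inputs (a binary mask and a hypothetical `fg_weight`), and is not the exact F-MSE or W-MSE definition from the cited work.

```python
def foreground_weighted_mse(target, prediction, foreground_mask, fg_weight=5.0):
    """Weighted MSE over flat pixel lists.

    foreground_mask holds 1 for foreground pixels and 0 for background.
    fg_weight is a hypothetical emphasis factor for foreground pixels.
    """
    weights = [fg_weight if m else 1.0 for m in foreground_mask]
    weighted_error = sum(w * (t - p) ** 2
                         for w, t, p in zip(weights, target, prediction))
    # Normalize by the total weight so the loss stays on the MSE scale.
    return weighted_error / sum(weights)


loss = foreground_weighted_mse(
    target=[0.0, 0.0, 0.0, 0.0],
    prediction=[1.0, 0.0, 0.0, 1.0],
    foreground_mask=[1, 0, 0, 0],
    fg_weight=3.0,
)
```

With `fg_weight=1.0` the function reduces to plain MSE, which makes the effect of the emphasis easy to check.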

Two-Stream and Multi-Modal Designs: Incorporating both appearance (RGB, depth) and motion (optical flow magnitude or direction) via dual-stream architectures addresses the challenge that many anomalies manifest through subtle motion or scene context deviations. Attention-based fusion modules, such as the ASTFM (Ning et al., 2023), harmonize motion and appearance channels across multiple neural network layers, with memory modules reinforcing regular patterns by storing normal prototypes.
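The role of a memory of normal prototypes can be sketched in a few lines: a feature is re-expressed as a soft combination of stored prototypes, and the distance between the feature and this memory read serves as an anomaly score. This is a simplified illustration under assumed names and a hypothetical `temperature`, not the ASTFM architecture.

```python
import math


def memory_read(feature, memory, temperature=1.0):
    """Softmax-weighted combination of prototypes, weighted by closeness."""
    logits = [-math.dist(feature, proto) / temperature for proto in memory]
    peak = max(logits)
    exps = [math.exp(l - peak) for l in logits]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * proto[d] for w, proto in zip(weights, memory))
            for d in range(len(feature))]


def memory_anomaly_score(feature, memory, temperature=1.0):
    """Distance between a feature and its reconstruction from memory."""
    return math.dist(feature, memory_read(feature, memory, temperature))


memory = [[0.0, 0.0], [10.0, 0.0]]  # hypothetical normal prototypes
near_normal = memory_anomaly_score([0.0, 0.0], memory, temperature=0.1)
far_from_normal = memory_anomaly_score([5.0, 8.0], memory, temperature=0.1)
```

Features close to a stored prototype are reproduced almost exactly, while features unlike any prototype are pulled toward the normal set and receive a large score.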

Self-Supervised Pretext Tasks: Proxy learning includes not only reconstruction or prediction, but also spatial or temporal jigsaw puzzles (Wang et al., 2022), masked frame modeling, contrastive learning, and event restoration via keyframes (Yang et al., 2023). These increase the difficulty and discriminative power of normality modeling, with detailed theoretical loss definitions and architectural choices balancing computational complexity and detection sensitivity.

Vision–Language and Large (Multimodal) Language Models: The introduction of vision–language models (VLMs) as feature extractors (e.g., CLIP, BLIP) allows joint modeling of visual and semantic textual cues, which helps in interpreting complex scene context (Wu et al., 2023, Lv et al., 2024, Zanella et al., 2024). Recent frameworks exploit LLMs and prompt engineering to perform anomaly scoring directly on video-generated captions and summaries, sometimes in a zero-shot or training-free manner (Zanella et al., 2024, Shao et al., 17 Apr 2025).
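One simple way to use a VLM as a zero-shot scorer is to compare a frame embedding against text embeddings of a "normal" and an "abnormal" prompt. The sketch below assumes those embeddings have already been produced by some image and text encoder, which is not shown; the function names and the `temperature` value are illustrative assumptions rather than any cited method.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def zero_shot_anomaly_score(frame_emb, normal_text_emb, abnormal_text_emb,
                            temperature=0.07):
    """Softmax probability assigned to the 'abnormal' prompt."""
    s_normal = cosine(frame_emb, normal_text_emb) / temperature
    s_abnormal = cosine(frame_emb, abnormal_text_emb) / temperature
    peak = max(s_normal, s_abnormal)  # subtract the max for stability
    e_normal = math.exp(s_normal - peak)
    e_abnormal = math.exp(s_abnormal - peak)
    return e_abnormal / (e_normal + e_abnormal)


# Toy 2-D embeddings standing in for real encoder outputs.
score = zero_shot_anomaly_score([0.0, 1.0], [1.0, 0.0], [0.0, 1.0])
```

In practice several prompts per class are often averaged, but the two-prompt case keeps the mechanism visible.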

Domain Adaptation and Cross-Domain Learning: Real-world deployment often requires adaptation to new environments and scene variability. Weakly supervised cross-domain learning (CDL) strategies minimize prediction bias between pseudo-labeled auxiliary data and unlabeled external videos using uncertainty estimation, with high-dimensional feature agreement used to reweight the contribution of external data during learning (Jain et al., 2024).

3. Evaluation Protocols, Metrics, and Datasets

Standard evaluation metrics include frame-level or event-level Area Under the ROC Curve (AUC), Average Precision (AP), and detection latency; confusion-matrix–based rates (TPR, FPR, etc.) are also widely reported (Liu et al., 2023, Wu et al., 2024). However, systematic analysis highlights important protocol limitations (Liu et al., 25 May 2025):

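- Annotation Bias: Traditional single-annotator metrics can be volatile due to subjective event labeling. Averaged AUC/AP over multi-annotator rounds is proposed:

$$
\overline{\mathrm{AUC}} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AUC}_i, \qquad \overline{\mathrm{AP}} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i,
$$

where $N$ is the number of independent annotation rounds and $\mathrm{AUC}_i$, $\mathrm{AP}_i$ are the scores computed against the labels of round $i$.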

- Detection Latency: Latency-aware AP (LaAP) explicitly incorporates the timing of anomaly detection into scoring, rewarding prompt prediction within abnormal intervals through time-decaying weights that favor early detection.

- Scene Overfitting: Synthetic “hard normal” benchmarks (UCF-HN, MSAD-HN), generated via diffusion models, reveal scene overfitting (where models learn background cues rather than true anomaly characteristics) through sharply increased false alarm rates on matched-scene normal test clips.
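Frame-level AUC and its multi-annotator average can be sketched as below. The pairwise AUC used here is the standard rank-based definition (the probability that a random anomalous frame outscores a random normal one); the function names are assumptions, and the quadratic loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """Frame-level ROC AUC via pairwise comparison; ties count as 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one frame of each class")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def multi_annotator_auc(label_rounds, scores):
    """Mean AUC over independent annotation rounds, plus per-round values."""
    per_round = [roc_auc(labels, scores) for labels in label_rounds]
    return sum(per_round) / len(per_round), per_round


scores = [0.1, 0.4, 0.35, 0.8]
label_rounds = [[0, 0, 1, 1], [0, 1, 1, 1]]  # two hypothetical annotators
mean_auc, per_round = multi_annotator_auc(label_rounds, scores)
```

In this toy case the same scores yield a different AUC under each annotator, which is the volatility that averaging is meant to dampen.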

Datasets include UCSD Ped1, Ped2, CUHK Avenue, ShanghaiTech, UCF-Crime, XD-Violence, and UBnormal, with varying degrees of annotation granularity, scene variance, and anomaly types. Synthetic and multimodal datasets are increasingly used to challenge models' robustness and generalization capabilities (Abdalla et al., 2024).

4. Application Domains and Challenges

VAD finds applications across three principal domains, each imposing specific technical constraints (Noghre et al., 19 Aug 2025):

- Human-Centric Contexts: Tasks include surveillance for public safety, healthcare monitoring (such as fall, seizure, or Parkinson’s episode detection), and crowded scene analysis. Key challenges are privacy, fine-grained pose/motion modeling, and rare event generalization.

- Vehicle-Centric Scenarios: Road traffic monitoring and autonomous driving demand robust detection of accidents, traffic violations, and unexpected vehicle/pedestrian interactions. Domain shift, real-time adaptation, and multi-view consistency are core problems.

- Environment-Centric Monitoring: Disaster detection (fire, flood) and environmental change tracking emphasize spatial complexity and the need for reliable scene context reasoning. Models often integrate pixel-based, motion, and audio modalities.

Across all domains, adaptive and continual learning methods are critical to maintain robustness as normal patterns shift or new anomaly types appear. Active and online learning approaches, memory modules to mitigate catastrophic forgetting, and meta-learning for rapid adaptation are actively explored (Noghre et al., 19 Aug 2025).

Open challenges include:

- Scarcity, ambiguity, and context-dependence of anomaly labels (including the definition of “anomaly” itself);

- Variability and domain shift across deployment environments;

- Resource constraints for real-time processing;

- The integration and alignment of multimodal information streams;

- Evaluation protocol mismatch between offline benchmarks and deployment scenarios.

5. Recent Trends: Large Models, Explainability, and Open-World VAD

The field has shifted toward models capable of semantic reasoning, explainability, and handling the inherently open-set nature of anomalies.

Vision–Language and Multimodal LLMs: VLMs and multimodal LLMs (MLLMs) enable open-vocabulary anomaly detection, semantic event categorization, and textual explanation of detection outcomes (Wu et al., 2023, Lv et al., 2024, Zanella et al., 2024, Ding et al., 14 Apr 2025, Shao et al., 17 Apr 2025, Cai et al., 23 Jul 2025). Strategies include:

- Prompt-based anomaly detection using pre-trained LLMs, leveraging frame- or segment-wise captions;

- Hierarchical prompting and event-aware graph segmentation (Shao et al., 17 Apr 2025);

- Hidden state probing and layer saliency analysis to extract linear anomaly-discriminative features from large models without fine-tuning (Cai et al., 23 Jul 2025);

- Integration of knowledge bases and retrieval-augmented generation (RAG) for domain-specific anomaly reasoning (Ding et al., 14 Apr 2025);

- Synthetic anomaly generation (using AIGC/diffusion technologies) to augment open-set generalizability and reduce overfitting.

Performance and Generalization: New training-free and tuning-free methods achieve competitive or superior AUC/AP versus traditional unsupervised models on challenging surveillance benchmarks (UCF-Crime, XD-Violence), with advances in temporal localization, semantic understanding, and reduced reliance on in-domain labelled data (Zanella et al., 2024, Cai et al., 23 Jul 2025).

Explainability and Interpretability: Several frameworks (e.g., those based on VLLMs with instruction-tuning or chain-of-thought reasoning) generate explicit textual explanations of detected anomalies, offering transparency in deployment-critical environments (Lv et al., 2024, Ding et al., 14 Apr 2025).

6. Technical Limitations, Evaluation, and Future Directions

While VAD research has produced a proliferation of architectures and algorithms, limitations persist.

- Annotation bias and metric instability necessitate multi-annotator, latency-aware, and scene-overfitting benchmarks as standard protocol (Liu et al., 25 May 2025).

- Open-world anomaly detection remains structurally unsolved. Pseudo-anomaly synthesis, margin learning, and flow-based generative modeling are the current leading approaches to robustly separating seen from unseen anomalies (Wu et al., 2024).

- Scalability and resource constraints hinder real-time deployment. Hybrid fast–slow detection pipelines and efficient tuning-free MLLM utilization are promising directions (Ding et al., 14 Apr 2025, Cai et al., 23 Jul 2025).

- Interpretability and semantic integration are increasingly pressing, reflecting demands in safety-critical and human-in-the-loop uses.
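The hybrid fast–slow idea from the list above can be sketched as a gate: a cheap detector scores every frame, and an expensive model is called only when the cheap score crosses a trigger. The function name, the `trigger` threshold, and the two scoring callables are hypothetical placeholders, not components of any cited system.

```python
def fast_slow_detect(frames, fast_score, slow_score, trigger=0.5):
    """Score frames with a cheap model, escalating suspicious ones.

    fast_score and slow_score are callables mapping a frame to a score
    in [0, 1]. Returns the final scores and the number of slow calls.
    """
    results = []
    slow_calls = 0
    for frame in frames:
        score = fast_score(frame)
        if score >= trigger:
            # Escalate: let the expensive model confirm or reject.
            score = slow_score(frame)
            slow_calls += 1
        results.append(score)
    return results, slow_calls


# Toy stand-ins: frames are numbers, the fast model is the identity,
# and the slow model applies a stricter decision.
scores, slow_calls = fast_slow_detect(
    frames=[0.1, 0.6, 0.9],
    fast_score=lambda f: f,
    slow_score=lambda f: 1.0 if f > 0.8 else 0.0,
)
```

The compute saved depends on how rarely the trigger fires, so the threshold trades missed anomalies against the number of calls to the slow model.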

Future research is expected to focus on:

- More sophisticated loss functions and regularization tailored for rare event detection and temporal consistency;

- Hybrid, multi-modal, and cross-domain architectures with explicit fusion and adaptation mechanisms;

- Continual, active, and meta-learning to facilitate lifelong VAD in dynamic, real-world environments;

- Taxonomically richer anomaly labeling and dataset construction, leveraging AIGC for challenging synthetic benchmarks;

- Consistent emphasis on explainability, open-vocabulary recognition, and strong performance under latency and generalization constraints.

7. Resources and Community Infrastructure

The field maintains large, widely adopted public datasets and open-source codebases (Liu et al., 2023, Wu et al., 2024). Curated resources centralize benchmarks, evaluation metrics, and libraries, facilitating reproducibility, transparency, and comparative assessment as the scope of VAD expands across an increasingly diverse set of applications and methodologies.

In summary, video anomaly detection comprises a spectrum of technical paradigms, each addressing annotation scarcity, open-set uncertainty, scene context, and generalization, within a research ecosystem that is rapidly integrating deep, self-supervised, vision–language, and training-free techniques. Core innovations include foreground-prioritized loss design, object- and event-aware pipelines, multi-modal fusion, pseudo-anomaly generation, and explainable modeling with multimodal LLMs. Persistent challenges in supervision, generalization, real-time efficiency, and interpretability continue to drive both foundational and application-specific advances in the field.