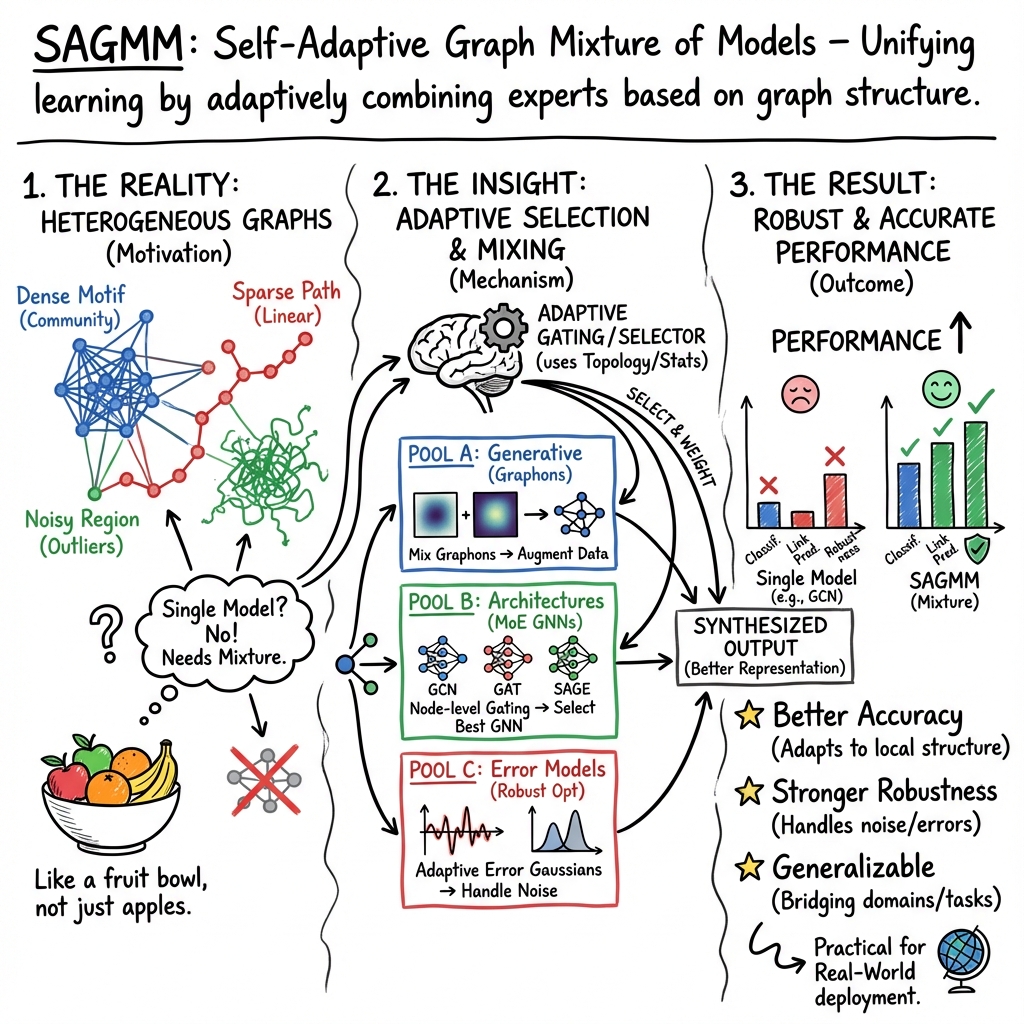

Self-Adaptive Graph Mixture Models (SAGMM)

- SAGMM is a framework that unifies graph learning, representation, and robust inference by adaptively combining multiple diverse models and experts based on graph structure.

- It employs explicit mixture modeling techniques such as graphon mixtures, motif-based clustering, and topology-aware gating to dynamically select and merge expert outputs.

- Empirical evaluations demonstrate SAGMM’s superior performance in tasks like node classification, link prediction, and robust graph optimization compared to traditional models.

Self-Adaptive Graph Mixture of Models (SAGMM) unifies graph learning, representation, and robust inference by adaptively combining multiple models or experts (at the level of generative distributions, neural architectures, or error distributions) while using graph structural properties to guide mixture assignments and model adaptation. SAGMM instances range from graphon mixture frameworks and domain-generalizing model mergers to robust graph optimization. All of them discover, select, and efficiently synthesize diverse modeling components based on graph data statistics, topological cues, or latent mixture structure.

1. Core Principles and Formal Definitions

SAGMM builds on the observation that many real-world graph datasets are best interpreted as mixtures—over generative distributions, domains, or model architectures—rather than homogeneous samples from a single source. The formalism can target different modeling regimes:

- Graphon Mixtures: A graphon provides a nonparametric limit object encoding an edge probability kernel, from which finite graphs are sampled by latent variable draws and Bernoulli edge assignments. The mixture model assumption posits that each observed graph arises from a finite mixture of graphons whose components are unknown (Azizpour et al., 4 Oct 2025).

- Mixture-of-Experts in GNNs: SAGMM maintains a heterogeneous expert pool across diverse GNN architectures (e.g., GCN, GAT, SAGE, GIN, MixHop), and assigns mixture weights to each expert per node or graph based on input-adaptive gating informed by topological descriptors (Meena et al., 17 Nov 2025).

- Gaussian Mixture in Graph Optimization: For robust inference in factor graphs, error terms are modeled as a mixture of zero-mean Gaussians, with parameters estimated adaptively through expectation–maximization jointly with the state variables (Pfeifer et al., 2018).

These frameworks share three core mechanisms: (i) explicit mixture modeling; (ii) adaptive estimation or selection of components given graph structure, features, or errors; (iii) compositional inference where outputs from mixture components are selectively aggregated for downstream tasks.
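The graphon mixture assumption can be made concrete with a minimal sampling sketch. The two graphons (`dense`, `two_block`), the mixing weights, and the graph size are illustrative assumptions, not values from the cited work; the sketch only shows the generative recipe of picking a component, drawing uniform node latents, and drawing Bernoulli edges.

```python
import random

def sample_graph(graphon, n, rng):
    """Sample an undirected n-node graph from a graphon W: [0,1]^2 -> [0,1]."""
    latents = [rng.random() for _ in range(n)]  # u_i ~ Uniform[0, 1]
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Edge (i, j) ~ Bernoulli(W(u_i, u_j)); no self-loops.
            if rng.random() < graphon(latents[i], latents[j]):
                adj[i][j] = adj[j][i] = 1
    return adj

def sample_from_mixture(graphons, weights, n, rng):
    """Pick a mixture component, then sample a graph from its graphon."""
    k = rng.choices(range(len(graphons)), weights=weights)[0]
    return k, sample_graph(graphons[k], n, rng)

# Two hypothetical mixture components (assumptions for illustration).
def dense(x, y):
    return 0.8

def two_block(x, y):
    return 0.9 if (x < 0.5) == (y < 0.5) else 0.05

rng = random.Random(0)
component, adj = sample_from_mixture([dense, two_block], [0.5, 0.5], 20, rng)
```

In practice the component index is latent; only `adj` would be observed, which is what makes the mixture estimation problem nontrivial.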

2. Mixture Estimation and Adaptive Clustering

Graphon and Motif-Based Clustering:

Given a collection of observed graphs, estimate the underlying mixture components using motif (graph moment) densities:

- Compute motif densities for a fixed motif set (e.g., all subgraphs with a given number of nodes), resulting in one density vector per graph.

- Cluster these vectors (e.g., with k-means, using a fixed number of clusters or data-adaptive selection) to recover the mixture assignment of each graph.

- For each cluster, apply a graphon estimation routine (e.g., SIGL) to obtain an estimated graphon for that component (Azizpour et al., 4 Oct 2025).
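The first two steps can be sketched on synthetic data. This is a toy illustration under stated assumptions: the motif set is reduced to edges and triangles, the graphs are Erdős–Rényi samples standing in for two mixture components, and the k-means routine uses a simple farthest-point initialization chosen here for determinism. The graphon estimation step (e.g., SIGL) is not shown.

```python
import random
from itertools import combinations

def motif_densities(adj):
    """Return [edge density, triangle density] for an adjacency matrix."""
    n = len(adj)
    edges = sum(adj[i][j] for i, j in combinations(range(n), 2))
    triangles = sum(adj[i][j] * adj[j][k] * adj[i][k]
                    for i, j, k in combinations(range(n), 3))
    return [edges / (n * (n - 1) / 2), triangles / (n * (n - 1) * (n - 2) / 6)]

def dist2(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iters=20):
    """Plain k-means with farthest-point initialization (an assumption)."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(dist2(p, c) for c in centers)))
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: dist2(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

def er_graph(n, p, rng):
    """Erdos-Renyi graph, used only to create two distinguishable populations."""
    adj = [[0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        if rng.random() < p:
            adj[i][j] = adj[j][i] = 1
    return adj

rng = random.Random(1)
graphs = [er_graph(20, 0.2, rng) for _ in range(5)] + \
         [er_graph(20, 0.7, rng) for _ in range(5)]
vectors = [motif_densities(g) for g in graphs]
labels = kmeans(vectors, k=2)  # one cluster label per graph
```

Each cluster of graphs would then be passed to a graphon estimator to obtain one estimated component per cluster.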

Domain Mixtures and Label-Conditional Generation:

In domain merging, assume the target domain lies in the convex hull of the source distributions, i.e., it is a convex combination of them with nonnegative weights that sum to one. Since only pretrained models are available, a graph generator optimizes node features and edge encoders to synthesize data representative of each domain, using batch-norm and entropy regularization (Wang et al., 4 Jun 2025).

Error Mixtures via Expectation–Maximization:

In robust sensor fusion, embed an inner EM loop for Gaussian mixture estimation over graph factor residuals, nested within an outer gradient-based solver for state estimation, yielding a bi-level adaptive mechanism for multi-modal error structure (Pfeifer et al., 2018).
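A one-dimensional toy version of this bi-level scheme may help fix ideas. It is a sketch under assumptions: the state is a single scalar observed directly, the mixture has two components with hand-picked initial values, and the outer solver is replaced by a closed-form precision-weighted mean rather than a gradient-based nonlinear least-squares step. All function names are hypothetical.

```python
import math
import random

def responsibilities(residuals, sigmas, weights):
    """E-step: posterior probability of each zero-mean component per residual."""
    resp = []
    for r in residuals:
        # The 1/sqrt(2*pi) factor cancels in the normalization below.
        dens = [w * math.exp(-0.5 * (r / s) ** 2) / s for w, s in zip(weights, sigmas)]
        total = sum(dens) or 1e-300  # guard against underflow
        resp.append([d / total for d in dens])
    return resp

def update_mixture(residuals, resp):
    """M-step: re-estimate component weights and standard deviations."""
    n, sigmas, weights = len(residuals), [], []
    for k in range(len(resp[0])):
        nk = max(sum(g[k] for g in resp), 1e-12)
        weights.append(nk / n)
        var = sum(g[k] * r * r for g, r in zip(resp, residuals)) / nk
        sigmas.append(max(math.sqrt(var), 1e-6))
    return sigmas, weights

def estimate_state(measurements, outer_iters=20, inner_iters=5):
    x = sum(measurements) / len(measurements)      # initial state guess
    sigmas, weights = [0.5, 5.0], [0.5, 0.5]       # assumed initial mixture
    for _ in range(outer_iters):
        residuals = [y - x for y in measurements]
        for _ in range(inner_iters):               # inner EM loop, state fixed
            resp = responsibilities(residuals, sigmas, weights)
            sigmas, weights = update_mixture(residuals, resp)
        resp = responsibilities(residuals, sigmas, weights)
        # Outer state update: precision-weighted mean (stand-in for the solver).
        prec = [sum(g[k] / sigmas[k] ** 2 for k in range(len(sigmas))) for g in resp]
        x = sum(p * y for p, y in zip(prec, measurements)) / sum(prec)
    return x, sigmas, weights

rng = random.Random(0)
measurements = [3.0 + rng.gauss(0, 0.1) for _ in range(200)] + \
               [3.0 + rng.gauss(0, 5.0) for _ in range(50)]
x_hat, sigmas_hat, weights_hat = estimate_state(measurements)
```

The design point is that the mixture parameters are never tuned by hand: they are re-fitted to the current residuals each time the state moves.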

3. Model Selection, Attention Gating, and Expert Pruning

Topology-Aware Attention Gating (TAAG):

Assign mixture weights over experts for each node by extracting local and global topology features (e.g., node-level structural descriptors and the Laplacian eigenvectors with the smallest eigenvalues), followed by linear projection into Q/K/V and simple global attention (Meena et al., 17 Nov 2025). A sparse mask then keeps only the experts whose gating weight exceeds a threshold. An auxiliary rule ensures every node receives at least one expert.
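The sparse masking step with its fallback can be sketched for a single node. The threshold name `tau`, the argmax fallback, and the renormalization of surviving weights are assumptions made for illustration; the source only states that a sparse mask is applied and that every node receives at least one expert.

```python
def sparse_expert_weights(gate_probs, tau):
    """Keep experts whose gate weight exceeds tau; fall back to the top expert."""
    kept = [k for k, p in enumerate(gate_probs) if p > tau]
    if not kept:
        # Assumed auxiliary rule: always keep the highest-weighted expert.
        kept = [max(range(len(gate_probs)), key=lambda k: gate_probs[k])]
    total = sum(gate_probs[k] for k in kept)
    # Renormalize so the surviving weights sum to one (an assumption).
    return [p / total if k in kept else 0.0 for k, p in enumerate(gate_probs)]

masked = sparse_expert_weights([0.5, 0.3, 0.2], tau=0.25)    # third expert dropped
fallback = sparse_expert_weights([0.2, 0.5, 0.3], tau=0.9)   # no expert passes tau
```

A hard threshold can leave a node with no experts at all, which is why some fallback rule is needed alongside it.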

Gating in Domain Mixtures:

Sparse gating is implemented by scoring each expert, Top-K selection, and a softmax over the selected experts, applied per (generated) sample. Each expert can be masked (with real-valued masks applied to a subset of its parameters, commonly the classifier head) to enable domain shift adaptation while preserving knowledge (Wang et al., 4 Jun 2025).
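A minimal sketch of Top-K gating for one sample follows. The scores and expert outputs are made-up numbers, and the function names are hypothetical; how the scores are produced and how parameter masks are learned is not covered here.

```python
import math

def top_k_gate(scores, k):
    """Softmax over the k highest-scoring experts; all others get weight 0."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    m = max(scores[i] for i in top)                      # for numerical stability
    exps = {i: math.exp(scores[i] - m) for i in top}
    z = sum(exps.values())
    return [exps[i] / z if i in exps else 0.0 for i in range(len(scores))]

def mix_outputs(gates, expert_outputs):
    """Gate-weighted sum of per-expert output vectors."""
    dim = len(expert_outputs[0])
    return [sum(g * out[d] for g, out in zip(gates, expert_outputs)) for d in range(dim)]

gates = top_k_gate([2.0, 0.0, 2.0, -1.0], k=2)
mixed = mix_outputs(gates, [[1.0, 0.0], [9.0, 9.0], [3.0, 2.0], [9.0, 9.0]])
```

Because unselected experts receive exactly zero weight, their forward passes can be skipped entirely in a real implementation.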

Adaptive Pruning:

Periodically compute importance scores for each expert; those below a threshold are pruned to reduce computation. Auxiliary losses (one for expert load balancing, one for gating diversity) promote robust specialization without mode collapse (Meena et al., 17 Nov 2025).
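As a rough illustration, importance can be taken as the mean gate weight an expert receives over a batch, and load imbalance can be penalized by the squared coefficient of variation of those importances. Both definitions are assumptions chosen for this sketch (the latter is a common choice in the mixture-of-experts literature) and may differ from the scores and losses used in the cited work.

```python
def expert_importance(gate_matrix):
    """Mean gate weight per expert; rows are nodes, columns are experts."""
    n = len(gate_matrix)
    return [sum(col) / n for col in zip(*gate_matrix)]

def load_balance_penalty(importance):
    """Squared coefficient of variation; zero when all experts are used equally."""
    mean = sum(importance) / len(importance)
    var = sum((v - mean) ** 2 for v in importance) / len(importance)
    return var / (mean ** 2)

def surviving_experts(importance, threshold):
    """Indices of experts whose importance meets the pruning threshold."""
    return [k for k, v in enumerate(importance) if v >= threshold]

gate_matrix = [[0.70, 0.30, 0.00],
               [0.60, 0.40, 0.00],
               [0.50, 0.45, 0.05]]
importance = expert_importance(gate_matrix)
kept = surviving_experts(importance, threshold=0.05)   # third expert is pruned
penalty = load_balance_penalty(importance)
```

The two mechanisms pull in opposite directions: the penalty discourages collapse onto a few experts during training, while pruning removes experts that remain unused despite it.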

Training-Efficient Variant:

In SAGMM-PE, all expert GNNs are pretrained and frozen; only gating and task heads are updated during downstream adaptation.

4. Augmentation, Contrastive Learning, and Robust Inference

Graphon-Mixture-Aware Mixup (GMAM):

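Generate graph augmentations by interpolating in the space of estimated graphons: form a convex combination of two estimated graphons with a mixing coefficient between 0 and 1, then sample new graphs using fresh node latents and Bernoulli edge assignments. Labels are mixed with the same coefficient. The extension to higher-order mixtures is immediate: use a convex combination of more than two graphons (Azizpour et al., 4 Oct 2025).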
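The interpolation can be sketched with step-function graphons stored as square matrices. The representation, the example matrices `w_a` and `w_b`, the one-hot labels, and the coefficient `lam` are all assumptions for illustration; both graphons are assumed to share the same resolution.

```python
import random

def mix_graphons(w_a, w_b, lam):
    """Entrywise convex combination of two step-function graphons."""
    return [[lam * a + (1 - lam) * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(w_a, w_b)]

def mix_labels(y_a, y_b, lam):
    """Convex combination of two label vectors with the same coefficient."""
    return [lam * a + (1 - lam) * b for a, b in zip(y_a, y_b)]

def sample_graph(step_graphon, n, rng):
    """Sample a graph with fresh node latents and Bernoulli edges."""
    blocks = len(step_graphon)
    cells = [min(int(rng.random() * blocks), blocks - 1) for _ in range(n)]
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < step_graphon[cells[i]][cells[j]]:
                adj[i][j] = adj[j][i] = 1
    return adj

w_a = [[0.9, 0.1], [0.1, 0.9]]   # hypothetical estimated graphon, class 0
w_b = [[0.2, 0.5], [0.5, 0.2]]   # hypothetical estimated graphon, class 1
lam = 0.3
w_mix = mix_graphons(w_a, w_b, lam)
y_mix = mix_labels([1.0, 0.0], [0.0, 1.0], lam)
augmented = sample_graph(w_mix, 12, random.Random(0))
```

Mixing at the graphon level sidesteps the node-alignment problem that arises when interpolating adjacency matrices of different graphs directly.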

Model-Aware Graph Contrastive Learning (MGCL):

Following clustering, augment edges using the estimated cluster-specific graphon, and in the InfoNCE loss restrict negative pairs to samples from distinct clusters. This reduces "false negatives" and preserves the true mixture component structure.
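The restriction on negatives can be sketched as a small variant of InfoNCE over two augmented views. Cosine similarity, the temperature value, and the toy embeddings are assumptions for illustration; only the rule that negatives must come from a different cluster is taken from the description above.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def cluster_aware_info_nce(z1, z2, clusters, temperature=0.5):
    """InfoNCE where negatives for sample i come only from other clusters."""
    losses = []
    for i in range(len(z1)):
        pos = math.exp(cosine(z1[i], z2[i]) / temperature)
        neg = sum(math.exp(cosine(z1[i], z2[j]) / temperature)
                  for j in range(len(z2)) if clusters[j] != clusters[i])
        losses.append(-math.log(pos / (pos + neg)))
    return sum(losses) / len(losses)

# Toy embeddings of four graphs under two augmented views.
z1 = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
z2 = [[0.95, 0.05], [0.85, 0.15], [0.05, 0.95], [0.15, 0.85]]

restricted = cluster_aware_info_nce(z1, z2, clusters=[0, 0, 1, 1])
# With every sample in its own cluster, all other samples act as negatives.
unrestricted = cluster_aware_info_nce(z1, z2, clusters=[0, 1, 2, 3])
```

In this toy case the unrestricted loss pushes apart graphs 0 and 1 even though they come from the same component, which is the false-negative effect the restriction avoids.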

Robust Factor-Graph Optimization:

In dynamic sensor fusion, assign each residual a likelihood under a Gaussian mixture, updating both mixture parameters (via EM) and state variables (via nonlinear least-squares on summed mixture log-likelihood) at every time step. Robustness and self-tuning are achieved through this tight interleaving (Pfeifer et al., 2018).

5. Theoretical Guarantees

Graphon Cut Distance and Motif Density Bounds:

A theoretical guarantee establishes that, for every motif with a fixed number of vertices and edges, the gap between the motif densities of sampled graphs is controlled by the cut distance between their generating graphons, with the bound holding with high probability (Azizpour et al., 4 Oct 2025). This links structural proximity in graphon space with observable motif statistics, enabling principled mixture component estimation.

Domain Generalization Upper Bound:

Given the source domains and their pretrained optimal models, the error of the mixture on the target domain is upper-bounded by the sum of cross-validation errors between pairs of sub-learners, formalized using a divergence measure between domains (Wang et al., 4 Jun 2025).

EM Convergence in Robust Optimization:

For fixed states, the inner EM loops over residuals converge to locally optimal mixture parameters. The outer blockwise alternation for state updates converges stably if state shifts are smooth and the sliding window scheme is narrow (Pfeifer et al., 2018).

6. Empirical Evaluation Across Regimes

Node and Graph Classification

- On ogbn-products (2.4M nodes): SAGMM achieves 82.91% versus GCN 75.54%, GAT 76.77%, prior mixtures ≤68.77% (Meena et al., 17 Nov 2025).

- On TUDatasets for graph classification: GMAM yields highest accuracy on 5/7 datasets, with up to +1.3% absolute improvement over strong augmentation baselines (Azizpour et al., 4 Oct 2025).

Link Prediction and Molecular Regression

- On ogbl-ddi: SAGMM achieves 74.20 HITS@20 vs. GraphSAGE 53.90, DA-MoE 45.58.

- On regression tasks (molfreesolv): SAGMM RMSE 2.15 (vs. 2.23 for GAT), indicating robust performance for molecular property prediction (Meena et al., 17 Nov 2025).

Robust Graph Optimization

- On UWB indoor localization and urban GNSS: Adaptive sum-mixture SAGMM yields up to 50% reduction in absolute trajectory error (ATE) relative to statically parameterized mixtures, and outperforms all tested robust estimators with only a modest (2×) computation overhead (Pfeifer et al., 2018).

Ablations and Sensitivity

- Ablations confirm the necessity of expert gating, masking, and diversity regularization for effective mixture selection. Removing mixture-adaptive components, such as gating, leads to sharp performance drops (Wang et al., 4 Jun 2025, Meena et al., 17 Nov 2025).

- Expert pruning reduces parameter count and inference time by 10–20% with negligible accuracy loss (Meena et al., 17 Nov 2025).

7. Extensions, Generalizations, and Practical Applications

Toward Fully Adaptive, Multi-Granular SAGMM:

- Motif-based clustering can be replaced or supplemented by spectral signatures, node embeddings, or dynamic, data-driven selection (e.g., silhouette analysis), enabling self-adaptive discovery of mixtures as data distributions shift (Azizpour et al., 4 Oct 2025).

- Mixtures can leverage higher-order motifs, weighted mixtures, and stochastic gating mechanisms to address rare or underrepresented graph populations.

- Domain merging can operate in source-free settings, reconstructing synthetic data through label-conditional graph generation when only model weights are available, and merging heterogeneous experts via sparse gating and adaptive masking for out-of-distribution generalization (Wang et al., 4 Jun 2025).

- In streaming or evolving data, on-the-fly reclustering, gating re-optimization, and model pruning yield scalable, deployment-ready SAGMM variants.

- In robust estimation tasks, the SAGMM paradigm enables automatic parameter tuning to match non-Gaussian, multi-modal error distributions, without manual intervention (Pfeifer et al., 2018).

A plausible implication is that SAGMM provides a common abstraction for multi-model, multi-domain, and multi-generator learning in graphs, bridging generative, discriminative, and robust estimation paradigms. This suggests strong relevance for heterogeneous knowledge integration, transfer learning, and robust real-world graph deployment.