Representation Steering in Neural Models

- Representation steering is a technique for intervening in hidden neural representations to achieve fine-grained control over model inferences.

- It leverages the linear or affine properties of activation spaces by constructing steering vectors that adjust semantic, temporal, and behavioral traits.

- Empirical studies reveal improvements in performance, interpretability, bias mitigation, and efficient adaptation across multiple domains.

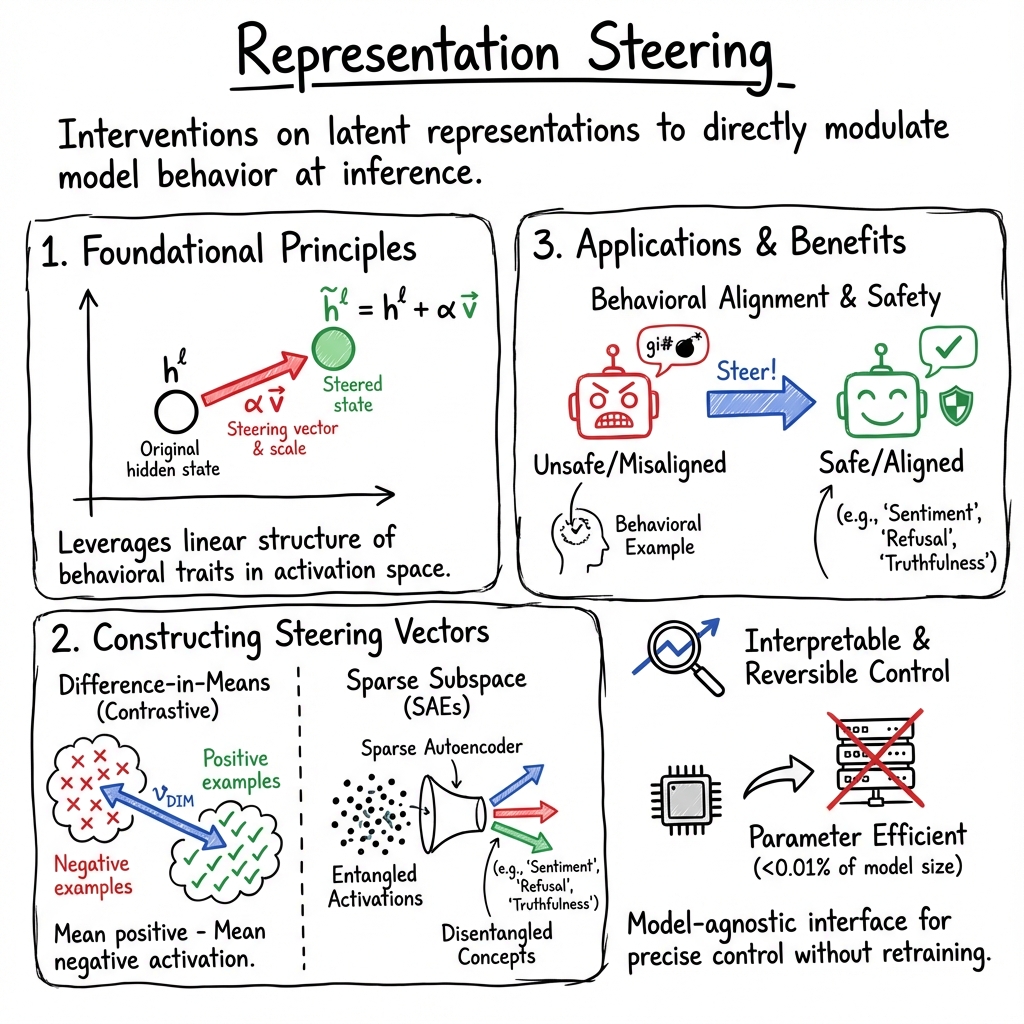

Representation steering denotes interventions on the latent representations (typically hidden activations or residual streams) of neural models, most prominently LLMs and multimodal models, designed to directly modulate model behavior at inference or during targeted fine-tuning. This paradigm leverages the observation that high-level semantic features, behavioral traits, and distributional information are linearly or affinely encoded in intermediate activation spaces, making them accessible to manipulation via appropriately constructed steering vectors or subspace transformations. Unlike weight-based or prompt-only techniques, representation steering operates by adding, projecting, or otherwise transforming the representation at forward-pass time, with the dual aims of fine-grained control and interpretability.

1. Foundational Principles of Representation Steering

At its core, representation steering rests on the linear or affine structure of hidden activation manifolds in neural networks. A canonical form involves augmenting the activation $h_l$ at a chosen layer $l$ with a steering vector $v$ scaled by a coefficient $\alpha$: $h_l' = h_l + \alpha v$, where $v$ captures the difference between two distributions or behavioral modes in representation space. For instance, in the TARDIS framework, $v$ is constructed as the mean difference between activations corresponding to a source time period and a target time period, allowing the model to adapt to distributional shifts that occurred after its training cutoff without touching weights or requiring labeled data (Shin et al., 24 Mar 2025).

Such additive strategies quantify and utilize the geometric shifts observed in real distributional, semantic, or attribute-based transitions, relying on the empirical fact that the principal factors of variation underlying model outputs are encoded as directions (or low-dimensional subspaces) in latent space.

2. Algorithms and Methodological Paradigms

Numerous methodologies have been proposed for constructing and applying steering vectors or subspaces:

- Difference-in-Means (DIM) or Contrastive Vectors: Compute the mean hidden activation on positive examples minus that on negatives, yielding a steering direction for binary attributes (Shin et al., 24 Mar 2025, Siu et al., 16 Sep 2025, Wu et al., 28 Jan 2025).

- Supervised Sparse Subspace Steering: Employs sparse autoencoders (SAEs) to learn disentangled, monosemantic latent codes, followed by supervised selection and optimization of sparse steering directions, focusing on a minimal subspace linked to the target attribute (He et al., 22 May 2025).

- Multi-attribute, Orthogonal Subspace Steering: Allocates separate, orthogonal subspaces for individual attributes and a shared subspace for common factors, integrating steering directions dynamically via a mask network to minimize inter-attribute interference (MSRS) (Jiang et al., 14 Aug 2025).

- Affine and Distribution-matching Maps: Uses affine transformations constrained to align means and (optionally) covariances between pre- and post-intervention distributions, under Wasserstein or KL-divergence metrics (Singh et al., 2024, Sharma et al., 19 Sep 2025).

- Activation Addition/Removal: Implements vector addition to promote attributes and projection (subtraction of the component along the steering direction) or "affine concept editing" to suppress attribute-related signals (Siu et al., 16 Sep 2025, Siu et al., 16 Sep 2025, Cyberey et al., 27 Feb 2025).

- Sparse Shift Autoencoders (SSAEs): Trains an autoencoder to map differences between pairs of embeddings to sparse codes, yielding provably identifiable concept shift vectors for compositional or entangled settings (Joshi et al., 14 Feb 2025).

- Projection-based and Input-dependent Scaling: Scales the steering vector added or subtracted in proportion to the activation's projection onto the direction, yielding individualized interventions (Cyberey et al., 27 Feb 2025).
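The addition, removal, and projection-scaled variants listed above differ mainly in how the coefficient along the steering direction is chosen. The following is a minimal NumPy sketch of that shared structure, assuming a single steering direction that is normalized to unit length. The function names `add_direction`, `remove_direction`, and `scale_by_projection`, and the random activations, are illustrative and not taken from the cited papers.

```python
import numpy as np

def unit(v):
    """Return v scaled to unit Euclidean norm."""
    return v / np.linalg.norm(v)

def add_direction(h, v, alpha):
    """Activation addition: shift every row of h by alpha along v."""
    return h + alpha * unit(v)

def remove_direction(h, v):
    """Projection removal: subtract each row's component along v."""
    u = unit(v)
    return h - np.outer(h @ u, u)

def scale_by_projection(h, v, lam):
    """Input-dependent steering: the shift is proportional to each
    row's own projection onto v (lam = -1 reproduces removal)."""
    u = unit(v)
    return h + lam * np.outer(h @ u, u)

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 8))   # 4 hypothetical activations of width 8
v = rng.normal(size=8)        # hypothetical steering direction

h_added = add_direction(h, v, alpha=2.0)
h_removed = remove_direction(h, v)
h_scaled = scale_by_projection(h, v, lam=-1.0)
```

In this sketch, removal is the special case of projection scaling with `lam = -1`, which is one way to see why input-dependent scaling yields a different intervention for each input.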

Pseudocode for core operations (from TARDIS; Shin et al., 24 Mar 2025):

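```
# Steering vectors: mean target-period activation minus mean source-period activation
for each layer l in L:
    vs2t[l] = mean([model(x, layer=l) for x in D_t]) - mean([model(x, layer=l) for x in D_s])

# Steered forward pass: add the scaled vector after each selected layer
for l in model.layers:
    h = model.forward_layer(h, l)
    if l in L:
        h = h + alpha * vs2t[l]
```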
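A runnable rendering of the pseudocode above, on a toy stack of tanh layers with synthetic inputs, may make the two phases easier to follow. Everything here is an assumption made for illustration and is not the TARDIS implementation: the `ToyModel` class, its `forward_layer` and `hidden_at` methods, the synthetic sets `D_s` and `D_t`, and the choice of steering only the last layer.

```python
import numpy as np

class ToyModel:
    """Hypothetical stand-in for a network: a stack of tanh(h @ W) layers."""
    def __init__(self, width, depth, seed=0):
        rng = np.random.default_rng(seed)
        self.weights = [rng.normal(scale=0.5, size=(width, width))
                        for _ in range(depth)]
        self.layers = range(depth)

    def forward_layer(self, h, l):
        return np.tanh(h @ self.weights[l])

    def hidden_at(self, x, layer):
        """Unsteered activations after the given layer."""
        h = x
        for l in range(layer + 1):
            h = self.forward_layer(h, l)
        return h

def steering_vectors(model, D_s, D_t, L):
    """Per-layer mean target activation minus mean source activation."""
    return {l: model.hidden_at(D_t, l).mean(axis=0)
               - model.hidden_at(D_s, l).mean(axis=0) for l in L}

def steered_forward(model, x, vs2t, alpha):
    """Forward pass that adds alpha * vs2t[l] after each selected layer."""
    h = x
    for l in model.layers:
        h = model.forward_layer(h, l)
        if l in vs2t:
            h = h + alpha * vs2t[l]
    return h

rng = np.random.default_rng(1)
D_s = rng.normal(loc=0.0, size=(64, 16))   # synthetic "source period" inputs
D_t = rng.normal(loc=0.5, size=(64, 16))   # synthetic "target period" inputs
model = ToyModel(width=16, depth=4)
vs2t = steering_vectors(model, D_s, D_t, L=[3])

plain = steered_forward(model, D_s, vs2t, alpha=0.0)
steered = steered_forward(model, D_s, vs2t, alpha=1.0)
```

With `alpha = 1.0` and a single steered layer, the mean of the steered source activations coincides with the mean of the target activations at that layer, which is the sense in which the additive shift moves one distribution toward the other.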

3. Empirical Applications and Benchmark Results

Representation steering has demonstrated efficacy across a wide array of domains:

- Temporal Adaptation: TARDIS closes up to absolute accuracy gap on temporally shifted text classification tasks, outperforming earlier models without any weight updates or supervised target data (Shin et al., 24 Mar 2025).

- Behavioral Alignment and Attribute Control: SAE-SSV improves steering success rates on sentiment, political polarity, and truthfulness tasks by $15$–$20$ percentage points over unsupervised or generic dense-vector methods. The sparse subspace constraint keeps the impact on language quality minimal and keeps the steering directions interpretable (He et al., 22 May 2025).

- Multi-Attribute and Interference Minimization: MSRS achieves up to absolute improvement on TruthfulQA, while drastically reducing attribute conflicts compared to single-subspace or non-orthogonal baselines (Jiang et al., 14 Aug 2025).

- Guardrails for Safety and Fairness: Sparse Representation Steering enables perfect (100%) refusal against malicious instructions and significant mitigation of stereotype bias and falsehoods, with finer control and less degradation of grammar than earlier dense-steering or CAA approaches (He et al., 21 Mar 2025).

- Concept Isolation for Narrow Jailbreak Attacks: RepIt orthogonalizes target concept vectors against non-target refusal directions, limiting behavioral change to hundreds of neurons and thereby preventing overgeneralization of harmful (e.g., WMD) behaviors to unrelated safety contexts (Siu et al., 16 Sep 2025).

- Fairness Optimization: KL-projected affine steering achieves exact demographic parity or equal opportunity in LLM classification without training, and outperforms mean-matching and linear erasure techniques (Sharma et al., 19 Sep 2025).
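The idea of orthogonalizing a target concept vector against non-target directions, mentioned in the concept isolation item above, can be illustrated with a plain least-squares projection. This is a generic linear-algebra sketch and not the RepIt algorithm itself. The helper `orthogonalize` and the random vectors are hypothetical.

```python
import numpy as np

def orthogonalize(target, others):
    """Remove from `target` its component in the span of the rows of `others`."""
    # Least-squares coefficients of `target` in the span of the other directions.
    coeffs, *_ = np.linalg.lstsq(others.T, target, rcond=None)
    return target - others.T @ coeffs

rng = np.random.default_rng(0)
non_target = rng.normal(size=(3, 32))                 # three hypothetical non-target directions
target = rng.normal(size=32) + 0.8 * non_target[0]    # target vector entangled with one of them

isolated = orthogonalize(target, non_target)
```

Steering along `isolated` instead of `target` leaves the projections onto the non-target directions unchanged, which is the property that limits collateral effects in this simplified linear picture.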

Empirical benchmarks such as AxBench (Wu et al., 28 Jan 2025) and SteeringControl (Siu et al., 16 Sep 2025) confirm that rank-1 supervised finetuning and difference-in-means remain competitive for precise steering of language generation. Prompting is still best for unbounded concept injection, whereas representation methods excel in localized, interpretable, and reversible control.

4. Interpretability, Identifiability, and Theoretical Guarantees

A central motivation and technical advantage of representation steering is interpretability. Disentangled, monosemantic subspaces induced by SAEs or SSAE architectures allow each latent or direction to be grounded in a single, human-interpretable concept (e.g., explicit sentiment, topicality, refusal) (He et al., 22 May 2025, He et al., 21 Mar 2025, Joshi et al., 14 Feb 2025). This enables:

- Direct inspection by activation probing (e.g., inspecting which prompt types activate which neurons).

- Modular interventions affecting only desired features, reducing side-effects and preserving fluency and informativeness.

- Proofs of identifiability: SSAEs provably recover unique (up to permutation and scaling) concept-shift vectors when trained on varied multi-concept shifts, even in the absence of labeled data or supervision (Joshi et al., 14 Feb 2025).

Furthermore, affine and distribution-matching approaches (as in MiMiC (Singh et al., 2024) and KL-minimization for fairness (Sharma et al., 19 Sep 2025)) provide closed-form solutions with Wasserstein or KL-optimality guarantees for steering distributions, justifying the choice of linear interventions from first principles.
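For intuition on how an affine map can match first and second moments in closed form, the sketch below uses the standard optimal transport map between two Gaussians, $x \mapsto \mu_t + A(x - \mu_s)$ with $A = \Sigma_s^{-1/2}(\Sigma_s^{1/2}\Sigma_t\Sigma_s^{1/2})^{1/2}\Sigma_s^{-1/2}$. Treating this as representative of the cited methods is an assumption; the function names and the synthetic data are illustrative only.

```python
import numpy as np

def sqrtm_psd(S):
    """Symmetric square root of a symmetric positive semi-definite matrix."""
    w, V = np.linalg.eigh(S)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T

def gaussian_ot_map(X_src, X_tgt):
    """Return (A, b) so that x -> A @ x + b maps the source mean and
    covariance onto the target mean and covariance."""
    mu_s, mu_t = X_src.mean(axis=0), X_tgt.mean(axis=0)
    S_s = np.cov(X_src, rowvar=False)
    S_t = np.cov(X_tgt, rowvar=False)
    S_s_half = sqrtm_psd(S_s)
    S_s_inv_half = np.linalg.inv(S_s_half)
    A = S_s_inv_half @ sqrtm_psd(S_s_half @ S_t @ S_s_half) @ S_s_inv_half
    b = mu_t - A @ mu_s
    return A, b

rng = np.random.default_rng(0)
X_src = rng.normal(size=(500, 6))                                  # synthetic pre-intervention activations
X_tgt = rng.normal(size=(500, 6)) @ rng.normal(size=(6, 6)) + 2.0  # synthetic target activations

A, b = gaussian_ot_map(X_src, X_tgt)
X_mapped = X_src @ A.T + b
```

Because the map is affine, the empirical mean and covariance of `X_mapped` match those of `X_tgt` up to floating-point error, regardless of whether the data are actually Gaussian.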

5. Robustness, Side Effects, and Limitations

Despite their strengths, representation steering methods introduce several nuanced considerations:

- Entanglement and Collateral Effects: Modifying representations along directions that span entangled attributes can inadvertently alter unrelated behaviors. For example, steering along a general refusal vector may compromise helpfulness or increase sycophancy (Siu et al., 16 Sep 2025). Orthogonal subspace allocation (MSRS) or targeted projection (RepIt) mitigates but does not eliminate these side effects.

- Layer and Location Sensitivity: The effectiveness of interventions depends strongly on the choice of layer; middle layers often yield the best tradeoff between efficacy and maintaining generalization (Shin et al., 24 Mar 2025, Jiang et al., 14 Aug 2025, He et al., 22 May 2025). Some methods, like TARDIS, dynamically aggregate contributions from multiple layers or integrate time-classifiers when period annotation is unavailable.

- Evaluation Pitfalls: Likelihood-based evaluation pipelines with context-matched prompts, baseline deltas, and difficulty quantiles are necessary—simpler metrics can overstate the success of activation-based methods (Pres et al., 2024).

- Parameter Efficiency: Linear or low-rank representation steering interventions are extremely parameter-efficient (often of model size) and reversible compared to prompt-based or full finetuning methods (Wu et al., 27 May 2025, Wu et al., 28 Jan 2025, Bi et al., 2024).

6. Extensions: Multimodal and Multiconcept Transition Steering

Representation steering extends beyond text LLMs to multimodal domains. In MLLMs, concept decomposition of residual streams via dictionary learning and analysis of representation shifts during finetuning yield interpretable, reusable shift vectors. These vectors enable direct style or answer-type edits at inference without gradient steps, and the same mathematical formalism applies (e.g., difference-of-means, concept embedding shifts) (Khayatan et al., 6 Jan 2025).

The MoReS framework, for example, efficiently steers visual representations in MLLMs through low-rank down-up linear maps per layer, rebalancing textual and visual modalities at lower parameter count than LoRA without loss of task performance (Bi et al., 2024).
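A low-rank down-up map of the kind described can be sketched as a residual update that projects the activation down to a small rank and back up. The code below is a generic illustration with hypothetical names and sizes, not the MoReS implementation. Initializing the up-projection to zero, so that the intervention starts as the identity, is an assumption borrowed from common practice with LoRA-style adapters.

```python
import numpy as np

class LowRankSteer:
    """Residual low-rank intervention: h -> h + (h @ down) @ up."""
    def __init__(self, width, rank, seed=0):
        rng = np.random.default_rng(seed)
        self.down = rng.normal(scale=width ** -0.5, size=(width, rank))
        self.up = np.zeros((rank, width))   # zero init: starts as the identity map

    def __call__(self, h):
        return h + (h @ self.down) @ self.up

    def num_params(self):
        return self.down.size + self.up.size

width, rank = 1024, 4                      # hypothetical hidden width and rank
steer = LowRankSteer(width, rank)
h = np.random.default_rng(1).normal(size=(2, width))
out = steer(h)
dense_params = width * width               # a full width-by-width linear map, for comparison
```

At these illustrative sizes the intervention has `2 * width * rank` parameters per layer, a small fraction of what a dense map of the same width would need.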

7. Outlook

Representation steering offers a general, model-agnostic interface to behavioral, semantic, temporal, and multimodal control, with a rigorous basis in the geometry of neural activations and distributional optimal transport. State-of-the-art techniques now routinely integrate sparse, supervised, and multi-subspace construction, as well as input- or behavior-conditioned application. The area remains active, with open questions in the automated discovery of disentangled features, universal metrics for side-effect quantification, and efficient extension to arbitrarily many attributes and modalities. As benchmarks, open tools, and evaluation standards mature, representation steering is positioned as a critical tool for both interpretability research and production alignment in the next generation of foundation models.