Real2Sim Pipeline: Bridging Reality and Simulation

- Real2Sim pipelines are computational frameworks that transform real-world data into simulation-ready digital twins with minimal fidelity loss.

- They integrate implicit neural representations and automated asset generation to bridge visual, geometric, and physical gaps in simulation environments.

- These methods enhance cost-efficiency, scalability, and accuracy in robotics, VR, and manufacturing by streamlining asset creation and parameter tuning.

Real2Sim pipelines are a class of computational frameworks and methods that transform data, objects, phenomena, or scenes captured from the real world into accurate, simulation-ready digital representations. They allow complex real-world entities to be transferred into, modeled in, and interactively manipulated within virtual, robotic, or simulation environments, often with minimal loss of fidelity. Real2Sim contrasts with Sim2Real (simulation-to-reality) in that it maps real data into simulation; some systems iterate in both directions (Real2Sim2Real) for closed-loop applications.

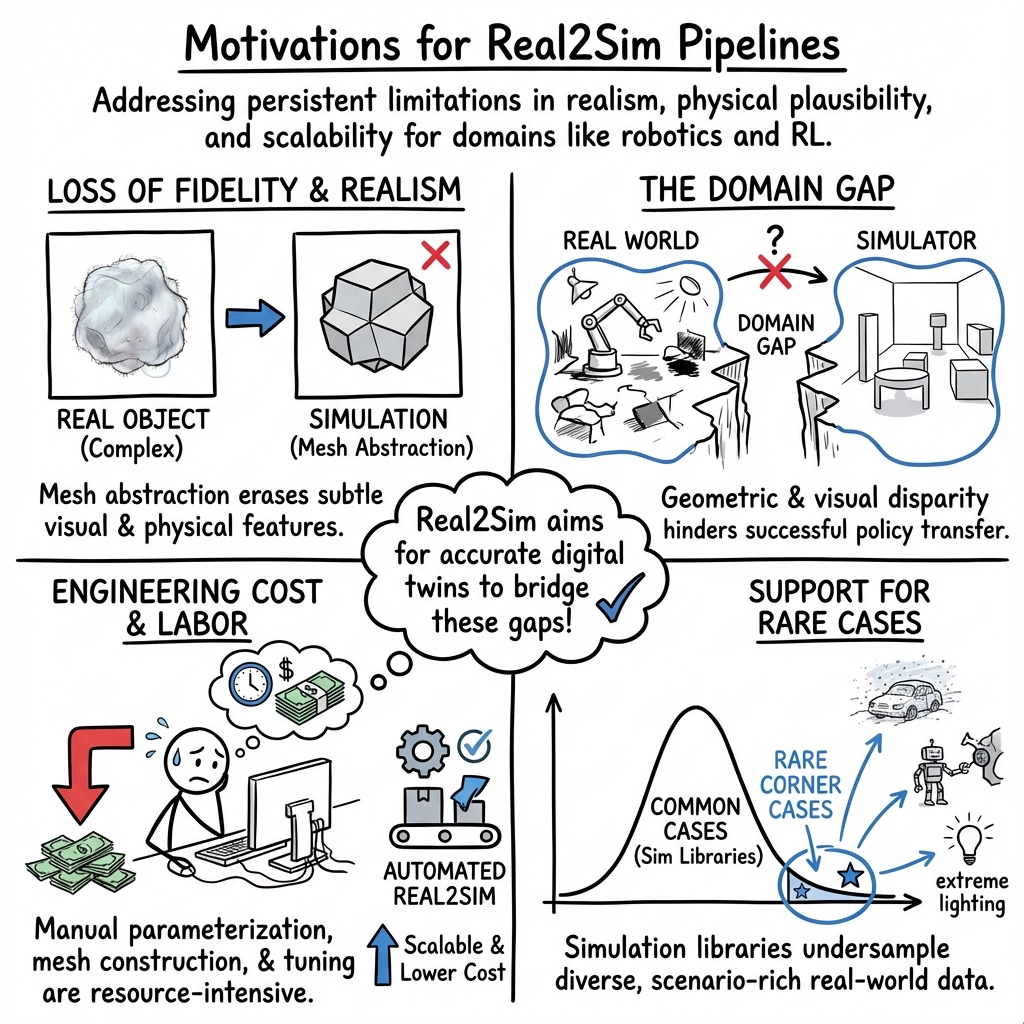

1. Motivations for Real2Sim Pipelines

Real2Sim pipelines are motivated by persistent limitations of direct simulation modeling and mesh-based or template approaches in domains requiring realism, physical plausibility, and scalability. Key challenges addressed include:

- Loss of fidelity in geometric or physical modeling: Classical pipelines depend on explicit mesh or point cloud representations, which cap the achievable realism of both appearance and physical properties, especially for dynamic, translucent, fuzzy, or otherwise visually complex objects. Mesh abstraction often erases subtle features found in real-world objects (Kondo et al., 2022).

- Domain gap in robotics and reinforcement learning: High-fidelity, realistic simulation environments are critical for successful policy transfer, but simulators often suffer from a geometric and visual gap relative to the real world. Real2Sim seeks to produce digital twins that match reality in both geometry and appearance (Han et al., 12 Feb 2025).

- Engineering cost and human labor: Manual parameterization, mesh construction, and tuning for simulation are resource-intensive, limiting scalability. Automated Real2Sim can lower these costs substantially by leveraging direct data capture and modern machine learning (Pfaff et al., 1 Mar 2025).

- Support for rare or diverse cases: Simulation asset libraries are typically undersampled for rare corner cases and do not span the diversity observed in real data. Automated Real2Sim pipelines enable scalable creation of diverse, scenario-rich virtual environments, e.g., for autonomous driving (Chen et al., 8 Sep 2025).

- End-to-end differentiability and optimization: For physical processes like photolithography, learned Real2Sim simulators act as differentiable, data-calibrated surrogates that bridge the gap between design and fabrication (Zheng et al., 2023).

2. Fundamental Methodologies and Architectures

Real2Sim pipelines can be broadly categorized by their input modality, output target, and internal representation. Dominant approaches include:

a. Implicit Neural Representations

- Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS): Capture object or scene appearance with learned radiance-field representations (an implicit neural field in NeRF, explicit Gaussian primitives in 3DGS) that synthesize photorealistic images from arbitrary viewpoints, supporting VR applications and advanced scene reconstruction (Kondo et al., 2022, Han et al., 12 Feb 2025, Li et al., 18 Nov 2024, Xie et al., 12 Jan 2025).

- Hybrid mesh + neural rendering: Employ geometry from photogrammetric or multi-view stereo pipelines and neural rendering for detailed realism and correct physical interactions (Han et al., 12 Feb 2025).
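As a rough illustration of how a radiance field turns into pixels, the sketch below implements the standard NeRF-style volume rendering quadrature along a single ray: each sample contributes its color weighted by its own opacity and by the transmittance of everything in front of it. The function name `composite_ray` and the sample values are assumptions made for this example; none of the cited pipelines is claimed to use this exact code, and 3DGS blends projected Gaussians with a related but different rasterization scheme.

```python
import math

def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray, front to back.

    densities: non-negative volume densities sigma_i at each sample
    colors:    RGB tuples c_i at each sample
    deltas:    distances delta_i between consecutive samples
    Returns (rgb, accumulated_opacity).
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(rgb), 1.0 - transmittance

# Hypothetical ray: a thin haze in front of a dense red surface.
rgb, opacity = composite_ray(
    densities=[0.1, 50.0],
    colors=[(1.0, 1.0, 1.0), (1.0, 0.0, 0.0)],
    deltas=[0.5, 0.5],
)
```

Because every operation is differentiable with respect to the densities and colors, the same weights can be used to fit the field to captured images, which is what makes this representation attractive for Real2Sim capture.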

b. Automated Asset Generation and Reconstruction

- Object-centric photometric reconstruction + physical modeling: Use robotic pick-and-place with RGB(-D) imagery and torque sensing to generate mesh, collision, and inertial properties aligned with physical reality, handling foreground occlusions and background separation for robust asset creation (Pfaff et al., 1 Mar 2025).

- Domain-adaptive simulation via GANs: Translate real camera observations into the simulation domain with learned image-to-image methods, enabling direct deployment of policies trained in sim but tested under real-world sensing (Chen et al., 2022).

- Digital twin assembly from multi-view geometry: Fuse sensor data, segmentation, and structure-from-motion for globally-aligned, articulated environment creation, critical for robustified RL (Han et al., 12 Feb 2025, Torne et al., 6 Mar 2024).

- Task and scenario generation with vision-language models (VLMs): Generate simulation environments, scene correspondences, and solvable robotic tasks directly from RGB-D images using VLMs and structured procedural elements (Zook et al., 20 Oct 2024).

c. Physics and Physical Parameter Extraction

- Inertial and friction parameter identification: Combine robotic manipulation with constrained optimization to infer object mass, center of mass, and inertia directly from executed robot trajectories and joint sensing (Pfaff et al., 1 Mar 2025).

- Physically accurate simulation parameter tuning: Employ differentiable simulation and Bayesian inference to tune digital twin properties from real or video data, closing the loop with real system dynamics (Heiden et al., 2022).
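To make the idea of inertial parameter identification concrete, here is a deliberately simplified sketch. It assumes a wrist force/torque sensor and static poses with gravity as the only load, and it solves an unconstrained least-squares problem; this is a stand-in for illustration, not the joint-torque-based constrained optimization of the cited work. All function names and numeric values are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [list(A[i]) + [b[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            factor = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= factor * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x

def identify_mass_and_com(forces, torques):
    """Estimate mass (kg) and center of mass (m) from static wrench readings.

    forces, torques: lists of 3-tuples in the sensor frame, one per static pose.
    At least two non-parallel force directions are needed for the center of
    mass to be observable.
    """
    mass = sum(math.sqrt(sum(c * c for c in f)) for f in forces) / (len(forces) * G)
    # tau = r x F is linear in r: tau = A r with A = -skew(F).
    # Accumulate the normal equations (sum A^T A) r = sum A^T tau.
    AtA = [[0.0] * 3 for _ in range(3)]
    Atb = [0.0] * 3
    for (fx, fy, fz), tau in zip(forces, torques):
        A = [[0.0, fz, -fy], [-fz, 0.0, fx], [fy, -fx, 0.0]]
        for i in range(3):
            for j in range(3):
                AtA[i][j] += sum(A[k][i] * A[k][j] for k in range(3))
            Atb[i] += sum(A[k][i] * tau[k] for k in range(3))
    return mass, solve3(AtA, Atb)

# Synthetic check with a hypothetical object held in three orientations.
true_mass, true_com = 2.0, (0.01, -0.02, 0.03)
directions = [(0, 0, -1), (1, 0, 0), (0, 1, 0)]  # gravity direction in sensor frame
forces = [tuple(true_mass * G * d for d in direction) for direction in directions]
torques = [cross(true_com, f) for f in forces]
mass, com = identify_mass_and_com(forces, torques)
```

With noisy real readings the same normal equations average over many poses; the full inertia tensor additionally requires dynamic (accelerating) trajectories, which this static sketch does not cover.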

d. Data-Driven Process Emulation

- Fabrication and process surrogates: Use neural networks trained on real input-output pairs to emulate complex fabrication steps, e.g., lithography, integrating them into differentiable design loops for manufacturability regularization (Zheng et al., 2023).
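The surrogate idea can be illustrated with a toy example. The sketch below replaces the neural network with a one-parameter-pair linear model, fits it to hypothetical design/outcome measurements, and then runs gradient descent through the fitted surrogate to find a design that hits a target outcome. The linear process, the data, and all names are assumptions chosen to keep the example short; a real lithography surrogate would be a learned image-to-image model.

```python
def fit_linear_surrogate(designs, outcomes):
    """Least-squares fit of outcome ~ a * design + b from measured pairs."""
    n = len(designs)
    mean_d = sum(designs) / n
    mean_o = sum(outcomes) / n
    cov = sum((d - mean_d) * (o - mean_o) for d, o in zip(designs, outcomes))
    var = sum((d - mean_d) ** 2 for d in designs)
    a = cov / var
    b = mean_o - a * mean_d
    return a, b

def optimize_design(a, b, target, design=0.0, lr=0.1, steps=500):
    """Gradient descent on (surrogate(design) - target)^2 with respect to the design."""
    for _ in range(steps):
        residual = a * design + b - target
        design -= lr * 2.0 * a * residual  # derivative of residual^2 w.r.t. design
    return design

# Hypothetical calibration data: the "real" process prints 0.9 * design - 0.05,
# i.e. features come out slightly smaller than drawn.
designs = [0.2, 0.4, 0.6, 0.8, 1.0]
outcomes = [0.9 * d - 0.05 for d in designs]

a, b = fit_linear_surrogate(designs, outcomes)
compensated = optimize_design(a, b, target=0.5)
predicted = a * compensated + b  # what the surrogate expects to be fabricated
```

The design that comes out is pre-compensated: it is drawn larger than the target so that the process shrinkage lands on the intended size, which is the essence of optimizing through a data-calibrated process model.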

3. Core Components and Technical Mechanisms

A Real2Sim pipeline typically includes:

| Component | Description | Example Papers |
|---|---|---|
| Data Acquisition | Multi-view images, RGB-D video, manipulation logs, sensor traces | (Pfaff et al., 1 Mar 2025, Han et al., 12 Feb 2025) |
| Segmentation & Object Extraction | Instance + semantic masks, object tracking, pose estimation | (Pfaff et al., 1 Mar 2025, Zook et al., 20 Oct 2024) |
| Scene/Asset Reconstruction | NeRF, 3DGS, MVS, surface meshing | (Kondo et al., 2022, Han et al., 12 Feb 2025) |
| Physical Property Identification | Mass, inertia, friction via trajectory optimization/comparative methods | (Pfaff et al., 1 Mar 2025, Heiden et al., 2022) |
| Rendering/Simulation Engines | Used for VR/interactive display, physics simulation | (Kondo et al., 2022, Li et al., 18 Nov 2024) |
| Policy/Task Synthesis (for RL) | Policy learning, imitation, task code/test generation | (Han et al., 12 Feb 2025, Zook et al., 20 Oct 2024) |
| Evaluation and Sim2Real Transfer | Closed-loop testing, policy deployment in sim and real world | (Li et al., 18 Nov 2024, Abou-Chakra et al., 4 Apr 2025) |

Specialized technical contributions include alpha-transparent training for handling occlusions and background removal in NeRF reconstruction (Pfaff et al., 1 Mar 2025), screen-space covariance culling for viewpoint robustness (Xie et al., 12 Jan 2025), and bilevel differentiable design-to-manufacture loops in computational optics (Zheng et al., 2023).
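The component table above can be read as a chain of stages that each add something to a shared state. The following skeleton is only a structural sketch: the `Stage` class, the stage names, and the placeholder outputs are all hypothetical and stand in for real acquisition, segmentation, reconstruction, and identification code.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

State = Dict[str, Any]

@dataclass
class Stage:
    name: str
    run: Callable[[State], State]  # returns new keys to merge into the state

def run_pipeline(stages: List[Stage], state: State) -> State:
    """Run stages in order; each stage sees everything produced before it."""
    for stage in stages:
        state = {**state, **stage.run(state)}
    return state

# Placeholder stages: each lambda stands in for a real component.
stages = [
    Stage("acquire", lambda s: {"images": ["view_0.png", "view_1.png"]}),
    Stage("segment", lambda s: {"masks": [f"mask_of_{p}" for p in s["images"]]}),
    Stage("reconstruct", lambda s: {"mesh": f"mesh_from_{len(s['masks'])}_views"}),
    Stage("identify_physics", lambda s: {"mass_kg": None}),  # to be filled by system identification
    Stage("export", lambda s: {"asset": {"mesh": s["mesh"], "mass_kg": s["mass_kg"]}}),
]

result = run_pipeline(stages, {})
```

Keeping each stage behind the same small interface makes it straightforward to swap, for example, a NeRF-based reconstructor for a 3DGS-based one without touching the rest of the chain.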

4. Quantitative Performance and Empirical Evidence

Numerous empirical results across the literature demonstrate the effectiveness of Real2Sim pipelines:

- Photorealism & fidelity: Orders-of-magnitude improvement in visual detail over mesh/point cloud methods, especially for complex or dynamic objects (Kondo et al., 2022, Han et al., 12 Feb 2025, Li et al., 18 Nov 2024).

- Robust Sim2Real transfer: Reported results include near-lossless, zero-shot transfer in complex robotics tasks, with average real-world policy success rates exceeding 58% without finetuning (Han et al., 12 Feb 2025), and 92.3% insertion success in vision-based RL with GAN adaptation (Chen et al., 2022).

- Automation & scalability: End-to-end asset creation pipelines operate without human-in-the-loop, requiring only standard hardware in industrial/warehouse setups and generating hundreds of assets per hour (Pfaff et al., 1 Mar 2025).

- Physics parameter accuracy: Achieves mass/center of mass estimation errors of ∼1–2% for robotic assets (Pfaff et al., 1 Mar 2025); reproduces real-world object dynamics and articulated motion with low error via video-based system identification (Heiden et al., 2022).

- Improved downstream task performance: In RL and imitation learning, policies trained on Real2Sim-generated environments outperform mesh/CG/standard randomization baselines, with up to +68.3% real-world navigation improvement and better detection rates for rare objects in perception (Xie et al., 12 Jan 2025, Chen et al., 8 Sep 2025).

- Manufacturing regularization: Learned process-aware photolithography simulators embedded into optical design reduce the gap between design intent and fabricated performance (as measured on HOE and MDL devices) (Zheng et al., 2023).

5. Distinctions from Traditional Sim2Real and Other Paradigms

Real2Sim departs from Sim2Real in both directionality and technical implications:

- Directionality: Rather than making simulation look more like reality, Real2Sim reframes the challenge by transforming real-world data and phenomena into digital twins faithfully reflecting real geometry, appearance, and dynamics (Chen et al., 2022).

- Reduction in domain gap: By leveraging data-driven, structure- or neural-based surrogates calibrated on real artifacts, Real2Sim pipelines often achieve tighter correspondence in both visual and physical domains than domain randomization, reducing the transfer difficulties often observed in classical sim-to-real approaches (Han et al., 12 Feb 2025).

- Closed-loop, bidirectional use: Some frameworks further enable Real2Sim2Real cycles, in which real data seeds simulation for scalable training and validation, with evaluation and policy improvements iteratively reconciled against new real-world data (Li et al., 18 Nov 2024, Abou-Chakra et al., 4 Apr 2025).
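One piece of such a cycle, reconciling a simulator parameter against each new batch of real measurements, can be sketched with a toy system. The example below assumes a block sliding to rest under Coulomb friction, a hand-written time-stepping simulator, and bisection as the calibration method; the cited frameworks operate on far richer scenes and policies, and every name and number here is hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2

def simulate_stop_distance(v0, mu, dt=1e-3):
    """Explicit-Euler simulation of a block decelerating under friction mu."""
    x, v = 0.0, v0
    while v > 0.0:
        x += v * dt
        v -= mu * G * dt
    return x

def calibrate_friction(real_runs, lo=0.01, hi=2.0, iters=60):
    """Bisection on mu so that total simulated distance matches the real total.

    real_runs: list of (initial_speed, measured_stop_distance) pairs.
    Stopping distance decreases monotonically with mu, so bisection applies.
    """
    target = sum(d for _, d in real_runs)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        simulated = sum(simulate_stop_distance(v0, mid) for v0, _ in real_runs)
        if simulated > target:
            lo = mid  # simulated block slides too far: friction too low
        else:
            hi = mid
    return 0.5 * (lo + hi)

def real2sim2real(rounds, mu_init=0.1):
    """Alternate between measuring the sim-vs-real gap and recalibrating."""
    mu, data, gaps = mu_init, [], []
    for new_runs in rounds:
        gap = sum(abs(simulate_stop_distance(v0, mu) - d) for v0, d in new_runs)
        gaps.append(gap / len(new_runs))  # mean gap before recalibration
        data.extend(new_runs)             # fold new real data into the dataset
        mu = calibrate_friction(data)
    return mu, gaps

# Stand-in for real measurements: generated with a hidden friction value of 0.35.
hidden_mu = 0.35
rounds = [
    [(v0, simulate_stop_distance(v0, hidden_mu)) for v0 in (1.0, 2.0)],
    [(v0, simulate_stop_distance(v0, hidden_mu)) for v0 in (1.5, 2.5)],
]
mu_est, gaps = real2sim2real(rounds)
```

The recorded gap shrinks after the first recalibration, which is the signal a closed-loop system would monitor to decide whether the digital twin is still trustworthy for training and evaluation.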

6. Current Limitations and Open Research Questions

Despite considerable progress, several challenges and tradeoffs remain:

- Camera and sensor dependency: Many pipelines assume fixed or known camera geometry; changes in sensor configuration may require new reconstruction or domain adaptation (Chen et al., 2022).

- Representation limitations: Implicit neural models excel at visual reproduction but may require surrogate geometric proxies (e.g., rough mesh colliders) for physical interaction (Kondo et al., 2022), potentially limiting physical realism or simulation generality.

- Scalability to highly deformable or large-scale scenes: While single-object, tabletop, and urban scenes are well-supported, massively dynamic or highly deformable scenes (e.g., fluids, soft tissues) pose ongoing challenges.

- Feature loss and adaptation in domain translation: GAN-based adaptation can sometimes lose fine features essential for precise control or manipulation (Chen et al., 2022); robustness to occlusion remains nontrivial.

- Automated scene/task generation fidelity: Automatic mapping from raw sensory input to semantically rich, actionable simulation scenes and tasks is still susceptible to error, requiring robust matching and aligned object/task definitions (Zook et al., 20 Oct 2024).

- Physical parameter estimation noise: Automated inertia and mass identification from limited or noisy data can yield plausible but imperfect models; further advances may be achieved by better excitation, regularization, or sensor fusion (Pfaff et al., 1 Mar 2025).

7. Application Domains and Impact

Real2Sim pipelines have rapidly proliferated across several domains:

- Virtual and augmented reality: Nearly lossless import of real-world objects, including highly dynamic, translucent, or irregularly-detailed entities, into immersive worlds, driving next-generation VR applications (Kondo et al., 2022).

- Robotics, manipulation, and RL: Enables robust imitation and reinforcement learning with minimized real-world trial-and-error; digital twins afford large-scale synthetic data, automatic labeling, and safe exploration (Han et al., 12 Feb 2025, Torne et al., 6 Mar 2024, Abou-Chakra et al., 4 Apr 2025).

- Perception and scene understanding: Automated, task-oriented simulation asset generation for detection, pose estimation, and rare event modeling in autonomous vision systems (Chen et al., 8 Sep 2025, Xie et al., 12 Jan 2025).

- Manufacturing and computational fabrication: Design-to-manufacture cycles harnessing data-driven surrogates for process-aware device optimization, decreasing the gap between simulated and realized function (Zheng et al., 2023).

- Agricultural robotics and geometric reasoning: Improves point cloud completion and topology extraction for challenging environments without annotation or parameter tuning (Qiu et al., 9 Apr 2024).

This breadth highlights the foundational role Real2Sim approaches now occupy in simulation-centric research and industrial engineering.

References:

- Deep Billboards towards Lossless Real2Sim in Virtual Reality (Kondo et al., 2022)

- Real2Sim or Sim2Real: Robotics Visual Insertion using Deep Reinforcement Learning and Real2Sim Policy Adaptation (Chen et al., 2022)

- ReSim: Generating High-Fidelity Simulation Data via 3D-Photorealistic Real-to-Sim for Robotic Manipulation (Han et al., 12 Feb 2025)

- Scalable Real2Sim: Physics-Aware Asset Generation Via Robotic Pick-and-Place Setups (Pfaff et al., 1 Mar 2025)

- Vid2Sim: Realistic and Interactive Simulation from Video for Urban Navigation (Xie et al., 12 Jan 2025)

- SynthDrive: Scalable Real2Sim2Real Sensor Simulation Pipeline for High-Fidelity Asset Generation and Driving Data Synthesis (Chen et al., 8 Sep 2025)

- Neural Lithography: Close the Design-to-Manufacturing Gap in Computational Optics with a 'Real2Sim' Learned Photolithography Simulator (Zheng et al., 2023)

- GRS: Generating Robotic Simulation Tasks from Real-World Images (Zook et al., 20 Oct 2024)

- RoboGSim: A Real2Sim2Real Robotic Gaussian Splatting Simulator (Li et al., 18 Nov 2024)

- Reconciling Reality through Simulation: A Real-to-Sim-to-Real Approach for Robust Manipulation (Torne et al., 6 Mar 2024)