Medical Chain-of-Thought Reasoning

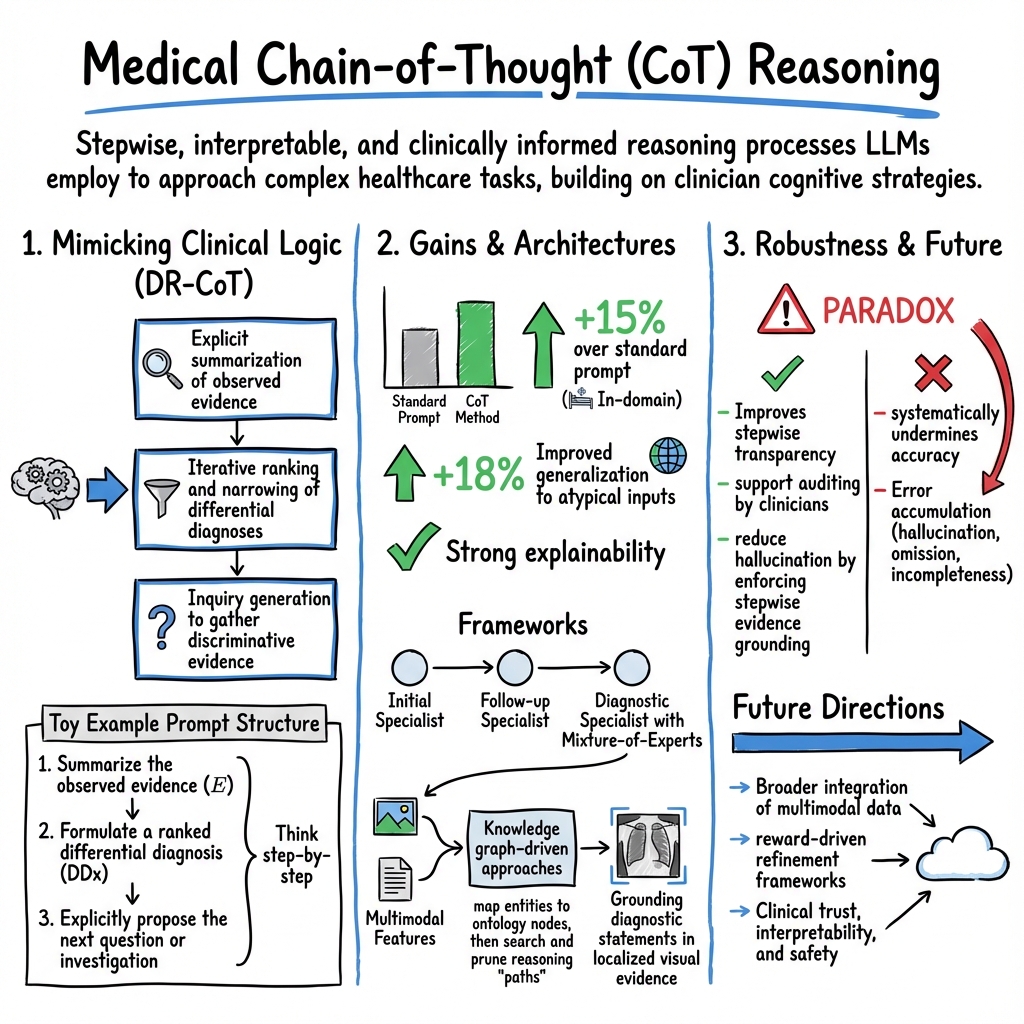

- Medical chain-of-thought reasoning is a structured, step-by-step approach that mirrors clinical diagnostic workflows using large language models.

- It leverages explicit evidence summarization and differential diagnosis ranking to improve accuracy by up to 18% in out-domain tasks.

- Integration with multimodal data, such as imaging and EHR, enhances transparency and error detection in complex clinical scenarios.

Medical chain-of-thought (CoT) reasoning refers to the stepwise, interpretable, and clinically informed reasoning processes that LLMs and multimodal models employ to approach complex healthcare tasks such as diagnosis, report generation, and decision support. This paradigm builds on the cognitive strategies used by clinicians, structuring problem-solving as an evolving sequence of evidence evaluation, hypothesis generation, and iterative refinement. It aims to enhance both the accuracy and the interpretability of medical AI systems.

1. Core Principles and Methodological Foundations

In medical domains, chain-of-thought reasoning formalizes a structured reasoning scaffold, translating clinical tasks into sequential logic that mirrors established workflows such as history-taking, differential diagnosis construction, and evidence synthesis (Wu et al., 2023; Nachane et al., 2024; Liu et al., 2024). Unlike generic CoT prompting, medical-specific approaches (e.g., the DR-CoT and MedCodex frameworks) tailor prompts and exemplars to enforce clinical protocols, such as:

- Explicit summarization of observed evidence,

- Iterative ranking and narrowing of differential diagnoses,

- Inquiry generation to gather discriminative evidence.

A typical DR-CoT prompt in medical diagnosis augments standard instructions by requiring the LLM to:

- Summarize the observed evidence (E),

- Formulate a ranked differential diagnosis (DDx),

- Explicitly propose the next question or investigation (as in: "Based on [E], the ranked DDx is [..]. To narrow down, the next question is...") (Wu et al., 2023).

This mirrors the iterative diagnostic reasoning of real clinical care, focusing the LLM on a “think step-by-step” approach that surfaces intermediate conclusions and the path taken to reach them.
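The three prompt requirements above can be sketched as a small prompt builder. This is a minimal illustration, not the DR-CoT authors' template: the function name `build_dr_cot_prompt`, the instruction wording, and the sample dialogue are all assumptions made for the example.

```python
from typing import List


def build_dr_cot_prompt(dialogue: List[str]) -> str:
    """Wrap a dialogue history in DR-CoT-style instructions (hypothetical wording)."""
    history = "\n".join(dialogue)
    return (
        "Conversation so far:\n"
        f"{history}\n\n"
        "Reason step by step:\n"
        "1. Summarize the observed evidence E.\n"
        "2. Give a ranked differential diagnosis (DDx) based on E.\n"
        "3. Propose the next question or investigation.\n"
        'Answer in the form: "Based on [E], the ranked DDx is [..]. '
        'To narrow down, the next question is ..."'
    )


# Illustrative dialogue, invented for this sketch.
prompt = build_dr_cot_prompt(
    [
        "Patient: I have had a fever for three days.",
        "Doctor: Do you have a cough?",
        "Patient: Yes, with yellow sputum.",
    ]
)
print(prompt)
```

The resulting string would be sent to the model at each turn, so the evidence summary and the ranked DDx are regenerated as new answers arrive.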

2. Empirical Results and Quantitative Advances

Applying CoT reasoning to medical LLMs has yielded reported accuracy gains and improved reliability across a range of tasks:

| Setting | CoT Method | Accuracy Improvement | Noted Advantages |
|---|---|---|---|
| In-domain QA | DR-CoT | +15% over standard prompt | Enhanced critical question generation, higher diagnostic yield |
| Out-domain QA | DR-CoT | +18% | Improved generalization to atypical inputs |
| Open-ended QA | MedCodex/CLINICR | Up to 89.5% expert agreement | Strong explainability, matches real-life clinical processes |
| Clinical Error | CoT-augmented ICL | Consistent accuracy gain | Error detection & correction, span identification |

In complex clinical note analysis, combining CoT with retrieval-augmented generation (RAG) (as in RAG-driven CoT or CoT-driven RAG) achieves top-10 candidate gene/disease accuracy exceeding 40% in rare disease diagnosis (Wu et al., 15 Mar 2025). CoT supervision during model fine-tuning has been empirically validated to boost LLM accuracy on specialized benchmarks by up to 7.7% (Wu et al., 1 Apr 2025).
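For readers unfamiliar with the top-10 metric mentioned above, the following sketch shows how top-k accuracy is typically computed over ranked candidate lists. The gene identifiers and the function name `top_k_accuracy` are placeholders invented for illustration; they are not data from the cited study.

```python
from typing import List, Sequence


def top_k_accuracy(
    ranked_candidates: Sequence[List[str]], truths: Sequence[str], k: int = 10
) -> float:
    """Fraction of cases whose true label appears among the first k candidates."""
    if len(ranked_candidates) != len(truths):
        raise ValueError("each case needs exactly one ground-truth label")
    if not truths:
        return 0.0
    hits = sum(
        truth in candidates[:k]
        for candidates, truth in zip(ranked_candidates, truths)
    )
    return hits / len(truths)


# Placeholder predictions for three cases, ranked best-first.
predictions = [
    ["GENE_A", "GENE_B", "GENE_C"],
    ["GENE_D", "GENE_E"],
    ["GENE_F"],
]
truths = ["GENE_B", "GENE_X", "GENE_F"]

acc_at_2 = top_k_accuracy(predictions, truths, k=2)
print(f"top-2 accuracy: {acc_at_2:.3f}")
```

A case counts as a hit if the correct gene or disease appears anywhere in the first k positions, so the metric rewards useful shortlists rather than a single exact answer.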

A critical finding is the robust improvement in out-domain settings, indicating an ability to generalize reasoning beyond the scope of prompt exemplars—crucial for real-world clinical scenarios that diverge from textbook presentations (Wu et al., 2023).

3. Frameworks and Implementation Strategies

Hierarchical, Multi-Agent, and Knowledge-Guided CoT

- MedCoT (Liu et al., 2024) employs a multi-stage expert structure (Initial Specialist → Follow-up Specialist → Diagnostic Specialist with Mixture-of-Experts) mirroring clinical junior–senior–consensus workflows. Each stage validates and potentially refines the intermediate rationale, using self-reflection and majority voting to enhance both accuracy and transparency.

- Knowledge graph–driven approaches (MedReason (Wu et al., 1 Apr 2025)) first map entities in clinical Q&A pairs to ontology nodes, then search and prune reasoning “paths” through the graph. CoT explanations are generated conditioned on these shortest, clinically relevant paths, with an explicit validation loop ensuring the logical chain produces the correct outcome.

- Mentor–Intern Collaborative Search (MICS) (Sun et al., 20 Jun 2025) orchestrates interactions between strong “mentor” models and lighter “intern” models to search and evaluate high-quality stepwise reasoning, selecting optimal paths based on multi-model agreement (MICS-Score).
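The path-search step described for knowledge graph–driven approaches can be illustrated with a breadth-first search over a toy graph. This is a simplified sketch under stated assumptions: the graph contents, node names, and the function `shortest_path` are hypothetical, and the actual MedReason pipeline (entity mapping, pruning, validation) is not reproduced here.

```python
from collections import deque
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]


def shortest_path(graph: Graph, source: str, target: str) -> Optional[List[str]]:
    """Return one shortest path from source to target using BFS, or None."""
    if source == target:
        return [source]
    parents: Dict[str, Optional[str]] = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor in parents:
                continue  # already reached by an equally short or shorter route
            parents[neighbor] = node
            if neighbor == target:
                # Walk parent links back to the source, then reverse.
                path = [neighbor]
                while parents[path[-1]] is not None:
                    path.append(parents[path[-1]])
                return path[::-1]
            queue.append(neighbor)
    return None


# Toy ontology fragment, invented for this example.
toy_graph: Graph = {
    "polyuria": ["hyperglycemia", "diabetes insipidus"],
    "hyperglycemia": ["diabetes mellitus"],
    "diabetes insipidus": ["vasopressin deficiency"],
    "diabetes mellitus": ["insulin therapy"],
}

path = shortest_path(toy_graph, "polyuria", "insulin therapy")
print(" -> ".join(path) if path else "no path")
```

In a full pipeline, a path such as this one would be handed to the LLM as the skeleton on which the CoT explanation is conditioned.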

Reward Models and Response Verification

- In response selection and answer verification, reward models are trained on expert annotations to assign scalar rewards to candidate explanations (chosen/rejected), replacing brute-force elimination (Nachane et al., 2024). The reward loss encourages maximization of the reward gap between correct and incorrect reasoning outputs.
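A common way to express a loss that widens the gap between chosen and rejected rewards is the pairwise logistic (Bradley-Terry style) form, -log σ(r_chosen - r_rejected). The sketch below assumes that form for illustration only; the source does not confirm that this is the exact loss used, and the function name is hypothetical.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way.

    Assumed pairwise form; not confirmed as the loss used in the cited work.
    """
    gap = r_chosen - r_rejected
    # -log(sigmoid(gap)) equals log(1 + exp(-gap)); branch to avoid overflow.
    if gap >= 0:
        return math.log1p(math.exp(-gap))
    return -gap + math.log1p(math.exp(gap))


# The loss shrinks as the chosen explanation is scored further above the rejected one.
for chosen, rejected in [(0.0, 2.0), (0.0, 0.0), (2.0, 0.0)]:
    loss = pairwise_reward_loss(chosen, rejected)
    print(f"gap={chosen - rejected:+.1f}  loss={loss:.4f}")
```

Minimizing this quantity over annotated pairs pushes the scalar reward of the expert-preferred explanation above that of the rejected one.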

Resource-Efficient and Curriculum Learning

- Parameter-efficient fine-tuning (LoRA, QLoRA) allows small medical LLMs (e.g., LLaMA-3.2-3B) to be adapted for CoT without high computational cost, retaining chain-structured reasoning under resource constraints and enabling deployment in low-resource clinical environments (Mansha, 6 Oct 2025).

- Curriculum learning in Chiron-o1 (Sun et al., 20 Jun 2025) incrementally builds from text QA, then image-text alignment, then complex CoT cases, yielding robust visual and textual reasoning in medical VQA.
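The cost saving behind LoRA-style fine-tuning follows from simple arithmetic: a frozen weight matrix of shape d_out × d_in is augmented with a rank-r update B·A, where B is d_out × r and A is r × d_in, so only r·(d_in + d_out) parameters are trained. The dimensions below are illustrative assumptions, not figures taken from the cited work.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters in a dense weight matrix."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_in + d_out)


# Illustrative sizes: one square projection matrix and a small rank.
hidden = 3072
rank = 8

full = full_params(hidden, hidden)
lora = lora_trainable_params(hidden, hidden, rank)
ratio = lora / full

print(f"full: {full:,}  lora: {lora:,}  trainable fraction: {ratio:.4%}")
```

For these sizes the trainable fraction per adapted matrix is 1/192, which is why adaptation can fit within modest hardware budgets.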

4. Visual and Multimodal Chain-of-Thought Models

Vision-Language Integration

- Medical VQA and report generation systems such as MedCoT (Liu et al., 2024), DiagCoT (Luo et al., 8 Sep 2025), BoxMed-RL (Jing et al., 25 Apr 2025), and V2T-CoT (Wang et al., 24 Jun 2025) extend CoT paradigms to imaging by:

  - Extracting multi-modal features (e.g., ViT-based image encoders, concept recognizers, region-level attention),

  - Employing LLM-based components for stepwise reasoning over both visual and textual features,

  - Explicitly grounding diagnostic statements in localized visual evidence (bounding box predictions, region heatmaps).

Structured Supervision and Explainable Outputs

- BoxMed-RL (Jing et al., 25 Apr 2025) and DiagCoT (Luo et al., 8 Sep 2025) decompose report generation into concept identification, disease classification, and anatomical localization, then reinforce spatial verifiability through IoU-based RL signals. Chain-of-thought tagged outputs (<think>…</think>, <answer>…</answer>) provide auditable, radiologist-style explainable reports.

- Ablation studies consistently show that CoT reasoning, specifically the explicit rationale and evidence mapping, improves both diagnostic accuracy and interpretability. For example, removing the CoT or visual concept pathways degrades performance in X-Ray-CoT (Ng et al., 17 Aug 2025).
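Two pieces of the structured-supervision setup lend themselves to a short sketch: the intersection-over-union (IoU) score that an IoU-based reward would build on, and extraction of the final answer from a tagged output. The box coordinates, the sample output string, and both function names are assumptions for illustration; how the cited systems turn IoU into an RL signal is not shown.

```python
import re
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def extract_answer(text: str) -> Optional[str]:
    """Return the content of the first <answer>...</answer> span, if any."""
    match = re.search(r"<answer>(.*?)</answer>", text, flags=re.DOTALL)
    return match.group(1).strip() if match else None


# Hypothetical predicted and reference boxes, plus a hypothetical model output.
predicted_box: Box = (0.0, 0.0, 2.0, 2.0)
reference_box: Box = (1.0, 1.0, 3.0, 3.0)
model_output = "Enlarged cardiac silhouette noted. <answer> cardiomegaly </answer>"

print(f"IoU: {iou(predicted_box, reference_box):.4f}")
print(f"answer: {extract_answer(model_output)}")
```

Keeping the answer in a dedicated tag lets an evaluator score the conclusion separately from the rationale that precedes it.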

5. Robustness, Limitations, and Error Analysis

Successes

- CoT improves stepwise transparency: intermediate reasoning steps align closely with clinical protocols and support auditing by clinicians (Ding et al., 11 May 2025, Liu et al., 2024).

- Chain-of-retrieval (Wang et al., 20 Aug 2025) and knowledge graph–anchored CoT (Wu et al., 1 Apr 2025) significantly reduce hallucination by enforcing stepwise evidence grounding.

Limits and Paradoxes

- Large-scale empirical studies (Wu et al., 26 Sep 2025) reveal a paradox: while CoT enhances interpretability, in many clinical text tasks it systematically undermines accuracy. For example, 86.3% of evaluated LLMs showed lower performance with CoT than with zero-shot prompts across diverse EHR tasks.

- Degradation is correlated with longer reasoning chains and decreased medical concept alignment, leading to error accumulation (hallucination, omission, incompleteness). Higher-performing models are more robust, but lower-performing ones may lose nearly 10% in accuracy under CoT prompts in noisy, fact-dense clinical text environments.

- These findings highlight patient-safety concerns when employing unrestricted CoT in real-world settings, emphasizing the need for controlled prompt engineering, tightly grounded reasoning, and active error mitigation.

Remediation Strategies

- Constrain reasoning trace length and reinforce tight input–output alignment.

- Employ domain-specific fine-tuning, calibration, and retrieval-augmented CoT pipelines.

- Use multi-step evaluation with LLM-as-a-judge or human-in-the-loop systems for error filtering and arbitration.

- Incorporate structured knowledge bases/graphs to anchor reasoning steps.
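As a rough illustration of the first strategy, the sketch below rejects a reasoning trace that exceeds a step budget or contains a step sharing no words with the source note. Plain word overlap is a deliberately crude stand-in for medical concept alignment, and the step budget, function names, and sample note are all assumptions rather than a method from the cited studies.

```python
from typing import List


def ungrounded_steps(steps: List[str], source_text: str, min_overlap: int = 1) -> List[int]:
    """Indices of steps that share fewer than min_overlap words with the source."""
    source_tokens = set(source_text.lower().split())
    flagged = []
    for index, step in enumerate(steps):
        overlap = len(set(step.lower().split()) & source_tokens)
        if overlap < min_overlap:
            flagged.append(index)
    return flagged


def accept_trace(steps: List[str], source_text: str, max_steps: int = 5) -> bool:
    """Accept a trace only if it is short enough and every step is grounded."""
    return len(steps) <= max_steps and not ungrounded_steps(steps, source_text)


# Invented clinical note and traces for demonstration.
note = "patient reports chest pain and elevated troponin"
grounded_trace = [
    "chest pain suggests a cardiac cause",
    "elevated troponin supports myocardial injury",
]
drifting_trace = ["rash indicates allergy"]

print(accept_trace(grounded_trace, note))
print(accept_trace(drifting_trace, note))
```

A rejected trace could be routed to a fallback, such as a direct answer without CoT or human review, instead of being shown to the user.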

6. Future Directions and Clinical Implications

Medical chain-of-thought reasoning is evolving rapidly, with several promising avenues:

- Extension from QA into comprehensive clinical decision support, triage, and treatment recommendation—always with rigorous clinical validation.

- Broader integration of multimodal (EHR, imaging, lab reports) and time-series data, leveraging curriculum learning with staged complexity (from textual, to visual, to composite clinical contexts).

- Advancement of error localization, self-correction, and reward-driven refinement frameworks to improve both the transparency and reliability of clinical outputs.

- Research into bias mitigation, safety frameworks, and the scalability of resource-efficient CoT adaptation for low-resource settings.

In sum, while medical CoT has demonstrated substantial potential for matching or exceeding clinical reasoning in controlled environments, its reliability is intricately tied to domain adaptation, data curation, prompt engineering, and systematic validation. As the field matures, transparent and stepwise medical reasoning will underpin the clinical trust, interpretability, and safety necessary for AI systems to be effectively and responsibly integrated into healthcare workflows.