Maximum Mean Discrepancy (MMD)

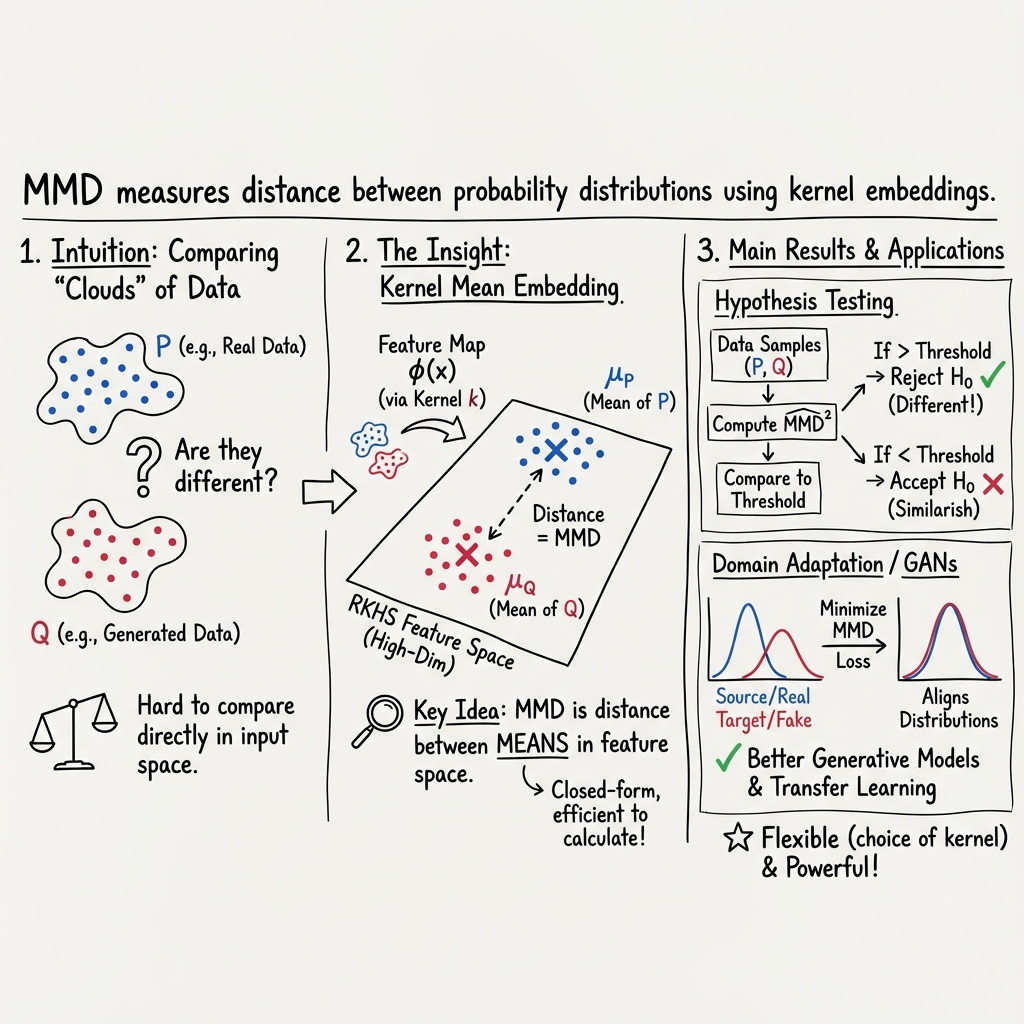

- Maximum Mean Discrepancy (MMD) is a nonparametric metric that quantifies differences between probability distributions by comparing their kernel mean embeddings in an RKHS.

- It is widely used for robust hypothesis testing, domain adaptation, and generative modeling through efficient, closed-form estimators and tailored kernel functions.

- Recent advancements enhance MMD's computational efficiency and extend its application to structured data and online settings, improving scalable inference and robust estimation.

Maximum Mean Discrepancy (MMD) is a nonparametric metric for quantifying the distance between probability distributions by embedding them into a reproducing kernel Hilbert space (RKHS). This framework enables robust hypothesis testing, domain adaptation, generative modeling, and distributional approximation across a wide range of applications in statistics and machine learning. MMD’s flexibility comes from two sources. The first is its kernel-based definition, which can be tailored to detect different kinds of differences between distributions. The second is its closed-form empirical estimators, which are efficient and interpretable to implement.

1. Mathematical Definition and Core Properties

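For a positive-definite kernel $k$ with associated RKHS $\mathcal{H}_k$, MMD is defined as the RKHS distance between the mean embeddings of two probability distributions $P$ and $Q$:

$$\mathrm{MMD}_k(P, Q) = \lVert \mu_P - \mu_Q \rVert_{\mathcal{H}_k},$$

where the mean embedding is $\mu_P = \mathbb{E}_{X \sim P}[k(X, \cdot)] \in \mathcal{H}_k$. Its square can be written in terms of pairwise kernel evaluations:

$$\mathrm{MMD}_k^2(P, Q) = \mathbb{E}[k(X, X')] + \mathbb{E}[k(Y, Y')] - 2\,\mathbb{E}[k(X, Y)]$$

for $X, X' \sim P$ and $Y, Y' \sim Q$ (all independent). For empirical datasets $\{x_i\}_{i=1}^{m} \sim P$ and $\{y_j\}_{j=1}^{n} \sim Q$, the unbiased estimator is:

$$\widehat{\mathrm{MMD}}_u^2 = \frac{1}{m(m-1)} \sum_{i \neq i'} k(x_i, x_{i'}) + \frac{1}{n(n-1)} \sum_{j \neq j'} k(y_j, y_{j'}) - \frac{2}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} k(x_i, y_j).$$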

When $k$ is characteristic, meaning that the mean-embedding map $P \mapsto \mu_P$ is injective, MMD is a true metric on the space of probability measures. The choice and properties of $k$ are fundamental to the metric’s ability to distinguish distributions (Simon-Gabriel et al., 2020).
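The unbiased estimator described above maps directly onto kernel Gram matrices. Below is a minimal NumPy sketch. It assumes a Gaussian (RBF) kernel with a fixed bandwidth, and the function names are illustrative rather than taken from any library.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gram matrix K[i, j] = exp(-||a_i - b_j||^2 / (2 * bandwidth^2))."""
    a = np.asarray(a, dtype=float).reshape(len(a), -1)  # treat 1-D input as (n, 1)
    b = np.asarray(b, dtype=float).reshape(len(b), -1)
    sq_dists = (a**2).sum(1)[:, None] + (b**2).sum(1)[None, :] - 2.0 * a @ b.T
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negatives caused by rounding
    return np.exp(-sq_dists / (2.0 * bandwidth**2))


def mmd2_unbiased(x, y, bandwidth=1.0):
    """Unbiased estimate of MMD^2 between samples x (m points) and y (n points)."""
    m, n = len(x), len(y)
    k_xx = gaussian_kernel(x, x, bandwidth)
    k_yy = gaussian_kernel(y, y, bandwidth)
    k_xy = gaussian_kernel(x, y, bandwidth)
    # Drop the diagonal (i == i', j == j') from the within-sample averages.
    term_xx = (k_xx.sum() - np.trace(k_xx)) / (m * (m - 1))
    term_yy = (k_yy.sum() - np.trace(k_yy)) / (n * (n - 1))
    return term_xx + term_yy - 2.0 * k_xy.mean()


rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(200, 2))
y_same = rng.normal(0.0, 1.0, size=(200, 2))
y_shift = rng.normal(1.0, 1.0, size=(200, 2))
print(mmd2_unbiased(x, y_same))   # near zero; may be slightly negative
print(mmd2_unbiased(x, y_shift))  # clearly positive
```

The diagonal terms are excluded, so the estimate can be slightly negative when the two samples come from the same distribution. This is expected behavior for an unbiased estimator.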

Key theoretical consequences include:

- If $\mathcal{H}_k \subseteq C_0$ (the RKHS functions vanish at infinity) and $k$ is continuous and integrally strictly positive definite (i.s.p.d.), then MMD metrizes weak convergence (Simon-Gabriel et al., 2020).

- On compact spaces, it suffices for $k$ to be continuous and characteristic for MMD to metrize weak convergence.
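The importance of the characteristic property discussed above shows up with a non-characteristic kernel. The linear kernel $k(x, y) = x^\top y$ gives $\mathrm{MMD}^2 = \lVert \mathbb{E}[X] - \mathbb{E}[Y] \rVert^2$, so it only compares means. The Gaussian kernel, by contrast, is characteristic on $\mathbb{R}^d$. The minimal sketch below assumes two 1-D Gaussians with equal means and different variances; the names are illustrative.

```python
import numpy as np


def linear_kernel(a, b):
    return a @ b.T  # not characteristic: MMD reduces to a difference of means


def gaussian_kernel(a, b, bandwidth=1.0):
    sq_dists = (a**2).sum(1)[:, None] + (b**2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def mmd2_unbiased(x, y, kernel):
    m, n = len(x), len(y)
    k_xx, k_yy, k_xy = kernel(x, x), kernel(y, y), kernel(x, y)
    term_xx = (k_xx.sum() - np.trace(k_xx)) / (m * (m - 1))
    term_yy = (k_yy.sum() - np.trace(k_yy)) / (n * (n - 1))
    return term_xx + term_yy - 2.0 * k_xy.mean()


rng = np.random.default_rng(1)
x = rng.normal(0.0, 1.0, size=(1000, 1))  # N(0, 1)
y = rng.normal(0.0, 2.0, size=(1000, 1))  # N(0, 4): same mean, larger variance

mmd_linear = mmd2_unbiased(x, y, linear_kernel)   # estimates ||E[X] - E[Y]||^2 = 0
mmd_gauss = mmd2_unbiased(x, y, gaussian_kernel)  # population value is about 0.094
print(mmd_linear, mmd_gauss)
```

The linear-kernel statistic stays near zero even though the distributions differ. The Gaussian-kernel statistic detects the difference in spread.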

2. Estimation, Hypothesis Testing, and Computational Aspects

MMD plays a prominent role in two-sample hypothesis testing. The null hypothesis $H_0: P = Q$ is tested against $H_1: P \neq Q$ by checking whether the sample MMD statistic exceeds a threshold.
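A common way to set that threshold is a permutation test. Under $H_0$ the pooled sample is exchangeable, so reshuffling the group labels simulates the null distribution of the statistic. The minimal sketch below assumes a Gaussian kernel, the unbiased statistic, and 500 random permutations. The Gram matrix is computed once and only re-indexed for each permutation. All names are illustrative.

```python
import numpy as np


def gaussian_gram(z, bandwidth=1.0):
    sq = (z**2).sum(1)[:, None] + (z**2).sum(1)[None, :] - 2.0 * z @ z.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))


def mmd2_from_gram(k, m):
    """Unbiased MMD^2 when the first m rows/columns of k belong to x and the rest to y."""
    n = k.shape[0] - m
    k_xx, k_yy, k_xy = k[:m, :m], k[m:, m:], k[:m, m:]
    term_xx = (k_xx.sum() - np.trace(k_xx)) / (m * (m - 1))
    term_yy = (k_yy.sum() - np.trace(k_yy)) / (n * (n - 1))
    return term_xx + term_yy - 2.0 * k_xy.mean()


def mmd_permutation_test(x, y, bandwidth=1.0, n_permutations=500, seed=0):
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    pooled = np.vstack([x, y])
    m = len(x)
    k = gaussian_gram(pooled, bandwidth)  # computed once; permutations only reindex it
    observed = mmd2_from_gram(k, m)
    rng = np.random.default_rng(seed)
    exceed = 0
    for _ in range(n_permutations):
        perm = rng.permutation(len(pooled))
        if mmd2_from_gram(k[np.ix_(perm, perm)], m) >= observed:
            exceed += 1
    # The add-one correction keeps the p-value valid (it is never exactly zero).
    p_value = (exceed + 1) / (n_permutations + 1)
    return observed, p_value


rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(100, 2))
y = rng.normal(1.0, 1.0, size=(100, 2))
stat, p = mmd_permutation_test(x, y)
print(stat, p)  # reject H0 at level alpha when p <= alpha
```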

- Under the null, the unbiased MMD statistic is a degenerate U-statistic whose distribution is typically intractable, so permutation or other resampling methods are commonly used to calibrate $p$-values (Shekhar et al., 2022).

- Recently proposed permutation-free alternatives, such as the cross-MMD statistic, split the data into independent halves. Under mild conditions the resulting statistic is asymptotically normal under the null. Its threshold therefore comes from a known distribution, and no permutations are needed (Shekhar et al., 2022).

- Standard MMD estimators have a cost that is quadratic in sample size. Separately, signature-MMD extends MMD to path space, using signature kernels to compare distributions of stochastic processes (Alden et al., 2 Jun 2025).
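The cross-MMD idea above can be sketched as follows. An empirical witness function is built from the first halves of the two samples and evaluated on the independent second halves. Conditional on the first halves, the resulting difference is a difference of two independent sample means, which is why a normal approximation is plausible. The studentization used here is an assumption for illustration: a plain two-sample standard error. Consult Shekhar et al. (2022) for the exact variance estimator and conditions. Names are hypothetical.

```python
import numpy as np
from statistics import NormalDist


def gaussian_kernel(a, b, bandwidth=1.0):
    sq = (a**2).sum(1)[:, None] + (b**2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))


def cross_mmd_test(x, y, bandwidth=1.0, alpha=0.05):
    """Permutation-free, one-sided two-sample test in the spirit of cross-MMD."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    x1, x2 = x[: len(x) // 2], x[len(x) // 2 :]
    y1, y2 = y[: len(y) // 2], y[len(y) // 2 :]
    # Witness f(z) = mean_i k(x1_i, z) - mean_j k(y1_j, z), built from the first halves
    # and evaluated only on the independent second halves.
    f_x2 = gaussian_kernel(x2, x1, bandwidth).mean(1) - gaussian_kernel(x2, y1, bandwidth).mean(1)
    f_y2 = gaussian_kernel(y2, x1, bandwidth).mean(1) - gaussian_kernel(y2, y1, bandwidth).mean(1)
    # Equals <mu_hat(x1) - mu_hat(y1), mu_hat(x2) - mu_hat(y2)> in the RKHS.
    diff = f_x2.mean() - f_y2.mean()
    # Assumed studentization: standard error of a difference of two sample means.
    se = np.sqrt(f_x2.var(ddof=1) / len(f_x2) + f_y2.var(ddof=1) / len(f_y2))
    stat = diff / se
    # One-sided: under H1 the inner product has positive mean (it equals MMD^2).
    return stat, bool(stat > NormalDist().inv_cdf(1.0 - alpha))


rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(200, 2))
y = rng.normal(1.0, 1.0, size=(200, 2))
stat, reject = cross_mmd_test(x, y)
print(stat, reject)
```

The design trade-off is that each half uses only part of the data. In exchange, the threshold is a single normal quantile rather than hundreds of recomputed statistics.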

Computational complexity considerations drive methodological innovation:

- Quadratic complexity is mitigated by methods such as the use of exponential windows for efficient online change detection (MMDEW) (Kalinke et al., 2022).

- Neural tangent kernel approximations (NTK-MMD) compute the statistic through online neural network training, with cost that scales linearly in sample size (Cheng et al., 2021).

- Sequential selection of points from a discrete candidate set, including mini-batch variants, enables scalable quantization, that is, approximation of a target measure by a small set of representative points (Teymur et al., 2020).
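The candidate-set quantization above can be illustrated with a minimal greedy sketch. Points are added one at a time from a fixed candidate grid, each time choosing the candidate that most reduces the (biased) MMD² between the uniformly weighted selected set and a sample from the target. Several choices are assumptions here: representing the target by samples, using a Gaussian kernel, and allowing repeated selections. The function name is hypothetical, and the cited work covers more general schemes.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    sq = (a**2).sum(1)[:, None] + (b**2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))


def greedy_mmd_quantization(candidates, target, n_points, bandwidth=1.0):
    """Greedily pick n_points rows of `candidates` to approximate the empirical `target`."""
    k_cc = gaussian_kernel(candidates, candidates, bandwidth)
    k_ct_mean = gaussian_kernel(candidates, target, bandwidth).mean(axis=1)
    selected = []
    sum_kss = 0.0                        # sum of k(s, s') over all ordered selected pairs
    sum_kcs = np.zeros(len(candidates))  # per candidate c: sum over selected s of k(c, s)
    sum_kst = 0.0                        # sum over selected s of mean_t k(s, t)
    for t in range(1, n_points + 1):
        # MMD^2(selected + {c}, target) for every c, minus the constant target-target term.
        new_kss = sum_kss + 2.0 * sum_kcs + np.diag(k_cc)
        new_kst = sum_kst + k_ct_mean
        objective = new_kss / t**2 - 2.0 * new_kst / t
        best = int(np.argmin(objective))
        selected.append(best)
        sum_kss, sum_kst = new_kss[best], new_kst[best]
        sum_kcs += k_cc[:, best]
    return candidates[selected]


rng = np.random.default_rng(0)
target = rng.normal(size=(2000, 1))
candidates = np.linspace(-4.0, 4.0, 201)[:, None]
points = greedy_mmd_quantization(candidates, target, n_points=10)
print(np.sort(points.ravel()))
```

Running sums make each greedy step cost one pass over the candidates, rather than recomputing the MMD from scratch for every candidate.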

3. Domain Adaptation, Robust Estimation, and Generative Modeling

MMD is widely adopted in unsupervised domain adaptation as a loss function to align source and target distributions:

- Traditional approaches minimize the MMD between source and target features in a latent or representation space, sometimes combining marginal and conditional distribution terms (Zhang et al., 2019).

- Recent work highlights that minimizing MMD can inadvertently increase intra-class dispersion and decrease inter-class separability, potentially harming classification performance. Discriminative MMD variants address this by balancing transferability and discriminability using explicit trade-off factors (Wang et al., 2020).

- DJP-MMD computes the discrepancy directly between the source and target joint probability distributions $P(X^s, Y^s)$ and $P(X^t, Y^t)$. This yields both stronger theoretical alignment and empirical improvements in classification tasks (Zhang et al., 2019).
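The marginal-plus-conditional objective from the first bullet can be sketched as follows. The sketch assumes fixed feature arrays, a Gaussian kernel, and target pseudo-labels. For simplicity, the true target labels stand in for the pseudo-labels here. This is not DJP-MMD, which works with joint distributions and adds a discriminative term. The function names are hypothetical. In an actual adaptation pipeline, the features come from a network trained to reduce this loss.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    sq = (a**2).sum(1)[:, None] + (b**2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))


def mmd2_biased(a, b, bandwidth=1.0):
    """V-statistic estimate of MMD^2; defined even for single-point samples."""
    return (gaussian_kernel(a, a, bandwidth).mean()
            + gaussian_kernel(b, b, bandwidth).mean()
            - 2.0 * gaussian_kernel(a, b, bandwidth).mean())


def marginal_plus_conditional_mmd(xs, ys, xt, yt_pseudo, n_classes, bandwidth=1.0):
    """Marginal MMD plus per-class MMD terms, using (pseudo-)labels on the target side."""
    loss = mmd2_biased(xs, xt, bandwidth)
    for c in range(n_classes):
        xs_c, xt_c = xs[ys == c], xt[yt_pseudo == c]
        if len(xs_c) > 0 and len(xt_c) > 0:  # skip classes absent from either domain
            loss += mmd2_biased(xs_c, xt_c, bandwidth)
    return loss


rng = np.random.default_rng(0)
ys = rng.integers(0, 2, size=300)
xs = rng.normal(size=(300, 2)) + 3.0 * ys[:, None]  # source features, class means 0 and 3
yt = rng.integers(0, 2, size=300)
xt_aligned = rng.normal(size=(300, 2)) + 3.0 * yt[:, None]
xt_shifted = xt_aligned + 2.0                       # simulated domain shift of +2 per feature
loss_aligned = marginal_plus_conditional_mmd(xs, ys, xt_aligned, yt, 2)
loss_shifted = marginal_plus_conditional_mmd(xs, ys, xt_shifted, yt, 2)
print(loss_aligned, loss_shifted)
```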

In parameter estimation and regression, MMD serves as a principled minimum distance criterion:

- The regMMD package implements estimation for a range of parametric and regression models by minimizing the MMD between the empirical distribution and the model, often yielding robust estimators in the presence of outliers (Alquier et al., 7 Mar 2025).

- Optimally weighted MMD estimators further improve sample complexity in likelihood-free inference with computationally expensive simulators (Bharti et al., 2023).
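Minimum-MMD estimation can be sketched for the simplest model. The sketch assumes a Gaussian location model $N(\theta, \sigma^2)$ with known $\sigma$ and a Gaussian kernel. For this model, the model-to-model term of MMD² does not depend on $\theta$, and the data-to-model term has a closed form. Minimizing MMD² therefore reduces to a weighted-mean fixed-point iteration in which distant points receive almost no weight, which illustrates the robustness to outliers mentioned above. The function name is hypothetical, and the code does not reproduce the regMMD package's interface.

```python
import numpy as np


def mmd_location_estimate(x, sigma=1.0, bandwidth=1.0, n_iter=200, tol=1e-10):
    """Minimum-MMD estimate of theta in N(theta, sigma^2), Gaussian kernel.

    With k(u, v) = exp(-(u - v)^2 / (2 * bandwidth^2)) and Y ~ N(theta, sigma^2):
      E k(x, Y) is proportional to exp(-(x - theta)^2 / (2 * s2)), s2 = bandwidth^2 + sigma^2,
      E k(Y, Y') does not depend on theta.
    Minimizing MMD^2 thus means maximizing sum_i exp(-(x_i - theta)^2 / (2 * s2)); its
    stationarity condition is the weighted-mean fixed point iterated below.
    """
    x = np.asarray(x, dtype=float)
    s2 = bandwidth**2 + sigma**2
    theta = float(np.median(x))  # robust starting point
    for _ in range(n_iter):
        w = np.exp(-(x - theta) ** 2 / (2.0 * s2))  # far-away points get weight close to 0
        new_theta = float(np.sum(w * x) / np.sum(w))
        converged = abs(new_theta - theta) < tol
        theta = new_theta
        if converged:
            break
    return theta


rng = np.random.default_rng(0)
clean = rng.normal(2.0, 1.0, size=200)
data = np.concatenate([clean, np.full(20, 50.0)])  # about 9% gross outliers at 50
theta_hat = mmd_location_estimate(data)
print(theta_hat, data.mean())  # the MMD estimate stays near 2; the sample mean is pulled up
```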

In generative modeling, MMD serves as the training objective between the real and generated sample distributions, as in generative moment matching networks (GMMNs) and MMD-GANs (Ni et al., 2024).
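A toy, one-dimensional version of the GMMN idea makes this objective concrete. The sketch assumes a linear generator $g(z) = a + b z$ with fixed standard-normal noise and a Gaussian kernel. The biased (V-statistic) MMD² between real and generated samples is differentiated by hand with respect to each generated point and chained back to $(a, b)$. Real GMMNs and MMD-GANs use neural generators and automatic differentiation. The learning rate, step count, and names here are illustrative.

```python
import numpy as np


def mmd2_and_grad(x, y, bandwidth=1.0):
    """Biased MMD^2 between 1-D samples x and y, and its gradient with respect to each y_j."""
    m, n = len(x), len(y)
    d_xy = x[:, None] - y[None, :]  # entry [i, j] = x_i - y_j
    d_yy = y[None, :] - y[:, None]  # entry [j, l] = y_l - y_j
    k_xx = np.exp(-(x[:, None] - x[None, :]) ** 2 / (2 * bandwidth**2))
    k_xy = np.exp(-d_xy**2 / (2 * bandwidth**2))
    k_yy = np.exp(-d_yy**2 / (2 * bandwidth**2))
    mmd2 = k_xx.mean() + k_yy.mean() - 2.0 * k_xy.mean()
    # For the Gaussian kernel, d k(u, v) / dv = k(u, v) * (u - v) / bandwidth^2.
    grad_repel = 2.0 / n**2 * (k_yy * d_yy).sum(axis=1) / bandwidth**2
    grad_attract = -2.0 / (m * n) * (k_xy * d_xy).sum(axis=0) / bandwidth**2
    return mmd2, grad_repel + grad_attract


rng = np.random.default_rng(0)
x_real = rng.normal(1.0, 0.5, size=300)  # "real" data drawn from N(1, 0.5^2)
z = rng.normal(size=300)                 # generator noise, held fixed for simplicity
a, b, lr = 0.0, 1.0, 0.5                 # generator g(z) = a + b * z
initial_loss, _ = mmd2_and_grad(x_real, a + b * z)
for _ in range(300):
    loss, grad_y = mmd2_and_grad(x_real, a + b * z)
    a -= lr * grad_y.sum()        # chain rule: dy_j / da = 1
    b -= lr * (grad_y * z).sum()  # chain rule: dy_j / db = z_j
print(initial_loss, loss, a, b)
```

The attraction term pulls generated points toward the data, and the repulsion term spreads them apart. Together they drive $(a, |b|)$ toward roughly $(1, 0.5)$.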

4. Extensions and Applications to Structured Data and Time Series

The kernel framework allows MMD to be adapted for non-vectorial data:

- The signature MMD uses the signature kernel to compare distributions over path space, such as for hypothesis testing on stochastic processes or time series (Alden et al., 2 Jun 2025).

- In natural language processing, MMD-based variable selection and scoring enable the detection and analysis of word sense changes over time from embedding distributions (Mitsuzawa, 2 Jun 2025).

- For hallucination detection in LLM outputs, MMD-Flagger traces the empirical MMD trajectory between deterministic outputs and stochastic samples under varying decoding temperatures, providing an effective criterion for flagging hallucinations (Mitsuzawa et al., 2 Jun 2025).

5. Regularization, Generalization Bounds, and Handling Data Imperfections

Higher-level analysis and methodological advances have further extended MMD's robustness:

- Gradient flow interpretations frame the minimization of MMD as a Wasserstein gradient flow, providing continuous-time perspectives for particle transport-based optimization, with rigorous convergence analysis and regularization strategies through noise injection (Arbel et al., 2019).

- Uniform concentration inequalities quantify the finite-sample estimation error of MMD even when optimized over rich neural network classes, providing generalization guarantees for fairness-constrained inference, generative model search, and GMMNs (Ni et al., 2024).

- Approaches to missing data derive explicit worst-case bounds for the MMD statistic under arbitrary missingness patterns, preserving Type I error control without requiring missing-at-random assumptions (Zeng et al., 2024).
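The gradient-flow view in the first bullet can be sketched as a discretized particle flow in one dimension. The sketch assumes a Gaussian kernel and a fixed step size. Each particle moves against the gradient of the current witness function $f(z) = \frac{1}{N}\sum_l k(z, y_l) - \frac{1}{M}\sum_i k(z, x_i)$. Following the noise-injection idea attributed above to Arbel et al. (2019), that gradient is evaluated at a noise-perturbed copy of each particle. The decaying noise schedule and all names are assumptions for illustration, not the schedule analyzed in the paper.

```python
import numpy as np


def gram(u, v, bandwidth=1.0):
    """Gaussian kernel matrix between 1-D arrays u and v."""
    return np.exp(-(u[:, None] - v[None, :]) ** 2 / (2 * bandwidth**2))


def mmd2_biased(u, v, bandwidth=1.0):
    return gram(u, u, bandwidth).mean() + gram(v, v, bandwidth).mean() - 2.0 * gram(u, v, bandwidth).mean()


def witness_grad(z, particles, target, bandwidth=1.0):
    """Gradient at each point z of f(z) = mean_l k(z, y_l) - mean_i k(z, x_i)."""
    d_p = particles[None, :] - z[:, None]  # y_l - z
    d_t = target[None, :] - z[:, None]     # x_i - z
    # For the Gaussian kernel, d k(z, v) / dz = k(z, v) * (v - z) / bandwidth^2.
    g_p = (gram(z, particles, bandwidth) * d_p).mean(axis=1)
    g_t = (gram(z, target, bandwidth) * d_t).mean(axis=1)
    return (g_p - g_t) / bandwidth**2


def mmd_flow(particles, target, n_steps=300, step_size=0.5, noise0=0.5, seed=0):
    """Euler steps of the MMD flow, with the gradient evaluated at noise-perturbed positions."""
    rng = np.random.default_rng(seed)
    y = np.array(particles, dtype=float)
    for t in range(n_steps):
        noise_level = noise0 / np.sqrt(1.0 + t)  # assumed decaying schedule
        noisy = y + noise_level * rng.normal(size=y.shape)
        y = y - step_size * witness_grad(noisy, y, target)  # witness built from unperturbed y
    return y


rng = np.random.default_rng(0)
target = rng.normal(1.0, 0.5, size=300)
start = rng.normal(0.0, 1.0, size=100)
final = mmd_flow(start, target)
initial_mmd, final_mmd = mmd2_biased(start, target), mmd2_biased(final, target)
print(initial_mmd, final_mmd)
```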

6. Theoretical and Empirical Impact

MMD enjoys widespread use due to its strong theoretical properties and practical efficiency:

- The choice of kernel critically determines the test's power, convergence behavior, and metric properties (Simon-Gabriel et al., 2020).

- Under appropriate kernel smoothness and bandwidth scaling, MMD-based tests attain minimax optimality for detecting local alternatives in high dimensions (Shekhar et al., 2022).

- Adaptive kernel learning and power maximization can substantially improve sensitivity in applications such as adversarial attack detection (Gao et al., 2020).

- Empirical studies across machine learning and linguistics, including online change detection, word sense evolution, robust regression, and multi-objective optimization, repeatedly validate MMD’s utility and interpretability.
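The cited work on adaptive kernel learning trains richer kernels. The sketch below swaps in a much simpler, widely used scheme from the kernel two-sample testing literature. It starts from the median heuristic for a Gaussian bandwidth, then selects among scaled candidates by maximizing the ratio of the linear-time MMD² estimate to its standard deviation, a proxy for test power. The candidate grid and all names are assumptions. Selection is done on a held-out split, because choosing the kernel on the same data used for testing would invalidate the test's Type I error control.

```python
import numpy as np


def median_heuristic(z):
    """Median pairwise Euclidean distance in the pooled sample (a common default bandwidth)."""
    z = np.asarray(z, dtype=float).reshape(len(z), -1)
    d = np.sqrt(((z[:, None, :] - z[None, :, :]) ** 2).sum(axis=-1))
    return float(np.median(d[np.triu_indices(len(z), k=1)]))


def linear_time_h(x, y, bandwidth):
    """Per-pair terms of the linear-time MMD^2 estimate; their mean is unbiased for MMD^2."""
    n = (min(len(x), len(y)) // 2) * 2
    x1, x2, y1, y2 = x[0:n:2], x[1:n:2], y[0:n:2], y[1:n:2]

    def k(a, b):  # Gaussian kernel evaluated row by row on paired points
        return np.exp(-((a - b) ** 2).sum(axis=1) / (2.0 * bandwidth**2))

    return k(x1, x2) + k(y1, y2) - k(x1, y2) - k(x2, y1)


def select_bandwidth(x, y, candidates):
    """Pick the bandwidth maximizing mean(h) / std(h), a proxy for the test's power."""
    scores = []
    for bw in candidates:
        h = linear_time_h(x, y, bw)
        scores.append(h.mean() / (h.std(ddof=1) + 1e-12))
    return candidates[int(np.argmax(scores))], scores


rng = np.random.default_rng(0)
x = rng.normal(size=(2000, 2))
y = rng.normal(size=(2000, 2)) * np.array([1.5, 1.0])  # differs only in one coordinate's spread
x_sel, x_test = x[:1000], x[1000:]  # select the kernel on one split ...
y_sel, y_test = y[:1000], y[1000:]  # ... and run the actual test on the other
bw0 = median_heuristic(np.vstack([x_sel[:200], y_sel[:200]]))
candidates = bw0 * np.array([0.25, 0.5, 1.0, 2.0, 4.0])
best_bw, scores = select_bandwidth(x_sel, y_sel, candidates)
print(bw0, best_bw)
```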

7. Current Directions and Open Challenges

Ongoing research focuses on:

- Improving the computational and statistical efficiency of MMD estimators, especially in high-dimensional and streaming settings (Cheng et al., 2021, Kalinke et al., 2022, Bharti et al., 2023).

- Extending MMD to complex data types, such as paths, graphs, and structured objects (Alden et al., 2 Jun 2025).

- Developing principled methods for kernel selection, regularization, and power maximization to adapt MMD for specific applications (Gao et al., 2020, Ni et al., 2024).

- Tightening theoretical guarantees on generalization, concentration, and adaptivity under imperfect or missing data (Zeng et al., 2024, Ni et al., 2024).

- Integrating MMD-based discrepancy minimization in hybrid optimization and inference frameworks, such as combining Newton-type refinement and evolutionary algorithms in multi-objective optimization (Wang et al., 20 May 2025).

The maturation of MMD methodology continues to broaden its adoption in statistical learning, robust inference, and beyond, motivated by ongoing refinements in both statistical theory and computational practice.