Manifold-Constrained Hyper-Connections (mHC)

- mHC is a framework that applies manifold constraints to multi-stream residual connections, preserving identity mapping and ensuring stable gradient flows.

- It projects the residual mixing matrices onto the Birkhoff polytope with the Sinkhorn-Knopp algorithm, which enforces norm non-expansiveness and closure under composition across layers.

- Empirical results demonstrate that mHC improves training stability, accuracy, and memory efficiency compared to unconstrained hyper-connections.

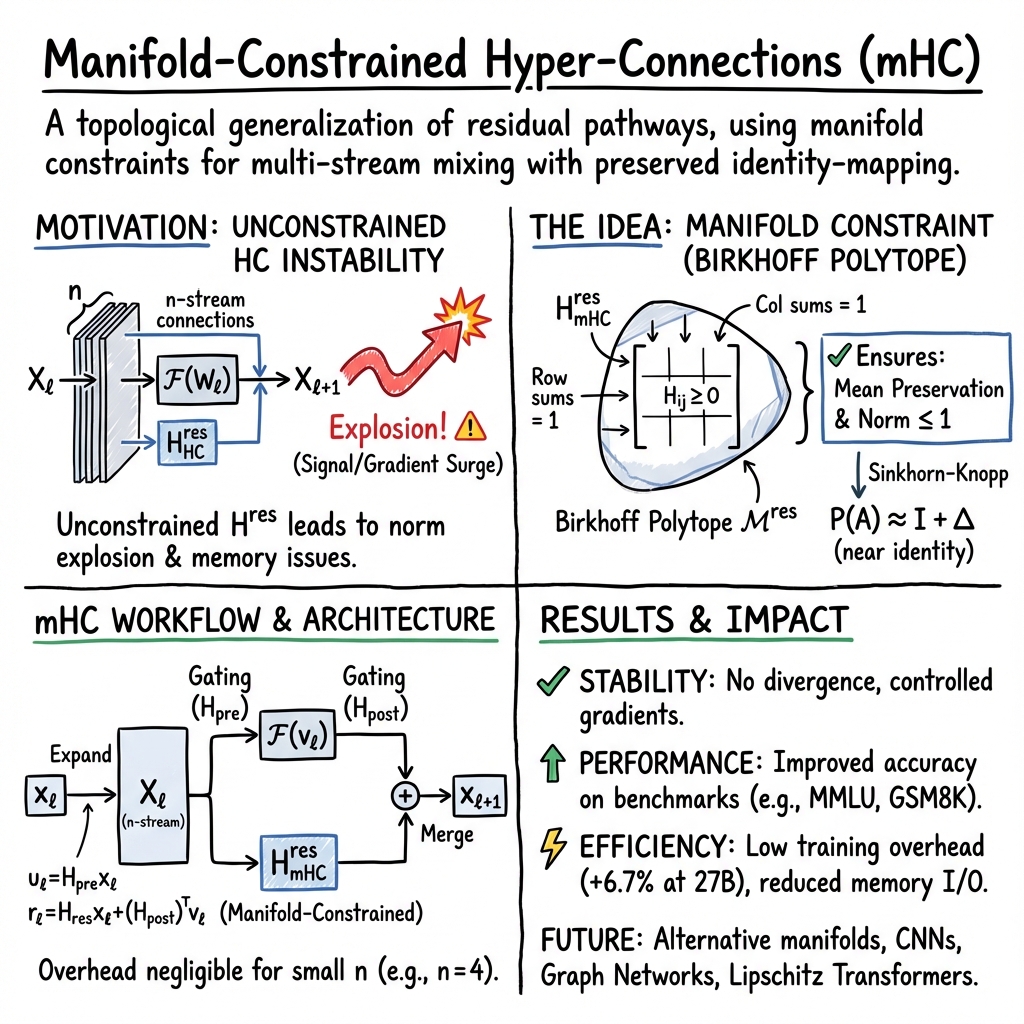

Manifold-Constrained Hyper-Connections (mHC) generalize the residual pathways of deep neural networks to multiple mixed streams while preserving the identity-mapping property. As an extension of the Hyper-Connections (HC) architecture, mHC imposes a manifold constraint on the residual transformation matrices: each one is projected onto the Birkhoff polytope (the set of doubly stochastic matrices). This constraint provides compositional norm bounds and mean preservation, mitigating the gradient pathologies and memory inefficiencies associated with unconstrained multi-stream residuals. The authors report improved training stability, downstream accuracy, and system efficiency at scale, and position mHC as a flexible architectural primitive for next-generation foundation models (Xie et al., 31 Dec 2025).

1. Motivation and Historical Context

Residual connections, notably ResNet-style update rules of the form $x_{l+1} = x_l + \mathcal{F}(x_l, W_l)$, are foundational in deep learning due to their identity-mapping property, which guarantees feature-mean preservation and stabilizes deep gradient flows. Hyper-Connections (HC) [Zhu et al. 2024] generalize this paradigm by expanding the residual stream from dimension $C$ to $n \times C$ and introducing additional learnable mappings: $H^{\mathrm{pre}}$, $H^{\mathrm{post}}$, and $H^{\mathrm{res}}$. However, unconstrained HC turns the residual pathway into a product $\prod_{l} H^{\mathrm{res}}_{l}$ over layers, which no longer guarantees norm- or mean-preserving behavior. This can result in signal explosion or vanishing, severe gradient surges, and prohibitive memory I/O costs, particularly for large models (e.g., 27B parameters). This led to the search for a manifold-based constraint that restores the stability of classical residual architectures in the richer multi-stream setting (Xie et al., 31 Dec 2025).
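The depth-wise product described above can be illustrated with a toy experiment. The sketch below is not the authors' measurement: the helper names (`composite_gain`, `perturbed_identity`), the depth of 48, the noise scale, and the choice of non-negative noise are all assumptions made for illustration. It compares the gain (largest absolute row sum) of a stack of exact identity residuals with a stack of slightly perturbed, unconstrained ones.

```python
import random

def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def max_abs_row_sum(m):
    """Induced infinity-norm: the largest absolute row sum."""
    return max(sum(abs(v) for v in row) for row in m)

def composite_gain(layers):
    """Gain of the product of all per-layer residual matrices."""
    n = len(layers[0])
    prod = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for h in layers:
        prod = matmul(h, prod)
    return max_abs_row_sum(prod)

def perturbed_identity(n, scale, rng):
    """Hypothetical unconstrained residual matrix: identity plus noise in [0, scale]."""
    return [[(1.0 if i == j else 0.0) + rng.uniform(0.0, scale)
             for j in range(n)] for i in range(n)]

rng = random.Random(0)
n, depth = 4, 48  # illustrative values, not taken from the source
identity_layers = [perturbed_identity(n, 0.0, rng) for _ in range(depth)]
free_layers = [perturbed_identity(n, 0.1, rng) for _ in range(depth)]

print("identity residual gain:", composite_gain(identity_layers))
print("unconstrained gain:    ", composite_gain(free_layers))
```

Even a small per-layer deviation compounds multiplicatively with depth, which is the failure mode the manifold constraint is meant to remove.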

2. Manifold Projection and Identity Restoration

mHC addresses the instability in HC by constraining residual connection matrices to the Birkhoff polytope $\mathcal{B}_n$, the convex hull of all $n \times n$ permutation matrices (equivalently, the set of $n \times n$ doubly stochastic matrices).

Key Manifold Properties

- Norm non-expansiveness: Any doubly stochastic $H \in \mathcal{B}_n$ satisfies $\|H\|_2 \le 1$, ensuring no signal amplification through the residual path.

- Compositional closure: The Birkhoff polytope $\mathcal{B}_n$ is closed under matrix multiplication, so stacked layers preserve the manifold structure.

- Mean preservation: Every row and every column of $H$ sums to one, so mixing leaves the mean across streams unchanged and the identity-mapping property is restored exactly.
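These three properties can be checked numerically on a small example. The sketch below is an illustration only: it builds doubly stochastic matrices as convex combinations of random permutation matrices (the helper `random_doubly_stochastic` and all sizes are assumptions, not part of mHC itself), multiplies two of them, and inspects the product.

```python
import math
import random

def random_doubly_stochastic(n, terms, rng):
    """Convex combination of `terms` random n x n permutation matrices."""
    weights = [rng.random() + 0.01 for _ in range(terms)]
    total = sum(weights)
    out = [[0.0] * n for _ in range(n)]
    for w in weights:
        perm = list(range(n))
        rng.shuffle(perm)
        for i, j in enumerate(perm):
            out[i][j] += w / total  # weights are normalized to sum to one
    return out

def matmul(a, b):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def matvec(m, x):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def l2(x):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(v * v for v in x))

rng = random.Random(0)
a = random_doubly_stochastic(4, 5, rng)
b = random_doubly_stochastic(4, 5, rng)
ab = matmul(a, b)  # closure: the product should again be doubly stochastic

x = [3.0, -1.0, 0.5, 2.0]  # one channel observed across 4 streams
y = matvec(ab, x)

print("row sums:", [round(sum(r), 12) for r in ab])
print("col sums:", [round(sum(c), 12) for c in zip(*ab)])
print("mean before/after:", sum(x) / 4, sum(y) / 4)
print("norm before/after:", l2(x), l2(y))
```

The row and column sums of the product stay at one, the stream mean is unchanged, and the Euclidean norm of the mixed vector does not exceed that of the input.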

Sinkhorn-Knopp Projection

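Given an unconstrained $\tilde{H}^{\mathrm{res}} \in \mathbb{R}^{n \times n}$, projection is accomplished by the Sinkhorn-Knopp algorithm:

- Set $M^{(0)} = \exp(\tilde{H}^{\mathrm{res}})$ (entrywise) for positivity.

- Alternate row- and column-normalization for a fixed number of iterations $t_{\max}$: $M^{(t)} = \mathcal{T}_r(\mathcal{T}_c(M^{(t-1)}))$, where $\mathcal{T}_c$ and $\mathcal{T}_r$ rescale each column and each row to sum to one.

- $H^{\mathrm{res}} = M^{(t_{\max})}$ approximates a doubly stochastic matrix.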

Near the identity, $H^{\mathrm{res}} \approx I + \Delta$ with $\Delta$ small, so mHC realizes "identity plus small perturbation" and remains non-expansive across layers.
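The projection steps can be sketched in a few lines of plain Python. This is a minimal illustration, not the fused kernel described later: the function name `sinkhorn_knopp`, the default of 20 iterations, the column-then-row ordering, and the max-shift before exponentiation (which only rescales the matrix by a constant and so does not change the result) are assumptions of this sketch.

```python
import math

def sinkhorn_knopp(h_raw, num_iters=20):
    """Approximately map a raw n x n matrix to a doubly stochastic one."""
    # Entrywise exponential makes every entry strictly positive.
    # Subtracting the global max avoids overflow for large inputs.
    shift = max(max(row) for row in h_raw)
    m = [[math.exp(v - shift) for v in row] for row in h_raw]
    for _ in range(num_iters):
        # Column normalization: every column sums to one.
        col_tot = [sum(c) for c in zip(*m)]
        m = [[v / col_tot[j] for j, v in enumerate(row)] for row in m]
        # Row normalization: every row sums to one.
        row_tot = [sum(row) for row in m]
        m = [[v / row_tot[i] for v in row] for i, row in enumerate(m)]
    return m

# Hypothetical raw logits with a dominant diagonal (near-identity mixing).
h_raw = [[1.0, 0.2, -0.3, 0.0],
         [0.1, 1.0, 0.4, -0.2],
         [-0.5, 0.3, 1.0, 0.1],
         [0.0, -0.1, 0.2, 1.0]]
h_res = sinkhorn_knopp(h_raw)

print("row sums:", [sum(r) for r in h_res])
print("col sums:", [sum(c) for c in zip(*h_res)])
```

Because the loop ends on a row normalization, row sums are one up to rounding, while column sums are only approximately one; the gap shrinks as the iteration count grows.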

3. mHC Architecture and Algorithmic Workflow

In a typical pre-norm Transformer block, the scalar residual gate is replaced by an $n$-stream residual structure with two gating maps and manifold-constrained mixing:

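- Input Expansion: Duplicate the input $x \in \mathbb{R}^{C}$ to form $X \in \mathbb{R}^{n \times C}$, then flatten as $\mathrm{vec}(X) \in \mathbb{R}^{nC}$.

- Gate and Residual Map Generation: Compute the gates $H^{\mathrm{pre}}, H^{\mathrm{post}} \in \mathbb{R}^{1 \times n}$ and the unconstrained residual map $\tilde{H}^{\mathrm{res}} \in \mathbb{R}^{n \times n}$ via linear projections and scaling.

- Manifold Projection and Application: $H^{\mathrm{res}} = \mathrm{SinkhornKnopp}(\tilde{H}^{\mathrm{res}})$, which is then applied to the streams as $H^{\mathrm{res}} X$.

- Update Path: $h = H^{\mathrm{pre}} X$; $y = \mathcal{F}(h)$; $X' = H^{\mathrm{res}} X + (H^{\mathrm{post}})^{\top} y$.

- Stream Merge: Collapse $X'$ back to a single $C$-dimensional output, typically by averaging or projection.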
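The workflow above can be sketched as a single function. This is a simplified illustration under stated assumptions, not the authors' implementation: the gates `h_pre` and `h_post` and the mixing matrix `h_res` are passed in as fixed values rather than generated from the input, `h_res` is assumed to be already doubly stochastic, and the names `mhc_layer`, `merge_streams`, and `toy_block` are hypothetical.

```python
def mhc_layer(streams, h_pre, h_post, h_res, block):
    """One multi-stream residual update: X' = H_res X + H_post^T F(H_pre X)."""
    n, c = len(streams), len(streams[0])
    # Pre-gate: weighted sum of the n streams gives the block input (length c).
    block_in = [sum(h_pre[i] * streams[i][k] for i in range(n)) for k in range(c)]
    block_out = block(block_in)
    updated = []
    for i in range(n):
        # Residual path: mix streams with row i of the doubly stochastic matrix.
        mixed = [sum(h_res[i][j] * streams[j][k] for j in range(n)) for k in range(c)]
        # Post-gate: write the block output back into stream i.
        updated.append([mixed[k] + h_post[i] * block_out[k] for k in range(c)])
    return updated

def merge_streams(streams):
    """Collapse n streams to one vector by averaging."""
    n = len(streams)
    return [sum(col) / n for col in zip(*streams)]

def toy_block(v):
    """Stand-in for an attention or feed-forward block."""
    return [0.5 * t for t in v]

n = 4
x = [1.0, -2.0, 0.5]
streams = [list(x) for _ in range(n)]  # input expansion by duplication
h_pre = [0.25] * n                     # hypothetical gate values
h_post = [1.0] * n
h_res = [[0.7, 0.1, 0.1, 0.1],
         [0.1, 0.7, 0.1, 0.1],
         [0.1, 0.1, 0.7, 0.1],
         [0.1, 0.1, 0.1, 0.7]]

out = merge_streams(mhc_layer(streams, h_pre, h_post, h_res, toy_block))
print(out)
```

With these toy values the merged output is 1.5 times `x` up to floating-point rounding, and replacing `toy_block` with a function that returns zeros leaves every stream unchanged, which is the identity-mapping behavior the constraint is meant to preserve.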

The following table summarizes computational steps per layer:

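| Step | Operation | Output Dimension |
|---|---|---|
| Input Expansion | $x \to X$ | $n \times C$ |
| Gate Projections | $H^{\mathrm{pre}}$, $H^{\mathrm{post}}$, $\tilde{H}^{\mathrm{res}}$ | $1 \times n$, $1 \times n$, $n \times n$ |
| Sinkhorn Projection | $\tilde{H}^{\mathrm{res}} \to H^{\mathrm{res}}$ | $n \times n$ |
| Residual Application | $H^{\mathrm{res}} X + (H^{\mathrm{post}})^{\top} \mathcal{F}(H^{\mathrm{pre}} X)$ | $n \times C$ |
| Merge | $X' \to x'$ | $C$ |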

For small $n$ (e.g., $n = 4$), the computational overhead of the manifold projection is negligible relative to the main block (the attention or feed-forward function $\mathcal{F}$).

4. Efficiency Engineering and System-Level Integration

mHC is implemented with several optimizations to control run-time overhead and peak memory usage:

- Kernel fusion: RMSNorm, linear projections, Sigmoid, and scaling are fused into custom kernels, reducing memory traffic.

- Mixed precision: Activations in bfloat16, weight multiplications in tfloat32, and accumulator/intermediate computations in FP32.

- In-place Sinkhorn: Sinkhorn iterations operate entirely in-register for $n \times n$ matrices, eliminating global memory access.

- Activation recomputation: Intermediate states produced by the mHC kernels are discarded after the forward pass and recomputed only as needed.

- DualPipe pipeline parallelism: Residual stream kernels are overlapped on a high-priority CUDA stream, with recompute blocks aligned to pipeline boundaries.

Notably, for $n = 4$, end-to-end training time increases by only $6.7\%$ for a 27B-parameter Transformer relative to baseline. Memory I/O per apply kernel is also reduced; before optimization the kernel issued $3nC$ writes.

5. Empirical Validation

Extensive experiments were performed on Mixture-of-Experts Transformers (DeepSeek-V3 backbone) at 3B, 9B, and 27B scale, with stream width $n = 4$. Key findings include:

- Stability: On 27B models, mHC maintains a stable loss gap (a final loss about $0.021$ below the baseline), while HC diverges at 12k steps. Gradient norms for mHC remain close to baseline, whereas HC exhibits large spikes.

- Amax Gain Magnitude: mHC keeps single-layer gains close to $1$, with aggregate gains of roughly $1.6$, while unconstrained HC can reach roughly $3000$.

- Benchmarks: Across eight zero- and few-shot benchmarks, mHC outperforms the baseline on all eight and outperforms HC on seven (HC is slightly ahead on MATH):

| Benchmark (shots) | Baseline | +HC | +mHC |
|---|---|---|---|
| BBH (3-shot) | 43.8 | 48.9 | 51.0 |
| DROP (3-shot) | 47.0 | 51.6 | 53.9 |
| GSM8K (8-shot) | 46.7 | 53.2 | 53.8 |
| HellaSwag (10-shot) | 73.7 | 74.3 | 74.7 |
| MATH (4-shot) | 22.0 | 26.4 | 26.0 |
| MMLU (5-shot) | 59.0 | 63.0 | 63.4 |
| PIQA (0-shot) | 78.5 | 79.9 | 80.5 |
| TriviaQA (5-shot) | 54.3 | 56.3 | 57.6 |

Compute scaling curves (3B→9B→27B) show the loss advantage of mHC is preserved with increasing model size, and token scaling (for fixed 1T tokens) shows mHC ahead throughout training (Xie et al., 31 Dec 2025).

6. Theoretical Foundations and Future Extensions

Theoretical Guarantees

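- Norm non-expansiveness: For $H \in \mathcal{B}_n$, $\|H\|_2 \le 1$. This spectral bound rules out signal and gradient explosion along the residual path, and because $H\mathbf{1} = \mathbf{1}$ the mean component passes through unattenuated.

- Compositional stability: The manifold’s closure under multiplication ensures whole-network stability for extended depth.

- Birkhoff polytope geometry: Each $H \in \mathcal{B}_n$ is a convex combination of permutation matrices (Birkhoff-von Neumann theorem), enabling controlled, unbiased mixing of residual streams.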

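Sketch of spectral bound: For any non-negative, doubly stochastic $H$, the maximum singular value satisfies $\sigma_{\max}(H) \le 1$. This follows from Perron–Frobenius theory and the structure of stochastic matrices: $H^{\top} H$ is itself doubly stochastic, so its spectral radius is $1$.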
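A more elementary route to the same bound, given here as a short worked derivation rather than as the authors' own proof, uses the standard inequality between induced matrix norms. Here $\|H\|_1$ and $\|H\|_\infty$ denote the largest absolute column sum and row sum, both equal to one for a doubly stochastic matrix, and $\mathbf{1}$ is the all-ones vector:

$$
\|H\|_2^2 \;\le\; \|H\|_1 \, \|H\|_\infty \;=\; 1 \cdot 1 \;=\; 1,
\qquad
H\mathbf{1} = \mathbf{1} \;\Rightarrow\; \sigma_{\max}(H) \;\ge\; \frac{\|H\mathbf{1}\|_2}{\|\mathbf{1}\|_2} \;=\; 1 .
$$

Together the two inequalities give $\sigma_{\max}(H) = 1$ exactly: the residual mixing never amplifies a signal, and the uniform (mean) direction is passed through without attenuation.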

Extension Directions

- Alternative manifolds: Orthogonal ($O(n)$), Stiefel, or symplectic manifolds could be used to enforce stricter or alternative invariances (e.g., energy preservation, exact spectral norm).

- mHC in CNNs: Applying $n$-stream residual mixing to widen ResNet planes is a plausible extension.

- Graph networks: Node-feature mixing with stochastic adjacency constraints may benefit from mHC-style design.

- Lipschitz Transformers: Combining mHC with scaled-dot-product attention to bound layerwise Lipschitz constants is anticipated as a future pursuit.

The mHC framework demonstrates that constraining multi-stream residuals to a manifold yields provable stability, transferability across scales, and performance gains, and it serves as a foundation for further theoretical and architectural work on topological model design (Xie et al., 31 Dec 2025).