Deep-Learning Centering Procedure

- The deep-learning centering procedure is a reparameterization method that subtracts learned or empirical offsets to center activations, yielding a better-conditioned optimization landscape.

- It refines energy functions and gradient computations in models like deep Boltzmann machines, aligning updates closer to the natural gradient for accelerated convergence.

- Empirical results indicate improved training stability, faster convergence, and enhanced hierarchical feature learning in various architectures including autoencoders and recurrent networks.

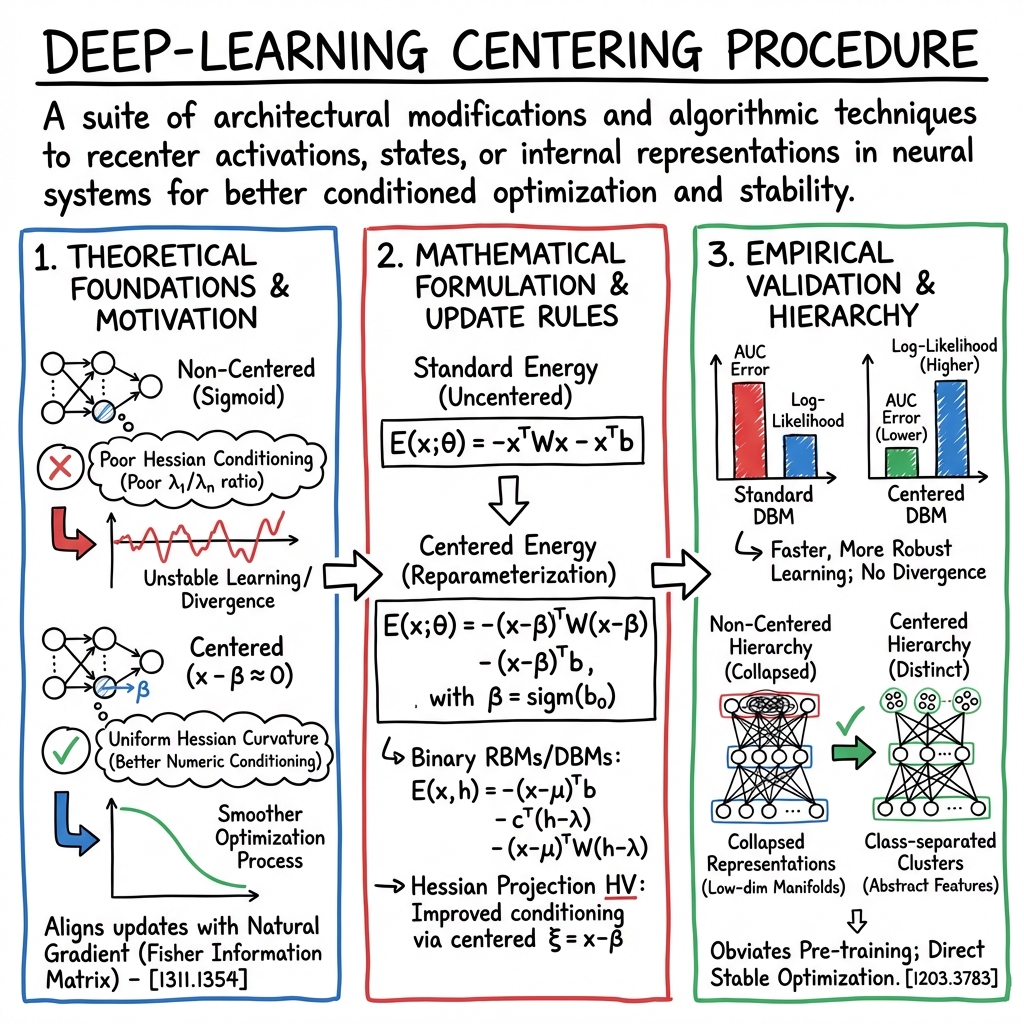

The deep-learning centering procedure refers to a suite of architectural modifications and algorithmic techniques designed to recenter activations, states, or internal representations in neural systems. Originally developed for deep Boltzmann machines, the procedure has since been extended to many other neural architectures. The central concept is to subtract offsets (either learned or empirically determined) from states or activations, to reparameterize energy functions, and to construct update rules such that the outputs are centered around zero. This leads to better-conditioned optimization landscapes, increased learning stability, and an improved representational hierarchy in data-driven models. The following sections detail the theoretical principles, mathematical formulation, empirical validation, and implications of deep-learning centering procedures, emphasizing findings in deep Boltzmann machines and broader neural network models.

1. Theoretical Foundations and Motivation

The motivation for centering procedures in deep learning derives from the poor conditioning of the Hessian in standard deep Boltzmann machine (DBM) training, which often impairs gradient-based optimization and leads to unstable learning or divergence. Classical activation functions (e.g., sigmoid) are inherently positive, resulting in non-centered states. By recentering the outputs to zero, operationalized as $x - \beta$, where $\beta$ is an offset, the curvature of the loss surface (specifically the Hessian of the log-likelihood) becomes more uniform. This reduces the ratio of the largest to the smallest eigenvalue (the condition number), improves numerical conditioning, and smooths the optimization process.
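The conditioning argument can be illustrated on a simplified proxy. The sketch below does not compute a DBM Hessian; it assumes a linear unit with squared loss, for which the Hessian with respect to the weights is the second-moment matrix of the inputs, and compares its condition number before and after subtracting an empirical offset. The toy data, sizes, and the names `beta` and `second_moment_condition_number` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 5000 samples of 10 sigmoid activations with a positive mean.
pre_activations = rng.normal(loc=2.0, scale=1.0, size=(5000, 10))
activations = 1.0 / (1.0 + np.exp(-pre_activations))


def second_moment_condition_number(x):
    """Ratio of largest to smallest eigenvalue of the second-moment matrix x^T x / n."""
    moment = x.T @ x / x.shape[0]
    eigenvalues = np.linalg.eigvalsh(moment)  # returned in ascending order
    return eigenvalues[-1] / eigenvalues[0]


beta = activations.mean(axis=0)  # empirical offset, one entry per unit
cond_raw = second_moment_condition_number(activations)
cond_centered = second_moment_condition_number(activations - beta)

print(f"uncentered: {cond_raw:.1f}, centered: {cond_centered:.2f}")
```

In this toy setting the non-zero mean contributes a large rank-one term to the second-moment matrix, which inflates the largest eigenvalue; subtracting the offset removes that term.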

Centering is purely a reparameterization: centered DBMs and standard DBMs describe identical probability distributions, but in different coordinates. The analytical framework of Melchior et al. (2013) establishes invariance under joint flips of data and offsets, so that learning does not depend on how the data are coded. It also demonstrates that centering aligns update directions more closely with the natural gradient, which rescales the ordinary gradient by the inverse Fisher information matrix and so gives the steepest direction on the statistical manifold.
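The reparameterization claim can be checked by brute force on a tiny model. The sketch below assumes the centered binary RBM energy $E(x, h) = -(x - \mu)^\top b - c^\top (h - \lambda) - (x - \mu)^\top W (h - \lambda)$ with visible offsets `mu` and hidden offsets `lam`; expanding the products shows that an uncentered RBM with the same weights and biases `b - W @ lam` and `c - W.T @ mu` differs only by a constant in energy. All parameter values are random placeholders.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
n_visible, n_hidden = 3, 2

# Hypothetical centered-RBM parameters and offsets.
W = rng.normal(size=(n_visible, n_hidden))
b = rng.normal(size=n_visible)
c = rng.normal(size=n_hidden)
mu = rng.uniform(size=n_visible)   # visible offsets
lam = rng.uniform(size=n_hidden)   # hidden offsets


def centered_energy(x, h):
    return -(x - mu) @ b - c @ (h - lam) - (x - mu) @ W @ (h - lam)


# Equivalent uncentered biases: the offsets are absorbed into the bias terms.
b_std = b - W @ lam
c_std = c - W.T @ mu


def standard_energy(x, h):
    return -x @ b_std - c_std @ h - x @ W @ h


def joint_distribution(energy):
    """Exact joint distribution over all binary (x, h) states by enumeration."""
    states = [
        (np.array(x, dtype=float), np.array(h, dtype=float))
        for x in itertools.product([0, 1], repeat=n_visible)
        for h in itertools.product([0, 1], repeat=n_hidden)
    ]
    unnormalized = np.array([np.exp(-energy(x, h)) for x, h in states])
    return unnormalized / unnormalized.sum()


p_centered = joint_distribution(centered_energy)
p_standard = joint_distribution(standard_energy)
print(np.max(np.abs(p_centered - p_standard)))
```

The two distributions agree up to floating-point error, so any difference between centered and uncentered training comes from the gradient dynamics, not from the model class.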

2. Mathematical Formulation and Update Rules

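The mathematical implementation of centering involves rewriting the energy function and associated gradients. For a Boltzmann machine with binary state vector $x$, symmetric weight matrix $W$ with zero diagonal, bias vector $b$, and offset vector $\beta$:

- Standard Energy (Uncentered): $E(x) = -\frac{1}{2} x^\top W x - x^\top b$

- Centered Energy: $E(x) = -\frac{1}{2} \xi^\top W \xi - \xi^\top b$, with $\xi = x - \beta$

Conditional probabilities and gradients are described as:

- For a single unit, $p(x_i = 1 \mid x_{-i}) = \sigma\big(b_i + \sum_{j \neq i} W_{ij} \xi_j\big)$, where $\sigma$ is the logistic sigmoid

- For the weights, $\partial \log p / \partial W = \langle \xi \xi^\top \rangle_{\text{data}} - \langle \xi \xi^\top \rangle_{\text{model}}$

- For the bias, $\partial \log p / \partial b = \langle \xi \rangle_{\text{data}} - \langle \xi \rangle_{\text{model}}$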

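The conditioning analysis considers the projection of the Hessian of the log-likelihood along a given parameter direction.

Under the centered formulation this curvature is more uniform across directions, an improvement in conditioning that is also observed empirically.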
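As a sanity check on the centered formulation, the sketch below assumes a fully connected Boltzmann machine with energy $E(x) = -\frac{1}{2} \xi^\top W \xi - \xi^\top b$, $\xi = x - \beta$, symmetric $W$ with zero diagonal, and verifies by enumeration that the conditional of one unit given the others is a sigmoid of $b_i + \sum_j W_{ij} \xi_j$. The parameter values and function names are illustrative assumptions.

```python
import itertools
import numpy as np

rng = np.random.default_rng(1)
n_units = 4

# Hypothetical parameters: symmetric weights with zero diagonal, biases, offsets.
A = rng.normal(size=(n_units, n_units))
W = (A + A.T) / 2.0
np.fill_diagonal(W, 0.0)
b = rng.normal(size=n_units)
beta = rng.uniform(size=n_units)


def energy(x):
    xi = x - beta
    return -0.5 * xi @ W @ xi - xi @ b


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conditional_brute_force(x, i):
    """p(x_i = 1 | rest), computed directly from the two candidate energies."""
    x_on, x_off = x.copy(), x.copy()
    x_on[i], x_off[i] = 1.0, 0.0
    p_on, p_off = np.exp(-energy(x_on)), np.exp(-energy(x_off))
    return p_on / (p_on + p_off)


def conditional_closed_form(x, i):
    """Sigmoid form; W[i, i] = 0, so unit i's own state does not contribute."""
    return sigmoid(b[i] + W[i] @ (x - beta))


max_gap = max(
    abs(conditional_brute_force(np.array(s, dtype=float), i)
        - conditional_closed_form(np.array(s, dtype=float), i))
    for s in itertools.product([0, 1], repeat=n_units)
    for i in range(n_units)
)
print(max_gap)
```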

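For binary RBMs/DBMs, centering applies to both visible and hidden variables. With visible states $x$, hidden states $h$, visible and hidden biases $b$ and $c$, and visible and hidden offsets $\mu$ and $\lambda$, the centered RBM energy is:

$E(x, h) = -(x - \mu)^\top b - c^\top (h - \lambda) - (x - \mu)^\top W (h - \lambda)$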

The update rules for weights and biases are similarly centered.
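A minimal sketch of what centered updates could look like for a binary RBM, assuming the energy $E(x, h) = -(x - \mu)^\top b - c^\top (h - \lambda) - (x - \mu)^\top W (h - \lambda)$. Differentiating the negative energy gives a weight gradient built from centered states, while the offsets cancel in the bias gradients. The function name, batch layout (rows are samples), and the random inputs are assumptions for illustration; how the model-phase samples are obtained (for example by Gibbs sampling) is left out.

```python
import numpy as np


def centered_rbm_gradients(x_data, h_data, x_model, h_model, mu, lam):
    """Log-likelihood gradient estimates for a centered binary RBM.

    x_* have shape (batch, n_visible); h_* have shape (batch, n_hidden).
    mu and lam are the visible and hidden offsets.
    """
    n_data, n_model = x_data.shape[0], x_model.shape[0]
    grad_W = ((x_data - mu).T @ (h_data - lam)) / n_data \
        - ((x_model - mu).T @ (h_model - lam)) / n_model
    # The offsets cancel between the data and model phases for the biases.
    grad_b = x_data.mean(axis=0) - x_model.mean(axis=0)
    grad_c = h_data.mean(axis=0) - h_model.mean(axis=0)
    return grad_W, grad_b, grad_c


rng = np.random.default_rng(0)
x_data = rng.integers(0, 2, size=(8, 5)).astype(float)
h_data = rng.uniform(size=(8, 3))        # stand-in for hidden probabilities given data
x_model = rng.integers(0, 2, size=(8, 5)).astype(float)
h_model = rng.uniform(size=(8, 3))       # stand-in for model-phase hidden probabilities
mu, lam = x_data.mean(axis=0), h_data.mean(axis=0)

grad_W, grad_b, grad_c = centered_rbm_gradients(
    x_data, h_data, x_model, h_model, mu, lam
)
print(grad_W.shape, grad_b.shape, grad_c.shape)
```

When the data-phase and model-phase statistics coincide, all three gradients vanish, as expected at a fixed point of maximum-likelihood learning.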

3. Empirical Validation and Performance Metrics

Extensive experiments on MNIST and other binary datasets demonstrate the stabilizing effect of centering. For DBMs trained with persistent contrastive divergence:

- Centered models achieved better discriminative performance as measured by AUC, and higher log-likelihood as estimated via annealed importance sampling.

- Learning was notably faster and more robust; non-centered models experienced divergence or collapsed representations.

- Weight matrices in centered networks had smaller norms, with bias terms encoding the mean statistics, freeing weights to encode higher-order dependencies.

For autoencoders, incorporating offsets in encoder and decoder led to improved cost values and faster convergence.

Empirical results (e.g., Tables 1 and 2 in Montavon et al., 2012, and the figures in Melchior et al., 2013) consistently favor centering for both discriminative and generative metrics.

4. Hierarchical Feature Learning and Representational Quality

Stacked architectures naturally induce hierarchical features. Centering substantially enhances this hierarchy by promoting abstract, class-separated representations in top layers. Kernel PCA visualizations of top-layer activations reveal that centered DBMs form distinct clusters aligned with class labels, while non-centered nets lose discriminative structure and often collapse to low-dimensional manifolds that fail to capture class separability.

The preservation of a rich, multi-layered representation is confirmed by improved generative estimates and increased stability in the training process.

5. Training Efficiency, Stability, and Pre-training

Centering improves training efficiency by aligning update directions with the natural gradient. Numerical evidence shows the angle between centered gradients and the ideal natural gradient is substantially reduced compared to standard updates. As the Fisher matrix governs the Riemannian geometry of the parameter space, this alignment facilitates faster, more reliable convergence.
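One way such an angle could be measured is sketched below. It assumes the Fisher matrix is available explicitly, which is only realistic for very small models where it can be computed by enumeration; the function name `angle_to_natural_gradient` and the `damping` term (added for numerical safety) are illustrative choices, not taken from the cited papers.

```python
import numpy as np


def angle_to_natural_gradient(gradient, fisher, damping=1e-8):
    """Angle in degrees between a gradient g and the natural gradient F^{-1} g."""
    dim = gradient.shape[0]
    natural = np.linalg.solve(fisher + damping * np.eye(dim), gradient)
    cosine = gradient @ natural / (
        np.linalg.norm(gradient) * np.linalg.norm(natural)
    )
    return np.degrees(np.arccos(np.clip(cosine, -1.0, 1.0)))


g = np.array([1.0, 1.0])
# With an isotropic Fisher matrix the two directions coincide.
angle_isotropic = angle_to_natural_gradient(g, np.eye(2))
# With a badly scaled Fisher matrix the plain gradient points far from F^{-1} g.
angle_ill_conditioned = angle_to_natural_gradient(g, np.diag([1.0, 100.0]))

print(angle_isotropic, angle_ill_conditioned)
```

A smaller angle means that a plain gradient step already points close to the natural-gradient direction, which is the sense in which centering is reported to help.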

Importantly, centering obviates the need for greedy layer-wise pre-training, long considered essential for DBMs. Not only does centering make joint training feasible, but in several cases pre-training worsened performance regardless of centering, highlighting the value of direct, stable optimization.

To mitigate the risk of divergence when offsets are noisy (e.g., with unsmoothed model means), an exponential moving average (EMA) is used to estimate the offset, which decouples gradient noise from offset noise and improves stability.
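The smoothing step can be sketched as follows. The update rule is a standard exponential moving average; the smoothing rate `rate`, the noise level, and the simulated batch means are assumptions chosen only to make the example self-contained.

```python
import numpy as np


def update_offset(offset, batch_mean, rate=0.01):
    """One exponential-moving-average step toward the current batch mean."""
    return (1.0 - rate) * offset + rate * batch_mean


rng = np.random.default_rng(0)
true_mean = 0.3
offset = np.array([0.5])  # arbitrary initial offset

for _ in range(2000):
    # Hypothetical noisy estimate of the unit's mean activation from one batch.
    noisy_batch_mean = true_mean + rng.normal(scale=0.1, size=1)
    offset = update_offset(offset, noisy_batch_mean)

print(offset)
```

A small `rate` averages over many batches, so the offset follows slow drifts in the mean activation while suppressing batch-to-batch noise.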

6. Broader Applicability to Neural Architectures

Centering via offsets generalizes to arbitrary neural networks. For a generic neuron with input $x$, weight vector $w$, bias $b$, nonlinearity $f$, and offset $\beta$, the centered form is:

$y = f\big(w^\top (x - \beta) + b\big)$

Subtracting $\beta$ from the input (and compensating with the bias $b$) amounts to reparameterizing activations across architectures including feed-forward, recurrent, and autoencoder models. This adjustment improves gradient flow, removes systematic activation bias, and yields better-conditioned optimization, all while preserving the underlying functional expressivity.
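The compensation can be made explicit with a short numerical check. The sketch assumes a neuron of the form $f(w^\top x + b)$ with $f = \tanh$; subtracting an offset `beta` from the input and adding `w @ beta` to the bias leaves the output unchanged. All values and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical neuron parameters, offset, and a small batch of inputs.
w = rng.normal(size=4)
b = 0.3
beta = rng.uniform(size=4)
x = rng.uniform(size=(6, 4))

# Uncentered neuron.
standard = np.tanh(x @ w + b)

# Centered neuron: subtract the offset from the input and fold w . beta into the bias.
b_centered = b + beta @ w
centered = np.tanh((x - beta) @ w + b_centered)

print(np.max(np.abs(standard - centered)))
```

The function computed is identical; what changes is the parameterization that gradient descent operates on.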

The invariance under data coding (bit-flip symmetry) further underscores the robustness of centered models.

7. Limitations and Extensions

While centering substantially enhances training dynamics, care must be taken in choosing the offset update strategy. Variants relying solely on model mean as offset are prone to instability unless smoothed via EMA. The technique extends naturally to unsupervised and supervised contexts, but architectural idiosyncrasies (such as very deep networks or unusual loss surfaces) may demand careful adaptation.

Ongoing research explores centering methods in modern architectures, such as transformers and graph neural networks, suggesting potential benefits for conditioning and stability (Ali et al., 2023). Further theoretical work may clarify connections between centering and emerging geometric phenomena such as neural collapse (Ben-Shaul et al., 2022).

Conclusion

The deep-learning centering procedure, initially developed to recenter activation outputs in deep Boltzmann machines by subtracting learned offsets, is now recognized as a broadly applicable reparameterization. Its mathematical formulation improves the conditioning of the Hessian, stabilizes and accelerates optimization, enhances hierarchical feature abstraction, and supports the training of deep, multi-layered probabilistic and deterministic neural models. By systematically reducing intra-class variance and aligning update directions with the natural gradient, centering removes the need for laborious pre-training and enables more robust, generalizable representation learning. These findings are supported by theoretical analysis and experimental evidence across several architectures and datasets (Montavon et al., 2012; Melchior et al., 2013).