Deep Delta Learning in Neural Networks

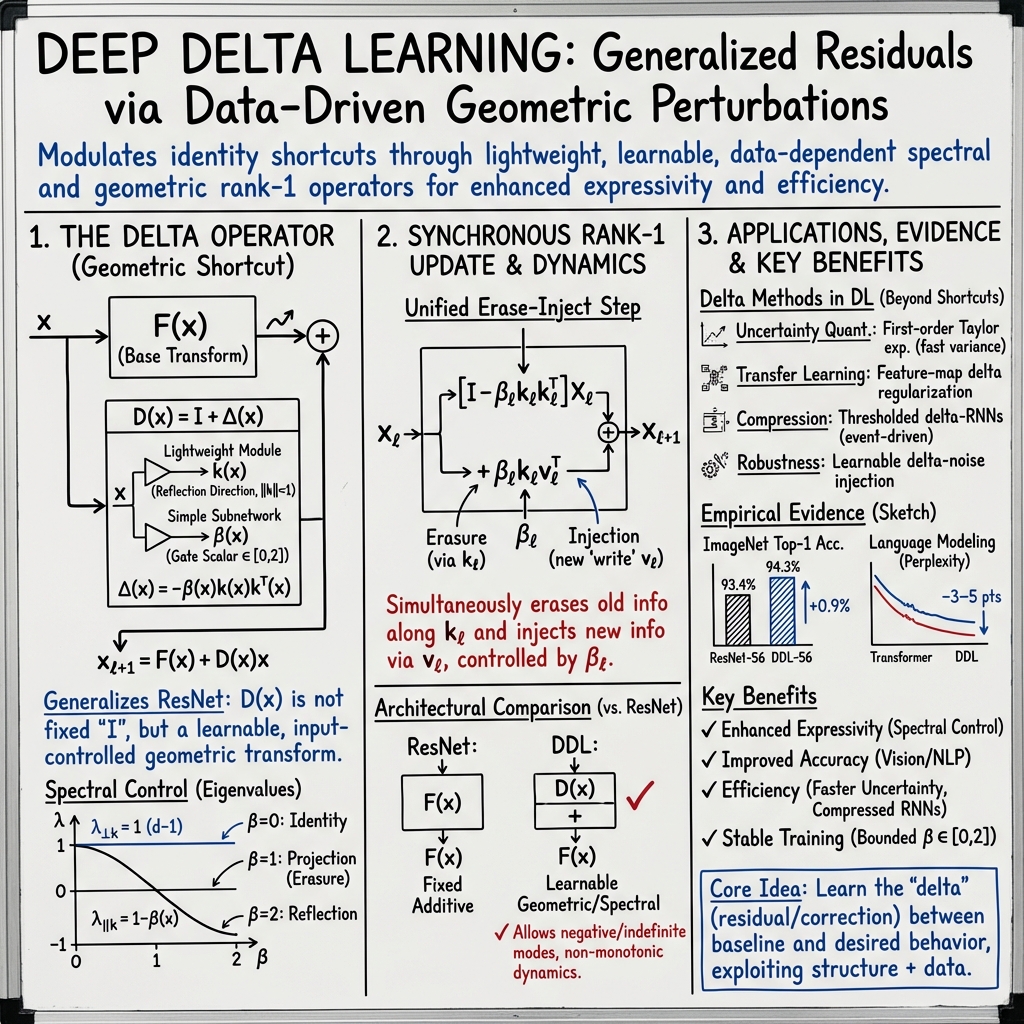

- Deep Delta Learning is a framework that uses data-dependent, rank-1 delta operators to modulate identity shortcuts for dynamic geometric control.

- It employs lightweight neural modules to generate reflection directions and gate scalars, achieving adjustable spectral properties and improved stability.

- The method is applied in uncertainty quantification, transfer learning, and network compression, yielding enhanced performance and reduced computational overhead.

Deep Delta Learning encompasses a set of approaches in deep learning that utilize parameter perturbations, geometric shortcut modulation, or feature-space residuals—each with "delta" as an operational motif—across uncertainty quantification, transfer learning, network compression, and model-based control. Most notably, modern Deep Delta Learning refers to the framework introduced in 2026, where residual connections are generalized by learning a data-dependent rank-1 perturbation (the "Delta Operator"), enabling dynamic, spectral, and geometric modulation of identity shortcuts in deep networks. This article presents a systematic overview of Deep Delta Learning in this contemporary sense, alongside key variants developed for uncertainty estimation and architectural efficiency.

1. Mathematical Formulation: The Delta Operator

Let $X \in \mathbb{R}^{d \times d_v}$ denote the input or hidden state at a given layer. Deep Delta Learning introduces a family of shortcut operators of the form

$$A(X) = I + \Delta(X),$$

where $I$ is the identity and

$$\Delta(X) = -\beta(X)\, k(X)\, k(X)^\top$$

is a rank-1, data-dependent perturbation. Here,

- The reflection direction $k(X) \in \mathbb{R}^d$, with $\|k(X)\|_2 = 1$, is generated by a lightweight neural module.

- The gate scalar $\beta(X) \in [0, 2]$ is produced via a simple subnetwork, ensuring that the operator interpolates between three geometric regimes.

This operator generalizes a Householder reflection ($H_k = I - 2kk^\top$), with the parameterization: $A(X) = I - \beta(X)\, k(X)\, k(X)^\top$ for $\beta(X) \in [0, 2]$. The shortcut is thus not a fixed identity but a learnable, input-controlled geometric transformation.
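
As a minimal sketch of how such an operator acts, the snippet below applies $I - \beta k k^\top$ to a single state vector without forming the $d \times d$ matrix. The helper names `normalize` and `apply_delta_operator` are hypothetical, and plain Python lists are used only to keep the example dependency-free; in a real network $k$ and $\beta$ would come from learned side branches rather than being fixed by hand.

```python
import math

def normalize(k):
    """Scale k to unit Euclidean length (assumes k is not the zero vector)."""
    norm = math.sqrt(sum(c * c for c in k))
    return [c / norm for c in k]

def apply_delta_operator(x, k, beta):
    """Return (I - beta * k k^T) x without building the d x d matrix."""
    k = normalize(k)
    proj = sum(ki * xi for ki, xi in zip(k, x))  # scalar k^T x
    return [xi - beta * proj * ki for xi, ki in zip(x, k)]

x = [3.0, 4.0]
k = [1.0, 0.0]  # hand-picked direction, already unit length

identity = apply_delta_operator(x, k, 0.0)    # beta = 0: x is unchanged
projection = apply_delta_operator(x, k, 1.0)  # beta = 1: component along k removed
reflection = apply_delta_operator(x, k, 2.0)  # beta = 2: component along k flipped
```

With these inputs the three calls return `[3.0, 4.0]`, `[0.0, 4.0]`, and `[-3.0, 4.0]`. Because the perturbation is rank-1, the cost per vector is one inner product and one scaled subtraction.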

2. Spectral and Dynamical Properties

Spectral analysis of the Delta Operator yields the following eigenstructure:

- For all $u \perp k$, $A u = u$, so the eigenvalue 1 has multiplicity $d - 1$.

- In the $k$ direction, $A k = (1 - \beta) k$, so the remaining eigenvalue is $1 - \beta$.

Thus, the spectrum of $A = I - \beta k k^\top$ is $\{1, \dots, 1, 1 - \beta\}$, with the spectral radius $\rho(A) \le 1$ for $\beta \in [0, 2]$. This ensures stability of forward and backward signal propagation, preserving the hallmark ResNet property while introducing anisotropic, data-driven contraction, erasure, or reflection capabilities.

By modulating $\beta$ dynamically:

- $\beta \to 0$ recovers the identity map (no delta effect),

- $\beta = 1$ achieves orthogonal projection ($k$-component wipeout),

- $\beta = 2$ yields a Householder reflection (eigenvalue -1 along $k$).

This parametric control allows per-layer transitions between accumulation, forgetting, and feature inversion.
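
The eigenstructure described above can be checked numerically. The following is an illustrative sketch, not part of the original method: it builds the dense matrix $I - \beta k k^\top$ for a hand-picked unit vector $k$ (the vectors `k`, `u`, `w` and the helper names are assumptions chosen for the example) and confirms that vectors orthogonal to $k$ are left untouched while $k$ itself is scaled by $1 - \beta$.

```python
import math

def delta_matrix(k, beta):
    """Dense A = I - beta * k k^T for a unit vector k."""
    d = len(k)
    return [[(1.0 if i == j else 0.0) - beta * k[i] * k[j] for j in range(d)]
            for i in range(d)]

def matvec(A, x):
    """Matrix-vector product for nested-list matrices."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

k = [0.6, 0.8, 0.0]   # unit vector: 0.36 + 0.64 = 1
u = [0.8, -0.6, 0.0]  # orthogonal to k
w = [0.0, 0.0, 1.0]   # also orthogonal to k

eigen_along_k = {}
for beta in (0.0, 1.0, 2.0):
    A = delta_matrix(k, beta)
    Ak = matvec(A, k)
    # A k = (1 - beta) k, so the ratio of matching components is 1 - beta
    eigen_along_k[beta] = Ak[0] / k[0]
    # vectors orthogonal to k keep eigenvalue 1
    for vec in (u, w):
        assert all(math.isclose(a, b, abs_tol=1e-12)
                   for a, b in zip(matvec(A, vec), vec))
```

Up to floating-point rounding, `eigen_along_k` maps the gate values 0, 1, and 2 to eigenvalues 1, 0, and -1, matching the identity, projection, and reflection regimes.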

3. Synchronous Rank-1 Residual Updates

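The residual update in Deep Delta Learning is reformulated as a synchronized erasure-injection step:

$$X_{l+1} = \left(I - \beta_l\, k_l k_l^\top\right) X_l + \beta_l\, k_l v_l^\top,$$

or, equivalently,

$$X_{l+1} = X_l + \beta_l\, k_l \left(v_l^\top - k_l^\top X_l\right),$$

where $\beta_l = \beta(X_l)$, $k_l = k(X_l)$, and $v_l \in \mathbb{R}^{d_v}$ is the new write vector (learned via an auxiliary branch). The action is twofold:

- Old information along $k_l$ is erased ($-\beta_l\, k_l k_l^\top X_l$),

- New information ($\beta_l\, k_l v_l^\top$) is injected, with both governed by the shared gate $\beta_l$.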

This unifies ResNet-style addition (fixed identity) with gated and multiplicative shortcut mechanisms, yet with explicit, data-driven geometric control.
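
To make the erase-and-write step concrete, the sketch below implements it in two algebraically equivalent ways and compares them. It assumes a matrix-valued state $X$ of shape $d \times d_v$, a unit direction $k$ of length $d$, and a write vector $v$ of length $d_v$; the function names and the toy values are hypothetical, and $k$, $v$, and $\beta$ are fixed by hand rather than produced by learned branches.

```python
def delta_update(X, k, v, beta):
    """X_next = X + beta * k (v^T - k^T X), for unit k; X is d x d_v (nested lists)."""
    d, dv = len(X), len(X[0])
    # read-out along k: the row vector k^T X (length d_v)
    kTX = [sum(k[i] * X[i][j] for i in range(d)) for j in range(dv)]
    return [[X[i][j] + beta * k[i] * (v[j] - kTX[j]) for j in range(dv)]
            for i in range(d)]

def operator_form(X, k, v, beta):
    """Same step written as (I - beta k k^T) X + beta k v^T."""
    d, dv = len(X), len(X[0])
    A = [[(1.0 if i == j else 0.0) - beta * k[i] * k[j] for j in range(d)]
         for i in range(d)]
    AX = [[sum(A[i][m] * X[m][j] for m in range(d)) for j in range(dv)]
          for i in range(d)]
    return [[AX[i][j] + beta * k[i] * v[j] for j in range(dv)] for i in range(d)]

X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # d = 3, d_v = 2
k = [0.0, 1.0, 0.0]                        # unit direction selecting the middle row
v = [10.0, 20.0]                           # write vector

X_next = delta_update(X, k, v, beta=1.0)
```

With $\beta = 1$ the content stored along $k$ (here the middle row `[3.0, 4.0]`) is fully replaced by `v`, giving `[[1.0, 2.0], [10.0, 20.0], [5.0, 6.0]]`, while the rows orthogonal to $k$ are untouched. Smaller gate values blend old and new content along $k$ instead of overwriting it.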

4. Architectural and Theoretical Comparisons

Deep Delta Learning departs from classic identity residuals by providing layerwise, learnable, and data-dependent spectral manipulation:

- ResNet: Fixed additive shortcut, no erasure or negative feedback.

- Highway Network: Gated blend between identity and residual, but always additive and spectrum in [0,1].

- i-ResNet, Orthogonal Nets: Enforce contractivity or orthogonality, precluding negative or indefinite shortcut modes.

- DDL: Allows data-dependent reflection, projection, and contraction along arbitrary subspaces, with per-sample and per-layer spectrum that can include negative real values.

The added complexity consists of lightweight side branches (for $k$ and $\beta$), typically insignificant relative to the base nonlinear transform. The approach increases representational capacity, particularly for modeling non-monotonic, oscillatory, or non-Eulerian dynamics.
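
One way to read the comparison above is through the factor each shortcut applies to the state component along a chosen direction. The sketch below is a simplification under stated assumptions: the Highway case uses the standard coupled form $y = t \cdot H(x) + (1 - t) \cdot x$ with a scalar transform gate $t$, the DDL case uses the eigenvalue $1 - \beta$ along $k$, and the function name is hypothetical.

```python
def shortcut_multiplier(kind, gate=None):
    """Factor applied by the shortcut path to the state component along direction k.

    kind: "resnet", "highway" (gate = transform gate t in [0, 1]),
          or "ddl" (gate = beta in [0, 2]).
    """
    if kind == "resnet":
        return 1.0         # fixed identity shortcut
    if kind == "highway":
        return 1.0 - gate  # carry gate (1 - t), confined to [0, 1]
    if kind == "ddl":
        return 1.0 - gate  # eigenvalue 1 - beta, ranging over [-1, 1]
    raise ValueError(f"unknown shortcut kind: {kind}")

highway = [shortcut_multiplier("highway", t) for t in (0.0, 0.5, 1.0)]
ddl = [shortcut_multiplier("ddl", b) for b in (0.0, 1.0, 2.0)]
```

Here `highway` evaluates to `[1.0, 0.5, 0.0]` and `ddl` to `[1.0, 0.0, -1.0]`: only the DDL shortcut reaches negative multipliers, which is what permits reflection along $k$.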

5. Applications Beyond Shortcut Modulation: Delta Methods in Deep Learning

Deep Delta Learning as shortcut modulation (Zhang et al., 1 Jan 2026) stands alongside several established delta-based techniques:

| Application Area | Delta Methodology | Notable References |
|---|---|---|
| Uncertainty Quantification | First-order Taylor expansion, parameter covariance propagation | (Nilsen et al., 2021, Nilsen et al., 2019) |
| Transfer Learning | Regularization on feature-map deltas, attention-weighted channel-wise matching | (Li et al., 2019) |
| Activity Compression | Thresholded delta transmission (delta-RNNs, event-driven computation) | (Neil et al., 2016) |
| Stochastic Weight Robustness | Learnable delta-based noise injection (SDR), generalizing Dropout | (Frazier-Logue et al., 2018) |
| Model-based Hedging | Learning residual (delta) between Black-Scholes and empirical hedge, improved P&L | (Qiao et al., 2024) |

In each case, "delta" denotes explicit modeling of shifts, corrections, or activity changes—via gradient linearization (for uncertainty), feature-space distances (for transfer), thresholded dynamics (for RNNs), or residuals with respect to analytic solutions (for hedging).
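
For the uncertainty-quantification entry, the first-order Taylor (delta method) idea can be sketched as follows: the variance of a prediction is approximated by $g^\top \Sigma g$, where $g$ is the gradient of the prediction with respect to the parameters and $\Sigma$ is the parameter covariance. The one-unit `predict` model, the covariance values, and the use of finite differences in place of analytic gradients are all assumptions made for illustration; they are not taken from the cited work.

```python
import math

def numerical_gradient(f, theta, eps=1e-6):
    """Central-difference gradient of scalar f with respect to the list theta."""
    grad = []
    for i in range(len(theta)):
        up = list(theta)
        down = list(theta)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def delta_method_variance(f, theta, cov):
    """First-order variance estimate g^T cov g, with g = df/dtheta at theta."""
    g = numerical_gradient(f, theta)
    n = len(g)
    return sum(g[i] * cov[i][j] * g[j] for i in range(n) for j in range(n))

X_INPUT = 0.5  # fixed input at which the prediction is evaluated

def predict(theta):
    """Hypothetical one-unit model: tanh(w * x + b) with theta = [w, b]."""
    return math.tanh(theta[0] * X_INPUT + theta[1])

theta_hat = [0.0, 0.0]            # illustrative parameter estimate
cov = [[0.04, 0.0], [0.0, 0.01]]  # illustrative parameter covariance

variance = delta_method_variance(predict, theta_hat, cov)
```

At this point the gradient is $(0.5, 1)$, so the estimate is $0.5^2 \cdot 0.04 + 1^2 \cdot 0.01 = 0.02$, which the numerical result matches to within finite-difference error. The estimate is local: it reflects curvature-free propagation around a single parameter value.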

6. Empirical Performance and Evidence

Empirical results in Deep Delta Learning consistently show:

- On image classification (CIFAR-10/100, Tiny-ImageNet, ImageNet-1k), DDL enhances top-1 accuracy by 0.5–1.5 percentage points over matched ResNets. For example, a ResNet-56 baseline at 93.4% is exceeded by DDL-56 at 94.3%.

- In language modeling, DDL layers lower perplexity by 3–5 points relative to baseline Transformer blocks.

- Ablations indicate critical roles for both the dynamic gate ($\beta$), where static values degrade accuracy by up to 1 pp, and the learned direction ($k$), where random or fixed substitutes reduce accuracy by 0.4–0.7 pp (Zhang et al., 1 Jan 2026).

- Complementary work in uncertainty estimation indicates that delta-based epistemic variances correlate with bootstrap estimates but are computed 4–6× faster (Nilsen et al., 2021, Nilsen et al., 2019).

- Delta-RNN and compressed delta-CNNs achieve 5–10× MAC/weight fetch savings in large speech models, with <1% accuracy loss (Neil et al., 2016).
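
The compute savings reported for delta-based activity compression come from skipping updates whose change falls below a threshold. The sketch below shows only that thresholding idea on a scalar signal; it is a simplified illustration rather than the cited delta-RNN implementation, and the function names, signal, and threshold are hypothetical.

```python
def delta_encode(signal, threshold):
    """Emit (index, delta) events only when the value has moved by more than
    `threshold` since the last transmitted value; other steps are skipped."""
    last_sent = 0.0
    events = []
    for t, value in enumerate(signal):
        delta = value - last_sent
        if abs(delta) > threshold:
            events.append((t, delta))
            last_sent = value  # receiver state now matches this value
    return events

def delta_decode(events, length):
    """Rebuild the piecewise-constant approximation seen by the receiver."""
    lookup = dict(events)
    out, current = [], 0.0
    for t in range(length):
        current += lookup.get(t, 0.0)
        out.append(current)
    return out

signal = [0.0, 0.05, 0.1, 1.0, 1.02, 1.04, 0.2, 0.21]
events = delta_encode(signal, threshold=0.3)
approx = delta_decode(events, len(signal))
```

Only two of the eight steps (indices 3 and 6) produce events here, and the reconstruction error at every step stays within the threshold. In a network, each skipped event corresponds to multiply-accumulate work and weight fetches that need not be performed.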

7. Practical Considerations and Limitations

Deep Delta Learning is compatible with standard autodiff frameworks, with the shortcut modulation requiring only additional light MLP branches. Critical elements are:

- Training remains stable by bounding $\beta$ in $[0, 2]$, constraining the spectral radius.

- The method only parametrizes rank-1 updates at each layer; more expressive or higher-rank modifications are conceivable but potentially computationally expensive.

- Dynamic shortcut modulation augments, but does not replace, the need for well-tuned residual transforms and regularization when dealing with highly multi-modal or irregular data regimes.
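
One simple way to keep the gate bounded is to pass an unconstrained pre-activation through a sigmoid scaled by 2. This particular parameterization is an assumption made for the sketch below, as are the function names; it illustrates why a gate confined to the interval keeps the spectral radius of $I - \beta k k^\top$ at 1, whereas an unbounded gate does not.

```python
import math

def gate(z):
    """Map an unconstrained pre-activation z to beta in (0, 2) via a scaled sigmoid.
    Note: math.exp overflows for very negative z (below about -709)."""
    return 2.0 / (1.0 + math.exp(-z))

def spectral_radius(beta):
    """Spectral radius of I - beta k k^T (unit k, d >= 2): max(1, |1 - beta|)."""
    return max(1.0, abs(1.0 - beta))

betas = [gate(z) for z in (-10.0, -1.0, 0.0, 1.0, 10.0)]
radii = [spectral_radius(b) for b in betas]

unbounded_radius = spectral_radius(3.0)  # a gate outside [0, 2] amplifies along k
```

Every value in `betas` lies strictly between 0 and 2, so every entry of `radii` is 1.0, while the out-of-range gate value 3.0 gives a radius of 2.0.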

Limitations include that delta-based shortcut learning, in its current form, does not address global invertibility or manifold alignment as strictly as some i-ResNet architectures. The expressivity–stability tradeoff is shifted via the learnable spectral span, but not eliminated. In uncertainty estimation, the method captures local, not global, parameter covariance, missing uncertainty spread across disjoint minima (Nilsen et al., 2021, Nilsen et al., 2019).

In summary, Deep Delta Learning in its modern incarnation generalizes residual networks by modulating identity shortcuts through lightweight, data-driven, geometric delta operators—enabling explicit per-layer spectral control. This yields empirically superior results across vision and sequence modeling domains, and establishes a broader design principle: parameterize and learn the geometric or residual "delta" between baseline and desired network behavior, exploiting both analytic structure and data-driven corrections (Zhang et al., 1 Jan 2026, Nilsen et al., 2021, Nilsen et al., 2019, Li et al., 2019, Neil et al., 2016, Frazier-Logue et al., 2018, Qiao et al., 2024).