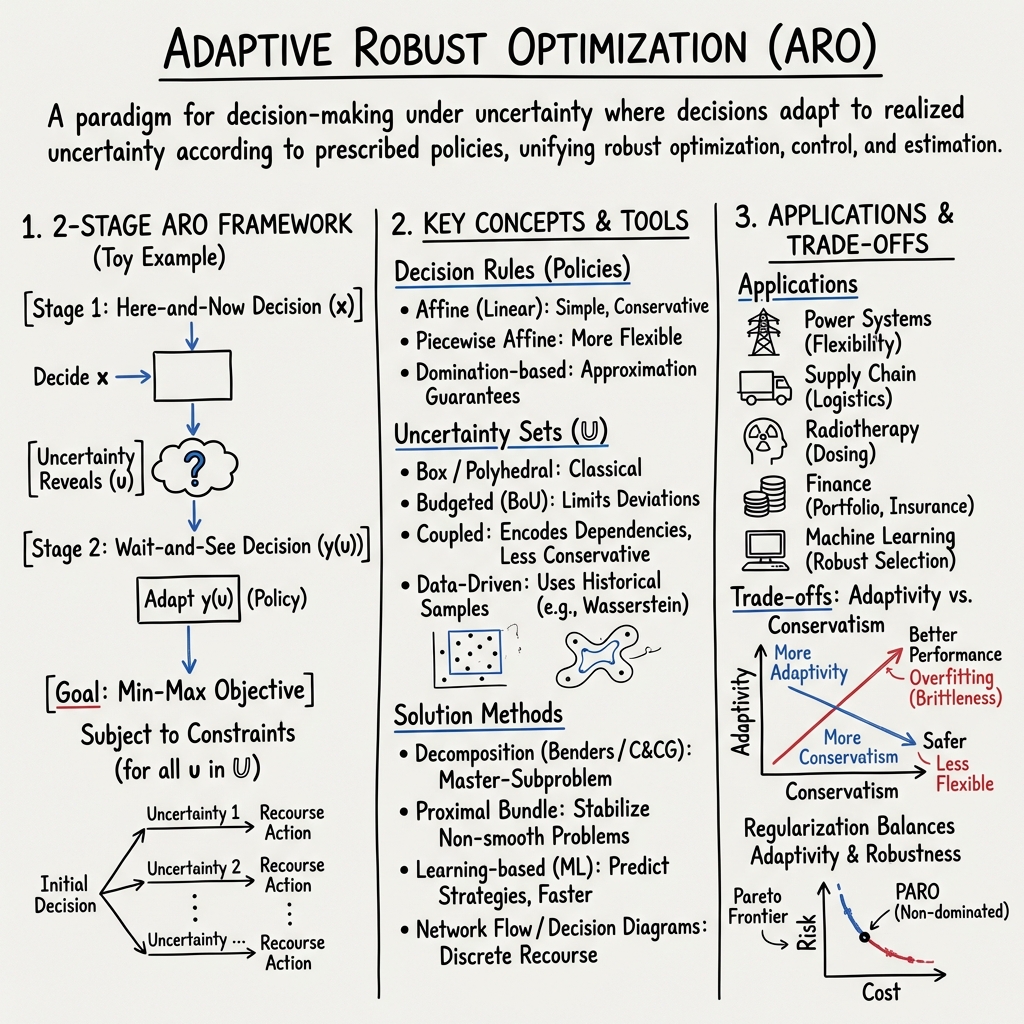

Adaptive Robust Optimization (ARO)

- Adaptive Robust Optimization (ARO) is a framework for decision-making under uncertainty that uses adaptable, recourse-based policies instead of static decisions.

- It employs two-stage or multi-stage formulations with affine and piecewise affine decision rules to balance worst-case guarantees against computational tractability.

- ARO is widely applied in power systems, supply chain management, radiotherapy, and financial risk modeling to provide robust, adaptive solutions.

Adaptive Robust Optimization (ARO) is a paradigm in decision-making under uncertainty where decisions are allowed to adapt to realized uncertainty according to prescribed policies or rules. Unlike static robust optimization, which requires “here-and-now” decisions that are feasible for all possible uncertain scenarios within a predefined set, ARO leverages a two-stage or multi-stage structure in which recourse actions or policy parameters are adjusted in response to unfolding uncertainty, subject to nonanticipativity and feasibility requirements. This framework unifies concepts from robust optimization, optimal control, and high-dimensional estimation, and supports applications across diverse fields including statistical learning, power systems, supply chain management, radiotherapy planning, and insurance risk modeling.

1. Mathematical Formulations and Decision Rule Structures

ARO problems are typically formulated as multi-level min-max (or min-max-min) problems that distinguish between initial (here-and-now) decisions and subsequent adaptive (wait-and-see) decisions. Consider a two-stage linear ARO problem with uncertainty set $\mathcal{U}$, whose realizations are denoted $u$.
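As an illustrative sketch, a generic two-stage linear ARO problem can be written in the min-max-min form below. The symbols $x$ (here-and-now decision), $y$ (wait-and-see decision), the cost vectors $c$ and $d$, and the constraint data $A$, $B$, and $b(u)$ are notational assumptions made for this sketch, not the notation of any particular cited paper:

$$
\min_{x} \left\{ c^\top x + \max_{u \in \mathcal{U}} \; \min_{y \,:\, A x + B y \ge b(u)} d^\top y \right\}
$$

Equivalently, one looks for a first-stage decision $x$ together with a policy $y(\cdot)$ such that $A x + B\,y(u) \ge b(u)$ holds for every $u \in \mathcal{U}$, while minimizing the worst-case total cost $c^\top x + \max_{u \in \mathcal{U}} d^\top y(u)$.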

The adaptive decision $y(u)$ is a function (policy) of the realized uncertainty $u$. In practice, tractable solution methods parameterize $y(\cdot)$ by restricting it to affine (linear decision rules, LDRs), piecewise affine, or more general structured policies (e.g., Thomä et al., 2022). Affine policies are expressive but may be overly conservative, particularly in high-dimensional or multi-stage settings, prompting the development of piecewise and domination-based policies with explicit approximation guarantees.

Fourier–Motzkin elimination (FME) and duality arguments are often employed to characterize the structure of optimal adaptive policies and to derive equivalent static robust (piecewise linear) reformulations (Bertsimas et al., 2020). For stochastic or distributionally robust variants, Wasserstein-ball and empirical-data-driven uncertainty sets are incorporated, allowing direct use of historical samples (Ren et al., 28 May 2025).
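The gap between static and affine recourse can be seen on a toy problem. The following is a minimal sketch, not taken from any cited paper: it minimizes a first-stage scalar `x` subject to `u <= y(u) <= u + x` for all `u` in the interval `[0, 1]`, comparing a static rule `y(u) = y0` with an affine rule `y(u) = y0 + y1*u`. The problem, the grid search, and all function names are illustrative assumptions; a real implementation would use an LP solver instead of enumeration.

```python
from itertools import product

U_VERTICES = (0.0, 1.0)  # endpoints of the assumed uncertainty set [0, 1]


def is_robustly_feasible(x, y0, y1, tol=1e-9):
    """Check u <= y(u) <= u + x for the rule y(u) = y0 + y1*u.

    Once the rule is fixed, both constraints are affine in u, so it is
    enough to check them at the endpoints of the interval.
    """
    for u in U_VERTICES:
        y = y0 + y1 * u
        if y < u - tol or y > u + x + tol:
            return False
    return True


def min_first_stage(slopes, grid_steps=20):
    """Smallest grid value of x for which some rule with an allowed slope is feasible."""
    grid = [i / grid_steps for i in range(0, 2 * grid_steps + 1)]  # 0.0 .. 2.0
    for x in grid:  # increasing order, so the first feasible x is the minimum
        for y0, y1 in product(grid, slopes):
            if is_robustly_feasible(x, y0, y1):
                return x
    return None


static_x = min_first_stage(slopes=[0.0])            # static rule: y(u) = y0
affine_x = min_first_stage(slopes=[0.0, 0.5, 1.0])  # affine rule: y(u) = y0 + y1*u
print(static_x, affine_x)
```

In this toy instance the static rule needs `x = 1.0`, because a single `y0` must be at least 1 (to cover `u = 1`) and at most `x` (to satisfy the upper bound at `u = 0`). The affine rule `y(u) = u` is feasible with `x = 0.0`.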

2. Uncertainty Sets, Coupling, and Regularization

The definition of the uncertainty set profoundly influences the conservatism and adaptivity of ARO solutions. Classical sets are box, polyhedral, ellipsoidal, or budgeted sets.

Recent advances introduce:

- Budget of Uncertainty (BoU): These sets parameterize the maximum total deviation (the “uncertainty budget”) allowed among components, limiting simultaneous worst-case deviations and controlling conservatism (García-Muñoz et al., 22 Mar 2025).

- Coupled Uncertainty: Rather than modeling uncertainty in each constraint independently (constraint-wise), coupled uncertainty sets encode dependencies (through linear, budget, or ordering constraints), producing less conservative and more realistic solutions. Objective-value improvements due to coupling can be tightly bounded in terms of set geometry (Bertsimas et al., 2023).

- Constraint-Specific Uncertainty Sets and Regularization: Assigning tailored uncertainty set sizes to individual constraints (or groups) serves to regularize the adaptive coefficients in the policy. Hard constraints receive larger sets (strong guarantees, regularization suppresses adaptivity), while softer constraints are assigned smaller sets to preserve flexibility. This acts analogously to regularization in statistical estimation, directly controlling overfitting of policies to the uncertainty set and balancing robustness with adaptivity (Zhu et al., 19 Sep 2025).
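To make the role of the uncertainty budget concrete, the sketch below computes the worst-case value of a linear expression `sum_i a_i * x_i` when each coefficient may deviate from its nominal value by at most `deviation[i]`, and the total scaled deviation is capped by a budget `gamma`. This is an assumed, simplified interval-plus-budget set chosen for illustration; the function name and the numbers are hypothetical.

```python
def budgeted_worst_case(nominal, deviation, x, gamma):
    """Worst case of sum_i a_i * x_i with a_i = nominal_i + z_i * deviation_i,
    where |z_i| <= 1 and sum_i |z_i| <= gamma.

    Assumes deviation_i >= 0 and gamma >= 0. The adversary spends the budget
    on the coefficients with the largest impact deviation_i * |x_i|.
    """
    base = sum(a * xi for a, xi in zip(nominal, x))
    impacts = sorted((d * abs(xi) for d, xi in zip(deviation, x)), reverse=True)
    full = int(gamma)        # number of coefficients pushed to their extreme
    frac = gamma - full      # leftover budget spent on the next coefficient
    extra = sum(impacts[:full])
    if full < len(impacts):
        extra += frac * impacts[full]
    return base + extra


nominal = [10.0, 20.0, 30.0]
deviation = [1.0, 4.0, 2.0]
x = [1.0, 1.0, 1.0]

for gamma in (0, 1.5, 3):
    print(gamma, budgeted_worst_case(nominal, deviation, x, gamma))
```

With `gamma = 0` the result is the nominal value, and with `gamma` equal to the number of coefficients it matches the box (all-deviate) worst case. Intermediate budgets interpolate between the two, which is how the budget controls conservatism.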

3. Solution Methodologies: Cutting Planes, Decomposition, and Learning-based Approaches

Because the exact solution of ARO problems is often computationally intractable (especially with integer or multi-stage recourse), a spectrum of tractable schemes has been developed:

- Decomposition Algorithms: Multi-level ARO problems are reformulated using master–subproblem schemes (column-and-constraint generation, Benders decomposition). At each iteration, given a candidate here-and-now decision, a subproblem finds the worst-case scenario or verifies recourse feasibility. Optimality and feasibility cuts are added until convergence is established, with finite termination guaranteed under polyhedral uncertainty and linear policies (Ning et al., 2018, García-Muñoz et al., 22 Mar 2025, Daryalal et al., 2023).

- Proximal Bundle and Regularized Methods: For convex ARO with complex recourse structures, proximal bundle methods stabilize non-smooth cutting-plane models, ensuring convergence and practical robustness in settings such as inventory control and operational planning (Ning et al., 2018).

- Piecewise Affine and Domination-based Policy Synthesis: Structured policies partition the uncertainty space and construct local affine rules, utilizing “dominating uncertainty sets” to obtain strong approximation bounds and efficient LP reformulations (Thomä et al., 2022).

- Network Flow and Decision Diagram Reformulations: For problems with discrete (binary) recourse and selective adaptability, network-flow models inspired by binary decision diagrams enable scalable MILP formulations that simultaneously yield primal and dual bounds, with user-tunable complexity-accuracy tradeoffs (Bodur et al., 2024).

- Learning-Driven Methods: Optimal strategies are computed offline for varying parameters using classical algorithms (e.g., column-and-constraint generation) and then used to train supervised learning models (e.g., XGBoost, policy trees). These models rapidly predict near-optimal strategies for unseen problem instances, drastically reducing online solve times while preserving feasibility and solution quality (Bertsimas et al., 2023).

- Deep Generative Embedded Uncertainty Sets: Generative models such as variational autoencoders define pullback uncertainty sets in high-density zones of the data distribution, tightening conservatism and producing realistic adversarial scenarios during robust optimization (Brenner et al., 2024).
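The master–subproblem iteration used by decomposition algorithms can be sketched on a one-dimensional toy problem. The code below is a simplified scenario-generation loop in the spirit of column-and-constraint generation, not an implementation of any cited algorithm: the master problem is solved by grid search rather than by an LP or MILP solver, and the cost parameters, demand interval, and function names are all illustrative assumptions.

```python
UNIT_COST = 1.0  # assumed first-stage cost per unit of capacity x


def recourse_cost(x, u, shortage=3.0, holding=2.0):
    """Second-stage cost once demand u is known: cover the shortfall or hold the surplus."""
    return shortage * max(0.0, u - x) + holding * max(0.0, x - u)


def solve_master(scenarios, x_grid):
    """Master problem: best x against the scenarios collected so far (a lower bound)."""
    def objective(x):
        return UNIT_COST * x + max(recourse_cost(x, u) for u in scenarios)
    x_best = min(x_grid, key=objective)
    return x_best, objective(x_best)


def worst_scenario(x, candidates):
    """Subproblem: candidate scenario with the highest recourse cost for a fixed x."""
    return max(candidates, key=lambda u: recourse_cost(x, u))


def scenario_generation(lo=20.0, hi=100.0, nominal=60.0, tol=1e-9, max_iter=20):
    x_grid = [float(i) for i in range(0, 121)]
    candidates = [lo, hi]   # recourse cost is convex in u, so the endpoints suffice
    scenarios = [nominal]   # working set, grown one scenario per iteration
    upper, x_incumbent = float("inf"), None
    for iteration in range(1, max_iter + 1):
        x, lower = solve_master(scenarios, x_grid)
        u_worst = worst_scenario(x, candidates)
        cost = UNIT_COST * x + recourse_cost(x, u_worst)  # true worst-case cost of x
        if cost < upper:
            upper, x_incumbent = cost, x
        if upper - lower <= tol:  # bounds meet: incumbent is optimal on the grid
            return x_incumbent, upper, iteration
        scenarios.append(u_worst)
    return x_incumbent, upper, max_iter


result = scenario_generation()
print(result)
```

Starting from the nominal demand only, the loop adds the high-demand and then the low-demand scenario, and the bounds meet on the third iteration at `x = 68.0` with worst-case cost `164.0`.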

4. Applications Across Modern Data-centric and Engineering Domains

ARO is applied across domains where uncertainty is both high-dimensional and structurally complex:

- High-Dimensional Robust Variable Selection: AR-Lasso employs a two-step adaptive $L_1$-penalized quantile regression, where the weights are adaptively set via an initial robust regression. This procedure attains the oracle property and asymptotic normality even under heavy-tailed errors, outperforming standard and nonadaptive Lasso in finite samples (Fan et al., 2012).

- Power Systems and DER Aggregation: ARO computes maximum flexibility regions (aggregated active/reactive power) for distributed energy resources, guaranteeing that any realized aggregate signal can be disaggregated to DERs while enforcing network constraints (Chen et al., 2020, García-Muñoz et al., 22 Mar 2025).

- Radiotherapy Optimization: Two-stage ARO models adapt the dose schedule upon observing mid-course biomarker information, supporting explicit decision rules, conservative reformulation for information inexactness, and the use of Pareto-adjustable robustly optimal (PARO) solutions—a concept ensuring dominance across uncertainty realizations (Eikelder et al., 2019).

- Insurance Risk Management: Affine ARO policies price catastrophe insurance dynamically, informed by both historical data and machine learning-derived risk estimates for major loss events, offering robust and adaptable premium structures (Bertsimas et al., 2024).

- Real-time and Online Portfolio Selection: Adaptive robust policies couple robust optimization with adaptive selection of risk and transaction cost parameters, tracking a cohort of experts and selecting portfolios based on recent outperformance, providing responsive risk management across shifting regimes (Tsang et al., 2022).

5. Trade-offs, Conservatism, and Overfitting

ARO models provide improved performance and flexibility compared to static robust optimization, but at the cost of potential overfitting to the modeled uncertainty set:

- Adaptive policies, by exploiting the structure within $\mathcal{U}$, may become brittle outside the prescribed set, making previously $u$-independent constraints uncertainty-dependent (and hence more prone to violation when confronted with out-of-set scenarios).

- This brittleness is mitigated by regularizing the adaptive coefficients—either implicitly, via larger uncertainty sets corresponding to hard constraints, or explicitly through constraint-specific probabilistic guarantees (Zhu et al., 19 Sep 2025).

- Coupling and data-driven adjustment of uncertainty sets (via sample inclusion maximization or robustification using Wasserstein balls) further balance robustness with tractability and empirical feasibility (Bertsimas et al., 2023, Ren et al., 28 May 2025).
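The brittleness and its mitigation can be illustrated with a small sketch. Assume a recourse variable that must respect a capacity limit `y <= cap`, a constraint that does not involve `u` under a static rule but becomes uncertainty-dependent under an affine rule `y(u) = y0 + y1*u`. The rules, the capacity, and the set radii below are hypothetical values chosen for illustration.

```python
def capacity_excess(y0, y1, u, cap=1.0):
    """Amount by which the rule y(u) = y0 + y1*u exceeds the capacity limit."""
    return max(0.0, y0 + y1 * u - cap)


def max_slope(radius, y0=0.0, cap=1.0):
    """Largest slope y1 >= 0 keeping y0 + y1*u <= cap for all u in [0, radius]."""
    return (cap - y0) / radius


static_rule = (1.0, 0.0)  # y(u) = 1: capacity holds for every u
affine_rule = (0.0, 1.0)  # y(u) = u: feasible on [0, 1], tracks demand exactly

u_out = 1.2  # a realization outside the modeled set [0, 1]
static_excess = capacity_excess(*static_rule, u_out)
affine_excess = capacity_excess(*affine_rule, u_out)

# Enforcing the hard capacity constraint over a larger set shrinks the
# admissible slope, i.e. it regularizes the adaptive coefficient.
slope_nominal = max_slope(radius=1.0)
slope_enlarged = max_slope(radius=1.5)
print(static_excess, affine_excess, slope_nominal, slope_enlarged)
```

The static rule never violates capacity, while the affine rule overshoots as soon as `u` leaves the modeled set. Requiring the capacity constraint to hold on the larger interval `[0, 1.5]` caps the slope at about 0.67 instead of 1, trading some adaptivity for protection against out-of-set scenarios.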

6. Solution Quality, Approximation Guarantees, and Performance

Quantitative and qualitative performance characteristics depend on policy structure, problem class, and application context:

- Piecewise affine, network-flow, and dominating-set-based policies achieve approximate solutions with explicit bounds, where the approximation factor is a function of the set geometry (Thomä et al., 2022).

- In numerical studies, transformation-proximal bundle methods achieved an over 30× reduction in suboptimality in inventory control compared to affine policy benchmarks (Ning et al., 2018); learning-based methods substantially reduced solve times relative to standard column-and-constraint generation algorithms (Bertsimas et al., 2023); and deep generative set methods achieved up to 11.6% cost reduction in power system expansion (Brenner et al., 2024).

- For multi-stage ARO, hybrid robust–stochastic frameworks (distinguishing long-term (LT) and short-term (ST) uncertainty) consistently approximate the “perfect information” optimum closely while controlling overinvestment, a critical property for high-value investments and public-infrastructure planning (García-Muñoz et al., 22 Mar 2025, Bernecker et al., 15 Jul 2025).

7. Conceptual Extensions: Pareto Optimality and Regularization Lens

- Pareto Adaptive Robust Optimality (PARO): Solutions are not merely worst-case optimal (over $\mathcal{U}$), but are also non-dominated: no alternative solution delivers equal or better outcomes on all scenarios and a strictly better outcome on some. Fourier–Motzkin elimination, convex analysis, and piecewise linear rule construction are instrumental in establishing the existence and computability of PARO solutions (Bertsimas et al., 2020, Eikelder et al., 2019).

- Regularization-Overfitting Analogy: Interpreting the size of the uncertainty set as a regularization parameter directly links ARO policy adaptability to the overfitting phenomenon, guiding the principled assignment of robustness levels to individual constraints and enforcing adaptation only when it contributes to global feasibility and stability (Zhu et al., 19 Sep 2025).
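The dominance notion behind PARO can be illustrated on a finite list of scenarios. The sketch below is a simplification: PARO is defined over the whole uncertainty set, whereas this code compares per-scenario costs on a small hypothetical sample. The solution labels, cost values, and function names are assumptions made for illustration.

```python
def dominates(costs_a, costs_b, tol=1e-9):
    """True if a is no worse than b in every scenario and strictly better in at least one."""
    no_worse = all(a <= b + tol for a, b in zip(costs_a, costs_b))
    strictly_better = any(a < b - tol for a, b in zip(costs_a, costs_b))
    return no_worse and strictly_better


def pareto_robust(solutions, tol=1e-9):
    """Keep the worst-case-optimal solutions that no other such solution dominates."""
    best_worst = min(max(costs) for costs in solutions.values())
    robust = {k: v for k, v in solutions.items() if max(v) <= best_worst + tol}
    return [
        k for k, v in robust.items()
        if not any(dominates(w, v) for j, w in robust.items() if j != k)
    ]


# Per-scenario costs (lower is better) of three hypothetical solutions.
solutions = {
    "A": [10.0, 7.0, 5.0],
    "B": [10.0, 9.0, 5.0],
    "C": [12.0, 4.0, 4.0],
}
selected = pareto_robust(solutions)
print(selected)
```

Here `A` and `B` share the best worst-case cost of 10, so both are robustly optimal, but `A` is at least as good as `B` in every scenario and strictly better in one. `C` is excluded because its worst case is higher, even though it is cheaper in the other scenarios.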

Adaptive robust optimization has evolved into a mature and flexible framework that combines worst-case robustness, adaptation to revealed uncertainty, and, in emerging directions, data-driven and learning-centric synthesis of both policies and uncertainty sets. Its theoretical advances, algorithmic frameworks, and wide-ranging real-world applications continue to expand the boundary of tractable, high-performance decision-making under uncertainty.