FoundationSLAM: Unleashing the Power of Depth Foundation Models for End-to-End Dense Visual SLAM

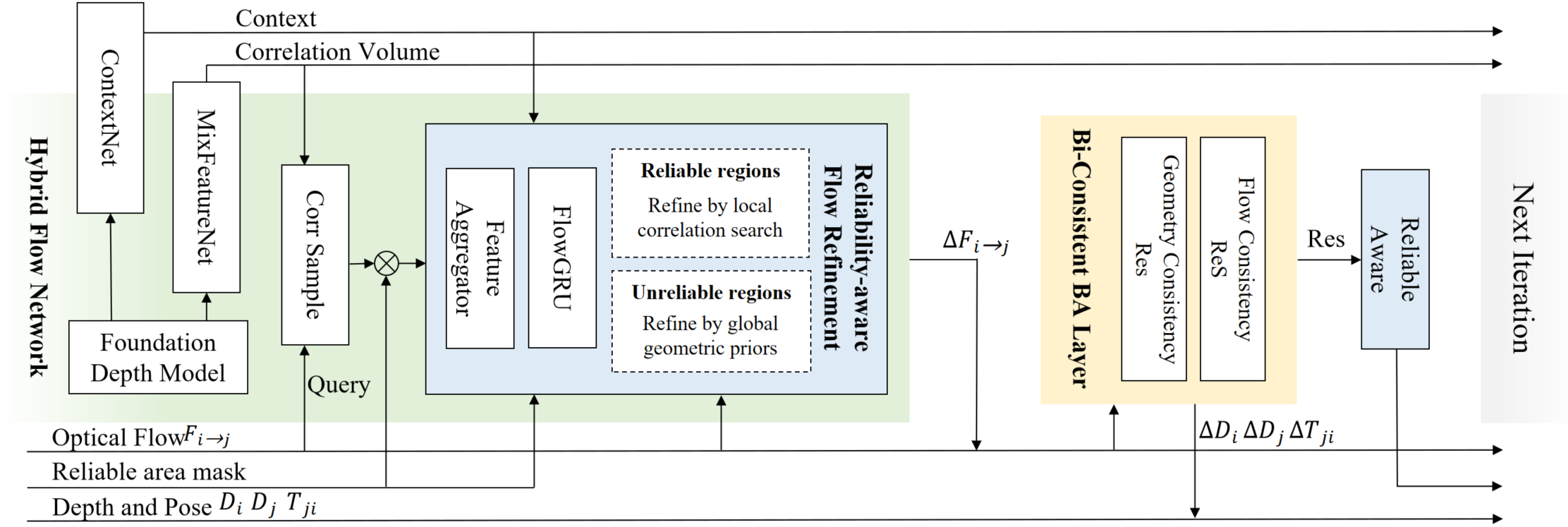

Abstract: We present FoundationSLAM, a learning-based monocular dense SLAM system that addresses the absence of geometric consistency in previous flow-based approaches for accurate and robust tracking and mapping. Our core idea is to bridge flow estimation with geometric reasoning by leveraging the guidance from foundation depth models. To this end, we first develop a Hybrid Flow Network that produces geometry-aware correspondences, enabling consistent depth and pose inference across diverse keyframes. To enforce global consistency, we propose a Bi-Consistent Bundle Adjustment Layer that jointly optimizes keyframe pose and depth under multi-view constraints. Furthermore, we introduce a Reliability-Aware Refinement mechanism that dynamically adapts the flow update process by distinguishing between reliable and uncertain regions, forming a closed feedback loop between matching and optimization. Extensive experiments demonstrate that FoundationSLAM achieves superior trajectory accuracy and dense reconstruction quality across multiple challenging datasets, while running in real-time at 18 FPS, demonstrating strong generalization to various scenarios and practical applicability of our method.

Explain it Like I'm 14

Overview

This paper introduces FoundationSLAM, a system that helps a single camera figure out where it is and build a detailed 3D map of the world around it at the same time. This is called “SLAM” (Simultaneous Localization and Mapping). The key idea is to use smart depth knowledge from large “foundation” models to make the camera’s tracking and mapping more accurate and consistent, even in hard situations (like shiny surfaces or low-texture walls). The system runs in real time at about 18 frames per second.

Key Questions and Goals

Here are the main questions the paper aims to answer:

- How can a single camera build a clean, accurate 3D map while tracking its path, without getting confused by tricky areas (like plain white walls or reflections)?

- Can “foundation” depth models (big AI models trained on many images to understand 3D) help the camera match pixels between frames more reliably?

- How do we make sure the 3D map and the camera’s position agree across many different views, not just between two frames?

- Can we detect which parts of the camera’s pixel matching are trustworthy and which parts need correction—and fix them on the fly?

Methods and Approach

To make this accessible, think of the camera as a person walking through a room, trying to draw a 3D map while moving. The system needs three big tools:

1) Hybrid Flow Network (better pixel matching with geometry hints)

- “Optical flow” is a way to track where each pixel moves from one frame to the next, like following tiny stickers between photos.

- Traditional systems only look at local image features (like patterns and textures) to match these pixels. That can fail on blank walls or shiny floors.

- The Hybrid Flow Network adds “geometry-aware features” from foundation depth models—these are like a tutor that already knows typical 3D shapes and distances from tons of training images.

- By mixing regular image features with these depth-aware features, the system finds more reliable pixel matches that make sense in 3D, not just 2D.
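As a rough illustration of the matching idea above, the sketch below concatenates per-pixel image features with geometry-aware features and scores every pixel pair by a scaled dot product, which is how all-pairs (4D) correlation volumes are commonly built in flow networks. The function names `hybrid_features`, `correlation_volume`, and `best_match`, the plain concatenation, and the toy one-hot features are assumptions made for illustration; the paper's actual fusion design is not described in this summary.

```python
import numpy as np

def hybrid_features(image_feats, geometry_feats):
    """Concatenate two (H, W, C) feature maps along channels (assumed fusion)."""
    return np.concatenate([image_feats, geometry_feats], axis=-1)

def correlation_volume(feats1, feats2):
    """All-pairs dot products: out[i, j, k, l] scores pixel (i, j) of frame 1
    against pixel (k, l) of frame 2, scaled by sqrt(C)."""
    channels = feats1.shape[-1]
    return np.einsum("ijc,klc->ijkl", feats1, feats2) / np.sqrt(channels)

def best_match(corr, pixel):
    """Return the frame-2 pixel with the highest score for a frame-1 pixel."""
    scores = corr[pixel]
    k, l = np.unravel_index(np.argmax(scores), scores.shape)
    return int(k), int(l)

# Toy data: each pixel has a unique one-hot image feature,
# and frame 2 is frame 1 shifted one pixel to the right.
H, W = 2, 3
img1 = np.eye(H * W).reshape(H, W, H * W)
img2 = np.roll(img1, shift=1, axis=1)
geo = np.ones((H, W, 2))  # placeholder geometry-aware features (hypothetical)

corr = correlation_volume(hybrid_features(img1, geo), hybrid_features(img2, geo))
match = best_match(corr, (0, 0))  # pixel (0, 0) should match (0, 1) in frame 2
```

In this toy case the geometry features are constant, so they do not change the best match; in a real system they would carry the depth-model context that helps on blank or shiny regions.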

2) Bi-Consistent Bundle Adjustment (making all views agree)

- “Bundle adjustment” is a classic method that fine-tunes the camera’s position (pose) and the 3D map (depth) so everything lines up across multiple views, like adjusting puzzle pieces so they all fit together.

- The paper’s “Bi-Consistent” version enforces two kinds of consistency:

  - Flow consistency: Are the predicted pixel movements compatible with the estimated 3D depth and camera movement?

  - Geometry consistency: If you project a 3D point from one frame into another, and then back, does it land where it started? If not, something’s off.

- By checking both, the system keeps the camera path and the 3D map tightly aligned across many frames, reducing errors.
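The two checks above can be sketched for a single pixel with a pinhole camera. This is a minimal illustration, not the paper's formulation: the helper names `transfer`, `flow_residual`, and `geometry_residual`, the intrinsics `K`, and the convention that `(R, t)` maps points from frame i to frame j are all assumptions.

```python
import numpy as np

def transfer(K, R, t, pixel, depth):
    """Lift a pixel with known depth to 3D, move it with pose (R, t), reproject.
    Returns the pixel location and the depth in the target frame."""
    point_src = depth * (np.linalg.inv(K) @ np.array([pixel[0], pixel[1], 1.0]))
    point_dst = R @ point_src + t
    projected = K @ point_dst
    return projected[:2] / projected[2], point_dst[2]

def flow_residual(K, R, t, pixel, depth, flow):
    """Where the flow sends the pixel minus where depth and pose send it."""
    target_from_geometry, _ = transfer(K, R, t, pixel, depth)
    return (np.asarray(pixel, dtype=float) + flow) - target_from_geometry

def geometry_residual(K, R, t, pixel, depth_i, depth_j):
    """Round trip i -> j -> i; zero when both depths and the pose agree."""
    pixel_j, _ = transfer(K, R, t, pixel, depth_i)
    pixel_back, _ = transfer(K, R.T, -R.T @ t, pixel_j, depth_j)
    return pixel_back - np.asarray(pixel, dtype=float)

# Hypothetical camera: 500 px focal length, 10 cm sideways motion, point 2 m away.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.1, 0.0, 0.0])
pixel = (320.0, 240.0)

r_flow = flow_residual(K, R, t, pixel, depth=2.0, flow=np.array([25.0, 0.0]))
r_geo_good = geometry_residual(K, R, t, pixel, depth_i=2.0, depth_j=2.0)
r_geo_bad = geometry_residual(K, R, t, pixel, depth_i=2.0, depth_j=2.5)
```

With consistent inputs both residuals vanish. Giving frame j a wrong depth of 2.5 m makes the round trip land 5 pixels away from the starting pixel, which is the kind of disagreement the optimizer would try to reduce.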

3) Reliability-Aware Refinement (fixing untrustworthy areas)

- Not all pixel matches are equally trustworthy. Occlusions (things blocking view), repeated patterns (like tiles), or shiny surfaces can confuse matching.

- The system builds a “reliability mask” per pixel:

  - Reliable areas: keep using detailed local matching (fast and precise).

  - Unreliable areas: stop trusting local matching; instead, rely more on the geometry-aware context from the foundation model to correct mistakes.

- This creates a feedback loop: optimization measures where things don’t align well and tells the matching network where to be more careful or switch strategies.
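A binary reliability mask of this kind could be sketched as follows. The threshold values, the way the edge-wise and node-wise checks are combined (a logical AND), and the names `reliability_mask` and `gated_flow_update` are assumptions for illustration only; the summary does not give the paper's exact rule.

```python
import numpy as np

def reliability_mask(edge_flow_residual, neighbor_geometry_residuals,
                     flow_threshold=1.0, geometry_threshold=1.0):
    """Mark a pixel reliable when its flow residual on this edge is small AND
    its geometry residual, averaged over neighbouring keyframes, is small.
    Thresholds are hypothetical values in pixels."""
    edge_ok = edge_flow_residual < flow_threshold
    node_ok = neighbor_geometry_residuals.mean(axis=0) < geometry_threshold
    return edge_ok & node_ok

def gated_flow_update(mask, local_update, context_update):
    """Use the local-matching update where reliable, the context update elsewhere."""
    return np.where(mask[..., None], local_update, context_update)

# Toy 2x2 image: residual magnitudes for one edge and for two neighbours.
edge_res = np.array([[0.2, 3.0], [0.5, 0.1]])
geo_res = np.array([[[0.1, 0.1], [4.0, 0.2]],
                    [[0.3, 0.1], [2.0, 0.4]]])
mask = reliability_mask(edge_res, geo_res)

local = np.full((2, 2, 2), 1.0)      # stand-in for local-matching flow updates
context = np.full((2, 2, 2), -1.0)   # stand-in for context-driven updates
update = gated_flow_update(mask, local, context)
```

Here the top-right pixel fails the flow check and the bottom-left pixel fails the geometry check, so both fall back to the context-driven update.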

Put simply, the camera first guesses how pixels move, then checks if those guesses make sense in 3D across multiple views, and finally corrects bad guesses using learned geometry and reliability checks.

Main Findings and Why They Matter

The experiments were run on well-known datasets (TUM-RGBD, EuRoC, 7Scenes, ETH3D). The system was tested against strong baselines (like DROID-SLAM and MASt3R-SLAM). The key results include:

- Better tracking accuracy: The camera’s path (trajectory) was more precise, with lower errors (ATE) than competing methods across most sequences.

- Better 3D reconstruction: The 3D maps had fewer artifacts (like layered or distorted surfaces), and closer alignment to ground truth (lower Chamfer distance).

- Strong performance in tough scenes: Works well with fast motion, grayscale footage (EuRoC), low texture, reflections, and wide viewpoint changes.

- Real-time speed: Runs at about 18 FPS on a single high-end GPU, making it practical for live use.

These results show that adding geometry-aware priors (from foundation depth models) and enforcing multi-view consistency can significantly improve SLAM quality without slowing it down too much.
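The two metrics named in the findings can be sketched in a few lines. This is a simplified version: it assumes the estimated and ground-truth trajectories are already time-associated and aligned (monocular results are typically aligned, including scale, before ATE is computed), and it uses one common Chamfer convention, the average of the two directed mean nearest-neighbour distances. The function names and sample data are illustrative.

```python
import numpy as np

def ate_rmse(estimated_positions, ground_truth_positions):
    """Root-mean-square position error between aligned (N, 3) trajectories."""
    errors = np.linalg.norm(estimated_positions - ground_truth_positions, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))

def chamfer_distance(points_a, points_b):
    """Average of the mean nearest-neighbour distances A->B and B->A.
    Brute force, so only suitable for small point sets."""
    pairwise = np.linalg.norm(points_a[:, None, :] - points_b[None, :, :], axis=-1)
    a_to_b = pairwise.min(axis=1).mean()
    b_to_a = pairwise.min(axis=0).mean()
    return float(0.5 * (a_to_b + b_to_a))

# Toy trajectory: the last estimated position is 1 m off in z.
est = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 1.0]])
ate = ate_rmse(est, gt)

# Toy point clouds with one matching point and one displaced point.
cloud_a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
cloud_b = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 1.0]])
chamfer = chamfer_distance(cloud_a, cloud_b)
```

Lower is better for both numbers. For large reconstructions a spatial index such as a k-d tree would replace the brute-force pairwise distance matrix.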

Implications and Impact

FoundationSLAM teaches us that combining:

- smart pixel matching (guided by big depth models),

- multi-view consistency checks (so all frames agree),

- and reliability-aware corrections (fix issues where matching is weak),

creates a more robust SLAM system. This can help robots, drones, AR/VR devices, and self-driving tools understand and navigate the world better using just a single camera. The approach also bridges traditional SLAM ideas with modern AI foundation models, hinting at future systems that are both accurate and efficient, and that generalize well across many kinds of scenes.

Knowledge Gaps, Limitations, and Open Questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored in the paper, to guide future research.

- Loop closure and relocalization: The system appears to operate without global place recognition or loop-closure constraints; investigate integrating geometry-aware priors into loop closure and relocalization for long-term, drift-free mapping.

- Scalability to long sequences: Provide analysis and strategies for scaling dense bi-consistent bundle adjustment to thousands of frames and large environments (memory/time complexity, graph sparsification, windowing policies).

- Keyframe management: The “dynamically maintained keyframe graph” is not specified; formalize and evaluate selection, culling, and connectivity heuristics and their impact on accuracy and runtime.

- Absolute scale in monocular setups: Clarify how metric scale is established and maintained (beyond ATE alignment); study scale drift and propose mechanisms (e.g., scale priors, self-calibration) for metric reconstruction.

- Camera intrinsics and calibration: Assess sensitivity to calibration errors; extend to uncalibrated cameras and auto-calibration, and report performance under varying intrinsics and lens distortions.

- Rolling-shutter and distortion robustness: Evaluate and adapt the pipeline for rolling-shutter cameras and non-linear distortions common in consumer devices.

- Dynamic scenes handling: Develop explicit modeling for independently moving objects (segmentation, motion layers, dynamic BA), beyond the current residual thresholding that may suppress constraints near motion boundaries.

- Occlusion reasoning: Replace residual-threshold heuristics with visibility-aware, differentiable occlusion models (z-buffering, ray consistency) and evaluate their effect on consistency and accuracy.

- Reliability mask design: The binary gating via fixed thresholds may be brittle; explore learned, soft probabilistic reliability maps and study sensitivity to threshold choices.

- Training objectives and supervision: The paper does not specify the training losses (photometric, BA residuals, GT supervision); document and ablate the training recipe, including supervision sources and their contribution.

- Foundation priors ablation: Quantify the marginal benefit of the geometry-aware features (FoundationStereo/DepthAnything v2) vs. task-specific branches; compare to other foundation models (DUSt3R/MASt3R/VGGT) as priors within the same framework.

- Domain generalization: The model is trained on TartanAir (synthetic); evaluate robustness across outdoor scenes, low-light/night, HDR, weather (rain/snow), and diverse sensors (color/grayscale) and devise adaptation/fine-tuning strategies.

- Hardware and efficiency: Real-time is reported on an RTX 4090; provide runtime breakdown, memory footprint, and energy usage; assess performance on embedded/edge hardware and propose optimizations (model compression, quantization).

- Wide-baseline and low overlap: System behavior with very wide baselines or low co-visibility is unclear; design experiments and mechanisms (global descriptors, coarse pose priors) to handle large viewpoint changes.

- Photometric robustness: Investigate performance under severe exposure changes, flicker, and non-Lambertian effects; consider photometric normalization or learned appearance-invariance in the hybrid flow network.

- Visibility-constrained multi-view consistency: The geometry residual only applies for pixels with small residuals; evaluate bidirectional constraints under robust visibility masks and multi-view consistency beyond pairwise checks.

- Map representation and fusion: The paper evaluates per-keyframe depth and point clouds; specify and assess global fusion (TSDF/mesh/volumetric) strategies, topology preservation, and surface quality (normals, watertightness).

- Uncertainty quantification: Expose calibrated uncertainty for depth and pose (e.g., covariance estimates) and integrate it into optimization and downstream decision-making.

- Convergence and stability analysis: Provide theoretical/empirical analysis of the Bi-Consistent BA convergence properties, failure modes, and robustness to noise/outliers.

- Relational feedback beyond binary gating: Investigate richer feedback channels from optimization to matching (e.g., gradient-based or meta-learning strategies) that adapt matching windows, priors, or network states.

- Fair comparative evaluation: Expand comparisons to recent Gaussian-splatting SLAM systems and more foundation-augmented SLAM baselines under matched settings (calibration, keyframe policies, sequence lengths).

- Evaluation breadth: Add quantitative results for TNT and other datasets mentioned qualitatively; report statistical significance, confidence intervals, and sensitivity to metric thresholds (e.g., 0.5 m chamfer clipping).

- Failure-case catalog: Document representative failure scenarios (specular surfaces, thin structures, repetitive textures, textureless walls) and analyze which component fails (flow, priors, BA), guiding targeted remedies.

- Extension to multimodal SLAM: Explore visual–inertial, stereo, and RGB-D variants within the same bi-consistent framework, including how foundation priors interact with extra modalities.

- Privacy and robustness in real deployments: Assess system behavior with realistic data constraints (privacy-filtered imagery, compression artifacts, packet loss) and propose robustness measures.

Glossary

- 3D Gaussian Splatting: A 3D scene representation that renders radiance fields using Gaussian primitives for real-time performance; commonly used for dense mapping. "3D Gaussian Splatting~\cite{splatam, yan2024gs, matsuki2024gaussian}"

- Ablation Studies: Controlled experiments that isolate and evaluate the contribution of individual components in a model or system. "Ablation Studies on EuRoC datasets."

- Absolute Trajectory Error (ATE): A metric that measures the difference between estimated and ground-truth camera trajectories. "ATE (TUM, EuRoC, 7Scenes, ETH3D)"

- Area Under the Curve (AUC): A summary metric that aggregates performance across varying error thresholds. "our method achieves the highest AUC across error thresholds"

- Back-end optimization: The optimization component of a SLAM pipeline that refines poses and geometry based on constraints and residuals. "frontend priors are not explicitly refined or guided by the backend optimization."

- Back-projection: Mapping a pixel with known depth from image space back into 3D space. The paper also uses the term for projecting a transferred point back to its original pixel to check consistency. "back-projection to the original pixel"

- Bi-Consistent Bundle Adjustment Layer: A proposed layer that jointly optimizes depth and pose using both flow and geometry residuals to enforce multi-view consistency. "Bi-Consistent Bundle Adjustment Layer that jointly optimizes keyframe depth and pose using flow and geometry consistency residuals."

- Bidirectional consistency: A constraint ensuring mutual agreement of geometric predictions across views in both directions (i→j and j→i). "By enforcing bidirectional consistency, our formulation improves tracking and reconstruction accuracy in challenging scenes."

- Bundle Adjustment: Nonlinear optimization that jointly refines camera poses and 3D structure using multi-view constraints. "tracking, mapping, and bundle adjustment."

- Chamfer Distance: A symmetric distance metric between two point sets used for evaluating reconstruction accuracy and completeness. "Chamfer distance (7Scenes, EuRoC)."

- Confidence map: A per-pixel map indicating the reliability of flow or correspondence predictions, used to weight residuals or guide refinement. "predicts flow updates ($\Delta F$) and confidence maps."

- Co-visibility graph: A graph connecting keyframes that observe overlapping scene regions, used to structure optimization or training. "Each sequence forms a co-visibility graph with 18 edges."

- Correlation volume: A multi-dimensional volume encoding local feature correlations across displacement hypotheses for matching. "4D Correlation Volume"

- Cost volume matching: Estimating correspondences by searching within a cost/correlation volume over a defined radius. "outside the scope of reliable cost volume matching"

- Dense optical flow: Per-pixel motion field between images used as a unified representation for tracking and mapping. "leveraging dense optical flow as a unified representation for tracking and mapping."

- Edge-wise Flow Reliability: A local reliability indicator derived from forward projection residuals for a specific keyframe pair. "Edge-wise Flow Reliability."

- Flow GRU: A recurrent module (Gated Recurrent Unit) specialized for iterative flow refinement. "Predicted flow is iteratively refined via Flow GRU, guided by context features and learned reliability masks."

- Foundation depth models: Large pretrained models that provide strong priors for depth estimation to guide downstream tasks. "leveraging the guidance from foundation depth models."

- Front-end perception: The SLAM component that produces measurements (e.g., features, flow) used by the optimizer. "front-end perception and back-end optimization"

- Gauss-Newton optimization: An iterative method for minimizing nonlinear least-squares problems that approximates the Hessian with $J^\top J$, so it needs only first derivatives of the residuals. "We minimize $\mathcal{L}_{\text{BA}}$ using Gauss-Newton optimization."

- Jacobian: The matrix of partial derivatives of residuals with respect to parameters, used in gradient-based optimization. "We compute Jacobians with respect to both depth and pose"

- Keyframe: Selected frames used as reference points for tracking, mapping, and multi-view optimization. "Given a pair of keyframes, we estimate dense optical flow..."

- Keyframe-based matching paradigm: A SLAM approach that uses inter-keyframe correspondences to jointly optimize pose and geometry. "keyframe-based matching paradigm"

- Keyframe graph: A graph structure of keyframes and their connections used to manage correspondences and optimization. "Operating over a dynamically maintained keyframe graph"

- Loop closure: Detecting and enforcing consistency when the camera revisits a previously seen location to reduce drift. "even supports loop closure."

- Monocular dense SLAM: SLAM using a single RGB camera that reconstructs dense scene geometry and estimates trajectory. "a learning-based monocular dense SLAM system"

- Multi-view geometric constraints: Constraints that enforce consistency of geometry and pose across multiple views. "explicit enforcement of multi-view geometric constraints"

- NeRF (Neural Radiance Fields): A neural scene representation that models view-dependent radiance to render novel views. "NeRF~\cite{imap, nice-slam, johari2023eslam} or 3D Gaussian Splatting"

- Node-wise Geometric Reliability: A global reliability indicator computed by averaging geometry residuals across a keyframe’s neighbors. "Node-wise Geometric Reliability."

- Pose: The position and orientation of the camera in 3D space, often optimized jointly with depth. "jointly optimizes keyframe pose and depth"

- Reliability-Aware Refinement: A refinement mechanism that adapts the flow update based on residual-derived reliability masks. "Reliability-Aware Refinement mechanism"

- Simultaneous Localization and Mapping (SLAM): The problem of estimating a camera’s trajectory while reconstructing the environment. "Simultaneous Localization and Mapping (SLAM) is a fundamental problem in computer vision and robotics"

- Uncalibrated images: Image pairs without known camera intrinsics used by foundation models to predict geometry and poses. "from as few as two uncalibrated images."

- ViT-S: A small Vision Transformer variant used here for efficient encoding to achieve real-time performance. "adopting ViT-S"
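To make the Gauss-Newton and Jacobian entries concrete, here is a toy example that recovers the depth of one point from an observed pixel shift. The solver is generic; the one-parameter problem, the constants `FOCAL`, `BASELINE`, and `OBSERVED_SHIFT`, and the starting guess are assumptions chosen for illustration and are unrelated to the paper's actual bundle adjustment layer.

```python
import numpy as np

def gauss_newton(residual_fn, jacobian_fn, x0, iterations=10):
    """Minimize 0.5 * ||r(x)||^2 by repeatedly solving (J^T J) step = -J^T r."""
    x = np.atleast_1d(np.asarray(x0, dtype=float))
    for _ in range(iterations):
        r = residual_fn(x)
        J = jacobian_fn(x)
        step = np.linalg.solve(J.T @ J, -J.T @ r)
        x = x + step
    return x

# Toy problem: a camera with a 500 px focal length moves 0.1 m sideways and a
# point shifts by 25 px. Predicted shift is FOCAL * BASELINE / depth.
FOCAL, BASELINE, OBSERVED_SHIFT = 500.0, 0.1, 25.0

def residual(x):
    """Predicted minus observed pixel shift for depth x[0]."""
    return np.array([FOCAL * BASELINE / x[0] - OBSERVED_SHIFT])

def jacobian(x):
    """Derivative of the residual with respect to depth, as a 1x1 matrix."""
    return np.array([[-FOCAL * BASELINE / x[0] ** 2]])

depth = gauss_newton(residual, jacobian, x0=[1.5])  # converges towards 2.0 m
```

Starting from 1.5 m, the iterates approach the consistent depth of 2 m within a handful of steps. A full bundle adjustment solves the same kind of linear system, but over many depths and poses at once.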

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed now, leveraging FoundationSLAM’s real-time monocular dense SLAM (≈18 FPS on a high-end GPU), geometry-aware flow, bi-consistent bundle adjustment, and reliability-aware refinement.

- Indoor mobile robot navigation and mapping (AMRs, service robots)
  - Sector: Robotics, Logistics, Healthcare, Hospitality
  - What it enables: Robust localization and dense mapping in textureless or reflective indoor environments; better trajectory accuracy and denser maps for path planning and obstacle avoidance using a single RGB camera
  - Potential tools/products/workflows: ROS/ROS2 node integrating FoundationSLAM; drop-in SLAM module for AMR stacks; reliability heatmaps to gate navigation behavior in uncertain areas
  - Assumptions/dependencies: Known camera intrinsics; monocular scale ambiguity must be resolved via wheel odometry/IMU/fiducials; sufficient compute (desktop/embedded GPU); loop-closure not described in the paper for large-scale drift correction

- Handheld site capture and rapid as-built mapping
  - Sector: AEC (construction), Real estate, Facilities management
  - What it enables: Quick dense reconstructions from monocular walk-through videos for floor planning, quick measurements, and progress documentation (offline processing on workstation)
  - Potential tools/products/workflows: Desktop CLI/GUI “Monocular Scan-to-Model” tool; plug-in for existing photogrammetry pipelines to improve initialization and robustness in low-texture areas
  - Assumptions/dependencies: Metric scale needs external references (fiducials, tape measurements) or sensor fusion; privacy controls for indoor capture; compute availability for near-real-time processing

- Low-cost indoor inspection and inventory mapping
  - Sector: Retail, Warehousing, Manufacturing QA
  - What it enables: Dense mapping for small stores/stockrooms to update layouts and detect changes using a phone or monocular camera on a cart/robot
  - Potential tools/products/workflows: Store layout updater; anomaly/change detection pipeline using successive map comparisons; reliability-aware flags to prioritize human review
  - Assumptions/dependencies: Controlled motion and lighting; scale from known shelf dimensions or odometry; offline processing acceptable for consistency

- Teleoperation and telepresence localization
  - Sector: Robotics, Remote operations
  - What it enables: More stable pose tracking and dense scene context for remote operators in GPS-denied indoor spaces, aiding situational awareness
  - Potential tools/products/workflows: SLAM front-end for teleop stacks; reliability-driven UI that highlights uncertain regions for cautious maneuvering
  - Assumptions/dependencies: Network bandwidth; calibrated camera; potential fusion with IMU for fast maneuvers

- AR occlusion and room understanding on laptops/desktops
  - Sector: Software, AR/VR, E-commerce
  - What it enables: Improved real-time occlusion and placement stability for try-before-you-buy and design tools using monocular webcams
  - Potential tools/products/workflows: Unity/Unreal plugin that exposes dense depth, camera pose, and reliability masks; web/desktop AR authoring tools
  - Assumptions/dependencies: Not mobile-grade yet (paper results reported on powerful GPUs); scale may be relative unless anchored; illumination variation

- Drone-based indoor mapping in GPS-denied environments (short missions)
  - Sector: Robotics, Public safety, Facility inspection
  - What it enables: Robust pose tracking with dense recon in corridors/rooms where texture is limited and corridors create ambiguities
  - Potential tools/products/workflows: SLAM front-end for small indoor drones; post-flight dense map export for incident response or maintenance planning
  - Assumptions/dependencies: IMU fusion recommended; careful handling of motion blur; known intrinsics; regulatory compliance for indoor flight

- SLAM health monitoring and QA via reliability-aware refinement
  - Sector: Robotics software, Safety
  - What it enables: Real-time detection of unreliable regions (occlusions, repetitive textures, reflections) to slow or stop robots or request human confirmation
  - Potential tools/products/workflows: Reliability heatmap API; safety rules engine to adapt robot speed/behavior based on uncertainty
  - Assumptions/dependencies: Integration with planner/controller; calibrated uncertainty thresholds for each environment

- Academic research and teaching
  - Sector: Academia, Education
  - What it enables: A strong, reproducible dense monocular SLAM baseline for studying multi-view geometry, flow–geometry coupling, and differentiable BA
  - Potential tools/products/workflows: Course modules/labs; benchmarking on TUM/EuRoC/7Scenes/ETH3D; ablation studies of multi-view consistency and reliability modeling
  - Assumptions/dependencies: Availability of training/inference code and datasets; GPUs for real-time experiments

- Offline cultural heritage digitization (rooms, exhibits)
  - Sector: Culture, Museums, Tourism
  - What it enables: Cost-effective dense capture of indoor spaces where installing depth sensors is impractical
  - Potential tools/products/workflows: Volunteer/curator walk-through capture; post-processing to generate point clouds/meshes with confidence overlays
  - Assumptions/dependencies: Controlled capture routes; external scale references; permissions/privacy management for sensitive sites

- Post-disaster indoor assessment triage (pilot deployments)
  - Sector: Public sector, Emergency response
  - What it enables: Rapid situational mapping from volunteer videos to prioritize areas for expert inspection
  - Potential tools/products/workflows: Back-office triage pipeline using dense reconstructions and uncertainty scoring; lightweight capture guidelines for responders
  - Assumptions/dependencies: Varying video quality; safety and privacy policies; offline processing with human-in-the-loop review

Long-Term Applications

These require further research, optimization, or systems integration (e.g., scaling, sensor fusion, domain adaptation, regulatory validation).

- Consumer-grade mobile AR mapping on-device
  - Sector: Software, AR/VR, E-commerce
  - What it aims to enable: Real-time room-scale dense SLAM on smartphones for stable occlusion, persistent anchors, and home design
  - Potential tools/products/workflows: Mobile SDKs with model compression/quantization; semantic layering on top of dense maps
  - Assumptions/dependencies: Significant model optimization for mobile NPUs; battery/thermal constraints; privacy-preserving on-device processing

- Assistive navigation for the visually impaired
  - Sector: Healthcare, Accessibility
  - What it aims to enable: On-device, real-time dense mapping with uncertainty-aware guidance for safe indoor navigation
  - Potential tools/products/workflows: Wearable or phone-based navigation assistant that fuses SLAM with obstacle semantics and speech/haptic feedback
  - Assumptions/dependencies: Robustness in varied lighting and dynamic scenes; regulatory validation and clinical studies; low-latency edge inference

- Autonomous driving redundancy and mapping (submodules)
  - Sector: Automotive
  - What it aims to enable: Monocular dense SLAM as a redundancy layer for VO/SLAM stacks, providing uncertainty-aware cues in GPS-degraded zones
  - Potential tools/products/workflows: Multi-sensor fusion with stereo/LiDAR/IMU; reliability-gated map updates; long-horizon loop closure
  - Assumptions/dependencies: Automotive-grade robustness to weather/night; rigorous safety certification; extensive dataset adaptation

- Long-term multi-session indoor digital twins
  - Sector: AEC, Facilities, Smart buildings
  - What it aims to enable: Persistent, loop-closed dense maps across days/weeks with change detection and versioning
  - Potential tools/products/workflows: Multi-session mapping backend; loop closure and drift correction; change auditing dashboards using reliability cues
  - Assumptions/dependencies: Scalable back-end SLAM with loop closure and place recognition; identity/permission management; metric scale calibration

- Industrial inspection in challenging environments
  - Sector: Energy, Oil & Gas, Utilities, Mining
  - What it aims to enable: Dense mapping of GPS-denied, reflective, or low-texture spaces (substations, tunnels, vessels) for defect localization
  - Potential tools/products/workflows: Ruggedized robot/drone payloads; SLAM fused with IMU/ranging; anomaly detection on dense recon
  - Assumptions/dependencies: Domain adaptation to low light, dust, specular surfaces; safety protocols; electromagnetic interference mitigation

- Medical endoscopy/bronchoscopy 3D mapping
  - Sector: Healthcare
  - What it aims to enable: Dense monocular SLAM for surgical navigation and lesion measurement in deformable, low-texture scenes
  - Potential tools/products/workflows: Domain-specialized models with photometric/shape priors; clinical visualization tools with uncertainty quantification
  - Assumptions/dependencies: Strong domain shift vs. natural scenes; handling tissue deformations and specularities; clinical validation and regulation

- Smart-home robots with persistent maps and semantics
  - Sector: Consumer robotics
  - What it aims to enable: Reliable mapping across rooms/floors with semantic labels (furniture, no-go zones) and robust handling of dynamic rearrangements
  - Potential tools/products/workflows: Semantic SLAM layer atop FoundationSLAM; loop-closure and multi-floor alignment; user-friendly map editing using confidence overlays
  - Assumptions/dependencies: Compute and cost constraints; privacy; long-term autonomy and failure recovery

- Multi-agent collaborative SLAM
  - Sector: Robotics, Public safety, Industrial operations
  - What it aims to enable: Faster coverage and robust mapping via shared dense maps and cross-agent consistency checks
  - Potential tools/products/workflows: Map merging with multi-view geometric consistency; reliability-aware data association; distributed BA
  - Assumptions/dependencies: Communication bandwidth/latency; robust place recognition and loop closure; consistent scale alignment

- Insurance property assessment and claims automation
  - Sector: Finance/Insurance
  - What it aims to enable: Standardized indoor scans from monocular video for pre-underwriting surveys and post-claim damage assessment
  - Potential tools/products/workflows: Claims intake app; back-end dense recon with confidence scoring to flag ambiguous regions for manual review
  - Assumptions/dependencies: Metric calibration and legal admissibility; privacy and PII redaction; fraud detection and model risk governance

- Digital heritage and tourism experiences at scale
  - Sector: Culture, Tourism
  - What it aims to enable: Large catalogs of indoor 3D reconstructions with reliable geometry for interactive tours and education
  - Potential tools/products/workflows: Batch processing pipelines; automatic curation based on reliability thresholds; semantic annotation layers
  - Assumptions/dependencies: Loop closure for large complexes; content rights and privacy; multi-user session consolidation

- Policy and public-sector surveying with commodity cameras
  - Sector: Government, Urban planning, Disaster management
  - What it aims to enable: Low-cost mapping standards and workflows for municipal interiors, shelters, and public facilities
  - Potential tools/products/workflows: Procurement guidelines specifying calibration procedures and uncertainty reporting; volunteer capture programs for resilience planning
  - Assumptions/dependencies: Data governance and privacy frameworks; training and QA; standardized reporting of uncertainty and scale

Cross-cutting assumptions and dependencies

- Camera model and calibration: The paper assumes known intrinsics for accurate projection; uncalibrated use requires additional methods or priors.

- Scale ambiguity: Monocular SLAM does not provide absolute scale by default; external cues (IMU/odometry/fiducials/known object sizes) or calibrated depth priors are needed for metric use cases.

- Compute and platform: Reported 18 FPS is on a high-end GPU with half-resolution foundation encoding; embedded/mobile on-device deployment needs substantial optimization.

- Environment dynamics and conditions: While robustness improves (low texture, reflections), heavy motion blur, extreme dynamics, and low light require sensor fusion and domain adaptation.

- Loop closure and long-horizon mapping: The paper focuses on tightly coupled flow–geometry and multi-view consistency, not on large-scale loop closure; long-term, multi-session and city-scale deployments need additional components.

- Privacy, safety, and compliance: Indoor mapping raises privacy concerns; safety-critical uses must integrate uncertainty estimates into decision-making and undergo domain-specific validation.
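The scale-ambiguity point above can be made concrete with a small sketch: given a few lengths measured in the unscaled reconstruction and the same lengths measured in metres (for example between fiducials), a single scale factor follows from least squares. The function names and the sample numbers are hypothetical, and this is one simple option rather than anything the paper prescribes.

```python
def estimate_scale(estimated_lengths, metric_lengths):
    """Least-squares scale s minimizing sum((s * e - m)^2) over paired lengths."""
    numerator = sum(e * m for e, m in zip(estimated_lengths, metric_lengths))
    denominator = sum(e * e for e in estimated_lengths)
    if denominator == 0:
        raise ValueError("estimated lengths must not all be zero")
    return numerator / denominator

def rescale_points(points, scale):
    """Apply the scale factor to a list of (x, y, z) points."""
    return [tuple(scale * coordinate for coordinate in point) for point in points]

# Two reference lengths: reconstruction units versus tape-measured metres.
scale = estimate_scale([1.0, 2.0], [2.5, 5.0])
metric_points = rescale_points([(0.4, 0.0, 1.2)], scale)
```

In this example every reconstructed length is 2.5 times too short, so multiplying the map by the recovered factor brings it to metric units. Noisy reference measurements would simply yield the best-fitting compromise.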
