- The paper introduces a framework that integrates deterministic flow-based generative models with operations research to enforce global constraints and provide traceable auditability.

- It proposes a minimax, game-theoretic approach that improves safety by explicitly addressing tail risks and worst-case adversarial scenarios.

- The work recasts the role of operations research from solution provider to system architect, a shift it argues is crucial for scalable autonomous operations across diverse domains.

Assured Autonomy: Operations Research as the Cornerstone of Generative AI Systems

Introduction: From Generative Models to Autonomous Operators

Generative AI (GenAI) is evolving from content synthesis (e.g., text, image generation) toward agentic systems that sense, decide, and act autonomously in real-world operational workflows. This transition exposes the autonomy paradox: increasing autonomy magnifies both potential impact and operational risk, mandating formal structure, hard constraints, and tail-risk control. The paper "Assured Autonomy: How Operations Research Powers and Orchestrates Generative AI Systems" (2512.23978) offers a comprehensive conceptual and technical framework for achieving assured autonomy by integrating operations research (OR) principles directly into generative AI system design and deployment.

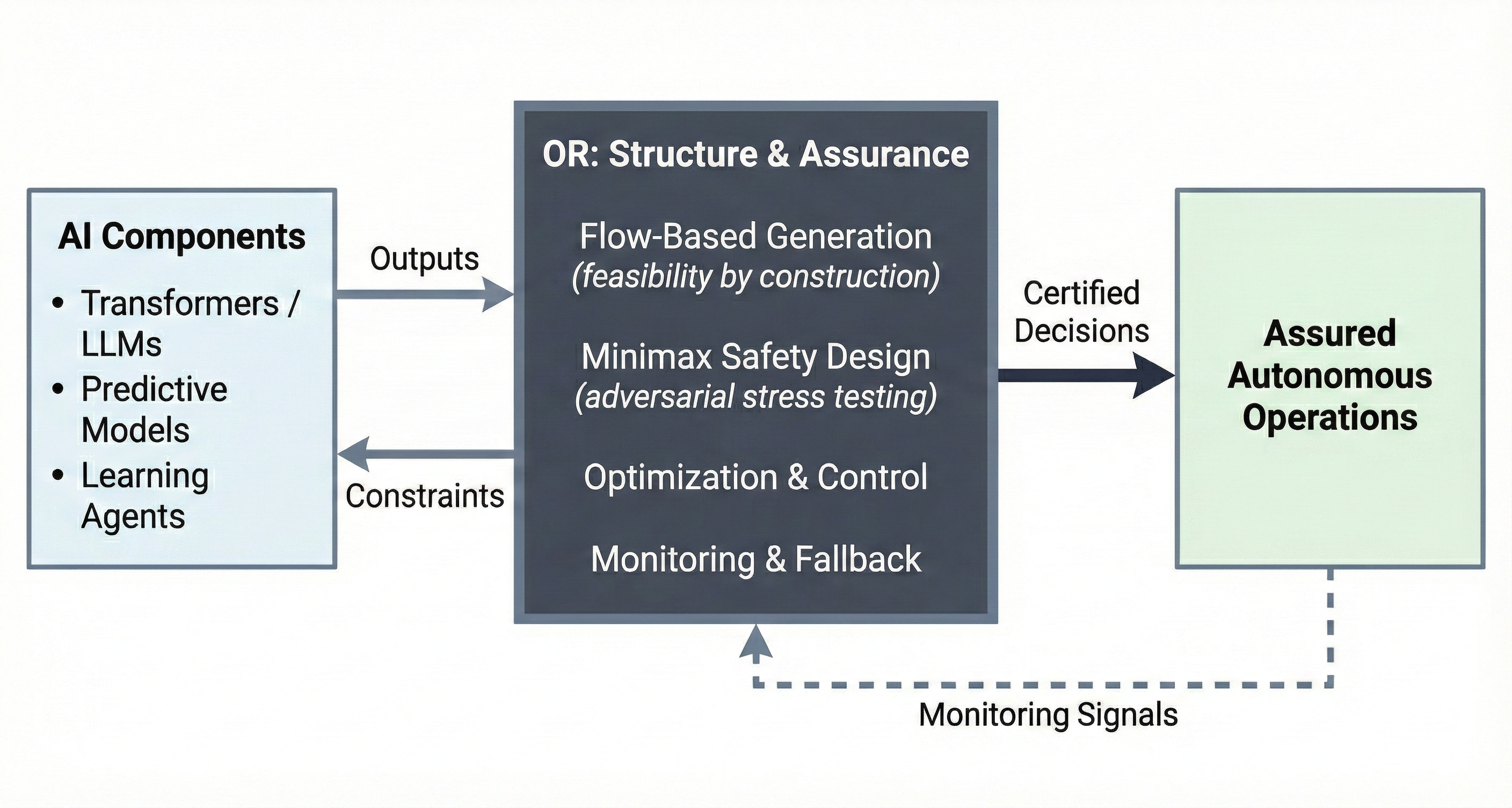

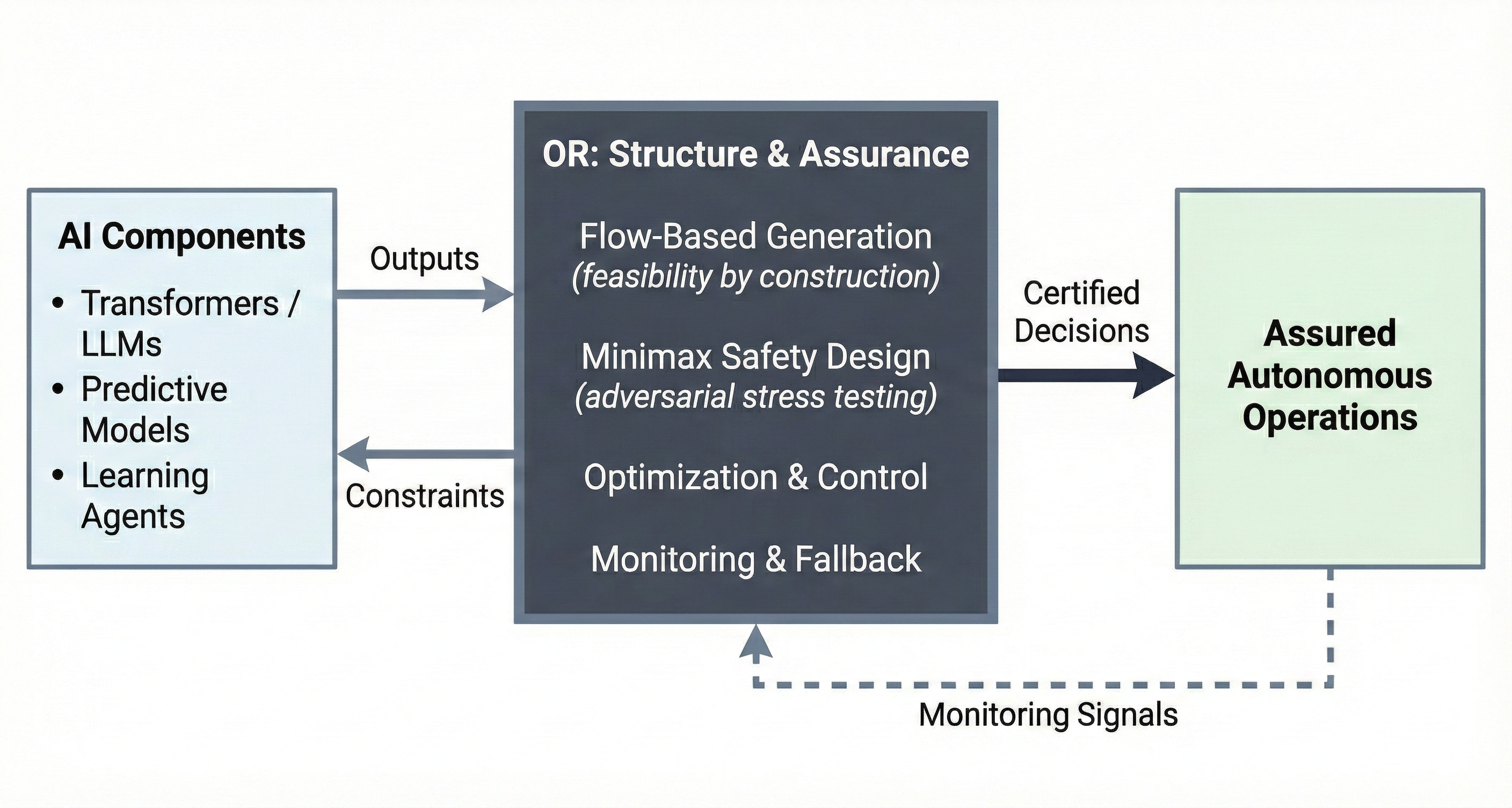

Figure 1: The OR-AI integration architecture for assured autonomy situates AI, generative modeling, and OR safety mechanisms in a unified end-to-end stack.

Limitations of Current GenAI Paradigms in Operational Autonomy

State-of-the-art GenAI systems, notably LLMs and diffusion models, achieve high performance on generative metrics but remain structurally ill-equipped for operational settings with strict constraints and rare, high-consequence failure modes. Most models are trained on next-token or likelihood objectives, emphasizing plausibility rather than certifiable feasibility, stability, or robustness under distribution shift. Stochastic generative mechanisms introduce irreducible, non-deterministic errors that persist as autonomy and criticality increase, rendering average-case certification insufficient for applications with effectively zero acceptable failure rates.

Efforts to impose constraints via prompting, penalty terms, or post hoc filtering yield at best semantic, not structural, guarantees. True operational invariants—such as flow conservation in supply chains, separation minima in aviation, or capacity requirements in healthcare and power grids—are global, dynamical, and not expressible as simple output attributes or sample-level conditions. Tail risks, responsible for catastrophic outcomes, are poorly represented in both training and evaluation regimes focusing on typical-case performance.

Deterministic Flow-Based Generative Models: Structure and Auditability

The authors advocate for flow-based generative models, such as continuous normalizing flows and flow matching architectures, as foundational technology for assured autonomy. These models define sample generation as deterministic transport governed by ordinary differential equations (ODEs), with stochasticity isolated in the initial sampling. The core benefit is that constraints and feasibility requirements can be imposed either on the evolution of the probability distribution or at the individual trajectory level. This architectural stance contrasts sharply with diffusion-based models, where stochastic sampling complicates enforceability and auditability of operational invariants.
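As a rough formalization of the paragraph above (the notation is an assumption introduced here for illustration, not taken from the paper), a flow-based generator with velocity field $v_\theta$ transports a latent draw $x_0$ along an ODE, and the induced densities $p_t$ satisfy the continuity equation:

$$
\frac{\mathrm{d}x_t}{\mathrm{d}t} = v_\theta(x_t, t), \quad x_0 \sim p_0, \qquad \partial_t p_t + \nabla \cdot \left(p_t\, v_\theta\right) = 0 .
$$

A trajectory-level equality constraint $g(x_t) = 0$ is then preserved whenever the starting point is feasible and the velocity stays tangent to the constraint set, because

$$
\frac{\mathrm{d}}{\mathrm{d}t}\, g(x_t) = \nabla g(x_t)^{\top} v_\theta(x_t, t) = 0 .
$$

All randomness enters through $x_0$, which is why a stored latent draw suffices to reproduce a sample.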

Figure 2: A comparison between deterministic, constraint-compatible flow-based models and inherently stochastic diffusion models in terms of their ability to enforce feasibility during generation.

Flow-based approaches enable:

- Auditability: Every generated sample can be traced back to its latent source, supporting exact replay and forensic analysis.

- Constrainability: Constraints are incorporated directly into the transport dynamics, ensuring feasibility by construction rather than post hoc repair.

- Tail Sensitivity: Scenario generation can target tail events and distributional shifts that classical stochastic generation underrepresents.
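The auditability and constrainability properties listed above can be illustrated with a minimal sketch. It assumes a single linear invariant `A @ x = b` (for example, a total that must be conserved) and uses a hand-written `toy_velocity` as a hypothetical stand-in for a learned velocity field; neither the function names nor the constraint come from the paper.

```python
import numpy as np

def tangent_projector(A):
    """Matrix P with A @ P = 0, so a projected velocity cannot change A @ x."""
    return np.eye(A.shape[1]) - A.T @ np.linalg.solve(A @ A.T, A)

def toy_velocity(x, t):
    """Hypothetical stand-in for a learned velocity field v_theta(x, t)."""
    return (1.0 - t) * np.tanh(x) + t * np.roll(x, 1)

def generate(seed, A, b, steps=50):
    """Integrate the constrained ODE with forward Euler from a seeded latent."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=A.shape[1])          # the only source of randomness
    # Move the latent onto the feasible set {x : A x = b}.
    x = z - A.T @ np.linalg.solve(A @ A.T, A @ z - b)
    P = tangent_projector(A)
    dt = 1.0 / steps
    for k in range(steps):
        # Projected velocity keeps every Euler step inside the feasible set.
        x = x + dt * (P @ toy_velocity(x, k * dt))
    return x

A = np.array([[1.0, 1.0, 1.0, 1.0]])  # assumed invariant: components sum to 10
b = np.array([10.0])
sample = generate(seed=7, A=A, b=b)
replay = generate(seed=7, A=A, b=b)   # exact replay from the stored seed
print(A @ sample, np.array_equal(sample, replay))
```

Because the constraint is linear, the projected Euler step preserves it up to floating-point error. Nonlinear invariants would need a different mechanism (for instance a correction step), which this sketch does not cover.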

Fine-tuning or conditioning flow-based generators with in-domain data and operational objectives bridges pretrained representation learning with operational constraint adherence, reducing engineering overhead.

Game-Theoretic Robustness and Minimax Stress Testing for AI Safety

Constrainable generation is necessary but insufficient for safety in high-autonomy systems. Assured autonomy requires that policies be robust to worst-case or adversarial scenarios, including distribution shifts, strategic interactions, and unmodeled events that dominate social cost. The framework formalizes operational safety as a minimax game that combines distributionally robust optimization (DRO) with game-theoretic adversarial design: the system designer selects a policy to minimize loss, while an adversary chooses a distribution from a prescribed ambiguity set to maximize it.
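One common way to write such a minimax game is the standard DRO form below. The symbols ($\pi$ for the policy, $\ell$ for the loss, $P_0$ for a nominal distribution, $D$ for a discrepancy measure, $\varepsilon$ for the ambiguity radius) are assumptions chosen here for illustration and may differ from the paper's notation:

$$
\min_{\pi \in \Pi} \; \sup_{Q \in \mathcal{U}_\varepsilon(P_0)} \; \mathbb{E}_{\xi \sim Q}\!\left[\ell(\pi, \xi)\right],
\qquad
\mathcal{U}_\varepsilon(P_0) = \left\{ Q : D(Q, P_0) \le \varepsilon \right\} .
$$

If the adversary's distributions are generated by a parameterized transport map $T_\phi$, one can take $Q = (T_\phi)_{\#} P_0$ and search over admissible parameters $\phi$ instead of over distributions directly.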

Figure 3: The minimax game-theoretic framework: a designer selects a control policy robust to the worst-case, adversarially chosen perturbations within a prescribed ambiguity set.

Flow-based generators have a practical advantage in minimax setups: ambiguity sets over distributions correspond to parameterized spaces of explicit transport maps, enabling expressive search for least-favorable distributions and continuous, scenario-level stress testing. Constraints relevant to OR (e.g., capacity, balance, integrality) can be applied both at the sample and distribution level, supporting tight coupling of generation and optimization.
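A toy version of this minimax search can be sketched as follows. It assumes a newsvendor-style loss and a deliberately simple one-parameter transport map (a shift of the demand scenarios by `theta`) as the ambiguity set; the cost parameters, the grid search, and all names are hypothetical choices for illustration, not the paper's method.

```python
import numpy as np

def newsvendor_cost(q, demand, under=4.0, over=1.0):
    """Per-scenario cost of ordering q: shortage and excess are penalized."""
    return under * np.maximum(demand - q, 0.0) + over * np.maximum(q - demand, 0.0)

def worst_case_cost(q, base, thetas):
    """Adversary picks the shift theta that maximizes the expected cost."""
    return max(newsvendor_cost(q, base + th).mean() for th in thetas)

rng = np.random.default_rng(0)
base = rng.gamma(shape=4.0, scale=25.0, size=2000)  # nominal demand scenarios
thetas = np.linspace(-20.0, 20.0, 41)               # assumed ambiguity set of shifts
grid = np.linspace(0.0, 300.0, 301)                 # candidate order quantities

# The nominal designer ignores the adversary; the robust designer plays minimax.
nominal_q = min(grid, key=lambda q: newsvendor_cost(q, base).mean())
robust_q = min(grid, key=lambda q: worst_case_cost(q, base, thetas))

print("nominal q:", nominal_q, "worst-case cost:", worst_case_cost(nominal_q, base, thetas))
print("robust  q:", robust_q, "worst-case cost:", worst_case_cost(robust_q, base, thetas))
```

A learned flow would replace the shift with a richer family of maps and the grid search with gradient-based optimization, but the structure (inner maximization over maps, outer minimization over policies) stays the same.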

Redefining the Role of Operations Research: From Solvers to System Architects

The scale-up of autonomy entails a reorganization of OR's function. The paper delineates a progression of roles:

- Solver: In human-in-the-loop or decision-support systems, OR provides prescriptive solutions atop predictive AI.

- Guardrail: In partially autonomous systems, OR certifies feasibility, projects candidate actions onto admissible sets, and polices risk boundaries.

- Architect: In fully autonomous operations, OR designs the admissible action regime, monitoring protocols, escalation policies, and the constitutional rules by which agentic systems operate and interact.
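The guardrail role described above, projecting a candidate action onto an admissible set, can be sketched for one simple case. The example assumes the admissible set is a box with a shared capacity limit, `{x : lo <= x <= hi, sum(x) <= cap}`, and computes the Euclidean projection by bisection on the capacity multiplier. The function name and the constraint set are illustrative assumptions, and the sketch requires `sum(lo) <= cap` so that the set is nonempty.

```python
import numpy as np

def project_to_admissible(a, lo, hi, cap, iters=100):
    """Euclidean projection of a onto {x : lo <= x <= hi, sum(x) <= cap}."""
    x = np.clip(a, lo, hi)
    if x.sum() <= cap:
        return x                      # capacity not binding: clipping suffices
    # Otherwise the projection is clip(a - lam, lo, hi) for some lam > 0;
    # find lam by bisection so that the capacity constraint is met.
    lam_lo, lam_hi = 0.0, float(np.max(a - lo))
    for _ in range(iters):
        lam = 0.5 * (lam_lo + lam_hi)
        if np.clip(a - lam, lo, hi).sum() > cap:
            lam_lo = lam
        else:
            lam_hi = lam
    return np.clip(a - lam_hi, lo, hi)  # lam_hi always satisfies the capacity

candidate = np.array([5.0, 9.0, 4.0])   # action proposed by a generative agent
lo = np.zeros(3)
hi = np.full(3, 6.0)
safe = project_to_admissible(candidate, lo, hi, cap=12.0)
print(safe)
```

An action that is already admissible passes through unchanged, so the guardrail only intervenes when a constraint would be violated.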

This evolution is domain-agnostic: whether in supply chain management, mobility and aviation, healthcare, or power systems, OR governs admissible agent behavior, tail risk discipline, and system-level feedback mechanisms. The integration of OR with GenAI is also bidirectional: OR techniques underpin not only operational safety but also efficient inference scheduling, resource allocation, and online optimization within GenAI system infrastructure.

Domain Applications and Cross-Cutting Implications

- Supply Chains: Autonomous multi-agent systems require constraint-preserving scenario generation, closed-loop stability monitoring, and tail-risk stress testing. Flow-based generators facilitate auditable, feasible scenario synthesis for robust dynamic allocation and replenishment.

- Mobility/Aviation: Safety invariants such as separation and right-of-way demand trajectory generation regimes in which feasibility is an invariant. Deterministic transport allows certification and continuous monitoring, while minimax control design addresses rare interaction-induced failures.

- Healthcare: Workflow tasks (e.g., ambient AI scribes) can be automated, but clinical autonomy over high-stakes decisions must be gated by statistical monitoring and escalation/fallback logic. Flow-based generators support structured, plausible surge and failure scenario generation for robust scheduling and triage.

- Power Grids: Grid control has long operated under explicit minimax stress testing (e.g., N-1 security). The GenAI/OR stack supports integration of forecast, remediation, and contingency planning while preserving hard invariants and enabling adversarial scenario exploration.
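To make the N-1 idea mentioned for power grids concrete, here is a heavily simplified sketch. It checks only generation adequacy (whether remaining capacity covers peak demand after losing any single unit) and ignores network topology, line outages, and power-flow limits, all of which a real N-1 security analysis would include. The function name and the numbers are hypothetical.

```python
def n_minus_1_secure(capacities_mw, peak_demand_mw):
    """Return (secure, critical): critical lists units whose loss causes a shortfall."""
    total = sum(capacities_mw)
    critical = [
        i for i, cap in enumerate(capacities_mw)
        if total - cap < peak_demand_mw   # worst case: unit i is unavailable
    ]
    return (not critical, critical)

units = [400.0, 300.0, 300.0, 200.0]      # assumed generator capacities in MW
secure, critical = n_minus_1_secure(units, peak_demand_mw=850.0)
print(secure, critical)
```

The check enumerates the adversary's moves (each single outage) and tests the worst one, which is the same minimax pattern applied to a finite contingency set.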

Research Agenda and Future Directions

The paper identifies four technical priorities:

- Feasibility-by-Construction: Directly integrating constraint satisfaction mechanisms into the generative and decision layers using differentiable optimization and safe RL methodologies.

- Minimax Safety and Verification: Scaling tractable, scenario-based stress testing, worst-case distribution generation, and formal certification of operational safety properties.

- Lifecycle Monitoring and Handoff: Developing continuous sequential detection and escalation protocols, aligning with SPC and control-theoretic approaches for real-time governance.

- Benchmarks and Data Readiness: Establishing standardized operational datasets, digital twins, and testbeds focused on tail-risk, stability, and violation rates rather than typical-case accuracy.
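The lifecycle monitoring priority above refers to SPC-style sequential detection. One classical instance is a one-sided CUSUM chart, sketched below with an escalation trigger. The `target`, `slack`, and `threshold` values and the deterministic test stream are illustrative assumptions, not settings from the paper.

```python
def cusum_alarm(stream, target=0.0, slack=0.5, threshold=5.0):
    """Return the first index at which the upper CUSUM statistic exceeds threshold."""
    s = 0.0
    for i, value in enumerate(stream):
        # Accumulate evidence of an upward shift; reset to zero when none.
        s = max(0.0, s + (value - target - slack))
        if s > threshold:
            return i                  # escalate: hand off to a fallback or human
    return None                       # no alarm: autonomy continues

in_control = [0.0] * 100                  # monitored metric stays on target
shifted = [0.0] * 50 + [1.0] * 50         # metric shifts upward at step 50

print(cusum_alarm(in_control))
print(cusum_alarm(shifted))
```

In practice the monitored stream would be noisy (for example, a constraint-violation rate or a forecast residual), and the slack and threshold would be tuned to trade detection delay against false alarms.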

Conclusion

The transition to agentic GenAI in operational domains necessitates a shift from average-case plausibility to assured autonomy, anchored by explicit, enforceable structure, minimax safety, and continuous governance. Deterministic, constraint-compatible generative models, coupled with distributionally robust, adversarial design and OR-defined monitoring regimes, are essential to scaling autonomy responsibly. OR moves beyond solution delivery to systemic architecture, specifying the rules, monitoring, and escalation that bound agentic behavior. Further research must advance integration at the learning-optimization boundary, tractable minimax verification, and the construction of domain-relevant benchmarks. Only with this discipline can autonomy deliver high reliability and operational safety at scale.