- The paper demonstrates that experimental conditions (XR setting, speech modality, and processing locus) do not significantly alter overall acceptance, although privacy and trust concerns persist.

- It uses a 2×2×3 between-subjects factorial design, with robust OLS regression and mixed-effects models, to show how user demographics and familiarity with generative AI shape acceptance and apprehension.

- The study highlights that high-sensitivity data types, such as location and video streams, trigger elevated concerns, underscoring the need for transparent data practices in XR systems.

User Acceptance and Concerns Toward LLM-powered Conversational Agents in Immersive Extended Reality

Introduction

The integration of large language models (LLMs) as conversational agents within Extended Reality (XR) systems brings together generative AI and immersive technologies, with applications spanning training, maintenance, and entertainment. While such multimodal interfaces offer considerable potential for adaptability and naturalistic interaction, they also exacerbate longstanding issues of security, privacy, social acceptance, and trust, especially as sensor-rich XR devices collect diverse streams of behavioral data. This paper presents an empirical investigation, based on a large-scale user study (n=1036), of users’ acceptance of and concerns about LLM-powered conversational agents in XR environments. The study examines how interaction modality, context, and data processing location shape adoption and apprehension.

Study Design and Analytical Framework

A 2×2×3 between-subjects factorial design manipulated three factors: XR setting (Mixed Reality [MR] versus Virtual Reality [VR]), speech interaction type (basic voice commands versus generative AI-powered conversation), and data processing location (on-device, user-controlled server, or application cloud). Participants were administered standardized acceptance (UTAUT2) and concern (MRC) measures, alongside targeted tasks ranking sensitivity of specific data types and rating perceived extractability from XR contexts. Robust OLS regression and mixed-effects modeling isolated the effects of experimental conditions and pertinent covariates, including demographic and usage attributes.
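To make the structure of the design concrete, the following is a minimal sketch of how the 12 cells of a 2×2×3 between-subjects design could be enumerated and participants spread across them. The level labels are taken from the description above; the balanced random assignment, the function names, and the seed are assumptions for illustration and are not claimed to be the procedure the study used.

```python
import itertools
import random
from collections import Counter

# Factor levels as named in the study design; the strings are illustrative labels.
XR_SETTINGS = ["MR", "VR"]
SPEECH_TYPES = ["basic voice commands", "generative AI conversation"]
PROCESSING_LOCATIONS = ["on-device", "user-controlled server", "application cloud"]


def build_conditions():
    """Return every cell of the 2x2x3 design as an (xr, speech, location) tuple."""
    return list(itertools.product(XR_SETTINGS, SPEECH_TYPES, PROCESSING_LOCATIONS))


def assign_balanced(n_participants, conditions, seed=0):
    """Give each participant exactly one condition (between-subjects).

    Assumption: balanced random assignment, so cell sizes differ by at most one.
    The actual recruitment and assignment procedure may have been different.
    """
    rng = random.Random(seed)
    repeats = -(-n_participants // len(conditions))  # ceiling division
    # Repeat the full condition list, then truncate to the sample size.
    slots = (conditions * repeats)[:n_participants]
    rng.shuffle(slots)
    return slots


conditions = build_conditions()
assignment = assign_balanced(1036, conditions)
cell_sizes = Counter(assignment)

print(len(conditions))
print(min(cell_sizes.values()), max(cell_sizes.values()))
```

Because each participant sees only one cell, every comparison between conditions is a comparison between different groups of people, which is why covariates such as demographics and prior usage are included in the regression models.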

Survey Constructs and Distributions

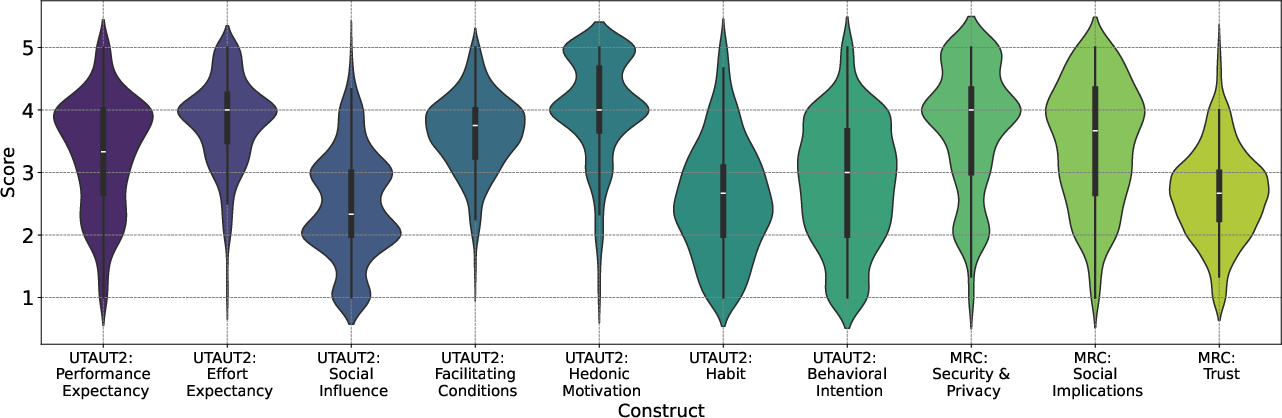

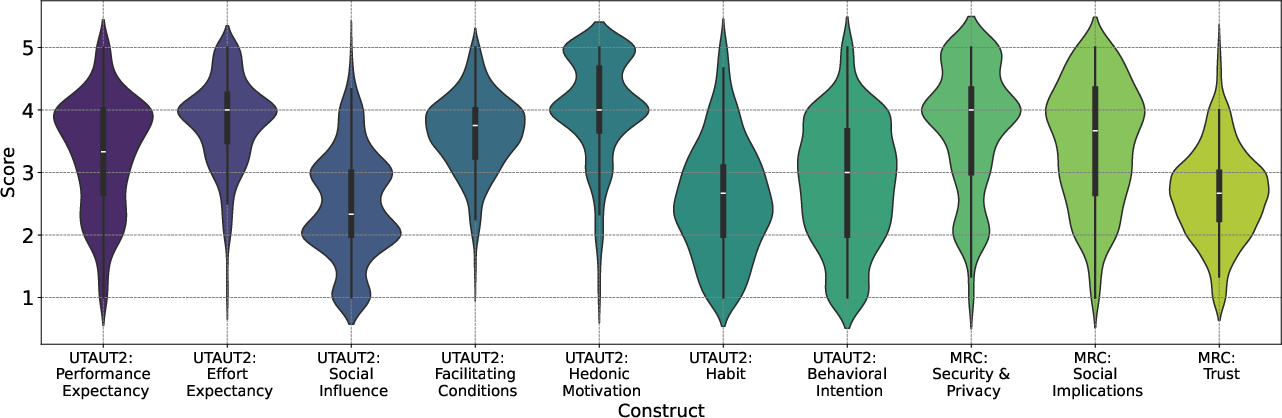

Analysis revealed that the primary experimental manipulations (XR setting, speech modality, and processing locus) yielded no significant main or interaction effects on technology acceptance or articulated concerns. Regardless of condition, respondents indicated generally high acceptance of LLM-powered XR agents while retaining reservations about privacy, security, and trust. The means and distributions of the core UTAUT2 and MRC constructs were comparable across all condition permutations.

Figure 1: Survey response distributions for the different constructs of UTAUT2 and MRC. The Trust construct in the MRC questionnaire is reverse-coded according to the original survey.
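Reverse coding, as mentioned for the Trust construct, can be sketched as follows. The 1-to-7 response range and the helper names are assumptions made for illustration; the summary does not state the scale width used by either questionnaire.

```python
def reverse_code(score, scale_min=1, scale_max=7):
    """Flip a Likert response so that the scale direction is inverted.

    Assumption: a 1..7 response scale; adjust the bounds for other scales.
    """
    if not scale_min <= score <= scale_max:
        raise ValueError(f"score {score} is outside {scale_min}..{scale_max}")
    return scale_min + scale_max - score


def construct_score(item_scores, reverse=False, scale_min=1, scale_max=7):
    """Average the items of one construct, optionally reverse-coding them first."""
    if reverse:
        item_scores = [reverse_code(s, scale_min, scale_max) for s in item_scores]
    return sum(item_scores) / len(item_scores)


# Hypothetical responses from one participant to three items of one construct.
print(construct_score([2, 3, 1], reverse=True))
```

Reverse coding matters when reading a figure like this one: for a reverse-coded construct, a value at one end of the axis has the opposite meaning to the same value on the other constructs.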

Secondary analyses highlighted pronounced differences linked to user background and demographic factors. Notably, habitual generative AI users signaled greater acceptance and trust but, paradoxically, also reported elevated concerns about privacy and social implications. In contrast, XR device owners were more skeptical, scoring lower on behavioral intention, facilitating conditions, hedonic motivation, and social acceptance. Across constructs, men reported higher acceptance and consistently lower concerns than women.

Sensitivity Analysis: Data Types and Perception

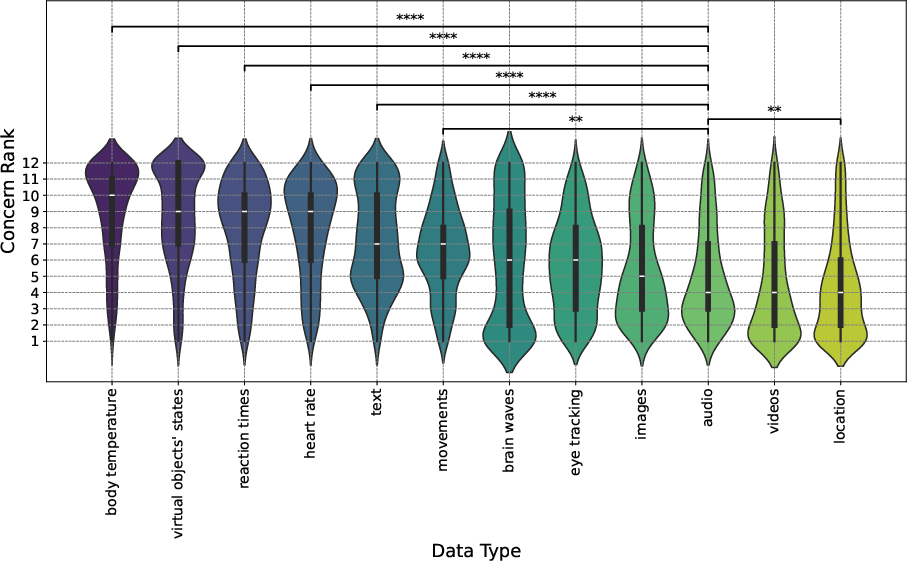

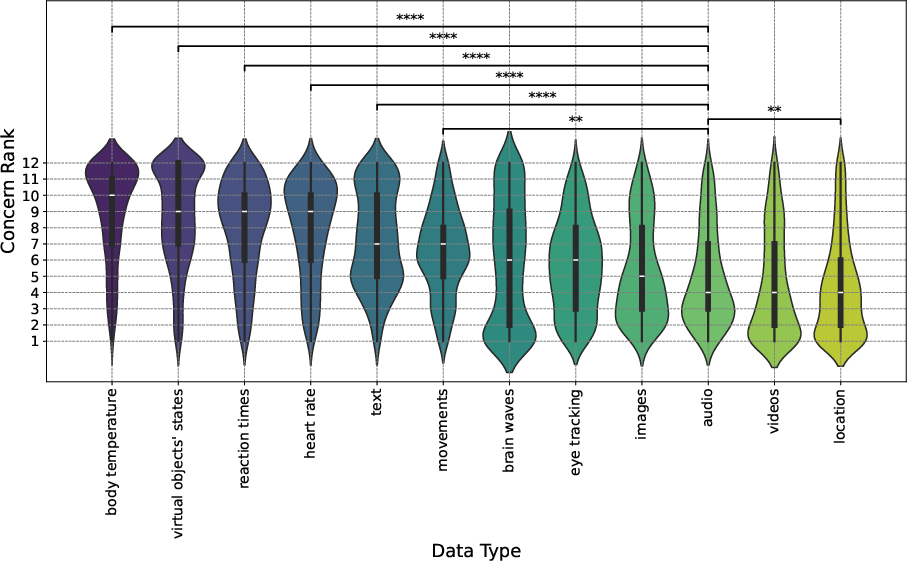

Data type sensitivity analysis revealed that location data triggers the highest apprehension, even above modalities most directly implicated in conversation (i.e., audio). Other high-concern modalities included video and image streams, whereas physiological signals (body temperature, heart rate) and interactional metrics (virtual object states, reaction times) were consistently rated as less sensitive.

Figure 2: Participants' concerns on different data types. The smaller the number, the higher the concerns participants had. ** and **** correspond to p<.01 and p<.0001, respectively.
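The "smaller number, higher concern" reading of the ranking task can be illustrated with a short sketch that averages per-participant ranks. The rankings below are invented toy values, not study data, and the function names are hypothetical; the summary does not specify how the ranks were aggregated or tested.

```python
from statistics import mean


def mean_ranks(rankings):
    """Average the rank each data type received (1 = most concerning).

    `rankings` is a list of dicts, one per participant, mapping a data type
    to the rank that participant gave it.
    """
    data_types = rankings[0].keys()
    return {d: mean(r[d] for r in rankings) for d in data_types}


def order_by_concern(rankings):
    """Return the data types sorted from most to least concerning."""
    averages = mean_ranks(rankings)
    return sorted(averages, key=averages.get)


# Invented rankings from three hypothetical participants, for illustration only.
toy_rankings = [
    {"location": 1, "audio": 2, "heart rate": 3},
    {"location": 1, "audio": 3, "heart rate": 2},
    {"location": 2, "audio": 1, "heart rate": 3},
]

print(order_by_concern(toy_rankings))
```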

Concurrently, respondents’ judgments about the technical feasibility of data extraction were well-aligned with contemporary XR device capabilities, especially for audio, location, eye tracking, image, and video data. Physiological parameters such as brain waves, heart rate, and body temperature drew inconsistent attributions, reflecting both participant uncertainty and current technical limitations.

Figure 3: Participants' perception of what type of data can be extracted from the XR settings that include conversational agents. Frequency corresponds to the number of participants who thought the extraction was possible.

Implications and Recommendations

The results do not support the hypotheses that interaction modality (LLM-based versus command-based), immersion context (MR versus VR), or data processing location (on-device versus cloud) by itself alters user acceptance or concern. Instead, outcomes are more tightly linked to users’ familiarity with generative AI and XR, and to stable demographic correlates. This underlines the salience of sociotechnical expectations over mere technical affordances.

Two principal recommendations emerge:

- Education and Familiarity Initiatives: Increasing generative AI literacy through structured education and enabling routine engagement is likely to foster greater acceptance and trust across user segments.

- Transparent and Granular Communication: Designers and vendors of XR systems must proactively communicate data practices and privacy safeguards, and in particular clarify how location and other high-sensitivity data types are handled and processed.

Limitations and Prospects for Future Research

Several limitations circumscribe the generalizability of these results. Sample participants were overwhelmingly familiar with generative AI, potentially inflating acceptance relative to naïve populations. Self-selection and hypothetical scenario-based responses may also bias results away from real-world revealed preferences. Longitudinal in-the-wild deployments and complementary qualitative approaches are warranted to explain the mechanisms underlying the observed covariate effects. The disconnect between data-type concerns and actual extraction capabilities, especially for novel signals (brain waves, physiological data), merits focused exploration.

Conclusion

This study provides a comprehensive empirical baseline quantifying user acceptance and concerns vis-à-vis LLM-powered conversational agents in XR settings. The findings demonstrate robust acceptance tempered by persistent, data-type-specific concerns, largely invariant to interaction modality, immersion context, or deployment architecture. These results clarify user expectations for practitioner and researcher communities, setting priorities for transparency, education, and responsible innovation as generative AI integration into XR ecosystems progresses.