AgentSHAP: Interpreting LLM Agent Tool Importance with Monte Carlo Shapley Value Estimation (2512.12597v1)

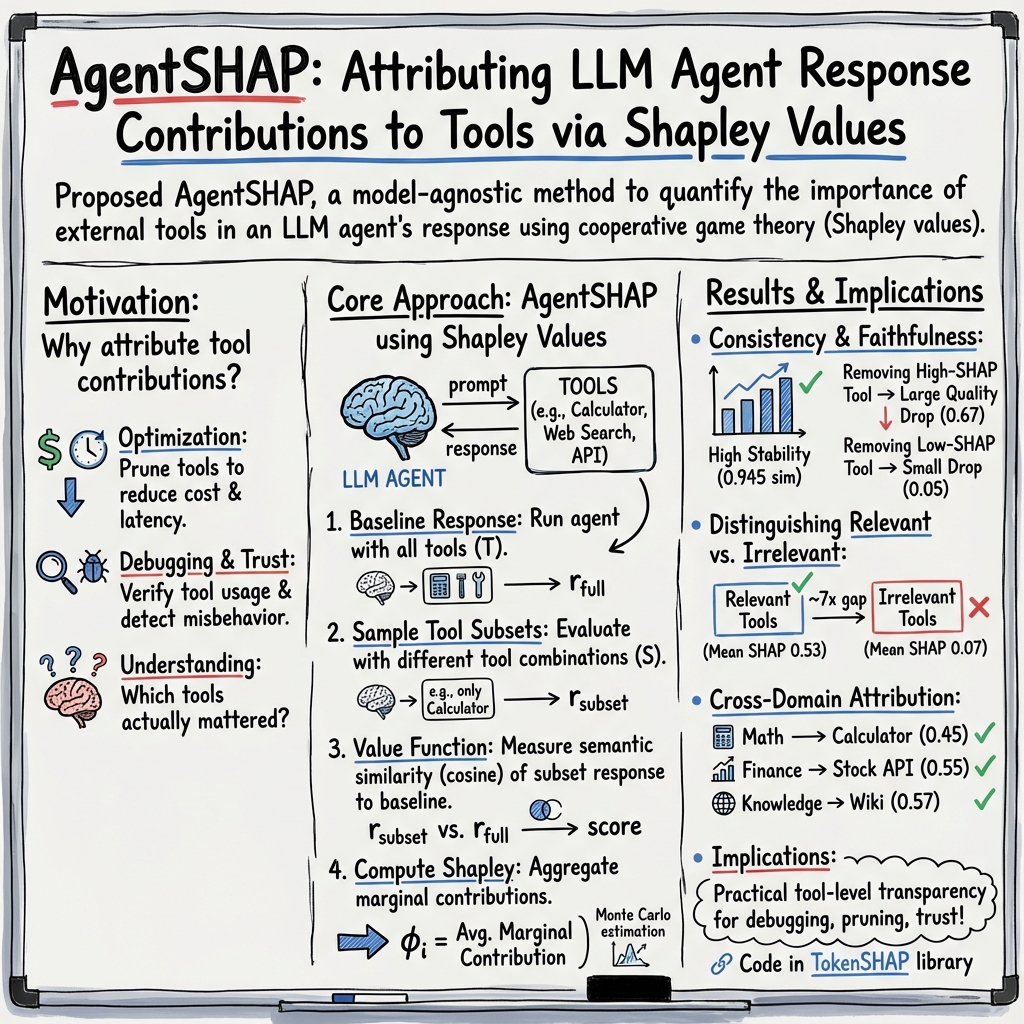

Abstract: LLM agents that use external tools can solve complex tasks, but understanding which tools actually contributed to a response remains a blind spot. No existing XAI methods address tool-level explanations. We introduce AgentSHAP, the first framework for explaining tool importance in LLM agents. AgentSHAP is model-agnostic: it treats the agent as a black box and works with any LLM (GPT, Claude, Llama, etc.) without needing access to internal weights or gradients. Using Monte Carlo Shapley values, AgentSHAP tests how an agent responds with different tool subsets and computes fair importance scores based on game theory. Our contributions are: (1) the first explainability method for agent tool attribution, grounded in Shapley values from game theory; (2) Monte Carlo sampling that reduces cost from O(2^n) to practical levels; and (3) comprehensive experiments on API-Bank showing that AgentSHAP produces consistent scores across runs, correctly identifies which tools matter, and distinguishes relevant from irrelevant tools. AgentSHAP joins TokenSHAP (for tokens) and PixelSHAP (for image regions) to complete a family of Shapley-based XAI tools for modern generative AI. Code: https://github.com/GenAISHAP/TokenSHAP.

Explain it Like I'm 14

What is this paper about?

This paper introduces AgentSHAP, a new way to explain which tools matter most when an LLM acts like an “agent” and can use external tools (like a calculator, a stock API, or Wikipedia). The goal is to figure out, for any given answer, which tools actually helped and how much, so developers can understand, trust, and optimize these agent systems.

What questions did the researchers ask?

They focused on simple, practical questions:

- When an LLM agent uses multiple tools, which ones truly contribute to the final answer?

- Can we measure each tool’s importance in a fair way that doesn’t depend on the internal details of the LLM?

- Can we do this efficiently, even when there are many tools?

- Do these importance scores match reality (i.e., do they predict what happens if you remove a tool)?

How did they study it?

The key idea (in everyday terms)

Imagine the agent and its tools as a team playing a game to produce a great answer. To find out which teammates (tools) matter most, you:

- Run the agent with all tools and save the “best effort” answer.

- Run the agent again with different subsets of tools (some included, some removed).

- See how the answer changes. If removing a tool hurts the answer a lot, that tool is important.
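A minimal Python sketch of the simplest version of this idea, removing one tool at a time (the paper's glossary calls this leave-one-out and says it is always included). `toy_value` is a hypothetical stand-in for "run the agent with only these tools and score its answer"; a real setup would call the LLM, and the scores here are made up for illustration.

```python
from typing import Callable, Dict, FrozenSet, Iterable

# A value function maps a set of enabled tools to a response-quality score.
# In a real setup each call would run the agent with only those tools (hypothetical here).
ValueFn = Callable[[FrozenSet[str]], float]


def leave_one_out(tools: Iterable[str], value: ValueFn) -> Dict[str, float]:
    """Quality drop caused by removing each tool from the full tool set."""
    full = frozenset(tools)
    baseline = value(full)  # the "best effort" all-tools answer
    return {tool: baseline - value(full - {tool}) for tool in sorted(full)}


# Toy stand-in for an agent on a math prompt: quality depends only on Calculator.
def toy_value(enabled: FrozenSet[str]) -> float:
    return 0.95 if "Calculator" in enabled else 0.30


drops = leave_one_out(["Calculator", "Wiki", "QueryStock"], toy_value)
print(drops)  # Calculator shows a large drop; Wiki and QueryStock show none
```

Leave-one-out looks at only one subset per tool; Shapley values average a tool's contribution over many subsets, which matters when tools overlap or depend on each other.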

What is a Shapley value?

Shapley values come from game theory. They are a way to fairly divide credit among players (here, tools). The idea:

- Look at all the ways tools could be added to the team.

- For each tool, average how much it improves the team’s performance when it joins different partial teams.

- This gives each tool a fair “importance score.”

Think of it like sharing credit after a group project: if one person consistently boosts the project’s quality when they join in, they get a higher score. If someone doesn’t help, they get a low score.
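The averaging over "partial teams" can be written directly as code. This is the textbook exact Shapley computation, shown on a hypothetical toy value function rather than a real agent; it enumerates every coalition, so its cost grows as 2^n and it is only practical for a handful of tools.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def exact_shapley(players: List[str],
                  value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition; cost grows as 2^n."""
    n = len(players)
    phi: Dict[str, float] = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):  # size of the coalition S that p joins
            # Fraction of orderings in which exactly the members of S come before p.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for members in combinations(others, k):
                s = frozenset(members)
                total += weight * (value(s | {p}) - value(s))  # marginal contribution
        phi[p] = total
    return phi


# Toy additive game: Calculator adds 0.6, Wiki adds 0.2, QueryStock adds nothing.
def toy_value(s: FrozenSet[str]) -> float:
    return 0.6 * ("Calculator" in s) + 0.2 * ("Wiki" in s)


scores = exact_shapley(["Calculator", "Wiki", "QueryStock"], toy_value)
print(scores)
```

In this additive toy game the scores come out (up to floating-point rounding) as 0.6, 0.2 and 0, and they sum to v(all tools) - v(no tools) = 0.8, which is the efficiency property; QueryStock, which never changes the value, gets zero (the null-player property).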

Making it efficient with Monte Carlo sampling

Calculating exact Shapley values means checking every possible combination of tools, which explodes in number as tools increase (with 8 tools there are already 2^8 = 256 combinations, and each extra tool doubles the count). To speed this up, the researchers use Monte Carlo sampling:

- Instead of checking all combinations, they randomly sample a manageable number of them.

- This gives approximate scores that stay accurate enough in practice while requiring far fewer agent runs, saving time and money on API calls.
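One common way to do this sampling is the permutation estimator of Castro et al., which the paper cites: shuffle the tools, add them one at a time, and credit each tool with the change in value when it joins. The sketch below implements that estimator on a hypothetical toy game. According to its glossary, AgentSHAP itself samples a fraction of coalitions (a "sampling ratio") and always adds leave-one-out subsets, so treat this as an illustration of the idea rather than the paper's exact sampler.

```python
import random
from functools import lru_cache
from typing import Callable, Dict, FrozenSet, List


def monte_carlo_shapley(players: List[str],
                        value: Callable[[FrozenSet[str]], float],
                        num_permutations: int = 200,
                        seed: int = 0) -> Dict[str, float]:
    """Estimate Shapley values by averaging marginal contributions over random orderings."""
    rng = random.Random(seed)
    cached_value = lru_cache(maxsize=None)(value)  # each distinct coalition is evaluated once
    totals = {p: 0.0 for p in players}
    order = list(players)
    for _ in range(num_permutations):
        rng.shuffle(order)
        coalition: FrozenSet[str] = frozenset()
        previous = cached_value(coalition)
        for p in order:  # add tools one at a time in this random order
            coalition = coalition | {p}
            current = cached_value(coalition)
            totals[p] += current - previous
            previous = current
    return {p: t / num_permutations for p, t in totals.items()}


# Toy synergy game: the answer is good only when Retriever AND Calculator are both present.
# Its exact Shapley values are 0.5, 0.5 and 0.
def synergy_value(s: FrozenSet[str]) -> float:
    return 1.0 if {"Retriever", "Calculator"} <= s else 0.0


estimates = monte_carlo_shapley(["Retriever", "Calculator", "Wiki"], synergy_value, 2000)
print(estimates)
```

Because the marginal contributions along any single ordering add up to v(all) - v(none), the estimates satisfy efficiency exactly. The `lru_cache` wrapper matters in practice because every distinct coalition costs one agent run; it also hides run-to-run randomness of the agent, which is a design choice worth making explicitly.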

Measuring response quality

To compare answers, they use a meaning-based similarity score:

- They turn each answer into a “meaning vector” (an embedding).

- They measure how close two answers are using cosine similarity (a number that can in principle range from -1 to 1, but for text embeddings like these usually falls between 0 and 1, where 1 means very similar in meaning).

- This way, they judge answers by their meaning, not just identical words.
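The value function described above can be sketched as follows, assuming two hypothetical callables: `run_agent(prompt, tools)` returns the agent's answer when only `tools` are enabled, and `embed(text)` returns an embedding vector. The paper uses text-embedding-3-large; here both callables are faked so the example runs offline.

```python
import math
from typing import Callable, FrozenSet, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def make_value_fn(prompt: str,
                  all_tools: FrozenSet[str],
                  run_agent: Callable[[str, FrozenSet[str]], str],
                  embed: Callable[[str], Sequence[float]]) -> Callable[[FrozenSet[str]], float]:
    """v(S) = similarity between the answer using only tools S and the all-tools answer.

    run_agent and embed are hypothetical callables supplied by the caller.
    """
    reference = embed(run_agent(prompt, all_tools))  # all-tools baseline, computed once

    def value(tools: FrozenSet[str]) -> float:
        return cosine_similarity(embed(run_agent(prompt, tools)), reference)

    return value


# Fake agent and embedder, for illustration only.
def fake_agent(prompt: str, tools: FrozenSet[str]) -> str:
    return "42" if "Calculator" in tools else "about forty"


def fake_embed(text: str) -> Sequence[float]:
    return [0.6, 0.8] if text == "42" else [0.8, 0.6]


v = make_value_fn("What is 6 * 7?", frozenset({"Calculator", "Wiki"}), fake_agent, fake_embed)
print(v(frozenset({"Calculator"})), v(frozenset({"Wiki"})))
```

With these fake embeddings, the Calculator-only answer matches the reference (value 1.0, up to rounding), while the Wiki-only answer scores 0.96. Computing the all-tools reference once and reusing it keeps every coalition compared against the same baseline.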

What did they find?

Here are the main results from tests on the API-Bank benchmark using real tools and GPT-4o-mini:

- Consistency across runs:
  - The importance scores were stable even with random sampling.
  - The average cosine similarity between SHAP score vectors from repeated runs was about 0.945 (very high), and top-1 accuracy (picking the most important tool) was 100% in that test.

- Faithfulness to reality:
  - Removing the highest-scoring tool caused a big quality drop (about 0.67 on their similarity scale).
  - Removing a low-scoring tool barely changed the answer (about 0.05 drop).
  - That’s roughly a 13× difference (0.67 / 0.05 ≈ 13.4), showing the scores reflect true importance.

- Distinguishing relevant vs. irrelevant tools:
  - When they added extra tools that shouldn’t matter, AgentSHAP gave them very low scores.
  - The “expected” tool for each domain (Calculator for math, QueryStock for finance, Wiki for knowledge) scored about 7× higher than irrelevant tools.
  - Top-1 accuracy was 86% in this harder test.

- Correct attribution across domains:
  - Math questions gave the highest score to Calculator.
  - Finance questions gave the highest score to QueryStock.
  - Knowledge questions gave the highest score to Wiki.
  - Overall top-1 accuracy was 86%.
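The metrics behind these numbers can be expressed in a few lines. The metric names follow the paper's glossary (Top-1 Accuracy, Cosine Similarity of SHAP vectors across runs, SHAP Gap), but the exact formulas here are assumptions; for example, SHAP Gap is taken as a difference of means. The data below are illustrative, not the paper's results.

```python
import math
from typing import Dict, List, Set


def top1_accuracy(runs: List[Dict[str, float]], expected: List[str]) -> float:
    """Fraction of prompts whose highest-scoring tool is the expected tool."""
    hits = sum(1 for scores, exp in zip(runs, expected)
               if max(scores, key=scores.get) == exp)
    return hits / len(expected)


def stability(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two SHAP vectors for the same prompt and tool set."""
    tools = sorted(a)  # fix a common tool order for both vectors
    x, y = [a[t] for t in tools], [b[t] for t in tools]
    dot = sum(p * q for p, q in zip(x, y))
    return dot / (math.sqrt(sum(p * p for p in x)) * math.sqrt(sum(q * q for q in y)))


def shap_gap(scores: Dict[str, float], relevant: Set[str]) -> float:
    """Mean score of relevant tools minus mean score of irrelevant tools."""
    rel = [v for t, v in scores.items() if t in relevant]
    irr = [v for t, v in scores.items() if t not in relevant]
    return sum(rel) / len(rel) - sum(irr) / len(irr)


runs = [
    {"Calculator": 0.6, "Wiki": 0.1, "QueryStock": 0.0},
    {"Calculator": 0.1, "Wiki": 0.2, "QueryStock": 0.5},
]
expected = ["Calculator", "Wiki"]
print(top1_accuracy(runs, expected), stability(runs[0], runs[0]), shap_gap(runs[0], {"Calculator"}))
```

In this toy data the second prompt is a miss (QueryStock outranks the expected Wiki), so top-1 accuracy is 0.5.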

In short, AgentSHAP reliably identifies which tools matter, matches what happens when you remove tools, and stays stable across runs.

Why does this matter?

This helps people who build or use LLM agents to:

- Debug problems: If a math question ranks “Calculator” low, something’s off, and you can investigate.

- Reduce cost and delay: Unused or unhelpful tools can be removed, saving money and speeding up responses.

- Increase trust: Clear tool importance explanations help users understand and trust the agent’s behavior.

- A/B test and improve: Try new tools and see if they truly help.

AgentSHAP is “model-agnostic,” meaning it works with any LLM (like GPT, Claude, or Llama) without needing access to internal weights or gradients. It complements related tools (TokenSHAP for text tokens, PixelSHAP for image regions) to build a broader explainability toolkit for modern AI systems.

Limitations and what’s next

The authors point out a few limits and future directions:

- Tool interactions: Each tool gets one score that averages its contribution over many tool combinations, so the scores don't show when two tools work better together than either alone. Capturing that synergy explicitly requires extended methods.

- Multi-turn conversations: AgentSHAP analyzes a single prompt and answer. In longer chats, importance can change over time.

- Tool call order: If the agent calls tools in a specific sequence, that order isn’t fully explained yet.

- Cost: Running many sampled combinations means many API calls. They show it’s practical for moderate tool counts, but real-time explanations might still be hard.
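One established way to make synergy explicit is the pairwise Shapley interaction index (Grabisch and Roubens), which averages the second-order difference v(S ∪ {i, j}) - v(S ∪ {i}) - v(S ∪ {j}) + v(S) over coalitions S that contain neither tool. The sketch below illustrates that extension on hypothetical toy games; the paper lists interactions as future work and does not implement this.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List


def shapley_interaction(players: List[str], i: str, j: str,
                        value: Callable[[FrozenSet[str]], float]) -> float:
    """Pairwise Shapley interaction index: > 0 means i and j work better together."""
    n = len(players)
    rest = [p for p in players if p not in (i, j)]
    total = 0.0
    for k in range(n - 1):  # |S| ranges over 0..n-2
        weight = factorial(k) * factorial(n - k - 2) / factorial(n - 1)
        for members in combinations(rest, k):
            s = frozenset(members)
            # Second-order difference: joint effect minus the two separate effects.
            delta = value(s | {i, j}) - value(s | {i}) - value(s | {j}) + value(s)
            total += weight * delta
    return total


# Pure synergy: useful only when both tools are present.
def synergy_value(s: FrozenSet[str]) -> float:
    return 1.0 if {"Retriever", "Calculator"} <= s else 0.0


# No synergy: each tool adds a fixed amount on its own.
def additive_value(s: FrozenSet[str]) -> float:
    return 0.6 * ("Calculator" in s) + 0.2 * ("Retriever" in s)


tools = ["Retriever", "Calculator", "Wiki"]
print(shapley_interaction(tools, "Retriever", "Calculator", synergy_value))
print(shapley_interaction(tools, "Retriever", "Calculator", additive_value))
```

The pure-synergy game gives an interaction of 1.0 and the additive game gives 0 (up to rounding); positive values flag tools that work better together, negative values flag redundancy. The index needs its own coalition evaluations, which adds to the cost concern above.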

Future work could automatically recommend tool additions/removals, integrate with agent training to make agents “tool-aware,” and even extend to multi-agent setups.

Bottom line

AgentSHAP is the first framework to fairly and efficiently explain which tools matter in LLM agents. It uses game-theory-based Shapley values with smart sampling to keep costs reasonable, and it works well across different tasks. This brings much-needed transparency to tool-using AI agents and helps developers build faster, cheaper, and more trustworthy systems.

Knowledge Gaps

Below is a consolidated list of concrete knowledge gaps, limitations, and open questions the paper leaves unresolved. These points are intended to guide follow-up research and experimentation.

- Value function validity: The use of cosine similarity between embeddings of subset responses and the “all-tools” baseline is not validated against task-ground-truth metrics (e.g., exact match, numeric accuracy, factuality). How well does the similarity score correlate with real task success across domains?

- Baseline dependence: Because importance is defined relative to the full-response baseline, AgentSHAP cannot detect “missed opportunities” (cases where the agent should have used a tool but did not). What alternative baselines (ground truth, oracle responses, model-only responses) yield more actionable attributions?

- Sensitivity to embedding model: SHAP scores may depend strongly on the embedding model (text-embedding-3-large). How sensitive are results to different embedding architectures (bi-encoder vs cross-encoder), multilingual embeddings, and domain-specific encoders?

- Non-text and structured outputs: Many tools return JSON, numbers, or images. The paper does not define similarity metrics for non-text modalities or structured data. What modality-specific value functions are appropriate, and how do they affect attribution?

- Agent stochasticity and settings: Robustness to LLM randomness (temperature, sampling parameters), prompt templates, and agent scaffolding (ReAct vs other frameworks) is not analyzed. How stable are attributions across agent configurations and vendors?

- Interaction effects: While acknowledged as a limitation, there is no implementation or evaluation of pairwise or higher-order Shapley interaction indices. How can we estimate and interpret tool synergies at scale without prohibitive cost?

- Tool call ordering and causal chains: The framework does not attribute importance along the sequence of tool calls or consider causal dependencies. How can path-specific or sequence-aware attribution (e.g., causal SHAP, off-policy replay) capture ordering effects?

- Scalability and sample complexity: There is no theoretical or empirical analysis of Monte Carlo sample complexity, variance, or convergence. What error bounds, confidence intervals, and adaptive sampling/stopping rules are needed to guarantee reliable scores for large toolsets?

- Efficiency strategies: Beyond random subset sampling, the paper does not explore cost-reduction techniques (e.g., importance sampling, caching/memoization, surrogate models, pruning tools before SHAP). Which strategies best reduce API calls while preserving fidelity?

- Handling negative contributions: The interpretation and actionability of negative SHAP values (tools that harm response quality) are not discussed. How can we detect and mitigate harmful or adversarial tools using negative attributions?

- Benchmark breadth: Evaluation is limited to API-Bank Level-1 with 8 tools and a small number of prompts. How do results generalize to ToolBench, larger tool libraries (tens to hundreds of tools), complex multi-step tasks, and specialized domains (medical, legal)?

- Statistical rigor: Reported metrics (top-1 accuracy, cosine similarity of SHAP vectors, quality drop) lack statistical significance testing and broader sampling. What larger-scale, statistically rigorous evaluations are needed?

- Agent awareness of tool availability: Removing tools changes the agent’s planning and behavior, potentially introducing distribution shift. What standardized protocols (e.g., masked tool lists, consistent instructions) ensure fair coalition comparisons?

- Tool parameterization granularity: Tools are treated as binary on/off units. How can attribution be extended to specific endpoints, parameters, versions, and configuration options within a tool?

- Reliability under tool failures: The framework does not address API errors, timeouts, and rate limits. How should value functions and sampling handle failures, retries, and partial responses?

- Enforcing Shapley axioms in practice: Finite-sample estimates can violate efficiency/symmetry. What normalization or projection methods ensure estimated SHAP values satisfy core axioms?

- Correlation with usage logs: The relationship between SHAP scores and actual tool usage (frequency, duration, latency) is not quantified. How can SHAP be calibrated against logs to guide pruning or system optimization?

- Misclassification analysis: Cross-domain experiments have 86% top-1 accuracy, with no analysis of failure modes. What causes misattributions, and how can diagnostic tooling surface and correct them?

- Internal knowledge vs tool contribution: The framework attributes only over tools, not between tools and the model’s intrinsic knowledge. How can we quantify the relative contribution of “no tools” vs each tool to the final response?

- Comparison to baselines: There is no empirical comparison with simpler heuristics (leave-one-out only, tool-call counts, attention over tool descriptions). When does Shapley outperform these baselines, and by how much?

- Multi-turn conversations: Tool importance is measured per single prompt-response. How can we track temporal attribution across turns, memory updates, and changing goals in dialogue agents?

- Multi-agent attribution: Extension to settings where multiple agents share tools is proposed but not developed. How should credit be assigned across agents and shared resources?

- Tool redundancy and overlap: Overlapping tools can dilute credit or create ambiguous attributions. What grouping, clustering, or redundancy-aware attribution methods handle highly similar tools?

- Real-time explanations: The paper notes real-time use is challenging but offers no method for streaming or incremental SHAP. How can we deliver budgeted, low-latency attributions during agent execution?

- Privacy and cost: Large numbers of API calls may be costly or privacy-sensitive. What privacy-preserving or cost-aware estimation techniques (e.g., differential privacy, federated estimation) are viable?

- Cross-language and locale robustness: The approach is evaluated in English; multilingual performance and locale-specific tools are unexplored. How do attributions behave across languages and cultural contexts?

- Calibration and uncertainty reporting: The method does not report uncertainty (confidence intervals) for SHAP scores. What bootstrapping or Bayesian estimators can provide actionable uncertainty estimates?

- Integration with training: Using SHAP scores to fine-tune tool-use policies, prune/add tools, or perform tool-aware curriculum learning is suggested but not demonstrated. What training pipelines most effectively leverage AgentSHAP?

- Reproducibility specifics: The repository link points to TokenSHAP, and AgentSHAP implementation details (prompt templates, agent scaffolding, temperature, seeds, tool wrappers) are not fully specified. What documentation and artifacts are needed to ensure reproducibility?
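Two of the gaps above (sample complexity and uncertainty reporting) can be partly addressed with a standard technique: keep each tool's marginal contribution from every sampled ordering and report a confidence interval around the mean. The sketch below uses a normal approximation (mean ± 1.96 standard errors) on a hypothetical toy game; it is one possible approach, not something the paper reports, and bootstrapping would be a drop-in alternative.

```python
import math
import random
import statistics
from functools import lru_cache
from typing import Callable, Dict, FrozenSet, List, Tuple


def shapley_with_ci(players: List[str],
                    value: Callable[[FrozenSet[str]], float],
                    num_permutations: int = 500,
                    seed: int = 0,
                    z: float = 1.96) -> Dict[str, Tuple[float, float, float]]:
    """Permutation-sampling Shapley estimates with normal-approximation intervals.

    Returns {tool: (mean, lower, upper)}.
    """
    if num_permutations < 2:
        raise ValueError("need at least two permutations to estimate variance")
    rng = random.Random(seed)
    cached_value = lru_cache(maxsize=None)(value)
    samples: Dict[str, List[float]] = {p: [] for p in players}
    order = list(players)
    for _ in range(num_permutations):
        rng.shuffle(order)
        coalition: FrozenSet[str] = frozenset()
        previous = cached_value(coalition)
        for p in order:
            coalition = coalition | {p}
            current = cached_value(coalition)
            samples[p].append(current - previous)  # one marginal contribution per ordering
            previous = current
    result = {}
    for p, xs in samples.items():
        mean = statistics.fmean(xs)
        stderr = statistics.stdev(xs) / math.sqrt(len(xs))
        result[p] = (mean, mean - z * stderr, mean + z * stderr)
    return result


def synergy_value(s: FrozenSet[str]) -> float:
    return 1.0 if {"Retriever", "Calculator"} <= s else 0.0


intervals = shapley_with_ci(["Retriever", "Calculator", "Wiki"], synergy_value)
print(intervals)
```

A wide interval signals that more permutations are needed before ranking tools; a simple stopping rule would be to keep sampling until the intervals of the top two tools no longer overlap. Because the cache makes repeated coalitions deterministic, this interval reflects sampling error over orderings, not the agent's own randomness.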

Glossary

- AgentSHAP: A model-agnostic framework that explains the importance of tools used by LLM agents using Shapley value estimation. "We introduce AgentSHAP, the first framework that explains tool importance in LLM agents."

- agentic systems: Systems in which autonomous agents act and use tools; a distinct setting for explainability. "AgentSHAP completes this family by explaining tool importance a new dimension of explainability unique to agentic systems."

- API-Bank: A benchmark with real executable APIs for evaluating tool-using LLM agents. "We evaluate AgentSHAP on the API-Bank benchmark \cite{li2023apibank}, which provides real executable tools and ground-truth annotations."

- attention visualization: A method that visualizes attention weights to interpret what tokens an LLM attends to. "Attention visualization \cite{vig2019analyzing} shows what tokens the model attends to, but attention doesn't always reflect importance."

- black box: Treating the agent only through input-output behavior without access to internals like weights or gradients. "AgentSHAP is model-agnostic: it treats the agent as a black box, requiring only input-output access."

- coalition: Any subset of tools considered as a group (player set) in the Shapley framework. "A coalition is any subset of tools […]"

- cooperative game theory: A branch of game theory used to fairly attribute contributions among players via Shapley values. "Shapley values from cooperative game theory \cite{shapley1953value} provide a principled, axiomatic approach to fair attribution."

- cosine similarity: A metric on embeddings used to measure semantic similarity between responses. "We use cosine similarity on text embeddings (text-embedding-3-large) to capture semantic meaning rather than surface-level word overlap."

- cross-domain attribution: Evaluating whether the method correctly assigns tool importance across different query domains. "Cross-domain attribution. The heatmap shows mean SHAP values per domain-tool pair."

- efficiency (axiom): A Shapley axiom stating that the attributions of all players sum to the value of the full coalition minus the value of the empty coalition. "Shapley values satisfy key axioms (efficiency, symmetry, null player, linearity) that make them the unique fair attribution method \cite{lundberg2017unified}."

- faithfulness: The property that attribution scores reflect actual importance, validated by quality changes when removing tools. "Faithfulness test. Removing high-SHAP tools (red) causes much larger quality drops than removing low-SHAP tools (blue). This confirms SHAP values reflect actual tool importance."

- game-theoretic properties: Formal fairness criteria (e.g., efficiency, symmetry, null player) satisfied by Shapley-based attributions. "The scores satisfy key game-theoretic properties: efficiency, symmetry, and null player."

- Integrated Gradients: A gradient-based attribution method requiring model access to assign input importance. "Integrated Gradients \cite{sundararajan2017axiomatic} and LIME \cite{ribeiro2016lime} provide input attribution but require model access."

- Irrelevant Tool Injection: An experiment that tests robustness by adding unrelated tools and checking their low importance scores. "Irrelevant tool injection. AgentSHAP assigns low scores to injected irrelevant tools (gray). The expected tool (red) receives the highest score."

- leave-one-out: An estimation step that measures direct effect by removing each tool individually. "Leave-one-out: We always test removing each tool individually."

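- LIME: A local surrogate-model approach that explains an individual prediction by perturbing the input and fitting a simple interpretable model to the resulting outputs; it needs query access to the model's predictions rather than its internal weights. "Integrated Gradients \cite{sundararajan2017axiomatic} and LIME \cite{ribeiro2016lime} provide input attribution but require model access."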

- linearity (axiom): A Shapley axiom ensuring attributions are consistent under linear combinations of value functions. "Shapley values satisfy key axioms (efficiency, symmetry, null player, linearity) that make them the unique fair attribution method \cite{lundberg2017unified}."

- LLM agent: An LLM configured to act as an agent that can call external tools during problem solving. "LLM agents that use external tools can solve complex tasks"

- marginal contribution: The added value a tool provides when included in a coalition, averaged across permutations in Shapley. "This formula averages the marginal contribution of tool […] across all possible orderings in which tools could be added."

- model-agnostic: Methods that do not require internal model details, working purely with inputs and outputs. "A key advantage is that AgentSHAP is model-agnostic: it treats the agent as a black box, requiring only input-output access."

- Monte Carlo sampling: A stochastic approximation technique to estimate Shapley values by sampling subsets or permutations. "We use Monte Carlo sampling to reduce this to practical levels while maintaining accuracy."

- Monte Carlo Shapley: An algorithmic approach that uses Monte Carlo techniques to estimate Shapley values efficiently. "Monte Carlo Shapley for Tools"

- null player (axiom): A Shapley axiom stating a player that contributes nothing receives zero attribution. "Shapley values satisfy key axioms (efficiency, symmetry, null player, linearity) that make them the unique fair attribution method \cite{lundberg2017unified}."

- PixelSHAP: A Shapley-based method for attributing importance to image regions in vision-LLMs. "PixelSHAP \cite{goldshmidt2025pixelshap} extends this to vision-LLMs."

- random permutations: The basis of Monte Carlo estimation for Shapley values, used to average contributions over orderings. "Monte Carlo sampling \cite{castro2009polynomial} addresses this by estimating values through random permutations, trading exactness for efficiency."

- ReAct: A method that interleaves reasoning and acting to guide tool use in agents. "ReAct \cite{yao2022react} introduced interleaved reasoning and acting."

- sampling ratio: The parameter controlling how many coalitions are sampled for Monte Carlo estimation. "With sampling ratio […], we evaluate approximately […] coalitions instead of […]."

- semantic similarity: A measure of meaning-level closeness between texts, often computed via embeddings. "The value function uses semantic similarity (cosine similarity on embeddings) to compare responses."

- SHAP: A unified Shapley-based framework for feature importance in machine learning. "SHAP \cite{lundberg2017unified} popularized this for machine learning feature importance, with extensions to tree models \cite{lundberg2020local}."

- SHAP Gap: The difference in SHAP scores between relevant and irrelevant tools used as an evaluation metric. "We report Top-1 Accuracy (does the highest-SHAP tool match the expected tool), Cosine Similarity (how stable are SHAP vectors across runs), Quality Drop (response quality decrease when removing a tool), and SHAP Gap (difference between relevant and irrelevant tool scores)."

- Shapley values: A game-theoretic attribution method assigning fair contributions to players (tools) based on all coalitions. "The Shapley value for tool […] is:"

- symmetry (axiom): A Shapley axiom indicating that equally contributing players receive equal attributions. "Shapley values satisfy key axioms (efficiency, symmetry, null player, linearity) that make them the unique fair attribution method \cite{lundberg2017unified}."

- text embeddings: Vector representations of text used to compute semantic similarity. "We use cosine similarity on text embeddings (text-embedding-3-large) to capture semantic meaning rather than surface-level word overlap."

- text-embedding-3-large: A specific embedding model used to compute semantic similarity. "We use cosine similarity on text embeddings (text-embedding-3-large) to capture semantic meaning rather than surface-level word overlap."

- TokenSHAP: A Shapley-based, model-agnostic method to explain token-level importance in LLM outputs. "TokenSHAP \cite{goldshmidt2024tokenshap} uses Monte Carlo Shapley values to explain which input tokens matter for LLM outputs in a model-agnostic way."

- Top-1 Accuracy: An evaluation metric indicating whether the highest-scoring item matches the expected one. "We report Top-1 Accuracy (does the highest-SHAP tool match the expected tool)"

- value function: The function that assigns a score to a coalition by comparing its response to the full-tool response. "The value function measures how similar the response with only tools […] is to the full response with all tools […]."
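Several glossary quotes above refer to the Shapley formula and the value function, but their symbols were lost in extraction. For reference, the standard Shapley value and a value function matching the description can be written as below. The notation is ours, not necessarily the paper's: N is the set of n tools, r_S is the agent's response when only the tools in S are enabled, and e(·) is the text embedding.

```latex
\begin{aligned}
\phi_i(v) &= \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n-|S|-1)!}{n!}\,\bigl[v(S \cup \{i\}) - v(S)\bigr],\\
v(S) &= \cos\bigl(e(r_S),\, e(r_N)\bigr),\\
\sum_{i \in N} \phi_i(v) &= v(N) - v(\varnothing) = 1 - v(\varnothing).
\end{aligned}
```

The last line is the efficiency axiom; it simplifies to 1 - v(∅) assuming the saved all-tools response serves as its own reference, so that v(N) = 1.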

Practical Applications

Immediate Applications

Below are concrete ways to use AgentSHAP now, based on the paper’s model-agnostic method and the released implementation in the TokenSHAP library.

- Sector: Software/SaaS platforms
  - Application: Tool pruning and cost/latency optimization
  - Potential products/workflows: “Tool Pruner” job that runs AgentSHAP on a representative prompt suite to produce keep/drop/gate lists; monthly cost reports showing contribution-per-dollar by tool.
  - Assumptions/dependencies: Ability to run the agent with arbitrary tool subsets; sufficient evaluation prompts to avoid dropping rare-but-critical tools; batching/caching to control API costs.

- Sector: Software/DevOps for AI (AIOps, MLOps)
  - Application: Agent observability dashboards
  - Potential products/workflows: Per-prompt SHAP traces, time-series drift detection when a tool’s importance shifts (e.g., after an API version change), incident triage linking quality regressions to specific tools.
  - Assumptions/dependencies: Centralized logging of prompts/responses; embedding service for similarity; stable agent configuration for comparability across runs.

- Sector: Product/Platform (plugin ecosystems, internal tool catalogs)
  - Application: A/B testing and canary rollout of new tools
  - Potential products/workflows: Experiment harness that computes SHAP deltas vs. baseline to decide rollout; release criteria like “new tool must contribute ≥X SHAP on target intents.”
  - Assumptions/dependencies: Matched prompt sets; traffic splitting; acceptance thresholds tied to business KPIs.

- Sector: Enterprise IT, Procurement, Data Vendor Management
  - Application: API/data spend optimization and vendor ROI
  - Potential products/workflows: Vendor scorecards ranking each data/API by average SHAP; renegotiation or deprecation of low-contribution subscriptions.
  - Assumptions/dependencies: Aggregation across diverse tasks; safeguards for low-frequency/high-criticality use cases.

- Sector: Compliance and Risk (Finance, Healthcare, Government)
  - Application: Audit artifacts and policy checks for tool usage
  - Potential products/workflows: Decision records including top-k tool SHAPs; guardrails that require high SHAP for authoritative tools (e.g., drug database) before returning clinical guidance.
  - Assumptions/dependencies: Policy-to-tool mappings (e.g., what counts as “authoritative”); regulator acceptance of similarity-based attribution; storage and governance of explanation logs.

- Sector: Safety/Security
  - Application: Prompt-injection and data exfiltration detection
  - Potential products/workflows: Alerts when untrusted web tools dominate SHAP in contexts that should be internal-only; quarantine or human review when expected tools do not rank highly (e.g., calculator on math).
  - Assumptions/dependencies: Trust tiers for tools; baselines per task type; potential false positives due to interaction effects not yet modeled.

- Sector: Customer Support, E-commerce
  - Application: Reliability checks for CRM/order DB usage
  - Potential products/workflows: Policies that block responses if CRM or order DB doesn’t register as highly important for account/billing queries; privacy guard that downranks web search in PII contexts.
  - Assumptions/dependencies: Intent detection; accurate SHAP under domain shifts; side-effect-free replay of tool calls for evaluation.

- Sector: Education (EdTech)
  - Application: Trust-building “Why this answer?” with tool attribution
  - Potential products/workflows: UI widget showing top tools (e.g., calculator, citation database) with SHAP scores; QA pipeline that flags math answers with low calculator SHAP.
  - Assumptions/dependencies: Student-appropriate explanations; embedding similarity aligns with pedagogical quality.

- Sector: Search/Content (RAG, web-browsing chat)
  - Application: Source/tool transparency and hallucination reduction
  - Potential products/workflows: Surfacing which retrievers/browsers contributed most; gating answers that lack high SHAP from curated sources.
  - Assumptions/dependencies: Baseline “full-tool” responses are correct enough for similarity-based attribution; retriever variability.

- Sector: Developer Experience (DX)
  - Application: Debugging and tool design iteration
  - Potential products/workflows: “SHAP-while-debugging” CLI to detect mismatched tool descriptions or routing; regression tests that fail when expected tools lose importance.
  - Assumptions/dependencies: Deterministic agent modes or sufficient sampling to overcome variance; reproducible environments.

- Sector: Research/Academia
  - Application: Benchmarking tool-using agents
  - Potential products/workflows: New leaderboard metric: top-1 tool attribution accuracy; papers reporting SHAP distributions across tasks to compare agent-tool integrations.
  - Assumptions/dependencies: Public benchmarks with runnable tools (e.g., API-Bank); community consensus on value functions.

- Sector: Consumer Assistants (Daily life)
  - Application: Plugin permissioning and battery/data use control
  - Potential products/workflows: Settings that auto-disable low-SHAP plugins; per-task “minimal toolset” mode to reduce latency and mobile data.
  - Assumptions/dependencies: Background batching of SHAP evaluations; privacy-preserving logging.

- Sector: Data/ML
  - Application: Training/eval data curation for tool use
  - Potential products/workflows: Sampling prompts with high/low marginal contribution to create focused datasets for tool-use fine-tuning and regression tests.
  - Assumptions/dependencies: Access to training pipelines; careful handling of bias introduced by similarity-based labels.
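As an illustration of the “Tool Pruner” idea above, here is a minimal sketch that turns per-prompt SHAP scores into keep/drop lists. The function name, both thresholds, the tool names `Translate` and `Weather`, and all scores are hypothetical; the rule of keeping any tool that was critical on at least one prompt reflects the stated need to avoid dropping rare-but-critical tools.

```python
from typing import Dict, List, Tuple


def prune_tools(shap_runs: List[Dict[str, float]],
                keep_threshold: float = 0.1,
                critical_threshold: float = 0.3) -> Tuple[List[str], List[str]]:
    """Split tools into keep/drop lists from SHAP scores over a prompt suite.

    A tool is kept if its mean score is at least keep_threshold, or if it was
    critical (score >= critical_threshold) on at least one prompt, so rarely
    used but important tools are not dropped.
    """
    tools = sorted({t for run in shap_runs for t in run})
    keep: List[str] = []
    drop: List[str] = []
    for tool in tools:
        scores = [run.get(tool, 0.0) for run in shap_runs]  # missing tool counts as 0
        mean = sum(scores) / len(scores)
        if mean >= keep_threshold or max(scores) >= critical_threshold:
            keep.append(tool)
        else:
            drop.append(tool)
    return keep, drop


# Illustrative scores for a four-prompt suite (not real measurements).
suite = [
    {"Calculator": 0.62, "Wiki": 0.03, "Translate": 0.00, "Weather": 0.01},
    {"Calculator": 0.02, "Wiki": 0.55, "Translate": 0.00, "Weather": 0.00},
    {"Calculator": 0.58, "Wiki": 0.04, "Translate": 0.01, "Weather": 0.02},
    {"Calculator": 0.01, "Wiki": 0.05, "Translate": 0.32, "Weather": 0.01},
]
keep, drop = prune_tools(suite)
print(keep, drop)
```

Here `Translate` has a low average score but is essential on one prompt, so it is kept; `Weather` never matters and is dropped. Thresholds like these would need tuning against real prompt suites and business requirements.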

Long-Term Applications

The following leverage AgentSHAP’s core idea but require further research, scaling, or development (e.g., interaction effects, multi-turn, real-time constraints).

- Sector: Software/Platforms
  - Application: Real-time, in-loop tool selection with SHAP-guided bandits
  - Potential products/workflows: Adaptive routers that estimate marginal value online to pick a minimal tool subset per turn.
  - Assumptions/dependencies: Low-latency estimators, caching/proxies, or surrogate models; tighter variance bounds for streaming use.

- Sector: XAI/Methodology
  - Application: Higher-order interaction attribution (synergies/conflicts)
  - Potential products/workflows: Pairwise/group SHAP for tool chains (e.g., Retriever→Calculator); design of composite tools informed by interaction heatmaps.
  - Assumptions/dependencies: Efficient estimators for combinatorial interactions; sampling strategies to control explosion in evaluations.

- Sector: Conversational AI
  - Application: Multi-turn attribution timelines and policies
  - Potential products/workflows: Turn-by-turn SHAP dashboards; guardrails that reason over cumulative tool importance across a dialogue.
  - Assumptions/dependencies: Conversation tracing; value functions that reflect evolving goals and context.

- Sector: Causality/Tracing
  - Application: Ordering-aware, causal credit assignment for tool call chains
  - Potential products/workflows: Counterfactual replays to measure order effects; causal graphs over tool steps for safer automation.
  - Assumptions/dependencies: Structured traces, safe re-execution/simulation to avoid side effects; instrumentation standards (e.g., OpenTelemetry for agents).

- Sector: Autonomous Ops
  - Application: Self-managing tool portfolios
  - Potential products/workflows: Agents that auto-add, retire, or reweight tools based on long-horizon SHAP trends and SLOs.
  - Assumptions/dependencies: Human-in-the-loop approvals; rollback plans; robustness to distribution shifts.

- Sector: Regulation/Policy
  - Application: Standardized “tool transparency” reporting and certification
  - Potential products/workflows: Compliance profiles that include tool-attribution artifacts aligned with AI Act, financial advisory, or medical device guidelines.
  - Assumptions/dependencies: Policymaker consensus on attribution metrics; mitigation of gaming; sector-specific guidance.

- Sector: Marketplaces/Economics
  - Application: Contribution-based billing/revenue sharing for tools
  - Potential products/workflows: Pricing models that pay tool vendors by marginal contribution; “contribution per dollar” leaderboards for buyers.
  - Assumptions/dependencies: Robustness against manipulation; long-horizon averaging; legal/antitrust considerations.

- Sector: Training/Optimization
  - Application: SHAP-regularized fine-tuning and RL
  - Potential products/workflows: Rewards that encourage reliance on trusted tools; penalties for overusing irrelevant tools.
  - Assumptions/dependencies: Access to weights/training loops; careful avoidance of reward hacking toward the similarity metric.

- Sector: Multi-agent Systems
  - Application: Cross-agent and shared-tool attribution
  - Potential products/workflows: Budget allocation and arbitration across agents based on contribution; detection of free-riding agents.
  - Assumptions/dependencies: Unified tracing across agents; joint evaluation protocols.

- Sector: Robotics/Cyber-physical
  - Application: Safety cases for modular tool stacks (planning, perception)
  - Potential products/workflows: Simulator-based SHAP to justify module criticality; evidence for functional safety audits.
  - Assumptions/dependencies: High-fidelity simulators; safe replay to avoid real-world side effects; mapping from module outputs to “tool” abstraction.

- Sector: Privacy/Security
  - Application: Privacy-preserving attribution
  - Potential products/workflows: On-prem embedding similarity and differential privacy to generate SHAP without leaking content; federated analysis across units.
  - Assumptions/dependencies: Local embedding models; DP techniques; infrastructure investment.

- Sector: Consumer Platforms
  - Application: Trust badges and disclosure standards for assistants
  - Potential products/workflows: Platform policies requiring tool-attribution UX for sensitive tasks (finance, health); third-party audits.
  - Assumptions/dependencies: Ecosystem buy-in; usability research to keep explanations understandable.

Notes on feasibility across applications:

- All applications inherit core assumptions: the agent must be runnable with arbitrary tool subsets; attribution is computed against the full-tool baseline using embedding similarity, which can misalign with correctness if the baseline is wrong; computational cost scales with the number of tools and sampling ratio (mitigated by batching/caching).

- Limitations called out in the paper (no ordering awareness, limited interaction modeling, single-turn focus) constrain high-stakes or real-time uses until extended methods are available.
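To get a feel for how cost scales with the number of tools and the sampling ratio, the sketch below counts agent runs under an assumed accounting: one all-tools baseline, one leave-one-out run per tool, and a sampled fraction of the 2^n coalitions, never exceeding exhaustive enumeration. This is a rough planning estimate, not the paper's formula; `max_samples` is a hypothetical cap, and each run typically also needs an embedding call.

```python
import math
from typing import Optional


def estimated_agent_runs(n_tools: int, sampling_ratio: float,
                         max_samples: Optional[int] = None) -> int:
    """Rough count of agent runs for one explanation (an assumption, not the paper's formula).

    1 all-tools baseline + n leave-one-out runs + a sampled fraction of the 2^n
    coalitions, capped at the 2^n runs that exhaustive enumeration would need.
    """
    exhaustive = 2 ** n_tools
    sampled = math.ceil(sampling_ratio * exhaustive)
    if max_samples is not None:
        sampled = min(sampled, max_samples)  # hypothetical budget cap
    return min(exhaustive, 1 + n_tools + sampled)


print(estimated_agent_runs(8, 0.5))
print(estimated_agent_runs(20, 0.5, max_samples=1000))
```

For 8 tools and a ratio of 0.5 this gives 1 + 8 + 128 = 137 runs instead of 256. With a fixed ratio the sampled count still doubles with every added tool, so under this accounting a budget cap is what keeps large tool sets affordable.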
