- The paper presents RoboNeuron, a framework that uses the Model Context Protocol (MCP) to decouple LLM/VLA reasoning from real-time ROS control.

- It achieves dynamic sensor abstraction and kinematics-aware manipulation through URDF parsing and auto-generated ROS message translation.

- Three case studies demonstrate unified control across heterogeneous platforms, covering both simulation and real-world hardware.

A Modular Runtime and Integration Architecture for Embodied AI: RoboNeuron

Introduction

"RoboNeuron: A Modular Framework Linking Foundation Models and ROS for Embodied AI" (arXiv:2512.10394) introduces a systematic approach to linking large language model (LLM) and vision-language-action (VLA) reasoning with robotic execution via ROS middleware. The primary contribution is the use of the Model Context Protocol (MCP) as a semantic interface that bridges the cognitive capabilities of LLMs/VLAs and the heterogeneity of robot hardware managed through ROS. The framework is motivated by three persistent challenges in embodied AI: lack of cross-scenario adaptability, rigid inter-module coupling, and fragmented or ad hoc acceleration of VLA inference.

Architectural Overview

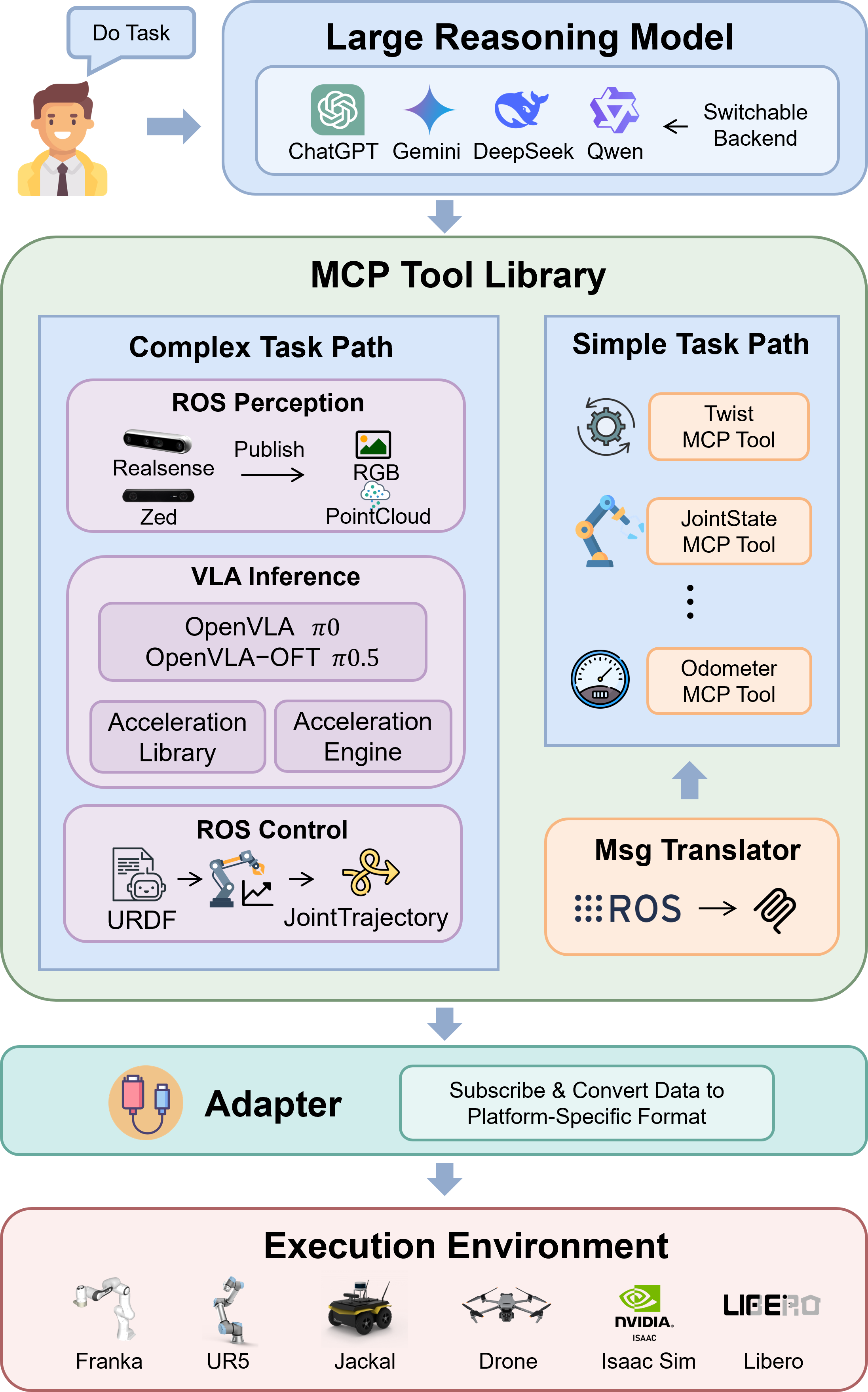

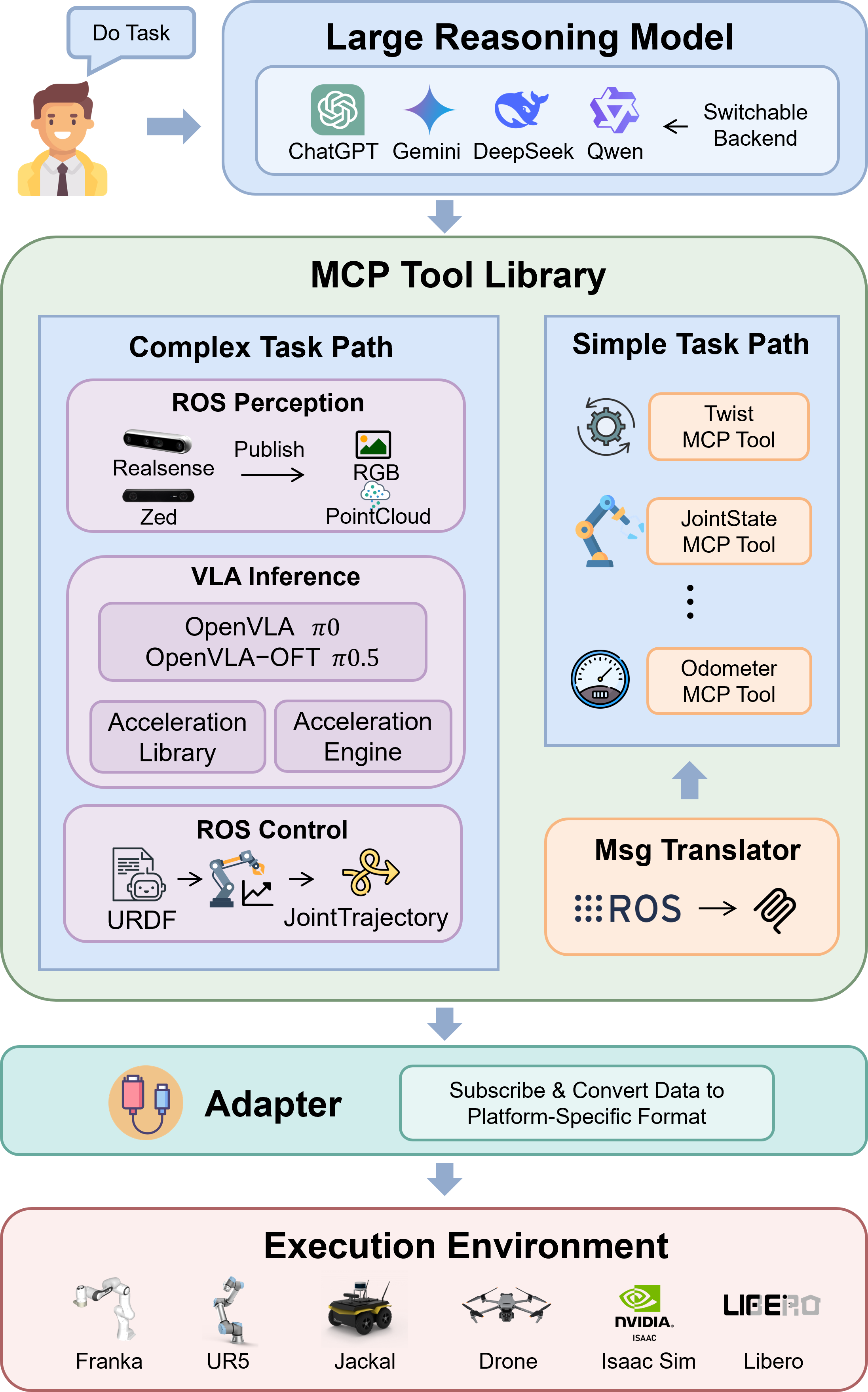

RoboNeuron is architected as a layered cognitive-execution runtime that strictly decouples LLM-based logic from real-time robot control. The Cognitive Core, realized with an LLM, plans and orchestrates actions at a semantic level, relying on MCP tools that abstract the underlying robot and sensor functionality. The MCP Tool Library is populated dynamically by the MSG Translator, which automatically parses and wraps ROS message types so that the LLM can call type-safe abstractions. Control follows one of two paths to limit latency and resource use: a Simple Path for direct ROS command interfaces, and a Complex Path for perception-intertwined decision-making with VLA models.

Figure 1: The Layered Cognitive-Execution Architecture of RoboNeuron detailing the core decoupling and adaptive protocol bridging.

The execution environment is further abstracted via adapters, enabling uniform deployment across both physical hardware and simulation backends, fostering portability and reproducibility.

Core Components and Mechanisms

Capability Abstraction and Dynamic Scheduling

RoboNeuron’s Perception Module enforces a sensor abstraction that eliminates system-specific driver coupling. All perception hardware is wrapped using unified interfaces with methods for stream management, permitting both hardware interchangeability and dynamic, event-driven activation. Sensors can thus be initialized and scheduled on demand through LLM-driven invocation of MCP tools rather than persistent activation, optimizing both energy and computational resources.
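The idea of a unified sensor interface with on-demand activation can be sketched as follows. This is a minimal illustration, not the framework's actual API: the names `Sensor`, `FakeCamera`, and `SensorRegistry` are hypothetical, and the camera is a stand-in that returns a fixed payload rather than real frames.

```python
from abc import ABC, abstractmethod
from typing import Dict


class Sensor(ABC):
    """Hypothetical unified interface: every sensor exposes the same stream methods."""

    def __init__(self) -> None:
        self.streaming = False

    def start_stream(self) -> None:
        self._open()
        self.streaming = True

    def stop_stream(self) -> None:
        self._close()
        self.streaming = False

    def read(self) -> bytes:
        if not self.streaming:
            raise RuntimeError("stream is not active; call start_stream() first")
        return self._grab()

    # Driver-specific hooks, hidden behind the common interface.
    @abstractmethod
    def _open(self) -> None: ...

    @abstractmethod
    def _close(self) -> None: ...

    @abstractmethod
    def _grab(self) -> bytes: ...


class FakeCamera(Sensor):
    """Stand-in driver (assumption): returns a fixed payload instead of images."""

    def _open(self) -> None:
        pass

    def _close(self) -> None:
        pass

    def _grab(self) -> bytes:
        return b"frame"


class SensorRegistry:
    """Starts a sensor only when a tool call asks for it, and stops it on request."""

    def __init__(self) -> None:
        self._sensors: Dict[str, Sensor] = {}

    def register(self, name: str, sensor: Sensor) -> None:
        self._sensors[name] = sensor

    def activate(self, name: str) -> Sensor:
        sensor = self._sensors[name]
        if not sensor.streaming:
            sensor.start_stream()
        return sensor

    def deactivate(self, name: str) -> None:
        sensor = self._sensors[name]
        if sensor.streaming:
            sensor.stop_stream()
```

In this sketch, swapping hardware means registering a different `Sensor` subclass under the same name; callers of `activate` and `read` do not change.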

Dynamic Kinematics and Adaptive Control

The Control Module employs runtime URDF parsing and dynamic IK resolution. Incoming high-dimensional commands are converted into platform-specific actuation via an Adapter Layer that translates standardized ROS trajectories into protocols understood by proprietary and simulated environments. This method introduces extensibility with minimal software overhead when swapping manipulators or deploying across ROS/non-ROS ecosystems.
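As a rough illustration of what runtime URDF parsing provides to an IK solver, the sketch below extracts the movable joints and their limits along a base-to-tip chain using only the Python standard library. The inline URDF, the link and joint names, and the `load_chain` helper are all made up for the example; the paper does not specify its parser.

```python
import math
import xml.etree.ElementTree as ET

# Tiny illustrative URDF (assumption): two revolute joints and one fixed mount.
URDF = """<robot name="demo_arm">
  <link name="base"/><link name="upper"/><link name="lower"/><link name="tool"/>
  <joint name="shoulder" type="revolute">
    <parent link="base"/><child link="upper"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper"/><child link="lower"/>
    <limit lower="0.0" upper="2.5" effort="10" velocity="1"/>
  </joint>
  <joint name="tool_mount" type="fixed">
    <parent link="lower"/><child link="tool"/>
  </joint>
</robot>"""


def load_chain(urdf_text, base, tip):
    """Return the movable joints between `base` and `tip`, ordered base to tip."""
    root = ET.fromstring(urdf_text)
    # Each link has at most one parent joint, so index joints by their child link.
    by_child = {j.find("child").get("link"): j for j in root.findall("joint")}

    chain = []
    link = tip
    while link != base:
        joint = by_child[link]  # raises KeyError if tip is not connected to base
        chain.append(joint)
        link = joint.find("parent").get("link")
    chain.reverse()

    movable = []
    for joint in chain:
        if joint.get("type") == "fixed":
            continue  # fixed joints add no degree of freedom
        limit = joint.find("limit")
        lower = float(limit.get("lower")) if limit is not None else -math.inf
        upper = float(limit.get("upper")) if limit is not None else math.inf
        movable.append({"name": joint.get("name"), "lower": lower, "upper": upper})
    return movable


if __name__ == "__main__":
    for joint in load_chain(URDF, "base", "tool"):
        print(joint)
```

Because the chain is read from the model at runtime, swapping manipulators in this sketch only requires a different URDF string, not different code.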

ROS2MCP Automated Translation

A critical advancement in RoboNeuron is the ROS2MCP Translator, which automates the parsing and semantic mapping of arbitrarily complex ROS message chains into Pydantic-validated MCP tool definitions. This ensures that all interface invocations originating from the LLM are subject to strict type and range checks prior to execution, improving safety and reducing susceptibility to LLM hallucinations or invalid argument specifications. The auto-generated MCP tools thus serve as a semantically robust and scalable control API for system interaction.
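The translation step can be sketched as: parse a message definition into a field schema, then validate every LLM-supplied argument against it before anything is published. The version below is a simplified stand-in that uses plain standard-library checks where the paper uses Pydantic. The `MSG_DEFS` table, the `validate` helper, and the velocity limit are assumptions for illustration, and the definitions use fully qualified type names for simplicity.

```python
# Simplified message definitions (assumption: fully qualified nested types).
MSG_DEFS = {
    "geometry_msgs/Vector3": "float64 x\nfloat64 y\nfloat64 z",
    "geometry_msgs/Twist": (
        "geometry_msgs/Vector3 linear\n"
        "geometry_msgs/Vector3 angular"
    ),
}

PRIMITIVES = {"float64": float, "float32": float, "int32": int, "bool": bool, "string": str}


def parse_fields(type_name):
    """Turn a definition's text into {field_name: field_type}."""
    fields = {}
    for line in MSG_DEFS[type_name].splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        field_type, field_name = line.split()
        fields[field_name] = field_type
    return fields


def _type_ok(value, expected):
    if isinstance(value, bool):
        return expected is bool  # bool is an int subclass; do not accept it as a number
    if expected is float:
        return isinstance(value, (int, float))
    return isinstance(value, expected)


def validate(type_name, payload, limits=None, prefix=""):
    """Check types and ranges recursively; missing fields default to zero values."""
    limits = limits or {}
    if not isinstance(payload, dict):
        raise ValueError(f"{prefix or type_name}: expected an object")
    fields = parse_fields(type_name)
    unknown = set(payload) - set(fields)
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")

    result = {}
    for name, field_type in fields.items():
        path = prefix + name
        if field_type not in PRIMITIVES:  # nested message: recurse
            result[name] = validate(field_type, payload.get(name, {}), limits, path + ".")
            continue
        expected = PRIMITIVES[field_type]
        value = payload.get(name, expected())
        if not _type_ok(value, expected):
            raise ValueError(f"{path}: expected {field_type}")
        if expected is float:
            value = float(value)
        if path in limits:
            low, high = limits[path]
            if not low <= value <= high:
                raise ValueError(f"{path}: {value} outside [{low}, {high}]")
        result[name] = value
    return result


if __name__ == "__main__":
    limits = {"linear.x": (-1.0, 1.0)}  # hypothetical safety bound
    print(validate("geometry_msgs/Twist", {"linear": {"x": 0.2}}, limits))
```

A call such as `validate("geometry_msgs/Twist", {"linear": {"x": 5.0}}, limits)` raises `ValueError` in this sketch, which is the point at which an out-of-range or malformed LLM argument would be rejected instead of reaching the robot.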

VLA Inference Node and Unified Benchmarking

The VLA Inference Node introduces a standardized wrapper for integrating and benchmarking arbitrary VLA models and their inference acceleration variants. Model selection, backend configuration, and execution-specific optimizations are all exposed as callable MCP tools, allowing the LLM to instantiate and manage VLA pipelines based on task or hardware constraints. This decoupled, plug-and-play approach enables rigorous horizontal benchmarking of inference engines and policies within a unified environment.
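One way to picture such a wrapper is a registry of interchangeable policy backends behind a single `load`/`infer` interface, with a loop that times each backend on the same input. The sketch below is hypothetical throughout: the backend names, the `VLAInferenceNode` methods, and the dummy policies are placeholders and do not call any real VLA model.

```python
import time
from typing import Callable, Dict, List, Optional

Policy = Callable[[bytes, str], List[float]]
_BACKENDS: Dict[str, Callable[..., Policy]] = {}


def register_backend(name: str):
    """Decorator that adds a policy factory to the registry under `name`."""
    def decorator(factory):
        _BACKENDS[name] = factory
        return factory
    return decorator


@register_backend("dummy_zero")
def make_zero_policy(action_dim: int = 7) -> Policy:
    def policy(image: bytes, instruction: str) -> List[float]:
        return [0.0] * action_dim  # placeholder: always outputs a zero action
    return policy


@register_backend("dummy_scaled")
def make_scaled_policy(action_dim: int = 7, scale: float = 0.1) -> Policy:
    def policy(image: bytes, instruction: str) -> List[float]:
        return [scale * len(instruction)] * action_dim  # placeholder computation
    return policy


class VLAInferenceNode:
    """Holds one active policy; backends can be swapped without touching callers."""

    def __init__(self) -> None:
        self._policy: Optional[Policy] = None
        self.active: Optional[str] = None

    def load(self, name: str, **config) -> None:
        if name not in _BACKENDS:
            raise KeyError(f"unknown backend: {name}")
        self._policy = _BACKENDS[name](**config)
        self.active = name

    def infer(self, image: bytes, instruction: str) -> List[float]:
        if self._policy is None:
            raise RuntimeError("no backend loaded")
        return self._policy(image, instruction)


def benchmark(node, names, image, instruction, repeats=10):
    """Mean seconds per inference for each backend, measured on identical input."""
    results = {}
    for name in names:
        node.load(name)
        start = time.perf_counter()
        for _ in range(repeats):
            node.infer(image, instruction)
        results[name] = (time.perf_counter() - start) / repeats
    return results
```

Exposing `load` and `infer` as MCP tools would then let the LLM pick a backend per task, which is the behavior the paragraph above describes.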

Case Studies and Experimental Validation

Three experiments establish the practical effectiveness of RoboNeuron's architectural principles:

Unified Control of Heterogeneous Vehicles

The framework demonstrated protocol unification by commanding multiple robots with distinct kinematics using a single MCP-issued Twist command derived from the standard geometry_msgs/Twist interface. The salient outcome is consistent behavioral execution across divergent platforms, evidencing the success of interface abstraction and semantic bridging.

Figure 2: Multiple mobile platforms synchronously execute a velocity command via a unified abstraction.
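To make the unification concrete, the sketch below maps one velocity command onto wheel speeds for two different bases using textbook kinematics. The choice of a differential-drive and a mecanum base, the geometry values, and the flattened `Twist` dataclass (a stand-in for the nested `geometry_msgs/Twist` layout) are assumptions for illustration; the summary does not state which platforms or adapters were used.

```python
from dataclasses import dataclass


@dataclass
class Twist:
    """Flattened planar subset of geometry_msgs/Twist (illustrative)."""
    linear_x: float = 0.0   # forward velocity, m/s
    linear_y: float = 0.0   # leftward velocity, m/s
    angular_z: float = 0.0  # counter-clockwise yaw rate, rad/s


def differential_drive(cmd, track_width, wheel_radius):
    """Wheel angular speeds (rad/s); lateral velocity is ignored, the base cannot strafe."""
    left = (cmd.linear_x - cmd.angular_z * track_width / 2) / wheel_radius
    right = (cmd.linear_x + cmd.angular_z * track_width / 2) / wheel_radius
    return {"left": left, "right": right}


def mecanum_drive(cmd, half_length, half_width, wheel_radius):
    """Standard mecanum inverse kinematics for a four-wheel base."""
    k = half_length + half_width
    vx, vy, wz = cmd.linear_x, cmd.linear_y, cmd.angular_z
    return {
        "front_left": (vx - vy - k * wz) / wheel_radius,
        "front_right": (vx + vy + k * wz) / wheel_radius,
        "rear_left": (vx + vy - k * wz) / wheel_radius,
        "rear_right": (vx - vy + k * wz) / wheel_radius,
    }


# Hypothetical fleet: each robot supplies its own adapter for the same command type.
ADAPTERS = {
    "diff_bot": lambda cmd: differential_drive(cmd, track_width=0.30, wheel_radius=0.05),
    "mecanum_bot": lambda cmd: mecanum_drive(cmd, half_length=0.20, half_width=0.15,
                                             wheel_radius=0.05),
}


def broadcast(cmd):
    """Send one Twist to every platform; each adapter handles its own kinematics."""
    return {name: adapter(cmd) for name, adapter in ADAPTERS.items()}


if __name__ == "__main__":
    print(broadcast(Twist(linear_x=0.5)))
```

The caller issues a single `Twist`; the platform-specific differences live entirely in the adapters.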

Kinematic-Aware Manipulation with Dynamic Integration

In simulation, RoboNeuron parsed custom URDFs and ROS messages to enable precise, kinematics-aware manipulation tasks. The runtime resolution of IK and successful trajectory execution with custom message formats substantiates the dynamic and extensible nature of the control stack.

Figure 3: Precise linear manipulation realized through a dynamically integrated URDF model and MCP-wrapped custom ROS interface.
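Linear manipulation of this kind amounts to interpolating a straight Cartesian segment and solving IK at each waypoint. The sketch below does this for a two-link planar arm with closed-form IK. The arm geometry and function names are illustrative, and the analytic solver is a swapped-in simplification: the summary does not say which IK method the framework uses.

```python
import math


def forward(q1, q2, l1, l2):
    """End-effector position of a two-link planar arm."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse(x, y, l1, l2):
    """Closed-form IK, returning the solution with a non-negative elbow angle."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        raise ValueError("target is outside the reachable workspace")
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


def linear_path(start, goal, steps, l1, l2):
    """Interpolate a straight Cartesian segment and solve IK at every waypoint."""
    path = []
    for i in range(steps + 1):
        t = i / steps
        x = start[0] + t * (goal[0] - start[0])
        y = start[1] + t * (goal[1] - start[1])
        path.append(((x, y), inverse(x, y, l1, l2)))
    return path


if __name__ == "__main__":
    for point, joints in linear_path((0.3, 0.1), (0.3, 0.4), steps=5, l1=0.3, l2=0.3):
        print(point, joints)
```

Feeding each joint solution back through `forward` reproduces the waypoint, which is a quick consistency check on the solver.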

Real-World VLA-Driven Grasping

On physical hardware, RoboNeuron orchestrated a closed-loop perception-action pipeline comprising real-world image acquisition (RealSense), VLA policy inference (OpenVLA), and adaptive motion of an FR3 manipulator. All module scheduling and pipeline composition were handled through LLM-issued MCP calls. The successful grasp trial demonstrates that system integration, asynchronous orchestration, and real-time cross-module communication work together end to end.

Figure 4: Closed-loop manipulation utilizing VLA reasoning, modular sensor wrapping, and adaptive control on the FR3 platform.
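The closed loop described here has a simple shape: grab a frame, ask the policy for an action, apply it, and repeat until the gripper closes. The sketch below shows only that control flow with stand-in callables. The function names, the convention that the last action element is a gripper-close signal, and the toy one-dimensional "scene" are assumptions; no RealSense, OpenVLA, or FR3 API is used.

```python
def run_closed_loop(get_frame, policy, apply_action, instruction, max_steps=50):
    """Perceive, infer, act. Returns the step at which the gripper closed, else None."""
    for step in range(1, max_steps + 1):
        frame = get_frame()                  # perception
        action = policy(frame, instruction)  # policy inference
        apply_action(action)                 # actuation
        if action[-1] >= 0.5:  # assumption: last element signals gripper close
            return step
    return None


def make_toy_scene(start_height_mm=100):
    """Toy stand-ins: the 'frame' is just the gripper height above the object in mm."""
    state = {"z": start_height_mm, "closed": False}

    def get_frame():
        return state["z"]

    def policy(frame, instruction):
        if frame <= 20:
            return [0, 0, 0, 0, 0, 0, 1.0]   # close enough: command a grasp
        return [0, 0, -20, 0, 0, 0, 0.0]     # otherwise descend by 20 mm

    def apply_action(action):
        state["z"] += action[2]
        state["closed"] = state["closed"] or action[-1] >= 0.5

    return state, get_frame, policy, apply_action


if __name__ == "__main__":
    state, get_frame, policy, apply_action = make_toy_scene()
    print(run_closed_loop(get_frame, policy, apply_action, "pick up the block"), state)
```

Because the loop depends only on three callables, any camera, policy, or arm that matches those signatures could be substituted without changing the loop itself.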

Practical and Theoretical Implications

RoboNeuron establishes a type-safe, rapidly extensible bridge between cognitive AI models and real-time embodied systems, addressing weaknesses in current deployment practice, which typically suffers from brittle APIs, bespoke integration, and poor maintainability. By enforcing modularity at both the abstraction and communication levels, RoboNeuron supports flexible benchmarking, model interchangeability, and hardware-agnostic deployment, all prerequisites for large-scale embodied AI research and scalable real-world application.

The paper's strongest claims are twofold. First, new robot capabilities can be exposed to the LLM cognitive core through zero-shot ROS message translation, that is, without hand-written wrappers. Second, end-to-end grasping can be orchestrated from fully decoupled, dynamically scheduled modules, with the framework demonstrated both in simulation and on physical robots.

Future Directions

The framework is positioned as a foundation for lifelong robot learning systems, RL research under policy/model swapping, and rapid integration of emerging VLA/LLM architectures. Prospective enhancements include deepening real-time inference acceleration integration and expanding coverage to new categories of sensors, actuators, and cognitive backends.

Conclusion

RoboNeuron achieves robust decoupling, modularity, and extensibility in embodied AI systems by systematically unifying LLM/VLA reasoning mechanisms with the ROS control and communication standard. Its MCP-centric design automates the exposure of robot and sensor capabilities in a type-safe manner, simplifying both control logic and integration workflows. These advances collectively lay the groundwork for highly adaptable, scalable, and reproducible embodied intelligence research and application.