MeshSplatting: Differentiable Rendering with Opaque Meshes (2512.06818v1)

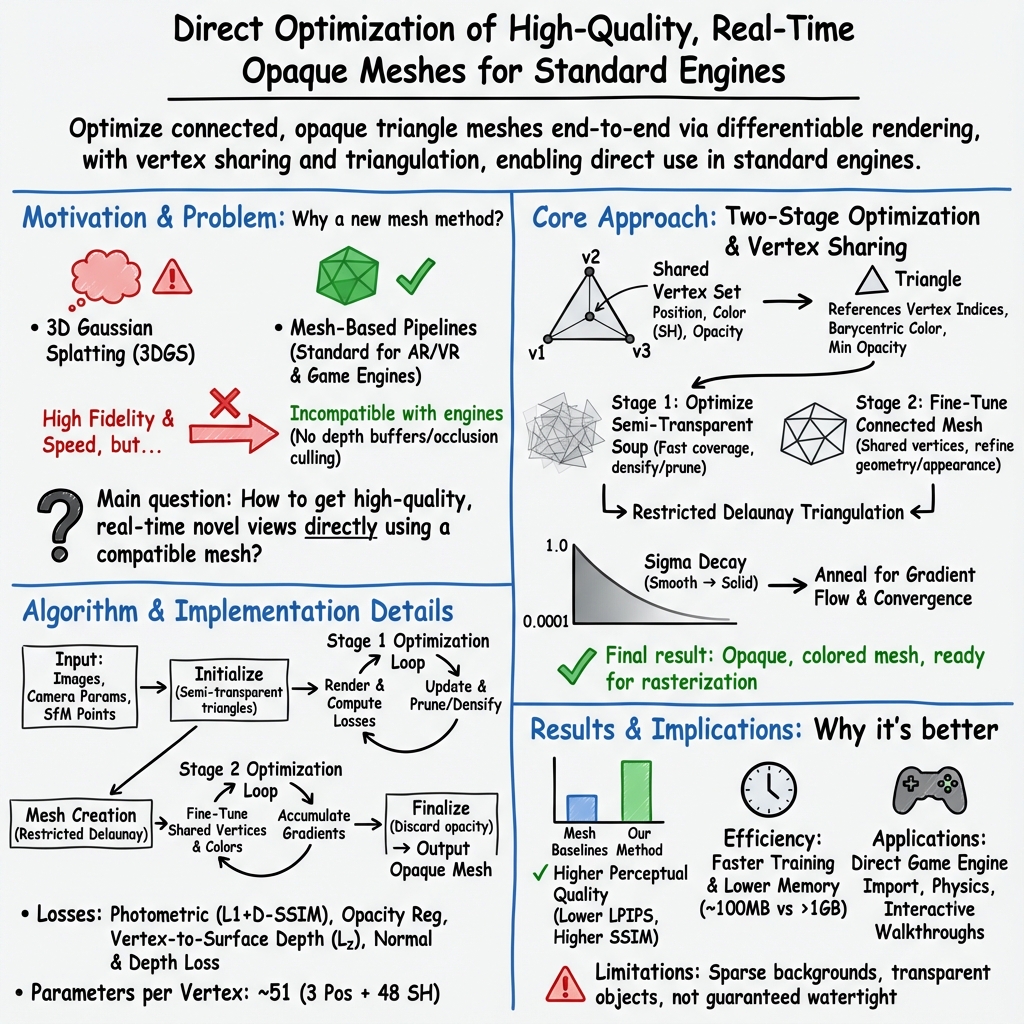

Abstract: Primitive-based splatting methods like 3D Gaussian Splatting have revolutionized novel view synthesis with real-time rendering. However, their point-based representations remain incompatible with mesh-based pipelines that power AR/VR and game engines. We present MeshSplatting, a mesh-based reconstruction approach that jointly optimizes geometry and appearance through differentiable rendering. By enforcing connectivity via restricted Delaunay triangulation and refining surface consistency, MeshSplatting creates end-to-end smooth, visually high-quality meshes that render efficiently in real-time 3D engines. On Mip-NeRF360, it boosts PSNR by +0.69 dB over the current state-of-the-art MiLo for mesh-based novel view synthesis, while training 2x faster and using 2x less memory, bridging neural rendering and interactive 3D graphics for seamless real-time scene interaction. The project page is available at https://meshsplatting.github.io/.


Explain it Like I'm 14

Overview

This paper introduces MeshSplatting, a new way to build 3D scenes from photos so they look realistic from any viewpoint, while also working smoothly in common 3D tools like game engines (Unity, Unreal) and AR/VR. The key idea is to use “opaque triangle meshes” (think of a scene built from tiny solid tiles) instead of millions of fuzzy points or semi-transparent blobs. This makes the scenes both high-quality and easy to use in real-time apps.

Key Objectives

Here are the main things the researchers set out to do:

- Make a method that learns realistic 3D scenes directly as solid, connected triangle meshes (no post-processing or special shaders).

- Keep or improve visual quality compared to popular methods (like 3D Gaussian Splatting), while training faster and using less memory.

- Ensure the meshes work natively in standard game engines, supporting things like physics, ray tracing, and interactive walkthroughs.

- Allow simple scene editing, such as extracting or removing objects, without complex extra steps.

How the Method Works (Approach)

Why meshes matter

Most modern game engines and AR/VR systems expect scenes to be made of triangle meshes—like a big 3D jigsaw puzzle made of tiny flat pieces. Many recent “neural rendering” methods use millions of soft points or transparent shapes that look great but don’t plug in easily to these engines. MeshSplatting builds scenes from opaque triangle meshes so the result is ready to use right away.

Two-stage training: from “soup” to “mesh”

To make learning easier, the method trains in two stages:

- Stage 1: Triangle soup

  - The system starts with lots of small, semi-transparent triangles scattered through space (a “triangle soup”).

  - It renders the scene from the viewpoints of the training photos, compares each rendering with the real photo, and nudges the triangles to shrink the difference. This is “differentiable rendering”: the renderer can compute how each triangle’s position, color, and opacity should change to make the images match better.

  - At first, each triangle can move and change color independently, which helps the model cover the scene quickly and fit local details.

- Stage 2: Connected mesh

  - Once the triangles are roughly in the right places, an algorithm called “restricted Delaunay triangulation” rebuilds them into a clean, well-shaped mesh that reuses the vertex positions learned in Stage 1. Think of it as stitching the tiles together so they form a single, continuous surface.

  - Now neighboring triangles share vertices, so adjustments stay consistent across neighbors. The system then fine-tunes both geometry (shape) and colors to recover any visual quality lost during stitching.
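Stage 1 renders overlapping semi-transparent triangles by accumulating their contributions in depth order. The sketch below illustrates that idea with the standard front-to-back alpha-compositing rule used by 3DGS-style splatting, C = Σᵢ cᵢ αᵢ Πⱼ₍ⱼ<ᵢ₎ (1 − αⱼ). It is an illustration under that assumption, not the paper's renderer: it works on one pixel's list of (depth, color, alpha) fragments rather than on projected triangles, and the early-termination threshold is an arbitrary choice. It also shows why opaque triangles simplify rendering: once the nearest fragment has α = 1, nothing behind it contributes.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def composite_front_to_back(fragments: List[Tuple[float, RGB, float]]) -> RGB:
    """Blend one pixel's fragments, given as (depth, color, alpha), nearest first."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by closer fragments
    for _, c, alpha in sorted(fragments, key=lambda f: f[0]):  # sort by depth
        weight = alpha * transmittance
        for k in range(3):
            color[k] += weight * c[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is saturated; farther fragments are invisible
            break
    return (color[0], color[1], color[2])
```

For example, a half-transparent red fragment in front of an opaque blue one yields (0.5, 0.0, 0.5), while an opaque red fragment in front yields pure red regardless of what lies behind it.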

Making triangles opaque (solid) and stable

Early on, triangles are semi-transparent so that surfaces hidden behind other triangles still receive gradients (learning signals), which lets the method learn partly blocked areas. Later, the method gradually pushes triangles to become fully opaque (solid) and makes their edges sharper:

- Opacity scheduling: Starts flexible, then smoothly ramps to fully opaque.

- Smoothness scheduling: A parameter called σ (“sigma”) starts high (soft edges), then slowly drops so triangles become crisp, solid surfaces by the end.

This careful scheduling gives strong learning signals early and solid meshes later.

Extras that help

- Densification and pruning: The system adds more triangles where needed and removes ones that don’t contribute, keeping the mesh compact.

- Vertex colors: Colors are stored at triangle corners (vertices) as spherical harmonics, so a vertex’s color can vary with viewing direction, and are smoothly blended inside each triangle. This helps match appearance without heavy textures.

- Simple, robust losses: The method compares rendered images to real ones to guide learning, and uses extra hints (like estimated depth and normals) to keep surfaces smooth and geometrically accurate.
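Densification and pruning can be sketched in a few lines. The paper adapts 3DGS-MCMC, selects densification candidates with Bernoulli sampling from an opacity-based probability, and (per the knowledge-gap notes below) uses midpoint subdivision and prunes triangles with opacity below 0.2. The rules combined here are our own assumptions, not the paper's exact procedure: the selection probability is min(1, budget · oᵢ / Σo), children inherit the parent's opacity, and pruning and densification run in a single pass.

```python
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _mid(p: Vec3, q: Vec3) -> Vec3:
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2, (p[2] + q[2]) / 2)


def subdivide(tri: Triangle) -> List[Triangle]:
    """Midpoint subdivision: split one triangle into four congruent children."""
    a, b, c = tri
    ab, bc, ca = _mid(a, b), _mid(b, c), _mid(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def prune_and_densify(
    tris: Sequence[Triangle],
    opacities: Sequence[float],
    budget: float,
    prune_below: float = 0.2,
    rng: Optional[random.Random] = None,
) -> Tuple[List[Triangle], List[float]]:
    """Drop low-opacity triangles, then subdivide survivors picked by Bernoulli draws."""
    rng = rng or random.Random(0)
    kept = [(t, o) for t, o in zip(tris, opacities) if o >= prune_below]
    total = sum(o for _, o in kept)
    new_tris: List[Triangle] = []
    new_ops: List[float] = []
    for tri, o in kept:
        # Hypothetical probability: proportional to opacity and capped at 1,
        # so the expected number of selected triangles is at most `budget`.
        p = min(1.0, budget * o / total) if total > 0 else 0.0
        if rng.random() < p:  # one Bernoulli trial per surviving triangle
            children = subdivide(tri)
            new_tris.extend(children)
            new_ops.extend([o] * len(children))  # assumption: children inherit opacity
        else:
            new_tris.append(tri)
            new_ops.append(o)
    return new_tris, new_ops
```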

Main Findings and Why They Matter

Here are the key results demonstrated in the paper:

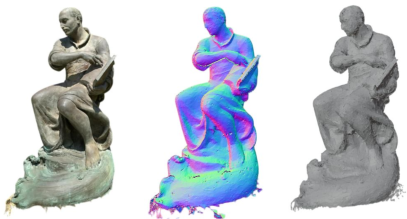

- Better visual quality for mesh-based rendering: On a standard benchmark (Mip-NeRF360), MeshSplatting improves the PSNR score by about +0.69 dB over the strongest mesh-based baseline (MiLo), and has better perceptual quality (lower LPIPS). In simple terms, images look sharper and closer to the original photos.

- Faster and lighter: Training is about 2× faster and uses about half the memory of MiLo, the strongest mesh-based baseline. This makes training more practical, and the finished meshes render in real time on consumer hardware such as a MacBook M4.

- Ready for game engines: The output is a connected, opaque, colored mesh—no special conversion needed. It works out of the box with standard features like depth testing (engines know what’s in front) and occlusion culling (don’t draw hidden stuff), physics, and ray tracing.

- Clean geometry: The final meshes are well connected (like a proper 3D surface), avoiding scattered “triangle soup,” which makes interactions and simulations more reliable.

- Easy object editing: Because, at convergence, each pixel is covered by a single opaque triangle (rather than a blend of many transparent shapes), selecting and removing or extracting objects is straightforward. Masks from 2D images can be mapped directly to the triangles that form the object.
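Mapping a 2D mask to triangles is simple when each pixel shows exactly one triangle. The sketch below assumes the renderer can output a per-pixel triangle-ID buffer (a common rasterization technique; here -1 marks background, an assumed convention) alongside a binary object mask, for example from SAMv2. The multi-view voting with a `min_fraction` threshold is our own addition, not something the paper specifies; removing the object amounts to keeping the complement of the returned set.

```python
from collections import Counter
from typing import Sequence, Set


def select_object_triangles(
    id_buffers: Sequence[Sequence[Sequence[int]]],
    masks: Sequence[Sequence[Sequence[bool]]],
    min_fraction: float = 0.5,
) -> Set[int]:
    """Return IDs of triangles whose visible pixels fall mostly inside the masks."""
    inside: Counter = Counter()
    seen: Counter = Counter()
    for ids, mask in zip(id_buffers, masks):  # one (ID buffer, mask) pair per view
        for id_row, mask_row in zip(ids, mask):
            for tri_id, in_mask in zip(id_row, mask_row):
                if tri_id < 0:  # background pixel: no triangle visible
                    continue
                seen[tri_id] += 1
                if in_mask:
                    inside[tri_id] += 1
    return {t for t, n in seen.items() if inside[t] / n >= min_fraction}
```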

To translate the metrics:

- PSNR and SSIM measure how similar the rendered images are to the real photos (higher is better).

- LPIPS measures perceptual difference (lower is better), aligned with human judgments of visual quality.
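PSNR is defined as 10 · log₁₀(MAX² / MSE), where MSE is the mean squared pixel error and MAX is the largest possible pixel value. Because it is logarithmic, a PSNR gain maps to a ratio of errors: for a single image, +0.69 dB corresponds to an MSE about 15% lower (10^(−0.069) ≈ 0.853). Benchmark scores average PSNR over many images, so this is only a rough reading. The sketch below assumes pixel values in [0, 1] flattened into plain lists.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(pred) != len(target) or not pred:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_ratio_from_psnr_gain(gain_db: float) -> float:
    """New MSE divided by old MSE implied by a PSNR gain of `gain_db` dB."""
    return 10.0 ** (-gain_db / 10.0)
```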

Implications and Potential Impact

MeshSplatting bridges the gap between advanced neural rendering and the practical needs of interactive 3D apps:

- Game engines and AR/VR: Developers can import these meshes directly, enabling real-time walkthroughs, physics-based interactions, ray tracing, and scene editing without custom hacks.

- Efficiency: Faster training and lower memory mean it’s easier to scale to bigger scenes and use on regular computers.

- Clean scene editing: Object extraction and segmentation become simpler, opening doors for content creation, cleanup, and customization.

- Research foundation: This approach shows how to learn both geometry and appearance end-to-end in a mesh format, pointing toward future systems with even richer materials or textures.

In short, MeshSplatting makes high-quality, learned 3D scenes practical for the tools people already use, helping move neural rendering from demos into real-world interactive applications.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a focused list of unresolved issues and open directions that emerge from the paper. Each point is intended to be concrete and actionable for future research.

- Geometry ground truth and evaluation: Large-scale scenes (e.g., Mip-NeRF360, Tanks and Temples) lack ground-truth geometry, limiting rigorous assessment of mesh accuracy. Establish or adopt benchmarks with scanned meshes and report manifoldness, watertightness, self-intersections, and Chamfer/normal metrics at scale.

- Manifold and watertightness guarantees: Restricted Delaunay triangulation reuses vertex positions but does not ensure 2-manifold topology or watertight surfaces; connectivity statistics (high percentage of triangles with 4+ neighbors) suggest potential non-manifold adjacency. Develop post-processes or training objectives to enforce manifold topology, remove T-junctions, resolve self-intersections, and guarantee watertightness for physics.

- Transparency and participating media: The method enforces fully opaque triangles at convergence, making glass, translucency, and volumetric effects unrepresentable in the final output. Explore z-buffer-compatible mixed opaque/translucent representations or layered meshes that retain engine compatibility.

- Material and lighting expressivity: Per-vertex spherical harmonics bake appearance, are not physically based, and are not relightable. Investigate decoupled geometry/appearance via neural textures, BRDF/BSDF models, and PBR material parameters to support novel lighting and shadows while preserving engine interoperability.

- View-dependent effects: Quantify how well per-vertex SH captures specular highlights and anisotropic view-dependent appearance. Evaluate artifacts and propose more expressive, engine-compatible view-dependent appearance models (e.g., neural reflectance fields with texture atlases).

- Texture resolution vs. vertex count: Vertex colors (no UVs) raise questions about the number of vertices required to represent high-frequency textures. Characterize the quality–memory–FPS trade-offs, and study automatic UV unwrapping and texture baking to reduce vertex counts while preserving detail.

- Reliance on external priors: Depth alignment (Depth Anything v2) and normal supervision (Metric3D / 2DGS self-supervision) can bias geometry. Analyze failure modes when monocular priors are inaccurate, quantify sensitivity, and explore jointly learned or uncertainty-aware priors.

- Gradient stability under sorting: Training relies on depth-ordered blending; triangle order changes introduce gradient discontinuities. Study order-agnostic formulations (e.g., soft z-buffers), continuous relaxations of sorting, or robustness strategies to mitigate optimization instabilities.

- Connectivity strategy during training: Restricted Delaunay triangulation is performed once; no mechanism adapts connectivity thereafter. Investigate incremental re-triangulation/mesh surgery criteria (edge flips, splits/merges) driven by photometric/geometric residuals.

- Robustness to thin structures and occluded geometry: Midpoint subdivision and opacity-driven densification may miss thin or heavily occluded surfaces. Develop visibility-aware sampling (e.g., silhouette-driven refinement, multi-view contour constraints) and quantify failure cases.

- Hyperparameter sensitivity: The initial opacity (0.28), the pruning threshold (opacity o < 0.2 at iteration 5k), and the opacity and window-function schedules are tuned ad hoc. Perform a systematic sensitivity analysis and develop automatic curricula/schedulers driven by convergence indicators.

- Anti-aliasing and edge fidelity: Supersampling is enabled only in final iterations; aliasing/jaggies and coverage issues are not quantitatively evaluated. Assess multi-sample anti-aliasing, coverage masks, and their impact on both training stability and final visual quality.

- Scalability and deployment: FPS results are reported on a MacBook M4; scalability to mobile GPUs/VR headsets and very large scenes (tens of millions of triangles) is not studied. Characterize performance-resource scaling, engine integration costs (draw calls, batching, LOD), and memory constraints across platforms.

- Dynamic scenes and non-rigid motion: The method targets static scenes; handling moving objects or time-varying geometry is unexplored. Extend to temporal meshes with motion regularization and multi-time optimization, and evaluate temporal consistency.

- Pose robustness: Assumes accurate SfM poses. Quantify sensitivity to pose errors and explore joint optimization of camera poses and mesh, including global bundle adjustment within the differentiable pipeline.

- Export pipeline to standard engines: Mesh uses per-vertex SH colors; most engines expect textures and PBR materials. Provide an automated pipeline for UV generation, texture baking, normal/displacement maps, and material parameterization compatible with engine shaders.

- Ray tracing performance: The paper claims ray tracing support but lacks benchmarks. Measure BVH build/update times, ray–triangle intersection costs, and compare to Gaussian-based approaches under ray-traced rendering (quality and speed).

- Object segmentation quality: Object extraction uses SAMv2 masks without quantitative evaluation of multi-view consistency or boundary leakage (triangles straddling masks). Add multi-view consistency constraints and evaluate precision/recall of extracted object meshes.

- Physics robustness: Using non-convex colliders on potentially non-manifold meshes may cause simulation instabilities. Assess collision robustness (penetration depth errors, tunneling) and develop mesh healing/remeshing pipelines for stable physics.

- Rendering simplification assumption: The claim that “only one triangle covers each pixel at convergence” may fail for coplanar overlaps or micro-overdraw. Characterize conditions and artifacts when multiple opaque triangles overlap and propose conflict resolution.

- Domain coverage: Evaluate across challenging domains (indoor glossy/reflective surfaces, glass/water, low light, outdoor vegetation with thin structures) and document failure cases to guide method extensions.

- Comparative fairness in appearance capacity: Baselines often require post-processing for coloring; reported PSNR/LPIPS may reflect differences in appearance models. Include comparisons with matched appearance capacity (e.g., neural textures) to isolate geometric contributions.

- Reproducibility and variance: Provide code, full training schedules, seeds, and scene-wise variance (e.g., across 5–10 random initializations) to substantiate speed/quality claims and enable reproducible benchmarking.
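Several gaps above (manifoldness, watertightness, physics robustness) start with basic topology checks. The sketch below counts how many faces use each undirected edge of an indexed triangle mesh: an edge used once is a boundary (a hole or open border), and an edge used three or more times is non-manifold. It is a minimal diagnostic under stated limits: it does not detect non-manifold vertices ("bowties"), self-intersections, or degenerate faces with repeated indices.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple

Face = Tuple[int, int, int]


def edge_face_counts(faces: Sequence[Face]) -> Counter:
    """Count how many faces use each undirected edge."""
    counts: Counter = Counter()
    for f in faces:
        for i in range(3):
            a, b = f[i], f[(i + 1) % 3]
            counts[(min(a, b), max(a, b))] += 1
    return counts


def mesh_report(faces: Sequence[Face]) -> Dict[str, object]:
    counts = edge_face_counts(faces)
    boundary = sum(1 for n in counts.values() if n == 1)  # open edges
    non_manifold = sum(1 for n in counts.values() if n > 2)  # shared by 3+ faces
    return {
        "edges": len(counts),
        "boundary_edges": boundary,
        "non_manifold_edges": non_manifold,
        # Closed and edge-manifold only; vertex-manifoldness is not checked.
        "closed_edge_manifold": boundary == 0 and non_manifold == 0,
    }
```

A tetrahedron passes this check, while three triangles hinged on one shared edge report one non-manifold edge.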

Glossary

- 3D Gaussian Splatting: A primitive-based neural rendering approach that uses many Gaussian blobs to achieve real-time novel view synthesis. "Primitive-based splatting methods like 3D Gaussian Splatting have revolutionized novel view synthesis with real-time rendering."

- Alpha blending: A transparency rendering technique that blends colors of overlapping primitives using their alpha (opacity) values. "since 3DGS relies on sorting and alpha blending, preventing the use of standard techniques like depth buffers and occlusion culling"

- Anisotropic Gaussians: Gaussian functions with different variances along different axes, used as rendering primitives for better fidelity. "The advent of 3D Gaussian Splatting~\cite{Kerbl20233DGaussian} showed that it is possible to fit millions of anisotropic Gaussians in minutes, enabling real-time rendering with high fidelity."

- Barycentric coordinates: A coordinate system within a triangle used to interpolate attributes like color across its surface. "its color at a point inside the triangle is obtained by interpolating vertex colors with barycentric coordinates."

- Bernoulli sampling: Sampling in which each candidate independently undergoes a yes/no trial with its own success probability; here the probabilities are derived from triangle opacity to select triangles for densification. "At each densification step, candidate triangles are selected by sampling from a probability distribution constructed directly from their opacity using Bernoulli sampling."

- Chamfer Distance: A metric to measure geometric difference between two point sets, commonly used to evaluate mesh quality. "Finally, to quantitatively assess the mesh quality of our method, we compute the Chamfer Distance on~DTU."

- Delaunay tetrahedralization: The 3D generalization of Delaunay triangulation, producing a tetrahedral mesh of a point set in which no point lies strictly inside the circumsphere of any tetrahedron. "This operation first computes a standard Delaunay tetrahedralization, and then identifies tetrahedral faces whose dual Voronoi edges intersect the surface of the input triangle soup."

- Delaunay triangulation: A triangulation maximizing minimum angles, used to structure meshes and improve connectivity. "MiLo performs Delaunay triangulation at every iteration, whereas we run it only once."

- Depth Anything v2: A pretrained depth estimation model used to align predicted depths via scale-and-shift. "We follow~\citet{Kerbl2024AHierarchical}, and employ Depth Anything v2~\cite{Yang2024DepthV2} to align the predicted depths using their scale-and-shift procedure."

- Depth alignment loss: A loss term that penalizes discrepancies between rendered depths and monocular depth estimates (aligned by scale and shift), encouraging coherent, well-formed surfaces. "Our training loss combines the photometric $\mathcal{L}_1$ and $\mathcal{L}_{\text{D-SSIM}}$ terms from 3DGS~\cite{Kerbl20233DGaussian}, the opacity loss from~\citet{Kheradmand20243DGaussian}, the depth alignment loss (detailed below)..."

- Depth buffers: GPU buffers storing per-pixel depth to manage visibility and occlusions during rasterization. "opening the door for classical techniques like the use of depth buffers and occlusion culling~\cite{AkenineMoller2018RealTimeRendering, Hughes2014CGPP}."

- Depth order: The sorting of primitives by distance from the camera to correctly accumulate colors through semi-transparent layers. "The final color of each image pixel is computed by accumulating contributions from all overlapping triangles in depth order:"

- Densification: A strategy to add more primitives during training to better cover the scene and improve quality. "As the initial triangle soup may not be sufficiently dense, we adapt the ideas from 3DGS-MCMC~\cite{Kheradmand20243DGaussian} to spawn additional triangles."

- Differentiable rendering: Rendering formulations that allow gradients to flow from image losses to scene parameters for optimization. "Differentiable rendering enables end-to-end optimization by propagating image-based losses back to scene parameters, allowing for the learning of explicit representations..."

- Incenter: The point inside a triangle equidistant from all three edges, used as a stable reference point for the window function. "… be the incenter of the projected triangle"

- Laplacian (regularization): A smoothing regularization based on the Laplacian operator, encouraging locally consistent geometry. "Unlike losses like normal consistency~\cite{Boss2025SF3D,Choi2024LTM} or Laplacian~\cite{Boss2025SF3D, Wang2018Pixel2Mesh-arxiv,Choi2024LTM} that rely on local mesh connectivity, this formulation acts on each vertex independently."

- LPIPS: A perceptual image metric that correlates well with human visual judgments of similarity. "In terms of LPIPS~(the metric that best correlates with human visual perception), MeshSplatting significantly outperforms all concurrent methods."

- Marching Tetrahedra: A mesh extraction algorithm operating on tetrahedral grids to reconstruct isosurfaces. "applying Marching Tetrahedra on Gaussian-induced tetrahedral grids~\cite{Yu2024Gaussian}"

- Mip-NeRF360: A dataset for large-scale neural radiance field evaluation across 360-degree views. "On Mip-NeRF360, it boosts PSNR by +0.69 dB over the current state-of-the-art MiLo for mesh-based novel view synthesis"

- MobileNeRF: A method that distills a Neural Radiance Field (NeRF) into efficient polygonal representations. "MobileNeRF~\cite{Chen2023MobileNeRF} distills a NeRF into a compact set of textured polygons."

- Non-convex mesh collider: A physics collider that uses the actual non-convex mesh surface for interaction, typical in game engines. "We demonstrate this by using an off-the-shelf non-convex mesh collider: the one provided in the Unity game engine."

- Normal consistency: A regularization encouraging adjacent surface normals to be consistent for smoother geometry. "Unlike losses like normal consistency~\cite{Boss2025SF3D,Choi2024LTM} or Laplacian~\cite{Boss2025SF3D, Wang2018Pixel2Mesh-arxiv,Choi2024LTM} that rely on local mesh connectivity..."

- Object segmentation: The process of identifying and separating objects from a scene for extraction or editing. "With MeshSplatting, objects can be easily extracted or removed from a reconstructed scene, in this case the flower pot from the Garden scene."

- Occlusion culling: A rendering optimization that discards objects not visible due to occlusions. "opening the door for classical techniques like the use of depth buffers and occlusion culling~\cite{AkenineMoller2018RealTimeRendering, Hughes2014CGPP}."

- Pinhole camera model: A standard camera model used to project 3D points to 2D image coordinates through a pinhole. "using a standard pinhole camera model"

- Poisson reconstruction: A method for reconstructing surfaces from point samples by solving a Poisson equation. "followed by Poisson reconstruction~\cite{Guedon2024SuGaR}"

- PSNR: Peak Signal-to-Noise Ratio, an image quality metric measuring fidelity relative to a reference. "We evaluate the visual quality using standard metrics: SSIM, PSNR, and LPIPS."

- Rasterization: The process of converting 3D geometry into pixel-based images using scan conversion and depth buffering. "mesh-based rasterization with depth buffers."

- Ray tracing: A rendering technique that simulates light paths for realistic images, supported by the final opaque mesh. "(d) ray tracing."

- Restricted Delaunay triangulation: The subset of faces of a 3D Delaunay tetrahedralization whose dual Voronoi edges intersect a given surface (here, the triangle soup), yielding a connected triangulation that conforms to that surface. "By enforcing connectivity via restricted Delaunay triangulation and refining surface consistency, MeshSplatting creates end-to-end smooth, visually high-quality meshes"

- Signed distance field: A function giving the distance to a surface with sign indicating inside/outside, used for differentiable windowing. "the signed distance field of the 2D triangle in image space is defined as:"

- Spherical harmonics: A basis for modeling view-dependent color or lighting via low-frequency functions on the sphere. "We set the spherical harmonics to degree 3, which yields 51 parameters per vertex"

- SSIM: Structural Similarity Index, an image metric assessing perceived quality based on structural information. "We evaluate the visual quality using standard metrics: SSIM, PSNR, and LPIPS."

- Structure-from-Motion (SfM): A pipeline that estimates camera poses and sparse 3D points from multiple images. "a set of posed images and corresponding camera parameters obtained from structure-from-motion (SfM)~\cite{Schonberger2016Structure}"

- Supersampling: A technique that samples multiple sub-pixel locations to improve gradient coverage and reduce aliasing. "In the final iterations of training, we enable supersampling, allowing even small triangles to receive gradients and be properly optimized."

- Truncated Signed Distance Fields (TSDF): SDFs with distances truncated for stability, used in mesh extraction pipelines. "rely on Truncated Signed Distance Fields for mesh extraction."

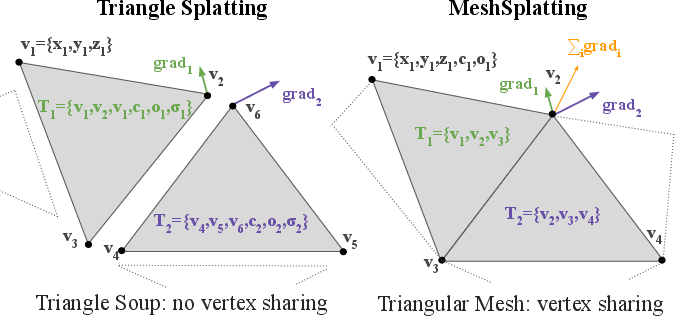

- Triangle soup: An unstructured collection of triangles without shared vertices or connectivity. "We begin the optimization with this unstructured triangle soup, i.e. without any connectivity or manifold constraints between triangles."

- Triangle Splatting: A differentiable rendering approach that splats triangles with window functions instead of Gaussians. "In Triangle Splatting~\cite{Held2025Triangle-arxiv}, each triangle is defined by three vertices, a color, a smoothness parameter σ, and an opacity o."

- Volumetric rendering: Rendering that accumulates light contributions along rays through semi-transparent volumes. "optimize (potentially transparent) triangles via volumetric rendering, therefore effectively replacing Gaussians with triangles."

- Voronoi edges: Edges of the dual Voronoi diagram used to detect surface-aligned faces in restricted Delaunay triangulation. "identifies tetrahedral faces whose dual Voronoi edges intersect the surface of the input triangle soup."

- Window function: A smooth indicator over a triangle in screen space used to make rasterization differentiable. "The window function is then defined as:"
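Barycentric interpolation is how vertex colors are blended inside a triangle. The sketch below solves p = w₀a + w₁b + w₂c with w₀ + w₁ + w₂ = 1 by Cramer's rule in 2D and blends plain RGB vertex colors. Two simplifications are assumptions: it uses RGB rather than the per-vertex spherical harmonics MeshSplatting stores, and it interpolates in screen space without perspective correction.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
RGB = Tuple[float, float, float]


def barycentric(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, float, float]:
    """Weights (w0, w1, w2) with p = w0*a + w1*b + w2*c and w0 + w1 + w2 = 1."""
    v0 = (b[0] - a[0], b[1] - a[1])
    v1 = (c[0] - a[0], c[1] - a[1])
    v2 = (p[0] - a[0], p[1] - a[1])
    det = v0[0] * v1[1] - v1[0] * v0[1]
    if det == 0:
        raise ValueError("degenerate triangle")
    w1 = (v2[0] * v1[1] - v1[0] * v2[1]) / det
    w2 = (v0[0] * v2[1] - v2[0] * v0[1]) / det
    return (1.0 - w1 - w2, w1, w2)


def interpolate_color(p: Point, verts: Sequence[Point], colors: Sequence[RGB]) -> Tuple[float, ...]:
    """Blend the three vertex colors with the barycentric weights of p."""
    w = barycentric(p, *verts)
    return tuple(sum(w[i] * colors[i][k] for i in range(3)) for k in range(3))
```

At a vertex the result equals that vertex's color; at the centroid it is the average of the three colors.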

Practical Applications

Immediate Applications

The following items can be deployed now, leveraging MeshSplatting’s end-to-end opaque, connected, colored meshes that are directly compatible with standard game engines and common 3D tools.

- Game engine–ready scene capture and import (Software, Gaming, XR)

- Use case: Convert photo collections or short videos of real environments into ready-to-use level assets for Unity/Unreal without custom transparency shaders.

- Tools/products/workflows: SfM (e.g., COLMAP) → MeshSplatting → FBX/GLTF → Unity/Unreal; optional Blender for fine edits.

- Assumptions/dependencies: Static scenes; posed images from SfM; GPU training (~48 min on A100 per scene; faster than competitors); sufficient image coverage and consistent lighting; opaque surfaces (no translucency).

- Rapid physics prototyping with non‑convex colliders (Robotics, Gaming, Simulation)

- Use case: Drop scanned environments into physics-enabled engines to test robot navigation, gameplay interactions, or crowd dynamics using native mesh colliders.

- Tools/products/workflows: Unity non‑convex collider + MeshSplatting mesh; PhysX/Havok physics; scripted interaction tests.

- Assumptions/dependencies: Mesh connectedness and opacity support depth buffers/occlusion culling; physics realism depends on geometric accuracy and scale calibration.

- Interactive walkthroughs and VR tours (Real Estate, Cultural Heritage, Education)

- Use case: Create real-time, navigable experiences (with ray tracing support) of homes, museums, or labs from photos; deploy on consumer hardware (e.g., a MacBook M4).

- Tools/products/workflows: MeshSplatting → Unity/Unreal VR templates; WebXR for browser delivery; optional ray tracing.

- Assumptions/dependencies: Sufficient coverage and camera poses; comfort-level VR framerates (MeshSplatting reaches ~220 FPS HD on consumer hardware); static content.

- Object removal/extraction and scene editing (E-commerce, VFX, Content Creation)

- Use case: Remove or isolate objects using 2D masks mapped to triangles; quickly produce clean backgrounds or extracted sub-mesh assets for compositing.

- Tools/products/workflows: SAMv2 masks → per-pixel triangle mapping → sub-mesh extraction; Blender/Unreal for re-lighting or re-placement.

- Assumptions/dependencies: Accurate object masks; static scenes; opaque triangle coverage (one triangle per pixel simplifies selection); color fidelity via SH per-vertex.

- AR occlusion meshes and spatial anchors (XR/AR)

- Use case: Use opaque, connected meshes for occlusion and anchoring, improving realism of AR product placement and interactions in captured environments.

- Tools/products/workflows: MeshSplatting → ARKit/ARCore occlusion pipeline → anchor alignment; mobile streaming of compact assets (~100 MB).

- Assumptions/dependencies: Static background; scale alignment (SfM or additional calibration); mobile performance dependent on mesh size.

- Real-time marketing/visualization with ray tracing (Advertising, Design, Film Previs)

- Use case: Generate photorealistic renders of scanned spaces with standard ray tracers in engines (no special transparent shaders needed).

- Tools/products/workflows: MeshSplatting → Unreal Lumen/Path Tracer or Unity HDRP; rapid iteration on lighting and camera paths.

- Assumptions/dependencies: Material realism bounded by SH-based colors; PBR textures not yet captured (appearance is not fully decoupled).

- Synthetic dataset generation for perception and NVS research (Academia, Vision)

- Use case: Create controlled mesh-based datasets (with ground-truth camera poses) for evaluating novel view synthesis, mesh quality, and geometry regularization.

- Tools/products/workflows: MeshSplatting training + export of meshes, depths, normals; evaluation with PSNR/LPIPS/SSIM and Chamfer on DTU-like tasks.

- Assumptions/dependencies: Depth/normal supervision optional; can use self-supervised regularization; scale/shift alignment via Depth Anything v2 improves consistency.

- Web streaming of interactive scenes (Software, Media)

- Use case: Deploy lightweight, real-time interactive environments to browsers using WebGL/WebGPU due to compact meshes and standard rasterization.

- Tools/products/workflows: MeshSplatting → GLTF + Draco compression → WebGL/WebGPU viewer; CDN distribution.

- Assumptions/dependencies: Mesh size and triangle count tuned for bandwidth/latency; static environments for precomputed assets.
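The workflows above route meshes to FBX/GLTF. As a simpler illustration of exporting a vertex-colored mesh, the sketch below writes ASCII PLY, a format that tools such as Blender and MeshLab can import. Two points are assumptions: that vertex colors are stored as spherical-harmonic coefficients whose degree-0 (DC) term maps to RGB via the 3DGS convention rgb = 0.5 + C₀ · dc, and that dropping the higher SH bands is acceptable; doing so discards view-dependent effects.

```python
from typing import Sequence, Tuple

SH_C0 = 0.28209479177387814  # degree-0 spherical harmonic constant, 1 / (2 * sqrt(pi))


def sh_dc_to_rgb8(dc: Sequence[float]) -> Tuple[int, int, int]:
    """Map degree-0 SH coefficients to 8-bit RGB (assumed 3DGS convention)."""
    rgb = [min(max(0.5 + SH_C0 * v, 0.0), 1.0) for v in dc]
    return (round(rgb[0] * 255), round(rgb[1] * 255), round(rgb[2] * 255))


def to_ascii_ply(
    vertices: Sequence[Tuple[float, float, float]],
    sh_dc: Sequence[Sequence[float]],
    faces: Sequence[Tuple[int, int, int]],
) -> str:
    """Serialize a vertex-colored triangle mesh as ASCII PLY text."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(vertices)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        f"element face {len(faces)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    body = []
    for (x, y, z), dc in zip(vertices, sh_dc):
        r, g, b = sh_dc_to_rgb8(dc)  # higher-order (view-dependent) SH bands are dropped
        body.append(f"{x} {y} {z} {r} {g} {b}")
    body.extend(f"3 {i} {j} {k}" for i, j, k in faces)
    return "\n".join(header + body) + "\n"
```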

Long‑Term Applications

The following items are feasible with further research, scaling, or productization—particularly in geometry accuracy, appearance modeling (e.g., neural textures), dynamic scene support, and large-scale training/deployment.

- Mobile, on‑device, near‑real‑time scanning (XR/Consumer Software)

- Use case: Capture and convert spaces to meshes directly on phones/tablets for instant AR occlusion, decor planning, or VR previews.

- Tools/products/workflows: On-device SfM/SLAM → MeshSplatting with hardware acceleration → ARKit/ARCore integration.

- Assumptions/dependencies: Efficient training on mobile SoCs; incremental updates; robust camera tracking in low-light/clutter; dynamic scene handling.

- City‑scale digital twins and AR cloud (Smart Cities, Mapping)

- Use case: Build large outdoor environments (streets, campuses) for AR navigation, simulation, and urban planning with standard mesh pipelines.

- Tools/products/workflows: Distributed SfM + MeshSplatting tiling/streaming → cloud asset management; level-of-detail (LOD) schemes.

- Assumptions/dependencies: Scalable training and meshing; consistent geo-referencing and scale; handling of vegetation/translucent materials.

- High‑fidelity material capture via decoupled appearance (AEC, VFX, Product Design)

- Use case: Replace per-vertex SH with neural textures/PBR materials for realistic, editable appearance; enable relighting and material editing.

- Tools/products/workflows: MeshSplatting geometry + neural texture training; export as PBR maps; DCC integrations (Blender/Maya).

- Assumptions/dependencies: Joint optimization of geometry and appearance; capture of BRDFs; multi-view reflectance calibration; increased compute budgets.

- Measurement‑grade geometry for BIM and energy modeling (AEC, Energy)

- Use case: Generate accurate, watertight meshes of buildings for BIM integration, thermal/energy simulations, and refurbishment planning.

- Tools/products/workflows: Calibrated multi-sensor capture (RGB + depth/LiDAR) → constrained MeshSplatting → BIM import.

- Assumptions/dependencies: Metric accuracy and watertightness; material properties; standard compliance (IFC, gbXML); dynamic occlusions and reflective surfaces.

- Autonomous driving and robotics simulation at scale (Automotive, Robotics)

- Use case: Create large training/simulation worlds from real scenes, with physics and occlusion-correct meshes, improving transfer to reality.

- Tools/products/workflows: Fleet data → large-scale MeshSplatting → Unreal/Unity simulation; sensor simulation (lidar/radar/camera).

- Assumptions/dependencies: Handling of dynamic objects; multi-sensor synchronization; precise scale; temporal consistency.

- Industrial inspection and digital factory twins (Manufacturing)

- Use case: Scan factories/warehouses for safety assessment, workflow optimization, and robot training; deploy standardized mesh assets.

- Tools/products/workflows: Scheduled capture → MeshSplatting → simulation and layout planning; integration with MES/PLM systems.

- Assumptions/dependencies: High geometric accuracy; frequent re-scans for changes; integration with enterprise data pipelines.

- Medical facility planning and training (Healthcare)

- Use case: Build interactive, physics-aware meshes of operating rooms and clinics for procedure rehearsal and equipment placement planning.

- Tools/products/workflows: Controlled imaging → MeshSplatting → VR training modules; scenario simulation (flow, ergonomics).

- Assumptions/dependencies: Strict privacy/security; metric fidelity; support for dynamic human motion; compliance with healthcare IT standards.

- Standardization of neural‑mesh asset formats and benchmarks (Policy, Standards, Academia)

- Use case: Define interoperable formats and metrics for mesh-based neural reconstructions (fidelity, geometry accuracy, size, performance).

- Tools/products/workflows: Community benchmarks; GLTF/USD extensions; best-practice guidelines for training and deployment.

- Assumptions/dependencies: Cross‑vendor collaboration; evaluation datasets; privacy and IP considerations for scanned environments.

- Generative content pipelines mixing AI models and scanned assets (Media, Gaming)

- Use case: Blend generative models (textures, props) with MeshSplatting geometry to auto‑assemble playable levels or film sets.

- Tools/products/workflows: MeshSplatting → procedural generation → DCC edit → engine deploy; versioning and asset catalogs.

- Assumptions/dependencies: Style/semantic control; robust editing tools; QA automation for playability and performance.

- Marketplace for scanned, engine-ready environments (Software, Commerce)

- Use case: Create a catalog of real-world meshes (rooms, streets, venues) for immediate use in AR/VR, simulation, and media production.

- Tools/products/workflows: Capture → MeshSplatting → quality vetting → storefront with GLTF/FBX; licensing and updates.

- Assumptions/dependencies: Clear IP/licensing; scalability of processing; consistent quality standards; regional privacy regulations.
