AI & Human Co-Improvement for Safer Co-Superintelligence (2512.05356v1)

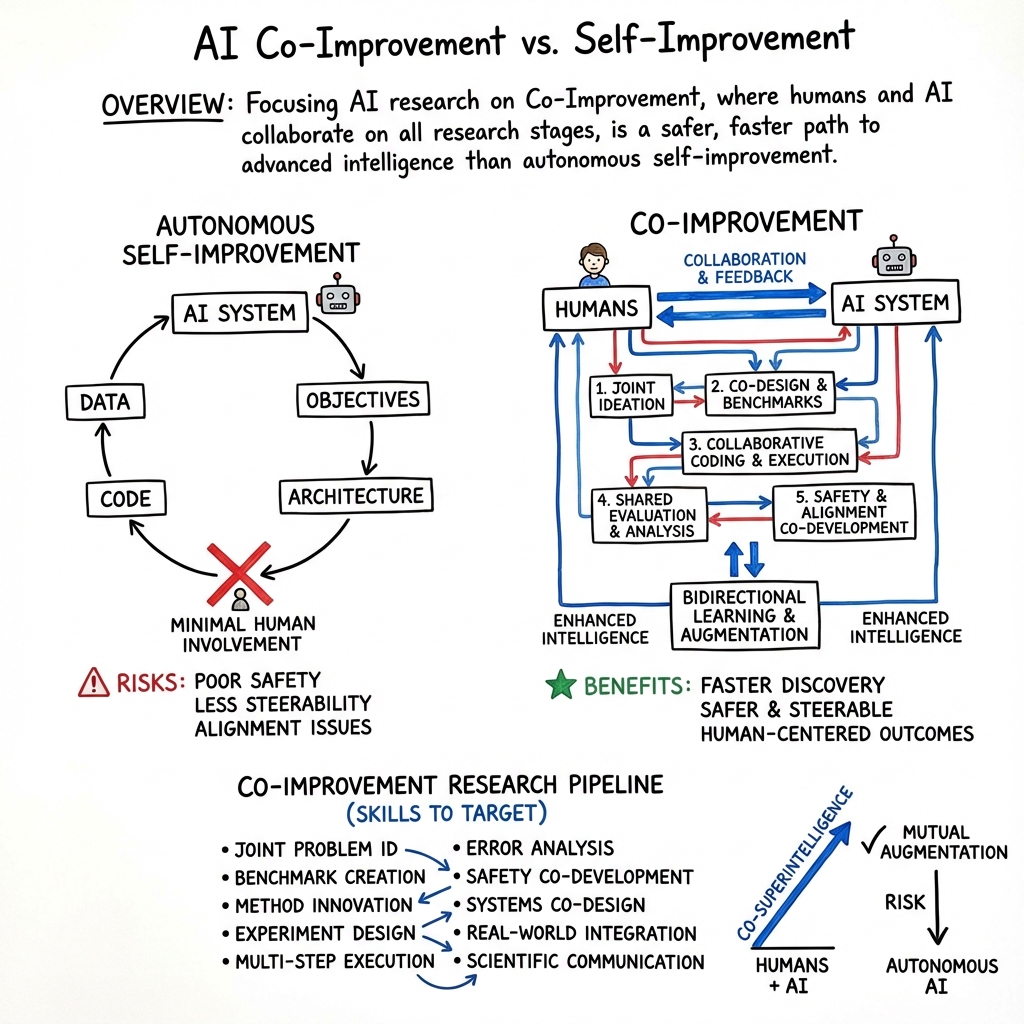

Abstract: Self-improvement is a goal currently exciting the field of AI, but is fraught with danger, and may take time to fully achieve. We advocate that a more achievable and better goal for humanity is to maximize co-improvement: collaboration between human researchers and AIs to achieve co-superintelligence. That is, specifically targeting improving AI systems' ability to work with human researchers to conduct AI research together, from ideation to experimentation, in order to both accelerate AI research and to generally endow both AIs and humans with safer superintelligence through their symbiosis. Focusing on including human research improvement in the loop will both get us there faster, and more safely.

Explain it Like I'm 14

What this paper is about (the big idea)

This paper argues that instead of trying to build AI that improves itself all alone, we should focus on building AI that improves together with people. The authors call this co-improvement: AI and humans teaming up to do research, make discoveries, and get better side by side. They believe this path will get us to very powerful, safer AI faster than going for fully “self-improving” AI right away.

What questions the paper asks

The paper centers on simple, practical questions:

- How can we design AI that works well with humans on research, from the first idea to the final experiment?

- Can human–AI teamwork make progress quicker and safer than fully autonomous AI?

- What skills should AI learn to be a great research partner, not just a solo problem-solver?

How the authors approach the problem

This is a position paper, which means it doesn’t run new experiments; it sets out a plan and reasons for a better approach. The authors compare two paths:

- Self-improvement: AI learns to improve itself on its own (changing its own data, rules, or even code), aiming to remove humans from the loop.

- Co-improvement: AI is built to collaborate with humans at every step of research, so both the AI and the humans get smarter together.

To make co-improvement real, they suggest creating “report cards” (benchmarks) and training goals for AI to practice the same tasks researchers do. Think of it like training a smart lab partner to help with:

- Picking good problems

- Designing fair tests

- Brainstorming methods and architectures

- Planning and running experiments

- Checking results and fixing mistakes

- Writing and explaining findings

- Building safer systems and deciding what to deploy in the real world

In everyday terms: instead of teaching a robot to run the lab alone, teach it to be your best co-researcher—someone who helps you think, build, test, and learn, while you steer and keep things aligned with human values.

What the paper finds and why it matters

Because this is a viewpoint paper, the “findings” are arguments and a roadmap, not measurements. The main takeaways are:

- Co-improvement can be faster than full autonomy: with humans in the loop, AI can learn research skills more directly (like coding, planning, and analyzing), and humans can steer efforts toward what matters most. This helps find “paradigm shifts” (big breakthroughs) sooner.

- Co-improvement is safer: fully self-improving AI could drift away from human goals or make choices we can’t easily review. In contrast, collaboration keeps humans involved in decisions, values, and safety checks.

- We can target the right skills: AI already helps write and code, but research involves much more. If we train and evaluate AI specifically on research-collaboration skills, those skills will improve faster, just as AI coding improved when we focused on it.

- Co-improvement builds toward “co-superintelligence”: the goal isn’t just super-smart AI; it’s super-strong teams. As AI and humans learn together, both become more capable. That creates systems that are not only powerful but also aligned with human needs.

- Openness helps science advance: the authors support sharing results and making research reproducible when it’s safe to do so. This speeds up learning for everyone while allowing careful limits when necessary to prevent misuse.

What this could change in the real world

If researchers and companies follow this plan:

- AI could become a trusted, transparent research partner that helps us invent safer, stronger systems.

- Humans would remain decision-makers—augmented by AI—rather than being pushed aside by fully autonomous systems.

- We might solve tough problems (in AI and beyond) faster by combining human judgment and creativity with AI’s speed and scale.

- Safety improves because the same AI that gains capability also helps us find and fix risks along the way.

In short

The paper’s message is simple: build AI that works with us, not without us. Train it to be an excellent teammate in research—one that helps pick problems, design tests, run experiments, and explain results—so we reach powerful, human-centered, and safer AI sooner.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The paper articulates a vision for human–AI co-improvement but leaves several critical aspects underspecified or untested. The following list highlights concrete gaps and open questions future researchers could address:

- Operational definitions are missing: specify measurable constructs for “co-improvement” and “co-superintelligence” (e.g., research quality, pace, safety, human cognitive augmentation) and how to quantify them.

- Benchmarking collaboration is not defined: design standardized, multi-stage benchmarks for AI–human research tasks (problem selection, ideation, experiment design, execution, error analysis), including task specs, datasets, scoring rubrics, and baselines.

- Attribution of contributions is unclear: develop experimental designs (e.g., randomized controlled trials, crossover studies) to isolate and quantify AI vs. human causal contributions to research outcomes.

- No empirical evidence that co-improvement is faster/safer: run controlled comparisons against self-improvement agents on capability growth, safety incidents, and misalignment risk, with pre-registered metrics and protocols.

- “Research quality” is undefined: operationalize and automate measurements of novelty, correctness, replicability, impact, and ethical soundness to prevent artifact-centric optimization (e.g., paper-milling).

- Safety and alignment mechanisms lack specifics: detail methods for co-developing values, constitutions, constraints, and their verification (e.g., formal guarantees, scalable oversight, conflict resolution procedures).

- Reliability of self-judgment tools is unaddressed: evaluate LLM-as-a-judge and self-reward signals for bias, calibration, uncertainty, and failure under distribution shifts; define meta-evaluation and abstention policies.

- Group dynamics risks are untreated: model and mitigate manipulation, social engineering, agenda steering, collusion, polarization, and conformity in multi-human/multi-AI teams.

- Ideation diversity is not enforced: specify mechanisms to elicit and preserve diverse hypotheses (e.g., structured debate, portfolio search, adversarial idea generation, ideation de-correlation metrics).

- Human skill decay and dependence are unexamined: measure long-term effects of cognitive offloading on human expertise; design interventions that maintain or enhance human skills.

- Governance and “managed openness” lack concrete policies: define capability hazard assessments, staged release criteria, red-teaming protocols, disclosure norms, and coordination mechanisms across labs and jurisdictions.

- Data pipeline for collaboration skills is unspecified: identify sources, collection protocols, and privacy/IP safeguards for training data that teach ideation, critique, experiment planning, and scientific writing.

- Incentive design is missing: propose reward functions and evaluation signals that prioritize correctness, novelty, safety, and reproducibility over speed or quantity of outputs.

- Integration with tooling is underspecified: provide reference architectures for experiment tracking, version control, dataset/provenance management, CI/CD, and safe code execution in co-research workflows.

- Formal theory of collaborative search efficiency is absent: develop models and bounds for sample/computation efficiency gains from human–AI collaboration vs. autonomous search; identify regimes where collaboration dominates.

- Robustness and generalization are not tested: assess whether collaboration skills transfer across domains, modalities, cultures/languages, and adversarial/OOD scenarios.

- Multi-party protocols are missing: design scalable mechanisms for multi-human and multi-AI collaboration (consensus algorithms, argumentation frameworks, computational social choice, aggregation with diversity guarantees).

- Tool-use and self-modification guardrails lack detail: specify verification, sandboxing, code provenance, supply-chain security, and rollback policies for AI-assisted architecture/code changes.

- Quantifying bidirectional improvement is undefined: create instruments and longitudinal studies to measure how AI improves human cognition/learning and how humans improve AI across iterations.

- Ethical and legal questions are unresolved: clarify authorship, IP ownership, accountability, liability, and compliance for co-generated research artifacts and decisions.

- Value pluralism handling is unspecified: develop procedures to reconcile heterogeneous stakeholder values, incorporate democratic input, and avoid cultural bias in co-developed constitutions.

- Continuous monitoring is not described: build real-time oversight systems for co-research loops to detect reward hacking, emergent misalignment, and malicious code or backdoors.

- Trust calibration is unaddressed: implement uncertainty quantification, confidence reporting, selective abstention, and escalation pathways for high-stakes decisions in collaboration.

- Interoperability is not covered: define shared ontologies, schemas, APIs, and knowledge graph formats for cross-system co-research and reproducible exchange of artifacts.

- Compute and sustainability trade-offs are unknown: analyze resource demands and environmental impacts of co-improvement vs. self-improvement pathways; propose efficiency targets.

- Human-in-the-loop scalability lacks a plan: design triage, active learning, interface ergonomics, and attention allocation strategies to use human time efficiently without overload.

- Roadmap and milestones are absent: articulate a staged transition plan from today’s assistance (coding/writing) to end-to-end co-research, with concrete milestones and evaluation gates.

- Failure analysis and learning capture are unspecified: standardize postmortem templates, error taxonomies, and knowledge management practices to retain and leverage learnings from co-research failures.

- Cross-disciplinary expansion is not detailed: propose domain-specific adaptations for extending co-improvement beyond AI into fields requiring physical experiments or regulated data.

- Comparative cost-effectiveness is unknown: run ablations and ROI analyses to determine when investment in collaboration skills yields greater gains than scaling base model capabilities alone.
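Two of the gaps above, the reliability of LLM-as-a-judge signals and trust calibration with selective abstention, can be made concrete with a small check. The sketch below assumes a judge that reports a confidence that an output is correct, plus a human audit of the same outputs; it computes expected calibration error (ECE), a standard binned calibration metric, and routes low-confidence verdicts to a human. The `JudgeVerdict` record, the function names, and the 0.8 threshold are hypothetical illustrations, not anything the paper specifies.

```python
from dataclasses import dataclass


@dataclass
class JudgeVerdict:
    # Hypothetical record: the judge's confidence that an output is correct,
    # and whether a human auditor later confirmed that it was correct.
    confidence: float
    human_says_correct: bool


def expected_calibration_error(verdicts, n_bins=10):
    """Weighted average gap between mean confidence and audited accuracy per bin."""
    if not verdicts:
        raise ValueError("need at least one verdict")
    bins = [[] for _ in range(n_bins)]
    for v in verdicts:
        # Confidence 1.0 goes into the last bin instead of overflowing.
        idx = min(int(v.confidence * n_bins), n_bins - 1)
        bins[idx].append(v)
    total = len(verdicts)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        mean_conf = sum(v.confidence for v in b) / len(b)
        accuracy = sum(v.human_says_correct for v in b) / len(b)
        ece += (len(b) / total) * abs(mean_conf - accuracy)
    return ece


def route(confidence, threshold=0.8):
    """Selective abstention: accept confident verdicts, escalate the rest to a human."""
    return "accept" if confidence >= threshold else "escalate_to_human"
```

A judge that reports 0.95 confidence but is right only half the time scores an ECE near 0.45, a sign that its confidences should not set abstention thresholds until they are recalibrated against human audits.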

Glossary

- Ablations: Controlled experiments that remove or vary components to assess their impact on results. "proposed ablations"

- AGI: Artificial General Intelligence; a hypothetical AI with broad, human-level capabilities across diverse tasks. "AGI/SI"

- AI research agents: Autonomous AI systems designed to conduct parts of the research workflow (e.g., ideation, implementation, evaluation). "autonomous AI research agents"

- Alignment: Ensuring an AI system’s objectives and behavior are consistent with human values and intentions. "value alignment"

- Bidirectional collaboration: A two-way process where humans and AI mutually enhance each other’s capabilities over time. "bidirectional collaboration"

- Chain-of-thought (CoT): Explicit intermediate reasoning steps used in prompting/training to improve problem-solving. "training chain-of-thought"

- Co-improvement: A paradigm focused on human-AI collaboration to jointly advance research quality, capabilities, and safety. "co-improvement can provide:"

- Co-superintelligence: A state in which humans and AI collectively achieve superior intelligence through collaboration. "co-superintelligence"

- Collective intelligence: Aggregated reasoning and decision-making from multi-human and AI collaboration. "Collective intelligence & group research"

- Compiler transforms: Automated code transformations performed by compilers; here, potential targets of AI self-modification. "compiler transforms"

- Constitutional AI: Training approach where a model follows a written “constitution” of principles to guide behavior and safety. "Constitutional AI"

- Desiderata: The set of desired properties and criteria that define success for a benchmark or method. "define desiderata"

- End-to-end AI scientist approaches: Fully automated pipelines where AI carries out the entire research process without human intervention. "end-to-end AI scientist approaches"

- Goal misspecification: Defining an AI’s objectives incorrectly or incompletely, causing unintended or harmful behavior. "goal misspecification"

- Gödel Machine: A theoretical self-referential system that can optimally rewrite itself upon proving improvements. "Gödel Machine"

- Jailbreaking: Inducing a model to bypass its safety constraints and produce restricted or unsafe outputs. "jailbreaking"

- LLM-as-a-Judge: Using an LLM to evaluate or score other models’ outputs. "LLM-as-a-Judge"

- Managed openness: Controlled dissemination of research to balance transparency, verification, and risk mitigation. "managed openness"

- Misalignment: A mismatch between an AI’s learned objectives or behavior and human values or intentions. "misalignment"

- Neural architecture search (NAS): Automated methods to design or optimize neural network architectures. "Neural arch. search"

- Out-of-distribution generalization: A model’s ability to perform well on data that differs from its training distribution. "out-of-distribution generalization"

- Reward hacking: Exploiting flaws in the reward function to achieve high reward via unintended behavior. "reward hacking"

- Reward model: A learned model that assigns scores or rewards to outputs to guide training. "reward model"

- RLAIF: Reinforcement Learning from AI Feedback, using AI-generated feedback for training. "RLAIF"

- RLHF: Reinforcement Learning from Human Feedback, aligning models via human-provided preferences or ratings. "RLHF training"

- RLVR: Reinforcement Learning from Verifiable Rewards; training using rewards derived from objectively checkable outcomes. "RLVR for training chain-of-thought"

- Self-challenging: Models generate tasks or adversarial problems to push their own capabilities. "Self-Challenging Agents"

- Self-evaluation: Models generate and apply their own feedback or reward signals to improve. "Self-evaluation / Self-reward"

- Self-improvement: Autonomous improvement across parameters, data, objectives, architecture, and code. "self-improving AI"

- Self-Instruct: A method where models generate their own instructions to improve alignment and task performance. "(CoT-)-Self-Instruct"

- Self-modification: An AI system changing its own architecture, code, or learning algorithm. "Algorithm or architecture self-modification"

- Self-play: Training by playing against oneself or one’s variants to improve performance. "Self-play"

- Self-refinement: Iteratively revising and improving model outputs via model-generated feedback. "Self-refinement"

- Self-Rewarding: Models create their own reward signals to guide learning without external labels. "Self-Rewarding"

- Self-training: Learning from the model’s own generated outputs or action traces. "Self-training on own generations or actions"

- Synthetic data creation: Generating artificial training data to improve model performance and coverage. "synthetic data creation"

- Verifiable reasoning tasks: Problems whose solutions can be checked objectively, used for training/evaluating reasoning. "verifiable reasoning tasks"
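The RLVR and verifiable-reasoning entries describe rewards derived from objectively checkable outcomes. A minimal sketch, assuming a math task whose answer is a single number: the reward is 1.0 only when the last number in a model's completion equals the reference. The function names are hypothetical, and real RLVR pipelines use more careful answer parsing than this regex.

```python
import re
from fractions import Fraction


def extract_final_answer(completion: str):
    """Return the last number in the completion, or None if there is none.

    Assumption: the answer is a single integer, decimal, or simple fraction.
    """
    matches = re.findall(r"-?\d+(?:\.\d+)?(?:/\d+)?", completion)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the final answer equals the reference as an exact rational."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        # Fraction compares exactly, so "0.5" and "1/2" count as the same answer.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0  # unparseable or degenerate answers earn no reward
```

Because the check is a deterministic comparison rather than a learned reward model's score, it needs no human labels at training time. A parser this naive is still a reward-hacking surface: any completion that happens to end with the right number gets full credit regardless of its reasoning.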

Practical Applications

Practical Applications Derived from the Paper’s Co-Improvement Paradigm

The paper advocates building AI systems expressly designed to collaborate with humans across the full research pipeline (problem identification, benchmarking, method innovation, experiment design and execution, evaluation, safety and alignment, systems co-design, scientific communication, collective intelligence). The following applications translate this position into concrete tools, products, and workflows across sectors.

Immediate Applications

These can be piloted or deployed with current-generation AI systems, provided human-in-the-loop governance and standard MLOps practices are in place.

- AI Research Co-Pilot for ML/AI R&D (academia, software/AI industry)

- What: An end-to-end assistant that co-ideates research directions, drafts benchmark desiderata, proposes ablations, plans experiments, writes and reviews code, and performs error analysis.

- Tools/Workflows: “Co-Research Hub” integrating notebooks (e.g., Jupyter), experiment trackers (e.g., Weights & Biases, MLflow), LLM-based proposal reviewers, and automated ablation planners.

- Assumptions/Dependencies: Access to foundation models/APIs, reproducible pipelines, data governance, human oversight for validation and safety.

- Benchmark and Dataset Co-Construction Assistant (academia, education, robotics)

- What: AI helps define task desiderata, generate synthetic tasks (Self-Instruct/CoT variants), curate and refine benchmarks, and calibrate LLM-as-a-judge evaluations.

- Tools/Workflows: “Benchmark Studio” with synthetic data generators, evaluator chaining (LLM-as-a-judge + inter-model competition), and human QC panels.

- Assumptions/Dependencies: Reliable evaluators, bias detection, domain expert review, clear metrics; risk of synthetic task overfitting mitigated by human validation.

- Safety and Alignment Co-Design Workbench (AI safety teams, compliance in finance/healthcare)

- What: Collaborative constitution drafting, structured red-teaming, RLAIF/RLVR loop setup, value elicitation and constraint testing across the research cycle.

- Tools/Workflows: “Constitution Lab” for policy authoring, multi-agent debate frameworks, red-team harnesses, risk dashboards.

- Assumptions/Dependencies: Organizational safety policies, audit trails, guardrails against reward hacking and hallucination; alignment norms defined by stakeholders.

- Collaborative Experiment Planning and Ablation Designer (academia, industrial R&D)

- What: Co-design of experimental protocols, resource-aware scheduling, ablation matrices, result interpretation, and iterative refinement.

- Tools/Workflows: “Experiment Planner” integrating SLURM/Kubernetes schedulers, data versioning, sample size calculators, and auto-generated preregistration templates.

- Assumptions/Dependencies: Integration with compute and lab tools, reproducibility standards (data lineage, seeds), human sign-off.

- Multi-Human + AI Collective Intelligence Facilitation (product teams, research groups, policy think tanks)

- What: Structured ideation, argument mapping, automated synthesis of competing viewpoints into consensus and action items.

- Tools/Workflows: “Consensus Synthesizer” supporting structured debates, argument trees, and decision rationales.

- Assumptions/Dependencies: Privacy-preserving collaboration, bias controls, moderator oversight; clear decision rights.

- Scientific Communication Assistant (academia, industry)

- What: Joint drafting of papers, documentation, figures, and result narratives; consistency checks across text, tables, and plots.

- Tools/Workflows: “Doc+Figure Co-Author” with citation validation, figure-generation prompts, and reproducibility checklists.

- Assumptions/Dependencies: Citation accuracy, plagiarism detection, adherence to venue/style guidelines.

- Systems and Infrastructure Co-Design (MLOps, software engineering)

- What: Co-architecture of pipelines, configurations, performance optimizations (e.g., memory-management techniques such as PagedAttention), and reproducibility improvements.

- Tools/Workflows: “Pipeline Architect” with configuration recommender, profiling/telemetry integration, and reproducible builds.

- Assumptions/Dependencies: Access to infrastructure telemetry, CI/CD integration, secure change management.

- Research-to-Product Integration Advisor (software, robotics prototypes)

- What: Translating research artifacts into deployable systems, defining monitoring and rollback plans, collecting post-deployment feedback to inform new research cycles.

- Tools/Workflows: “Research-to-Prod Coach” with evidence-to-decision traces, safety checklists, and user feedback triage.

- Assumptions/Dependencies: Risk assessments, runtime monitoring, incident response; domain-specific compliance (e.g., ISO 10218 safety requirements for industrial robots).

- Evaluation at Scale via AI (model development across sectors)

- What: Use LLM-as-a-judge and inter-model competition (e.g., ZeroSumEval) to triage errors, analyze failure modes, and prioritize fixes.

- Tools/Workflows: “Eval Arena” with judge calibration, adjudication workflows, and human auditing.

- Assumptions/Dependencies: Reliability and calibration of evaluators, adversarial test design, periodic human audits; avoid evaluator drift.

- Team/Classroom Co-Facilitator (daily life, education)

- What: Meeting moderation, structured brainstorming, action-item synthesis, and learning reinforcement.

- Tools/Workflows: “Meeting Co-Facilitator” integrated with calendars, note-taking, and task management.

- Assumptions/Dependencies: Consent and data security, organizational adoption, transparency of AI suggestions.
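The “Collaborative Experiment Planning and Ablation Designer” entry mentions ablation matrices and reproducibility through seeds. A minimal sketch of a one-factor-at-a-time ablation plan, in which each run changes exactly one setting from a baseline and every configuration is repeated across seeds. The function name and config keys are hypothetical; a real planner would also budget compute and submit runs to a scheduler.

```python
import itertools


def ablation_matrix(baseline: dict, variants: dict, seeds=(0, 1, 2)):
    """Build a one-factor-at-a-time ablation plan.

    baseline: the reference configuration.
    variants: for each factor, the alternative values to try.
    Each non-baseline run differs from the baseline in exactly one factor,
    and every configuration is repeated once per seed.
    """
    configs = [("baseline", dict(baseline))]
    for factor, values in variants.items():
        if factor not in baseline:
            raise KeyError(f"unknown factor: {factor}")
        for value in values:
            if value == baseline[factor]:
                continue  # identical to the baseline, so not an ablation
            cfg = dict(baseline)
            cfg[factor] = value
            configs.append((f"{factor}={value}", cfg))
    return [
        {"name": name, "seed": seed, **cfg}
        for (name, cfg), seed in itertools.product(configs, seeds)
    ]


# Usage: two factors, three seeds.
baseline = {"lr": 3e-4, "dropout": 0.1, "layers": 12}
variants = {"dropout": [0.0, 0.1], "layers": [6]}
runs = ablation_matrix(baseline, variants)
```

Here the plan has three configurations (baseline, `dropout=0.0`, `layers=6`), giving nine runs. One-factor-at-a-time keeps the run count linear in the number of variants; a full factorial grid over the same factors would grow multiplicatively but would also reveal interactions between factors.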

Long-Term Applications

These require advances in model capabilities, benchmarking, safety guarantees, and deeper integration into physical and social systems.

- Co-Superintelligence for Provably Safe AI (AI safety and theory)

- What: AI systems collaborating with human researchers to produce formal proofs, safety guarantees, and robust alignment methods at scale.

- Tools/Workflows: “ML Theory Partner” for interactive theorem proving (linking LLMs with proof assistants) and formal verification of training algorithms.

- Assumptions/Dependencies: Significant advances in reasoning, formal methods integration, trusted verification pipelines.

- Cross-Domain Co-Research Platforms (healthcare, materials/energy, hardware design)

- What: AI-human platforms that autonomously propose experiments, design assays, and iterate on hypotheses in medicine and materials, under human oversight.

- Tools/Workflows: “Co-Lab for Materials/Drug Discovery” with wet-lab robotics integration, simulation environments, and data-driven hypothesis managers.

- Assumptions/Dependencies: High-fidelity simulators, lab automation, curated domain data, regulatory approvals (FDA/EMA), rigorous safety protocols.

- Civic Deliberation and Policy Co-Design (public policy, urban planning, governance)

- What: Large-scale multi-human+AI platforms to synthesize diverse viewpoints, structure debates, and produce accountable policy proposals.

- Tools/Workflows: “Civic Co-Deliberation Network” with transparency dashboards, bias audits, and public participation mechanisms.

- Assumptions/Dependencies: Governance legitimacy, inclusivity safeguards, misuse prevention, legal frameworks for AI-mediated policy.

- Managed Openness Standards and Toolkits (policy, academia)

- What: Operationalizing “managed openness” to balance scientific sharing with misuse prevention in AI and other sensitive domains.

- Tools/Workflows: “Risk-Gated Release Manager” controlling staged disclosure, capability red-teaming, and community verification.

- Assumptions/Dependencies: International coordination, shared risk taxonomies, incentive alignment among labs and regulators.

- Education: Co-Superintelligent Tutors for Research Literacy (EdTech)

- What: AI tutors that scaffold research skills—problem formulation, experimental design, evaluation, replication—and foster collaboration competencies.

- Tools/Workflows: “Research Studio for Students” with progressive curricula, debate-based learning, and portfolio-based assessment.

- Assumptions/Dependencies: Curriculum integration, measurement of learning gains, accessibility and equity, teacher professional development.

- Robotics and Autonomy Co-Design (robotics, autonomous vehicles)

- What: Human-in-the-loop safety co-design using self-play, simulation-based stress testing, and risk-sensitive planning.

- Tools/Workflows: “Safety Co-Designer” combining adversarial simulation, interpretability, and policy constraints for autonomy stacks.

- Assumptions/Dependencies: Robust simulators, scenario coverage, certifiable safety frameworks, regulatory acceptance.

- Finance: Model Risk Governance Co-Pilot (banking, insurance, fintech)

- What: AI-human co-governance of model lifecycle (development, validation, monitoring) with transparent decision logs and stress testing.

- Tools/Workflows: “Model Governance Co-Pilot” integrating explainability, bias/fairness checks, and regulatory reporting.

- Assumptions/Dependencies: Compliance alignment (e.g., SR 11-7), explainability sufficiency, secure data handling.

- Software: Guardrailed Self-Modification and Architecture Co-Design (software engineering)

- What: Systems that propose and validate architecture changes and code rewrites with interpretability and safety constraints.

- Tools/Workflows: “Refactor+Architect Co-Designer” with proof-of-correctness where possible, automated regression suites, rollback plans.

- Assumptions/Dependencies: Verifiable transformations, robust testing, human approval workflows; prevention of unsafe modifications.

- Inter-Lab Collective Intelligence Commons (science ecosystem)

- What: Cross-institutional knowledge synthesis networks for research ideation, evaluation, and replication, mediated by AI facilitators.

- Tools/Workflows: “Inter-Lab Commons” with interoperable data sharing, debate synthesis, and benchmark registries.

- Assumptions/Dependencies: Data interoperability standards, IP agreements, privacy protections, incentives for participation.

- Human Cognitive Augmentation for Scientists (daily life, academia)

- What: Personal research agents that enhance memory, reasoning, and meta-research skills, enabling individuals to reach co-superintelligence with AI.

- Tools/Workflows: Wearable/ambient AI, personal knowledge bases, longitudinal learning plans, reflective practice coaches.

- Assumptions/Dependencies: Trustworthy on-device AI, privacy-preserving data ownership, long-term safety and wellbeing metrics.
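The “Guardrailed Self-Modification” entry pairs automated regression suites with human approval workflows and rollback plans. A minimal sketch of that gate for a single function, assuming regression tests are (arguments, expected result) pairs: the AI-proposed candidate replaces the current version only if every test passes and a human reviewer signs off; otherwise the current version is kept, which acts as the rollback. All names are hypothetical, and this is not a sandbox: the candidate runs in-process.

```python
from typing import Callable, List, Tuple

# Hypothetical test format: (positional arguments, expected return value).
RegressionTest = Tuple[tuple, object]


def gated_replace(current: Callable, candidate: Callable,
                  regression_suite: List[RegressionTest],
                  human_approves: Callable[[str], bool]) -> Callable:
    """Adopt an AI-proposed candidate only if it passes every regression test
    and a human reviewer approves; otherwise keep the current version (rollback)."""
    failures = []
    for args, expected in regression_suite:
        try:
            got = candidate(*args)
        except Exception as exc:  # a crash counts as a failed test
            failures.append(f"{args!r}: raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"{args!r}: expected {expected!r}, got {got!r}")
    if failures:
        return current  # automatic rollback: the candidate is never adopted
    n = len(regression_suite)
    summary = f"candidate passed {n}/{n} regression tests"
    # Human sign-off is the final gate, even after all tests pass.
    return candidate if human_approves(summary) else current


# Usage: an AI proposes a mean that no longer crashes on empty input.
def current_mean(xs):
    return sum(xs) / len(xs)


def candidate_mean(xs):
    return sum(xs) / max(len(xs), 1)


def buggy_mean(xs):
    return sum(xs) // len(xs)  # integer division silently truncates


suite = [(([1, 2, 3],), 2.0), (([1, 2],), 1.5)]
adopted = gated_replace(current_mean, candidate_mean, suite,
                        human_approves=lambda s: True)
```

With this suite, `buggy_mean` passes the first test but fails the second (it returns 1 instead of 1.5), so the gate keeps `current_mean` without ever asking the reviewer.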

Cross-Cutting Assumptions and Dependencies

- Availability of capable foundation models and safe fine-tuning methods (RLAIF, Constitutional AI, RLVR).

- New benchmarks and metrics to measure “research collaboration skill” and collective intelligence quality.

- Strong human-in-the-loop governance, auditability, and reproducibility standards.

- Security, privacy, and responsible data-sharing frameworks; managed openness where capabilities are sensitive.

- Organizational buy-in, clear roles and decision rights, and training for effective AI-human collaboration.

- Regulatory compliance for domain-specific deployments (healthcare, finance, robotics, public policy).
