Radiance Meshes for Volumetric Reconstruction (2512.04076v1)

Abstract: We introduce radiance meshes, a technique for representing radiance fields with constant density tetrahedral cells produced with a Delaunay tetrahedralization. Unlike a Voronoi diagram, a Delaunay tetrahedralization yields simple triangles that are natively supported by existing hardware. As such, our model is able to perform exact and fast volume rendering using both rasterization and ray-tracing. We introduce a new rasterization method that achieves faster rendering speeds than all prior radiance field representations (assuming an equivalent number of primitives and resolution) across a variety of platforms. Optimizing the positions of Delaunay vertices introduces topological discontinuities (edge flips). To solve this, we use a Zip-NeRF-style backbone which allows us to express a smoothly varying field even when the topology changes. Our rendering method exactly evaluates the volume rendering equation and enables high quality, real-time view synthesis on standard consumer hardware. Our tetrahedral meshes also lend themselves to a variety of exciting applications including fisheye lens distortion, physics-based simulation, editing, and mesh extraction.

Explain it Like I'm 14

Overview

This paper introduces “radiance meshes,” a new way to build and render 3D scenes from photos. Instead of using millions of tiny blobs (like in 3D Gaussian Splatting) or complex shapes (like Voronoi cells), the scene is filled with simple 3D building blocks called tetrahedra (triangular pyramids). Each tetrahedron has a controlled amount of “fog” (density) and a color that changes smoothly inside it. Because tetrahedra are made of triangles, modern graphics cards can render them extremely fast and accurately.

Objectives

The paper aims to solve a long-standing trade‑off in 3D scene methods:

- Make rendering fast and compatible with existing graphics hardware (like the triangle-based GPU pipeline).

- Keep the model easy to train and stable (so it learns from photos without glitches).

- Avoid visual problems like “popping” (sudden flickers when the scene changes slightly).

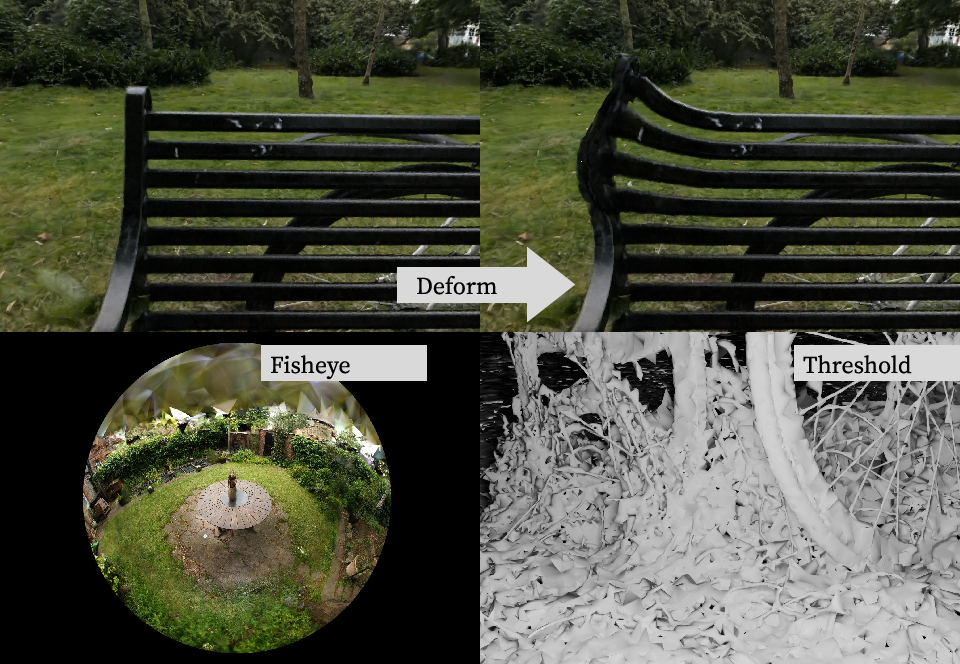

- Support tricky cameras (like fisheye lenses), editing, physics, and extracting standard surface meshes.

Approach

What is a radiance mesh?

Imagine filling the space of a scene with lots of small, see‑through triangular pyramids (tetrahedra). Each one:

- Has a constant fogginess (density), which controls how transparent it is.

- Has color that changes smoothly from one side to another (a linear color gradient), so it can represent fine details.

These tetrahedra are created by Delaunay tetrahedralization: a standard method that connects nearby points to make non-overlapping tetrahedra.
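The defining rule of a Delaunay tetrahedralization is the empty-circumsphere property: no input point lies strictly inside the sphere through the four corners of any tetrahedron. The following is a minimal pure-Python sketch of that check, not the paper's implementation; the helper names `det3`, `circumsphere`, and `violates_delaunay` are hypothetical and chosen only for illustration.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def circumsphere(p0, p1, p2, p3):
    """Return (center, radius_squared) of the sphere through four points.

    The center c satisfies 2 (p_i - p0) . c = |p_i|^2 - |p0|^2 for i = 1..3,
    a 3x3 linear system solved here with Cramer's rule.
    """
    others = (p1, p2, p3)
    rows = [[2.0 * (p[j] - p0[j]) for j in range(3)] for p in others]
    rhs = [sum(x * x for x in p) - sum(x * x for x in p0) for p in others]
    d = det3(rows)
    if abs(d) < 1e-12:
        raise ValueError("degenerate (flat) tetrahedron")
    center = []
    for col in range(3):
        m = [row[:] for row in rows]
        for r in range(3):
            m[r][col] = rhs[r]          # replace one column by the right-hand side
        center.append(det3(m) / d)
    r2 = sum((center[j] - p0[j]) ** 2 for j in range(3))
    return center, r2


def violates_delaunay(tet, point, eps=1e-9):
    """True if `point` lies strictly inside the circumsphere of `tet`."""
    center, r2 = circumsphere(*tet)
    d2 = sum((point[j] - center[j]) ** 2 for j in range(3))
    return d2 < r2 - eps


tet = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
inside = violates_delaunay(tet, (0.5, 0.5, 0.5))    # point at the sphere center
outside = violates_delaunay(tet, (2.0, 2.0, 2.0))   # point far from the sphere
```

A production pipeline would rely on a robust geometry library rather than floating-point Cramer's rule, which loses accuracy for nearly flat tetrahedra.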

How does rendering work?

Think of a pixel as a laser beam (“ray”) that goes through several tetrahedra:

- For each tetrahedron the ray enters, we compute exactly where it enters and exits.

- We then integrate (sum up) how much color and fog the ray picks up along that short segment. This is exact math, not an approximation.

- To make it fast, the tetrahedra are sorted into an exact front‑to‑back order using a simple trick involving their “circumsphere” (an imaginary bubble that passes through all four corners of each tetrahedron). That sorting works even for fisheye lenses.

- Because every face of a tetrahedron is a triangle, the GPU’s triangle rasterizer can render them at high speed on many platforms (desktop, web, and mobile).
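The entry/exit computation and the exact per-segment integration described in the steps above can be sketched as follows. This is an illustrative CPU version, assuming emission-only rendering with constant density `sigma` and a color that varies linearly along the ray from `c_in` to `c_out`; the closed form used here is the standard result for that setting and is not claimed to match the paper's equations term for term. The function names are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_tet_interval(origin, direction, tet):
    """Clip a ray against the four face planes (Cyrus-Beck style).

    Returns (t_in, t_out), or None if the ray misses the tetrahedron.
    """
    t_in, t_out = 0.0, math.inf
    for i in range(4):
        a, b, c = (tet[j] for j in range(4) if j != i)
        n = cross(sub(b, a), sub(c, a))
        if dot(n, sub(tet[i], a)) > 0:      # flip so n points away from the opposite vertex
            n = tuple(-x for x in n)
        denom = dot(n, direction)
        num = dot(n, sub(a, origin))
        if abs(denom) < 1e-12:              # ray parallel to this face plane
            if num < 0:                     # origin is on the outside of the plane
                return None
            continue
        t = num / denom
        if denom < 0:
            t_in = max(t_in, t)             # entering half-space
        else:
            t_out = min(t_out, t)           # leaving half-space
    return (t_in, t_out) if t_in < t_out else None


def integrate_segment(sigma, length, c_in, c_out):
    """Exact integral of sigma * exp(-sigma t) * c(t) over [0, length].

    With tau = sigma * length and alpha = 1 - exp(-tau):
      weight on c_out = alpha / tau - exp(-tau)
      weight on c_in  = alpha - (weight on c_out)
    Returns (premultiplied_color, alpha).
    """
    tau = sigma * length
    if tau < 1e-6:                          # series limit avoids dividing by ~0
        alpha, w_out = tau, 0.5 * tau
    else:
        trans = math.exp(-tau)
        alpha = -math.expm1(-tau)
        w_out = alpha / tau - trans
    w_in = alpha - w_out
    color = tuple(w_in * a + w_out * b for a, b in zip(c_in, c_out))
    return color, alpha


tet = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
t_in, t_out = ray_tet_interval((0.2, 0.2, -1.0), (0.0, 0.0, 1.0), tet)
color, alpha = integrate_segment(2.0, t_out - t_in, (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

In the limits, a very dense segment returns `c_in` with `alpha` near 1, and a very thin segment returns roughly `tau` times the average of the two end colors, which is a quick sanity check on the weights.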

How do they keep training smooth when the mesh changes?

As points move during training, the tetrahedra can flip and change (called “edge flips”), which usually causes training problems. Instead of storing colors on vertices, the paper queries a smooth grid (Instant‑NGP, a fast learned field) at the center of each tetrahedron to get its properties (density, base color, and gradient). This avoids glitches when the mesh topology changes.

How do they add more detail?

Sometimes a tetrahedron covers too much space and misses fine details. The method adds new points (densification) inside tetrahedra that cause the biggest image errors. It uses:

- An image‑error score (based on SSIM, a measure of visual similarity) to back‑project errors into the tetrahedra that caused them.

- A variance score to catch thin, low‑density structures that would otherwise be ignored early on. The new point is placed near where two high‑error viewing rays overlap, so it lands where detail is actually needed.
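One plausible way to place a point "where two viewing rays overlap" is the midpoint of the shortest segment between the two rays. The sketch below shows that standard construction; it is an assumption about the placement rule, not the paper's exact procedure, and the name `closest_point_between_rays` is hypothetical. It returns `None` for near-parallel rays or when the closest approach lies behind either ray origin.

```python
def closest_point_between_rays(o1, d1, o2, d2, eps=1e-9):
    """Midpoint of the shortest segment joining rays o1 + s*d1 and o2 + t*d2."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = tuple(x - y for x, y in zip(o1, o2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b               # zero when the rays are parallel
    if denom <= eps * a * c:
        return None                     # near-parallel: no well-defined crossing
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    if s < 0 or t < 0:
        return None                     # closest approach is behind a ray origin
    p1 = tuple(o + s * v for o, v in zip(o1, d1))
    p2 = tuple(o + t * v for o, v in zip(o2, d2))
    return tuple(0.5 * (x + y) for x, y in zip(p1, p2))


point = closest_point_between_rays(
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
    (1.0, -1.0, 0.2), (0.0, 1.0, 0.0),
)
parallel = closest_point_between_rays(
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0), (1.0, 0.0, 0.0),
)
```

Returning `None` leaves the fallback choice (for example, skipping the split or using a point inside the tetrahedron) to the caller.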

How is it made fast on GPUs?

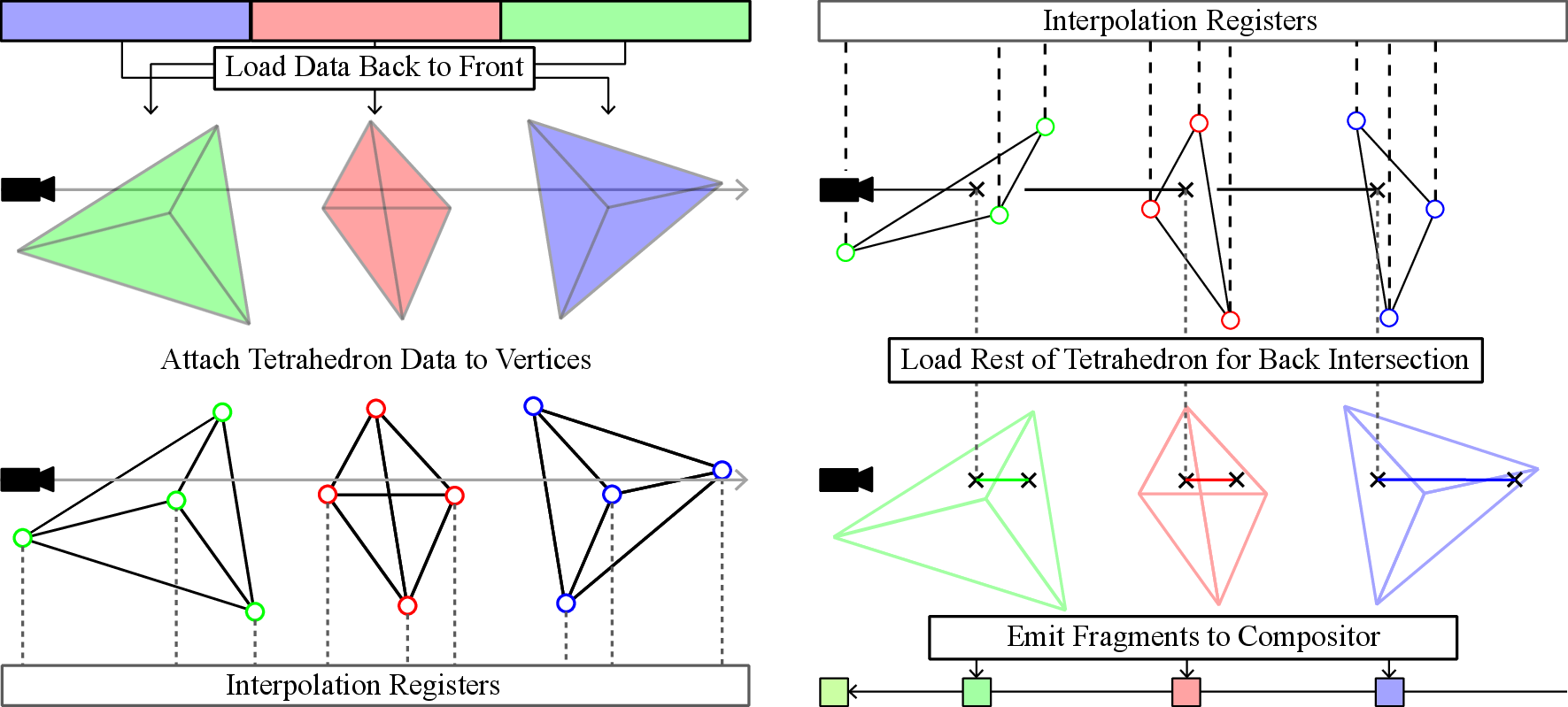

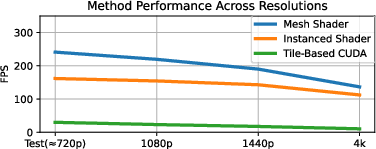

- They use mesh shaders (where available) so one block of GPU threads can load a tetrahedron’s data once and share it efficiently.

- They interpolate the information needed to find the ray’s exit point for each triangle, then integrate the segment exactly in the fragment shader.

- They also provide versions for Vulkan (desktop), WebGL (web), and a differentiable PyTorch renderer for training.

Main Findings

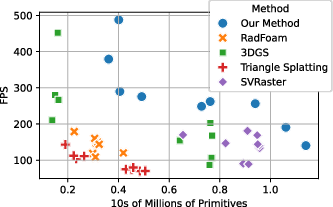

- Faster rendering: The new rasterization method is about 32% faster than the original 3D Gaussian Splatting at 1440p, assuming similar scene size and resolution.

- Exact visibility: Unlike splatting, this method sorts perfectly and integrates exactly, so there’s no “popping” or flicker when the camera moves.

- Broad GPU support: Because it’s triangle-based, it runs fast on standard consumer GPUs and even in the browser.

- Ray tracing: When ray tracing is used, it’s about 17% faster than Radiant Foam on indoor scenes and works well with complex lens models (like fisheye).

- Quality and robustness: It matches or beats similar methods (like Radiant Foam) in image quality and doesn’t crash on difficult scenes. It can also handle many more tetrahedra within typical GPU memory.

- Practical extras: You can run physics simulations on the mesh (e.g., jiggle or bend it), edit it, and extract a clean surface mesh for standard 3D workflows.

Why This Matters

Radiance meshes combine the best of both worlds:

- The speed and compatibility of triangle-based graphics (like games and real-time apps).

- The flexibility and accuracy of volumetric rendering (like NeRFs), with exact math and stable training.

This opens doors to:

- Real-time, high-quality view synthesis on everyday hardware.

- Support for unusual cameras and lenses without special hacks.

- Easier editing and simulation, since it behaves like a mesh.

- Converting to standard surface models for animation, CAD, AR/VR, and games.

In short, radiance meshes make photorealistic 3D reconstruction more practical, portable, and reliable—without giving up quality or speed.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored in the paper. Items are grouped to improve readability.

Representation and modeling

- The model assumes emission-only volume rendering with constant density per tetrahedron and linear color in position; it does not address scattering, absorption with in-scattering, or complex participating media, limiting applicability to scenes needing non-emissive volumetric effects.

- View dependence is handled only via spherical harmonics in the base color $c_k^0$; the linear color gradient (per tetrahedron $k$) is position-only and shared across RGB channels (monochrome). The impact of per-channel and/or direction-dependent gradients on quality/speed is unexamined.

- The non-negativity activation for the color gradient (to avoid negative colors) trades off expressiveness; no analysis quantifies how often this clamping is active, how it biases color ramps, or whether more flexible constraints (e.g., bounded polynomials) would improve fidelity.

- The choice of constant density per tet may cause aliasing at tet boundaries or limit fine-scale transparency modeling. There is no evaluation of higher-order density (e.g., linear/quadratic) within tets and its rendering/runtime impact.

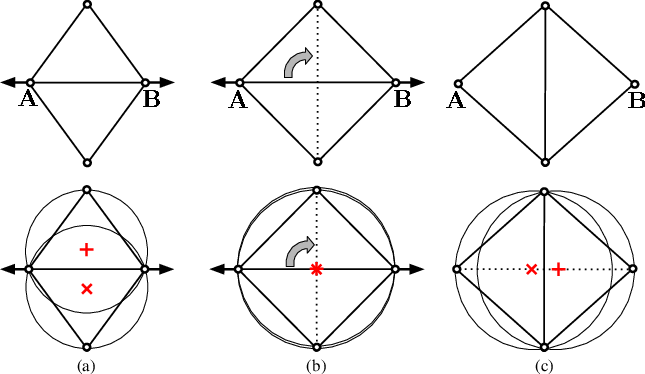

- Circumcenter-based querying is continuous under edge flips but numerically unstable for skinny tets; centroid-based querying is stable but discontinuous. A principled, provably stable and continuous query (e.g., “regularized circumcenter,” “power center,” or blended centers) is not provided or analyzed.

- The paper claims a closed-form integral (Eq. 7–8) for constant-density, linear-color segments, but no derivation, numerical conditioning analysis, or error bounds (especially under large $d_k$ or extreme color deltas) are given. Shader-side precision/stability for the exponentials is not quantified.

- The model does not consider non-linear color transfer functions (gamma), HDR ranges, or spectral rendering; how these choices interact with linear interpolation and blending is unstudied.
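A small worked example, not taken from the paper, suggests why circumcenter queries can become unstable for skinny tetrahedra, as noted in the list above. Assume a tetrahedron with vertices $p_0=(0,0,0)$, $p_1=(1,0,0)$, $p_2=(0,1,0)$ and $p_3=(0.3,\,0.3,\,\varepsilon)$, where the small height $\varepsilon$ and all coordinates are chosen purely for illustration. Being equidistant from $p_0$, $p_1$, $p_2$ forces the circumcenter to have the form $c=(\tfrac12,\tfrac12,z)$, and equidistance from $p_0$ and $p_3$ fixes $z$:

$$
\begin{aligned}
\lVert c-p_3\rVert^2 = \lVert c-p_0\rVert^2
&\;\Longrightarrow\; \lVert p_3\rVert^2 = 2\,p_3\cdot c \\
&\;\Longrightarrow\; 0.18+\varepsilon^2 = 0.6 + 2\varepsilon z \\
&\;\Longrightarrow\; z = \frac{\varepsilon^2-0.42}{2\varepsilon}.
\end{aligned}
$$

For $\varepsilon = 10^{-3}$ this gives $z \approx -210$, so the circumcenter sits about 210 units away from a tetrahedron whose vertices all lie within one unit of the origin, and $|z|$ grows like $1/\varepsilon$ as the cell flattens. The centroid, by contrast, is $(0.325,\,0.325,\,\varepsilon/4)$ and stays inside the cell.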

Optimization and training

- Training time is substantially higher than baselines (GPU-hours in Table 2), but the paper does not profile what dominates (Delaunay recomputation, sorting, differentiable rendering, densification passes, NGP queries) or propose optimizations. How training scales beyond 10–15M tetrahedra is unclear.

- Delaunay recomputation every 10 iterations introduces frequent topology changes; the convergence properties, gradient stability, and failure modes under aggressive vertex motion are not theoretically analyzed.

- The Zip-NeRF-style downweighting based on circumradius is heuristic; there is no ablation on the filter’s schedule, sensitivity to $R_k$, or alternative anti-aliasing strategies tailored to tetrahedra (e.g., facet-aware filtering).

- Regularization choices (distortion loss applied to density rather than alpha weights) are motivated but not compared against alternatives (e.g., regularizing color gradients, enforcing smoothness across tet boundaries).

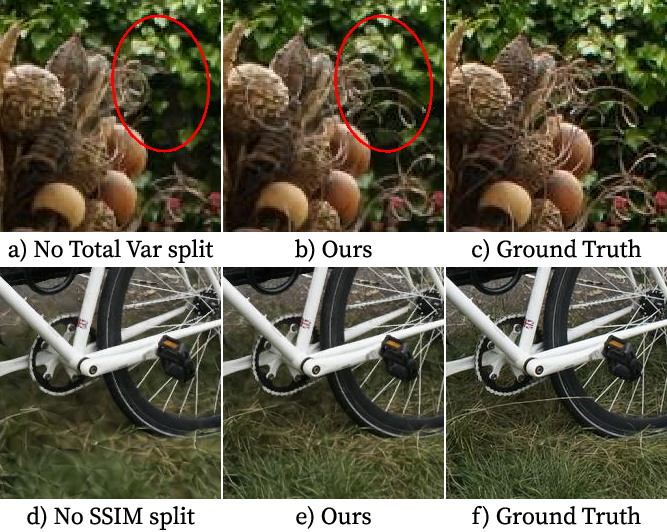

- No study quantifies sensitivity to the densification cadence (“every 500 iterations”) or the sample size $M$; thresholds (SSIM > 0.5, TotalVar > 2.0) are fixed without tuning analysis.

Densification and querying strategy

- The SSIM-based split score and total-variance split score rely on fixed heuristics; the trade-off between the two, and their sensitivity to scene content (thin structures vs textures), is not systematically evaluated beyond two scenes.

- The densification point selection via intersection of two “mean rays” can fail in low-baseline regions, wide-FOV or rolling-shutter imagery, and when tris intersect near-parallel surfaces; fallback behavior and failure rates are not quantified.

- The method does not consider multi-view geometric constraints when adding points (e.g., epipolar consistency, multi-view triangulation uncertainty). Incorporating geometric uncertainty or confidence measures remains unexplored.

- Querying Instant-NGP with a single center $O_k$ per tet (circumcenter or centroid) is a strong assumption; multi-sample or boundary-aware queries (e.g., at faces or vertices) and their cost/quality trade-offs are not investigated.

Rasterization, sorting, and ray tracing

- Power sorting is assumed to be front-to-back correct and efficient, but the per-frame cost of sorting millions of tetrahedra (including radix sort implementation details, memory bandwidth, and GPU/CPU placement) is not reported.

- Mesh-shader-based pipelines eliminate duplicate loads and improve speed, but portability to mobile/embedded GPUs and APIs without mesh shaders (beyond the WebGL instanced fallback) is not evaluated in performance or energy terms.

- The approach emits duplicated static geometry (faces/vertices) per tet to avoid variable geometry counts. Its impact on overdraw, fill rate, blending bandwidth, and performance under high screen-space overlap is not quantified.

- Fragment-shader computation of $t_{in}$/$t_{out}$ via plane equations (Cyrus–Beck) for every hit triangle may be expensive; the paper does not benchmark the per-fragment cost or propose early-ray-termination strategies compatible with hardware blending.

- Hardware blending is used for compositing fixed-order fragments; the feasibility of per-pixel early ray termination (to avoid integrating through many semi-transparent tets) within standard rasterization pipelines remains an open efficiency question.

- Numerical robustness under degenerate/near-parallel intersections in rasterization and ray tracing is not analyzed; while RadFoam’s failures are noted, similar edge cases for tetrahedral meshes (thin/sliver tets, grazing rays) need systematic testing.

- Support for dynamic scenes (moving objects/cameras, temporal consistency across frames) is not addressed; whether per-frame re-triangulation and sorting are fast enough for real-time capture and rendering is unclear.
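To make the early-ray-termination question above concrete, here is a minimal CPU sketch of front-to-back compositing of premultiplied fragments with an optional transmittance cutoff. It only illustrates the arithmetic; fixed-function hardware blending has no equivalent of the `break`, which is exactly the open issue. The function name and the `min_transmittance` parameter are hypothetical.

```python
def composite_front_to_back(fragments, min_transmittance=0.0):
    """Accumulate (premultiplied_rgb, alpha) fragments sorted front to back.

    Returns (color, remaining_transmittance, fragments_used).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    used = 0
    for premult, alpha in fragments:
        for j in range(3):
            color[j] += transmittance * premult[j]   # light that still reaches the eye
        transmittance *= 1.0 - alpha                 # attenuate everything behind
        used += 1
        if transmittance <= min_transmittance:
            break                                    # nothing behind is visible enough
    return tuple(color), transmittance, used


semi = [((0.5, 0.0, 0.0), 0.5), ((0.0, 0.5, 0.0), 0.5)]
color, remaining, used = composite_front_to_back(semi)

opaque_first = [((1.0, 1.0, 1.0), 1.0), ((0.0, 0.5, 0.0), 0.5)]
_, _, used_opaque = composite_front_to_back(opaque_first, min_transmittance=1e-4)
```

In the second call the loop stops after one fragment, since an opaque front fragment leaves no transmittance for anything behind it.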

Evaluation and comparisons

- Speed comparisons are confounded by differing primitive counts and FPS measurement methodology (authors include frame-buffer display, others report bare render times). A standardized, equal-quality, equal-primitive, equal-memory comparison suite is missing.

- Quality remains below top baselines like 3DGS on several metrics; the paper does not quantify which error modes dominate (e.g., view-dependent effects, fine texture aliasing, thin geometry) or how to close the gap (richer per-tet attributes, better filtering).

- Fisheye lens and arbitrary lens models are claimed to be supported, but there is no quantitative evaluation of quality under extreme distortions, nor a study of sorting/fragment precision in such settings.

- The evaluation datasets exclude highly translucent, glossy, or specular scenes where direction-dependent radiance is critical; how the method performs on such data is unknown.

- VRAM scaling beyond ~15M tetrahedra on 24GB GPUs, memory footprint breakdown (geometry, per-tet attributes, NGP), and out-of-core strategies are not provided; memory-efficient representations (compression, quantization) remain unexplored.

Applications and downstream uses

- Physics-based simulation with XPBD is demonstrated qualitatively, but there is no quantitative validation that semi-transparent, constant-density tets accurately support physical properties (mass, stiffness) or collision stability.

- Surface mesh extraction via thresholding “peak color contribution” lacks guarantees of watertightness, manifoldness, and topology correctness; sensitivity to the 0.1 threshold and post-processing steps (simplification, repair) are not studied.

- Editing workflows (e.g., local material/geometry edits) are mentioned but not evaluated; authoring tools and constraints (e.g., keeping rasterization validity after edits) are not specified.

Theory and guarantees

- There is no formal analysis that the Zip-NeRF-style backbone “smoothly” compensates for topological discontinuities during optimization with centroid queries; continuity and differentiability across flips remain heuristic.

- The continuity claim for circumcenter queries is compelling but unused; a rigorous approach to handle flips with stability (e.g., robust center choice with conditioning-aware blending) is an open design problem.

- The acyclicity/sort correctness under power ordering is cited, but per-pixel correctness when multiple tetrahedra overlap in complex configurations (e.g., non-convex unions) and under numerical error has not been formally or empirically validated.

Engineering details needed for reproducibility

- Delaunay recomputation details (algorithm, implementation, parallelization, time/memory cost per recompute on millions of vertices) are not reported; GPU-based tetrahedralization feasibility remains open.

- The exact mesh-shader data layout, synchronization strategy, and warp-shuffle usage for collaborative loading are not described in enough detail to reproduce performance claims across vendors/drivers.

- The Slang.D differentiable renderer and Vulkan implementations are referenced, but gradient correctness across rasterization stages, memory synchronization costs, and numerical precision settings are not detailed.

Glossary

- 3D Gaussian Splatting (3DGS): A particle-based rendering approach that models scenes with Gaussian primitives and composites their contributions to render images efficiently. "A particularly effective point on this trade-off continuum is 3D Gaussian Splatting (3DGS)~\cite{kerbl20233d}."

- Alpha compositing: A technique for blending semi-transparent layers by weighting color with opacity (alpha) along a viewing ray. "largely based on the same splatting-based alpha compositing technique as 3DGS."

- Barycentric interpolation: Interpolation of attributes across a triangle/tetrahedron using weights derived from barycentric coordinates. "Thus naively assigning colors to vertices and using barycentric interpolation would not give a smooth optimization objective."

- Centroid: The average position of the vertices of a tetrahedron; used as a stable center for querying attributes. "where is either the circumcenter or centroid of ."

- Circumcenter: The center of the sphere passing through all vertices of a tetrahedron; used to define sorting and querying but can be numerically unstable. "where is either the circumcenter or centroid of ."

- Circumradius: The radius of a tetrahedron’s circumsphere; used to prefilter and downweight queries for skinny tets. "The circumradius is used to prefilter the NGP to address skinny tetrahedra."

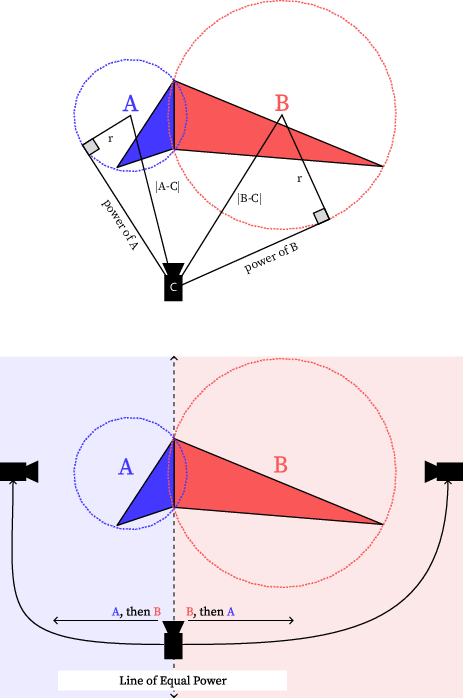

- Circumsphere: The unique sphere that passes through all vertices of a tetrahedron; its power enables front-to-back sorting. "A Delaunay tetrahedralization can be sorted from front to back~\cite{max1990area, edelsbrunner1989acyclicity} with respect to a camera origin according to the power of each circumsphere with respect to the camera origin:"

- COLMAP: A Structure-from-Motion toolkit used to initialize camera poses and sparse point clouds for optimization. "initialized from the COLMAP SfM reconstruction~\cite{schoenberger2016sfm}"

- Convex polyhedra: 3D solids with planar faces and no indentations; Voronoi cells are convex polyhedra that are expensive to rasterize. "it relies on ray tracing arbitrarily complex convex polyhedra (the Voronoi cells)."

- Cyrus-Beck algorithm: A line-clipping algorithm for convex polytopes used to compute ray–tetrahedron entry/exit intersections. "computed using the Cyrus-Beck algorithm~\cite{cyrus1978}."

- Delaunay tetrahedralization: A partition of space into tetrahedra satisfying the empty circumsphere property; used as the volumetric representation. "We introduce radiance meshes, a technique for representing radiance fields with constant density tetrahedral cells produced with a Delaunay tetrahedralization."

- Delaunay triangulation: The triangulation (in 2D or 3D) that satisfies the empty circumcircle/circumsphere property; in 2D it also maximizes the minimum angle. Dual to the Voronoi diagram. "A radiance mesh parameterizes a radiance field using the tetrahedra produced by a Delaunay triangulation of a set of points"

- Edge flip: A discrete change in mesh topology where shared faces/edges are reconfigured during optimization. "Optimizing the positions of Delaunay vertices introduces topological discontinuities (edge flips)."

- Emission-only volume rendering: Rendering where volumes emit light without scattering, integrated along rays through density and color fields. "Neural Radiance Fields use numerical quadrature to integrate emission-only volume rendering"

- Extended Position Based Dynamics (XPBD): A physics simulation method that extends PBD with constraint compliance; used for interactive effects. "we can support physics-based simulations using Extended Position Based Dynamics~\cite{macklin2016xpbd}"

- Fisheye lens distortion: Extreme wide-angle lens distortion modeled during rendering; sorting remains valid for distorted projections. "including fisheye lens distortion, physics-based simulation, editing, and mesh extraction."

- Fragment shader: A GPU shader that computes per-pixel outputs, used here to integrate tetrahedral contributions and opacity. "For each pixel, the and values are computed once per tetrahedra within the fragment shader"

- Hardware blending operations: GPU-accelerated operations that combine fragment outputs, used to accumulate transmittance/opacity efficiently. "and the product of opacities is efficiently computed using hardware blending operations."

- Hardware triangle intersector: A GPU hardware unit that accelerates ray–triangle intersections; leveraged for fast ray tracing. "and to utilize the hardware triangle intersector to accelerate ray tracing."

- Hardware triangle rasterizer: A fixed-function GPU pipeline that efficiently converts triangles into fragments; used to render semi-transparent tetrahedral faces. "which allows our model to be rendered using a hardware triangle rasterizer very quickly on a wide variety of platforms"

- Instant-NGP datastructure: A multiresolution hashed grid (Neural Graphics Primitives) used to parameterize fields queried at tetrahedron centers. "and an Instant-NGP datastructure ."

- Instanced shaders: Rendering technique where the same geometry is drawn multiple times with different instance data to avoid duplication. "When mesh shaders are not available, we use instanced shaders, drawing an instance of a triangle strip for each tetrahedron"

- Mesh shader: A modern GPU shader stage that replaces vertex/geometry shaders and enables collaborative mesh generation per thread block. "Mesh shaders replace vertex shaders, allowing an entire thread block to collaboratively generate mesh data"

- Mip-NeRF 360 contraction function: A spatial contraction mapping used to compact unbounded scenes for field queries. "parameterized by an Instant-NGP datastructure~\cite{mueller2022instant} and the mip-NeRF 360 contraction function~\cite{barron2022mipnerf360}"

- Neural Radiance Field (NeRF): A neural representation of 3D scenes that models density and color fields and renders via volumetric integration. "Radiance fields have become the standard paradigm for 3D reconstruction since NeRF~\cite{mildenhall2020nerf}."

- Numerical quadrature: Approximate numerical integration used to evaluate the volume rendering integral in early NeRF methods. "Neural Radiance Fields use numerical quadrature to integrate emission-only volume rendering"

- OptiX: NVIDIA’s ray-tracing engine used to implement a fast ray tracer for the tetrahedral mesh. "we were able to straightforwardly implement a ray-tracer based on OptiX"

- Pareto front: The set of optimal trade-offs between competing objectives (e.g., speed vs. primitives), defining performance efficiency. "our method clearly defines the pareto front in terms of performance per primitive"

- Power sorting: Ordering tetrahedra front-to-back by circumsphere power relative to the camera origin to ensure correct compositing. "Before rendering, we sort utilizing power sorting (Equation ~\ref{eq:power_sort})."

- Premultiplied alpha: Representing color values already multiplied by their alpha to simplify blending computations. "The color contribution of tetrahedron to the ray, premultiplied by alpha, has the form:"

- Radical axis: The locus of points with equal power w.r.t. two circles/spheres; used to explain the correctness of power sorting. "We offer a visual intuition of why this works using the radical axis"

- Radiance mesh: A volumetric representation using Delaunay tetrahedra with constant density and linearly varying color. "We introduce radiance meshes, a technique for representing radiance fields with constant density tetrahedral cells produced with a Delaunay tetrahedralization."

- Radiative transfer equation: The integral equation governing volumetric light transport; here, the emission-only form is used. "also known as the radiative transfer equation~\cite{chandrasekhar1960radiative}"

- Radix sort: A non-comparative integer sorting algorithm used to sort tetrahedra by circumsphere power efficiently. "using a radix sort over the power of the circumsphere."

- Rasterization: Converting triangles to fragments on the GPU; leveraged for high-speed semi-transparent volume rendering. "As such, our model is able to perform exact and fast volume rendering using both rasterization and ray-tracing."

- Ray marching: Stepping along a ray through a volume to accumulate color and opacity; foundational in NeRF’s integral. "and ray marching using standard volume rendering integral"

- Ray tracing: Computing ray–geometry intersections to integrate volumetric contributions exactly; accelerated via triangle intersectors. "As such, our model is able to perform exact and fast volume rendering using both rasterization and ray-tracing."

- Slang.D: A differentiable shader language used to implement a tile-based renderer with tracked derivatives. "The differentiable PyTorch renderer is implemented as a tile based renderer in Slang.D~\cite{bangaru2023slangd}"

- Spherical harmonics: A set of basis functions on the sphere used to model view-dependent color efficiently. "a base color given by spherical harmonics "

- SSIM: Structural Similarity Index, a perceptual image quality metric used for error-based densification. "This first and most important selection method, the SSIM split , is based on Error Based Densification"

- Structure-from-Motion (SfM): A technique to recover camera poses and sparse geometry from images; used to initialize points. "initialized from the COLMAP SfM reconstruction~\cite{schoenberger2016sfm}"

- Tessellation: Subdividing polygonal faces into smaller triangles for rasterization; costly for complex Voronoi cells. "Rasterizing a single cell would require tessellating these faces into dozens of triangles."

- Voronoi diagram: A partition of space into regions closest to given sites; dual to Delaunay and expensive to rasterize as polyhedra. "Unlike a Voronoi diagram, a Delaunay tetrahedralization yields simple triangles"

- Warp shuffle operations: GPU warp-level intrinsics for data exchange among threads, used to share per-tetrahedron data efficiently. "using warp shuffle operations, so we only need to load each tetrahedron once."

- Watertight mesh: A surface mesh without holes or gaps; extracted by thresholding volumetric contributions. "Additionally, we can extract a watertight opaque surface triangle mesh by thresholding our primitives"

- Zip-NeRF: A NeRF variant with anti-aliasing via filtered queries and downweighting of higher-resolution features. "Zip-NeRF~\cite{barron2023zipnerf} introduced a querying scheme where the output of the instant-NGP is convolved with a gaussian."
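The "Power sorting" and "Circumsphere" entries above can be illustrated with a short sketch. The power of the camera origin $o$ with respect to a circumsphere with center $c$ and radius $r$ is $\lVert o-c\rVert^2 - r^2$; this sketch assumes that ascending power corresponds to front-to-back order, which holds for the two-tetrahedron example used below. It uses Python's built-in sort rather than the GPU radix sort the paper mentions, and all function names are hypothetical.

```python
def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def circumsphere(tet):
    """(center, radius_squared) of the sphere through the four vertices of tet."""
    p0, others = tet[0], tet[1:]
    rows = [[2.0 * (p[j] - p0[j]) for j in range(3)] for p in others]
    rhs = [sum(x * x for x in p) - sum(x * x for x in p0) for p in others]
    d = det3(rows)
    center = []
    for col in range(3):
        m = [row[:] for row in rows]
        for r in range(3):
            m[r][col] = rhs[r]
        center.append(det3(m) / d)      # Cramer's rule, one coordinate at a time
    r2 = sum((center[j] - p0[j]) ** 2 for j in range(3))
    return center, r2


def power(point, tet):
    """Power of `point` with respect to the circumsphere of `tet`."""
    center, r2 = circumsphere(tet)
    return sum((point[j] - center[j]) ** 2 for j in range(3)) - r2


def power_sort(tets, camera_origin):
    """Indices of tets ordered by ascending power (assumed front to back)."""
    return sorted(range(len(tets)), key=lambda i: power(camera_origin, tets[i]))


# Two tetrahedra sharing the face in the z = 0 plane: one above, one below.
upper = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
lower = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0)]
order_from_above = power_sort([upper, lower], (0.2, 0.2, 5.0))
order_from_below = power_sort([upper, lower], (0.2, 0.2, -5.0))
```

A camera above the shared face sees the upper tetrahedron first, and the order reverses for a camera below it. Note that the key depends only on the camera origin, not on the view direction or lens model.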

Practical Applications

Immediate Applications

The following applications can be deployed now using the paper’s methods, code references (desktop/web rasterizers and OptiX ray tracer), and workflows described in the text.

- Gaming and XR (software/graphics)

- Real-time radiance-field rendering without popping for in-engine previews and experiences

- Tools/products: Unity/Unreal plugins that import radiance meshes for level previews, cinematic previsualization, and XR prototypes; WebGL/WebGPU viewers for sharing scenes.

- Workflows: Capture → COLMAP SfM → radiance mesh optimization → hardware rasterization on desktop or web → distribution via web viewer.

- Assumptions/dependencies: Static scenes; sufficient VRAM (up to ~15M tets fit on an RTX 4090); mesh shaders for maximum performance (fallback: instanced rasterization); camera poses available; GPU support for Slang/Vulkan/OptiX or WebGL.

- Fisheye/ultra-wide lens view synthesis for VR/AR content creation

- Tools/products: Lens-accurate preview modules leveraging power sorting with respect to ray origin; camera-specific pipelines for headset lenses.

- Assumptions/dependencies: Accurate lens calibration; scenes are emission-only volumes (no complex scattering); direction-dependent color via SH supported.

- Film/VFX and Virtual Production (media/entertainment)

- High-quality, artifact-free volumetric previz at interactive speeds

- Tools/products: Radiance mesh renderer for DCC tools (e.g., Houdini/Maya/Blender add-ons) to preview relighting and complex lenses without splatting artifacts.

- Assumptions/dependencies: Pipeline integration for Instant-NGP queries and Zip-NeRF-style downweighting; GPU with triangle hardware rasterizer.

- Architecture, Engineering, and Construction (AEC) and Real Estate (digital twins)

- Web-deployable interactive site walkthroughs with exact visibility and fast loading

- Tools/products: Browser viewers for property tours and construction progress documentation using radiance meshes; fisheye support for wide-FOV capture rigs.

- Workflows: Drone/handheld capture → SfM → radiance mesh → web viewer.

- Assumptions/dependencies: Static scenes; pose accuracy; bandwidth for mesh streaming; privacy compliance for captured spaces.

- Robotics and Teleoperation (robotics)

- Robust scene visualization maps with exact visibility for operator UIs and simulation

- Tools/products: Operator dashboards using radiance meshes to visualize environments with no temporal popping; OptiX-based ray-traced visualizers.

- Assumptions/dependencies: Static or quasi-static environments; integration with robot perception stack; GPU available on edge/server.

- Scientific Visualization and Cultural Heritage (education/research/public web)

- Portable, high-speed web demos of reconstructed artifacts/sites

- Tools/products: Public-facing web demos (as noted in the paper) for museums and outreach; educational materials demonstrating Delaunay power sorting.

- Assumptions/dependencies: Static capture; browser support for WebGL; moderate device GPU performance.

- Physics-based editing and prototyping (software/graphics/animation)

- Interactive editing with XPBD-style physics on semi-transparent triangle meshes

- Tools/products: Editors that leverage XPBD to deform radiance meshes (e.g., for cleanup, alignment, or stylization) while preserving volumetric properties.

- Assumptions/dependencies: XPBD integration with mesh data; scene edits remain consistent with learned radiance; care with density/color continuity.

- Asset generation pipelines (content creation)

- Surface mesh extraction from volumetric radiance meshes for downstream use

- Tools/products: Automated “mesh extraction” tools that threshold tetrahedra by peak color contribution to produce watertight opaque meshes for games and CAD.

- Workflows: Radiance mesh → contribution analysis → thresholding → connected component filtering → export to standard mesh formats.

- Assumptions/dependencies: Threshold tuning (e.g., 0.1 recommended); static radiance field; possible post-processing for topology/UVs.

- Cloud/Edge Rendering Services (software/infra)

- Efficient volumetric streaming/rendering services with hardware rasterization

- Tools/products: Cloud APIs for uploading captures and receiving radiance meshes that render efficiently on client hardware; OptiX ray tracing backend for lens-accurate previews.

- Assumptions/dependencies: GPU acceleration on server; client-side hardware rasterizer; capture-to-mesh workflow orchestration.

- Education (academia)

- Teaching modules for computational geometry and radiative transfer

- Tools/products: Course labs showing Delaunay tetrahedralization, circumsphere power sorting, and closed-form segment integral evaluation in real-time rendering.

- Assumptions/dependencies: Access to sample datasets; basic GPU programming environment (Slang/Vulkan/WebGL).
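The thresholding step of the mesh-extraction workflow listed above can be sketched as follows: keep tetrahedra whose score passes a threshold, then emit every triangular face that belongs to exactly one kept tetrahedron. This is a simplified illustration, assuming a precomputed per-tetrahedron `peak_contribution` score; how the paper computes that score, and its connected-component filtering, are not reproduced here. The function name is hypothetical, and face orientation is not handled.

```python
from collections import Counter


def extract_surface(tets, peak_contribution, threshold=0.1):
    """Boundary triangles of the set of tetrahedra with score >= threshold.

    `tets` holds 4-tuples of vertex indices. A face shared by two kept
    tetrahedra is interior and is dropped; a face seen once is on the surface.
    """
    face_count = Counter()
    for tet, score in zip(tets, peak_contribution):
        if score < threshold:
            continue                                    # treated as empty space
        for skip in range(4):
            face = tuple(sorted(v for k, v in enumerate(tet) if k != skip))
            face_count[face] += 1
    return sorted(face for face, n in face_count.items() if n == 1)


tets = [(0, 1, 2, 3), (1, 2, 3, 4)]                     # share the face (1, 2, 3)
both_kept = extract_surface(tets, [0.5, 0.5])
one_kept = extract_surface(tets, [0.5, 0.05])
```

With both tetrahedra kept, the shared face disappears and six boundary triangles remain; with only the first kept, its four faces form the surface.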

Long-Term Applications

These applications are promising but require further research, scaling, or broader ecosystem development (e.g., mobile mesh shaders, dynamic scenes, compression/streaming standards).

- Mobile XR and On-device Capture (software/hardware)

- Instant radiance mesh reconstruction and viewing on smartphones/headsets

- Products/workflows: Integrated capture app performing SfM, fast densification, and on-device rasterization with mesh shaders or future mobile GPU features.

- Dependencies: Mobile hardware support for mesh shaders; faster training/optimization (fewer GPU-hours); energy-efficient pipelines; robust lens calibration.

- Live digital twins and streaming (AEC/IoT/infra)

- Incremental radiance mesh updates for near-real-time site monitoring

- Products/workflows: Streaming radiance mesh updates from periodic captures; differential updates with compression for large tetrahedral meshes.

- Dependencies: Incremental Delaunay updates across topology changes; streaming formats; scene change detection; privacy/security policies.

- Autonomous Navigation and SLAM backends (robotics)

- Radiance mesh as a mapping representation with exact visibility and lens robustness

- Products/workflows: Perception modules that use radiance meshes for rendering-based localization, path planning visualization, and sensor simulation.

- Dependencies: Handling dynamic objects; tight coupling with SLAM graph; uncertainty-aware updates; memory and compute constraints.

- Standardized 3D content pipelines (policy/industry standards)

- File formats and compression standards for semi-transparent triangle-based volumes

- Products/workflows: Exchange formats for radiance meshes (density + linear color per tet, SH coefficients), streaming over web, archival policies.

- Dependencies: Industry consortium efforts; interoperability with glTF/USD; legal/policy frameworks for sharing captured real-world spaces.

- High-fidelity VR rendering with complex lens models (XR/optics)

- Accurate headset optics simulation using OptiX-based ray tracing of radiance meshes

- Products/workflows: VR runtimes using lens-specific ray tracing paths for distortion correction and foveated rendering tied to radiance meshes.

- Dependencies: Real-time ray tracing budgets; device-level APIs; integration with eye-tracking; perceptual studies.

- Medical visualization and training (healthcare/education)

- Fast, web-portable volumetric views for training and education scenarios

- Products/workflows: Teaching tools simulating volumetric visualization pipelines; potential adaptation to learned radiance from endoscopy-like data.

- Dependencies: Clinical validation; adaptation to medical volumes (different physics, noise models); regulatory compliance; privacy.

- Large-scale urban or facility scans (smart cities/asset management)

- City-scale radiance mesh reconstructions for planning and analytics

- Products/workflows: Capture fleets → scalable densification → GPU-friendly viewing; integration with BIM/GIS systems.

- Dependencies: Distributed optimization; memory and storage scaling; compositing multiple models; policy for public space capture.

- Interactive volumetric editing and authoring tools (software/creator tools)

- Node-based editors for density/color ramp authoring with physics-aware tweaks

- Products/workflows: Creator tools to retouch radiance meshes, reweight SH color fields, adjust density gradients, and export variants.

- Dependencies: UX research; stability across topology changes; undo/redo with Delaunay recomputation; artist-friendly controls.

- Energy-efficient rendering at scale (energy/green computing)

- Reduced runtime energy for volumetric experiences via hardware rasterization

- Products/workflows: Hosting platforms that switch from splatting to tetrahedral rasterization to cut power usage during viewing.

- Dependencies: Empirical energy measurements; standardized benchmarks; broader hardware support and driver optimizations.

- Research extensions (academia)

- Dynamic scenes, reflectance/material models, and compression

- Topics: Handling non-emission-only models; per-channel gradients; temporal coherence across topology flips; mesh compression; differential densification.

- Dependencies: New loss functions; robust optimization across edge flips; perceptual evaluations; open datasets for dynamics and materials.

Cross-cutting assumptions and dependencies

- Scene nature: The method assumes static or quasi-static scenes and models emission-only radiance fields with density and direction-dependent color (spherical harmonics).

- Input requirements: Posed images and a sparse point cloud (e.g., COLMAP) are needed; lens calibration affects quality for non-pinhole cameras.

- Hardware: Best performance relies on mesh shaders and hardware triangle rasterization/intersection; web deployments use instanced rasterization; available VRAM limits the maximum tetrahedron count.

- Optimization and quality: Training can take longer than for some baselines; densification heuristics (SSIM and total variance splits) affect thin structures and texture fidelity.

- Continuity and numerical stability: Centroid querying is more stable than circumcenter; Zip-NeRF-style downweighting mitigates aliasing and skinny tetrahedra issues.

- Distribution and policy: Sharing radiance meshes of real spaces involves privacy, licensing, and potential building/site access regulations; standardization needed for file formats.
