Jina-VLM: Small Multilingual Vision Language Model (2512.04032v2)

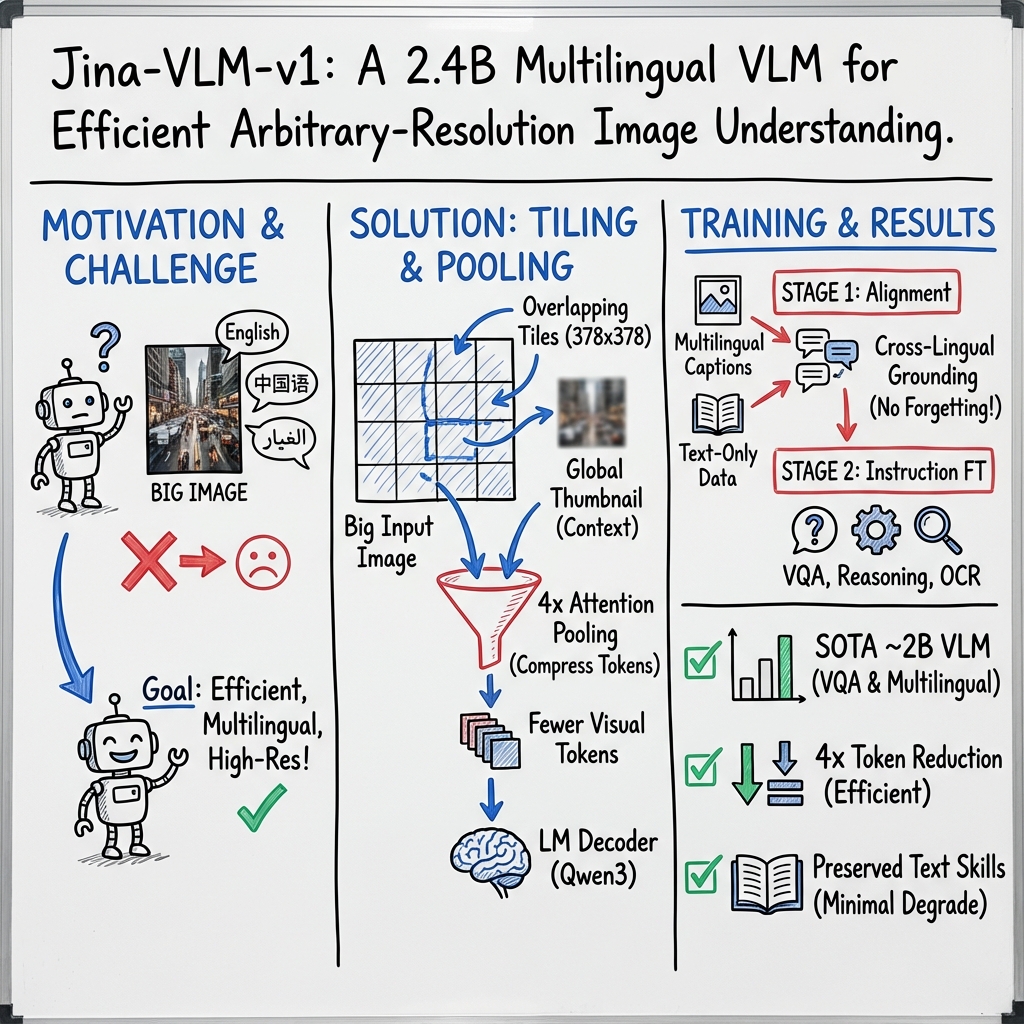

Abstract: We present Jina-VLM, a 2.4B parameter vision-language model (VLM) that achieves state-of-the-art multilingual visual question answering among open 2B-scale VLMs. The model couples a SigLIP2 vision encoder with a Qwen3 language backbone through an attention-pooling connector that enables token-efficient processing of arbitrary-resolution images. The model achieves leading results on standard VQA benchmarks and multilingual evaluations while preserving competitive text-only performance. Model weights and code are publicly released at https://huggingface.co/jinaai/jina-vlm .

Explain it Like I'm 14

What this paper is about

This paper introduces Jina VLM v1, a small yet powerful “vision-language model” (VLM). A VLM can look at images and read text, then answer questions or explain what it sees, often in many languages. The goal is to make a compact model (2.4 billion parameters) that understands pictures well and works across different languages, while staying fast and affordable to use.

The main questions the paper asks

Here are the questions the researchers set out to answer:

- Can a small model understand images and text in many languages as well as (or better than) similar-sized models?

- Can we make image processing more efficient so the model doesn’t slow down or become too expensive when images are large or detailed?

- Can we train the model to see and read well without forgetting how to do regular text tasks (like answering questions with no image)?

How the model works (in everyday terms)

Think of the model as a team with three roles:

- A “vision encoder”: This is like a careful observer that turns an image into a set of tiny, meaningful pieces. The model uses SigLIP2 for this job.

- A “connector”: This is a translator. It takes the image pieces and converts them into a form the language part can understand. It also cleverly reduces the amount of image data, so things run faster.

- A “language model (decoder)”: This is the writer and problem-solver (Qwen3). It reads both the translated image information and any text, then produces an answer.

Two key ideas make it efficient and accurate:

- Image tiling: Big images are cut into overlapping squares (like slicing a large photo into tiles). Each square is processed separately, plus a small thumbnail of the whole image is added for context. This helps the model notice tiny details (like small text) without missing the big picture.

- Attention pooling: Imagine you’re looking at a 2×2 block of tiny image pieces. The model focuses on what matters and merges those pieces into one “token” (a chunk of information). This reduces the total number of tokens by 4×, making processing faster while keeping important details.
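To make the tiling idea concrete, here is a minimal Python sketch that computes overlapping crop boxes for an image. It is an illustration, not the paper's exact algorithm: the function names `axis_starts` and `tile_boxes` are hypothetical, the 378-pixel tile size matches the crop size mentioned later on this page, and the 112-pixel overlap is an assumed value. The thumbnail would simply be the whole image resized to one tile, so that step is left out here.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def axis_starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets of windows of size `tile` that together cover [0, length)."""
    if length <= tile:
        return [0]  # one window already covers the whole axis
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last window sits flush with the far edge
    return starts


def tile_boxes(width: int, height: int, tile: int = 378, overlap: int = 112) -> List[Box]:
    """Overlapping square crops, row by row (assumed defaults, see lead-in)."""
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    xs = axis_starts(width, tile, stride)
    ys = axis_starts(height, tile, stride)
    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in ys
        for x in xs
    ]


if __name__ == "__main__":
    for box in tile_boxes(1000, 600):
        print(box)
```

Neighboring boxes share a strip of pixels, so small text that falls on a tile border still appears whole in at least one tile.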

Training happens in two stages:

- Stage 1 (Alignment): The model learns to connect what it sees with what words mean across many languages, using lots of image captions and a bit of text-only data. This helps it avoid “forgetting” how to handle pure text tasks.

- Stage 2 (Instruction fine-tuning): The model practices following instructions—like answering questions about charts, documents, scenes, math problems, and more—in many languages. It trains on a mix of data sources so it can handle different real-world tasks.

What the paper found and why it matters

Here are the main results:

- Strong multilingual performance: Among small, open models (around 2 billion parameters), Jina VLM v1 reaches state-of-the-art scores in multilingual visual question answering. In short, it does great across languages, not just English.

- Good at general visual tasks: It scores highly on questions about diagrams, charts, documents, and text in images (OCR). This means it can read and explain a wide range of visuals.

- Low hallucination: It’s less likely to “make things up” about what’s in an image.

- Solid math and reasoning: It performs competitively on complex visual reasoning and math-focused benchmarks.

- Minimal loss on text-only tasks: Even after training on images, it still does well on pure text tests—close to its original LLM on many benchmarks.

Why this matters: It shows that you don’t need a massive model to get strong, multilingual visual understanding. This makes advanced VLMs more accessible to schools, researchers, and companies with limited computing resources.

What this could mean for the future

- Better access: Smaller, efficient VLMs mean more people can use advanced AI for tasks like document reading, chart analysis, and multilingual help.

- Strong multilingual support: The training recipe suggests we can build models that work well across languages without sacrificing English or general abilities.

- Practical improvements: The tiling and attention pooling tricks help handle large or detailed images without slowing the model too much.

Limitations and next steps:

- Handling very high-resolution images still costs more compute (more tiles mean more work).

- Safety and alignment (teaching the model to avoid harmful or biased outputs) weren’t a major focus and could be improved.

- The same training approach might boost larger models further—something to explore next.

In short, Jina VLM v1 is a compact, multilingual model that sees, reads, and reasons well. It’s a step toward making powerful, cross-language image understanding widely available and efficient.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a focused list of what remains missing, uncertain, or unexplored in the paper, framed to be actionable for future research.

- No ablation studies on the vision–language connector: impact of concatenating layers −3 and −9 versus other layer choices, using only final-layer features, or multi-layer feature fusion (e.g., FPN-like schemes).

- Unexplored design space of the attention-pooling module: effect of 2×2 neighborhood size, mean-as-query versus learned/gated queries, single-head versus multi-head pooling, and alternative pooling/compression methods (e.g., FastV, PruMerge, PyramidDrop, VisionZip).

- Missing quantification of the global thumbnail’s contribution: ablations measuring when and how the thumbnail helps cross-tile/global context and whether it mitigates boundary artifacts.

- Lack of explicit cross-tile positional encoding evaluation: compare current <im_col>-based token structure versus 2D RoPE, learned tile-level positional embeddings, or content-aware alignment to ensure spatial coherence across overlapping tiles.

- Tiling hyperparameters are not justified: sensitivity analysis on tile count (e.g., 6/12/24 tiles), overlap size (e.g., 64/112/160 px), stride, and crop size (378×378) and their trade-offs on OCR, diagrams, and high-detail images.

- No adaptive/content-aware tiling: investigate saliency/text-region detection to allocate more tiles to dense information areas and fewer to backgrounds for better efficiency/accuracy.

- Missing high-resolution stress tests: performance curves when increasing tile budget beyond 4×3 grids (e.g., long documents, maps, blueprints) and the accuracy vs. memory/latency scaling behavior.

- No inference efficiency metrics: report wall-clock latency, throughput, memory footprint, and cost per sample across GPU/CPU/mobile devices; compare against baselines under identical settings.

- Token compression claims are not substantiated with compute profiles: measure end-to-end speedups and memory reductions versus a non-pooled baseline and alternative compression strategies.

- Unclear reproducibility of the tiling pipeline: pseudocode in the appendix is truncated, and complete algorithmic/implementation details are missing for exact replication.

- Limited evaluation of multilingual coverage: training spans 30+ languages, but benchmarks cover only 6; add low-resource scripts (e.g., Hindi, Bengali, Thai, Khmer, Tamil), abjads (Arabic variants), CJK languages, and code-switching scenarios.

- No multilingual OCR assessment: evaluate reading accuracy for non-Latin scripts, mixed-language documents, and challenging text layouts (curved, rotated, low contrast).

- Safety and alignment are not addressed: perform toxicity/bias assessments, jailbreak resistance, privacy risks in document images, and refusal behavior on unsafe prompts; include multilingual safety evaluations.

- Multi-image reasoning remains weak with no mitigation experiments: test interleaved encoding, cross-image attention, retrieval-augmented multi-image context, or specialized training data to boost BLINK/Muir/MMT scores.

- Robustness is underspecified: beyond R-Bench results, analyze performance under real-world corruptions (blur, glare, compression, occlusion), adversarial perturbations, and domain shifts with targeted augmentation strategies.

- No calibration/uncertainty analysis: add confidence estimation, selective answering, abstention mechanisms, and calibration metrics to reduce hallucinations beyond POPE/HallBench.

- Text-only degradation is not analyzed: run ablations on text-only data ratios (stage 1 and 2), curriculum schedules, partial backbone freezing, and separate LLM refresher phases to recover MMLU/GSM-8K performance.

- Batch mixing strategy lacks quantification: provide controlled experiments comparing single-source versus mixed-source batches over time, including catastrophic forgetting metrics and cross-task stability.

- Evaluation comparability is mixed: unify all baselines under consistent prompts, decoding strategies (e.g., “thinking mode”), and toolkits, and report statistical significance/error bars for fairness.

- Encoder choice is not ablated: compare SigLIP2-So400M/14-384 to alternative backbones (e.g., NaViT, CLIP variants, EVA), especially for multilingual and OCR-heavy domains.

- Connector projection choice is unexplored: test SwiGLU versus linear or gated projections for stability, efficiency, and downstream accuracy.

- Embedding weight tying decision is not justified: evaluate tying/untying input–output embeddings in the decoder and measure impacts on text-only and multimodal tasks.

- Spatial token ordering (<im_col>) versus 2D positional embeddings is untested: quantify structural misalignment risks and downstream effects on tasks requiring precise spatial reasoning (diagrams, charts).

- Data mixture transparency is limited: publish per-language/domain/source distributions, filtering criteria, and quality controls; audit potential evaluation contamination and quantify its impact.

- Lack of error analysis: provide qualitative/quantitative break-downs of failure cases (e.g., misread fonts, chart types, diagram categories, math subskills) to inform targeted data curation/training.

- Complexity accounting is incomplete: report overall sequence lengths, attention FLOPs before/after pooling, and how LLM quadratic costs interact with increased tile counts.

- Missing exploration of dynamic token pooling: adapt pooling ratios based on visual content density or task type to balance detail preservation and efficiency.

- Scaling recipe to larger models is unverified: test whether the multilingual training mix and connector design transfer to larger backbones (e.g., 7B–10B), including stability and cost trade-offs.

- No study of scaling model size downwards: determine if similar multilingual VQA performance is achievable with smaller decoders (<1B), and what adjustments (data, connector, pooling) are needed.

- MTVQA performance trails top baselines without analysis: investigate why multilingual text-centric VQA lags and whether targeted training (fonts, multi-script OCR, layout-heavy data) improves results.

- Incomplete documentation on licenses and ethics: detail dataset licensing, consent, known biases, and governance practices for multilingual and document data.
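As a starting point for the complexity-accounting item above, the following back-of-the-envelope Python sketch counts visual tokens with and without 2×2 pooling. The numbers are assumptions, not figures reported by the paper: 27 patches per side is inferred from a 378-pixel crop and the 14-pixel patch size suggested by the encoder name, odd grids are assumed to be padded up before pooling, one thumbnail view is added, and special tokens such as <im_col> are ignored. All function names are hypothetical.

```python
import math


def tokens_per_view(patches_per_side: int, pool: int = 2) -> int:
    """Tokens for one tile or thumbnail after pool x pool merging (pool=1: no pooling)."""
    side = math.ceil(patches_per_side / pool)  # assumption: pad odd grids up
    return side * side


def visual_tokens(num_tiles: int, patches_per_side: int, pool: int = 2,
                  thumbnail: bool = True) -> int:
    """Total visual tokens for all tiles plus an optional thumbnail view."""
    views = num_tiles + (1 if thumbnail else 0)
    return views * tokens_per_view(patches_per_side, pool)


def attention_pairs(seq_len: int) -> int:
    """Query-key pairs in full self-attention, which grows quadratically."""
    return seq_len * seq_len


if __name__ == "__main__":
    for tiles in (4, 12, 24):
        raw = visual_tokens(tiles, 27, pool=1)
        pooled = visual_tokens(tiles, 27, pool=2)
        ratio = attention_pairs(raw) / attention_pairs(pooled)
        print(f"{tiles:2d} tiles: {raw} -> {pooled} tokens, "
              f"{ratio:.1f}x fewer attention pairs")
```

Under these assumptions, 12 tiles plus a thumbnail give 9477 tokens unpooled and 2548 pooled. The reduction is about 3.7× rather than exactly 4× because of the assumed padding of the 27×27 grid, and the number of attention pairs among visual tokens shrinks by roughly the square of that factor.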

Glossary

- Attention-pooling connector: The module that links the vision encoder to the language model. It uses attention to merge neighboring visual tokens (2×2 blocks in this paper) into single tokens, cutting the visual token count by 4× and enabling efficient processing of high-resolution images. Example: "The model couples a SigLIP2 vision encoder with a Qwen3 language backbone through an attention-pooling connector that enables token-efficient processing of arbitrary-resolution images."

- Multimodal reasoning: Reasoning that involves multiple types of data, such as text and images, to make informed decisions or answer questions. Example: "Recent VLMs have achieved strong results on visual question answering (VQA), OCR, and multimodal reasoning."

- OCRBench: A benchmark that evaluates the model's performance in Optical Character Recognition tasks, which involves reading text from images. Example: "https://huggingface.co/jinaai/jina-vlm-v1 achieves state-of-the-art performance on multilingual multimodal benchmarks including MMMB and Multilingual MMBench, demonstrating that small models can excel at cross-lingual visual understanding without sacrificing general capabilities."

- PyramidDrop: A token compression method that reduces the redundancy of visual tokens by discarding low-information regions. Example: "Images often contain low-information regions (e.g., sky backgrounds), making visual tokens highly redundant. Token compression methods address this."

- Dynamic High-Resolution Tiling: A technique for handling high-resolution images by splitting them into smaller tiles that overlap, allowing detailed processing without excessive computational cost. Example: "Token compression methods address this \citep{fastv,prumerge,visionzip,pyramiddrop}. \citet{internvl_1_5} develop Dynamic High-Resolution Tiling..."

- Multimodal instruction tuning: A tuning strategy that involves adjusting a model to follow instructions across different data types, like text and images. Example: "Training strategies vary: \citet{qwen_2_vl, internvl_2_5} alternate between multimodal instruction tuning and general training..."

- 2×2 attention pooling: A specialized form of pooling that clusters tokens into groups of four, then applies attention mechanisms to summarize these clusters. Example: "Attention pooling is then computed as: $\mathbf{H}_{\text{pooled}} = (\text{softmax}\left(\frac{\mathbf{Q}\mathbf{W}_Q(\mathbf{H}_{\text{concat}}\mathbf{W}_K)^\top}{\sqrt{d_k}}\right)\mathbf{H}_{\text{concat}}\mathbf{W}_V) \mathbf{W}_O \in \mathbb{R}^{M \times d_v}$..."

- SigLIP2-So400M/14-384: The vision encoder used in the model, a 27-layer Vision Transformer that turns an image into a grid of patch features. Example: "The vision encoder, SigLIP2-So400M/14-384, is a 27-layer Vision Transformer..."

- SwiGLU: A variant of the Gated Linear Unit (GLU) that uses the Swish (SiLU) activation in its gate; it is widely used in transformer feed-forward layers and appears here as the connector's projection. Example: "Visual tokens are combined with text embeddings for the Qwen3 decoder \citep{qwen3}."

- 2.4B parameter VLM: Describes the size and complexity of the vision-language model in terms of parameters, where 'B' stands for billion. Example: "This work introduces https://huggingface.co/jinaai/jina-vlm-v1, a 2.4B parameter VLM that addresses both challenges..."
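The 2×2 attention pooling formula quoted above can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions, not the released implementation: it uses a single attention head, takes the mean of each 2×2 group as the query (the mean-as-query design is mentioned in the knowledge gaps list), and uses random weights. The function names `group_2x2` and `attention_pool` are hypothetical.

```python
import numpy as np


def group_2x2(grid: np.ndarray) -> np.ndarray:
    """(H, W, d) patch grid -> (M, 4, d) groups of 2x2 neighbors, M = (H/2)*(W/2)."""
    h, w, d = grid.shape
    if h % 2 or w % 2:
        raise ValueError("grid height and width must be even")
    g = grid.reshape(h // 2, 2, w // 2, 2, d).transpose(0, 2, 1, 3, 4)
    return g.reshape(-1, 4, d)


def attention_pool(groups, w_q, w_k, w_v, w_o):
    """Merge each group of 4 tokens into 1 token with single-head attention."""
    q = groups.mean(axis=1, keepdims=True) @ w_q                # (M, 1, d_k)
    k = groups @ w_k                                            # (M, 4, d_k)
    v = groups @ w_v                                            # (M, 4, d_h)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(k.shape[-1])    # (M, 1, 4)
    scores = scores - scores.max(axis=-1, keepdims=True)        # stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    pooled = (weights @ v)[:, 0, :]                             # (M, d_h)
    return pooled @ w_o                                         # (M, d_v)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, d_k, d_h, d_v = 8, 5, 7, 3          # illustrative sizes only
    grid = rng.normal(size=(4, 6, d))      # 4x6 grid of patch features
    out = attention_pool(
        group_2x2(grid),
        rng.normal(size=(d, d_k)),
        rng.normal(size=(d, d_k)),
        rng.normal(size=(d, d_h)),
        rng.normal(size=(d_h, d_v)),
    )
    print(out.shape)  # 24 patches become 6 pooled tokens of width d_v
```

Each output row is a weighted average of the four value vectors in its group, which is why the token count drops by 4× while the attention weights decide which of the four patches contributes most.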

Practical Applications

Practical Applications of the jina-vlm-v1 Paper

The paper introduces a small, open 2.4B multilingual vision-language model (VLM) with an efficient arbitrary-resolution pipeline and attention-based token pooling that reduces visual tokens by 4×. Below are actionable applications derived from its methods, training recipe, and empirical performance.

Immediate Applications

- Multilingual document understanding and OCR automation

  - Description: Extract and answer questions from text-rich images (invoices, contracts, forms, IDs) across 30+ languages; strong performance on DocVQA, TextVQA, and OCRBench facilitates robust information extraction.

  - Sectors: finance, government, logistics, healthcare, insurance

  - Tools/products/workflows: “Multilingual DocAI” service; RPA pipelines for accounts payable; KYC/AML doc readers; claims processing assistants

  - Assumptions/dependencies: High-resolution images benefit from increased tile budget; privacy/PII controls needed; not safety-certified; domain-specific post-processing (field validation) required.

- Chart and infographic Q&A for business analytics

  - Description: Answer questions about charts and infographics, summarize insights, and detect anomalies; top performance on ChartQA/AI2D supports reliable visual analytics assistance.

  - Sectors: finance, energy, operations, media

  - Tools/products/workflows: BI assistant plugin for Tableau/PowerBI; “ChartQA Copilot” that explains dashboards; report validators

  - Assumptions/dependencies: Visual reasoning may miss dataset context; human-in-the-loop for critical decisions; standardized prompts improve accuracy.

- Screenshot understanding for software QA and localization

  - Description: Parse UI text, detect layout issues, and triage bugs from screenshot attachments in many languages.

  - Sectors: software, UX, localization

  - Tools/products/workflows: “Screenshot QA Assistant” integrated with Jira/GitHub; localization QA bot for multi-language UIs

  - Assumptions/dependencies: Works best with single images; multi-image workflow support is limited; consistent screenshot quality improves results.

- Multilingual customer support co-pilot

  - Description: Handle user-submitted photos (error screens, device panels), summarize problems, and suggest fixes across languages.

  - Sectors: consumer electronics, telco, fintech

  - Tools/products/workflows: Call-center chatbots; ticket triage assistant with image + text intake

  - Assumptions/dependencies: Safety/alignment not emphasized; human escalation required; domain-specific fine-tuning improves reliability.

- Accessibility: alt-text generation and on-image text translation

  - Description: Generate descriptive captions for images and translate embedded text (signs, labels) for assistive technologies.

  - Sectors: public sector, education, media, web accessibility

  - Tools/products/workflows: Browser and CMS plugins for alt-text; “Alt-Text Translator” for multilingual sites

  - Assumptions/dependencies: OCR accuracy depends on image quality; editorial oversight recommended for public content.

- E-commerce product catalog enrichment

  - Description: Extract attributes and compliance statements from packaging/labels in various languages; generate product Q&A.

  - Sectors: retail, FMCG, marketplaces

  - Tools/products/workflows: “Label Reader” pipeline; catalog QA assistant; attribute normalization service

  - Assumptions/dependencies: Requires mapping to schema/taxonomy; high-res packaging images; guardrails for regulated claims.

- Compliance and KYC document review

  - Description: Verify consistency in IDs, certificates, and forms across languages; detect mismatches between text and image.

  - Sectors: finance, fintech, travel, HR

  - Tools/products/workflows: “KYC Document Reader” with discrepancy flags; onboarding assistants

  - Assumptions/dependencies: Not a fraud detector; must integrate with liveness/fraud checks; regulatory approval needed in some jurisdictions.

- Visual RAG for enterprise knowledge

  - Description: Combine jina-vlm-v1 with document retrieval (e.g., ColPali) to answer queries grounded in visual documents (manuals, diagrams, infographics).

  - Sectors: manufacturing, field service, education, enterprise knowledge management

  - Tools/products/workflows: “Visual RAG Pipeline” for doc hubs; search over scanned PDFs with answer citations

  - Assumptions/dependencies: Requires indexing pipeline and citation tracking; careful prompt design for grounding.

- Media and publishing: captioning, summarization, and localization

  - Description: Generate multilingual captions and summaries for photos/infographics; assist in localizing visual assets.

  - Sectors: newsrooms, marketing, public communications

  - Tools/products/workflows: Editorial co-pilot; “Infographic Localizer” for Adobe/Figma

  - Assumptions/dependencies: Editorial review necessary; brand/style constraints require post-editing.

- Supply chain and logistics label reading

  - Description: Read shipping labels, safety signs, and compliance marks across languages; streamline intake and routing.

  - Sectors: logistics, manufacturing, warehousing

  - Tools/products/workflows: WMS integrations; “Label-to-Route” assistant; receiving dock triage

  - Assumptions/dependencies: Image quality in warehouse environments; domain-specific label formats.

- Academic and research tooling

  - Description: Reuse the attention-pooling connector and multilingual training recipe to study token efficiency, catastrophic forgetting mitigation, and cross-lingual VQA.

  - Sectors: academia, research labs

  - Tools/products/workflows: Open-source training scripts; connector libraries; benchmark suites (VLMEvalKit)

  - Assumptions/dependencies: Compute availability for replication; data curation impacts cross-lingual outcomes.

- Policy evaluation and digital inclusion pilots

  - Description: Prototype multilingual visual assistance for public services (forms, signage) to evaluate equitable access.

  - Sectors: public sector, NGOs

  - Tools/products/workflows: “Multilingual Forms Assistant” kiosks; pilot programs for service navigation

  - Assumptions/dependencies: Privacy and accessibility compliance; human assistance fallback; domain-specific fine-tuning.

Long-Term Applications

- On-device/edge AR translation and assistance

  - Description: Quantize and compress the pipeline for mobile or smart-glasses to translate signs/labels in real time and provide contextual assistance.

  - Sectors: consumer devices, travel, accessibility

  - Tools/products/workflows: “On-device Visual Translator” with attention pooling; AR overlays

  - Assumptions/dependencies: Further compression (4-bit/INT8), latency optimization, power constraints; improved safety/alignment.

- High-resolution document automation at scale

  - Description: Deploy dynamic tiling and token pooling for bulk digitization (legal archives, government records) with multilingual extraction and QA.

  - Sectors: government, legal, enterprise records management

  - Tools/products/workflows: “Arbitrary-Resolution DocAI” with adaptive tile budgets; audit trails and confidence scoring

  - Assumptions/dependencies: Storage/compute scaling; robust error handling; domain-specific validators.

- Multimodal localization and creative tooling

  - Description: End-to-end infographic/diagram localization including typography-aware text extraction/reflow and culturally adapted visuals.

  - Sectors: marketing, education, publishing

  - Tools/products/workflows: Figma/Adobe plugins that integrate OCR, layout preservation, and translation memory

  - Assumptions/dependencies: Advanced layout understanding and rendering; human creative oversight.

- Multilingual multi-image and video reasoning

  - Description: Extend from single images to multi-image sequences/video for comparative reasoning, diagnostics, and tutorials across languages.

  - Sectors: robotics, maintenance, education, media

  - Tools/products/workflows: “Procedure Coach” that reasons over image sequences; video Q&A assistants

  - Assumptions/dependencies: Training data for multi-image/video; memory-efficient interleaving; stronger temporal grounding.

- Safety-critical clinical and industrial use

  - Description: Assist with pathology/radiology diagrams and industrial schematics with regulated workflows and deterministic guardrails.

  - Sectors: healthcare, manufacturing, energy

  - Tools/products/workflows: “Clinical Diagram Assistant” with validated pathways; “Plant Diagram Interpreter” for maintenance

  - Assumptions/dependencies: Domain-specific fine-tuning, rigorous safety/alignment, regulatory approvals, auditability.

- Autonomous field-service agents with visual reasoning

  - Description: Agents that diagnose equipment from photos, read multilingual labels, and guide repairs.

  - Sectors: telecom, utilities, manufacturing

  - Tools/products/workflows: “Multilingual Field Technician Copilot” integrating RAG + tool use

  - Assumptions/dependencies: Reliability and tool integration; offline capability; robust error recovery.

- Token-efficient connectors adopted across VLM ecosystem

  - Description: Standardize attention-pooling connectors to reduce visual tokens for arbitrary-resolution inputs in many open VLMs.

  - Sectors: software, AI platforms

  - Tools/products/workflows: “Attention-Pooling Connector Library” for Hugging Face/Transformers; inference SDKs

  - Assumptions/dependencies: Compatibility across encoders/LLMs; benchmarking to validate trade-offs.

- Education: advanced diagram-centric math tutoring

  - Description: Interactive tutoring that deeply reasons over diagrams and visual math problems with step-by-step explanations in many languages.

  - Sectors: education, EdTech

  - Tools/products/workflows: “Diagram Math Tutor” with curriculum alignment; explainable reasoning logs

  - Assumptions/dependencies: Additional training for mathematical reasoning; guardrails for correctness; teacher oversight.

- National-scale accessibility and service kiosks

  - Description: Visual assistance stations that help citizens navigate forms and procedures in multiple languages.

  - Sectors: public sector, public health

  - Tools/products/workflows: “Service Navigation Kiosks” combining OCR + VQA + translation

  - Assumptions/dependencies: Privacy and compliance; robust fallback to human support; funding and infrastructure.

- Enterprise visual knowledge hubs

  - Description: Unified search and Q&A over diagrams, scanned manuals, and text-rich images with multilingual support and provenance.

  - Sectors: manufacturing, aerospace, energy

  - Tools/products/workflows: “Visual SharePoint Search” with citations and approvals

  - Assumptions/dependencies: Indexing pipelines; document access controls; long-term maintenance.

- Robotics and industrial inspection

  - Description: Assist robots/inspectors in reading gauges, labels, and procedural diagrams in diverse languages and conditions.

  - Sectors: robotics, industrial IoT

  - Tools/products/workflows: “Inspection Copilot” with multimodal reasoning and task planning

  - Assumptions/dependencies: Sensor integration, environment variability, multi-image/video reasoning improvements.

Cross-cutting assumptions and dependencies

- Compute and cost: Arbitrary-resolution tiling scales memory linearly with tile count; attention pooling reduces tokens by 4×, but high-res processing still requires careful cost control (quantization, batching).

- Safety/alignment: The paper notes limited emphasis on safety-critical alignment; regulated use cases require guardrails, audits, and human oversight.

- Data quality and domain adaptation: Performance depends on image clarity and domain-specific fine-tuning (e.g., medical, legal); prompt standardization improves consistency.

- Privacy and compliance: PII in documents demands secure handling, redaction, and policy compliance (GDPR/CCPA).

- Multi-image/video support: Current performance is modest for multi-image reasoning; applications needing temporal or comparative context may require further training and architectural updates.
