- The paper introduces a content-aware texturing method for Gaussian Splatting that decouples appearance from geometry to enhance efficiency.

- It employs progressive texel size adaptation and resolution-aware primitive splitting to optimize texture mapping based on scene complexity.

- Experimental results show competitive or improved SSIM, PSNR, and LPIPS scores while using an order of magnitude fewer primitives than prior methods.

Content-Aware Texturing for Gaussian Splatting: An Expert Review

Introduction and Motivation

Gaussian Splatting has recently become a leading paradigm for 3D scene reconstruction and real-time novel view synthesis, representing scenes as collections of semi-transparent ellipsoidal primitives. However, the standard formulation gives each primitive a single color sample, which makes fine appearance detail expensive to represent: intricate textures must be encoded with many small primitives even where the underlying geometry is simple. This introduces unnecessary redundancy and limits scalability. The authors address this limitation with a content-aware texturing scheme for 2D Gaussian primitives that draws on traditional texture mapping but allocates texture parameters in a data-driven, adaptive manner.

Technical Approach

The core contribution is a textured Gaussian primitive whose per-primitive texture map has a texel size defined in world space, independent of the primitive's scale, and adapted to scene content during optimization. This decouples appearance parameters from geometric ones, so each can be refined independently and economically. The texel size of each primitive is bounded below by the world-space footprint of a pixel in the training view that observes the primitive at the highest resolution: texels finer than that cannot be supervised by any image and would only overparameterize appearance.
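The lower bound on texel size can be made concrete with a pinhole camera model. The following is a minimal sketch, not the authors' code: it assumes each training view is summarized by a focal length in pixels and the depth of the primitive's center, and the names `View`, `min_texel_size`, and `texture_resolution` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class View:
    focal_px: float  # focal length in pixels (pinhole camera, assumed)
    depth: float     # depth of the primitive's center in this view's camera frame


def pixel_footprint(view: View) -> float:
    """World-space width covered by one pixel at the primitive's depth."""
    return view.depth / view.focal_px


def min_texel_size(views: Sequence[View]) -> float:
    """Lower bound on texel size: one pixel in the view that sees the primitive
    at the highest resolution, i.e. the smallest pixel footprint."""
    return min(pixel_footprint(v) for v in views)


def projected_texel_px(texel_size: float, view: View) -> float:
    """Width of one texel, in pixels, when projected into the given view."""
    return texel_size / pixel_footprint(view)


def texture_resolution(extent: float, texel_size: float) -> int:
    """Number of texels needed along one axis to cover a world-space extent."""
    return max(1, math.ceil(extent / texel_size))


if __name__ == "__main__":
    views = [View(focal_px=1024.0, depth=2.0), View(focal_px=1024.0, depth=8.0)]
    s = min_texel_size(views)
    print(s, [projected_texel_px(s, v) for v in views], texture_resolution(0.125, s))
    # prints: 0.001953125 [1.0, 0.25] 64
```

In this example a texel spans exactly one pixel in the closer view and a quarter pixel in the farther one; making it any smaller would add parameters that no training image can constrain.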

Textures contribute a spatially varying RGB offset added to the base color modeled with spherical harmonics (SH). Because the texture is view-independent, it encodes only diffuse appearance (albedo modulated by irradiance), while view-dependent effects remain in the SH term. The mapping from intersection points to UV texture coordinates is constructed so that the texture's spatial frequencies are invariant to changes in primitive scale and rotation, which avoids distorting the texture's spectrum during optimization.

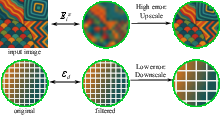

Figure 1: Naive texture mapping induces stretching upon scale changes, while content-aware texturing preserves appearance by fixing texel size in world space.
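The difference illustrated in Figure 1 can be reproduced with a toy lookup. This is a hedged sketch under simplifying assumptions, not the paper's mapping: the intersection point is expressed in the primitive's unit tangent frame (so coordinates are in world units), and the texture is a square `res x res` grid. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Sequence[float]


def to_local(p: Vec3, center: Vec3, t_u: Vec3, t_v: Vec3) -> Tuple[float, float]:
    """Express a point on the primitive's plane in its tangent frame.
    t_u and t_v are unit vectors, so the result stays in world units."""
    d = [p[k] - center[k] for k in range(3)]
    return (sum(d[k] * t_u[k] for k in range(3)),
            sum(d[k] * t_v[k] for k in range(3)))


def naive_texel(uv_local, scale, res):
    """Naive mapping: coordinates normalized by the primitive's scale, so the
    texture stretches whenever the scale changes."""
    u = (uv_local[0] / scale[0] + 1.0) / 2.0  # [-scale, scale] -> [0, 1]
    v = (uv_local[1] / scale[1] + 1.0) / 2.0
    return (min(res - 1, max(0, int(u * res))),
            min(res - 1, max(0, int(v * res))))


def world_texel(uv_local, texel_size, res):
    """World-space mapping: a res x res grid centered on the primitive with a
    fixed spacing in world units; the primitive's scale does not enter."""
    return (math.floor(uv_local[0] / texel_size + res / 2),
            math.floor(uv_local[1] / texel_size + res / 2))


if __name__ == "__main__":
    q = to_local((0.3, -0.1, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(naive_texel(q, (0.5, 0.5), 8), naive_texel(q, (1.0, 1.0), 8),
          world_texel(q, 0.125, 8))
    # prints: (6, 3) (5, 3) (6, 3)
```

With scale (0.5, 0.5) both mappings select texel (6, 3) for the point (0.3, -0.1); doubling the scale shifts the naive lookup to (5, 3), stretching the texture, while the world-space lookup is unchanged. In a full renderer the grid would also need to grow when the primitive does, which is what the texel-size adaptation below manages.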

Progressive Texel Size Adaptation

Texel size adaptation is formulated as a progressive optimization routine, governed by two operations:

- Downscaling: If a primitive's texture contains little high-frequency content, i.e., the error introduced by low-pass filtering it falls below a threshold τ_ds, its texel size is increased and the texture map is downsampled, reducing the parameter count.

- Upscaling: Primitives with the highest error (the top 10%, ranked by the contribution-weighted sum of per-pixel errors) have their texel size halved and their texture maps upsampled, increasing the detail they can represent.
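The two rules above can be sketched on a single-channel texture stored as a list of rows. This is an illustration under stated assumptions, not the authors' implementation: low-pass filtering is approximated by 2x2 average pooling followed by nearest-neighbor upsampling, the error is a mean absolute difference, and per-primitive contributions are given as hypothetical `{pixel: blending_weight}` dictionaries.

```python
def downsample2(tex):
    """2x2 average pooling (assumes even dimensions)."""
    h, w = len(tex), len(tex[0])
    return [[(tex[2 * i][2 * j] + tex[2 * i][2 * j + 1]
              + tex[2 * i + 1][2 * j] + tex[2 * i + 1][2 * j + 1]) / 4.0
             for j in range(w // 2)] for i in range(h // 2)]


def upsample2(tex):
    """Nearest-neighbor 2x upsampling."""
    return [[tex[i // 2][j // 2] for j in range(2 * len(tex[0]))]
            for i in range(2 * len(tex))]


def low_pass_error(tex):
    """Mean absolute error between a texture and its low-passed copy."""
    rec = upsample2(downsample2(tex))
    h, w = len(tex), len(tex[0])
    return sum(abs(tex[i][j] - rec[i][j]) for i in range(h) for j in range(w)) / (h * w)


def should_downscale(tex, tau_ds):
    """Downscale when low-pass filtering loses almost nothing."""
    return low_pass_error(tex) < tau_ds


def weighted_error(contribs, pixel_err):
    """Per-primitive error: sum over pixels of blending weight x pixel error."""
    return [sum(w * pixel_err[p] for p, w in c.items()) for c in contribs]


def select_for_upscale(errors, fraction=0.10):
    """Indices of the primitives in the top `fraction` by error."""
    k = max(1, int(len(errors) * fraction))
    return sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)[:k]
```

A constant texture has zero low-pass error and is downscaled, whereas a one-texel checkerboard loses everything under 2x2 averaging (error 0.5) and keeps its resolution.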

The ratio of texel size to pixel size (t2pr) is discretized to powers of two and updated at regular intervals throughout optimization. This keeps resource allocation aligned with both geometric and appearance complexity as the optimization progresses.
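Storing t2pr as a power of two reduces each up/downscaling decision to an integer step. A minimal sketch, assuming t2pr = 2**level with level ≥ 0 (so texels stay at least pixel-sized) and a hypothetical upper cap `max_level`; the function names are illustrative, not from the paper.

```python
import math


def snap_t2pr(ratio: float) -> int:
    """Snap a continuous texel-to-pixel ratio to the nearest power of two
    (nearest in log2), never below 1."""
    return 2 ** max(0, round(math.log2(ratio)))


def update_level(level: int, action, max_level: int) -> int:
    """t2pr = 2**level. 'down' (downscale the texture) doubles the texel size,
    'up' (upscale) halves it; the level is clamped to [0, max_level]."""
    if action == "down":
        level += 1
    elif action == "up":
        level -= 1
    return min(max(level, 0), max_level)


def texel_size(level: int, pixel_footprint: float) -> float:
    """World-space texel size for t2pr = 2**level."""
    return (2 ** level) * pixel_footprint
```

Because every change is a factor of two, texture maps can be resampled by exact 2x pooling or duplication, and neighboring levels never need fractional texel alignment.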

Figure 2: The adaptive up/down-scaling process enables efficient balancing of texel resolution with scene frequency content.

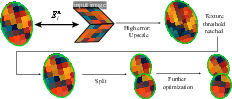

Resolution-Aware Primitive Management

Geometric and appearance errors are distinguished during optimization. If appearance error cannot be reduced further by upscaling, i.e., a primitive's texture resolution has saturated, the remaining error is attributed to geometric misfit. The method then performs resolution-aware splitting of primitives with excessive texture resolution, spawning localized children with smaller scale and smaller texture maps; this is critical for modeling high-frequency geometry accurately without redundancy.

Figure 3: Primitives subject to high error and saturated texture resolution are split, producing finer geometric details without unbounded parameter growth.
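One way to picture resolution-aware splitting is a 2x2 subdivision of a planar primitive in its tangent plane. This is a deliberately simplified, hypothetical sketch: it ignores the Gaussian opacity falloff and the exact child placement used in the paper, and assumes each child covers one quadrant, keeps the parent's world-space texel size, and therefore inherits the matching quarter of the texture.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Prim:
    center: tuple[float, float]       # position in the tangent plane (world units)
    half_extent: tuple[float, float]  # half-size along u and v
    texture: list[list[float]]        # rows follow v, columns follow u


def split4(p: Prim) -> list[Prim]:
    """Split into 2x2 children. Texel size is fixed in world space, so each
    child takes the quadrant of texels it covers (half the resolution per axis)."""
    h, w = len(p.texture), len(p.texture[0])
    half = (p.half_extent[0] / 2, p.half_extent[1] / 2)
    children = []
    for qi in range(2):      # row quadrant (v axis), 0 = lower v
        for qj in range(2):  # column quadrant (u axis), 0 = lower u
            rows = p.texture[qi * h // 2:(qi + 1) * h // 2]
            tex = [r[qj * w // 2:(qj + 1) * w // 2] for r in rows]
            cx = p.center[0] + (qj - 0.5) * p.half_extent[0]
            cy = p.center[1] + (qi - 0.5) * p.half_extent[1]
            children.append(Prim((cx, cy), half, tex))
    return children
```

The total number of texels is preserved by the split; what changes is that each child can now move, rotate, and scale independently, which is how residual geometric error gets addressed.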

Implementation and Resource Efficiency

The algorithm is implemented on top of the 3DGS codebase using 2D planar primitives, with custom data structures (“jagged tensors”) that store adaptive, per-primitive texture grids of varying size. Per-ray texture queries add moderate overhead: training takes roughly 1.5–2× longer and rendering is about 25% slower than non-textured 2DGS, in exchange for a much smaller parameter count and a more efficient representation. Regularization promotes texture sparsity, and low-contribution primitives are culled. Because texel values are constrained by a sigmoid to a bounded range, they lend themselves to effective compression, e.g., via k-means clustering.
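A jagged tensor can be understood as one flat parameter buffer plus per-primitive offsets and shapes, so textures of different sizes share a single allocation. The sketch below is hypothetical and CPU-only: it stores one channel per texel (RGB would store three) and decodes raw parameters with a sigmoid into (0, 1), an assumed range that may differ from the paper's offset encoding.

```python
import math


class JaggedTextures:
    """Hypothetical jagged storage: all texels in one flat list, indexed
    through per-primitive offsets and (rows, cols) shapes."""

    def __init__(self, shapes):
        self.shapes = list(shapes)  # (rows, cols) per primitive
        self.offsets = [0]          # offsets[i] = first texel of primitive i
        for rows, cols in self.shapes:
            self.offsets.append(self.offsets[-1] + rows * cols)
        self.logits = [0.0] * self.offsets[-1]  # unconstrained raw parameters

    def index(self, prim, row, col):
        """Flat index of texel (row, col) of primitive `prim`."""
        rows, cols = self.shapes[prim]
        if not (0 <= row < rows and 0 <= col < cols):
            raise IndexError("texel outside this primitive's texture")
        return self.offsets[prim] + row * cols + col

    def value(self, prim, row, col):
        """Sigmoid-decoded texel value, bounded to (0, 1)."""
        x = self.logits[self.index(prim, row, col)]
        return 1.0 / (1.0 + math.exp(-x))
```

Resizing one primitive's texture during up/downscaling means rebuilding the offsets, which is cheap relative to an optimization step; on a GPU the same layout maps to a single device buffer.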

Experimental Results

Evaluation on thirteen scenes from the Mip-NeRF360, Deep Blending, and Tanks & Temples datasets shows qualitative and quantitative performance (SSIM, PSNR, LPIPS) that is competitive with or superior to previous 2DGS-based texturing schemes such as BBSplat, which uses a fixed texture resolution per primitive, and GSTex, which uses a static distribution of texels.

Critically, the method reaches this fidelity with an order of magnitude fewer primitives and parameters, validating the efficiency of its content-aware allocation.

Figure 4: Splitting and densification reduce error by adding primitives only where geometric detail requires them, keeping parameter growth tractable.

Extensive ablations show that when all methods are constrained to matched parameter budgets, competing methods lose substantial image quality, whereas this method still reconstructs high-frequency detail robustly thanks to its adaptive allocation.

Limitations and Prospects

The approach inherits some limitations of 2DGS, notably lower rendering quality than methods based on 3D Gaussians. Anti-aliasing is not handled explicitly, although the minimal t2pr setting and the overlap of primitives mitigate most artifacts for views within the convex hull of the input cameras. The implementation is also not yet optimized to exploit hardware-accelerated texture operations (e.g., GPU texture fetches), which leaves room for speedups, particularly on low-end hardware.

The separation of appearance and geometry parameters gives fine-grained control over the tradeoff between geometric precision and texture representation. Future work could extend the approach to 3D primitives with volumetric texturing or add more advanced anti-aliasing mechanisms.

Conclusion

This work presents a principled, adaptive content-aware texturing framework for Gaussian Splatting that overcomes inefficiencies of prior methods by decoupling geometry and appearance parameter allocation. The proposed data-driven optimization routines balance primitive and texel budgets in response to scene content, achieving high-fidelity novel view synthesis with significantly reduced resource consumption. The techniques introduced represent a meaningful expansion of the design space for primitive-based graphics and inverse rendering, with promising implications for both compact model deployment and real-time rendering efficiency.