AirSim360: A Panoramic Simulation Platform within Drone View (2512.02009v1)

Abstract: The field of 360-degree omnidirectional understanding has been receiving increasing attention for advancing spatial intelligence. However, the lack of large-scale and diverse data remains a major limitation. In this work, we propose AirSim360, a simulation platform for omnidirectional data from aerial viewpoints, enabling wide-ranging scene sampling with drones. Specifically, AirSim360 focuses on three key aspects: a render-aligned data and labeling paradigm for pixel-level geometric, semantic, and entity-level understanding; an interactive pedestrian-aware system for modeling human behavior; and an automated trajectory generation paradigm to support navigation tasks. Furthermore, we collect more than 60K panoramic samples and conduct extensive experiments across various tasks to demonstrate the effectiveness of our simulator. Unlike existing simulators, our work is the first to systematically model the 4D real world under an omnidirectional setting. The entire platform, including the toolkit, plugins, and collected datasets, will be made publicly available at https://insta360-research-team.github.io/AirSim360-website.

Explain it Like I'm 14

AirSim360, in simple terms

Overview: What is this paper about?

This paper introduces AirSim360, a virtual world for testing and training drones that can see in all directions (360 degrees). Think of it like a super realistic video game, but built for research. It helps drones learn to navigate, understand scenes, and interact with people by providing lots of high-quality, panoramic data and labels.

Key objectives: What were the researchers trying to do?

The researchers wanted to make it easier and faster to train smart drones. Their goals were:

- Create accurate 360-degree images and labels that match how panoramic cameras really work.

- Simulate realistic people walking, talking, and moving, and label their body positions (keypoints).

- Automatically generate smooth, realistic flight paths for drones.

- Build and share large datasets so others can train and test AI models.

Methods: How does AirSim360 work?

You can imagine AirSim360 as a toolkit built on top of a game engine (Unreal Engine) that lets drones see “with eyes all around” and move realistically. Here’s the approach in everyday language:

- Panoramic vision by stitching six cameras:

- They point six virtual cameras in different directions: front, back, left, right, up, and down.

- These six pictures are stitched into a single 360-degree image using a format called equirectangular projection (ERP). It’s like turning a globe into a flat world map.

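- To keep this fast, they copy images directly on the graphics card (GPU) using Unreal Engine's Render Hardware Interface (RHI), so the pictures never take a slow detour through the main processor. It's like using a super quick photocopier built right into the graphics card.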
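The stitching step can be sketched as an inverse mapping: for every ERP pixel, compute its viewing direction, find which of the six cube faces that ray hits, and sample that face. This is a minimal pure-Python sketch, not AirSim360's RHI/C++ implementation; the axis convention (x forward, y right, z up), the face names and orientations, and nearest-neighbour sampling are all assumptions made for illustration.

```python
import math

# Assumed axis convention (illustrative, not necessarily AirSim360's):
# x = forward, y = right, z = up. Longitude 0 points forward.

def erp_to_direction(u, v, width, height):
    """Unit viewing direction for the centre of ERP pixel (u, v)."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi   # [-pi, pi), left to right
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi  # +pi/2 at top, -pi/2 at bottom
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))

def direction_to_cube_face(x, y, z):
    """Return (face, s, t): the face the ray hits and its coordinates in [0, 1]."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:          # forward/backward axis dominates
        face = "front" if x > 0 else "back"
        major, a, b = ax, (y if x > 0 else -y), -z
    elif ay >= az:                     # right/left axis dominates
        face = "right" if y > 0 else "left"
        major, a, b = ay, (-x if y > 0 else x), -z
    else:                              # up/down axis dominates
        face = "up" if z > 0 else "down"
        major, a, b = az, y, (x if z > 0 else -x)
    # Intersect the ray with the face plane at distance 1, then map [-1, 1] to [0, 1].
    return face, (a / major + 1.0) / 2.0, (b / major + 1.0) / 2.0

def stitch_erp(faces, face_size, width, height):
    """Nearest-neighbour resampling of six square faces into a width x height ERP grid.

    `faces` maps a face name to a face_size x face_size list of pixel rows.
    """
    erp = []
    for v in range(height):
        row = []
        for u in range(width):
            face, s, t = direction_to_cube_face(*erp_to_direction(u, v, width, height))
            i = min(int(t * face_size), face_size - 1)  # row within the face
            j = min(int(s * face_size), face_size - 1)  # column within the face
            row.append(faces[face][i][j])
        erp.append(row)
    return erp
```

Filling each face with its own name and stitching an 8×4 grid places the four side faces around the middle rows and "up"/"down" in the top and bottom rows. In practice this per-pixel lookup is usually done on the GPU with filtering; the Python loop is only for readability.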

- Depth redefined for 360°:

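- In normal photos, “depth” is measured straight ahead, along the camera's forward axis (this is called z-depth).

- In a 360° view there's no single “front,” so they measure depth along each pixel's own line of sight instead (the “slant range”), like stretching a tape measure from the camera straight out to whatever that pixel shows.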
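One way to relate the two depth definitions, assuming each of the six faces is a square pinhole camera with a 90° field of view, is to scale z-depth by the length of the pixel's ray. The sketch below is standard pinhole geometry, not code from AirSim360; the function names and the (s, t) face-coordinate convention are hypothetical.

```python
import math

def zdepth_to_slant_range(z_depth, s, t):
    """Convert z-depth at face coordinates (s, t) in [0, 1] into distance along the pixel's ray.

    Assumes a square pinhole face with a 90-degree field of view, so the
    normalised image-plane offsets a, b lie in [-1, 1] and the pixel's ray is
    (1, a, b) in camera space (forward component 1).
    """
    a = 2.0 * s - 1.0
    b = 2.0 * t - 1.0
    # z-depth is the forward component; scale it by the length of the ray (1, a, b).
    return z_depth * math.sqrt(1.0 + a * a + b * b)

def slant_range_to_zdepth(slant_range, s, t):
    """Inverse conversion, e.g. for comparing against perspective z-depth benchmarks."""
    a = 2.0 * s - 1.0
    b = 2.0 * t - 1.0
    return slant_range / math.sqrt(1.0 + a * a + b * b)
```

At the face centre the two definitions agree; at a face corner (s = t = 0) the slant range is √3 ≈ 1.73 times the z-depth, which is why mixing the two conventions across datasets distorts depth comparisons.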

- Pixel-level labels for understanding scenes:

- Semantic segmentation: giving each pixel a category (like road, building, tree). Think of it as coloring pixels by what they represent.

- Entity segmentation: marking each distinct object separately (each car, each person), like giving everything its own name tag.

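- They designed a special labeling method that can tell apart more objects than Unreal Engine's Stencil Buffer allows (it stores only 256 values, 0 to 255, per pixel), so even crowded scenes give each object its own tag.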
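The summary does not say how AirSim360 gets past the 256-value stencil limit. A widely used alternative in synthetic-data pipelines, shown here only as a hypothetical illustration and not as the paper's method, is to render each entity in a unique flat colour and pack its ID into the 24 RGB bits. Exact decoding assumes the ID pass is rendered unlit, without anti-aliasing or tone mapping.

```python
MAX_STENCIL_IDS = 256      # an 8-bit stencil value holds only 0..255
MAX_RGB_IDS = 1 << 24      # a 24-bit colour holds 16,777,216 distinct values

def id_to_rgb(instance_id):
    """Pack an integer entity ID into an (R, G, B) triple of 8-bit channels."""
    if not 0 <= instance_id < MAX_RGB_IDS:
        raise ValueError("instance_id must fit in 24 bits")
    return (instance_id >> 16) & 0xFF, (instance_id >> 8) & 0xFF, instance_id & 0xFF

def rgb_to_id(r, g, b):
    """Recover the entity ID from a rendered (R, G, B) pixel."""
    return (r << 16) | (g << 8) | b

def count_entities(mask, background_id=0):
    """Number of distinct non-background entities in a mask given as rows of RGB tuples."""
    ids = {rgb_to_id(*pixel) for row in mask for pixel in row}
    ids.discard(background_id)
    return len(ids)
```

For example, entity 300, which would not fit in the stencil range, is encoded as (0, 1, 44) and decodes back to 300.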

- Sensors and timing:

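- All virtual sensors (cameras, depth, labels) are triggered by one shared signal (an Unreal Engine Event Dispatcher), so they capture data at the same moment. This keeps the drone’s “memory” consistent while it’s moving, because the color image and its labels always describe the same instant.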
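The synchronization idea is a broadcast: one trigger carrying a frame index and timestamp is delivered to every bound sensor, so all captures share the same time stamp. The sketch below is a Python analogue of that pattern, not Unreal Engine's Event Dispatcher API; the class names, sensor names, and the 30 Hz clock are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class TriggerDispatcher:
    """Broadcasts one trigger (frame index, timestamp) to every bound sensor callback."""
    listeners: List[Callable[[int, float], None]] = field(default_factory=list)

    def bind(self, callback: Callable[[int, float], None]) -> None:
        self.listeners.append(callback)

    def broadcast(self, frame: int, timestamp: float) -> None:
        # Every listener receives the same values, so captures share one time stamp.
        for callback in self.listeners:
            callback(frame, timestamp)

@dataclass
class Sensor:
    name: str
    captures: List[Tuple[int, float]] = field(default_factory=list)

    def capture(self, frame: int, timestamp: float) -> None:
        # A real sensor would read back its render target here; this stub only records the trigger.
        self.captures.append((frame, timestamp))

dispatcher = TriggerDispatcher()
sensors = [Sensor("rgb_erp"), Sensor("depth_erp"), Sensor("entity_erp")]
for sensor in sensors:
    dispatcher.bind(sensor.capture)
for frame in range(3):
    dispatcher.broadcast(frame, frame / 30.0)  # hypothetical 30 Hz capture clock
```

This models only the broadcast; it says nothing about real timestamp jitter or drift inside the engine.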

- Simulating people (IPAS: Interactive Pedestrian-Aware System):

- People in the scene move and interact using behavior trees and state machines (tools that define how characters act, like “walk,” “chat,” or “take a phone call”).

- The system automatically logs 3D human keypoints (important body joints), so models can learn how people move.
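The walk/chat/phone-call logic can be sketched as a small state machine with message passing: a walking pedestrian who meets another sends a chat request and switches to chatting, and otherwise may start a phone call at random. This is a toy Python illustration, not IPAS's Blueprint implementation; the state names follow the summary, while the probabilities, durations, and message format are hypothetical.

```python
import random
from enum import Enum, auto

class State(Enum):
    WALKING = auto()
    CHATTING = auto()
    ON_PHONE_CALL = auto()

class Pedestrian:
    """Toy pedestrian state machine driven by neighbour checks and simple messages."""

    def __init__(self, name, phone_call_prob=0.05, activity_steps=3, seed=0):
        self.name = name
        self.state = State.WALKING
        self.phone_call_prob = phone_call_prob  # chance per step of starting a call while walking
        self.activity_steps = activity_steps    # how many steps a chat or call lasts
        self.remaining = 0
        self.inbox = []
        self.rng = random.Random(seed)

    def send(self, other, message):
        other.inbox.append((self.name, message))

    def _enter(self, state):
        self.state = state
        self.remaining = self.activity_steps

    def step(self, neighbours):
        """Advance one tick; `neighbours` are pedestrians currently within meeting distance."""
        got_request = any(msg == "chat" for _, msg in self.inbox)
        self.inbox.clear()
        if self.state is State.WALKING:
            if got_request:
                self._enter(State.CHATTING)
            elif neighbours:
                for other in neighbours:
                    self.send(other, "chat")
                self._enter(State.CHATTING)
            elif self.rng.random() < self.phone_call_prob:
                self._enter(State.ON_PHONE_CALL)
        else:
            # Chatting or on a call: count down, then resume walking.
            self.remaining -= 1
            if self.remaining <= 0:
                self.state = State.WALKING
        return self.state
```

A behavior tree would typically sit above this, deciding where each pedestrian walks, while the state machine handles these local transitions.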

- Automatic flight paths:

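- They use a method called Minimum Snap to turn a few chosen waypoints into a smooth, realistic route. Imagine placing dots on a map and letting the system draw a neat curve through them that a real drone could physically follow. (“Snap” is the fourth derivative of position, meaning how quickly the jerk changes; keeping it small avoids abrupt changes in the motor commands.)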
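Full Minimum Snap (Mellinger and Kumar, 2011) optimizes all segments jointly, leaving velocities and accelerations at interior waypoints free, and is solved as a quadratic program. The sketch below covers only the single-segment rest-to-rest special case, where the optimum has a closed form: a 7th-order polynomial whose velocity, acceleration, and jerk vanish at both ends. Chaining such segments gives a stop-at-every-waypoint path that is smooth but slower than the joint optimum. Function names and the fixed segment time are assumptions.

```python
def min_snap_rest_to_rest(p0, p1, duration, t):
    """Position and velocity at time t on the rest-to-rest minimum-snap segment p0 -> p1."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    tau = min(max(t / duration, 0.0), 1.0)
    # 7th-order basis: s(0)=0, s(1)=1, and s', s'', s''' vanish at both ends.
    s = 35 * tau**4 - 84 * tau**5 + 70 * tau**6 - 20 * tau**7
    ds = 140 * tau**3 * (1 - tau) ** 3  # ds/dtau
    pos = tuple(a + (b - a) * s for a, b in zip(p0, p1))
    vel = tuple((b - a) * ds / duration for a, b in zip(p0, p1))
    return pos, vel

def sample_waypoint_path(waypoints, segment_time, dt):
    """Stop-and-go chaining: one rest-to-rest segment between each pair of waypoints."""
    steps = max(1, int(round(segment_time / dt)))
    samples = []
    for p0, p1 in zip(waypoints, waypoints[1:]):
        for k in range(steps):
            pos, _ = min_snap_rest_to_rest(p0, p1, segment_time, k * segment_time / steps)
            samples.append(pos)
    samples.append(tuple(waypoints[-1]))
    return samples
```

By symmetry the segment passes the midpoint at half time, where the speed peaks at 2.1875 times the average speed (distance divided by duration).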

Main findings: What did they discover, and why does it matter?

- They built and released large panoramic datasets:

- Omni360-Scene: over 60,000 images with depth and detailed segmentation.

- Omni360-Human: around 100,000 samples with labeled human body keypoints and behaviors.

- Omni360-WayPoint: over 100,000 drone flight waypoints in multiple outdoor scenes.

- Better performance in real tasks:

- Pedestrian distance estimation: Training with their human dataset improved accuracy across several public test sets.

- Panoramic depth estimation: Models trained on AirSim360 data generalized better to new environments than those trained on other datasets.

- Panoramic segmentation: Adding their data boosted performance on identifying both categories (like “road”) and individual objects (like “this car”).

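- Faster data generation: Their GPU-side stitching and other pipeline optimizations increased frame rates (for example, reimplementing the pipeline with RHI raised it from 14 to 18 FPS), so they can collect more data in the same time.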

- Panoramic navigation helps decision-making:

- In vision-language navigation (following written instructions), drones with 360° vision could often move directly to targets without extra turning—“You Only Move Once” (YOMO)—because they can see the goal right away.

Implications: Why is this important?

AirSim360 makes it much easier to build smart drones that understand the world around them. This can lead to:

- Safer and more efficient flying, since drones can see obstacles from all directions.

- Faster, cheaper training of AI systems, because the simulator creates rich, labeled data without needing to hand-annotate everything in the real world.

- Better models for tasks like depth estimation, scene parsing, human pose detection, and navigation.

- Wider access for researchers and developers, since the platform, tools, and datasets are being released publicly and support Unreal Engine versions 4.27 through 5.6.

In short, AirSim360 gives drones “eyes all around” inside a realistic virtual world, along with the labels and tools needed to learn. That helps build smarter aerial robots that can safely and intelligently navigate complex environments.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concise list of what remains missing, uncertain, or unexplored in the paper. Each item is phrased to be actionable for future research.

- Quantitative validation of UAV dynamics: no systematic comparison of the custom flight controller against real quadrotor logs (attitude tracking, thrust allocation, trajectory tracking), including under disturbances (wind, ground effect, propwash).

- Aerodynamic realism: wind fields, turbulence, gusts, propwash, and ground effect are not modeled; the impact of these on perception and control remains untested.

- Sensor realism and noise models: cameras are ideal pinhole; rolling shutter, motion blur, HDR/exposure, noise, vignetting, chromatic aberration, and realistic fisheye optics used by 360° cameras are absent; their effect on downstream performance is unknown.

- ERP-specific artifacts: seam handling at cube-face boundaries and polar distortions are not quantified; no seam-aware training or latitude-aware evaluation is provided.

- Photometric consistency across stitched faces: the claim of “maintaining lighting consistency” lacks a measurable evaluation (e.g., boundary intensity/gradient discontinuity metrics) and failure-case analysis.

- Depth definition mismatch: omnidirectional “slant range” vs perspective “z-depth” is not reconciled across datasets; conversion utilities, evaluation protocols, and cross-domain comparability remain unspecified.

- Instance ID capacity and persistence: the proposed entity segmentation method claims coverage beyond stencil limits but does not disclose the maximum number of addressable instances per frame, ID stability across time, or performance in highly crowded scenes.

- Semantic ontology: mapping of UE category names to standardized taxonomies (e.g., ADE20K or open-vocabulary labels) is under-specified; ambiguity resolution, hierarchy alignment, and cross-scene consistency need documentation and tooling.

- Pedestrian behavior realism: behaviors are limited (walk, chat, phone) with no social dynamics, physics-based locomotion, or interaction with vehicles/obstacles; realism and diversity are unvalidated.

- Pedestrian keypoint accuracy: no comparison to motion-capture ground truth; cross-character skeletal consistency, occlusion handling, and error analysis are missing.

- Dataset diversity gaps: scenes are predominantly outdoor urban; missing indoor, rural, forest, industrial, maritime, suburban, and off-grid environments; limited variation in altitude, weather, lighting, seasonality, and traffic participants (vehicles, cyclists, animals).

- Temporal data scarcity: despite video-level claims, released assets appear frame-based; sequence datasets with per-instance tracking, motion ground truth, and long-term identity persistence are not clearly provided.

- Multimodal sensor suite: IMU, GPS, magnetometer, barometer/altimeter, LiDAR, radar, and event cameras are not modeled; synchronization, timestamps, and noise/latency profiles are unreported.

- Multi-agent scenarios: cooperative UAV teams, inter-agent communication delays, bandwidth limits, and collision avoidance are not supported or evaluated.

- Performance scaling: benchmarking is limited to a single mid-range GPU; scaling to 4K–8K ERP, multi-GPU, multi-scene concurrent rendering, and throughput vs latency trade-offs are unexplored.

- Cross-platform reliability: RHI-based GPU texture copying and stitching are not tested across OSes (Windows/Linux), GPUs, and UE versions; robustness, memory footprint, and failure modes are undocumented.

- Trajectory generation limits: Minimum Snap planning does not account for dynamic obstacles, no-fly zones (NFZs), wind, battery/energy constraints, or sensor occlusions; evaluation with onboard controllers and replanning is missing.

- Navigation task rigor: panoramic VLN results show low success rates (SR) and limited analysis; no direct controlled comparison to forward-camera baselines, instruction complexity stratification, or ablations isolating panoramic benefits.

- Sim-to-real transfer: depth experiments are largely synthetic; broader evaluation on real 360° datasets and field trials is missing; segmentation transfer to real panoramas is limited and lacks detailed domain gap analysis.

- Annotation quality assurance: no human-in-the-loop validation, error rates, or confidence measures for semantic/entity/depth labels; camera extrinsic/intrinsic accuracy and calibration drift are not reported.

- Reproducibility and licensing: asset licenses, scene provenance, deterministic seeds, and version-controlled configurations for exact recreation are not fully specified.

- Safety and regulatory modeling: geofencing, altitude limits, risk assessment, and regulatory constraints (e.g., beyond visual line of sight (BVLOS) operations, urban air mobility restrictions) are not represented.

- Panoramic evaluation metrics: latitude-aware mIoU/mAP weighting, seam/pole-aware metrics, and ERP vs equal-area projection fairness are not standardized or reported.

- Projection choices: only ERP is used; comparative analysis of cubemap, equal-area, stereographic, and hybrid projections on task performance is absent.

- Sensor synchronization: Event Dispatcher-based sync is not quantified (timestamp accuracy, jitter, drift); cross-sensor temporal alignment under motion remains unverified.

- Identity tracking across time: methods for maintaining entity IDs through occlusions, merges/splits, and long sequences are unspecified; benchmarks for video panoptic consistency are missing.

- Benchmark breadth: task coverage is narrow; additional panoramic tasks (3D layout estimation, SLAM/mapping, obstacle avoidance, path planning under uncertainty, open-vocabulary detection) and standardized splits/baselines are needed.

- Data scale and redundancy: deduplication via SSCD is mentioned but thresholds, false positives/negatives, and the effect on distribution diversity are not analyzed.

- Backward compatibility details: although UE 4.27–5.6 are supported, feature parity, degraded capabilities, and migration guides are not documented.
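Several items above mention latitude-aware metrics. A common way to make ERP metrics reflect area on the sphere rather than pixel count is to weight each pixel by the cosine of its latitude, which is proportional to the solid angle the pixel covers. The sketch below applies that weighting to per-class IoU and mIoU; it is an illustration of the idea, not a protocol reported in the paper, and the function names are hypothetical.

```python
import math

def erp_row_weights(height):
    """Relative solid angle of each ERP row: proportional to cos(latitude) at the row centre."""
    return [math.cos(math.pi / 2 - (v + 0.5) * math.pi / height) for v in range(height)]

def latitude_weighted_iou(pred, gt, cls):
    """IoU for one class where each pixel counts with its row's solid-angle weight."""
    weights = erp_row_weights(len(gt))
    inter = union = 0.0
    for w, pred_row, gt_row in zip(weights, pred, gt):
        for p, g in zip(pred_row, gt_row):
            in_p, in_g = p == cls, g == cls
            if in_p and in_g:
                inter += w
            if in_p or in_g:
                union += w
    return inter / union if union > 0 else float("nan")

def latitude_weighted_miou(pred, gt, classes):
    """Mean of the weighted IoUs, skipping classes absent from both maps."""
    ious = [latitude_weighted_iou(pred, gt, c) for c in classes]
    ious = [x for x in ious if not math.isnan(x)]
    return sum(ious) / len(ious) if ious else float("nan")
```

With this weighting a mislabeled pixel near a pole, which ERP stretches heavily, costs less than the same error at the equator, whereas plain pixel-count IoU treats them identically.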

Glossary

- Add Socket: An Unreal Engine editor feature to attach named reference points to a skeleton for consistent access to locations on a mesh. Example: "we use the Add Socket method to incorporate them into the Skeleton Tree, thereby enabling users to access and output all designed body keypoint coordinates."

- Behavior Tree: A hierarchical decision-making model often used for autonomous agent logic in games and robotics. Example: "we enable autonomous interactions by combining NPC Behavior Trees with State Machines."

- Blueprint: Unreal Engine’s visual scripting system for creating gameplay logic and tools without C++. Example: "we implement a Blueprint-based approach that invokes pre-existing universal skeletal points in the Skeletal Mesh via blueprint functions."

- Closed-loop simulator: A simulation where control outputs affect the system state, and updated sensor inputs feed back into the controller, enabling interaction during runtime. Example: "AirSim360 is an online closed-loop simulator compatible with multiple versions ranging from UE 4.27 to UE 5.6."

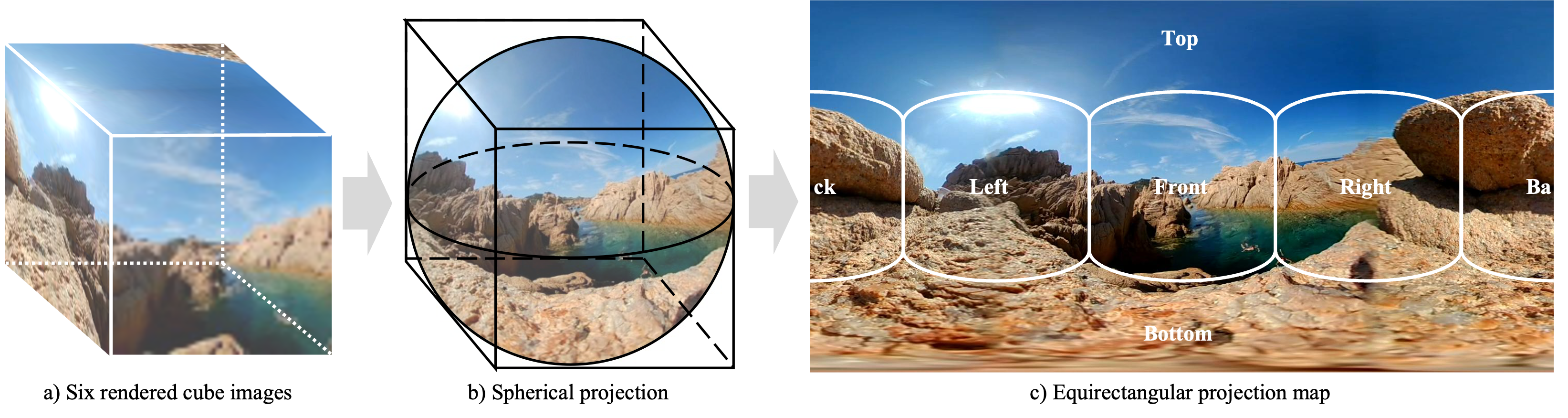

- Equirectangular projection (ERP): A spherical-to-rectangular mapping commonly used to represent 360° panoramas as a 2D image. Example: "The equirectangular projection serves as a standard representation for panoramic imagery."

- Entity segmentation: Pixel-level labeling that assigns a unique identifier to each individual object instance across the entire image, not just selected classes. Example: "Panoptic segmentation has two components: semantic and entity segmentation."

- Event Dispatcher: An Unreal Engine mechanism to broadcast and handle events, useful for synchronizing components like sensors. Example: "we introduced an Event Dispatcher to synchronize all sensors with a unified trigger signal, enabling simultaneous acquisition of multiple data types."

- Minimum Snap: A trajectory optimization method that minimizes higher-order derivatives (snap) to generate smooth, dynamically feasible paths for quadrotors. Example: "we adopt Minimum Snap trajectory planning~\cite{mellinger2011minimum} into our simulation pipeline."

- NPC: Non-Player Character; simulated agents in a virtual environment used to model human or agent behaviors. Example: "we enable autonomous interactions by combining NPC Behavior Trees with State Machines."

- Omnidirectional depth: Depth defined along each pixel’s viewing ray in a 360° image, rather than along a single camera axis. Example: "For example, omnidirectional depth represents slant range along the viewing ray rather than the orthogonal z-axis distance used in perspective projection."

- Panoptic segmentation: A unified task combining semantic segmentation (stuff) and instance/entity segmentation (things) in a single output. Example: "Unlike existing platforms, our simulator supports full runtime interaction, enabling the generation of video-level panoptic segmentation annotations."

- Quadrotor: A type of UAV with four rotors, commonly used in research and applications due to mechanical simplicity and maneuverability. Example: "the resulting polynomial coefficients of the trajectories can be applied directly to real quadrotors."

- Render Hardware Interface (RHI): Unreal Engine’s low-level rendering abstraction layer that allows efficient GPU operations and resource management. Example: "Leveraging the Render Hardware Interface (RHI) described in Section~\ref{sec:render_aligned}, we reimplement the C++ pipeline, increasing the frame rate from 14 to 18 FPS."

- Render Target: A GPU buffer used as a destination for rendering operations, enabling custom passes like depth output. Example: "we proposed a material-based pipeline to extract depth data and render the results into a Render Target."

- Skeletal Mesh: A mesh type in Unreal Engine rigged with a skeleton for animation and joint/keypoint access. Example: "we implement a Blueprint-based approach that invokes pre-existing universal skeletal points in the Skeletal Mesh via blueprint functions."

- Spherical projection: Mapping from 3D directions on a sphere to a 2D parameterization used as an intermediate in panoramic conversions. Example: "we use the spherical projection which serves as a bridge between the input and output representations in Figure~\ref{fig:pro_cube_erp}."

- State Machine: A computational model for behavior control where an agent transitions between discrete states based on events. Example: "a pedestrian state machine can trigger transitions based on a multi-actor message dispatch and receive mechanism, allowing an agent to switch from a walking state to a chatting state when meeting another agent, or to randomly activate an OnPhoneCall state while walking (see Figure~\ref{fig:ipais_logic})."

- Stencil Buffer: A per-pixel buffer in the graphics pipeline used to mask or categorize pixels; often repurposed for labeling. Example: "The Stencil Buffer stores an integer value between 0 and 255 for each pixel in the scene."

- Vision-Language-Action (VLA): A paradigm, and the interfaces implementing it, that connects visual perception, natural language understanding, and action/control for embodied agents. Example: "providing an open external interface such as Vision-Language-Action (VLA)~\cite{jiang2025survey} for flexible integration."

- Vision-Language Navigation (VLN): A task where an agent follows natural language instructions to navigate using visual observations. Example: "Vision-Language Navigation (VLN)~\cite{anderson2018vision} for unmanned aerial vehicles (UAVs) represents a challenging yet crucial direction toward embodied intelligence in aerial agents, requiring joint understanding of spatial scenes and language instructions."

- You Only Move Once (YOMO): A decision-making principle where panoramic awareness enables near one-shot movement toward a goal without extra exploration. Example: "to demonstrate the You Only Move Once (YOMO) capability of panoramic UAVs in decision-making tasks, we place most targets as salient objects and designed short flight trajectories."

- Z-Buffer: A depth buffer storing per-pixel depth values used for visibility; can be read to obtain scene depth. Example: "Since Z-Depth can be directly acquired from the precomputed depth buffer (Z-Buffer) in the Unreal Engine, we proposed a material-based pipeline to extract depth data and render the results into a Render Target."

- Z-Depth: The per-pixel depth value output by the graphics pipeline relative to the camera, often stored in the depth buffer. Example: "Since Z-Depth can be directly acquired from the precomputed depth buffer (Z-Buffer) in the Unreal Engine, we proposed a material-based pipeline to extract depth data and render the results into a Render Target."

Practical Applications

Immediate Applications

Below are applications that can be deployed now using AirSim360’s platform, datasets, and toolchain.

- Panoramic data-as-a-service for perception model training

- Sector: software, robotics, academia

- Use case: Generate large volumes of annotated 360° ERP images with depth, semantic, and entity labels using the Render-Aligned Data and Label Generation pipeline to train/fine-tune panoramic depth estimation, segmentation, and 3D human localization models.

- Tools/products/workflows: “Omni360-X” dataset subsets (Scene, Human, WayPoint), GPU-side RHI stitching pipeline, entity segmentation beyond Stencil limits, ERP-aware depth pipeline; automated training pipelines for panoramic CV.

- Assumptions/dependencies: Requires Unreal Engine 4.27–5.6, a capable GPU, and sufficient storage; sim-to-real domain adaptation may be needed; access to the public dataset and simulator repository.

- Closed-loop UAV algorithm prototyping in panoramic settings

- Sector: robotics, software, industry R&D

- Use case: Rapidly prototype obstacle avoidance, target search, and navigation with panoramic sensors; test control policies in a UE-based closed-loop simulation with the custom flight controller and synchronized multi-sensor capture.

- Tools/products/workflows: Python/Blueprint APIs, event dispatcher for sensor sync, minimum-snap trajectory planner, Vision-Language-Action interface for integrating learned policies, UE5 scenes with dynamic illumination.

- Assumptions/dependencies: Requires UE integration; fidelity depends on scene content and physics modeling; real-world transfer requires tuning for specific drone platforms.

- Human-aware UAV safety testing

- Sector: public safety, policy, robotics

- Use case: Evaluate UAV behavior around pedestrians using IPAS (Interactive Pedestrian-Aware System) to simulate dynamic, interacting human agents with 3D keypoints; assess near-ground flight safety rules and geofencing behaviors.

- Tools/products/workflows: IPAS behavior trees/state machines, skeleton-based keypoint binding, message-passing multi-agent interactions; scenario libraries for safety QA.

- Assumptions/dependencies: Pedestrian motions and interaction rules are simulated; realism depends on behavior models; regulator acceptance requires validation against real-world data.

- Panoramic VLN (Vision-Language Navigation) benchmarking and rapid iteration

- Sector: academia, robotics, software

- Use case: Evaluate and improve language-conditioned navigation for drones using omnidirectional observations; demonstrate “You Only Move Once” (YOMO) behavior in short-range target acquisition without yaw exploration.

- Tools/products/workflows: Panoramic VLN tasks and metrics (SR, SPL, NE), VLA integration, scripted instruction–video–action alignment.

- Assumptions/dependencies: Current LLM-VLM policies need task-specific adaptation; small trajectories favor YOMO; real-world deployment requires reliable panoramic cameras and robust language grounding.
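The VLN metrics named above have standard definitions: navigation error (NE) is the distance from the stopping point to the goal, success rate (SR) is the fraction of episodes ending within a success radius, and SPL (Anderson et al., 2018) weights each success by shortest-path length over the larger of actual and shortest path length. The sketch below computes all three; the success radius is task-specific and is a parameter here because the summary does not give AirSim360's threshold.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

@dataclass
class Episode:
    path: Sequence[Point]   # positions visited by the UAV, from start to stop
    goal: Point
    shortest_length: float  # shortest start-to-goal path length (l_i in the SPL formula)

def path_length(path):
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def evaluate(episodes, success_radius):
    """Return mean NE, SR, and SPL over a list of episodes."""
    n = len(episodes)
    ne = sr = spl = 0.0
    for ep in episodes:
        err = math.dist(ep.path[-1], ep.goal)
        success = 1.0 if err <= success_radius else 0.0
        ne += err
        sr += success
        spl += success * ep.shortest_length / max(path_length(ep.path), ep.shortest_length)
    return {"NE": ne / n, "SR": sr / n, "SPL": spl / n}
```

A YOMO-style episode that flies straight to a visible target scores SPL close to 1, while a successful episode that detours to twice the shortest length scores 0.5.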

- Curriculum design and teaching labs for UAV panoramic perception

- Sector: education, academia

- Use case: Use AirSim360 to teach ERP geometry, panoramic depth semantics, entity segmentation, UAV dynamics, and minimum-snap trajectories; run reproducible experiments and assignments with Omni360 datasets.

- Tools/products/workflows: Course modules on UE-based simulation, data collection toolkit, standardized benchmarks for segmentation/depth/MPDE/VLN.

- Assumptions/dependencies: Access to UE environments and GPUs; institutional support for software installation; student familiarity with Python/C++/UE Blueprints.

- Pre-visualization for 360° content capture and flight planning

- Sector: media/entertainment, construction, energy

- Use case: Simulate 360° capture missions (e.g., site surveys, cinematic shots) to assess coverage, lighting consistency, and safe trajectories before field operations.

- Tools/products/workflows: Six-camera panoramic stitching with lighting consistency; minimum-snap trajectory synthesis; scene-specific waypoint generation; preview and export workflows.

- Assumptions/dependencies: Scene realism must match target location; weather and environmental factors are not fully modeled; integration with real drone autopilots needed for execution.

- Panoramic mapping and pose-supervised reconstruction prototyping

- Sector: mapping/GIS, construction, robotics

- Use case: Use Omni360-WayPoint (poses and flight dynamics) and panoramic imagery to prototype SLAM/localization pipelines that exploit omnidirectional observations for improved robustness.

- Tools/products/workflows: Pose-aligned panoramic sequences; ERP-aware mapping; physics-consistent trajectories for state estimation and system identification.

- Assumptions/dependencies: Sim-to-real transfer requires sensor calibration and motion-model alignment; ERP distortions must be handled in mapping algorithms.

- Benchmark augmentation for panoramic segmentation and depth

- Sector: academia, software

- Use case: Augment existing benchmarks with Omni360-Scene to improve generalization; demonstrated gains in mIoU/mAP and depth metrics show immediate benefits in model robustness.

- Tools/products/workflows: Fine-tune baseline models (e.g., Mask2Former, UniK3D) with Omni360 data; evaluate cross-domain performance.

- Assumptions/dependencies: Benchmark governance; domain gaps persist; careful train/validation splits to avoid leakage.

- Hobbyist and pilot training in panoramic drone operation

- Sector: consumer/daily life

- Use case: Train hobbyists on panoramic drones to improve situational awareness and target search; practice urban low-altitude flight rules in simulated environments.

- Tools/products/workflows: Prebuilt UE scenarios; safe flight exercises; language-guided mission practice.

- Assumptions/dependencies: Consumer access to the simulator; actual drone hardware with panoramic cameras is less common; regulatory compliance required for urban flights.

Long-Term Applications

Below are applications that will require further research, scaling, regulatory alignment, hardware integration, or productization.

- Autonomous urban delivery with omnidirectional perception and language interfaces

- Sector: logistics, retail, robotics

- Use case: Deploy drones that use panoramic sensors for efficient “one-shot moves” toward targets (YOMO); follow natural language tasking for pickup/drop-off and dynamic rerouting.

- Tools/products/workflows: Panoramic VLN policies trained in sim, robust ERP-aware obstacle avoidance, language-action planners; fleet management dashboards.

- Assumptions/dependencies: Reliable 360° cameras on drones, onboard compute/battery trade-offs, BVLOS approvals, urban airspace integration, robust sim-to-real transfer.

- Human-aware flight safety standards and certification pipelines

- Sector: policy/regulation, public safety

- Use case: Establish standard simulation test suites for pedestrian interactions, crowd scenarios, and near-ground operations; certify panoramic UAV systems against quantitative safety criteria.

- Tools/products/workflows: IPAS-based scenario catalogs; regulator-facing validation reports; standardized metrics for proximity, reaction time, and risk scoring.

- Assumptions/dependencies: Multi-stakeholder consensus on test protocols; legal frameworks for simulation-based certification; validation using real-world incident data.

- Panoramic foundation models for embodied aerial intelligence

- Sector: software, robotics, academia

- Use case: Train large multimodal models on ERP data for cross-task generalization (segmentation, depth, human pose, navigation); leverage Omni360 for pretraining and domain diversification.

- Tools/products/workflows: Panoramic model zoo; unified ERP encoders; curriculum learning across Omni360 subsets; transfer to real drones and mobile robots.

- Assumptions/dependencies: Computing scale, data governance, representation learning for ERP distortions; robust adaptation strategies to real sensors and environments.

- Digital twins with omnidirectional aerial sampling

- Sector: smart cities, infrastructure, construction

- Use case: Create high-fidelity urban digital twins by planning panoramic survey flights; update 3D models for maintenance, traffic analysis, and crowd flow monitoring.

- Tools/products/workflows: Waypoint planners aligned with physics; panoramic photogrammetry pipelines; change detection dashboards.

- Assumptions/dependencies: Persistent data collection, privacy controls, scalable storage and compute, weather and illumination modeling, integration with city GIS.

- Language-guided inspection for energy and utilities

- Sector: energy, utilities

- Use case: Panoramic VLN agents conduct guided inspections of power lines, substations, wind turbines, and solar farms; respond to operators’ natural language instructions (e.g., “circle the insulator and record corrosion”).

- Tools/products/workflows: Task libraries; ERP-aware defect detection; long-duration autonomous flight planning; incident report generation.

- Assumptions/dependencies: Ruggedized panoramic sensors, flight endurance, safety rules near critical infrastructure; domain-specific defect datasets.

- Agriculture: panoramic crop scouting and yield estimation

- Sector: agriculture

- Use case: Use 360° perception to cover fields with fewer maneuvers; language prompts for anomalies (“scan rows 5–10 for pest damage”); improve mapping under canopy occlusions.

- Tools/products/workflows: Panoramic multispectral integration; ERP-aware segmentation of crops/weeds; route planners optimizing coverage.

- Assumptions/dependencies: Sensor payload weight, battery life, environmental variability; robust domain adaptation for crop types and seasons.

- Crowd-aware aerial monitoring for events and disaster response

- Sector: public safety, healthcare (emergency support)

- Use case: Real-time panoramic situational awareness during large events or disasters; human keypoint and pose estimation supports triage and safety oversight.

- Tools/products/workflows: Onboard panoramic inference; edge/cloud fusion; alerting and geofenced policies; multi-UAV coordination.

- Assumptions/dependencies: Real-time compute, comms reliability, strict privacy/legal compliance; high-fidelity human models under occlusion and clutter.

- Standardization of omnidirectional depth and segmentation semantics

- Sector: policy, academia, software

- Use case: Formalize ERP-aware depth definitions (slant range) and entity-level semantics for panoramic data; create interoperable datasets and evaluation protocols.

- Tools/products/workflows: Standards documents, public benchmarks, API conventions for ERP cameras.

- Assumptions/dependencies: Community consensus; backward compatibility with perspective standards; vendor alignment.

- Consumer drones with integrated panoramic perception

- Sector: consumer/daily life, hardware

- Use case: Mass-market drones with compact 360° cameras for improved safety, auto-orbiting, and target following; simplified language-guided filming.

- Tools/products/workflows: Embedded ERP encoders, lightweight model distillation, UX for language prompts, auto-editing pipelines.

- Assumptions/dependencies: Hardware miniaturization, affordable sensors, energy-efficient compute, robust UX and safety features.

- Insurance and risk analytics for aerial operations

- Sector: finance/insurance

- Use case: Use standardized simulation scenarios to price risk for panoramic UAV operations in urban environments and events; assess mitigation benefits of omnidirectional sensing.

- Tools/products/workflows: Risk simulation portfolios, incident probability modeling, compliance scoring.

- Assumptions/dependencies: Access to incident data; regulator-supported risk metrics; insurer acceptance of simulation evidence.
