Z-Image: An Efficient Image Generation Foundation Model with Single-Stream Diffusion Transformer (2511.22699v1)

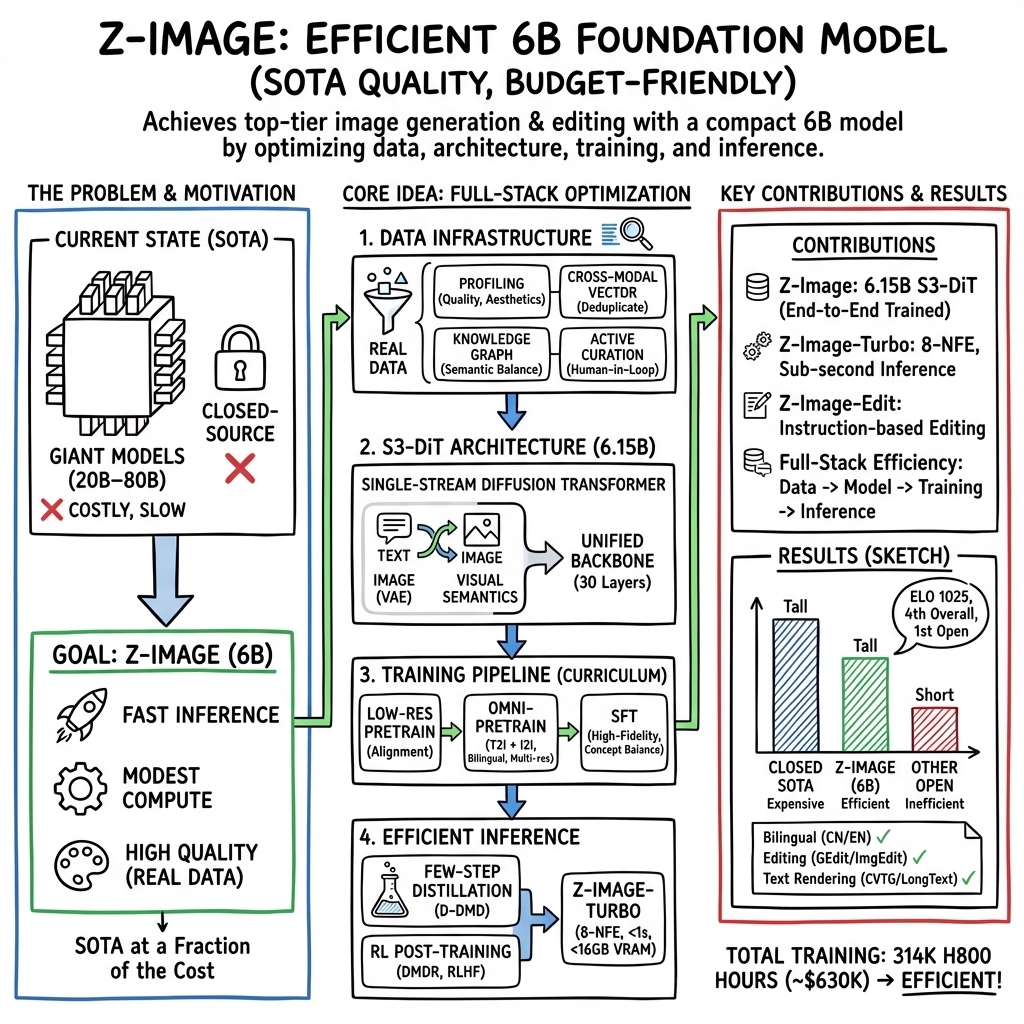

Abstract: The landscape of high-performance image generation models is currently dominated by proprietary systems, such as Nano Banana Pro and Seedream 4.0. Leading open-source alternatives, including Qwen-Image, Hunyuan-Image-3.0 and FLUX.2, are characterized by massive parameter counts (20B to 80B), making them impractical for inference and fine-tuning on consumer-grade hardware. To address this gap, we propose Z-Image, an efficient 6B-parameter foundation generative model built upon a Scalable Single-Stream Diffusion Transformer (S3-DiT) architecture that challenges the "scale-at-all-costs" paradigm. By systematically optimizing the entire model lifecycle -- from a curated data infrastructure to a streamlined training curriculum -- we complete the full training workflow in just 314K H800 GPU hours (approx. $630K). Our few-step distillation scheme with reward post-training further yields Z-Image-Turbo, offering both sub-second inference latency on an enterprise-grade H800 GPU and compatibility with consumer-grade hardware (<16GB VRAM). Additionally, our omni-pre-training paradigm enables efficient training of Z-Image-Edit, an editing model with impressive instruction-following capabilities. Both qualitative and quantitative experiments demonstrate that our model achieves performance comparable to or surpassing that of leading competitors across various dimensions. Most notably, Z-Image exhibits exceptional capabilities in photorealistic image generation and bilingual text rendering, delivering results that rival top-tier commercial models, thereby demonstrating that state-of-the-art results are achievable with significantly reduced computational overhead. We publicly release our code, weights, and online demo to foster the development of accessible, budget-friendly, yet state-of-the-art generative models.

Explain it Like I'm 14

Overview

This paper introduces Z-Image, a new computer model that can create and edit high-quality pictures from text descriptions. Its big idea is to prove you don’t need a giant, super-expensive model to get top results. Z-Image has about 6 billion “parameters” (you can think of these as settings or “brain cells”), which is much smaller than many popular models, but it still produces realistic images, writes clear text inside images in both English and Chinese, and runs fast enough to work on regular graphics cards at home.

What questions did the researchers ask?

They focused on a few simple questions:

- Can a smaller, carefully designed model make images as good as huge, costly models?

- Can we build and train it using mostly real, high-quality data instead of copying (distilling) from secret commercial models?

- Can we speed it up so it makes great images in just a few steps and runs on normal hardware?

- Can it also follow detailed instructions to edit images accurately?

How did they do it?

Efficient data, like a well-organized library

Instead of just grabbing millions of random pictures, they built a smart data system to pick and prepare the “right” images at the “right” time. Think of it like a library that:

- Profiles each image and caption for quality, clarity, and meaning (filters out blurry, over-compressed, or off-topic images).

- Removes repeats and near-duplicates by checking meaning, not just pixels.

- Uses a “knowledge graph” (a map of concepts) to ensure broad coverage (people, places, objects, cultures) and to fill in gaps.

- Actively improves itself: it finds topics the model struggles with and adds better examples, with a loop where humans and AI both check and refine captions and scores.
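
The "checking meaning, not just pixels" idea can be sketched in a few lines. This is a toy illustration, not the paper's pipeline: it assumes each image already has an embedding vector (the hand-written `embeddings` list is made up), it uses brute-force cosine similarity where the paper describes a GPU-accelerated k-NN index, and it swaps in plain connected components (union-find) for the community detection step. The names `knn_edges`, `duplicate_groups`, and the `min_sim` threshold are hypothetical.

```python
import math
from collections import defaultdict

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def knn_edges(embeddings, k, min_sim):
    """Link each item to its k most similar neighbors that pass min_sim."""
    edges = []
    for i, ei in enumerate(embeddings):
        sims = [(cosine(ei, ej), j) for j, ej in enumerate(embeddings) if j != i]
        sims.sort(reverse=True)
        edges.extend((i, j) for s, j in sims[:k] if s >= min_sim)
    return edges

def duplicate_groups(n, edges):
    """Group linked items with union-find (a stand-in for community detection)."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, j in edges:
        parent[find(i)] = find(j)
    groups = defaultdict(list)
    for i in range(n):
        groups[find(i)].append(i)
    return sorted(groups.values())

# Made-up embeddings: items 0/1 and 2/3 are near-duplicates, item 4 is unique.
embeddings = [
    [1.0, 0.0, 0.0],
    [0.99, 0.05, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.98, 0.1],
    [0.0, 0.0, 1.0],
]
edges = knn_edges(embeddings, k=2, min_sim=0.95)
groups = duplicate_groups(len(embeddings), edges)
keep = [g[0] for g in groups]  # keep one representative per group
```

Keeping one representative per group is only one possible policy; a real system would more likely keep the highest-quality member of each group.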

Model design: one conveyor belt for everything

Most image models process text and image parts separately. Z-Image uses a “single-stream” approach: it treats text pieces and image pieces as one long sequence, like mixing Lego bricks on one conveyor belt so every piece can interact. This design:

- Helps text and image information mix deeply at every layer.

- Reuses parameters efficiently, making the model strong even at a smaller size.

- Works for both making new images and editing existing ones in the same framework.

To understand text, they use a strong bilingual text encoder. For images, they use a tool called a VAE, which compresses images into tokens (tiny building blocks) and reconstructs them later. For editing, they add a visual encoder to understand the “reference” image you want to change.
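
To make the "one conveyor belt" picture concrete, here is a minimal sketch of how text pieces and image pieces might be laid out in a single sequence with three-part position labels `(t, h, w)`. It follows the paper's description that text tokens step along a temporal axis while image tokens spread over the spatial axes. The exact layout (image patches sharing one temporal index placed right after the text) is an assumption, and `build_single_stream` is a hypothetical helper, not a function from the released code.

```python
def build_single_stream(text_tokens, image_grid_hw):
    """Return one mixed token sequence with (t, h, w) position ids."""
    height, width = image_grid_hw
    sequence = []
    # Text tokens advance along the temporal axis only.
    for t, tok in enumerate(text_tokens):
        sequence.append({"kind": "text", "token": tok, "pos": (t, 0, 0)})
    # Assumption: image patches share the next temporal index
    # and are told apart by their row and column on the grid.
    t_img = len(text_tokens)
    for h in range(height):
        for w in range(width):
            sequence.append(
                {"kind": "image", "token": f"patch_{h}_{w}", "pos": (t_img, h, w)}
            )
    return sequence

seq = build_single_stream(["a", "red", "fox"], (2, 2))
for item in seq:
    print(item["kind"], item["token"], item["pos"])
```

Because every token lives in the same list, one stack of transformer layers can attend across text and image positions without separate branches.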

Training steps: from basics to finesse

They trained the model in stages, like learning a sport:

- Low-resolution pre-training at 256×256: learn the basics of matching words to pictures and drawing clear shapes and scenes.

- Omni-pre-training: practice many tasks together (different sizes, text-to-image, image-to-image), so skills transfer and the model gets versatile without separate, expensive training runs.

- Supervised fine-tuning with a prompt enhancer: a helper improves the text instructions so the model better understands complex requests.

They also trained a “captioner” that writes multi-level descriptions for images, including any text found inside (OCR). This helps the model learn to render words correctly in generated images.

Making it fast: fewer clean-up steps

Image generation here is like starting with a noisy image and “cleaning” it step by step. Many models need dozens of steps. Z-Image-Turbo (their fast version) does it in only 8 steps, thanks to:

- A distillation trick: teaching the model to get high quality in fewer steps.

- Reward post-training (a form of reinforcement learning): giving the model feedback scores so it learns which images are better and adjusts accordingly.

This makes Z-Image-Turbo very quick, even under a second on a strong GPU, and it runs on consumer GPUs with under 16 GB of memory.
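
The "clean up in 8 steps" loop can be sketched as follows. This is a toy, not Z-Image-Turbo: the real model is a neural network, whereas `toy_velocity` here is a hand-written stand-in that always points straight at a fixed `TARGET`. It only shows what a fixed budget of 8 function evaluations looks like with a simple Euler update, and it says nothing about how the distillation itself is trained.

```python
def sample(velocity, x0, num_steps=8):
    """Integrate dx/dt = velocity(x, t) from t=0 to t=1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    nfe = 0  # number of function evaluations (model calls)
    for step in range(num_steps):
        t = step * dt
        v = velocity(x, t)
        nfe += 1
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x, nfe

TARGET = [0.25, -0.5, 1.0]  # made-up "clean image" with three values

def toy_velocity(x, t):
    # Stand-in for the network: a straight path that reaches TARGET at t = 1.
    return [(ti - xi) / (1.0 - t) for ti, xi in zip(TARGET, x)]

noise = [1.3, 0.7, -2.1]  # made-up starting noise
result, nfe = sample(toy_velocity, noise, num_steps=8)
print(result, nfe)
```

With a perfectly straight path, even a handful of Euler steps lands on the target; the hard part in practice is training a model whose paths are straight enough for so few steps to work.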

Image editing data: smart ways to get pairs

Editing needs pairs: the original image and the edited result. They built these pairs by:

- Using expert tools to create many kinds of edits on one image, then mixing and pairing them in different ways to create lots of training examples.

- Pulling related frames from videos (same person, place, movement), which naturally gives diverse editing tasks.

- Rendering text with full control (font, color, position) to teach precise text editing, since real photos with clear, labeled text are rare.

What did they find?

- Z-Image generates very realistic photos and handles English and Chinese text inside images well.

- Z-Image-Edit follows editing instructions precisely, handling complex changes.

- Z-Image-Turbo is fast and efficient: high quality in about 8 steps, sub-second on enterprise GPUs, and fits consumer hardware.

- Training the entire system cost about 314,000 H800 GPU hours (around $628,000 at $2 per GPU hour). That’s much cheaper than many state-of-the-art models.

- Despite being smaller, Z-Image matches or beats leading open-source and commercial models in several tests and examples.
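
The training-cost figure in the list above is simple arithmetic, and a quick check shows how the summary's roughly $628,000 and the abstract's "approx. $630K" line up. The only inputs are the two numbers quoted in the text; the variable names are just for illustration.

```python
gpu_hours = 314_000        # H800 GPU hours reported for the full training run
usd_per_gpu_hour = 2.0     # hourly rate quoted in the summary

total_usd = gpu_hours * usd_per_gpu_hour
rounded_usd = round(total_usd, -4)  # round to the nearest $10,000

print(f"exact:   ${total_usd:,.0f}")
print(f"rounded: ${rounded_usd:,.0f}")
```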

Why does this matter?

- Accessibility: High-quality image generation no longer requires massive budgets or specialized hardware. This helps researchers, small companies, and hobbyists.

- Real-data focus: Training on carefully curated real-world data avoids copying from closed models and reduces the risk of “feedback loops” where errors repeat and creativity stalls.

- Practical speed: Fast generation means better user experiences, interactive tools, and lower costs.

- Strong text rendering: Clear bilingual text inside images is useful for signs, designs, ads, and education.

- Editing power: Accurate instruction-following helps creators modify images safely and precisely.

The team is releasing code, model weights, and demos. The bigger picture is that top-tier results are possible with smarter design and training—not just “making everything bigger.”

Knowledge Gaps

Unresolved Knowledge Gaps, Limitations, and Open Questions

Below is a focused list of what remains missing, uncertain, or unexplored, framed to guide future research and replication efforts.

- Data transparency and reproducibility: The training corpora are described as “large-scale internal copyrighted collections,” but dataset composition, size, source breakdown, licensing, and release plans are not specified, hindering reproducibility and auditability.

- Data diversity and coverage: No quantitative analysis of concept, scene, demographic, cultural, and language coverage across the curated dataset; unclear how balanced sampling affects minority and long-tail concepts over time.

- Multilingual scope beyond Chinese/English: Claims focus on bilingual text rendering (Chinese/English); coverage, accuracy, and robustness for non-Latin scripts (Arabic, Devanagari), vertical/curved text, and mixed-language prompts are not evaluated.

- Text rendering robustness: No metrics or stress tests for small fonts, occluded text, perspective distortions, stylized/handwritten fonts, multi-line layouts, or dense textual scenes.

- World Knowledge Topological Graph release/evaluation: The graph’s contents, scale, taxonomy quality, update cadence, and objective evaluation (precision/recall of entity coverage, alignment to user prompts) are not provided.

- Impact of graph-driven sampling: Lacks ablations on how semantic-level balanced sampling from the knowledge graph affects model learning stability, generalization, and bias, and how weights are tuned over training stages.

- CN-CLIP dependence: Deduplication and retrieval rely on CN-CLIP embeddings; the effect on non-Chinese/English data, cross-language generalization, and potential embedding-induced biases is unexamined.

- AIGC filtering trade-offs: The classifier to exclude synthetic images may hamper learning of certain aesthetics or styles; effects on generalization and style diversity are not quantified.

- Captioner evaluation: Z-Captioner’s performance, error rates (OCR accuracy, named-entity correctness), robustness across domains, and comparisons with state-of-the-art captioners are not reported.

- OCR integration method: The Chain-of-Thought style OCR-to-caption pipeline is asserted to improve text rendering but lacks controlled experiments quantifying the gain and its sensitivity to OCR errors.

- Simulated user prompts: No empirical assessment of how “incomplete” simulated prompts match real user prompt distributions and whether this improves instruction-following or harms robustness.

- Editing data construction biases: Mixed-editing and synthetic pairs via expert models may inject model-specific artifacts; diversity and realism of edit operations, as well as the distribution of edit types, remain unquantified.

- Video-frame pairing for editing: No metrics on semantic similarity thresholds, pairing quality, and how this strategy influences temporal consistency or introduces spurious correlations.

- Text-editing rendering system: The controllable text rendering pipeline’s domain gap to natural images and its impact on generalization to real-world text edits are not studied.

- Quantitative benchmarking: The paper emphasizes qualitative showcases but lacks standardized quantitative benchmarks (e.g., FID/KID, CLIPScore/PickScore, ImageReward, TIFA/TextOCR accuracy, GenEval, compositionality tests) across resolutions and tasks.

- Comparative baselines: No head-to-head quantitative comparisons with dual-stream DiT architectures or other 6–10B open models on shared benchmarks to substantiate efficiency and performance claims.

- High-resolution generation: Training begins at 256² with “arbitrary-resolution” omni-pre-training; there are no detailed results on performance, artifacts, and stability at higher resolutions (e.g., 1024², 2048²) and extreme aspect ratios.

- Single-stream architecture scalability: It is unclear how S3-DiT scales beyond 6B parameters in terms of training stability, inference latency, memory footprint, and cross-modal interference relative to dual-stream designs.

- 3D Unified RoPE design choices: Missing ablations on spatial/temporal positional encoding configurations, the effect of reference/target temporal offsets in editing, and failure modes at long sequence lengths.

- Conditioning mechanism details: The low-rank conditional projection (shared down-projection + layer-specific up-projection) lacks ablations quantifying parameter savings vs. conditioning fidelity and the impact on controllability.

- Normalization choices: QK-Norm and Sandwich-Norm are proposed for stability, but their individual and combined contributions and failure cases under different hyperparameters are not presented.

- Flux VAE reliance: The dependence on Flux VAE is stated, but reconstruction quality trade-offs, compatibility with alternative VAEs, and how VAE choices affect text rendering and photo-realism are unexplored.

- Few-step distillation specifics: The teacher model, loss functions, schedules, and the exact role of Decoupled DMD and DMDR in achieving 8 NFEs are not described; robustness across prompts and resolutions remains unknown.

- Turbo model generalization: The speed-quality trade-off for Z-Image-Turbo across diverse domains, OOD prompts, extreme aspect ratios, and long prompts is not benchmarked.

- RL with distribution matching: RLHF details (human annotation budgets, rubric, inter-rater reliability, reward model architecture, reward hacking checks) and their impact on aesthetics vs factual alignment are absent.

- Safety and bias audits: Beyond NSFW filtering, there is no systematic evaluation of demographic biases, stereotyping, unsafe generation, harmful content propensity, or jailbreak robustness; mitigation strategies are unspecified.

- Copyright and privacy risk: With internal copyrighted data, policies to prevent reproduction of identifiable individuals, logos, or protected content are not outlined; watermark detection is profiled but generative watermark handling is not discussed.

- Failure case analysis: No taxonomy of systematic failure modes (e.g., compositional errors, object counting, spatial relations, fine-grained attributes) with targeted remediation strategies.

- Hardware inference claims: Sub-second latency requires FlashAttention-3 and torch.compile; speed/memory benchmarks on consumer GPUs (e.g., RTX 3060, 4060, 3080) with typical batch sizes and resolutions are not provided.

- Energy and cost accounting: GPU-hour costs are cited, but total FLOPs, energy usage, and carbon footprint are not reported; comparisons to the training budgets of competing models lack normalized compute measures.

- Edit instruction-following metrics: Z-Image-Edit performance is demonstrated qualitatively; quantitative evaluation (e.g., HCEdit, PIE-Bench, CLIP-guided edit consistency, human studies with structured criteria) is missing.

- Integration and inference flow of Prompt Enhancer (PE): The PE’s architecture, training data, inference-time latency, and measurable gains vs baseline text encoder are not described; ablations on PE-aware SFT are missing.

- Robustness to adversarial/ambiguous prompts: No evaluation of resistance to prompt injection, contradictory instructions, typos, polysemy, or culturally specific idioms/slang across languages.

- Open-source completeness: While code and weights are released, the absence of dataset, knowledge graph, captioner training data, reward model assets, and active curation tooling limits full reproducibility and external validation.
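
For the conditioning item in the list above (a shared down-projection plus layer-specific up-projections), a rough parameter count shows why such a design can save weights compared with a full projection in every layer. All sizes below are hypothetical and chosen only for illustration; they are not Z-Image's real dimensions, and bias terms are ignored.

```python
def full_projection_params(cond_dim, out_dim, num_layers):
    """Every layer owns a full cond_dim x out_dim projection."""
    return num_layers * cond_dim * out_dim

def low_rank_projection_params(cond_dim, rank, out_dim, num_layers):
    """One shared down-projection, then a small up-projection per layer."""
    shared_down = cond_dim * rank
    per_layer_up = rank * out_dim
    return shared_down + num_layers * per_layer_up

# Hypothetical sizes, not taken from the paper.
cond_dim, out_dim, num_layers, rank = 1024, 4096, 30, 256

full = full_projection_params(cond_dim, out_dim, num_layers)
low_rank = low_rank_projection_params(cond_dim, rank, out_dim, num_layers)
print(f"full: {full:,}  low-rank: {low_rank:,}  ratio: {low_rank / full:.3f}")
```

The count says nothing about conditioning fidelity, which is exactly the ablation the list notes is missing.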

Glossary

- 3D Unified RoPE: A rotary positional encoding scheme extended to model spatial and temporal axes jointly for mixed token sequences. "We employ 3D Unified RoPE~\cite{qin2025lumina,wu2025omnigen2} to model this mixed sequence, wherein image tokens expand across spatial dimensions and text tokens increment along the temporal dimension."

- Active Curation Engine: A dynamic, human-in-the-loop system that iteratively filters, annotates, and augments data to address long-tail and quality issues. "Active Curation Engine: This module operationalizes our infrastructure into a truly dynamic, self-improving system."

- AIGC Content Detection: Classification to detect AI-generated imagery and remove it from training data. "AIGC Content Detection: Following the findings of Imagen 3~\cite{baldridge2024imagen}, we trained a dedicated classifier to detect and filter out AI-generated content."

- BM25: A probabilistic ranking function for text retrieval used to weight tags during sampling. "By considering both the BM25~\cite{robertson2009probabilistic} score of a tag and its hierarchical relationships (i.e., parent-child links) within the graph, we compute a semantic-level sampling weight for each data point."

- bytes-per-pixel (BPP): A compression-based proxy for image complexity used to filter low-information images. "performing a transient JPEG re-encoding and using the resulting bytes-per-pixel (BPP) as a proxy for image complexity."

- Chain-of-Thought (CoT): A prompting strategy that structures intermediate reasoning steps to improve outputs. "shares the same spirit as Chain-of-Thought (CoT) \cite{wei2022chain}, by first explicitly recognizing all optical characters in the image and then generating a caption based on the OCR results."

- CN-CLIP: A Chinese-language adaptation of CLIP used for cross-modal alignment and similarity scoring. "We use CN-CLIP~\cite{yang2022chinese} to compute the alignment score between an image and its associated alt caption."

- community detection algorithm: A graph clustering method (e.g., Louvain) used here to identify duplicate or related data groups. "and subsequently apply the community detection algorithm \cite{traag2019louvain}."

- Cross-modal Vector Engine: A large-scale embedding and retrieval system for semantic deduplication and targeted data search. "Cross-modal Vector Engine: Built on billions of embeddings, this module is the engine for ensuring efficiency and diversity."

- Decoupled DMD: A distillation technique that separates quality-improving and stability roles to enable fast sampling without quality loss. "Decoupled DMD~\cite{liu2025decoupled}, which explicitly disentangles the quality-enhancing and training-stabilizing roles of the distillation process,"

- Diffusion Transformer: A generative model that combines diffusion processes with transformer architectures for image synthesis. "we present Z-Image, a powerful diffusion transformer model that challenges both the ``scale-at-all-costs'' paradigm and the reliance on synthetic data distillation."

- DMDR: A training method that integrates reinforcement learning with a distribution-matching regularizer during distillation. "DMDR~\cite{jiang2025dmdr}, which integrates Reinforcement Learning by employing the distribution matching term as an intrinsic regularizer."

- FlashAttention-3: An optimized attention kernel enabling faster, memory-efficient transformer inference and training. "FlashAttention-3~\cite{shah2024flashattention} and torch.compile~\cite{ansel2024pytorch} is necessary for achieving sub-second inference latency."

- Flux VAE: A variational autoencoder used for high-quality image tokenization and reconstruction. "For image tokenization, we utilize the Flux VAE~\cite{flux2023} selected for its proven reconstruction quality."

- k-nearest neighbor (k-NN) search: A similarity search approach replacing range search to scale deduplication via proximity graphs. "we substitute it with a highly efficient k-nearest neighbor (k-NN) function."

- Modality-agnostic architecture: A design that treats tokens from different modalities uniformly to maximize parameter sharing. "This modality-agnostic architecture maximizes cross-modal parameter reuse to ensure parameter efficiency, while providing flexible compatibility for varying input configurations in both Z-Image and Z-Image-Edit."

- Number of Function Evaluations (NFEs): The number of network forward passes used during sampling in diffusion models; with one evaluation per step, it equals the number of sampling steps. "Z-Image-Turbo, which delivers exceptional aesthetic alignment and high-fidelity visual quality in only 8 Number of Function Evaluations (NFEs)."

- Omni-pre-training: A unified, multi-task training stage that jointly learns across varying resolutions and tasks (T2I and I2I). "Omni-pre-training, a unified multi-task stage that consolidates arbitrary-resolution generation, text-to-image synthesis, and image-to-image manipulation."

- PageRank: A graph centrality metric used to filter low-importance nodes from the knowledge graph. "centrality-based filtering removes nodes with exceptionally low PageRank~\cite{page1999pagerank} scores, which represent isolated or seldom-referenced concepts;"

- PE-aware Supervised Fine-tuning: Fine-tuning that jointly optimizes the model with a prompt enhancer to improve instruction following. "PE-aware Supervised Fine-tuning, a joint optimization paradigm where Z-Image is fine-tuned using PE-enhanced captions."

- perceptual hash (pHash): A compact fingerprint of an image used for efficient near-duplicate detection. "we compute a perceptual hash (pHash) from the image's byte stream."

- Prompt Enhancer (PE): An auxiliary module that enriches prompts to improve model understanding without scaling core parameters. "The compact model size is also made possible in part by our use of a prompt enhancer (PE) to augment the model's complex world knowledge comprehension and prompt understanding capabilities,"

- Proximity graph: A graph built from nearest-neighbor relationships used for scalable deduplication and clustering. "We construct a proximity graph from the k-NN distances and subsequently apply the community detection algorithm \cite{traag2019louvain}."

- QK-Norm: A normalization technique applied to query/key vectors to stabilize attention activations. "we implement QK-Norm to regulate attention activations~\cite{karras2024analyzing, luo2018cosine, gidaris2018dynamic, nguyen2023enhancing}"

- Reinforcement Learning: An optimization paradigm using reward signals, here integrated with distribution matching in distillation. "DMDR~\cite{jiang2025dmdr}, which integrates Reinforcement Learning by employing the distribution matching term as an intrinsic regularizer."

- RMSNorm: A normalization layer that scales activations by their root-mean-square, used throughout the model. "Finally, RMSNorm~\cite{zhang2019root} is uniformly utilized for all the aforementioned normalization operations."

- Sandwich-Norm: Normalization applied before and after layers (attention/FFN) to stabilize training signals. "and Sandwich-Norm to constrain signal amplitudes at the input and output of each attention / FFN blocks~\cite{ding2021cogview,gao2024lumina-next}."

- semantic deduplication: Removing near-duplicate data based on semantic similarity in embedding space. "a Cross-modal Vector Engine for semantic deduplication and targeted retrieval,"

- SigLIP 2: A vision-language encoder used to encode high-level semantics from reference images for editing. "Exclusively for editing tasks, we augment the architecture with SigLIP 2~\cite{tschannen2025siglip} to capture abstract visual semantics from reference images."

- Single-Stream Multi-Modal Diffusion Transformer (S3-DiT): A unified transformer that processes concatenated text and image tokens for efficient cross-modal learning. "we propose a Scalable Single-Stream Multi-Modal Diffusion Transformer (S3-DiT)."

- text-to-image (T2I) generation: The task of generating images from textual descriptions. "The field of text-to-image (T2I) generation has witnessed remarkable advancements in recent years,"

- Vision-Language Model (VLM): A model jointly trained on visual and textual data to understand and describe images. "We have trained a specialized Vision-Language Model (VLM) to generate rich semantic tags."

- visual generatability filtering: Filtering concepts that cannot be coherently visualized to improve training relevance. "second, visual generatability filtering uses a VLM to discard abstract or ambiguous concepts that cannot be coherently visualized."

- World Knowledge Topological Graph: A hierarchical knowledge graph of concepts used to guide balanced data sampling and coverage. "World Knowledge Topological Graph: This structured knowledge graph provides the semantic backbone for the entire infrastructure."
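
Two of the glossary entries, RMSNorm and QK-Norm, are easy to show on plain lists of numbers. The sketch below uses the standard RMSNorm formula and applies it to the query and key vectors before the scaled dot product. It is a generic illustration with made-up vectors and a hypothetical `attention_logit` helper, not the model's actual attention code.

```python
import math

def rms_norm(x, gain=None, eps=1e-6):
    """Divide a vector by its root-mean-square, then apply an optional gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    gain = gain or [1.0] * len(x)
    return [g * v / rms for g, v in zip(gain, x)]

def attention_logit(q, k, use_qk_norm):
    """Scaled dot-product logit, optionally normalizing q and k first."""
    if use_qk_norm:
        q, k = rms_norm(q), rms_norm(k)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))

# Made-up query/key vectors with deliberately large activations.
q = [100.0, -200.0, 300.0, 50.0]
k = [80.0, -150.0, 250.0, 40.0]

raw = attention_logit(q, k, use_qk_norm=False)
normed = attention_logit(q, k, use_qk_norm=True)
print(raw, normed)
```

With unit gains, the normalized logit can never exceed the square root of the vector length in magnitude, which is the sense in which QK-Norm keeps attention activations in check.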

Practical Applications

Overview

This paper introduces Z-Image, a 6B-parameter single-stream diffusion transformer for text-to-image generation and image editing, trained on real-world data with an efficiency-first pipeline. Key contributions include: a scalable S3-DiT architecture; an omni-pre-training curriculum; few-step distillation plus RL for 8-NFE high-quality inference (Z-Image-Turbo); an instruction-following editing model (Z-Image-Edit); a bilingual-capable captioner with OCR (Z-Captioner); and a full data infrastructure for efficient curation (profiling, cross-modal vector engine, knowledge-graph-guided sampling, and human-in-the-loop active curation). The model fits <16GB VRAM and achieves sub-second latency on enterprise GPUs with FlashAttention-3 and torch.compile.

Below are practical applications derived from these findings, methods, and tools. Each bullet specifies sector links, potential products/workflows, and feasibility assumptions.

Immediate Applications

These are deployable now with the released code/weights and standard engineering integration.

- Creative production and marketing content at scale

  - Sectors: Advertising, Media, Entertainment, E-commerce.

  - Use cases: Photorealistic ad creatives, product lifestyle shots, hero images, seasonal campaign variants, social media assets, concept art and storyboards, bilingual (Chinese/English) posters and signage with accurate in-image text.

  - Tools/workflows: Z-Image-Turbo microservices for prompt-to-asset pipelines; prompt enhancer for stylistic/semantic control; A/B variant generators with 8-NFE fast sampling; batch generation APIs embedded in DAM/creative suites.

  - Dependencies/assumptions: Content safety filters and brand/compliance checkers; FlashAttention-3 and torch.compile for sub-second GPU latency; review of license terms for commercial deployment; quality of prompts and reward model alignment.

- Instruction-following image editing for rapid iteration

  - Sectors: E-commerce, Publishing, Design, Media Operations.

  - Use cases: Background replacement, object/attribute edits, text updates on packaging or banners, colorways and minor SKU changes, touch-ups for catalog standardization, multilingual poster localization.

  - Tools/workflows: Z-Image-Edit endpoints in retouch pipelines; batch edit queues; “difference caption” assisted edit checklists; human-in-the-loop approvals.

  - Dependencies/assumptions: Clear editing instructions and content policy guardrails; audit logs for edits; adherence to legal/ethical norms (e.g., no deceptive image manipulation).

- Bilingual text rendering inside images

  - Sectors: Marketing, Education, Public Communication, Product Design.

  - Use cases: Localized graphics (CN/EN), physical/digital signage mocks, UI mockups requiring legible copy, festival/event materials.

  - Tools/workflows: Templates with slot-filling for text-in-image; prompt enhancer to ensure brand-voice consistency.

  - Dependencies/assumptions: Best performance for Chinese/English; additional languages may require fine-tuning; strict typography/spec constraints may still need QA.

- Structured captioning and OCR-augmented metadata generation (Z-Captioner)

  - Sectors: Search/Indexing, Accessibility, DAM/Archives, SEO.

  - Use cases: Alt-text generation, multi-level captions (tags/short/long) for asset search and SEO, OCR-aware captions to index embedded text, “difference captions” for change tracking across design iterations.

  - Tools/workflows: Captioning services integrated into ingestion pipelines; auto-tagging with knowledge graph linking for improved retrieval.

  - Dependencies/assumptions: Caption quality depends on image domain and world-knowledge coverage; human spot-checking for high-stakes contexts.

- Data curation infrastructure for multimodal teams

  - Sectors: AI/ML Platform, MLOps, Research Labs.

  - Use cases: Semantic deduplication at scale (GPU-accelerated k-NN + community detection), long-tail concept coverage using the World Knowledge Topological Graph, cross-modal retrieval for failure diagnosis and remediation, human-in-the-loop active curation with reward/captioner retraining.

  - Tools/workflows: Data Profiling Engine (quality filters, AIGC detection, aesthetics, entropy); Cross-modal Vector Engine (CAGRA-based indexing); balanced sampling using the knowledge graph; active curation dashboards for data fixes.

  - Dependencies/assumptions: GPU resources for indexing (billions of embeddings); rights-cleared data; organization-specific safety and provenance checks.

- Cost- and compute-efficient domain fine-tuning

  - Sectors: Enterprise AI, SMEs, Academia.

  - Use cases: Building domain-specific but small-footprint T2I/editing models that run on a single consumer GPU (<16GB VRAM); on-prem/private deployments where data cannot leave the firewall.

  - Tools/workflows: S3-DiT checkpoints + omni-pre-training recipes; few-step distillation and RL post-training for target aesthetics/brand alignment.

  - Dependencies/assumptions: Curated domain data; reward models aligned with business KPIs; engineering for inference acceleration on available hardware.

- Education and outreach materials

  - Sectors: K–12, Higher Ed, Nonprofits, Government.

  - Use cases: Visual aids, bilingual handouts, infographics with legible text, custom practice materials for language learning.

  - Tools/workflows: Prompt libraries for curriculum topics; teacher-facing UIs with content safety presets.

  - Dependencies/assumptions: Content vetting for age appropriateness; accessibility reviews; device/GPU availability or cloud access.

- On-device or on-prem creative assistants

  - Sectors: Software, Privacy-focused Enterprises, Regulated Industries.

  - Use cases: Private creative generation/editing where data residency matters; offline workflows for sensitive assets.

  - Tools/workflows: Local deployment leveraging <16GB GPUs; integration into desktop authoring tools.

  - Dependencies/assumptions: Local GPU and driver stack; FlashAttention-3 support for best latency; model license compliance.

- Academic baselines and methodological re-use

  - Sectors: Academia, Open-Source ML.

  - Use cases: Studying single-stream diffusion transformers; evaluating QK-Norm/Sandwich-Norm conditioning; reproducing low-cost training pipelines (314K H800 hours); exploring few-step distillation (Decoupled DMD) and RL regularization (DMDR).

  - Tools/workflows: Released code/weights/demos; ablation-friendly configs; data-engine components as reusable modules.

  - Dependencies/assumptions: Compute availability for replication; dataset provenance; ethical review for data use.

- Policy and sustainability references

  - Sectors: Public Sector, Standards Bodies, ESG.

  - Use cases: Evidence for compute- and cost-efficient SOTA alternatives; frameworks to avoid synthetic data feedback loops; governance patterns for data curation and safety scoring; energy/carbon reporting for model development.

  - Tools/workflows: The paper’s cost accounting as a template; adoption of AIGC detection and NSFW scoring in public deployments.

  - Dependencies/assumptions: Transparent reporting of training data sources and rights; organizational adoption of safety pipelines.

- Daily-life creativity tools

  - Sectors: Consumer Apps.

  - Use cases: Personalized posters/invitations, memes with accurate text, photo edits for social sharing.

  - Tools/workflows: Lightweight web/mobile frontends calling Z-Image-Turbo APIs; preset styles and safe-prompt templates.

  - Dependencies/assumptions: Cloud inference for mobile; rate limits and moderation; clear UI for attribution and responsible use.

Long-Term Applications

These require further research, domain data, scaling, or productization.

- Multilingual text-in-image beyond CN/EN

- Sectors: Global Marketing, Localization, Government Communications.

- Use cases: Accurate rendering across scripts (e.g., Arabic, Devanagari, Cyrillic); strict typographic fidelity in complex scripts.

- Tools/workflows: Extend Z-Captioner OCR and training data to more languages; typography-constrained generation and layout control.

- Dependencies/assumptions: High-quality multilingual OCR and caption corpora; font/layout priors; additional fine-tuning.

- Domain-specific synthetic data generation for safety-critical fields

- Sectors: Healthcare, Autonomous Driving, Industrial Inspection, Robotics.

- Use cases: Augment scarce datasets with photorealistic, labeled images (e.g., rare pathologies, edge-case scenes, defect patterns).

- Tools/workflows: Knowledge-graph-guided coverage planning; difference captions for controlled edits; reward models for domain realism.

- Dependencies/assumptions: Strong domain supervision and expert validation; regulatory compliance; preventing bias and ensuring clinical/operational validity.

- Real-time AR/VR content generation with legible overlays

- Sectors: XR, Retail, Museums, Field Service.

- Use cases: On-device generation of environment-aware visuals and signage; dynamic exhibits; guided workflows with in-scene text.

- Tools/workflows: Low-latency generators optimized for mobile GPUs/NPUs; spatially-aware prompt conditioning.

- Dependencies/assumptions: Further latency reduction and memory optimization; robust safety and context grounding.

- Closed-loop creative optimization with integrated reward models

- Sectors: AdTech, E-commerce.

- Use cases: Auto-generate, evaluate, and iterate creatives to maximize CTR/ROAS; personalized creatives per cohort.

- Tools/workflows: RL post-training (DMDR) aligned to business metrics; active curation loop for failure remediation; online learning safeguards.

- Dependencies/assumptions: Reliable offline-to-online metric transfer; bias and fairness controls; experiment governance.

- Automated dataset governance at enterprise scale

- Sectors: MLOps, Compliance, Legal.

- Use cases: Continuous data profilers that flag rights risks, NSFW, duplication, and concept gaps; lineage tracking and audit readiness.

- Tools/workflows: Productionization of the four-module data infrastructure; policy-driven sampling and retention.

- Dependencies/assumptions: Data contracts/provenance tooling; integration with DLP and rights management; human oversight.

- Multimodal co-pilots for design and documentation

- Sectors: Software, Product, Technical Writing.

- Use cases: From rough prompts to spec-compliant UI mockups with labeled components; technical diagrams with editable text; iterative design edits via instructions.

- Tools/workflows: Single-stream architecture extended with layout/semantic tokens; PE-guided reasoning for constraints.

- Dependencies/assumptions: Training on design/UX corpora; constraint satisfaction modules; interaction design research.

- Public-service content generation at population scale

- Sectors: Public Health, Emergency Response, Education Ministries.

- Use cases: Rapid multilingual advisories and posters with clear in-image text; localized visuals for diverse communities.

- Tools/workflows: Pre-approved prompt libraries; distribution via civic platforms; automated accessibility checks.

- Dependencies/assumptions: Strong governance to prevent misinformation; review boards; cultural/linguistic validation pipelines.

- Agentic remediation of model failures via retrieval and curation

- Sectors: AI Platform, Research.

- Use cases: Agents that detect failure modes, retrieve counterexamples via the Cross-modal Vector Engine, and propose data fixes or fine-tuning sets.

- Tools/workflows: Integration of retrieval, diagnostics, and active curation; semi-automated labeling with human verification.

- Dependencies/assumptions: Robust failure detectors and reward models; oversight to avoid dataset drift or mode collapse.

- Energy- and cost-aware training playbooks for public institutions

- Sectors: Academia, Nonprofits, Government Labs.

- Use cases: Building high-quality generative models within modest budgets; transparent reporting and reproducibility.

- Tools/workflows: Replicable curricula (low-res pretrain → omni-pretrain → PE-aware SFT → distill/RL); green scheduling and carbon accounting.

- Dependencies/assumptions: Access to affordable compute; open datasets with clear licensing; community benchmarks.

- Robust watermark and provenance-aware generation ecosystems

- Sectors: Media, Platforms, Policy.

- Use cases: Training captioners and generators that detect watermarks and provenance signals and describe them explicitly; discouraging illicit watermark removal or misuse.

- Tools/workflows: Watermark-aware captioning; provenance-preserving pipelines (e.g., C2PA interop); deterrence through policy and UX.

- Dependencies/assumptions: Standardized provenance signals; platform adoption; legal frameworks.
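The retrieval step in the agentic remediation item above can be illustrated with a generic nearest-neighbor search over embeddings. This is a minimal sketch, not the paper's Cross-modal Vector Engine: the function names, the toy 3-dimensional vectors, and the item IDs are all hypothetical, and a real system would use embeddings from a cross-modal encoder and an approximate-nearest-neighbor index.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_similar(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    top_k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the top_k (item_id, similarity) pairs, most similar first."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy embeddings standing in for a curated image pool (hypothetical data).
index = {
    "img_001": [1.0, 0.0, 0.0],
    "img_002": [0.9, 0.1, 0.0],
    "img_003": [0.0, 1.0, 0.0],
}

# Embedding of a detected failure case (hypothetical).
failure_embedding = [1.0, 0.0, 0.0]

candidates = retrieve_similar(failure_embedding, index, top_k=2)
print(candidates)
```

Items returned this way would still go through human verification before entering a fine-tuning set, as the item's workflow describes.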

Notes on feasibility and risk

- Content safety and misuse: Deploy with NSFW filters, deepfake abuse mitigations, watermark/provenance respect, and strong policy enforcement.

- Hardware/software stack: Sub-second latency (reported on an H800 GPU) assumes FlashAttention-3 and torch.compile on supported GPUs; consumer-grade runs are feasible but slower without these optimizations.

- Licensing and data rights: Review model and code licenses; replicate data pipelines only with rights-cleared sources.

- Generalization limits: Text rendering is strongest in Chinese and English; other scripts may require fine-tuning. Domain-critical applications demand expert validation and regulatory compliance.
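As a rough check on the hardware note above, the memory needed just to hold the weights of a 6B-parameter model can be estimated from the bytes per parameter. The sketch below is back-of-the-envelope arithmetic under stated assumptions: it counts model weights only (no activations, text encoder, VAE, or framework overhead), and the dtype table and function name are illustrative rather than taken from the paper.

```python
# Bytes used per parameter for common storage dtypes.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}

# "6B-parameter" per the abstract; the exact count may differ slightly.
Z_IMAGE_PARAMS = 6_000_000_000


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Memory in GiB needed to store num_params weights in the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("fp32", "bf16", "int8"):
    gib = weight_memory_gib(Z_IMAGE_PARAMS, dtype)
    print(f"{dtype}: {gib:.1f} GiB for weights alone")
```

In bf16 the weights come to roughly 11.2 GiB, which is consistent with the <16GB VRAM claim but leaves limited headroom for everything else, while fp32 weights alone would exceed 16 GiB.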
