- The paper presents an adversarial iterative approach that refines tests and patches for reliable software issue resolution.

- It leverages dual generators in a containerized environment to enhance patch robustness against evolving test cases.

- Empirical results on SWE-bench Verified show state-of-the-art performance of 79.4%, supporting the framework's effectiveness.

InfCode: Adversarial Iterative Refinement of Tests and Patches for Reliable Software Issue Resolution

Introduction

The paper "InfCode: Adversarial Iterative Refinement of Tests and Patches for Reliable Software Issue Resolution" (2511.16004) addresses the persistent challenge of automating software issue resolution with large language models (LLMs). Although LLMs have shown competence in function-level tasks such as code generation and repair, their ability to resolve repository-level issues remains underdeveloped. These issues require understanding and modifying code across multiple files within a repository, which in turn demands robust reasoning and verification capabilities.

The proposed InfCode framework addresses this gap through a multi-agent adversarial approach. It centers on the interaction between a Test Patch Generator and a Code Patch Generator, which iteratively strengthens both the test cases and the code patches. A Selector agent then evaluates the candidate patches and integrates the most reliable one into the software repository. Empirical results show that InfCode outperforms existing solutions, achieving a new state-of-the-art (SOTA) result on the SWE-bench Verified benchmark.

Methodology

Framework Overview

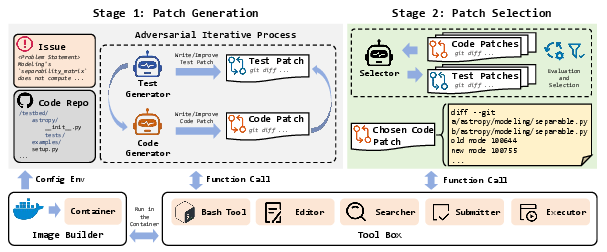

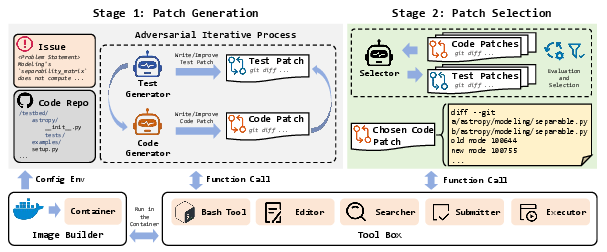

InfCode consists of several core components that work in tandem to resolve software issues. As depicted in Figure 1, the system is divided into two main stages: Patch Generation and Patch Selection. The process begins by establishing a controlled, containerized environment in which a set of integrated tools supports repository inspection, modification, and validation.

Figure 1: Overview of InfCode for automated code patch generation and selection.

The adversarial interaction between the Code Patch Generator and the Test Patch Generator lies at the heart of the framework. Tests are progressively strengthened to expose more subtle flaws, which in turn prompts the generation of more robust code patches. The resulting patches are intended to remain both functionally correct and robust under extensive testing.

Stage 1: Patch Generation

Patch Generation produces preliminary patches through an iterative adversarial process. Initially, the Test Patch Generator crafts test patches intended to expose the bug's behavior. The Code Patch Generator then produces code patches that aim to pass these tests. Both run in a containerized setting, which ensures consistency and reproducibility.

Adversarial Iterative Refinement: The adversarial mechanism is key to this stage. It drives the Test Patch Generator to develop stronger test suites, while the Code Patch Generator adapts by refining its patches to pass them. This iterative process settles into a dynamic equilibrium, leading to high-quality patches that are both broadly applicable and resistant to regression.
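
The refinement loop described above can be illustrated with a toy sketch. It is not the paper's implementation: in InfCode both generators are LLM agents operating on a repository, whereas here a "patch" is simply a Python function, a "test" is an (input, expected) pair, and the names `generate_test`, `generate_patch`, and `adversarial_refine` are hypothetical. The sketch only shows the control flow, in which each new failing test forces a stronger patch until no further counterexample is found.

```python
from typing import Callable, List, Optional, Tuple

TestCase = Tuple[int, int]      # (input, expected output)
Patch = Callable[[int], int]    # a candidate fix, modelled as a plain function


def passes(patch: Patch, tests: List[TestCase]) -> bool:
    """Return True if the patch satisfies every test case."""
    return all(patch(x) == expected for x, expected in tests)


def generate_test(patch: Patch, pool: List[TestCase],
                  tests: List[TestCase]) -> Optional[TestCase]:
    """Toy stand-in for the Test Patch Generator (hypothetical):
    pick an unused case from the pool that the current patch fails."""
    for case in pool:
        if case not in tests and patch(case[0]) != case[1]:
            return case
    return None


def generate_patch(candidates: List[Patch],
                   tests: List[TestCase]) -> Optional[Patch]:
    """Toy stand-in for the Code Patch Generator (hypothetical):
    return the first candidate that passes all current tests."""
    for patch in candidates:
        if passes(patch, tests):
            return patch
    return None


def adversarial_refine(candidates: List[Patch], pool: List[TestCase],
                       max_rounds: int = 10):
    """Alternate test strengthening and patch refinement."""
    tests: List[TestCase] = []
    patch = generate_patch(candidates, tests)
    for _ in range(max_rounds):
        if patch is None:
            break                       # no candidate survives the tests
        new_test = generate_test(patch, pool, tests)
        if new_test is None:
            break                       # no counterexample left: stop
        tests.append(new_test)
        patch = generate_patch(candidates, tests)
    return patch, tests


# Illustrative data: the intended behaviour is abs(x).
candidates = [lambda x: x, lambda x: x if x > 0 else 0, abs]
pool = [(2, 2), (0, 0), (-3, 3)]
final_patch, final_tests = adversarial_refine(candidates, pool)
```

In this run the first candidate passes the empty test suite, the test generator answers with the case `(-3, 3)`, and only `abs` survives it. A single added test is enough here; a realistic setting would need many rounds and a budget such as `max_rounds` to bound the cost.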

Stage 2: Patch Selection

Stage 2 selects the best patch from the candidates produced in Stage 1. The Selector agent evaluates patches on criteria such as correctness, coverage, execution stability, and compatibility. The evaluation takes place in the same controlled environment, which keeps outcomes reliable and reproducible. Patches are verified empirically through execution, and the most reliable one, which resolves the issue while passing the strengthened tests, is integrated into the repository.
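
One simple way to picture selection over these criteria is a weighted score, sketched below. The source names the criteria but does not say how the Selector combines them, so the weighted sum, the `WEIGHTS` values, the `CandidateReport` type, and the example numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CandidateReport:
    """Per-patch measurements, each assumed to be normalised to [0, 1]."""
    patch_id: str
    correctness: float     # fraction of tests passed
    coverage: float        # fraction of relevant code exercised
    stability: float       # fraction of repeated runs with identical results
    compatibility: float   # fraction of pre-existing tests still passing


# Hypothetical weights; the paper does not specify any.
WEIGHTS = {
    "correctness": 0.5,
    "coverage": 0.2,
    "stability": 0.2,
    "compatibility": 0.1,
}


def score(report: CandidateReport) -> float:
    """Weighted sum of the four criteria."""
    return sum(weight * getattr(report, name) for name, weight in WEIGHTS.items())


def select_patch(reports: List[CandidateReport]) -> CandidateReport:
    """Return the highest-scoring candidate."""
    if not reports:
        raise ValueError("no candidate patches to select from")
    return max(reports, key=score)


# Illustrative numbers only, not results from the paper.
reports = [
    CandidateReport("A", correctness=1.0, coverage=0.6, stability=0.9, compatibility=1.0),
    CandidateReport("B", correctness=0.8, coverage=0.9, stability=1.0, compatibility=1.0),
]
best = select_patch(reports)
```

With these weights candidate A scores about 0.90 and candidate B about 0.88, so A is selected because full correctness outweighs B's better coverage. An LLM-based Selector may reason over execution logs rather than a fixed formula, so this should be read only as a baseline for comparison.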

Results and Discussion

Experimental Validation

InfCode is evaluated on two datasets: SWE-bench Lite and SWE-bench Verified. The results show consistent improvements over baseline methods, including a state-of-the-art result of 79.4% on SWE-bench Verified. Results obtained with Claude 4.5 Sonnet are presented as further evidence of the framework's robustness across models of differing capability.

Figure 2: Overlap between issues resolved by InfCode and the top baseline methods.

A detailed analysis of tool invocation failures shows that most operations are robust, but execution errors in the Bash Tool warrant further refinement. Although infrequent, these errors stem predominantly from incorrect assumptions about the environment or from commands that do not exist in it, underscoring the need for environment-aware command use.
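
As one illustration of environment-aware command use, a tool wrapper can check that an executable exists before invoking it and return a structured error instead of failing. The sketch below is an assumption about how such a guard might look, not InfCode's Bash Tool; `ToolResult` and `run_command` are hypothetical names, and only standard-library calls (`shlex.split`, `shutil.which`, `subprocess.run`) are used.

```python
import shlex
import shutil
import subprocess
from dataclasses import dataclass


@dataclass
class ToolResult:
    ok: bool        # True only if the command ran and exited with status 0
    output: str     # stdout on success, otherwise an error description


def run_command(command: str, timeout: float = 30.0) -> ToolResult:
    """Run a simple command after checking that its executable is on PATH.

    Shell features such as pipes, redirection, and built-ins are
    deliberately not supported in this sketch.
    """
    args = shlex.split(command)
    if not args:
        return ToolResult(False, "empty command")
    if shutil.which(args[0]) is None:
        # Report the missing executable instead of letting the call fail.
        return ToolResult(False, f"command not found: {args[0]}")
    try:
        proc = subprocess.run(args, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return ToolResult(False, f"timed out after {timeout}s")
    except OSError as exc:
        return ToolResult(False, f"could not execute: {exc}")
    if proc.returncode != 0:
        return ToolResult(False, proc.stderr)
    return ToolResult(True, proc.stdout)
```

Returning a message such as `command not found: <name>` gives the calling agent something concrete to act on, for example choosing an alternative tool, rather than an opaque execution error.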

Figure 3: Distribution of the average number of tool invocations per problem and the corresponding failure rates.

Implications and Future Work

InfCode's adversarial approach highlights the importance of iterative co-evolution between test generation and patch generation in automated software repair. The methodology improves the reliability of patch generation and offers a scalable strategy for applying LLMs to complex, real-world problems. Future work could extend the framework to other programming languages, integrate more intelligent heuristics for test generation, and refine the tool implementations to reduce invocation failures.

Conclusion

InfCode sets a benchmark in automated software issue resolution by introducing an adversarial, multi-agent framework that iteratively refines test and patch quality. This strategy, paired with robust evaluation in containerized environments, positions InfCode as a leader in advancing repository-level issue resolution. Its success suggests a promising trajectory for integrating LLMs into practical software engineering workflows, paving the way for further developments and applications across diverse programming contexts.