- The paper demonstrates that scaling human motion data, model capacity, and compute significantly improves whole-body motion tracking for humanoid control.

- It introduces a universal policy with a shared latent token space that unifies diverse control modalities, enabling seamless sim-to-real transfer.

- The system achieves state-of-the-art tracking accuracy and robustness, supporting rapid interactive control and teleoperation in real-world scenarios.

SONIC: Supersizing Motion Tracking for Natural Humanoid Whole-Body Control

Introduction and Motivation

SONIC proposes a scalable, data-driven paradigm for humanoid control by identifying dense, physics-based whole-body motion tracking as the foundation task. The key insight is to leverage massive human motion-capture datasets for dense supervision, avoiding reliance on brittle, per-task reward engineering. SONIC trains a universal control policy capable of generalizing to diverse modalities—robot, human, and hybrid commands—enabling robust sim-to-real transfer and flexible downstream applications ranging from teleoperation to multi-modal semantic control.

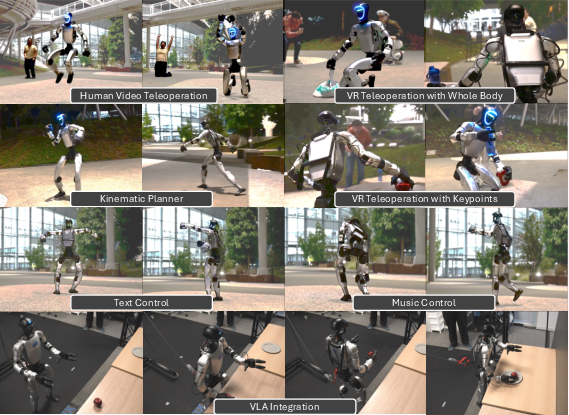

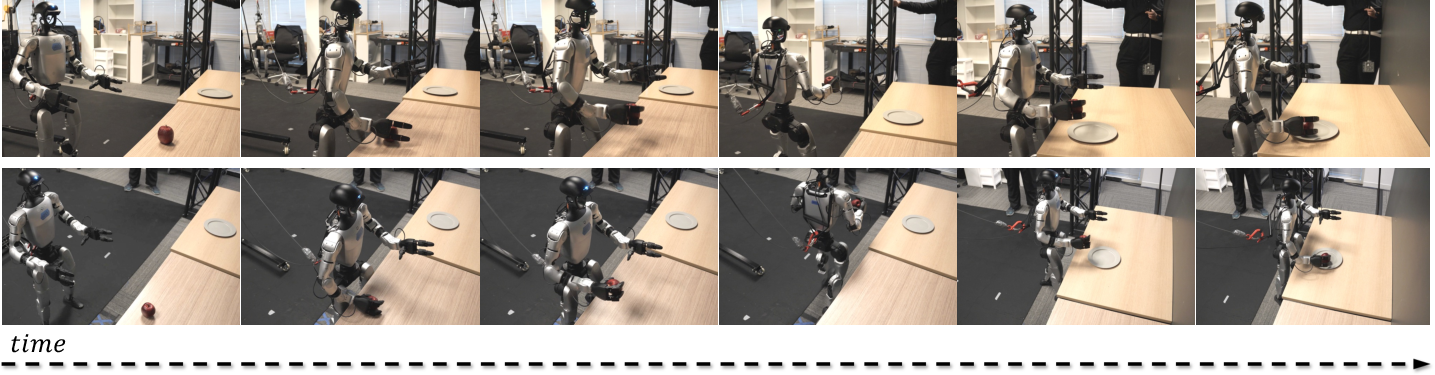

Figure 1: SONIC enables diverse humanoid tasks through a universal control policy that handles multiple input modalities and control interfaces.

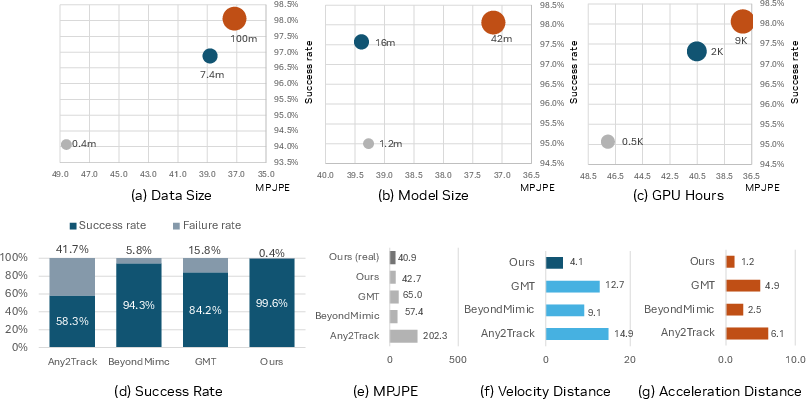

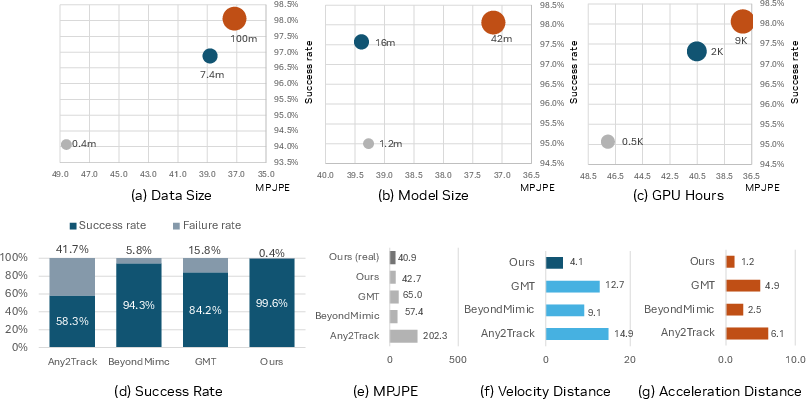

Scaling Law Analysis and Core Results

The empirical scaling study focuses on three axes: dataset size (up to 100M frames), model capacity (1.2M–42M parameters), and compute (up to 128 GPUs, 9k GPU-hours). The central finding is that motion-imitation performance improves monotonically as scale increases along each axis. Scaling data yields the strongest effect, followed by model capacity and then compute, with diminishing returns at lower scales. Increasing parallelism (e.g., 128 vs. 8 GPUs) is necessary to reach optimal performance, even at a fixed total GPU-hour budget.
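
The source reports this scaling trend qualitatively and does not give a fitted law. One common way to quantify such a trend is to fit a power law, error ≈ a · scale^(−b), by linear regression in log-log space, and to check that error never increases along an axis. The sketch below assumes NumPy; the function names are hypothetical and the numbers are synthetic, not results from the paper.

```python
import numpy as np


def fit_power_law(scale, error):
    """Fit error ~= a * scale**(-b) by least squares in log-log space; returns (a, b)."""
    scale = np.asarray(scale, dtype=float)
    error = np.asarray(error, dtype=float)
    if np.any(scale <= 0) or np.any(error <= 0):
        raise ValueError("scale and error must be strictly positive")
    # log(error) = log(a) - b * log(scale) is a straight line in log-log space.
    slope, intercept = np.polyfit(np.log(scale), np.log(error), deg=1)
    return float(np.exp(intercept)), float(-slope)


def is_monotonic_improvement(error):
    """True if the error never increases along a scale-sorted sequence."""
    return bool(np.all(np.diff(np.asarray(error, dtype=float)) <= 0))


# Synthetic, illustrative numbers only; these are NOT measurements from the paper.
frames = np.array([1e6, 1e7, 1e8])
mpjpe = 80.0 * (frames / 1e6) ** -0.2
a, b = fit_power_law(frames, mpjpe)
print(f"exponent b = {b:.3f}, monotonic: {is_monotonic_improvement(mpjpe)}")
```

A positive exponent b means error shrinks as scale grows. Comparing b across the data, model, and compute axes is one way to express a statement such as "data yields the strongest effect", although a faithful comparison would require the paper's actual measurements.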

Motion tracking is evaluated primarily with MPJPE and success rates on large, out-of-distribution benchmarks. SONIC outperforms state-of-the-art methods (e.g., Any2Track, BeyondMimic, GMT) across all metrics, especially in tracking long-horizon, complex behaviors that require stable whole-body balance and contact-rich skills.
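
MPJPE averages the Euclidean distance between tracked and reference joint positions over all joints and frames. The sketch below computes it together with a success rate. The failure criterion (a per-frame mean joint error above 0.5 m) and all function names are assumptions for illustration: the source does not state its success condition here, and benchmarks differ in whether positions are root-aligned first and whether errors are reported in millimetres.

```python
import numpy as np


def mpjpe(pred, target):
    """Mean per-joint position error for arrays shaped (T, J, 3): T frames, J joints."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    # Euclidean error of every joint on every frame, averaged over frames and joints.
    return float(np.linalg.norm(pred - target, axis=-1).mean())


def tracking_success(pred, target, max_frame_error=0.5):
    """Hypothetical success test: fail if any frame's mean joint error exceeds the threshold."""
    diff = np.asarray(pred, dtype=float) - np.asarray(target, dtype=float)
    per_frame = np.linalg.norm(diff, axis=-1).mean(axis=-1)
    return bool(np.all(per_frame <= max_frame_error))


def success_rate(rollouts, max_frame_error=0.5):
    """Fraction of (pred, target) rollouts that pass tracking_success."""
    passed = [tracking_success(p, t, max_frame_error) for p, t in rollouts]
    return sum(passed) / len(passed)


# Toy example: 10 frames, 24 joints, positions in metres.
target = np.zeros((10, 24, 3))
close = target + np.array([0.01, 0.0, 0.0])    # 1 cm offset on every joint
drifted = target + np.array([1.0, 0.0, 0.0])   # 1 m offset, counted as a failure
print(mpjpe(close, target))
print(success_rate([(close, target), (drifted, target)]))
```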

Figure 2: SONIC achieves lower mean per-joint position error (MPJPE) as dataset, model, and compute scale increase, and it outperforms baselines in both tracking accuracy and robustness on unseen motion sequences.

In real-world evaluation on the Unitree G1, SONIC achieves 100% success rate over 50 diverse, zero-shot motion sequences, showing sim-to-real robustness with negligible performance loss relative to simulation.

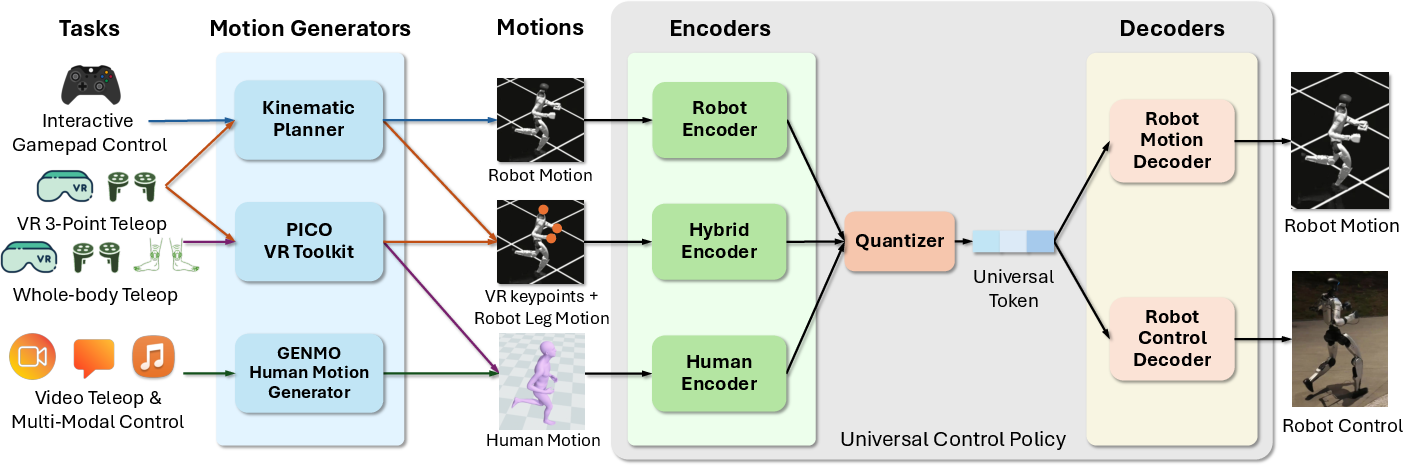

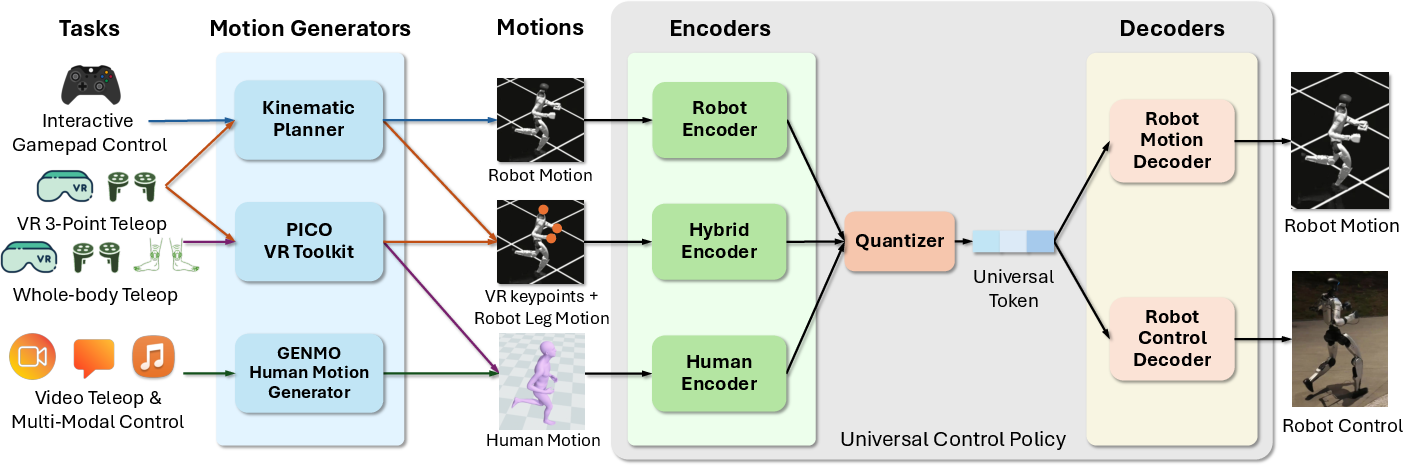

Universal Policy and Token Space Design

The architecture unifies disparate motion-command types through a shared latent token space produced by specialized encoders (robot, human, and hybrid). Every command is mapped into this universal embedding, from which the robot-control decoder generates joint actions. A finite scalar quantizer (FSQ) discretizes the latent tokens; this discretization is intended to make downstream use more robust and to support multitask, cross-embodiment transfer. Auxiliary decoders and cycle-consistency losses further regularize the latent-space alignment between human and robot trajectories.
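
In its generic form, finite scalar quantization bounds each latent dimension, rounds it to a small number of levels, and treats the resulting integer grid as an implicit codebook with no learned embedding table. The NumPy sketch below shows that forward pass. The level counts, latent size, and function names are hypothetical, since the source only states that FSQ is used. During training, a straight-through estimator would pass gradients through the rounding step; this NumPy sketch omits gradients entirely.

```python
import numpy as np


def fsq_quantize(z, levels):
    """Forward pass of finite scalar quantization.

    z:      array of shape (..., D) with unbounded latent values.
    levels: D odd integers, the number of quantization levels per dimension.
    Returns values in [-1, 1] snapped to a uniform grid in each dimension.
    """
    levels = np.asarray(levels)
    if np.any(levels % 2 == 0):
        raise ValueError("this sketch only handles odd level counts")
    half = (levels - 1) / 2.0
    bounded = np.tanh(z) * half        # squash each dimension into (-half, half)
    return np.round(bounded) / half    # snap to integers, rescale to [-1, 1]


def fsq_token_index(q, levels):
    """Map one quantized vector (shape (D,)) to an integer token id via a mixed-radix code."""
    levels = np.asarray(levels)
    half = (levels - 1) / 2.0
    digits = np.rint(q * half + half).astype(int)   # per-dimension index in [0, L - 1]
    index = 0
    for digit, num_levels in zip(digits, levels):
        index = index * int(num_levels) + int(digit)
    return index


levels = [5, 5, 5]                      # hypothetical: 3 latent dims, 5 levels each
q = fsq_quantize(np.array([-10.0, 0.0, 10.0]), levels)
token = fsq_token_index(q, levels)
print(q, token)
```

With three dimensions at five levels each, the implicit codebook has 5 × 5 × 5 = 125 tokens. If every encoder (robot, human, or hybrid) emits codes on the same grid, the decoder sees a single token vocabulary regardless of where a command came from.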

Figure 3: Universal control policy with multi-modal encoders and decoders enables seamless integration of diverse applications and input modalities.

This universal structure makes SONIC amenable to diverse control modalities and paves the way for policy compositionality and zero-shot generalization beyond the training distribution.

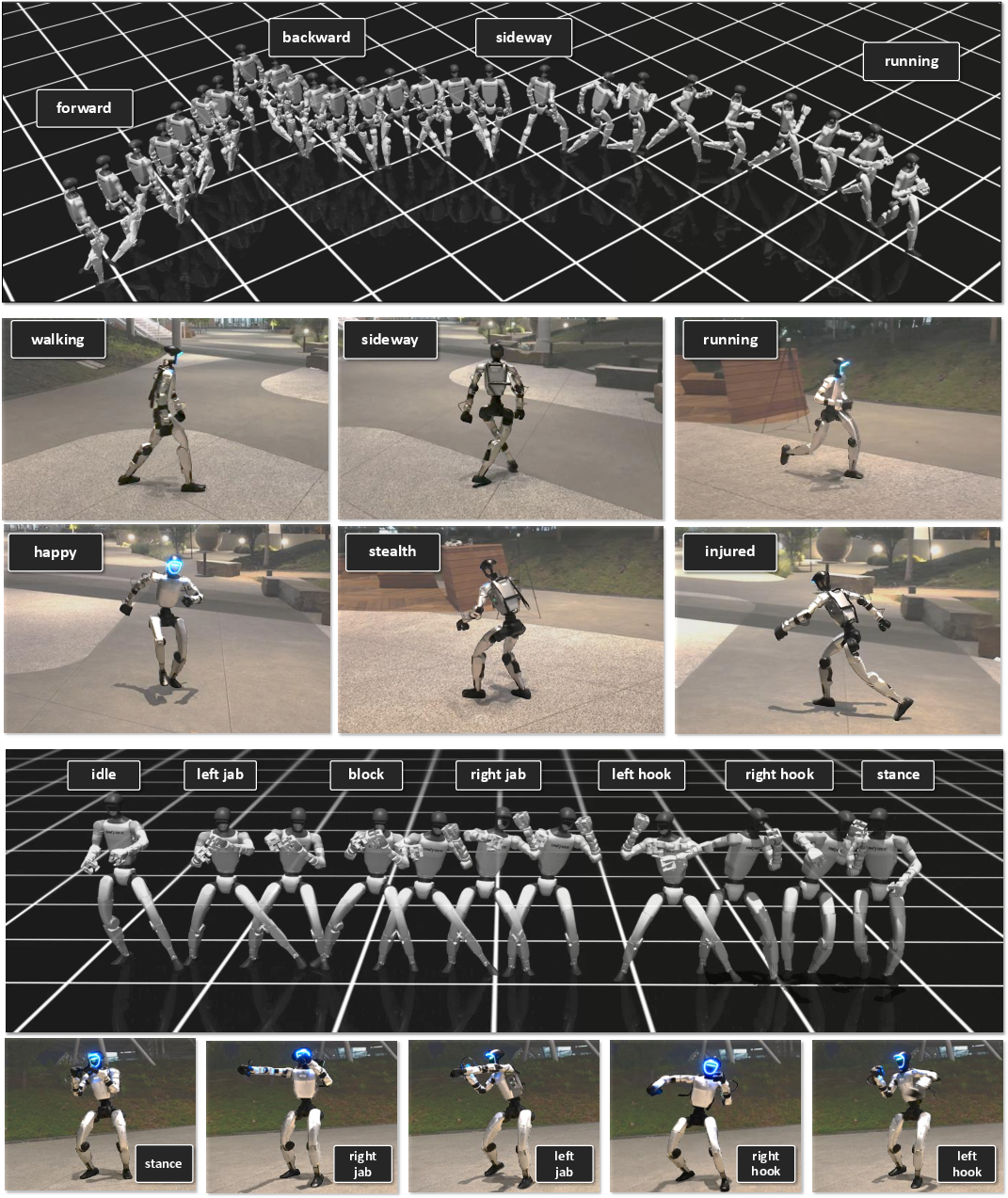

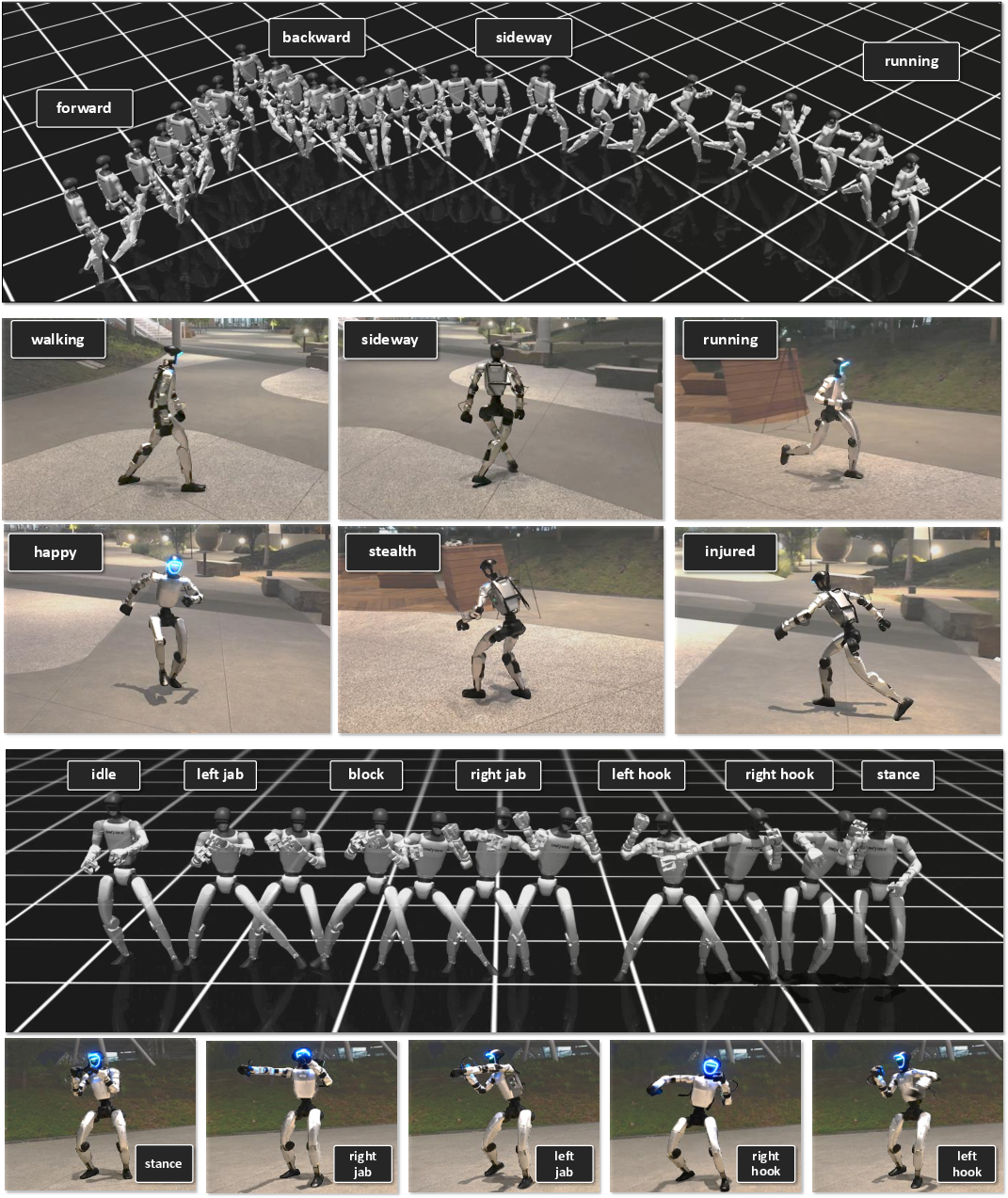

Kinematic Motion Planning and Interactive Control

SONIC includes a real-time generative kinematic planner trained with masked modeling in latent space. Conditioned on the current and goal keyframes, the planner synthesizes short-horizon inbetweening trajectories quickly enough for rapid replanning (under 12 ms on a Jetson Orin). A critically damped spring model proposes root trajectories from user commands (velocity, heading, style) to guide navigation robustly, while the generative planner handles the low-level motion synthesis.
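
A critically damped spring converges to its target as quickly as possible without overshooting, which suits turning abrupt user commands into smooth root motion. The sketch below, assuming NumPy, drives the planar root velocity toward a commanded velocity using the exact closed-form spring update and integrates the root position from that velocity. The stiffness `omega`, the time step, the horizon, and the function names are assumptions; the source does not give SONIC's parameters. Heading would need the same treatment with angle wrapping, which is omitted here.

```python
import numpy as np


def spring_step(x, v, goal, omega, dt):
    """Exact dt-step of a critically damped spring x'' = omega**2 * (goal - x) - 2 * omega * x'.

    Works element-wise on numpy arrays; returns the updated (x, v).
    """
    y0 = x - goal                  # offset from the target
    j = v + omega * y0
    decay = np.exp(-omega * dt)
    x_new = goal + (y0 + j * dt) * decay
    v_new = (v - omega * j * dt) * decay
    return x_new, v_new


def propose_root_trajectory(pos, vel, acc, cmd_vel, omega=4.0, dt=0.1, steps=20):
    """Hypothetical root proposal: planar root velocity follows the commanded velocity
    through a critically damped spring, and root position integrates that velocity."""
    positions, velocities = [], []
    for _ in range(steps):
        # The spring state is (velocity, acceleration); its target is the command.
        new_vel, acc = spring_step(vel, acc, cmd_vel, omega, dt)
        pos = pos + 0.5 * (vel + new_vel) * dt    # trapezoidal position update
        vel = new_vel
        positions.append(pos)
        velocities.append(vel)
    return np.array(positions), np.array(velocities)


# Robot at rest; the user asks for 1 m/s forward (x) in the world frame.
start = np.zeros(2)
positions, velocities = propose_root_trajectory(
    pos=start, vel=np.zeros(2), acc=np.zeros(2), cmd_vel=np.array([1.0, 0.0]))
print(positions[-1], velocities[-1])
```

Starting from rest, the proposed forward velocity rises monotonically toward 1 m/s without overshoot; a larger `omega` makes the response quicker at the cost of sharper accelerations.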

Figure 4: Robust interactive navigation, rapid style switching, and entertainment (boxing) motions with full-body responsiveness are enabled by SONIC's planner.

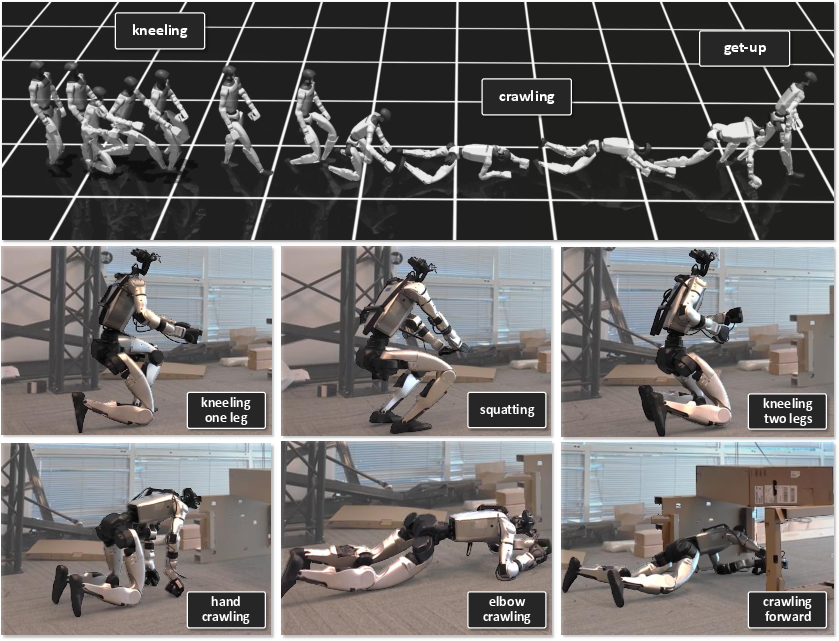

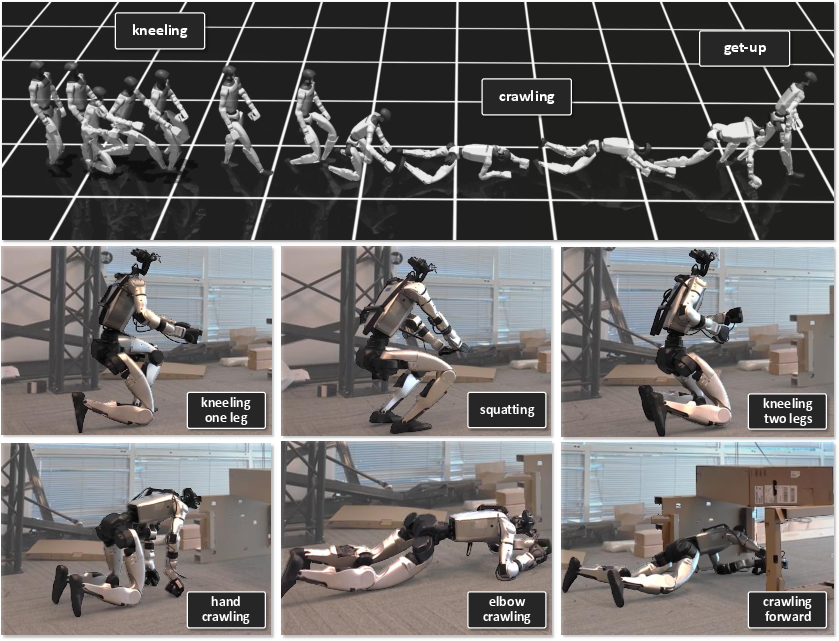

SONIC's planner enables seamless transitions across interactive skills (navigation, boxing, squatting, crawling) without retraining. The autoregressive inbetweening approach in latent space contributes robustness and supports dynamic, user-driven control scenarios.
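
Masked modeling trains a planner to fill in hidden frames between visible ones; autoregressive use then commits a few planned frames and replans from the latest state. The NumPy sketch below builds a masked inbetweening example, scores predictions only on the masked frames, and runs a receding-horizon loop. `linear_infill` is a placeholder standing in for the learned latent-space model, and all function names, the single context frame, and the commit length are assumptions rather than details from the source.

```python
import numpy as np


def make_inbetween_example(latents, num_context=1):
    """Mask every frame between the context prefix and the final (goal) keyframe.

    latents: (T, D) array of per-frame latent codes. Returns (inputs, mask), where
    mask[t] is True for frames the model must predict; those rows are zeroed in inputs.
    """
    latents = np.asarray(latents, dtype=float)
    mask = np.zeros(latents.shape[0], dtype=bool)
    mask[num_context:-1] = True        # context frames and the goal frame stay visible
    inputs = latents.copy()
    inputs[mask] = 0.0
    return inputs, mask


def masked_mse(pred, target, mask):
    """Reconstruction loss restricted to the masked (predicted) frames."""
    diff = (np.asarray(pred) - np.asarray(target))[mask]
    return float(np.mean(diff ** 2))


def linear_infill(inputs, mask):
    """Placeholder predictor, NOT a learned model: linearly interpolate the contiguous
    masked run between the last visible context frame and the goal frame."""
    out = inputs.copy()
    idx = np.flatnonzero(mask)
    if idx.size == 0:
        return out
    start, end = idx[0] - 1, idx[-1] + 1
    for t in idx:
        w = (t - start) / (end - start)
        out[t] = (1 - w) * inputs[start] + w * inputs[end]
    return out


def plan_autoregressively(current, goal, frames_to_goal, execute=2, predictor=linear_infill):
    """Receding-horizon inbetweening: fill the gap to the goal, commit the first
    `execute` frames, then replan from the last committed frame."""
    current = np.asarray(current, dtype=float)
    goal = np.asarray(goal, dtype=float)
    trajectory = [current]
    remaining = frames_to_goal
    while remaining > 0:
        window = np.zeros((remaining + 1, goal.shape[0]))
        window[0], window[-1] = trajectory[-1], goal
        inputs, mask = make_inbetween_example(window)
        plan = predictor(inputs, mask)
        step = min(execute, remaining)
        trajectory.extend(plan[1:1 + step])
        remaining -= step
    return np.array(trajectory)


clip = np.arange(12.0).reshape(6, 2)       # toy latent clip: 6 frames, 2 dims
inputs, mask = make_inbetween_example(clip)
print(masked_mse(linear_infill(inputs, mask), clip, mask))
print(plan_autoregressively(np.zeros(2), np.full(2, 4.0), frames_to_goal=4))
```

Because each window starts from the last committed frame, a goal changed by the user between windows would take effect at the next replanning step. In deployment, the current frame would come from the robot's actual state rather than from the previous plan.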

Figure 5: Interactive postural modulation: the robot squats, kneels, or crawls at any height—essential for teleoperation and confined navigation.

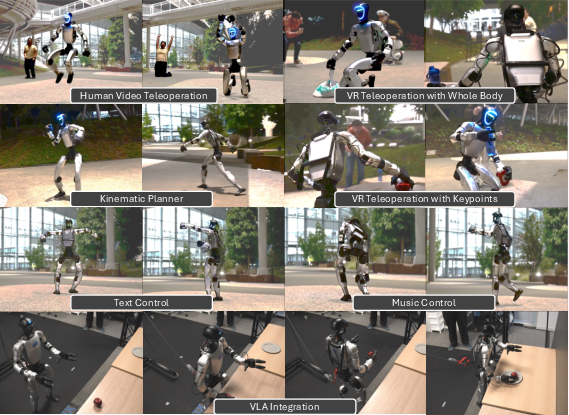

Multi-Modal and Cross-Embodiment Control

The encoder-decoder structure, unified token space, and planning pipeline enable flexible whole-body teleoperation, video teleoperation, and multi-modal (text, music, video) control. SONIC integrates GENMO, a generative multi-modal human motion model that synthesizes target trajectories from time-varying combinations of textual, audio, and visual signals. The system supports low-latency switching between control modalities in real time, with seamless handoffs.

Figure 6: Multi-modal whole-body and video teleoperation, supporting dynamic transitions between modalities (VR, video, text, audio).

The universal control policy enables true cross-embodiment mapping: human demonstration (from video or VR) is encoded—without explicit retargeting heuristics—into the latent space shared by robot policies, enabling direct application to humanoid execution.

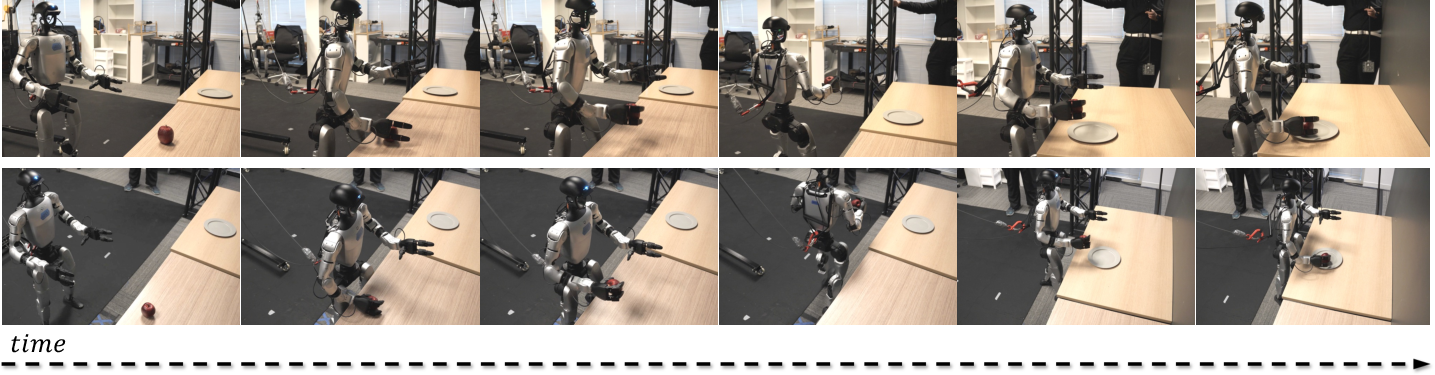

VLA Integration and Autonomous Manipulation

SONIC is compatible with high-level Vision-Language-Action (VLA) planners. As a proof of concept, a GR00T N1.5 VLA is fine-tuned on 300 VR-teleoperated apple-to-plate trajectories. The VLA emits teleoperation-format commands (head/wrist/base/nav), which the existing planner and policy stack then processes, reaching a 95% autonomous task success rate. This establishes a modular stack in which foundation models supply semantic, planning-level intent and SONIC delivers robust, real-time sensorimotor execution.
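
Because the VLA emits the same command format as VR teleoperation, the downstream planner and policy do not need to know which source produced a command. The sketch below shows one possible shape for such a message: a Python dataclass that flattens to a fixed-length vector, as a model output head might. Every field name, frame convention, and unit, as well as reading "base" as a target pelvis height, is an assumption; the source only lists the command groups (head/wrist/base/nav).

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]   # (w, x, y, z), assumed convention


@dataclass(frozen=True)
class TeleopCommand:
    """Hypothetical per-step command in teleoperation format (head/wrist/base/nav)."""
    head_pos: Vec3
    head_rot: Quat
    left_wrist_pos: Vec3
    left_wrist_rot: Quat
    right_wrist_pos: Vec3
    right_wrist_rot: Quat
    base_height: float   # assumed meaning of "base": target pelvis height in metres
    nav_cmd: Vec3        # assumed (vx, vy, yaw_rate) in the robot frame

    SIZE = 25            # unannotated class attribute, so not a dataclass field

    def to_vector(self) -> List[float]:
        """Flatten into a fixed-length list, e.g. the output layout of a policy head."""
        out: List[float] = []
        for part in (self.head_pos, self.head_rot, self.left_wrist_pos,
                     self.left_wrist_rot, self.right_wrist_pos, self.right_wrist_rot):
            out.extend(float(x) for x in part)
        out.append(float(self.base_height))
        out.extend(float(x) for x in self.nav_cmd)
        return out

    @classmethod
    def from_vector(cls, v: Sequence[float]) -> "TeleopCommand":
        """Inverse of to_vector; rejects vectors of the wrong length."""
        if len(v) != cls.SIZE:
            raise ValueError(f"expected {cls.SIZE} values, got {len(v)}")
        v = [float(x) for x in v]
        return cls(tuple(v[0:3]), tuple(v[3:7]), tuple(v[7:10]), tuple(v[10:14]),
                   tuple(v[14:17]), tuple(v[17:21]), v[21], tuple(v[22:25]))


cmd = TeleopCommand.from_vector(range(25))
print(cmd.base_height, cmd.nav_cmd)
```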

Figure 7: Foundation-model-driven (VLA) mobile, bimanual manipulation via finetuned policies demonstrates SONIC’s compatibility with autonomous, semantic-intent planners.

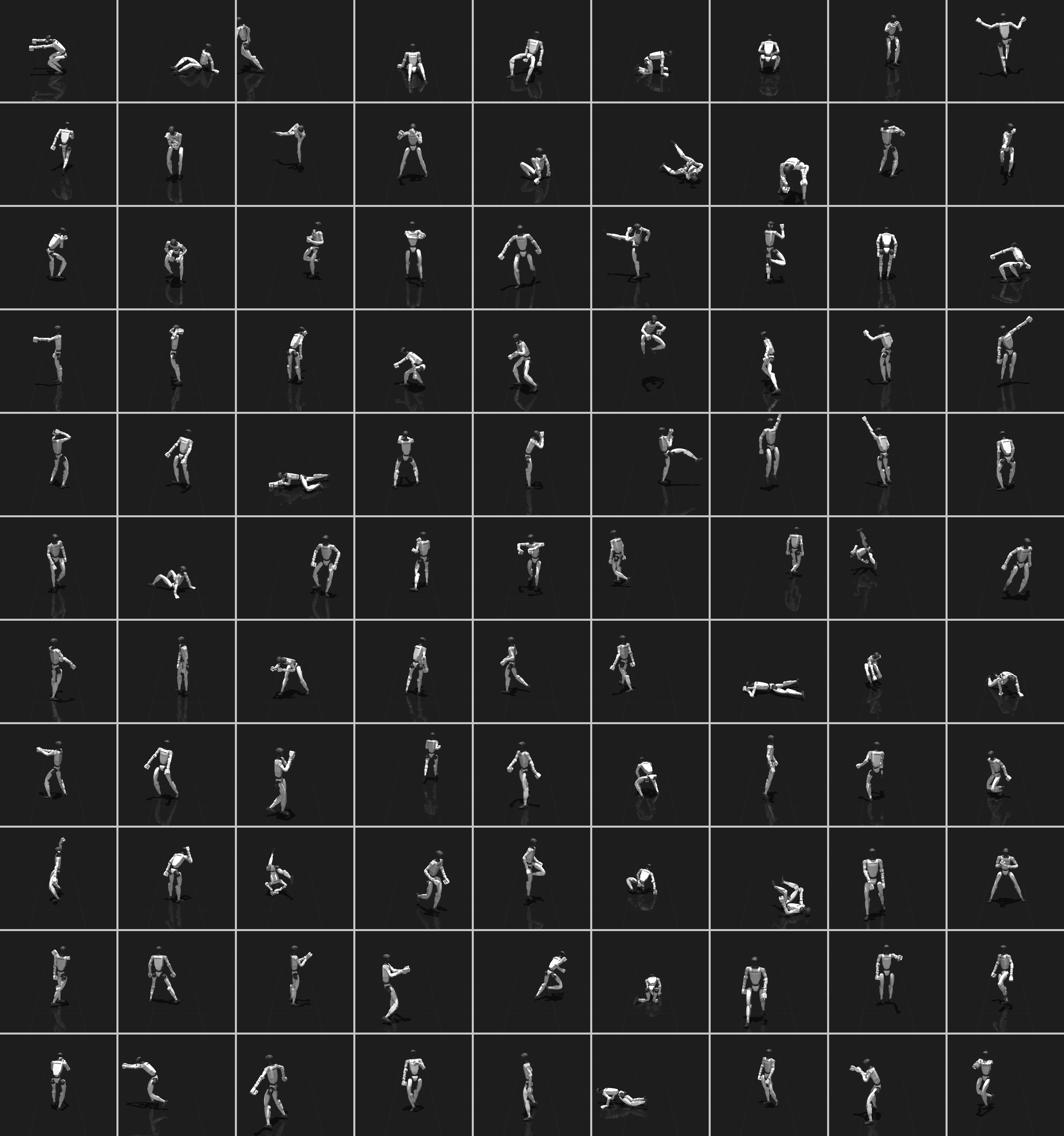

Dataset Construction and Domain Randomization

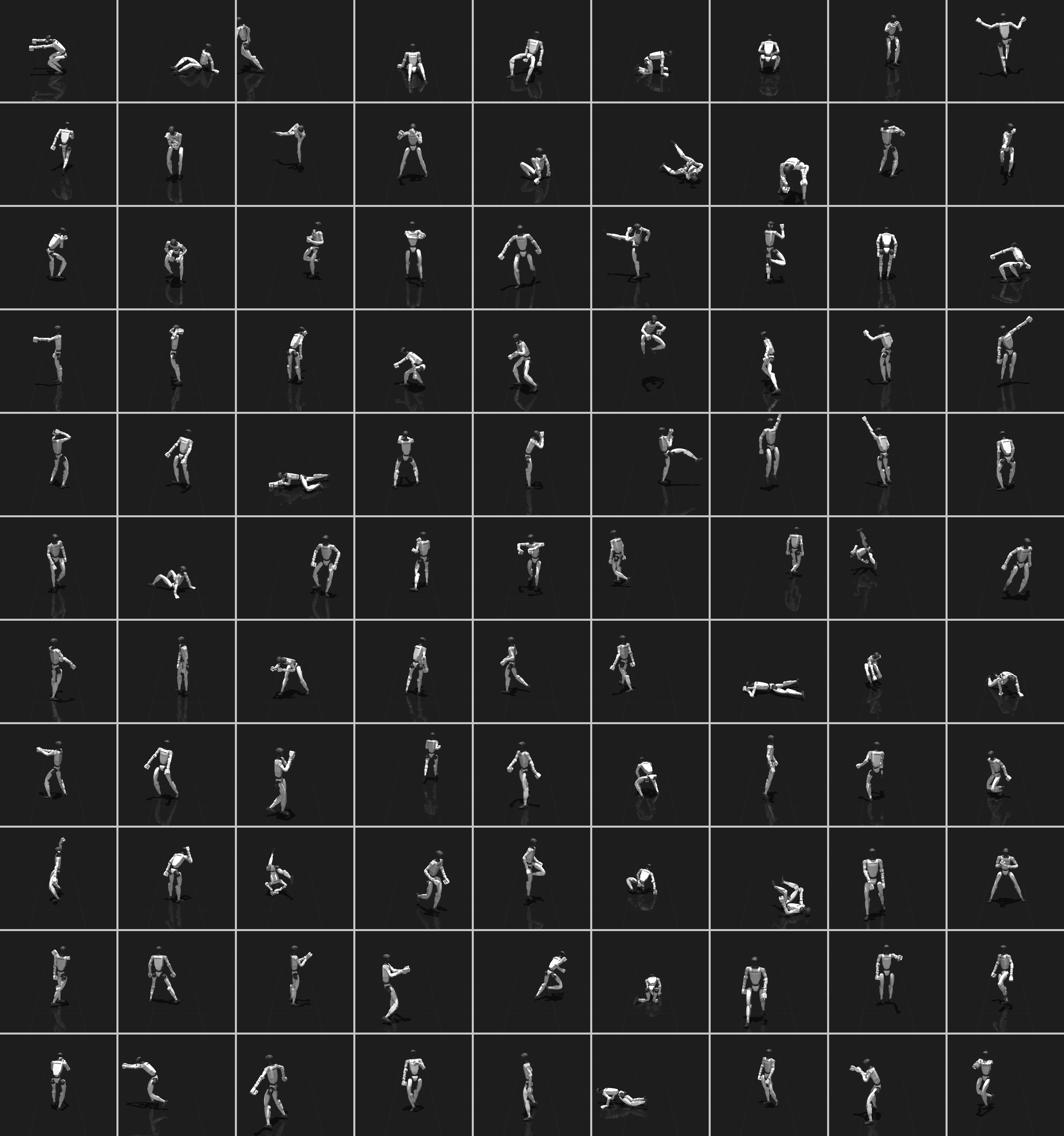

The training corpus contains over 700 hours of diverse, high-fidelity mocap data recorded from 170 human subjects, which helps SONIC generalize across body morphology, gender, and motion style. Human motions are retargeted to the robot with a robust kinematic mapping (GMR). To improve generalization and sim-to-real robustness, training applies extensive domain randomization over physics parameters, root perturbations, and command noise.
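
Domain randomization typically draws a fresh set of physics and perturbation parameters for each training episode and corrupts the policy's inputs with noise. The sketch below, assuming NumPy, covers the three categories the source names (physics, root perturbations, command noise). Every parameter name and range is hypothetical, since the source does not report SONIC's values.

```python
import numpy as np

# Hypothetical ranges: the source names the categories but not the values.
RANGES = {
    "friction": (0.3, 1.2),               # physics
    "mass_scale": (0.9, 1.1),             # physics
    "motor_strength_scale": (0.9, 1.1),   # physics
    "push_interval_s": (5.0, 10.0),       # root perturbation timing
    "push_velocity_mps": (0.0, 0.5),      # root perturbation magnitude
}
COMMAND_NOISE_STD = 0.01                  # hypothetical command-noise scale


def sample_episode_params(rng):
    """Draw one set of physics and perturbation parameters for a training episode."""
    return {name: float(rng.uniform(lo, hi)) for name, (lo, hi) in RANGES.items()}


def noisy_command(command, rng, std=COMMAND_NOISE_STD):
    """Add zero-mean Gaussian noise to the reference command fed to the policy."""
    command = np.asarray(command, dtype=float)
    return command + rng.normal(0.0, std, size=command.shape)


rng = np.random.default_rng(0)
print(sample_episode_params(rng))
print(noisy_command([0.5, 0.0, 0.1], rng))
```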

Figure 8: Random mocap samples from the 100M-frame, high-diversity human motion corpus used to train SONIC.

Implementation and Deployment Considerations

Real-world deployment is realized entirely onboard the Unitree G1, leveraging the Jetson Orin for inference (policy ∼2 ms, planner 12 ms). Processes are decoupled for responsiveness and timing predictability: the control policy loop runs at 50 Hz, planning at 10 Hz, and commands are streamed to joint actuators at 500 Hz. The latest-state-wins protocol and multi-process design minimize jitter and maintain smooth teleoperation or autonomous execution across interfaces.
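
The rate structure described above (planning at 10 Hz, the policy at 50 Hz, actuator commands at 500 Hz) and the latest-state-wins rule can be illustrated with a single-process, tick-based simulation. In the sketch below, the hypothetical `LatestValue` class is a one-slot mailbox: writers overwrite it and readers always see the newest value, so stale plans are dropped rather than queued. This is an illustration under assumptions, not SONIC's multi-process implementation; a real deployment would use separate processes with shared memory or sockets, and here the 500 Hz stream simply re-sends the most recent policy output.

```python
class LatestValue:
    """One-slot mailbox implementing latest-state-wins: writes overwrite, reads see the newest."""

    def __init__(self, initial=None):
        self._value = initial
        self._version = 0          # bumped on every write so readers can detect staleness

    def write(self, value):
        self._value = value
        self._version += 1

    def read(self):
        return self._value, self._version


def run_schedule(duration_s=1.0, base_hz=500, policy_hz=50, planner_hz=10):
    """Simulate the three loop rates on a shared base-rate tick and count executions."""
    plans, actions = LatestValue(), LatestValue()
    counts = {"planner": 0, "policy": 0, "actuator": 0}
    for tick in range(int(duration_s * base_hz)):
        if tick % (base_hz // planner_hz) == 0:
            counts["planner"] += 1
            plans.write(f"plan-{counts['planner']}")
        if tick % (base_hz // policy_hz) == 0:
            plan, _ = plans.read()                   # newest plan only; older ones were overwritten
            counts["policy"] += 1
            actions.write((plan, counts["policy"]))  # stand-in for joint targets
        action, _ = actions.read()
        if action is not None:
            counts["actuator"] += 1                  # re-send the latest joint targets
    return counts


print(run_schedule())
```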

Numerical Results and Empirical Claims

- Scaling to 128 GPUs and 100M frames yields monotonic improvements across all metrics.

- SONIC achieves 100% real-world tracking success and 95% success in VLA-driven manipulation, and it outperforms all baselines in accuracy and robustness on out-of-distribution (OOD) motion-tracking benchmarks.

- Performance improves consistently with scale in data, model, and compute, in contrast to the stagnation observed in prior small-scale humanoid controllers.

- Demonstrated zero-shot generalization to unseen, diverse motion types and real-world morphologies.

- Low-latency, high-frequency planning and control enable responsive, natural interactive control and teleoperation without post-hoc fine-tuning.

Implications, Limitations, and Directions

The results support the hypothesis that motion tracking at scale is a viable route to acquiring broad, transferable sensorimotor priors for humanoids, analogous to the utility of language modeling in NLP or visual pretraining in CV. By removing the dependence on task-specific reward design, SONIC's approach significantly reduces the engineering barrier to scaling generalist humanoid policies.

Practical impact includes rapid prototyping of new interactive skills, teleoperation for manipulation or navigation, and direct integration with semantic VLA models for high-level reasoning. The unified latent token design enables future research on policy composition, multitask conditioning, or hierarchical control architectures.

Limitations remain: the safety of high-DoF robots running domain-randomized policies needs formal assurance, and energy efficiency, compliance, and long-horizon reasoning are not addressed in this work. Performance under extremely noisy input modalities and robustness to catastrophic failures (falls, collisions) warrant further study.

Future work could focus on jointly training the planner, encoders, and policy to further reduce modality gaps, extending cross-embodiment transfer to broader morphologies, and scaling the VLA integration to complex, multi-stage tasks. Other directions include exploring self-supervised or open-world data sources and formalizing scaling laws for motion tracking, akin to those established for language and vision foundation models.

Conclusion

SONIC establishes large-scale motion tracking as a practical and effective foundation objective for universal humanoid control. By exploiting massive, diverse motion data and scaling compute/model capacity, SONIC produces generalist policies that achieve robust, natural, and versatile whole-body behavior across real-world scenarios. The architecture's flexibility—enabled by universal token spaces, generative planning, and multi-modal encoders—positions it as a foundation upon which high-level perception, language-based reasoning, and general-purpose autonomy for humanoids can be systematically built.