- The paper demonstrates a unified framework that fuses motion tracking with guided diffusion, enabling versatile and natural humanoid control with robust sim-to-real transfer.

- It employs a scalable motion tracking pipeline with adaptive sampling and domain randomization to efficiently learn and reproduce complex human motions.

- Diffusion-driven policies facilitate zero-shot task adaptation, effectively handling diverse objectives like navigation, teleoperation, and obstacle avoidance.

BeyondMimic: A Unified Framework for Versatile Humanoid Control via Guided Diffusion

Introduction

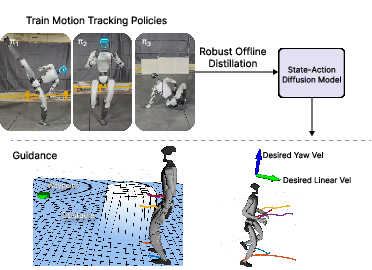

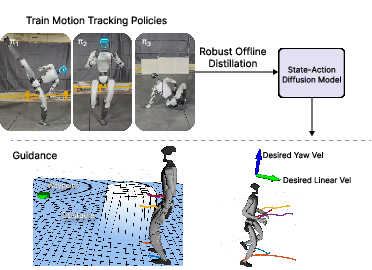

The "BeyondMimic" framework addresses two critical challenges in humanoid robotics: scalable, high-fidelity motion tracking for sim-to-real transfer, and flexible, task-driven control via guided diffusion policies. By integrating robust motion tracking with a state-action diffusion model, BeyondMimic enables the synthesis and deployment of diverse, dynamic, and naturalistic human motions on real humanoid hardware, supporting zero-shot adaptation to downstream tasks such as navigation, teleoperation, and obstacle avoidance.

Figure 1: Overview of BeyondMimic: robust motion tracking policies are trained for sim-to-real transfer, distilled into a state-action diffusion model, and deployed for downstream tasks with guidance (e.g., waypoint navigation and joystick control).

Scalable Motion Tracking Pipeline

Tracking Objective and Observations

BeyondMimic employs a unified MDP and a single hyperparameter set to train motion tracking policies from minutes-long human motion references. The tracking objective anchors the reference motion to a selected body (typically the root or torso). This tolerates global drift while preserving the motion's style. The observation space includes reference phase information, the anchor pose-tracking error, and proprioceptive features. An asymmetric actor-critic architecture is used to improve training efficiency.
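The following is a minimal NumPy sketch of the anchoring idea. It re-expresses reference body positions around the robot's current anchor. The convention it uses is an assumption for illustration and is not stated above: the anchor's horizontal position and heading come from the robot, and its height comes from the reference. The function names `yaw_rotation` and `anchor_reference` are also hypothetical.

```python
import numpy as np


def yaw_rotation(yaw: float) -> np.ndarray:
    """3x3 rotation matrix about the world z-axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def anchor_reference(ref_body_pos, ref_anchor_pos, ref_anchor_yaw,
                     robot_anchor_pos, robot_anchor_yaw):
    """Re-express reference body positions around the robot's anchor body.

    Hypothetical convention: the anchor's x/y position and heading are taken
    from the robot, its height (z) from the reference.
    """
    ref_body_pos = np.asarray(ref_body_pos, dtype=float)      # [num_bodies, 3]
    ref_anchor_pos = np.asarray(ref_anchor_pos, dtype=float)  # [3]
    # Offsets of each reference body from the reference anchor (world frame).
    offsets = ref_body_pos - ref_anchor_pos
    # Rotate the offsets by the heading difference between the two anchors.
    delta = yaw_rotation(robot_anchor_yaw - ref_anchor_yaw)
    # Target anchor: robot x/y, reference z.
    target_anchor = np.array([robot_anchor_pos[0], robot_anchor_pos[1],
                              ref_anchor_pos[2]])
    return target_anchor + offsets @ delta.T
```

The robot's actual body positions can then be compared against these targets to give Cartesian tracking errors. Only the anchor is re-positioned, so accumulated global drift is not penalized. The placement of bodies relative to the anchor, which carries the motion's style, is still tracked.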

Joint Impedance and Action Design

Joint impedance parameters are set heuristically to promote compliance and robustness, because high-impedance controllers are impractical for hardware deployment. Actions are normalized joint position setpoints. They serve as intermediate variables for torque generation, not as position targets the joints must reach exactly.
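The text says only that impedance is set heuristically. One common heuristic, assumed here, chooses stiffness and damping so that each joint behaves like a second-order system. Given the joint's reflected (armature) inertia, that system has a target natural frequency and damping ratio. A PD law then maps normalized actions to torques. The 10 Hz frequency, the damping ratio of 2, the 0.25 action-scale fraction, and the function names are hypothetical placeholders.

```python
import numpy as np


def pd_gains(reflected_inertia, natural_freq_hz=10.0, damping_ratio=2.0):
    """Stiffness/damping giving the joint model I*q'' + kd*q' + kp*q = 0
    the chosen natural frequency and damping ratio (hypothetical defaults)."""
    omega = 2.0 * np.pi * natural_freq_hz
    kp = reflected_inertia * omega ** 2
    kd = 2.0 * damping_ratio * reflected_inertia * omega
    return kp, kd


def action_to_torque(action, q, qd, q_default, kp, kd, effort_limit,
                     scale_frac=0.25):
    """Map a normalized action to a joint torque through a PD law."""
    # Hypothetical scaling rule: a unit action shifts the setpoint far enough
    # that the spring term alone demands scale_frac of the effort limit.
    action_scale = scale_frac * effort_limit / kp
    q_setpoint = q_default + action_scale * action
    # The setpoint is an intermediate variable: the joint is pulled toward it
    # compliantly and is not required to reach it exactly.
    tau = kp * (q_setpoint - q) - kd * qd
    return np.clip(tau, -effort_limit, effort_limit)
```

Under this rule, low stiffness makes a given action produce a larger setpoint offset, and the torque stays bounded by the effort limit.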

Reward Structure and Adaptive Sampling

Task rewards are derived from body tracking errors in Cartesian space. Each error is passed through a Gaussian-style exponential kernel, so the reward equals 1 at zero error and decays toward 0 as the error grows. Minimal regularization penalties (joint limits, action smoothness, self-collision) are included for sim-to-real alignment. Adaptive sampling prioritizes difficult motion segments during training. As the ablation studies demonstrate, this significantly accelerates convergence and improves robustness.
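The following minimal sketch illustrates both ideas. The first part applies a Gaussian-style reward kernel to a mean squared Cartesian error. The second part is an adaptive sampler that splits the reference motion into bins and tracks a smoothed failure rate for each bin. It mixes the resulting distribution with a uniform one. The bin scheme, the moving-average update, the uniform mixing weight, `sigma`, and all class and function names are assumptions, not the paper's specification.

```python
import numpy as np


def tracking_reward(error, sigma):
    """Gaussian-style kernel: 1 at zero error, decaying smoothly toward 0."""
    return np.exp(-error / sigma ** 2)


def body_position_reward(robot_pos, target_pos, sigma=0.3):
    """Reward from the mean squared Cartesian error over tracked bodies ([num_bodies, 3])."""
    sq_err = np.sum((np.asarray(robot_pos) - np.asarray(target_pos)) ** 2, axis=-1)
    return float(tracking_reward(np.mean(sq_err), sigma))


class AdaptiveSampler:
    """Chooses episode start bins, favoring bins where episodes tend to fail."""

    def __init__(self, num_bins, uniform_mix=0.1, decay=0.9, seed=0):
        self.fail_rate = np.zeros(num_bins)
        self.uniform_mix = uniform_mix
        self.decay = decay
        self.rng = np.random.default_rng(seed)

    def update(self, bin_idx, failed):
        # Exponential moving average of the failure indicator for this bin.
        self.fail_rate[bin_idx] = (self.decay * self.fail_rate[bin_idx]
                                   + (1.0 - self.decay) * float(failed))

    def probabilities(self):
        n = len(self.fail_rate)
        total = self.fail_rate.sum()
        # Normalize failure rates; fall back to uniform before any failures.
        weighted = self.fail_rate / total if total > 0 else np.full(n, 1.0 / n)
        # Mix with a uniform distribution so easy segments are still visited.
        return (1.0 - self.uniform_mix) * weighted + self.uniform_mix / n

    def sample(self):
        return int(self.rng.choice(len(self.fail_rate), p=self.probabilities()))
```

Because of the uniform component, every segment stays reachable. A bin whose failure rate decays to zero is therefore still revisited occasionally.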

Domain Randomization and Termination

Targeted domain randomization (ground friction, joint offsets, torso CoM) and environmental perturbations are applied to enhance policy robustness. Episodes terminate upon significant deviation in anchor body pose or end-effector height, with resets adaptively sampled from the reference trajectory.
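The sketch below shows one way to implement per-episode randomization and the termination test. The text names only the randomized quantities and the termination criteria. Everything else here is hypothetical: the parameter ranges, the tolerances, the use of the anchor's local z-axis to measure orientation deviation, and the function names.

```python
import numpy as np


def sample_randomization(rng, num_joints):
    """Draw one set of physical parameters for an episode (ranges are hypothetical)."""
    return {
        "ground_friction": rng.uniform(0.3, 1.6),
        "joint_offsets": rng.uniform(-0.01, 0.01, size=num_joints),  # rad
        "torso_com_offset": rng.uniform(-0.05, 0.05, size=3),        # m
    }


def should_terminate(anchor_z, ref_anchor_z, anchor_up, ref_anchor_up,
                     ee_z, ref_ee_z, z_tol=0.25, tilt_tol=0.8, ee_tol=0.25):
    """Early termination on large anchor-pose or end-effector-height deviation.

    anchor_up / ref_anchor_up: unit vectors of the anchor body's local z-axis
    expressed in the world frame. Thresholds (m, rad) are hypothetical.
    """
    # Anchor height deviation.
    if abs(anchor_z - ref_anchor_z) > z_tol:
        return True
    # Anchor orientation deviation: angle between the two local z-axes.
    cos_tilt = np.clip(np.dot(anchor_up, ref_anchor_up), -1.0, 1.0)
    if np.arccos(cos_tilt) > tilt_tol:
        return True
    # Any end effector whose height deviates too far from the reference.
    ee_err = np.abs(np.asarray(ee_z, dtype=float) - np.asarray(ref_ee_z, dtype=float))
    return bool(np.any(ee_err > ee_tol))
```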

Guided Diffusion for Task-Driven Control

State-Action Diffusion Model

BeyondMimic distills expert tracking policies into a state-action co-diffusion model using the Diffuse-CLoC framework. The model predicts future trajectories conditioned on observation history, trained via denoising diffusion with independent noise schedules for states and actions. During inference, classifier guidance is applied via differentiable cost functions, enabling flexible adaptation to new tasks without retraining.
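To illustrate what independent noise schedules for states and actions mean during training, here is a minimal NumPy sketch of a DDPM-style forward process. The state and action channels each get their own schedule and an independently drawn diffusion step. The linear beta schedule, the epsilon-prediction loss, and all names are assumptions. The denoising network is omitted; in the described model it would be conditioned on the observation history.

```python
import numpy as np


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative signal fraction for a linear DDPM beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(x0, alpha_bar, k, rng):
    """Forward diffusion: x_k = sqrt(abar_k) * x0 + sqrt(1 - abar_k) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[k]) * x0 + np.sqrt(1.0 - alpha_bar[k]) * eps, eps


def make_training_sample(states, actions, abar_s, abar_a, rng):
    """Noise states and actions with independently drawn steps from their own schedules."""
    k_s = int(rng.integers(len(abar_s)))
    k_a = int(rng.integers(len(abar_a)))
    noisy_s, eps_s = add_noise(states, abar_s, k_s, rng)
    noisy_a, eps_a = add_noise(actions, abar_a, k_a, rng)
    return {"noisy_states": noisy_s, "noisy_actions": noisy_a,
            "k_s": k_s, "k_a": k_a, "eps_s": eps_s, "eps_a": eps_a}


def denoising_loss(pred_eps_s, pred_eps_a, sample):
    """Epsilon-prediction MSE summed over the state and action channels."""
    return float(np.mean((pred_eps_s - sample["eps_s"]) ** 2)
                 + np.mean((pred_eps_a - sample["eps_a"]) ** 2))
```

The noise levels are drawn independently, so the denoiser sees every combination of clean and noisy states and actions during training. A single shared level would only ever show it matched corruption.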

Guidance Mechanisms

Task-specific cost functions are defined for joystick steering, waypoint navigation, and obstacle avoidance. Guidance is implemented as the negative gradient of the cost with respect to the trajectory, driving the sampling process toward lower-cost regions and enabling zero-shot adaptation to diverse objectives.
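The sketch below gives illustrative costs for the three tasks over a planned root trajectory. Each function returns the cost value and its analytic gradient. A single guided update then moves the trajectory along the negative gradient. The following are assumptions:

- the cost forms: distance to a final waypoint, finite-difference velocity error against a joystick command, and a squared penetration penalty for a disc-shaped obstacle;
- the step weight;
- all names.

In an actual classifier-guided sampler, this gradient would be applied at each denoising step rather than once.

```python
import numpy as np


def waypoint_cost_grad(root_xy, goal_xy):
    """C = ||p_T - g||^2 on the last planned root position."""
    root_xy = np.asarray(root_xy, dtype=float)
    diff = root_xy[-1] - goal_xy
    grad = np.zeros_like(root_xy)
    grad[-1] = 2.0 * diff
    return float(diff @ diff), grad


def joystick_cost_grad(root_xy, v_cmd, dt):
    """C = sum_t ||(p_{t+1} - p_t) / dt - v_cmd||^2."""
    root_xy = np.asarray(root_xy, dtype=float)
    err = np.diff(root_xy, axis=0) / dt - v_cmd  # [T-1, 2]
    g = 2.0 * err / dt
    grad = np.zeros_like(root_xy)
    grad[1:] += g   # contribution through p_{t+1}
    grad[:-1] -= g  # contribution through p_t
    return float(np.sum(err ** 2)), grad


def obstacle_cost_grad(root_xy, center, radius):
    """C = sum_t max(0, r - ||p_t - c||)^2 for a disc-shaped obstacle."""
    root_xy = np.asarray(root_xy, dtype=float)
    offset = root_xy - center
    dist = np.linalg.norm(offset, axis=-1, keepdims=True)
    pen = np.maximum(0.0, radius - dist)
    # dC/dp_t = -2 * pen * (p_t - c) / ||p_t - c||, zero outside the obstacle.
    grad = -2.0 * pen * offset / np.maximum(dist, 1e-9)
    return float(np.sum(pen ** 2)), grad


def guided_step(root_xy, grad, weight):
    """Move the trajectory along the negative cost gradient."""
    return np.asarray(root_xy, dtype=float) - weight * grad
```

The gradient of a weighted sum of costs is the weighted sum of their gradients. Objectives can therefore be combined, for example reaching a waypoint while avoiding an obstacle, by adding the returned gradients before the update.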

Experimental Validation

BeyondMimic is validated on the Unitree G1 humanoid robot, executing a broad spectrum of motions from the LAFAN1 dataset and prior works. The framework demonstrates robust tracking of dynamic, static, stylized, and previously undemonstrated motions, including cartwheels, spins, sprints, and expressive dances. All policies are trained with a single set of hyperparameters, highlighting scalability and generality.

Adaptive Sampling Ablation

Adaptive sampling halves the required training iterations for short motions and enables convergence on long, challenging sequences that fail under uniform sampling. This mechanism is critical for efficient learning of difficult motion segments.

Diffusion Policy State Representation

Ablation studies on state representation reveal that Cartesian body position encoding significantly outperforms joint angle encoding for sim-to-real transfer. The Body-Pos state achieves 100% success in walk-perturb tasks and 80% in joystick control. Joint-Rot, by contrast, fails at joystick control and exhibits lower robustness. The spatial grounding of body positions mitigates compounding errors and supports effective guidance.
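The following toy example illustrates the compounding-error argument; it is not an experiment from the paper. It uses the forward kinematics of a hypothetical three-link planar chain. A small error in the proximal joint angle displaces every distal body, and the displacement grows along the chain. In a Cartesian body-position state, by contrast, each body's position error is represented directly instead of being propagated through the chain.

```python
import numpy as np


def planar_fk(joint_angles, link_lengths):
    """Tip positions of every link in a planar serial chain rooted at the origin."""
    absolute = np.cumsum(joint_angles)  # each link's world-frame angle
    segments = np.stack([np.cos(absolute), np.sin(absolute)], axis=-1)
    segments *= np.asarray(link_lengths, dtype=float)[:, None]
    return np.cumsum(segments, axis=0)  # [num_links, 2]


lengths = [0.3, 0.3, 0.3]  # hypothetical link lengths (m)
nominal = planar_fk(np.zeros(3), lengths)
# A 0.05 rad error at the proximal joint only.
perturbed = planar_fk(np.array([0.05, 0.0, 0.0]), lengths)
displacement = np.linalg.norm(perturbed - nominal, axis=-1)
```

Here each tip's displacement is the chord 2·r·sin(0.025), where r is the tip's distance from the base. The error in one angle therefore turns into a positional error proportional to how far each body lies down the chain.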

Discussion

Sim-to-Real Transfer

The framework achieves strong sim-to-real performance through careful problem formulation, targeted randomization, and efficient C++ implementation. The sim-to-real gap is minimized without complex adaptation or motion-specific tuning, providing a reproducible foundation for agile humanoid control.

Out-of-Distribution Behavior

Diffusion policies behave inertly in out-of-distribution scenarios, remaining stationary rather than producing erratic actions. This contrasts with typical RL policies and enhances safety and stability in real-world deployment.

Skill Transitions

Current action-based diffusion models struggle with transitions between distinct skills, often becoming trapped in local modes. Classifier guidance can direct transitions, but its effectiveness diminishes as the manifold distance between skills increases. Future work should address improved skill transition mechanisms within the diffusion framework.

Implications and Future Directions

BeyondMimic demonstrates that unified motion tracking and guided diffusion can enable versatile, high-fidelity humanoid control on real hardware. The framework's scalability, robustness, and expressiveness suggest broad applicability in robotics, animation, and human-robot interaction. Key future directions include:

- Enhancing state estimation for diverse contact-rich motions

- Improving skill transition capabilities in diffusion models

- Extending guided diffusion to more complex, multi-agent, or interactive tasks

- Integrating multimodal conditioning (e.g., text, vision) for richer control interfaces

Conclusion

BeyondMimic establishes a practical and scalable approach for learning from human motions and deploying versatile, naturalistic humanoid control via guided diffusion. Its unified pipeline, robust sim-to-real transfer, and flexible task adaptation mark a significant advance in the synthesis and deployment of whole-body humanoid skills, with promising implications for future research in generalizable robot control and generative policy learning.