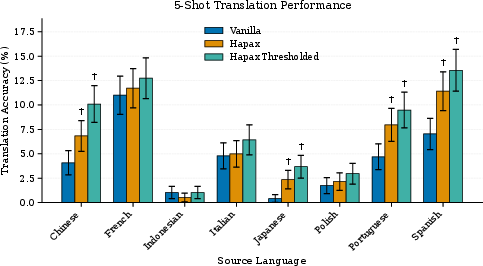

- The paper demonstrates that suppressing inductive copying via Hapax does not hinder and can even improve abstractive in-context learning.

- The methodology omits loss contributions for tokens predicted by induction heads, effectively reducing n-gram repetition and induction head influence.

- Evaluation shows Hapax outperforms baselines on 13 of 21 abstractive tasks while, as expected, lowering performance on extractive tasks that rely on copying.

Detailed Summary of "In-Context Learning Without Copying"

Introduction to Inductive Copying and its Role in ICL

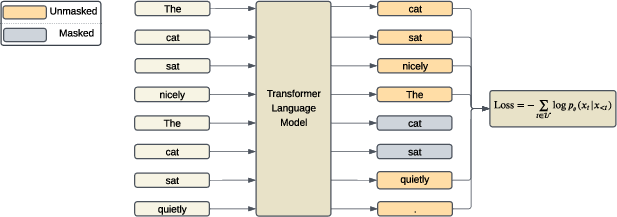

LLMs can perform in-context learning (ICL): they learn a task from examples provided in the context, without weight updates. Inductive copying through induction heads has been posited as foundational for this capability. Induction heads match token patterns in the prior context and reproduce them verbatim, which leads to a drop in training loss. This paper investigates whether transformers can develop ICL capabilities when inductive copying is suppressed. To do so, it presents Hapax, a training regime that omits the loss contribution of tokens predicted by induction heads.

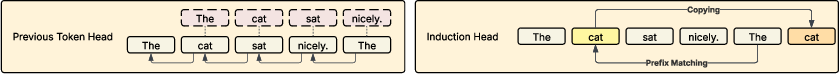

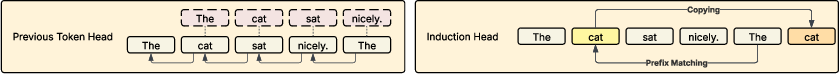

Figure 1: Demonstration of the induction circuit. Previous token heads allow each token to store which token came previously. Induction heads do a match-and-copy operation to reproduce the subsequence that appeared earlier in the context.
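
The match-and-copy behavior described in Figure 1 can be illustrated with a small rule-based sketch. This is not how a transformer implements it (a real induction circuit uses attention heads across two layers); it only mimics the input-output behavior. The function name `induction_predict` is hypothetical and does not come from the paper.

```python
from typing import Optional, Sequence


def induction_predict(tokens: Sequence[int]) -> Optional[int]:
    """Mimic an induction head's match-and-copy behavior (illustrative only).

    Find the most recent earlier occurrence of the current (last) token and
    return the token that followed it. Return None when there is no match.
    """
    if not tokens:
        return None
    current = tokens[-1]
    # Scan backwards over earlier positions, excluding the current one.
    for j in range(len(tokens) - 2, -1, -1):
        if tokens[j] == current:
            return tokens[j + 1]  # copy the token that followed the match
    return None


copied = induction_predict([5, 7, 9, 5])   # 5 was followed by 7 earlier
no_match = induction_predict([1, 2, 3])    # 3 has not appeared before
```

Here `copied` is `7` and `no_match` is `None`: the rule can only reproduce continuations that already appear in the context, which is why it helps on extractive tasks but cannot by itself produce an abstractive answer.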

Hapax Training Methodology

Hapax discourages n-gram repetition by omitting the loss contribution of tokens that induction heads could predict. Because repeated n-grams produce no gradient signal, training suppresses inductive copying while preserving ICL capabilities on tasks that demand abstraction. The paper reports that Hapax models perform better on 13 of 21 abstractive tasks despite being trained on fewer tokens, which suggests that inductive copying is not a prerequisite for abstractive ICL mechanisms.

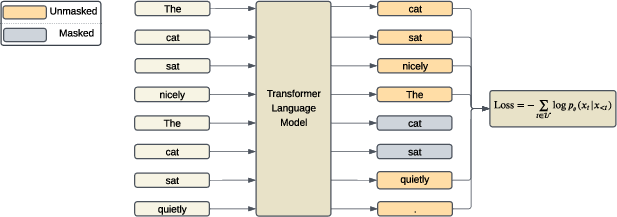

Figure 2: An overview of the Hapax training regime. To suppress inductive copying, we introduce Hapax, where positions predictable by induction heads within a context window do not contribute to the loss (gray positions). This discourages n-gram repetitions in the training distribution, allowing us to control the strength of inductive copying and observe its effect on in-context learning.
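
The loss masking in Figure 2 can be sketched as follows. The criterion used here is an assumption made for illustration: a position counts as "predictable by induction" when the bigram formed by the previous token and the current token has already appeared earlier in the same context window. The paper's exact rule may differ, and the names `induction_predictable_mask` and `masked_mean_loss` are hypothetical.

```python
from typing import List, Sequence


def induction_predictable_mask(tokens: Sequence[int]) -> List[bool]:
    """Mark positions whose token could be produced by match-and-copy.

    Assumed criterion: position t is masked when the bigram
    (tokens[t-1], tokens[t]) already occurred earlier in the window.
    """
    seen_bigrams = set()
    mask = [False] * len(tokens)
    for t in range(1, len(tokens)):
        bigram = (tokens[t - 1], tokens[t])
        if bigram in seen_bigrams:
            mask[t] = True  # a copy of an earlier continuation
        seen_bigrams.add(bigram)
    return mask


def masked_mean_loss(per_token_loss: Sequence[float],
                     mask: Sequence[bool]) -> float:
    """Average the per-token loss over unmasked positions only."""
    kept = [loss for loss, skip in zip(per_token_loss, mask) if not skip]
    return sum(kept) / len(kept) if kept else 0.0


tokens = [1, 2, 3, 1, 2, 3]                  # second half repeats the first
mask = induction_predictable_mask(tokens)    # last two positions are masked
losses = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0]      # made-up per-token losses
hapax_loss = masked_mean_loss(losses, mask)  # masked positions are ignored
```

In this toy example the two repeated positions are excluded, so `hapax_loss` is `1.0` rather than the unmasked mean. In a real training loop the same mask would typically be applied to the per-token cross-entropy before reduction, so masked positions contribute no gradient.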

Mechanistic Reductions in Induction Heads

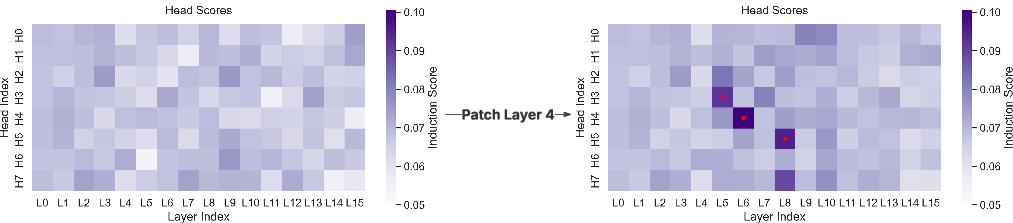

The suppression of inductive copying results in fewer and weaker induction heads within the Hapax model. Notably, the reduction in induction heads does not impair the model's abstractive ICL capabilities, which are preserved or enhanced for a significant subset of tasks. The paper highlights that models without sequential copying incentives can still develop abstract ICL solutions.

The paper evaluates Hapax on both extractive and abstractive tasks.

Analysis of Induction Head Influence

A mechanistic analysis shows that the model assigns lower probability to tokens that would otherwise be copied when induction heads are suppressed or rendered non-functional. Although fewer of its activations correlate with prefix-matching behavior, Hapax maintains strong performance on tasks that do not reduce to simple inductive copying. This suggests that the learned mechanisms are more abstract and do not depend on copying n-grams.

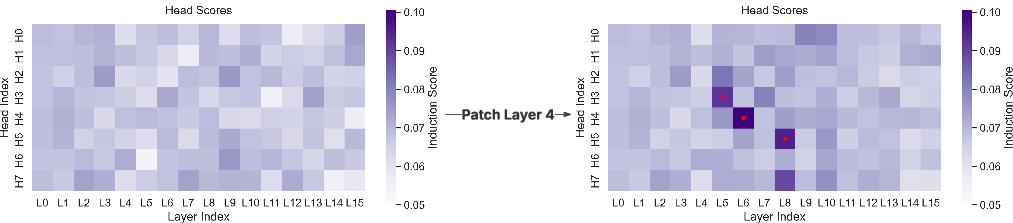

Figure 4: Induction head scores of the randomly initialized vanilla model before and after patching layer L4 of the vanilla model at step 5000, which contains the first previous-token heads. Inductive copying patterns can emerge with specific component activations.
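
One common way to quantify an induction head is a prefix-matching score: on a sequence with repeated tokens, measure how much attention each position places on the token that followed an earlier occurrence of the current token. The sketch below assumes that definition; the summary does not state how the paper computes its induction head scores, so treat this as illustrative. The function name `prefix_matching_score` and the toy attention matrices are hypothetical.

```python
from typing import List, Sequence


def prefix_matching_score(tokens: Sequence[int],
                          attn: Sequence[Sequence[float]]) -> float:
    """Mean attention mass placed on 'the token after an earlier match'.

    attn[q][k] is the attention weight from query position q to key
    position k for a single head (rows are assumed to sum to 1).
    """
    total, count = 0.0, 0
    for t in range(len(tokens)):
        # Key positions that directly follow an earlier copy of tokens[t].
        targets: List[int] = [j + 1 for j in range(t) if tokens[j] == tokens[t]]
        if not targets:
            continue  # no earlier occurrence, position is not scored
        total += sum(attn[t][k] for k in targets)
        count += 1
    return total / count if count else 0.0


tokens = [1, 2, 1, 2]

# A head that attends exactly to the token after the previous match.
ideal_head = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

# A head that spreads attention uniformly over visible positions.
uniform_head = [
    [1.0, 0.0, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0],
    [1 / 3, 1 / 3, 1 / 3, 0.0],
    [0.25, 0.25, 0.25, 0.25],
]

ideal_score = prefix_matching_score(tokens, ideal_head)
uniform_score = prefix_matching_score(tokens, uniform_head)
```

The ideal head scores `1.0`, while the uniform head scores well below that. Under this definition, "fewer and weaker induction heads" would show up as fewer heads with scores near 1.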

Conclusion

The results indicate that inductive copying is not essential for learning abstractive ICL tasks. Hapax shows how a change in training regime alters what a model learns, and it gives researchers a tool for studying how the data distribution shapes ICL mechanisms. The methodology also invites further work on whether suppressing specific behaviors can push models toward capabilities that go beyond repetition. The findings should encourage a reconsideration of copying as a fundamental skill for learning complex tasks, and of whether learning pathways that do not rely on induction can improve LLM fluency and accuracy.

This paper invites future research addressing the balancing act between suppression of inductive mechanisms and the cultivation of richer in-context learning strategies that extend beyond efficient copying.