The paper investigates the roles of induction heads and function vector (FV) heads in the in-context learning (ICL) capabilities of large language models (LLMs). The authors conducted ablation studies and training-dynamics analyses on 12 decoder-only transformer models, ranging from 70M to 7B parameters, across 45 natural language ICL tasks. Their findings suggest that FV heads are the primary drivers of few-shot ICL performance, while induction heads may serve as precursors to FV heads during training.

The authors began by establishing that induction heads and FV heads are distinct mechanisms: within each model, the top-ranked induction heads and the top-ranked FV heads overlap very little. Induction heads generally appear in earlier layers, while FV heads sit in slightly deeper layers, suggesting different computational roles. Although the two sets of heads are distinct, the paper finds that FV heads tend to have relatively high induction scores and vice versa, indicating that the two behaviors are correlated.
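The overlap and score comparisons described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: heads are keyed by a hypothetical `(layer, head)` tuple, the induction score uses one common definition (mean attention from each position in the repeated half of a sequence to the token just after that position's earlier occurrence), and FV scores are simply taken as given, with made-up toy values standing in for real measurements.

```python
# Minimal sketch, not the authors' implementation. Heads are keyed by a
# hypothetical (layer, head) tuple; all scores below are made-up toy values.


def induction_score(attn, half_len):
    """Mean attention from each query in the repeated half of a sequence to the
    token that followed the same token's earlier occurrence.

    attn[q][k] is one head's attention weight from query position q to key
    position k, on a sequence of length 2 * half_len whose second half repeats
    the first half token for token.
    """
    # Query q (second half) matches position q - half_len; the induction target
    # is the next position, q - half_len + 1, which is always <= q (causal).
    weights = [attn[q][q - half_len + 1] for q in range(half_len, 2 * half_len)]
    return sum(weights) / len(weights)


def top_k(scores, k):
    """Set of the k heads with the highest score."""
    return set(sorted(scores, key=scores.get, reverse=True)[:k])


def top_k_overlap(scores_a, scores_b, k):
    """Fraction of the top-k heads under score A that are also top-k under score B."""
    return len(top_k(scores_a, k) & top_k(scores_b, k)) / k


def percentile(scores, head):
    """Percentage of heads whose score is strictly below this head's score."""
    return 100.0 * sum(v < scores[head] for v in scores.values()) / len(scores)


# A perfect induction pattern on a repeated sequence of 4 tokens (length 8):
# every second-half query puts all its attention on the induction target.
half = 4
attn = [[0.0] * (2 * half) for _ in range(2 * half)]
for q in range(2 * half):
    target = q - half + 1 if q >= half else 0
    attn[q][target] = 1.0
print(induction_score(attn, half))  # 1.0

induction = {
    (0, 0): 0.05, (0, 1): 0.90, (0, 2): 0.10, (0, 3): 0.80,
    (1, 0): 0.60, (1, 1): 0.02, (1, 2): 0.55, (1, 3): 0.01,
}
fv = {
    (0, 0): 0.00, (0, 1): 0.05, (0, 2): 0.01, (0, 3): 0.04,
    (1, 0): 0.70, (1, 1): 0.02, (1, 2): 0.65, (1, 3): 0.03,
}
print(top_k_overlap(induction, fv, k=2))  # 0.0: the top-2 sets are disjoint
print(percentile(induction, (1, 0)))      # 62.5: top FV head outranks most heads on induction
```

The toy numbers only mirror the qualitative pattern described above (disjoint top sets, yet the top FV head scoring above most heads on induction); `k` is a free parameter of this sketch.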

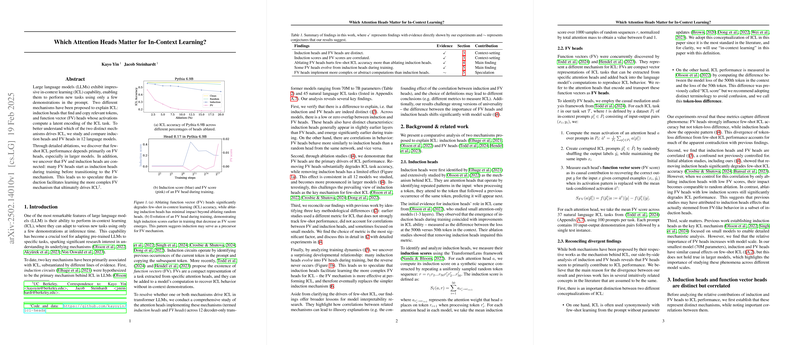

To assess the causal importance of each type of head, the authors conducted ablation studies, measuring the impact on two metrics: few-shot ICL accuracy and token-loss difference (how much lower the loss is on tokens late in a context than on tokens early in it). Ablating FV heads substantially degraded ICL accuracy, whereas ablating induction heads had a limited effect, particularly in larger models.

Because the two scores are correlated, the authors also performed ablations with exclusion: ablating induction heads that have low FV scores, and FV heads that have low induction scores. Ablating induction heads with low FV scores had minimal impact on ICL performance, while ablating FV heads with low induction scores still significantly impaired it. This suggests that FV heads are the key drivers of few-shot ICL. The paper also shows that few-shot ICL accuracy and token-loss difference capture different phenomena: FV heads strongly influence the former, while induction heads have a greater impact on the latter.
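Head selection for ablation with exclusion, and the token-loss difference metric, can be sketched as follows. This is a hedged illustration, not the paper's code: the exclusion rule (skip any head ranked in the top `n_exclude` by the other score), the function names, and the toy data are assumptions. The default positions (the 50th and 500th tokens) are borrowed from the in-context learning score of Olsson et al.; whether the paper uses exactly these positions is an assumption here, and sign conventions for the metric vary. The ablation itself (for example, replacing a head's output with its mean activation) is omitted.

```python
# Hedged sketch: head selection for "ablation with exclusion" plus a
# token-loss-difference metric. Names, thresholds, and data are hypothetical.


def rank(scores):
    """Heads sorted from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


def heads_to_ablate(primary, secondary, k, n_exclude=0):
    """Top-k heads by `primary`, skipping the n_exclude heads ranked highest by `secondary`.

    With n_exclude=0 this is a plain top-k ablation set; with n_exclude > 0 it
    drops heads that score highly on the other metric, so the ablation targets
    one mechanism without the other.
    """
    excluded = set(rank(secondary)[:n_exclude])
    return [h for h in rank(primary) if h not in excluded][:k]


def token_loss_difference(token_losses, early=49, late=499):
    """Loss at an early context position minus loss at a late one (0-based).

    Larger values mean later tokens are predicted better, i.e. the model makes
    more use of its context. Defaults correspond to the 50th and 500th tokens.
    In practice this would be averaged over many sequences.
    """
    return token_losses[early] - token_losses[late]


induction = {(0, 1): 0.90, (1, 0): 0.85, (0, 3): 0.70, (1, 2): 0.40, (0, 0): 0.05}
fv = {(0, 1): 0.02, (1, 0): 0.60, (0, 3): 0.01, (1, 2): 0.55, (0, 0): 0.00}

print(heads_to_ablate(induction, fv, k=2))               # [(0, 1), (1, 0)]
print(heads_to_ablate(induction, fv, k=2, n_exclude=2))  # [(0, 1), (0, 3)]
print(heads_to_ablate(fv, induction, k=1, n_exclude=2))  # [(1, 2)]

# Toy per-token losses that fall linearly as the context grows.
losses = [4.0 - 0.004 * i for i in range(500)]
print(round(token_loss_difference(losses), 3))           # 1.8
```

Excluding by rank rather than by an absolute score threshold keeps the sketch independent of how each score is scaled; a percentile cutoff would be an equally reasonable choice.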

The authors then examined how induction and FV heads evolve during training. Induction heads emerge early in training, while FV heads appear later. Tracking individual heads revealed that many FV heads initially exhibit high induction scores before transitioning to FV behavior, whereas the reverse transition does not occur. This unidirectional transition suggests that induction heads may facilitate the learning of the more complex FV heads.
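One way to operationalize the head-level transition analysis is to track each head's two scores across training checkpoints and ask which behavior appears first. The sketch below is an assumption-laden illustration rather than the paper's procedure: the thresholds, the "score ends below its peak" criterion, and the toy trajectories are all hypothetical.

```python
# Hypothetical sketch: classify a head's trajectory across training checkpoints.
# Thresholds and trajectories are illustrative, not taken from the paper.


def first_crossing(scores, threshold):
    """Index of the first checkpoint at which the score reaches threshold, else None."""
    for step, value in enumerate(scores):
        if value >= threshold:
            return step
    return None


def transitioned(from_scores, to_scores, from_thresh, to_thresh):
    """True if a head first shows the `from` behavior, later gains the `to`
    behavior, and ends with its `from` score below its peak."""
    start = first_crossing(from_scores, from_thresh)
    end = first_crossing(to_scores, to_thresh)
    if start is None or end is None or end <= start:
        return False
    return from_scores[-1] < max(from_scores)


# Scores for one head at five checkpoints (early -> late in training).
induction = [0.0, 0.6, 0.7, 0.4, 0.3]
fv = [0.0, 0.0, 0.1, 0.5, 0.6]

print(transitioned(induction, fv, 0.5, 0.4))  # True: induction first, then FV
print(transitioned(fv, induction, 0.4, 0.5))  # False: no FV -> induction shift
```

Counting how many heads satisfy `transitioned(induction, fv, ...)` versus `transitioned(fv, induction, ...)` across a model gives a simple check of whether transitions run in only one direction.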

The authors propose two conjectures to explain their findings. The first (C1) posits that induction heads are an early version of FV heads, serving as a stepping stone for models to develop the FV mechanism. The second (C2) suggests that FV heads combine induction with another mechanism, so that heads transitioning from induction to FV behavior actually implement both. The authors argue that C1 is better supported by their ablation studies, which show that removing monosemantic FV heads (FV heads with low induction scores) significantly hurts ICL performance.

Key findings include:

- Low or zero overlap between induction and FV heads, indicating distinct mechanisms.

- FV heads are typically located in slightly deeper layers than induction heads.

- Ablating FV heads significantly impairs few-shot ICL accuracy, while ablating induction heads has minimal impact, especially in larger models.

- Many FV heads evolve from induction heads during training, but not vice versa.

- FV heads are crucial for few-shot ICL, while induction heads have a greater impact on token-loss difference.

The paper challenges the prevailing view of induction heads as the key mechanism for few-shot ICL, highlighting instead the importance of FV heads. The authors emphasize that correlations between mechanisms can lead to illusory explanations, and that different definitions of a model capability (here, few-shot ICL accuracy versus token-loss difference) can lead to substantially different conclusions. The results also challenge strong versions of universality (the idea that the same mechanisms play the same roles across models), since the relative importance of FV heads and induction heads shifts significantly with model scale.