ETHOS: A Robotic Encountered-Type Haptic Display for Social Interaction in Virtual Reality (2511.05379v1)

Abstract: We present ETHOS (Encountered-Type Haptics for On-demand Social Interaction), a dynamic encountered-type haptic display (ETHD) that enables natural physical contact in virtual reality (VR) during social interactions such as handovers, fist bumps, and high-fives. The system integrates a torque-controlled robotic manipulator with interchangeable passive props (silicone hand replicas and a baton), marker-based physical-virtual registration via a ChArUco board, and a safety monitor that gates motion based on the user's head and hand pose. We introduce two control strategies: (i) a static mode that presents a stationary prop aligned with its virtual counterpart, consistent with prior ETHD baselines, and (ii) a dynamic mode that continuously updates prop position by exponentially blending an initial mid-point trajectory with real-time hand tracking, generating a unique contact point for each interaction. Bench tests show static colocation accuracy of 5.09 +/- 0.94 mm, while user interactions achieved temporal alignment with an average contact latency of 28.53 +/- 31.21 ms across all interaction and control conditions. These results demonstrate the feasibility of recreating socially meaningful haptics in VR. By incorporating essential safety and control mechanisms, ETHOS establishes a practical foundation for high-fidelity, dynamic interpersonal interactions in virtual environments.

Explain it Like I'm 14

What is this paper about?

This paper introduces ETHOS, a system that lets you actually feel quick, natural touches in virtual reality (VR)—like a high five, a fist bump, or passing an object—by moving real objects into the right place at the right time. It uses a robot arm holding soft, hand-shaped props so that when you touch a virtual hand, you also touch a matching real one.

What were the main goals?

The researchers wanted to:

- Make touch in VR feel real during social moments (like greeting someone).

- Keep users free of wearables (no gloves or suits) while still feeling physical contact.

- Line up the virtual world with the real world very accurately, so your hand meets the prop exactly where you expect.

- Time the touch precisely, so the contact happens at the right moment.

- Do all this safely, since people in VR can’t always see the robot.

How did they build it?

Think of ETHOS as a helpful stagehand in a theater:

- A smart robot arm (the stagehand) moves a physical prop (like a soft silicone hand or a baton) to where a touch should happen.

- Your VR headset shows the scene with a virtual character, and behind the scenes, the robot puts the matching real object in the right place.

The robot and the props

- The team used a 7-joint robot arm that can move smoothly and safely.

- They attached different props to its tip:

  - A soft, realistic open hand (for high fives).

  - A soft, realistic closed fist (for fist bumps).

  - A baton (for handovers).

- The props feel skin-like because they’re made from silicone, and the hand props are reinforced inside so they feel sturdy, like real hands.

Lining up the real and virtual worlds

- The VR headset (Meta Quest 3) looks at a special printed board (like a fancy checkerboard called a ChArUco board).

- By tracking that board, the system knows exactly where the robot and props are in the real room and matches them to the virtual scene. This is called “registration” and “colocation,” meaning virtual and real objects appear in the same place.
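The registration idea above can be sketched as a chain of rigid transforms. This is a minimal illustration, not the authors' pipeline: the frame names, the example poses, and the helper functions (`make_transform`, `invert_transform`, `rot_z`) are all assumptions made for the sketch. It assumes the headset reports the board pose in the virtual world frame and that the board pose in the robot base frame is known from setup.

```python
import numpy as np

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def invert_transform(T):
    """Invert a rigid transform using the transpose of its rotation."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def rot_z(angle_rad):
    """Rotation about the z axis by the given angle."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical poses (metres): the board as seen in the virtual world frame,
# and the same board as measured in the robot base frame.
T_world_board = make_transform(rot_z(np.pi / 2), [1.0, 0.0, 0.5])
T_robot_board = make_transform(np.eye(3), [0.4, 0.0, 0.0])

# Robot base expressed in the virtual world: world<-board, then board<-robot.
T_world_robot = T_world_board @ invert_transform(T_robot_board)

# A prop located at the board origin in the robot frame (homogeneous coordinates)
# should land exactly on the board position in the virtual world.
prop_in_robot = np.array([0.4, 0.0, 0.0, 1.0])
prop_in_world = T_world_robot @ prop_in_robot
print(prop_in_world[:3])
```

Once `T_world_robot` is stored, any point the robot reports in its own frame can be mapped into the virtual scene with a single matrix multiplication.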

Staying safe

- The headset tracks where your head and hands are.

- If your head gets too close to the robot’s area, the robot stops instantly.

- If you step out of the safe zone, the headset shows passthrough video of the real world so you can see what’s happening.

- An operator can also press an emergency stop if needed.
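The safety rules listed above can be expressed as a small decision function. The sketch below is illustrative only: the keep-out radius, the safe-zone size, the coordinate convention (z pointing up, metres), and every name in it are assumptions, not values from the paper.

```python
import math
from dataclasses import dataclass

@dataclass
class SafetyConfig:
    # All values are hypothetical placeholders, in metres, with z pointing up.
    robot_center: tuple = (0.0, 0.0, 1.0)
    head_keepout_radius_m: float = 0.6
    safe_zone_center_xy: tuple = (0.0, 1.2)
    safe_zone_radius_m: float = 0.4

def evaluate_safety(head_pos, config, estop_pressed=False):
    """Return (robot_may_move, show_passthrough) for one tracking sample."""
    # Rule 1: halt the robot if the head is inside the keep-out sphere.
    head_too_close = math.dist(head_pos, config.robot_center) < config.head_keepout_radius_m
    # Rule 2: show passthrough video if the user leaves the marked floor zone.
    head_xy = (head_pos[0], head_pos[1])
    in_safe_zone = math.dist(head_xy, config.safe_zone_center_xy) <= config.safe_zone_radius_m
    # Rule 3: the operator's emergency stop always overrides motion.
    robot_may_move = not head_too_close and not estop_pressed
    show_passthrough = not in_safe_zone
    return robot_may_move, show_passthrough

config = SafetyConfig()
print(evaluate_safety((0.0, 1.2, 1.7), config))  # standing in the safe zone
print(evaluate_safety((0.0, 0.3, 1.2), config))  # leaning toward the robot
```

Keeping the check as a pure function of the latest pose makes it easy to run on every tracking update and to test separately from the robot.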

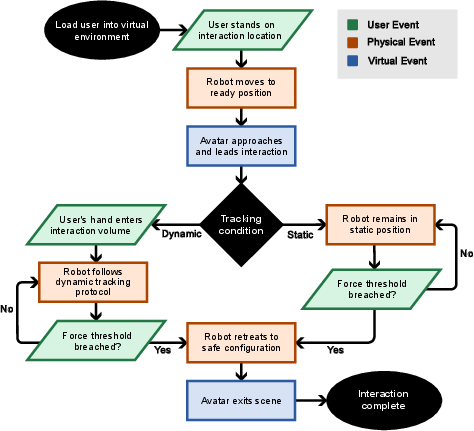

Two ways the robot behaves

- Static mode: The prop stays still at a set spot that matches the virtual hand. You move your hand to it (like touching a doorknob).

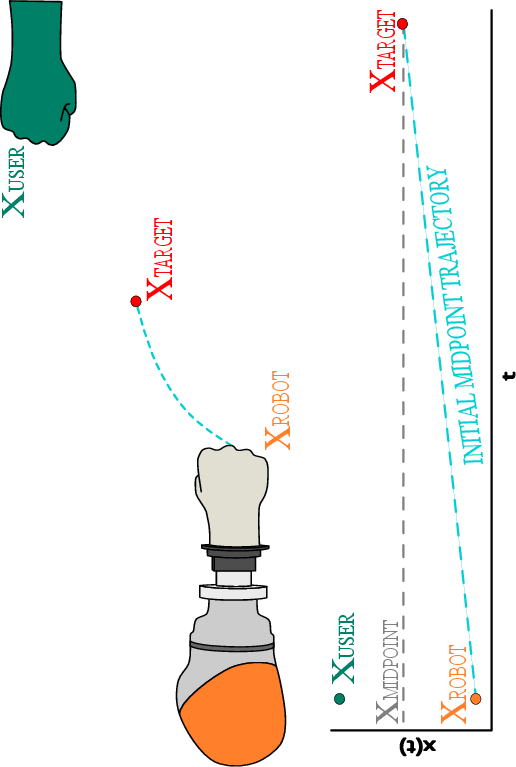

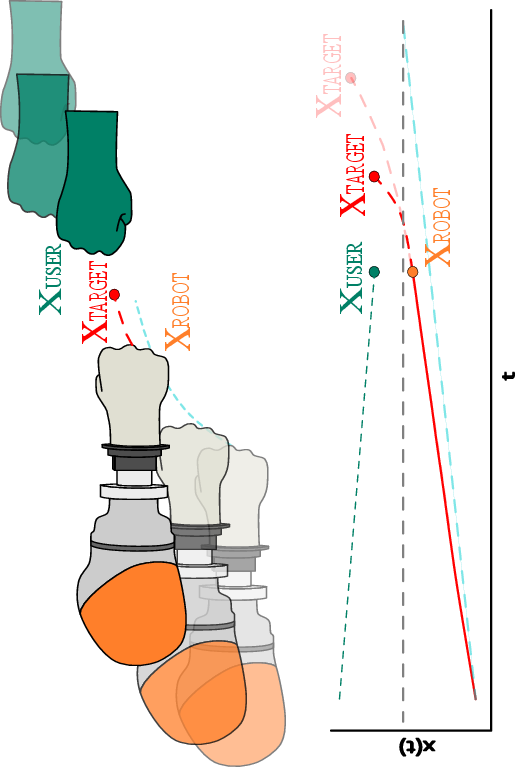

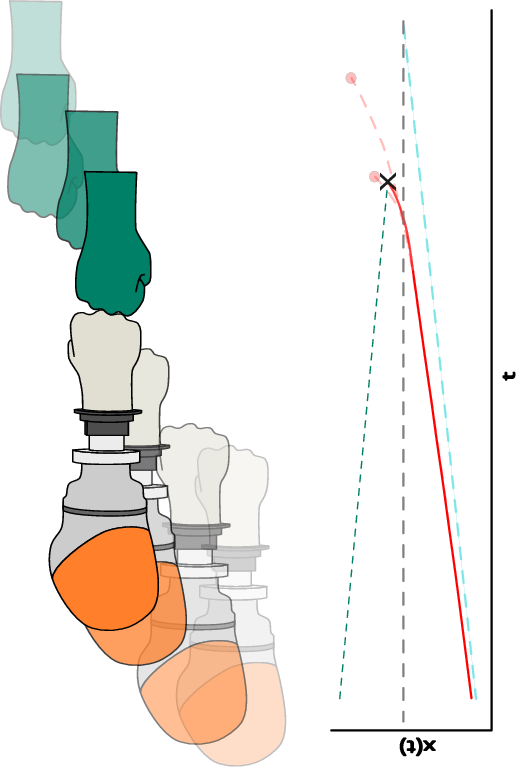

- Dynamic mode: The prop gently moves toward a good meeting point between you and the robot, then gradually locks onto your hand’s position. Imagine two people meeting halfway for a high five—this makes contact feel smoother and more personal.
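One way to picture the dynamic mode is as a weighted mix of two targets: a planned meeting point and the tracked hand position. The sketch below assumes a normalized exponential weight that stays small early and rises toward the end of the approach. The exact blending function and its parameters are not given in this summary, so `blend_weight`, `sharpness`, and `progress` are hypothetical names chosen for illustration.

```python
import numpy as np

def blend_weight(progress, sharpness=4.0):
    """Exponential weight in [0, 1]: small early in the approach, 1 at the end.

    `progress` is the fraction of the approach completed (0 to 1).
    `sharpness` is an assumed tuning parameter, not a value from the paper.
    """
    s = float(np.clip(progress, 0.0, 1.0))
    return float(np.expm1(sharpness * s) / np.expm1(sharpness))

def dynamic_target(midpoint_target, hand_position, progress, sharpness=4.0):
    """Blend the planned mid-point target with the live hand position."""
    w = blend_weight(progress, sharpness)
    midpoint_target = np.asarray(midpoint_target, dtype=float)
    hand_position = np.asarray(hand_position, dtype=float)
    return (1.0 - w) * midpoint_target + w * hand_position

midpoint = [0.50, 0.00, 1.20]   # hypothetical planned meeting point (m)
hand = [0.60, 0.05, 1.25]       # hypothetical tracked hand position (m)
for p in (0.0, 0.5, 1.0):
    print(p, blend_weight(p), dynamic_target(midpoint, hand, p))
```

With `sharpness=4.0` the weight is 0 at the start, about 0.12 halfway, and 1 at the end, so the prop follows the planned path early and only locks onto the hand late in the approach.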

The VR scene

- The interactions happen in a cozy forest-and-cabin setting with a friendly avatar who waves, looks at you, and raises a hand to signal a high five, fist bump, or handover. Natural motion and sound help make it feel real.

What did they find?

Here are the main results and why they matter:

- Very accurate lining up: On average, the physical and virtual objects matched within about 5 mm. That’s thinner than a pencil’s width—good enough that your hand doesn’t “miss” the prop.

- Very fast timing: The difference between when the virtual touch and the real touch happened was about 29 ms on average (that’s less than 1/30th of a second). This is faster than what most people can notice, so the touch feels timely and real.

- Both static and dynamic modes worked well across different interactions (handover, fist bump, high five).
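The timing result can be read as a simple statistic over pairs of timestamps. The sketch below uses made-up timestamps, not the study's data, and assumes a sign convention in which a positive value means the physical touch came after the virtual one. It also checks the arithmetic behind the "less than 1/30th of a second" comparison.

```python
import statistics

def contact_latencies_ms(virtual_contact_s, physical_contact_s):
    """Per-trial latency in milliseconds (physical minus virtual contact time)."""
    return [(p - v) * 1000.0 for v, p in zip(virtual_contact_s, physical_contact_s)]

# Made-up example timestamps in seconds, one pair per trial.
virtual = [1.000, 2.000, 3.000, 4.000]
physical = [1.020, 2.045, 2.995, 4.050]

latencies = contact_latencies_ms(virtual, physical)
mean_ms = statistics.mean(latencies)
std_ms = statistics.stdev(latencies)  # sample standard deviation
print(f"latency: {mean_ms:.2f} +/- {std_ms:.2f} ms")

# One frame at 30 frames per second lasts 1000 / 30 ms, about 33.3 ms,
# so the reported 28.53 ms average is shorter than that.
frame_ms = 1000.0 / 30
print(28.53 < frame_ms)
```

Reporting the spread alongside the mean matters here because individual trials can differ a lot from the average.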

Why this matters: Touch that is both well-aligned and well-timed makes VR feel more believable, especially in social moments where precise contact matters.

Why is this important?

Most VR today focuses on what you see and hear. But many social moments rely on brief, meaningful touch. ETHOS shows a practical way to bring those touches into VR without strapping gadgets onto your body. It makes interactions feel more natural and can boost your sense of “being there.”

What could this lead to?

This work is a starting point for:

- Better training (like practicing handovers in healthcare or teamwork drills).

- Rehabilitation that uses safe, realistic contact.

- More engaging social VR platforms and VR theater.

- Richer multi-user experiences (like group games or performances).

- Smarter props and interaction strategies tailored to different kinds of touch.

The team plans to run user studies to measure how real and enjoyable these interactions feel, and to improve the props, control methods, and robot “softness” so it behaves even more like a person’s arm.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a focused list of what remains missing, uncertain, or unexplored in the paper, framed as concrete, actionable items for future research:

- Conduct controlled user studies to quantify experiential outcomes (e.g., presence, naturalness, trust, social connectedness), and to compare static vs. dynamic control for each interaction type (handover, fist bump, high five).

- Decompose and quantify end-to-end latency into subsystem contributions (hand tracking, registration, networking/serialization, Unity rendering, robot control), including jitter and 95th/99th percentile bounds under fast and occluded hand motions.

- Assess robustness of the ChArUco-based registration over time (drift), under varying lighting/occlusion, and across sessions without motion-capture-assisted calibration; develop online self-check and re-registration triggers when colocation error exceeds a threshold.

- Evaluate dynamic colocation accuracy during movement (not just static alignment), including spatial error at the actual contact instant and its dependence on approach speed, hand pose, and occlusions.

- Optimize the dynamic control strategy per interaction type: identify task-specific blending functions, time constants, and trajectory planning parameters; explore intent estimation (e.g., approach velocity, hand pose classification) to adapt target selection in real time.

- Incorporate and assess impedance/admittance control to modulate manipulator compliance across approach, pre-contact, contact, and retreat phases; quantify effects on comfort, safety, and realism.

- Replace heuristic force thresholds (7.5 N, 15 N) with empirically derived, individualized force–torque profiles for each interaction; measure contact force/torque time histories to establish safe and natural ranges and to inform contact detection logic.

- Extend safety monitoring beyond head-only gating: evaluate full-body pose estimation (torso/shoulders/arms), tracking dropout handling, worst-case occlusion scenarios, and reaction timing; perform formal hazard analysis and quantitative safety validation (near-miss rates, false positives/negatives).

- Reduce reliance on a human operator for emergency intervention by implementing verified, automatic fail-safes (hard limits, speed caps, workspace partitioning, collision prediction) and certifiable safety processes.

- Characterize prop similarity in detail (compliance/stiffness profiles, friction, texture, thermal properties, geometry fidelity) and run psychophysical tests to establish perceptual thresholds for realism for each social gesture.

- Evaluate the effect of manipulator and prop compliance on perceived naturalness during high-impact interactions (fist bump, high five), including rebound, damping, and energy absorption behavior.

- Investigate hand-tracking reliability with Meta Quest 3 during fast, oblique, and occluded motions; compare against controller-based tracking or hybrid sensing (e.g., wrist IMU, local depth) and quantify failure modes’ impact on contact timing and positioning.

- Examine the impact of requiring the user to stand in a marked “safe zone” and of automatic passthrough activation on immersion, presence, and task performance; explore strategies to maintain safety without breaking immersion.

- Validate the baton release mechanism (electromagnet) for timing accuracy, slip, and failure rates during handovers; measure release synchronization error relative to virtual cues and user expectation.

- Investigate scalability and generalizability across hardware: replicate ETHOS on lower-cost arms and alternative HMDs; quantify performance differences and identify minimum system specifications to achieve targeted colocation/latency.

- Study multi-user and multi-agent scenarios (two human users, human–robot–avatar triads) and richer social gestures (handshakes, hugs, taps with trajectory variability), including coordination, timing, and safety implications.

- Analyze avatar behavior fidelity: test different gaze strategies, arm kinematics per gesture (not just handover-based), micro-timing cues, and reactive behaviors to user hesitations or misalignments; quantify their effect on user anticipation and interaction success.

- Implement and evaluate runtime strategies for tracking dropout and desynchronization (e.g., graceful degradation to static mode, predictive target holding, user-facing prompts), and measure recovery time and user acceptance.

- Report network reliability metrics (packet loss rates under UDP, out-of-order arrivals, buffering strategies) and their effect on latency/jitter; evaluate alternative transport layers or time synchronization mechanisms.

- Assess long-term durability and hygiene considerations for silicone hand props under repeated contact (wear, tear, cleaning protocols), and their effect on mechanical properties and perceived realism over time.

- Evaluate acoustic masking effectiveness (forest soundscape) for suppressing robot noise without degrading presence; quantify audio–haptic congruence effects on perceived realism.

- Address data security and privacy for cross-device communications (e.g., encryption of pose/biometric data, secure logging), especially for multi-user or clinical deployments.

- Provide open-source implementation details (software, calibration procedures, prop fabrication specs) and standardized benchmarks to enable reproducible evaluation by other labs.
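The list above mentions heuristic force thresholds of 7.5 N and 15 N used for contact detection. A minimal sketch of threshold-based detection on a force trace follows. This summary does not say which threshold belongs to which interaction or how the signal is filtered, so the debounce window (`min_consecutive`) and the example trace are assumptions.

```python
def detect_contact(force_magnitudes_n, threshold_n, min_consecutive=3):
    """Return the index where force first stays at or above the threshold.

    The force must hold for `min_consecutive` samples in a row (an assumed
    debounce step to ignore single-sample spikes). Returns None if no
    contact is found.
    """
    run = 0
    for i, force in enumerate(force_magnitudes_n):
        run = run + 1 if force >= threshold_n else 0
        if run >= min_consecutive:
            return i - min_consecutive + 1
    return None

# Made-up force magnitudes in newtons, one per sensor sample.
trace = [0.5, 1.0, 8.0, 9.0, 10.0, 16.0, 17.0, 18.0, 3.0]

print(detect_contact(trace, threshold_n=7.5))   # first sustained crossing of 7.5 N
print(detect_contact(trace, threshold_n=15.0))  # first sustained crossing of 15 N
```

Replacing the fixed thresholds with measured force profiles, as the list suggests, would amount to swapping `threshold_n` for a per-interaction or per-user value.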

Practical Applications

Overview

This paper introduces ETHOS, a dynamic encountered-type haptic display that uses a torque-controlled robot and interchangeable passive props to deliver realistic, precisely timed social touch in VR (e.g., handovers, fist bumps, high-fives). It demonstrates sub-centimeter physical–virtual colocation and sub-perceptual average contact latency, alongside a pragmatic safety and registration pipeline. Below are practical applications derived from these findings, methods, and innovations, grouped by near-term deployability versus longer-term development needs.

Immediate Applications

These can be piloted or deployed now in controlled environments (labs, clinics, training centers, museums, VR arcades) using available hardware (e.g., KUKA iiwa or equivalent, Meta Quest 3), the described ROS 2–Unity stack, and the safety/registration workflow.

- Healthcare: Upper-limb rehabilitation modules that use familiar, socially meaningful gestures

- Use cases: Guided high-fives for motivation and range-of-motion; graded object handovers to practice grip strength and coordination; fist bumps to train timing and impact modulation.

- Tools/products/workflows: “ETHOS Rehab Kit” with preset props, Unity scenes, and therapist dashboards; standardized force-threshold presets per task; session logs of forces/latency.

- Dependencies/assumptions: Clinical supervision; safe-zone setup; reliable hand tracking from the HMD; patient screening for VR tolerance.

- Education and training: Skill transfer scenarios requiring precise handovers and short-duration contact

- Sectors: Manufacturing, logistics, healthcare education.

- Use cases: Pass-the-tool training in assembly; specimen handover practice in labs; emergency responder baton/pass drills.

- Tools/workflows: Unity scenario templates (handover timing, avatar cues); ROS 2 behavior scripts; prop libraries (tools, containers).

- Dependencies: Floor space for robot workspace; instructor oversight; company acceptance of VR-based modules.

- Entertainment and location-based VR: Immersive social-contact setpieces

- Sectors: VR arcades, experiential museums, VR theatre.

- Use cases: Avatar greeting moments (high-five/fist bump) to boost presence; interactive museum exhibits with safe handovers; staged VR theatre audience interactions.

- Products: “ETHOS Encounter Station” with integrated safety gating, passthrough triggers, and prebuilt scenes.

- Dependencies: Staff E-stop oversight; consistent environmental audio to mask robot sounds; queue and throughput planning.

- Human–Computer Interaction (HCI) and haptics research: Rapid prototyping for social touch studies

- Use cases: Controlled experiments on presence, timing sensitivity, and social cues; benchmarking latency perception under dynamic tracking; evaluating avatar cueing strategies.

- Tools: ROS 2/Unity integration templates; ChArUco registration pipeline; metrics capture for force/latency; experiment control scripts.

- Dependencies: IRB/ethics approval; motion-capture calibration if higher-precision ground truth is needed.

- Social VR onboarding and etiquette training

- Sector: Software/social platforms.

- Use cases: Teach first-time users socially legible gestures (high-five/fist bump) to reduce awkwardness; practice interaction readiness cues.

- Workflows: Short orientation scenes with avatar cues and static/dynamic modes; analytics on successful gesture completion.

- Dependencies: Physical robot presence; operator oversight; platform content integration.

- Safety gating middleware for VR–robot co-location

- Sector: Robotics/software.

- Use cases: Add headset-derived head/hand pose gating to any robot near a VR user; automatic passthrough activation when users leave safe zones; immediate robot halt if head enters workspace.

- Products: “ETHOS Safety Layer” SDK (Unity plugin + ROS 2 node).

- Dependencies: Access to HMD pose streams; robot API for motion gating; site-specific safe-zone definition.

- Lightweight physical–virtual registration without external mocap

- Sector: Software/industrial VR.

- Use cases: Quick alignment of VR scenes to physical workcells using headset cameras and a ChArUco board; reduce setup complexity for demos, training stations, and lab studies.

- Tools: Adapted ChArUco pipeline; averaging and outlier rejection scripts; stored transforms for reuse.

- Dependencies: Adequate board visibility; consistent lighting; periodic recalibration checks.

- Dynamic contact trajectory blending for natural interaction timing

- Sector: Robotics/haptics.

- Use cases: Replace fixed contact points with smooth, hand-tracking-informed convergence to user; reduce jerk and early motion surprises.

- Tools: Open ROS 2 node implementing exponential blending; configurable interaction volumes; per-task weighting profiles.

- Dependencies: Stable HMD hand tracking at ~90 Hz; robot capable of responsive torque control; collision-safe path planning.

- Museum and science center STEM outreach on human–robot social touch

- Use cases: Showcasing touch integration in VR; visitor fist-bump with avatar/prop; explain sensors, safety, and alignment.

- Products: Scripted demo modes, visual overlays of colocation error and latency.

- Dependencies: Staff supervision; barriers to maintain safe visitor positions; robust props for high throughput.

- User studies on presence and enjoyment with social haptics

- Sector: Academia/UX research.

- Use cases: Measure presence (e.g., PQ/ITQ), enjoyment, trust, and connection to avatars under static vs. dynamic modes.

- Workflows: Pre-registered study templates; parameter sweeps for force thresholds; mixed-methods surveys.

- Dependencies: Balanced participant samples; standardized interaction scripts; data governance.
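The registration item in the list above mentions averaging and outlier rejection scripts. A minimal sketch of that idea for the translation part of repeated board detections follows. The rejection rule (distance from the median, scaled by the median absolute deviation) and the name `robust_mean_translation` are assumptions, not the paper's method, and rotations would need separate handling.

```python
import numpy as np

def robust_mean_translation(samples, max_mad_multiple=3.0):
    """Average repeated position estimates after dropping outliers.

    A sample is kept if its distance to the per-axis median is within
    `max_mad_multiple` times the median of those distances.
    Returns (mean_position, keep_mask).
    """
    samples = np.asarray(samples, dtype=float)
    median = np.median(samples, axis=0)
    dist = np.linalg.norm(samples - median, axis=1)
    scale = max(float(np.median(dist)), 1e-9)  # avoid a zero-width threshold
    keep = dist <= max_mad_multiple * scale
    return samples[keep].mean(axis=0), keep

# Made-up board position estimates in metres; the last one is a bad detection.
detections = [
    [1.000, 0.000, 0.5],
    [1.002, 0.000, 0.5],
    [0.999, 0.000, 0.5],
    [1.001, 0.001, 0.5],
    [1.300, 0.200, 0.5],
]
mean_position, keep = robust_mean_translation(detections)
print(mean_position, keep)
```

The averaged result can then be stored and reused across sessions, with a periodic check that new detections still fall close to it.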

Long-Term Applications

These require further research, scaling, validation, cost reduction, standardization, or new integrations (e.g., affordable robots, multi-user support, bilateral telepresence).

- Remote social touch and telepresence

- Sector: Communications/telepresence.

- Use cases: Remote handshake/high-five mediated by paired ETHOS stations; live co-embodiment in social VR events.

- Products: Bilateral control with synchronized avatars; latency compensation; consent and privacy controls.

- Dependencies: Network QoS; cross-site safety standards; multi-modal synchronization (audio/video/haptics).

- Consumer-grade encountered haptics for home VR

- Sector: Consumer electronics/gaming.

- Use cases: Affordable desktop/compact robots or mobile mechanisms that present physical props for social gestures and gameplay.

- Products: Modular prop packs; game SDKs; self-calibrating registration without fiducials; quiet actuators.

- Dependencies: Cost and reliability improvements; safety certification; simplified setup flows.

- Multi-user social encounters and crowd-safe installations

- Sector: Entertainment/venues.

- Use cases: Group greetings with multiple avatars/props; staggered safe zones and dynamic task allocation.

- Tools: Multi-robot orchestration; predictive scheduling; dynamic risk assessment per user pose.

- Dependencies: Spatial analytics; venue-scale safety protocols; throughput optimization.

- Standardized safety frameworks and policy guidance

- Sector: Policy/regulation/standards bodies.

- Use cases: Norms for VR–robot co-location (safe zones, E-stop, passthrough triggers, latency thresholds); certification pathways for public-facing deployments.

- Products: Best-practice guidelines and test suites; incident reporting procedures; compliance badges.

- Dependencies: Cross-industry working groups; insurer and regulator buy-in; empirical safety data at scale.

- Workforce training at industrial scale

- Sector: Manufacturing/logistics/field services.

- Use cases: High-volume training on handover timing, ergonomics, and social cues in collaborative tasks; objective force/latency metrics for skill assessment.

- Products: Learning management integrations; scenario libraries mapped to task competencies; digital twins with physical contact.

- Dependencies: Integration with HR/LMS; cost–benefit validation; maintenance and operator training.

- Clinical protocols for social-touch therapy

- Sector: Mental health/occupational therapy.

- Use cases: Social skills training (e.g., autism spectrum interventions) using graded, predictable touch with avatars; exposure therapy for touch-related anxieties.

- Products: Clinician-configurable gesture libraries, difficulty curves (distance, timing, force).

- Dependencies: Clinical trials; ethical safeguards; personalization pipelines.

- Adaptive prop design and materials science for rich gestures

- Sector: Robotics/soft materials.

- Use cases: Props with tunable compliance, temperature, texture, and shape-shifting for varied social touch (e.g., gentle pat, handshake).

- Products: Electromagnetically switchable grip modules; soft actuators; embedded sensing for torque profiles.

- Dependencies: Material characterization; durability and hygiene; cost-effective fabrication.

- Human–robot collaboration training with interpersonal cues

- Sector: Robotics/automation.

- Use cases: Teaching workers and robots mutual readiness cues; safe handovers in cobot lines; micro-timing coordination.

- Workflows: Avatar-guided practice, force-threshold adaptation per trainee, progression metrics.

- Dependencies: Integration with cobot controllers; domain-specific task modeling; organizational acceptance.

- Education: Tactile STEM labs

- Sector: K–12 and higher education.

- Use cases: Physics/biomechanics of impact and force; perceptual thresholds; human factors experiments with real contact.

- Products: Curriculum modules with ETHOS scenes; data export for lab reports; teacher-friendly setup tools.

- Dependencies: Budget for hardware; safety training for instructors; simplified registration tooling.

- Social VR platform integration and SDKs

- Sector: Software/platforms.

- Use cases: APIs for encountered-type haptics events (handshake, handover); avatar behavior models that respect physical props.

- Products: Platform SDKs that expose pose and safety states; developer marketplaces for prop-capable scenes.

- Dependencies: Platform policy updates; device ecosystem support; developer education.

- Latency-aware control and perception models

- Sector: Haptics/controls research.

- Use cases: Adaptive controllers that compensate for hand-tracking and network delays; predictive models that keep contact under perception thresholds.

- Products: Open-source controllers with real-time LPT estimation; user-specific adaptation.

- Dependencies: Larger datasets; standardized benchmarks; cross-device profiling.

- Ethical frameworks for physical contact in mediated environments

- Sector: Policy/ethics.

- Use cases: Consent models for social touch; avatar intent signaling standards; safeguards for vulnerable users.

- Products: UX patterns and disclosures; opt-in mechanisms; audit trails for contact events.

- Dependencies: Interdisciplinary input (ethics, law, HCI); stakeholder consultation; harmonization across platforms and hardware.

Cross-cutting assumptions and dependencies

- Hardware availability and cost: Current implementations rely on torque-controlled manipulators (e.g., KUKA iiwa), F/T sensing, and a modern HMD (e.g., Meta Quest 3). Consumer-grade adoption will require cost reductions or alternative mechanisms.

- Tracking and registration fidelity: Sustained sub-centimeter alignment depends on consistent ChArUco visibility and lighting; hand-tracking performance must remain robust (~90 Hz) for dynamic trajectories.

- Safety governance: Safe zones, pose-based motion gating, passthrough triggers, and human E-stop oversight are prerequisites, especially in public or clinical deployments.

- Environment constraints: Physical space for the robot’s workspace, controlled acoustics to mask robot sounds, and clear user pathways are necessary for perceived naturalness.

- Content design: Avatar cueing (gaze, arm motion), interaction sequencing, and force thresholds require tuning per task and population.

- Data governance: Logging of forces, latencies, and user states must respect privacy and clinical/organizational policies.

These applications illustrate how ETHOS’s validated colocation, latency, dynamic control, and safety design can be leveraged immediately in specialized settings and scaled over time into broader markets and policy frameworks.

Glossary

- 7-DoF: Having seven independent joints (degrees of freedom), which lets a robot arm reach complex positions and orientations. "a torque-controlled 7-DoF manipulator with interchangeable passive props"

- ChArUco board: A hybrid calibration target combining chessboard corners and ArUco markers for robust, accurate pose estimation. "marker-based physical-virtual registration via a ChArUco board"

- Colocation: Precise spatial alignment of physical props with their virtual counterparts. "sub-centimetre colocation accuracy (5.09 +/- 0.94 mm)"

- Cutaneous receptors: Skin-based sensory receptors responsible for touch, pressure, and vibration. "signals from cutaneous receptors in the skin and kinesthetic receptors in muscles, tendons, and joints"

- Electrotactile arrays: Arrays of electrodes that deliver electrical stimulation to the skin to evoke touch sensations. "vibrotactile motors, electrotactile arrays"

- Encountered-Type Haptic Display (ETHD): A system that positions physical props at interaction points so users encounter them naturally during VR tasks. "a dynamic encountered-type haptic display (ETHD) that enables natural physical contact in virtual reality (VR)"

- End effector: The tool or interface mounted at the end of a robot arm that interacts with the environment. "interactable objects mounted to the end effector of a robotic manipulator"

- End-effector transform: The calibrated spatial transform describing the pose relationship of the robot’s terminal link for accurate positioning. "subtracting the KUKA’s known end-effector transform."

- End-to-end latency: The total delay from a physical event to its corresponding virtual event (or vice versa) as experienced by the user. "understanding end-to-end latency is of highest priority"

- Exponential weighting: A blending method where the influence of one signal increases according to an exponential function. "This formulation applies an exponential weighting, prioritizing smooth early motion"

- Fast Robot Interface (FRI): KUKA’s high-frequency interface for real-time torque/position control and feedback. "using KUKA’s proprietary Fast Robot Interface (FRI)"

- Fiducial marker: A visually distinctive marker used for reliable camera-based detection and pose estimation. "a lightweight fiducial marker approach, using a ChArUco board"

- Force–torque sensor: A transducer that measures multi-axis forces and torques at the robot’s end effector. "A six-axis Mini40 force–torque sensor (ATI Industrial Automation, USA) was integrated"

- Grounded implementations: Haptic/robotic systems that are fixed to an external base for stability and precise interaction forces. "Grounded implementations, typically fixed-base robotic manipulators, offer precise positioning and stability during contact."

- Ground-truth: Reference measurements used as a gold standard to validate system accuracy. "To provide ground-truth to our measurements, a retroreflective marker-based motion-capture system"

- Head-mounted display (HMD): A wearable display with integrated sensors that presents and tracks VR content. "Meta Quest 3 head-mounted display (HMD)"

- Kinesthetic receptors: Sensors in muscles, tendons, and joints that provide the sense of body motion and force. "signals from cutaneous receptors in the skin and kinesthetic receptors in muscles, tendons, and joints"

- Latency perception thresholds (LPTs): Maximum delays users can tolerate before noticing or being affected by latency. "Latency perception thresholds (LPTs) define the maximum tolerable delay in a system before user experience or task performance is degraded"

- Markerless hand-tracking: Vision-based tracking of hand pose and motion without gloves or markers. "precise markerless hand-tracking as characterized in prior work"

- Motion capture: Optical or sensor-based tracking of objects or bodies to obtain precise 3D positions and orientations. "Conventional localization methods, such as motion capture"

- Open XR plugin: A Unity integration of the cross-vendor OpenXR standard to deploy XR apps across devices. "The Unity project was deployed to the Meta HMD using the Open XR plugin"

- Passthrough mode: A VR feature that shows the real world via headset cameras without removing the headset. "the HMD automatically activates passthrough mode, showing the real-world environment via the onboard cameras."

- Physical–virtual registration: Computing and maintaining the coordinate transform aligning the physical robot/props with the virtual scene. "marker-based physical-virtual registration via a ChArUco board"

- Pose estimation: Determining the 3D position and orientation of an object from sensor data. "ChArUco detection and pose estimation were implemented using Quest ArUco Marker Tracking"

- Proprioceptive: Relating to internal sensing of limb position, movement, and force. "limited force/proprioceptive information"

- Protocol Buffer Framework: A language-neutral, platform-neutral serialization system for structured data. "Google’s Protocol Buffer Framework"

- Retroreflective markers: High-visibility reflective targets used for precise optical tracking in motion capture. "a retroreflective marker-based motion-capture system, Vicon Vero (v2.2)"

- Robot Operating System 2 (ROS 2): A middleware framework for building distributed, real-time-capable robotic applications. "Robot Operating System 2 (ROS 2), Humble Hawksbill distribution."

- Safety gating: Control logic that allows or halts motion based on safety conditions and user pose. "pose-based safety gating"

- Torque-controlled manipulator: A robot whose joints are commanded via torques for compliant, responsive interaction. "integrates a torque-controlled robotic manipulator"

- Vibrotactile motors: Small actuators that generate vibrations to render touch sensations on the skin. "vibrotactile motors, electrotactile arrays"
