- The paper proposes a personalized federated learning framework that uses a shared backbone with local heads to tailor digital twin radio maps for heterogeneous 6G environments.

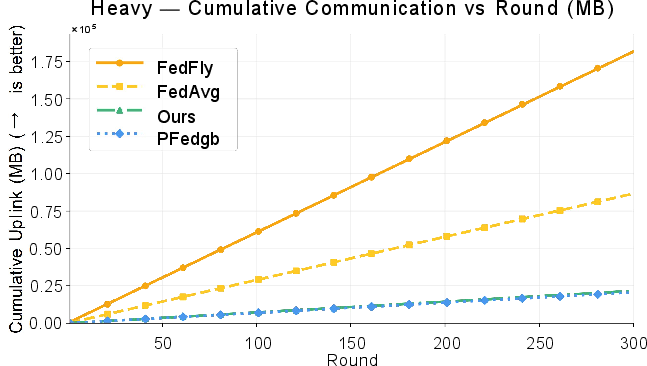

- It employs multi-phase compression techniques, including top-K sparsification, error feedback, and 8-bit quantization, to significantly reduce communication overhead.

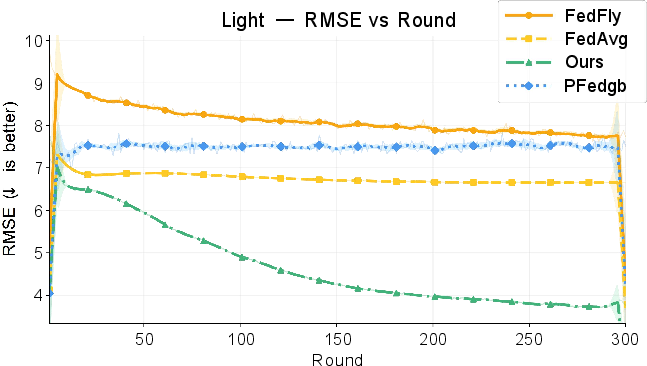

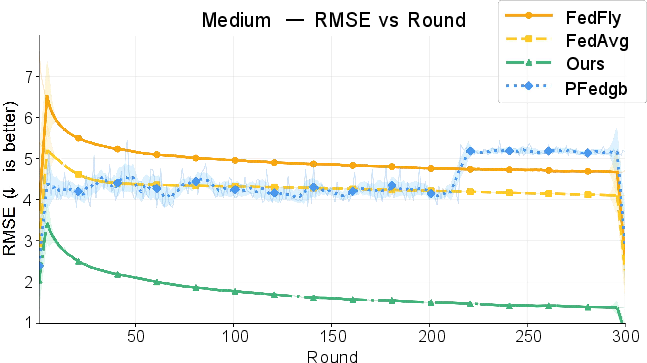

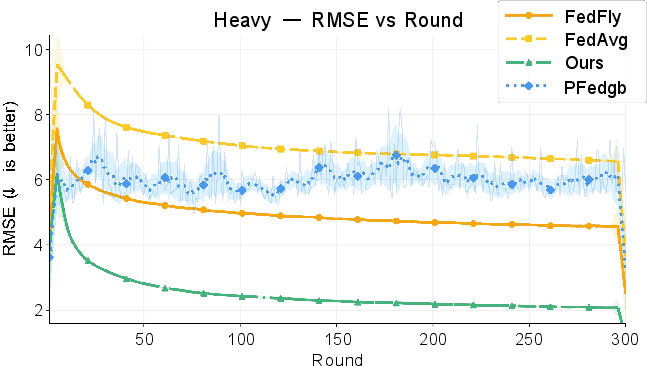

- Experimental results indicate that EPFL-REMNet outperforms FedAvg and PFedgb in Non-IID scenarios, achieving a superior accuracy-communication trade-off.

EPFL-REMNet: Efficient Personalized Federated Learning for 6G Radio Environment Maps

Introduction

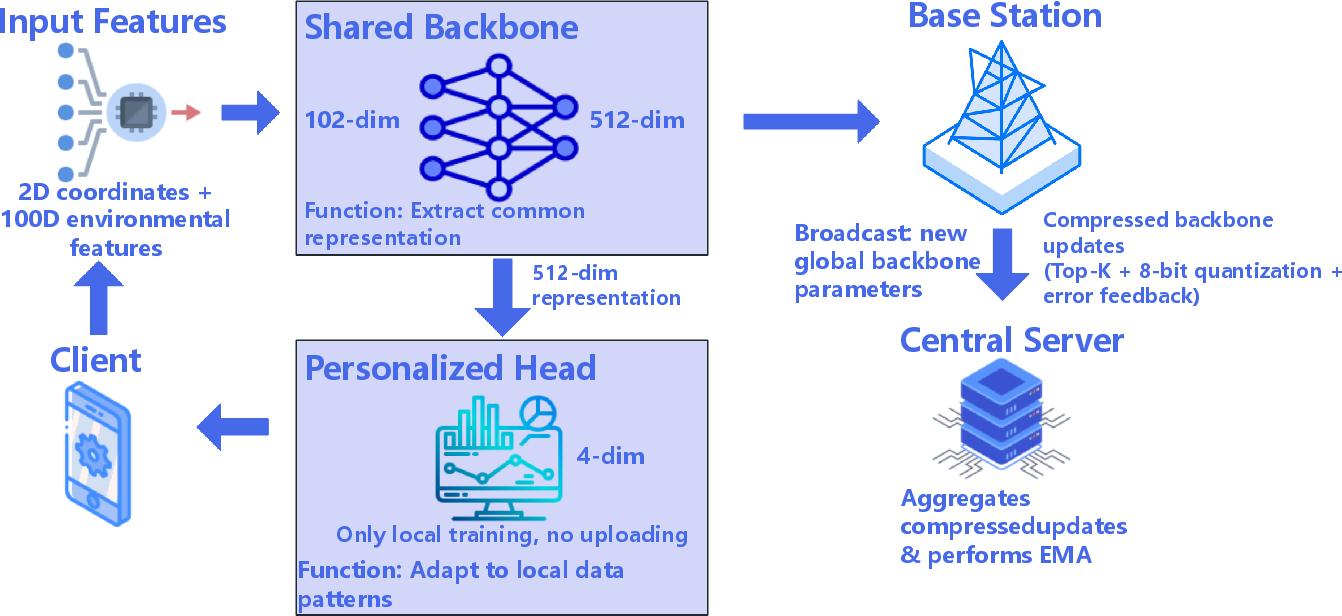

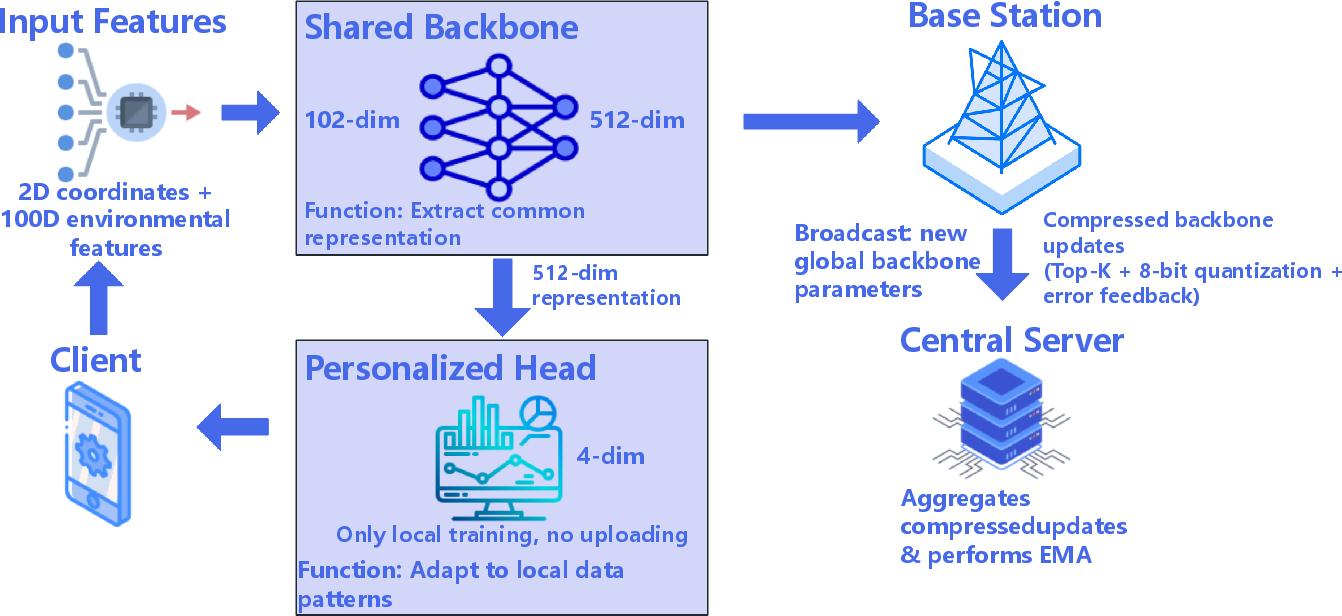

The emergence of B5G/6G networks calls for high-fidelity Radio Environment Maps (REMs) that serve as digital twins for intelligently managing and optimizing these networks. Traditional approaches to building REMs are hampered by data heterogeneity and by the limited communication bandwidth of decentralized environments. EPFL-REMNet, an efficient personalized federated learning framework, addresses these challenges with a "shared backbone + personalized head" architecture. The design targets heterogeneous radio environments whose data is strongly Non-IID: each client keeps a personalized local model, while the volume of communication with the server is reduced.

EPFL-REMNet operates in a setting with multiple clients, each representing a distinct geographical area within a 6G network. The architecture consists of a shared backbone for transferable feature extraction and personalized heads to accommodate local peculiarities. The backbone, a three-layer MLP, is trained globally, while local heads are adapted per client for specific environmental conditions. Data normalization techniques ensure that all clients process data on a standardized scale, facilitating coherent global model updates.
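As a rough illustration of the "shared backbone + personalized head" split, the following NumPy sketch builds one three-layer MLP backbone shared by all clients and one small linear head per client. The layer widths, the ReLU activation, the single-layer head, the input dimension, and all function names are assumptions made for this example; the summary does not specify them.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_linear(n_in, n_out):
    # He-style initialization for one dense layer: (weights, bias).
    return rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_out)), np.zeros(n_out)

def init_backbone(n_in=4, hidden=32, n_feat=16):
    # Three dense layers, mirroring the "three-layer MLP" backbone.
    # The widths here are assumed, not taken from the paper.
    return [init_linear(n_in, hidden),
            init_linear(hidden, hidden),
            init_linear(hidden, n_feat)]

def backbone_forward(backbone, x):
    # ReLU after every backbone layer (an assumed activation).
    for w, b in backbone:
        x = np.maximum(x @ w + b, 0.0)
    return x

def head_forward(head, feats):
    # Personalized head: one linear layer from features to a scalar estimate.
    w, b = head
    return feats @ w + b

def standardize(x, mean, std):
    # Put inputs on a common scale so every client feeds the backbone alike.
    return (x - mean) / (std + 1e-8)

shared_backbone = init_backbone()                       # one copy, trained globally
heads = {cid: init_linear(16, 1) for cid in range(3)}   # one head per client

x = rng.uniform(0.0, 100.0, size=(5, 4))                # hypothetical input features
x_norm = standardize(x, x.mean(axis=0), x.std(axis=0))
feats = backbone_forward(shared_backbone, x_norm)
preds = {cid: head_forward(h, feats) for cid, h in heads.items()}
```

Only `shared_backbone` would be exchanged with the server in this arrangement; each entry of `heads` stays on its client.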

The optimization objective minimizes a loss over the shared backbone parameters and the per-client head parameters, so that the digital twin model is tailored to each local environment. Local accuracy is traded off against communication cost by compressing model updates through sparsification and quantization.
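One common way to write such an objective is given below. This is a generic personalized federated learning formulation, not necessarily the exact one used in the paper. All symbols are assumptions introduced here: $\theta$ for the shared backbone parameters, $\phi_k$ for the head of client $k$, $K$ for the number of clients, $n_k$ for the number of local samples with $n = \sum_k n_k$, $f_\theta$ and $h_{\phi_k}$ for the backbone and head mappings, and $\ell$ for a per-sample loss such as squared error.

```latex
\min_{\theta,\ \{\phi_k\}_{k=1}^{K}} \;
  \sum_{k=1}^{K} \frac{n_k}{n}\, \mathcal{L}_k(\theta, \phi_k),
\qquad
\mathcal{L}_k(\theta, \phi_k)
  = \frac{1}{n_k} \sum_{i=1}^{n_k}
    \ell\!\left( h_{\phi_k}\!\big( f_{\theta}(x_i^{(k)}) \big),\; y_i^{(k)} \right)
```

Under this form only $\theta$ is shared across clients, so it is the only quantity whose updates need to be compressed and transmitted.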

Figure 1: EPFL-REMNet System Architecture.

Federated Optimization and Communication

EPFL-REMNet employs a federated training process where the server disseminates backbone weights to clients, each performing multiple local training epochs. Iterative global communication rounds update the global model while maintaining client-specific adaptations. A multi-phase compression strategy bolsters communication efficiency:

- Error Feedback: Incorporates previously untransmitted update errors to stabilize convergence.

- Top-K Sparsification: Retains only the most significant updates, curtailing unnecessary communication.

- Quantization: Further reduces transmitted data by compressing updates to 8-bit precision.
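The three steps above can be sketched for a single client update as follows. This is a minimal illustration under stated assumptions: a flattened float32 update vector, a symmetric uniform 8-bit quantizer with one scale per message, and int32 indices for the retained entries. The function names and the byte accounting are hypothetical and may differ from the paper's actual scheme.

```python
import numpy as np

def compress_update(delta, residual, k):
    # 1) Error feedback: add back what earlier rounds failed to transmit.
    corrected = delta + residual
    # 2) Top-K sparsification: keep the k largest-magnitude entries.
    idx = np.argpartition(np.abs(corrected), -k)[-k:]
    values = corrected[idx]
    # 3) 8-bit quantization: symmetric uniform quantizer, one float scale.
    max_abs = np.max(np.abs(values))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(values / scale), -127, 127).astype(np.int8)
    # The new residual is whatever the server cannot reconstruct this round.
    sent = np.zeros_like(corrected)
    sent[idx] = q.astype(corrected.dtype) * scale
    new_residual = corrected - sent
    return idx.astype(np.int32), q, scale, new_residual

def decompress_update(idx, q, scale, size):
    # Server side: rebuild a dense update from indices, int8 values, and scale.
    dense = np.zeros(size, dtype=np.float32)
    dense[idx] = q.astype(np.float32) * scale
    return dense

rng = np.random.default_rng(0)
delta = rng.normal(size=1000).astype(np.float32)   # hypothetical backbone update
residual = np.zeros_like(delta)                    # nothing carried over yet
idx, q, scale, residual = compress_update(delta, residual, k=100)
restored = decompress_update(idx, q, scale, delta.size)

dense_bytes = delta.size * 4                   # uncompressed float32 payload
sparse_bytes = idx.size * 4 + q.size * 1 + 4   # int32 indices + int8 values + scale
```

With these toy sizes the payload shrinks from 4000 bytes to 504 bytes. The dropped mass is not lost: it sits in `residual` and is added to the next round's update.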

Because clients communicate with the server only periodically, local models keep improving between rounds while the number of server interactions stays limited.
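The round structure described in this section (broadcast the backbone, run several local epochs, upload only the backbone update, aggregate) can be sketched with a deliberately tiny model. Here a shared weight vector stands in for the backbone and a per-client bias stands in for the personalized head. The synthetic data, the learning rate, the number of rounds, and the sample-weighted averaging of updates are assumptions for illustration, and compression is omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_client(offset, n=200):
    # Hypothetical client data: a common linear trend plus a client-specific offset.
    x = rng.normal(size=(n, 3))
    y = x @ np.array([1.0, -2.0, 0.5]) + offset + 0.01 * rng.normal(size=n)
    return x, y

def local_train(w, b, x, y, epochs=5, lr=0.1):
    # Full-batch gradient descent on MSE for shared weights w and local bias b.
    w = w.copy()
    for _ in range(epochs):
        err = x @ w + b - y
        w -= lr * 2.0 * (x.T @ err) / len(y)
        b -= lr * 2.0 * err.mean()
    return w, b

clients = [make_client(o) for o in (-3.0, 0.0, 4.0)]
w_global = np.zeros(3)       # stands in for the shared backbone
biases = [0.0, 0.0, 0.0]     # stand in for the personalized heads (never uploaded)

for rnd in range(30):
    deltas, sizes = [], []
    for cid, (x, y) in enumerate(clients):
        # Each client starts from the broadcast backbone and its own head.
        w_local, biases[cid] = local_train(w_global, biases[cid], x, y)
        deltas.append(w_local - w_global)   # only the backbone update is uploaded
        sizes.append(len(y))
    weights = np.array(sizes) / sum(sizes)
    # Server: sample-weighted average of the uploaded backbone updates.
    w_global = w_global + sum(wt * d for wt, d in zip(weights, deltas))

rmse = [float(np.sqrt(np.mean((x @ w_global + b - y) ** 2)))
        for (x, y), b in zip(clients, biases)]
```

In this toy setting a single global bias could not fit all three clients, because their offsets differ. Keeping the bias local lets the shared weights capture only what is common across clients.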

Experimental Evaluation

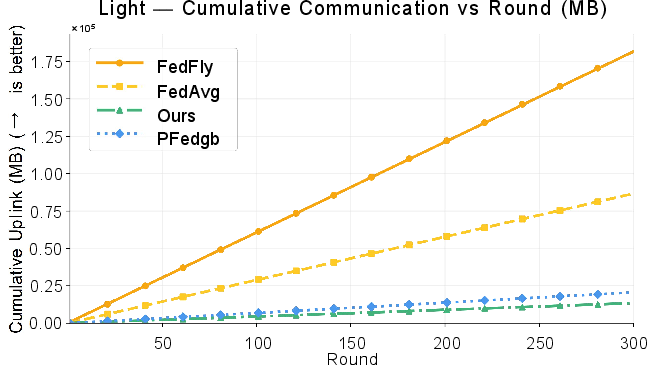

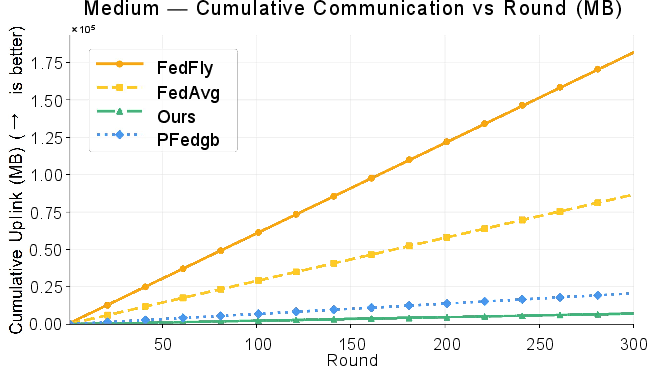

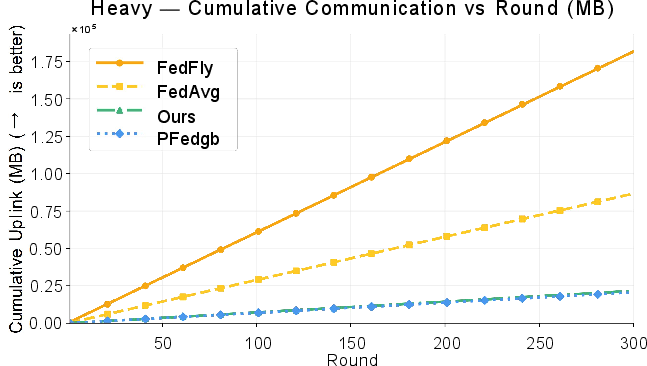

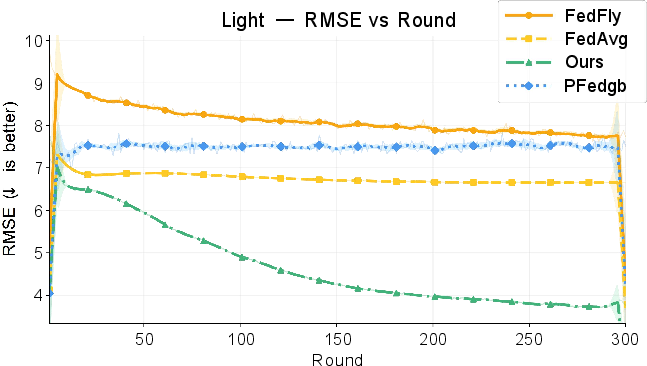

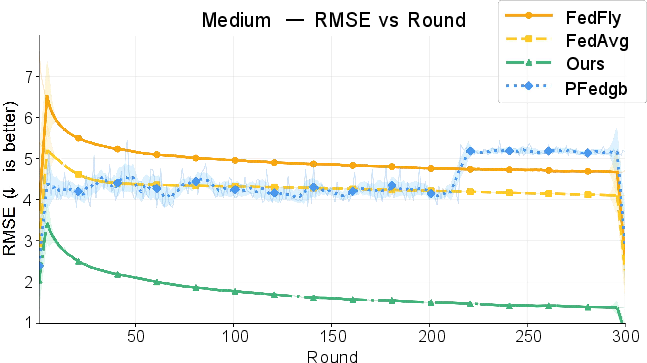

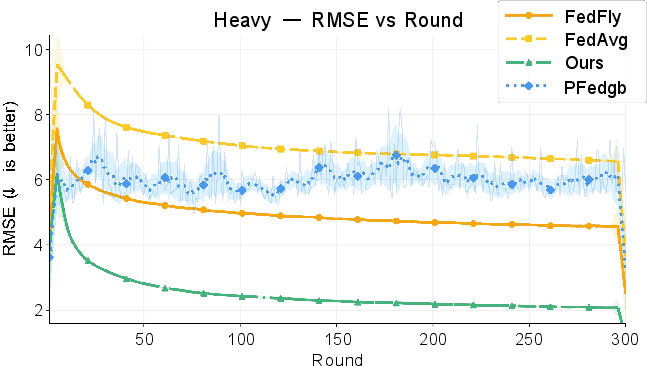

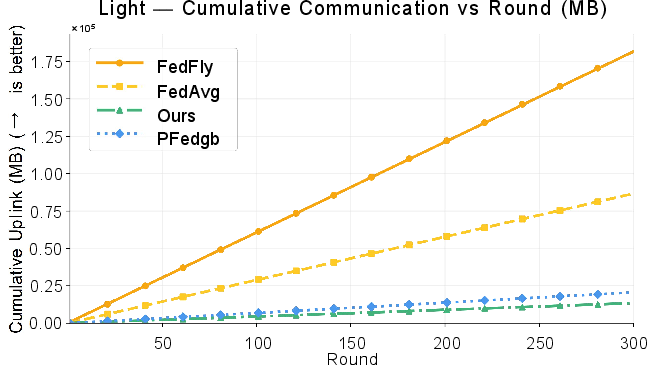

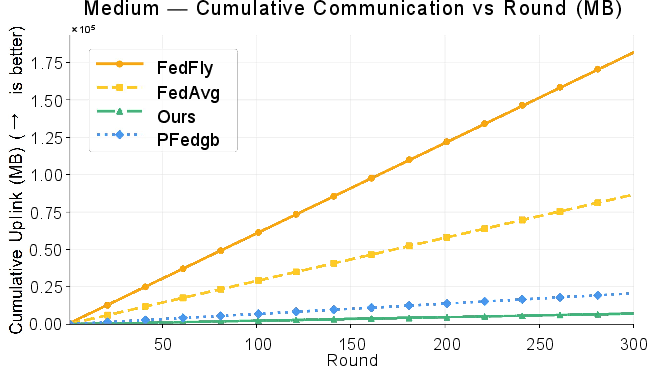

EPFL-REMNet's performance was assessed in simulation across three Non-IID scenarios: light, medium, and heavy. Under these conditions, EPFL-REMNet consistently outperformed existing methods such as FedAvg and PFedgb in terms of both RMSE and communication cost.

Figure 2: Convergence curves and cumulative communication volume curves of each method in three-level Non-IID scenarios.

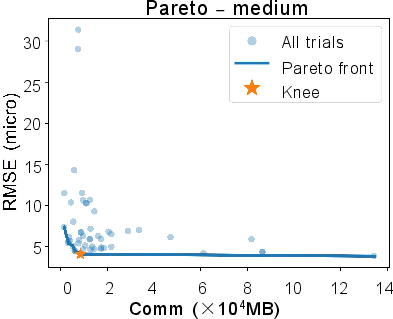

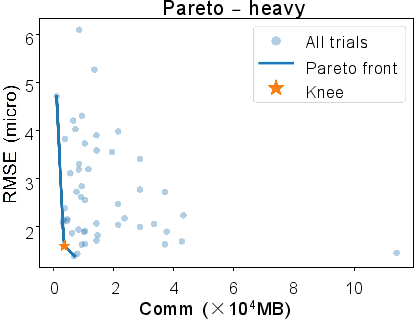

Accuracy-Communication Trade-Off

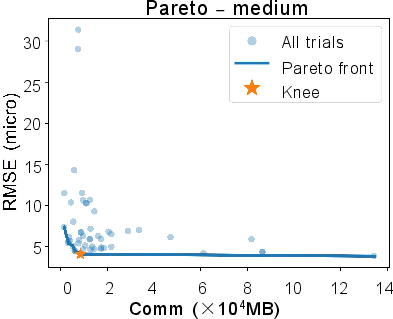

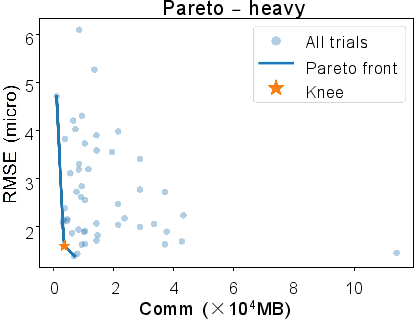

EPFL-REMNet exhibits a superior accuracy-communication trade-off: its operating points lie on the Pareto frontier, achieving both higher accuracy and lower communication cost than the baseline methods. This suggests that EPFL-REMNet is efficient and may scale better across diverse operating regimes.
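To make the notion of a Pareto frontier concrete, the sketch below filters a set of (communication cost, RMSE) operating points down to the non-dominated ones, where lower is better on both axes. The run names and numbers are invented placeholders, not results from the paper.

```python
def pareto_front(points):
    # points: list of (name, comm_cost, rmse); lower is better on both axes.
    front = []
    for name, c, r in points:
        # A point is dominated if another point is no worse on both axes
        # and strictly better on at least one.
        dominated = any(
            (c2 <= c and r2 <= r) and (c2 < c or r2 < r)
            for _, c2, r2 in points
        )
        if not dominated:
            front.append(name)
    return front

# Hypothetical operating points: (label, communication in MB, RMSE in dB).
runs = [("A", 10.0, 3.0), ("B", 40.0, 3.5), ("C", 5.0, 4.0), ("D", 12.0, 3.2)]
front = pareto_front(runs)
```

Here `front` contains "A" and "C": "B" and "D" are each beaten by "A" on both cost and error, while "A" and "C" trade cost against error and neither dominates the other.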

Figure 3: Precision-communication Pareto frontier graph of EPFL-REMNet in three-level Non-IID scenarios.

Conclusion

EPFL-REMNet advances the construction of high-fidelity Radio Environment Maps for 6G networks. By combining personalization with efficient communication strategies, it reduces communication overhead while increasing local model accuracy. These results support the case for personalized federated learning in heterogeneous radio environments and point toward practical, scalable implementations in next-generation network systems. Further work could refine the communication protocols and adapt the framework to more diverse environmental conditions, improving robustness and flexibility in real-world applications.