- The paper introduces ISC-Perception, a hybrid dataset integrating CAD renders, synthetic images, and real photographs for enhanced object detection in ISC steel assemblies.

- It details a methodology that reduces manual annotation by 81.7% while maintaining high detection accuracy with YOLOv8, achieving mAP@0.5 up to 0.943.

- The approach is validated in a real-world bench test, where the model detects ISC components and human workers under challenging lighting and cluttered conditions.

ISC-Perception: A Hybrid Computer Vision Dataset for Object Detection in Novel Steel Assembly

Introduction and Motivation

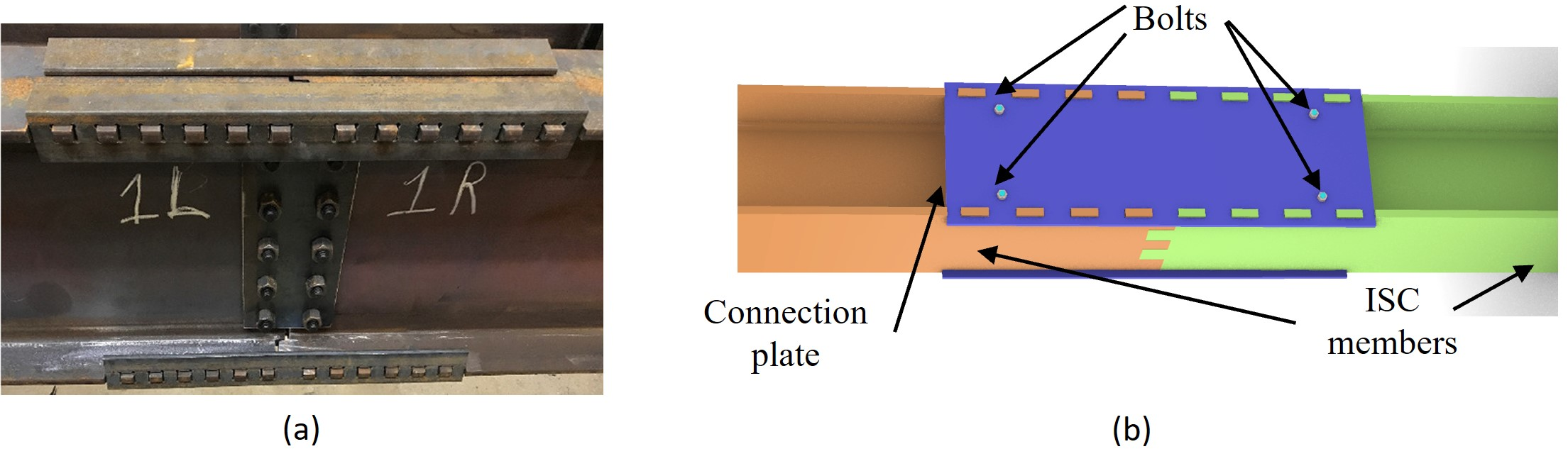

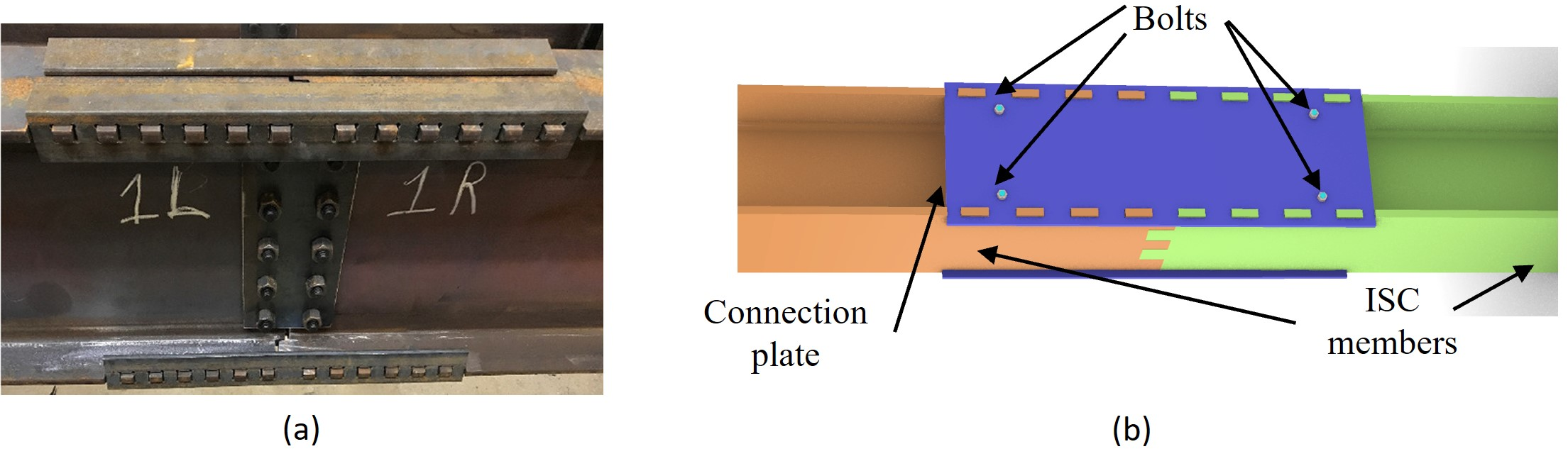

The paper introduces ISC-Perception, a hybrid computer vision dataset specifically designed for object detection in the context of robotic assembly of Intermeshed Steel Connection (ISC) systems. The ISC system, which leverages precision-cut male-female tabs and connection plates, offers significant advantages over traditional bolted or welded steel connections, including reduced material waste, improved assembly speed, and enhanced safety. However, the unconventional geometry and reflective surfaces of ISC components present unique challenges for computer vision, particularly in unstructured and cluttered construction environments.

The lack of publicly available, task-specific image corpora for ISC components has been a major bottleneck for developing robust perception systems for construction robotics. Collecting real images on active construction sites is logistically complex and raises safety and privacy concerns. ISC-Perception addresses this gap by integrating procedurally rendered CAD images, photorealistic game-engine scenes, and a curated set of real photographs, enabling efficient and scalable dataset generation with minimal manual annotation.

Figure 1: Components of ISC beam-to-beam; (a) earlier version of fabricated ISC, (b) CAD drawing of ISC with single connection.

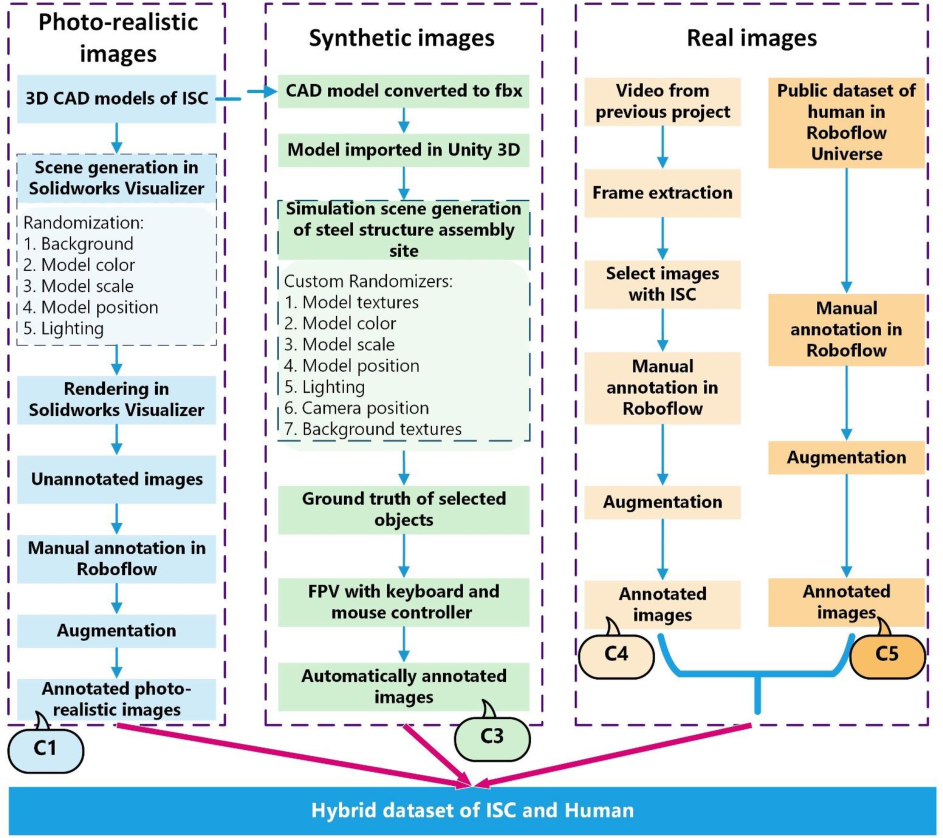

Hybrid Dataset Generation Methodology

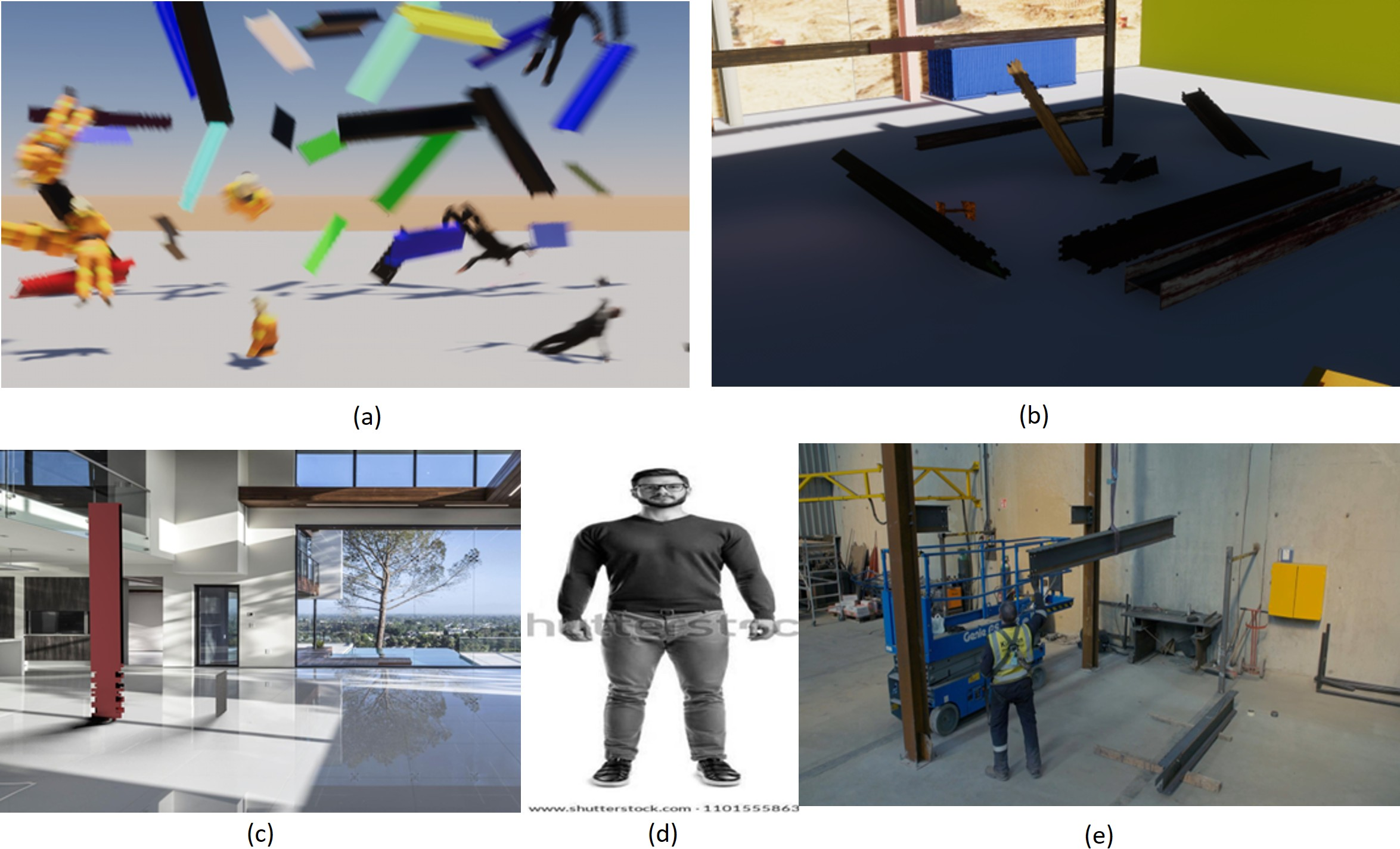

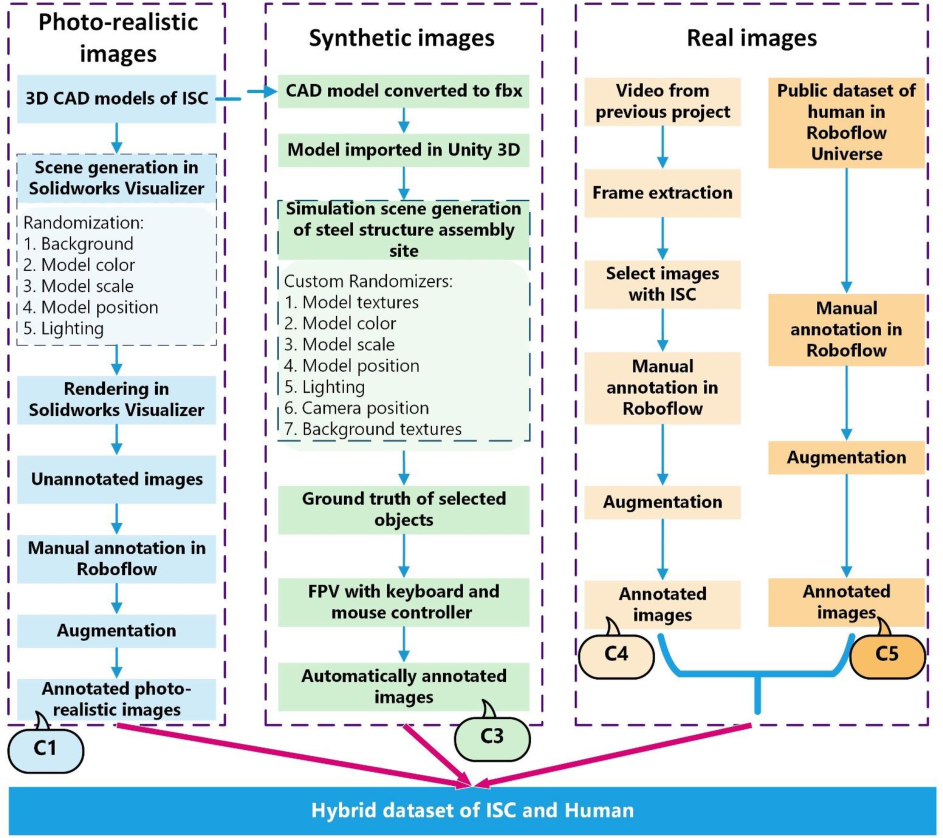

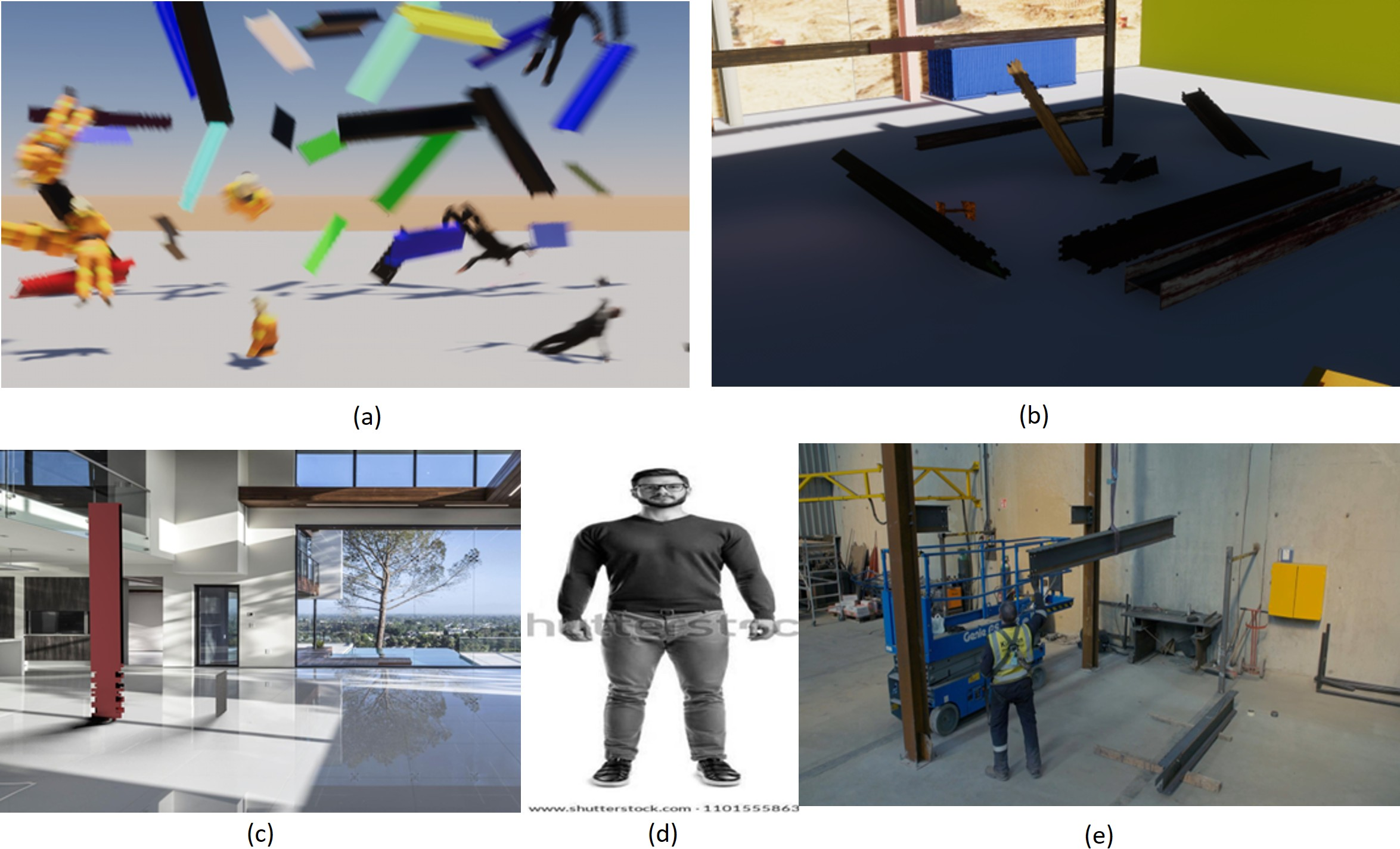

ISC-Perception comprises three primary image modalities:

- Photorealistic CAD renders (SolidWorks Visualize): High-fidelity images with randomized backgrounds, textures, and lighting.

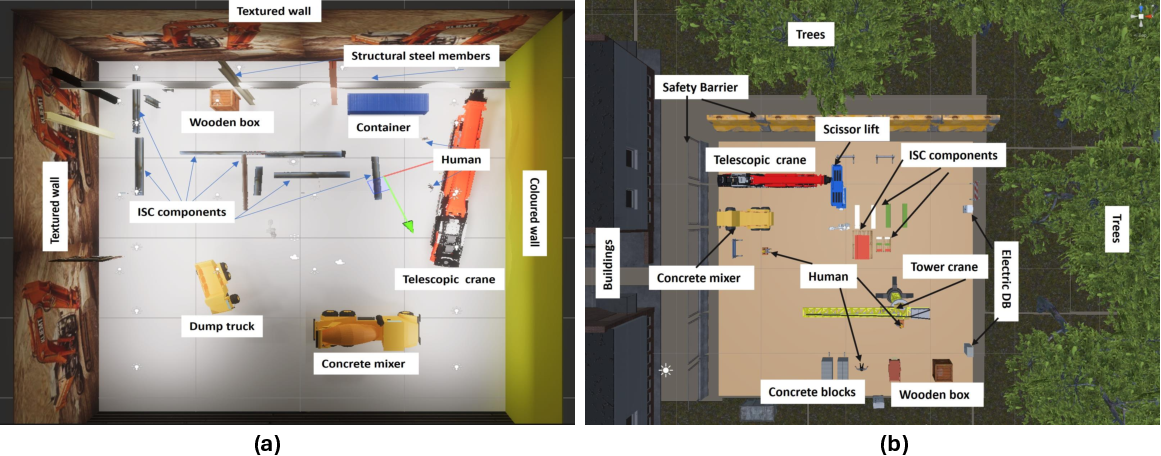

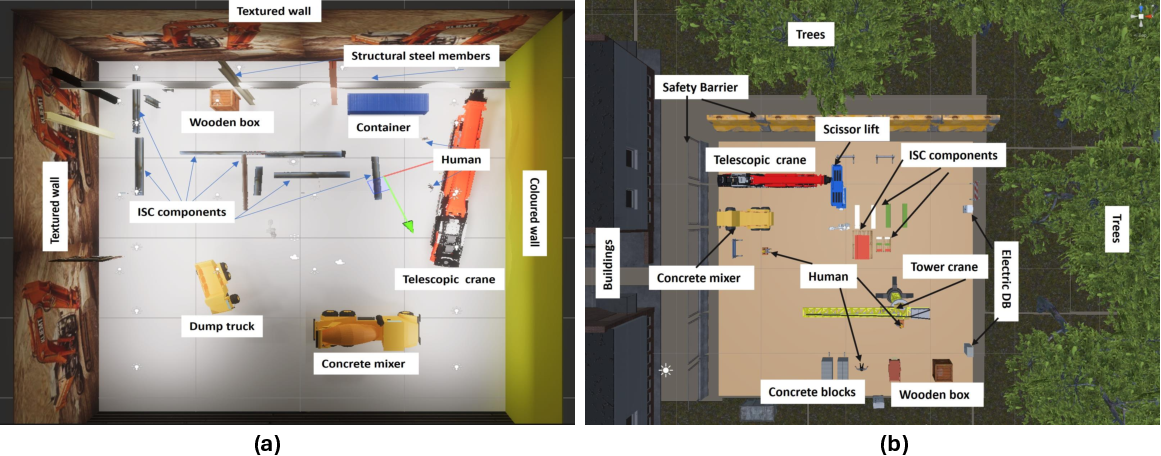

- Synthetic images (Unity 3D): Automatically annotated scenes generated using both built-in and custom randomizers to maximize diversity in object placement, lighting, and occlusion.

- Real images: Curated from project videos and public datasets (Roboflow Universe for human detection), manually annotated and augmented for variability.

The dataset focuses on three object classes: ISC member, ISC connection plate, and human. The hybrid composition ensures coverage of the geometric and appearance variability encountered in real-world assembly scenarios, while minimizing manual annotation effort (30.5 hours for 10,000 images, an 81.7% reduction compared to manual labeling).
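The two reported annotation figures (30.5 hours and an 81.7% reduction) imply a manual baseline that this summary does not state. The sketch below backs that baseline out; the helper name `implied_baseline_hours` is hypothetical, and the per-image times are an inference from the reported numbers, not values taken from the paper.

```python
# Back out the manual-labeling baseline implied by the reported figures.
# Only ACTUAL_HOURS, REDUCTION and NUM_IMAGES come from the text; everything
# derived from them is an inference, not a reported result.

def implied_baseline_hours(actual_hours: float, reduction: float) -> float:
    """Return the fully manual effort implied by a fractional reduction."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return actual_hours / (1.0 - reduction)

ACTUAL_HOURS = 30.5   # reported annotation effort for the hybrid workflow
REDUCTION = 0.817     # reported reduction versus manual labeling
NUM_IMAGES = 10_000   # reported number of images

baseline_hours = implied_baseline_hours(ACTUAL_HOURS, REDUCTION)
baseline_sec_per_image = baseline_hours * 3600 / NUM_IMAGES
actual_sec_per_image = ACTUAL_HOURS * 3600 / NUM_IMAGES

print(f"implied manual baseline: {baseline_hours:.1f} h")
print(f"manual: {baseline_sec_per_image:.1f} s/image, "
      f"hybrid workflow: {actual_sec_per_image:.2f} s/image")
```

Under these figures the implied baseline is roughly 167 hours, or about one minute per image, against about 11 seconds per image for the hybrid workflow.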

Figure 2: Source of images and workflow for creating the hybrid dataset combining different types of images.

Figure 3: View of Robotic Steel Assembly in Unity; (a) Outdoor Scene; (b) Indoor Scene.

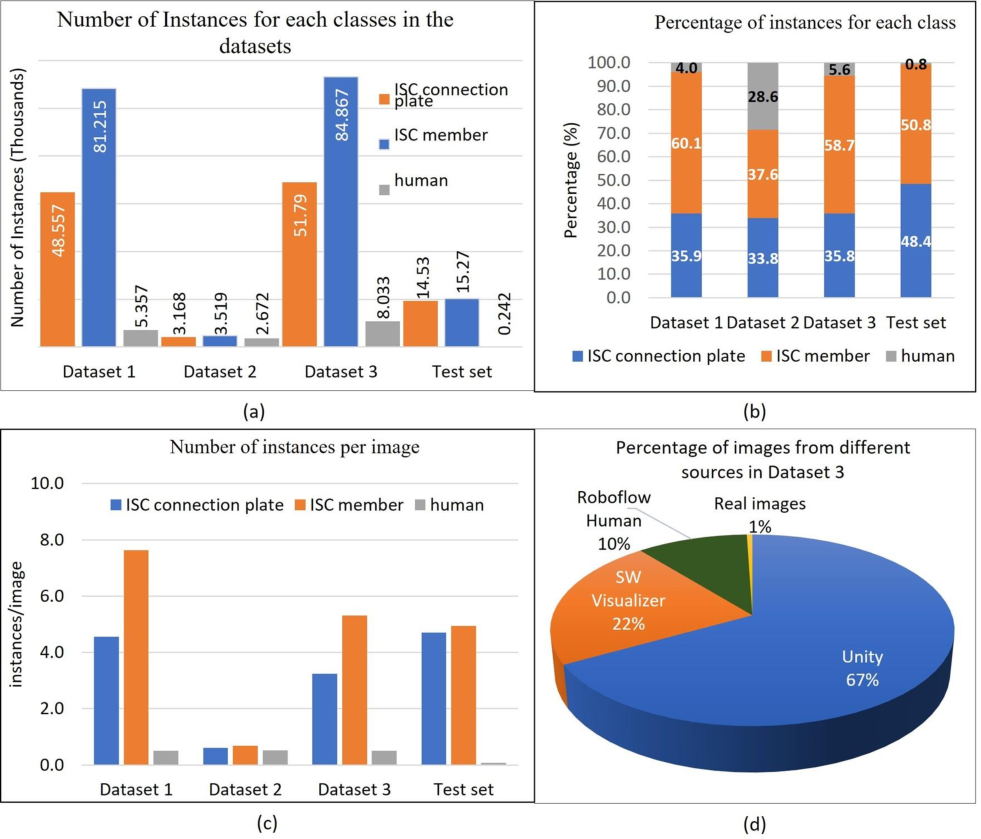

Dataset Composition and Statistics

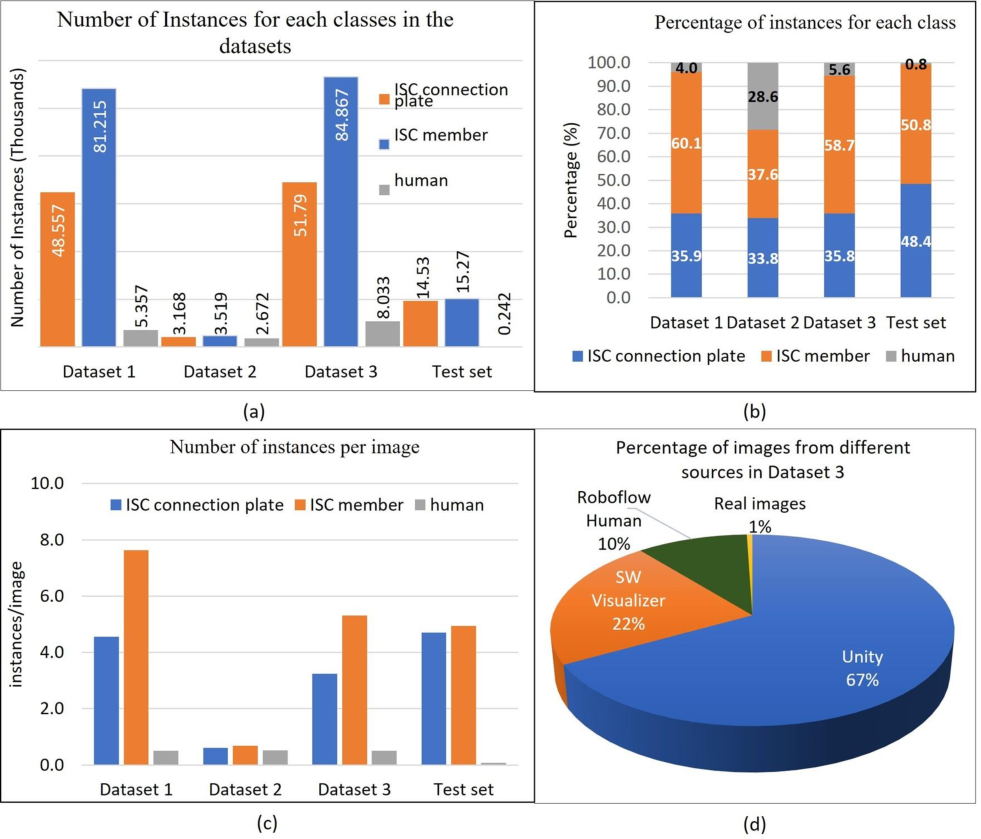

ISC-Perception contains 15,974 training/validation images and 3,087 test images, distributed across Unity synthetic, SolidWorks photorealistic, Roboflow human, and real ISC images. The hybrid dataset (Dataset 3) integrates all sources, while Dataset 1 and Dataset 2 are restricted to synthetic and photorealistic/human images, respectively.

Figure 4: Dataset statistics; (a) number of instances for each class, (b) percentage of instances in each dataset, (c) number of instances per image for each class, (d) percentage of images from different sources in Dataset 3.

Figure 5: Representative samples from ISC-Perception: (a) Unity (built-in randomizers, C2), (b) Unity (custom randomizers, C3), (c) SolidWorks Visualize photorealistic render (C1), (d) Human example from Roboflow Universe (C5), (e) Real ISC frame (C4).

Model Training and Evaluation

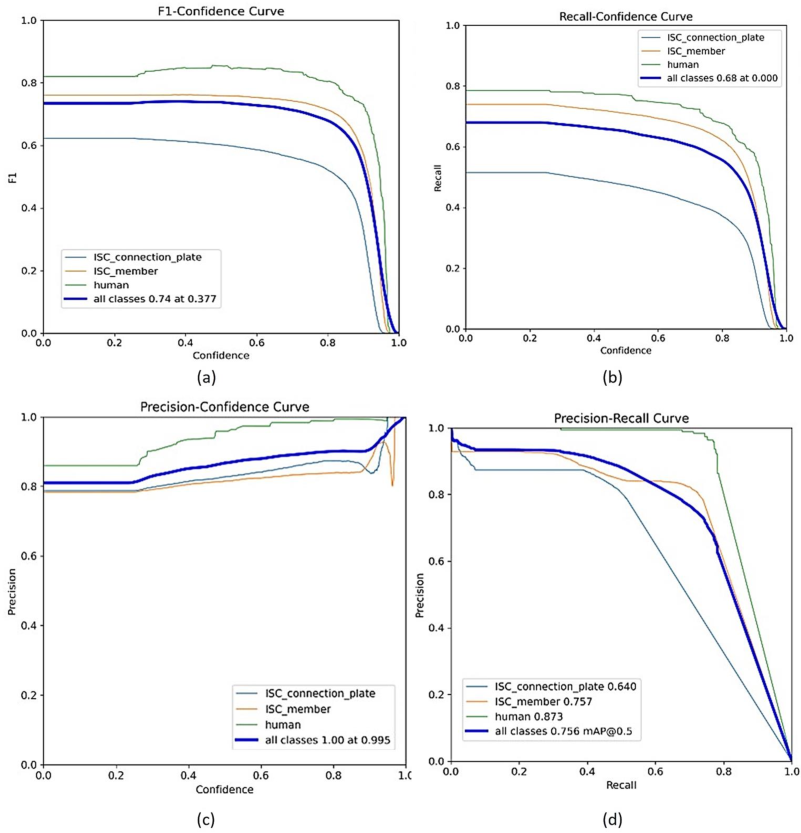

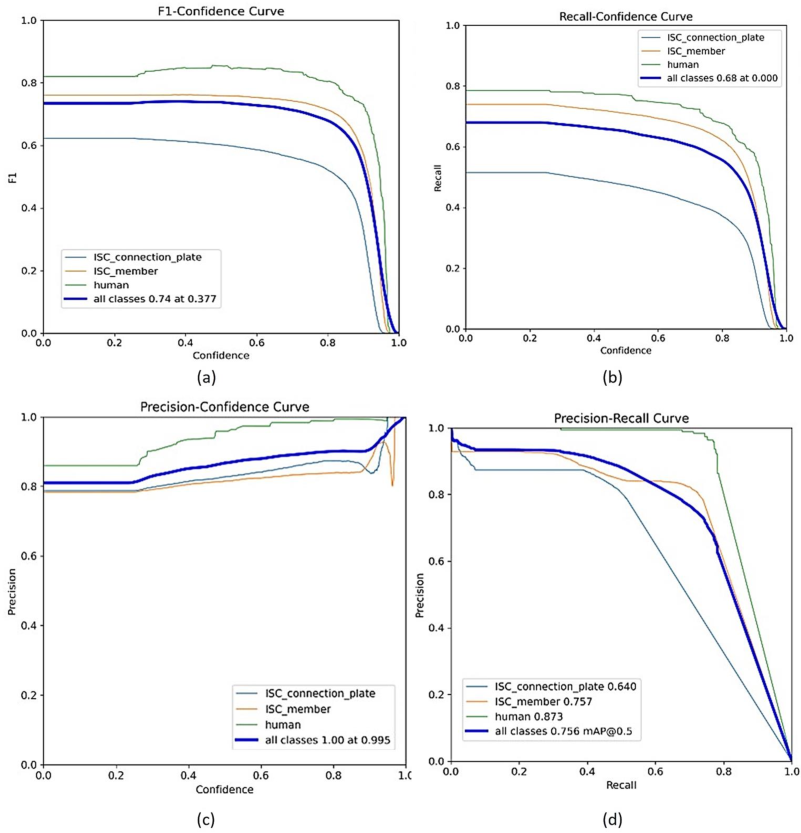

YOLOv8n (Ultralytics v8.3.198) was trained on each dataset variant using a fixed hardware configuration (Core i9, RTX 4060, 32GB RAM), with early stopping and standard augmentation protocols. The models were evaluated on a fixed test set comprising samples from all image sources.
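A minimal sketch of how such a training and evaluation run might look with the Ultralytics Python API. The dataset file name `isc_hybrid.yaml`, the epoch count, the early-stopping patience, and the image size are all assumptions for illustration; the summary does not report the hyperparameters actually used.

```python
# Sketch only: hyperparameter values below are assumed, not taken from the paper.

def build_train_args(data_yaml: str) -> dict:
    """Collect training arguments for a YOLOv8n run with early stopping."""
    return {
        "data": data_yaml,  # dataset YAML with train/val/test splits (hypothetical file)
        "epochs": 100,      # assumed upper bound on training epochs
        "patience": 20,     # early stopping: stop after 20 epochs without improvement (assumed)
        "imgsz": 640,       # assumed input resolution (Ultralytics default)
    }

def train_and_evaluate(data_yaml: str):
    """Train YOLOv8n on one dataset variant, then score it on the test split."""
    from ultralytics import YOLO  # requires `pip install ultralytics`

    model = YOLO("yolov8n.pt")  # pretrained nano checkpoint
    model.train(**build_train_args(data_yaml))
    metrics = model.val(data=data_yaml, split="test")
    # map50 is mAP@0.5; map is mAP@[0.5:0.95]
    return metrics.box.map50, metrics.box.map
```

Calling `train_and_evaluate("isc_hybrid.yaml")` once per dataset variant, with the same `test:` entry in each YAML file, would mirror the fixed-test-set comparison described above.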

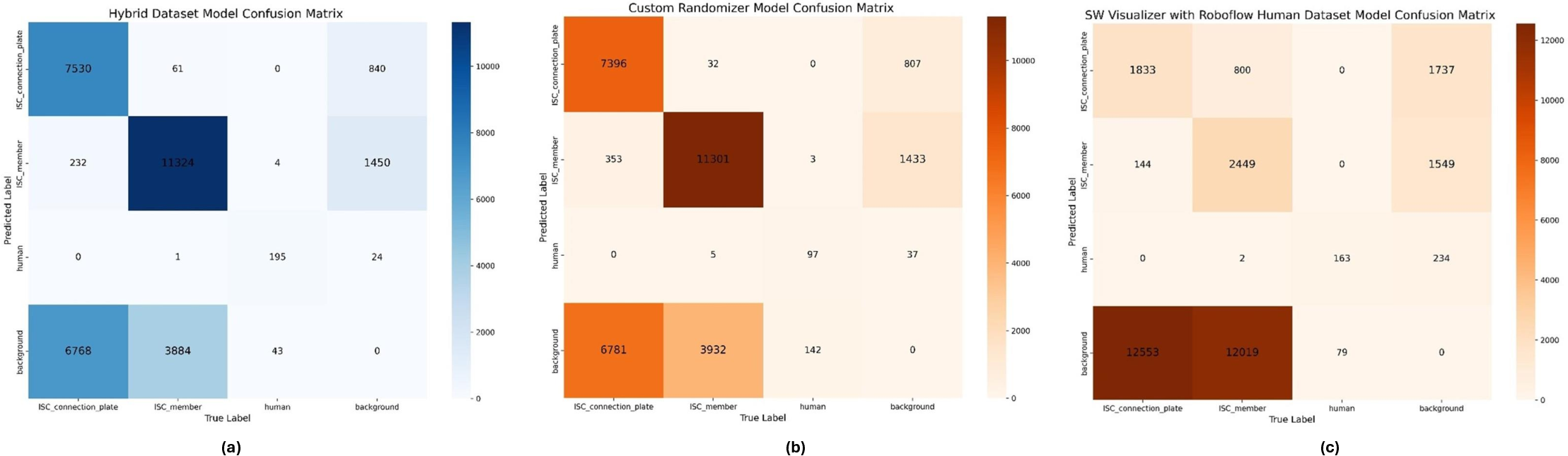

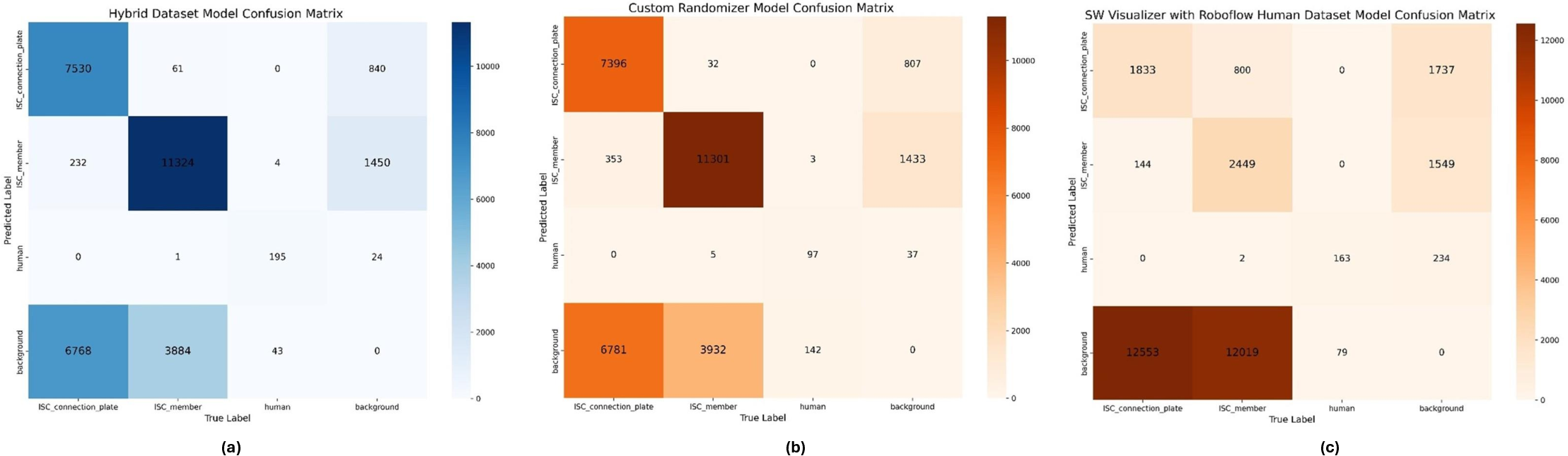

- Hybrid dataset (Dataset 3): mAP@0.5 = 0.756, mAP@[0.5:0.95] = 0.664, precision = 0.846, recall = 0.666.

- Custom randomizer (Dataset 1): mAP@0.5 = 0.659, mAP@[0.5:0.95] = 0.564.

- SW Visualize + Roboflow (Dataset 2): mAP@0.5 = 0.386, mAP@[0.5:0.95] = 0.321.

The hybrid dataset consistently outperformed the other variants across all object classes, particularly in human detection (mAP@[0.5:0.95] = 0.804) and ISC member identification. Controlled size-matched experiments confirmed that the performance gains are attributable to dataset composition rather than size alone (hybrid: mAP@0.5 = 0.675 vs. synthetic-only: 0.546, photorealistic-only: 0.249).
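To put the reported mAP@0.5 values side by side, the sketch below computes the absolute and relative gain of the hybrid model over each alternative. The input numbers are the ones reported above; the helper `gain` and the derived percentages are illustrative and do not appear in the paper.

```python
# Compare the hybrid model's mAP@0.5 against each baseline.

def gain(reference: float, baseline: float) -> tuple[float, float]:
    """Return (absolute gain, relative gain as a fraction of the baseline)."""
    absolute = reference - baseline
    return absolute, absolute / baseline

# Full-size datasets, mAP@0.5 on the fixed test set (reported values).
FULL = {"hybrid": 0.756, "custom randomizer": 0.659, "SW Visualize + Roboflow": 0.386}
# Size-matched experiments, mAP@0.5 (reported values).
MATCHED = {"hybrid": 0.675, "synthetic-only": 0.546, "photorealistic-only": 0.249}

results = {}
for label, table in (("full", FULL), ("size-matched", MATCHED)):
    for name, score in table.items():
        if name == "hybrid":
            continue
        abs_gain, rel_gain = gain(table["hybrid"], score)
        results[(label, name)] = (abs_gain, rel_gain)
        print(f"{label:12s} hybrid vs {name}: +{abs_gain:.3f} ({rel_gain:.1%})")
```

The relative gap to the best alternative is larger in the size-matched setting (about 24%) than in the full-size setting (about 15%), which is consistent with the claim that composition, not size alone, drives the improvement.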

Figure 6: Confusion matrices of the trained models on the test set; (a) hybrid dataset, (b) custom randomizer dataset, (c) SW Visualize with Roboflow dataset.

Figure 7: Performance curves of the model trained on the hybrid dataset, evaluated on the test set; (a) F1-confidence curve, (b) recall-confidence curve, (c) precision-confidence curve, (d) precision-recall curve.

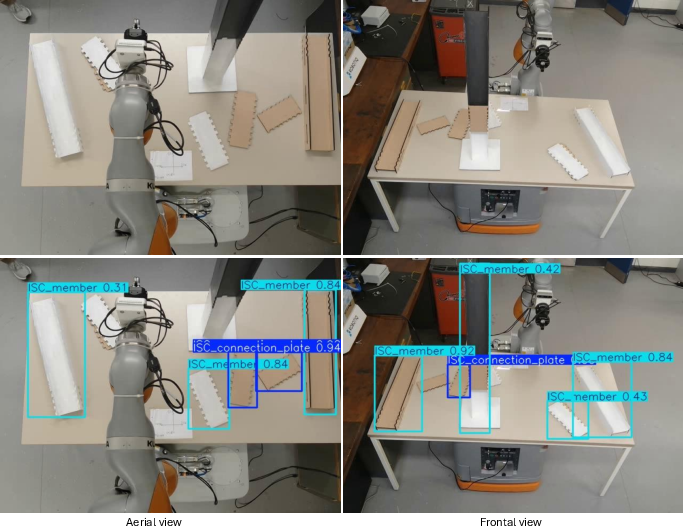

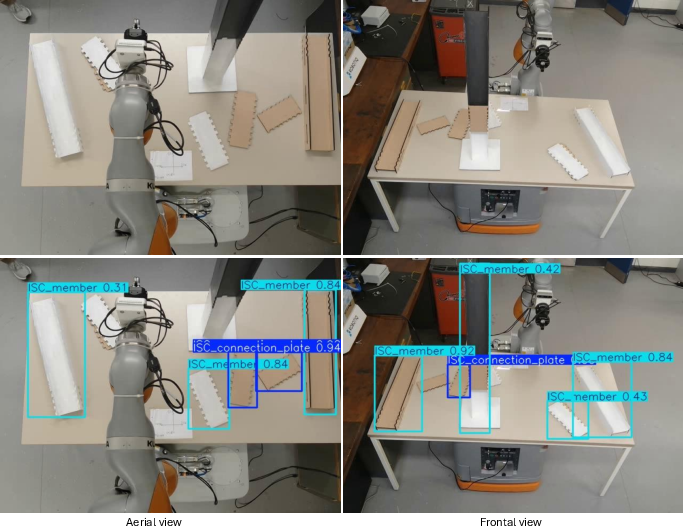

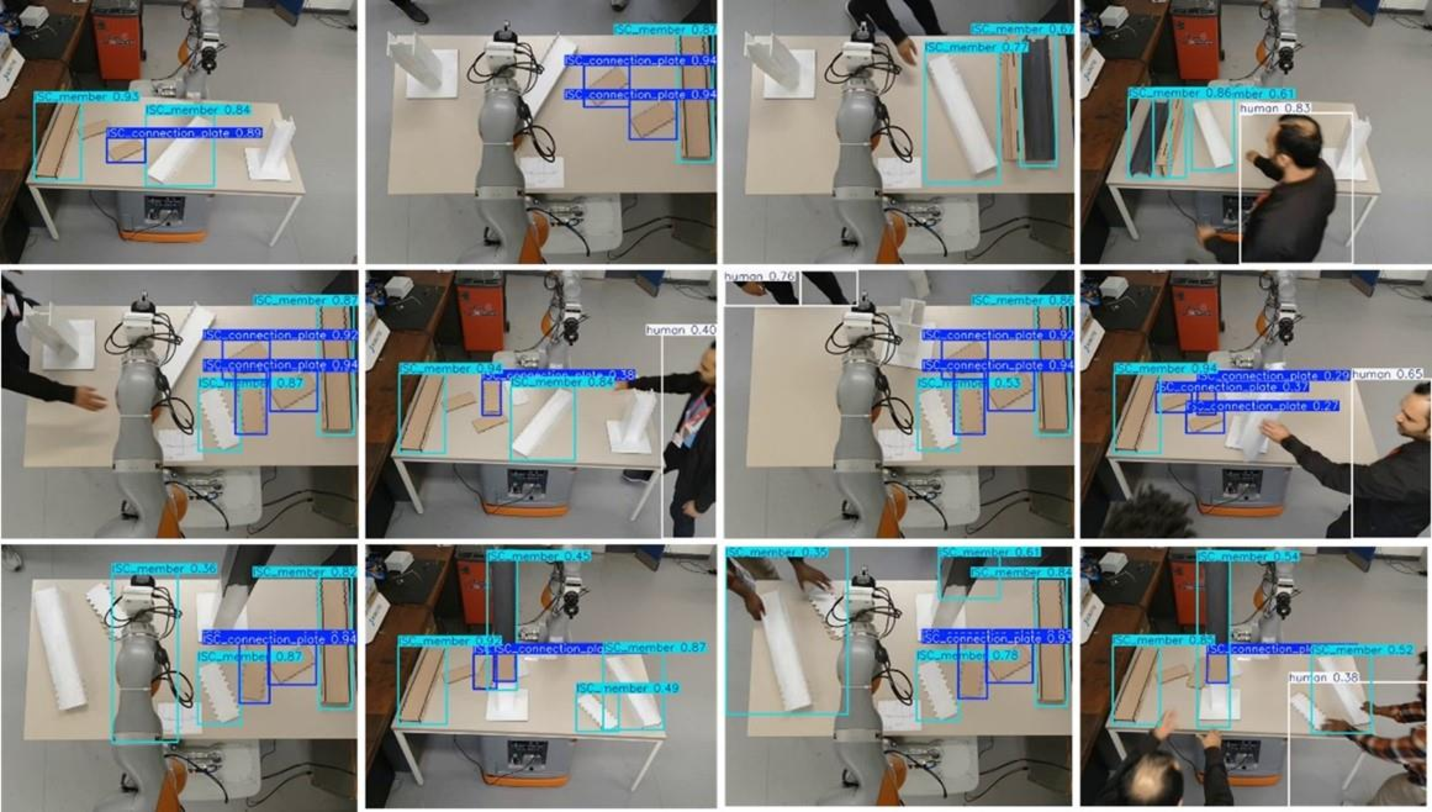

Real-World Bench Testing

The trained hybrid model was deployed in a multi-camera bench-top ISC assembly experiment, enabling real-time detection and tracking of ISC components and human workers. On a 1,200-frame test, the model achieved mAP@0.5 = 0.943, mAP@[0.5:0.95] = 0.823, precision = 0.951, and recall = 0.930. Failure cases were primarily due to glare-induced appearance shifts in the frontal camera view, indicating a need for improved robustness to lighting variations.
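As a quick way to compare the bench test with the held-out test set on a single number, the sketch below derives F1 from the reported precision and recall. These F1 values are computed here from the aggregate figures and are not reported in the paper; they may differ from the peak of the F1-confidence curve in Figure 7.

```python
# F1 is the harmonic mean of precision and recall.

def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Reported precision/recall for the hybrid model.
test_set_f1 = f1_score(0.846, 0.666)  # fixed test set
bench_f1 = f1_score(0.951, 0.930)     # 1,200-frame bench test

print(f"test set F1: {test_set_f1:.3f}")
print(f"bench F1:    {bench_f1:.3f}")
```

The derived F1 is about 0.745 on the test set and about 0.940 on the bench test; the gap is driven mostly by recall (0.666 versus 0.930).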

Figure 8: Synchronized aerial- and frontal-camera views of the bench-top ISC assembly experiment, shown before (top row) and after (bottom row) YOLOv8 inference.

Figure 9: Real-time object detection and tracking performance on ISC objects, connection plates, and human workers.

Figure 10: Glare in the frontal view degrades detection performance.

Implications and Future Directions

ISC-Perception demonstrates that hybrid datasets, integrating synthetic, photorealistic, and real images, are essential for robust object detection in novel industrial domains where real data is scarce or difficult to obtain. The methodology enables rapid, scalable dataset generation with minimal manual effort, facilitating the development of custom detectors for emerging applications in construction robotics.

The strong numerical results, particularly the substantial mAP improvements and high real-world detection rates, underscore the efficacy of hybrid composition. The findings contradict the notion that synthetic or photorealistic data alone can suffice for generalization in complex, real-world tasks. The approach is extensible to other domains with similar data constraints, such as nuclear decommissioning, tunnel inspection, or remote industrial sites.

Future work should focus on automating annotation for photorealistic images, enhancing simulation realism (lighting, material properties), and improving robustness to challenging environmental conditions (e.g., glare, occlusion). Integrating domain randomization with advanced rendering techniques should further reduce the sim-to-real gap. Additionally, expanding the dataset to include more object classes and scene types will support broader automation workflows in construction and manufacturing.

Conclusion

ISC-Perception provides a scalable, efficient methodology for generating hybrid computer vision datasets tailored to novel industrial applications. The demonstrated improvements in detection accuracy and generalization validate the hybrid approach as a practical solution for data-scarce domains. The dataset and procedural framework lay the groundwork for future research in autonomous robotic assembly, safety monitoring, and industrial automation, with direct applicability to other sectors facing similar data acquisition challenges.