- The paper introduces Chunk-GRPO, a chunk-level optimization framework that enhances reward propagation and corrects inaccurate advantage attribution in flow-matching text-to-image generation.

- It employs a temporal-dynamic-guided chunking strategy and an optional weighted sampling mechanism to balance preference alignment improvements with image stability.

- Experimental results demonstrate up to a 23% improvement in preference alignment over baseline methods, highlighting the practical benefits of this approach.

Chunk-Level GRPO for Flow-Matching-Based Text-to-Image Generation

Introduction

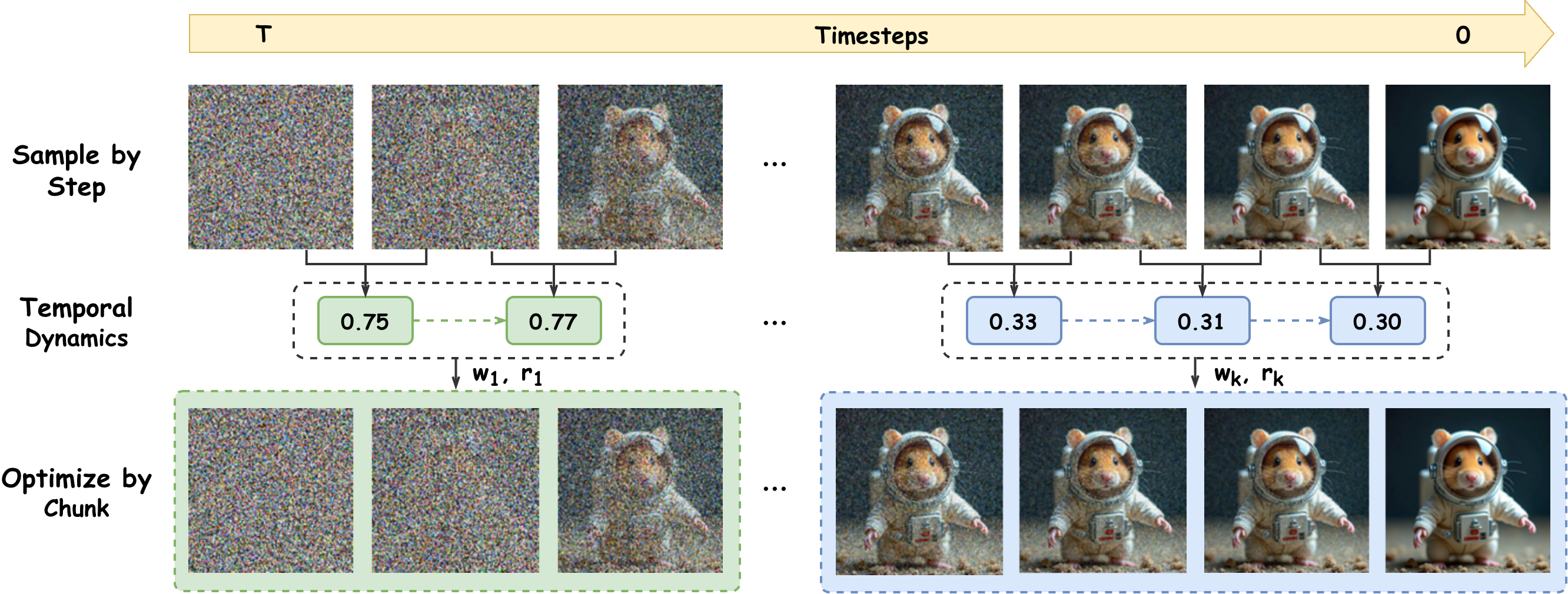

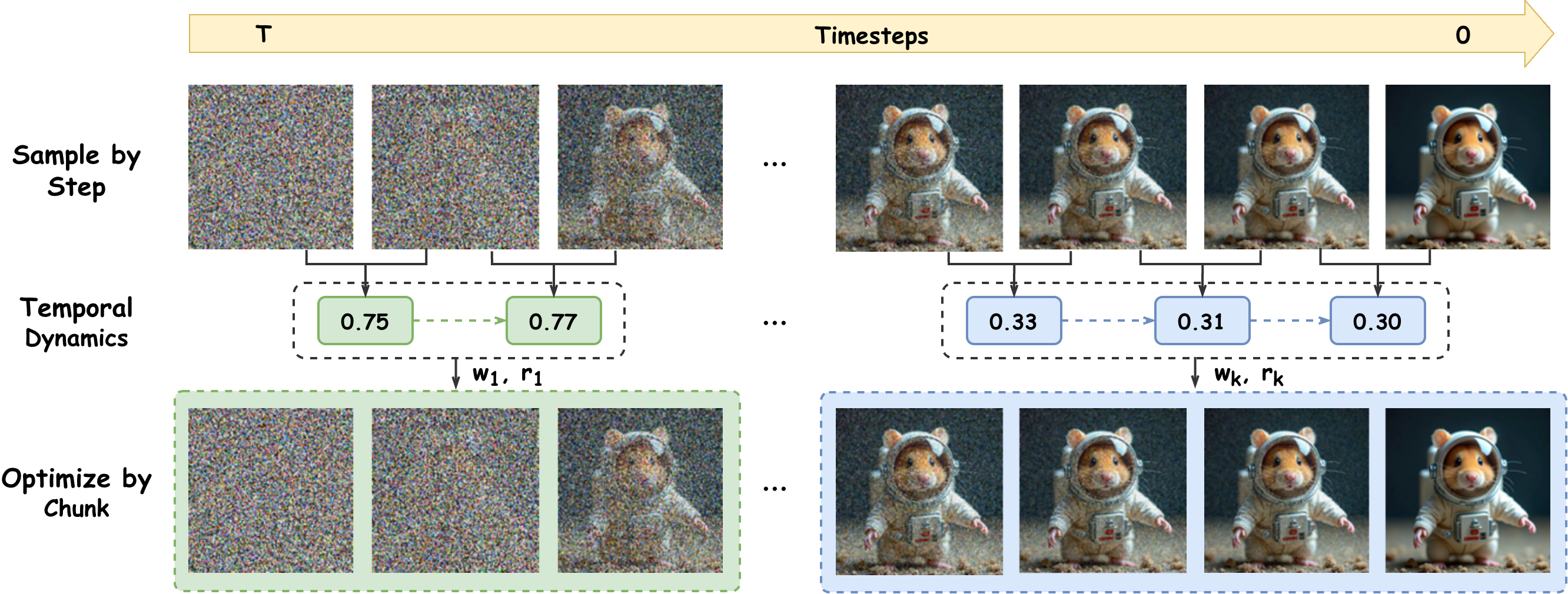

The paper introduces Chunk-GRPO, a novel chunk-level Group Relative Policy Optimization (GRPO) paradigm for flow-matching-based text-to-image (T2I) generation. The motivation stems from two critical limitations in standard step-level GRPO: inaccurate advantage attribution and the neglect of temporal dynamics inherent in the generation process. By grouping consecutive timesteps into coherent chunks and optimizing policies at the chunk level, Chunk-GRPO aims to more accurately propagate reward signals and leverage the temporal structure of flow matching. The method further incorporates a temporal-dynamic-guided chunking strategy and an optional weighted sampling mechanism to enhance optimization efficacy.

Background and Motivation

GRPO has emerged as a competitive RL-based approach for preference alignment in T2I generation, particularly in flow-matching models. Standard GRPO samples groups of images per prompt, evaluates them with reward models, and assigns group-relative advantages uniformly across all timesteps. This uniform assignment, however, leads to two main issues:

- Inaccurate Advantage Attribution: The same advantage is assigned to all timesteps, regardless of their actual contribution to the final reward, resulting in suboptimal policy updates.

- Neglect of Temporal Dynamics: Flow matching exhibits prompt-invariant but timestep-dependent latent dynamics, with different timesteps contributing unequally to image quality.

The paper draws inspiration from action chunking in RL and robotics, where sequences of actions are optimized jointly to mitigate compounding errors and stabilize long-horizon predictions. Analogously, chunk-level optimization in T2I generation can better capture the temporal correlations and propagate reward signals more effectively.

Methodology

Chunk-Level Optimization

Chunk-GRPO reformulates the GRPO objective by grouping consecutive timesteps into chunks and optimizing each chunk as a unit. The chunk-level importance ratio is computed over the joint likelihood of transitions within the chunk, and the policy is updated by maximizing the chunk-level GRPO objective. This approach smooths the gradient signal and mitigates the inaccuracies of step-level advantage attribution, especially when chunk sizes are small.

Temporal-Dynamic-Guided Chunking

Empirical analysis of the relative L1 distance between intermediate latents reveals prompt-invariant, timestep-dependent patterns in flow matching. Timesteps with similar latent dynamics are grouped into the same chunk, while those with distinct dynamics are separated. This segmentation aligns the optimization process with the intrinsic temporal structure of flow matching, leading to more meaningful chunk boundaries.

Figure 1: The overall framework of Chunk-GRPO, integrating chunk-level optimization with temporal-dynamic-guided chunking and optional weighted sampling.

Weighted Sampling Strategy

To further enhance optimization, an optional weighted sampling strategy is introduced. Chunks are sampled with probabilities proportional to their average relative L1 distance, biasing updates toward high-noise regions where latent changes are more pronounced. While this accelerates preference alignment, it can destabilize image structure in high-noise regions, occasionally leading to semantic collapse.

Experimental Results

Chunk-GRPO is evaluated on multiple benchmarks, including preference alignment (HPSv3, ImageReward, Pick Score) and standard T2I metrics (WISE, GenEval). The method consistently outperforms both the base model (FLUX) and Dance-GRPO across all metrics.

Figure 2: Chunk-GRPO significantly improves image quality, particularly in structure, lighting, and fine-grained details, demonstrating the superiority of chunk-level optimization.

Chunk-GRPO achieves up to 23% improvement in preference alignment over the baseline. Temporal-dynamic-guided chunking outperforms fixed-size chunking, underscoring the importance of aligning chunk boundaries with latent dynamics. The weighted sampling strategy further boosts preference alignment but can reduce performance on semantic benchmarks such as WISE and GenEval, highlighting a trade-off between preference optimization and structural stability.

Figure 3: Visualization comparison between FLUX, DanceGRPO, Chunk-GRPO variants, and Chunk-GRPO with weighted sampling.

Figure 4: Additional qualitative comparison of image outputs across methods and chunking strategies.

Ablation studies confirm the robustness of Chunk-GRPO across different reward models and chunk configurations. Training on specific chunks reveals that high-noise chunks yield larger improvements but suffer from instability, motivating the adaptive weighted sampling approach.

Figure 5: A failure case of the weighted sampling strategy, where image structure is destabilized in high-noise regions.

Theoretical Analysis

Mathematical analysis demonstrates that chunk-level optimization yields a smoothed version of the step-level GRPO objective, with gradients closer to the ground-truth reward signal, especially for small chunk sizes. The chunk-level approach is provably superior when a significant fraction of timesteps suffer from inaccurate advantage attribution.

Implementation Considerations

- Chunk Configuration: Optimal chunk boundaries are determined by analyzing the relative L1 distance curve. The default configuration segments 17 timesteps into four chunks: [2, 3, 4, 7].

- Resource Requirements: Training is conducted on 8 Nvidia H800 GPUs, with batch sizes and learning rates tuned for stability.

- Evaluation Protocol: Hybrid inference is used during evaluation, sampling initial steps with the trained model and later steps with the base model to mitigate reward hacking.

- Scalability: The chunk-level approach is compatible with existing flow-matching architectures and can be extended to larger models and datasets.

Implications and Future Directions

Chunk-GRPO advances the granularity of RL-based optimization in T2I generation, demonstrating that chunk-level policy updates aligned with temporal dynamics yield superior image quality and preference alignment. The method is robust across reward models and generalizes beyond preference alignment to semantic instruction-following tasks.

Future research directions include:

- Adaptive Chunking: Developing self-adaptive chunk segmentation strategies that respond to training signals and model dynamics.

- Heterogeneous Rewards: Exploring the use of different reward models for distinct chunks, particularly for high- vs. low-noise regions.

- Integration with Other RL Paradigms: Extending chunk-level optimization to other RL-based generative modeling frameworks.

Conclusion

Chunk-GRPO introduces a principled chunk-level optimization paradigm for flow-matching-based T2I generation, leveraging temporal-dynamic-guided chunking and optional weighted sampling. The approach consistently improves image quality and preference alignment over step-level GRPO, with strong empirical and theoretical support. While the weighted sampling strategy offers further gains, it introduces trade-offs in structural stability, motivating future work on adaptive and heterogeneous chunking strategies. Chunk-GRPO represents a significant step toward more granular and temporally-aware RL optimization in generative modeling.