- The paper shows that interactive explanations significantly boost user trust compared to basic or no explanations in AI decision-making.

- It employs a controlled, web-based simulation with varied explanation modalities and validated Likert-scale instruments to measure trust and explainability.

- Findings emphasize the need for adaptive explanation designs that balance informational clarity with cognitive load, tailored to user expertise.

Quantitative Analysis of Explainability and Trust in AI Systems

Introduction

The paper "Preliminary Quantitative Study on Explainability and Trust in AI Systems" (2510.15769) presents a controlled experimental investigation into the relationship between explanation modalities and user trust in AI-driven decision-making. The paper is motivated by the increasing deployment of opaque AI models in high-stakes domains, where user trust and transparency are critical for adoption and oversight. The authors address a gap in the literature by providing quantitative evidence on how different explanation types—ranging from feature importance to interactive counterfactuals—affect user trust, perceived reliability, and explainability.

Experimental Design and Methodology

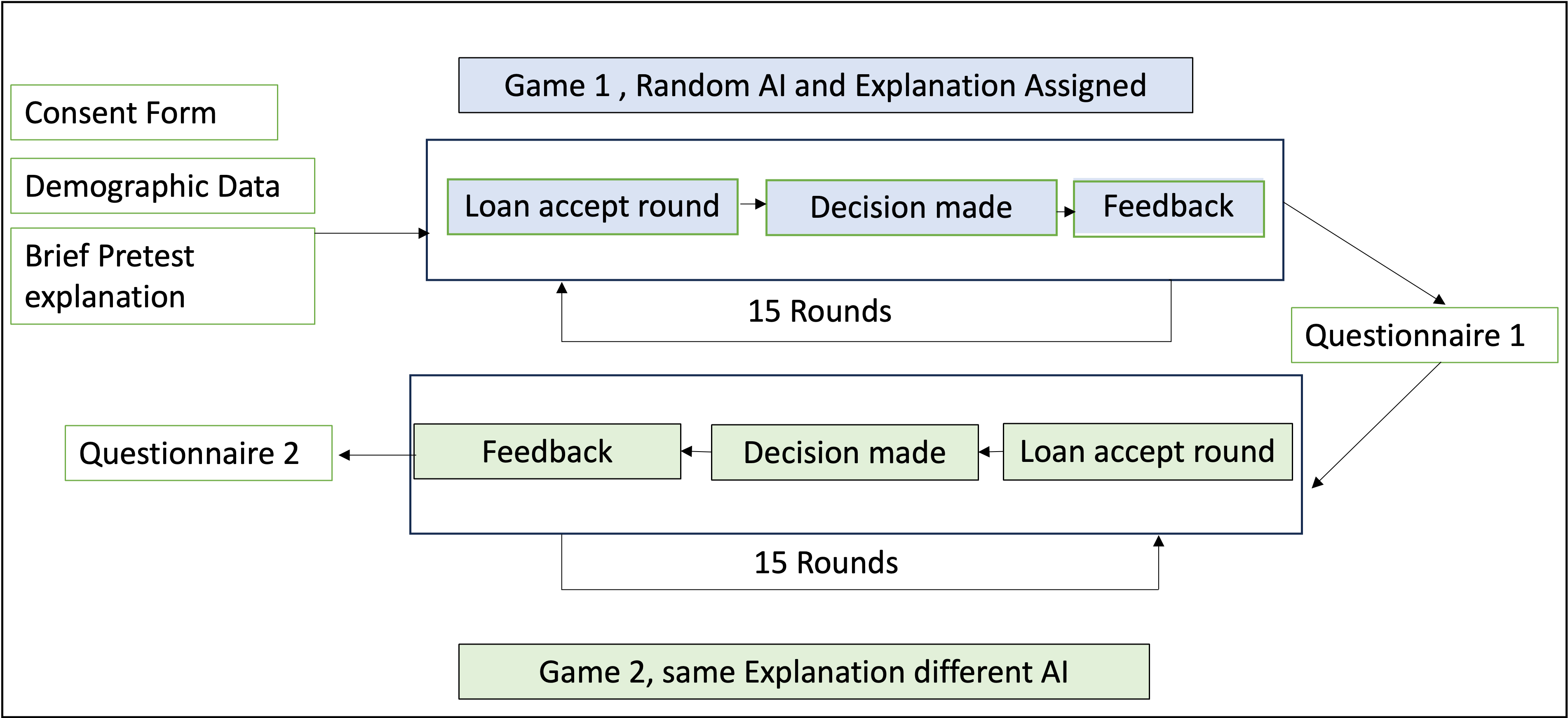

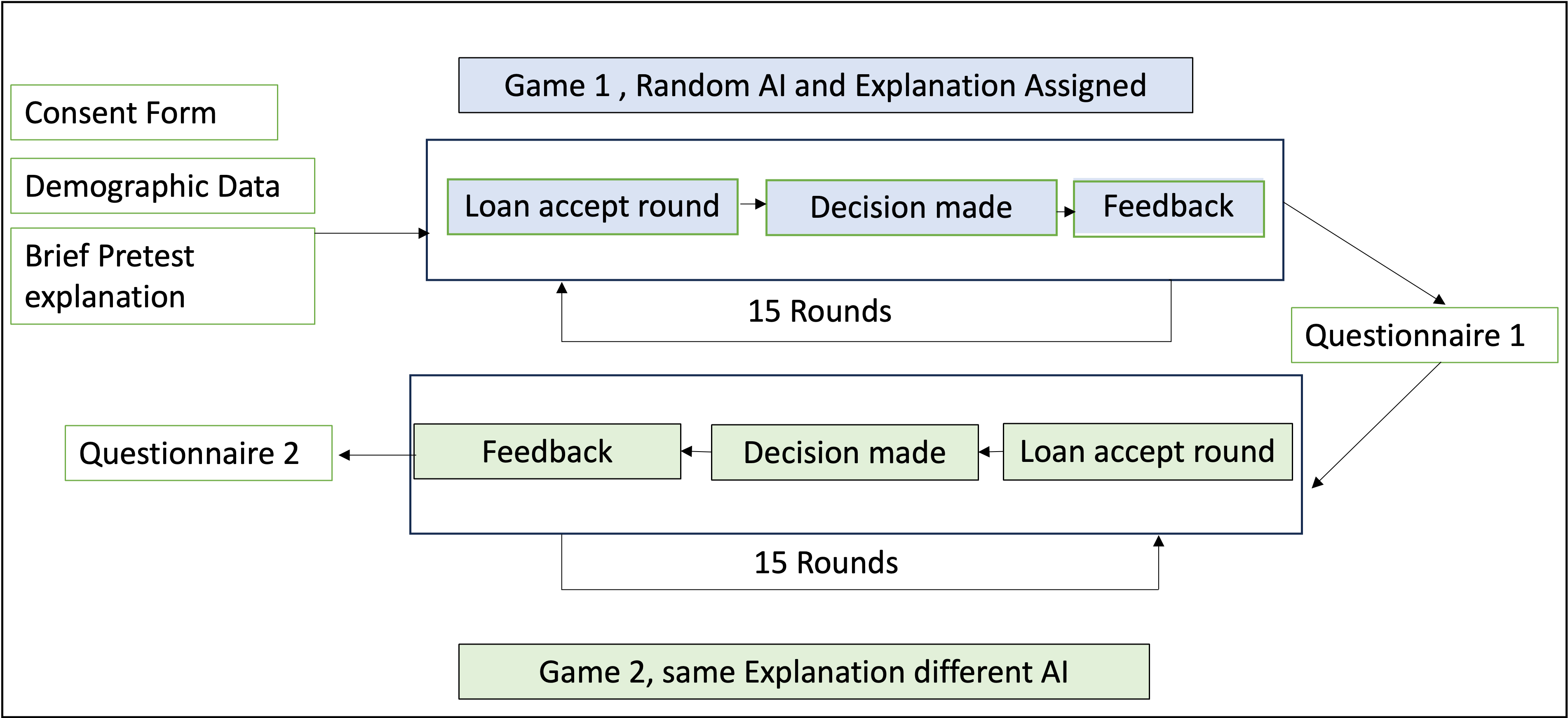

The paper employs a web-based loan approval simulation in which participants interact with two AI models: a high-accuracy CatBoost classifier (Good AI) and a degraded model trained on randomized targets (Bad AI). Explanations are manipulated across four conditions: none, basic (feature importance), contextual (detailed), and interactive (query-based counterfactuals). The factorial design crosses system type with explanation condition and accounts for age and AI literacy, enabling analysis of both main and interaction effects.

Figure 1: Overview of paper setup and participant flow.
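The two system types and four explanation conditions form a 2 × 4 grid of design cells. The Python sketch below enumerates that grid and illustrates one common way to build a degraded model like the Bad AI: shuffling the training labels so that the targets no longer depend on the features. The label-shuffling approach, the fixed seed, and the names `SYSTEMS`, `CONDITIONS`, and `randomize_targets` are illustrative assumptions rather than the paper's documented procedure, and the CatBoost training step is left out.

```python
import itertools
import random

# Factor levels described in the text (identifier names are hypothetical).
SYSTEMS = ["good_ai", "bad_ai"]
CONDITIONS = ["none", "basic", "contextual", "interactive"]


def design_cells():
    """Return every (system, explanation condition) cell of the 2 x 4 design."""
    return list(itertools.product(SYSTEMS, CONDITIONS))


def randomize_targets(labels, seed=0):
    """Return a shuffled copy of the labels for training a degraded model.

    Assumption: the Bad AI is approximated by label shuffling; the paper's
    exact procedure may differ. Shuffling preserves the class balance but
    breaks the link between features and outcome.
    """
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    return shuffled


if __name__ == "__main__":
    cells = design_cells()
    print(len(cells), cells[0])  # 8 ('good_ai', 'none')
    approved = [1, 0, 1, 1, 0, 0, 1, 0]
    print(randomize_targets(approved, seed=42))
```

A model fit on the shuffled targets keeps the original class balance but can only learn spurious patterns, which is what makes it a useful low-reliability contrast.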

Participants (N=15) are stratified by age and AI familiarity to cover a range of cognitive and experiential backgrounds. Trust and explainability are measured using validated Likert-scale instruments adapted from the literature, with items targeting confidence, predictability, reliability, and perceived explanation quality.
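Stratifying by age and AI familiarity amounts to grouping participants under a composite key and checking how many fall into each stratum. A minimal sketch, assuming hypothetical age cut-points (under 30, 30 to 49, 50 and over) and a categorical familiarity field; the summary does not give the paper's actual bands or familiarity levels:

```python
from collections import Counter


def age_band(age):
    """Map an age to a coarse band (hypothetical cut-points)."""
    if age < 30:
        return "18-29"
    if age < 50:
        return "30-49"
    return "50+"


def stratum(participant):
    """Composite stratum key: (age band, AI familiarity level)."""
    return (age_band(participant["age"]), participant["ai_familiarity"])


def stratum_counts(participants):
    """Count participants in each (age band, familiarity) stratum."""
    return Counter(stratum(p) for p in participants)


if __name__ == "__main__":
    sample = [
        {"age": 22, "ai_familiarity": "novice"},
        {"age": 45, "ai_familiarity": "expert"},
        {"age": 61, "ai_familiarity": "novice"},
        {"age": 27, "ai_familiarity": "novice"},
    ]
    print(stratum_counts(sample))
```

With only 15 participants, a per-stratum count makes it easy to spot strata with one or zero members, which limits any subgroup comparison.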

Measurement Instruments

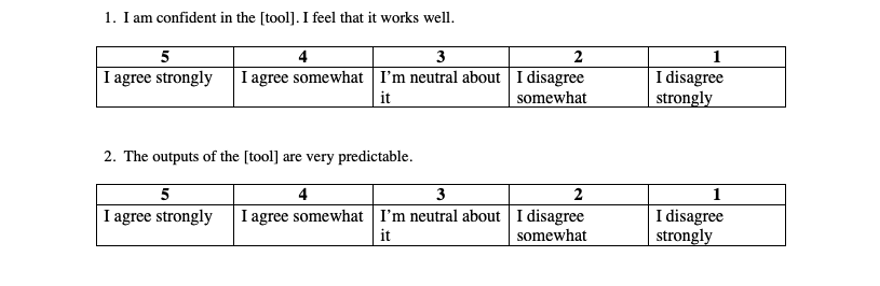

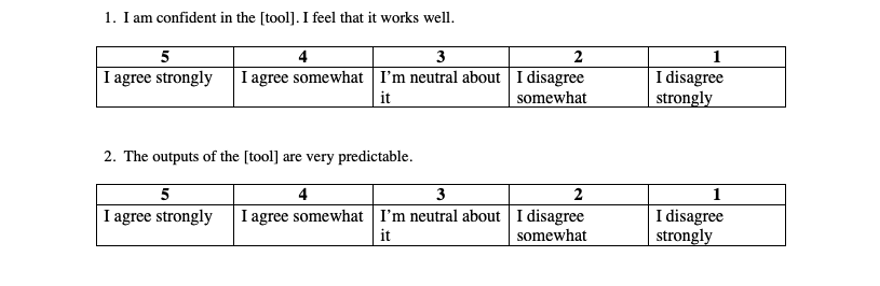

The trust and explainability scales are central to the paper's quantitative rigor. The trust scale, adapted from Hoffman et al. (2021) and Cahour & Forzy (2009), captures multiple dimensions of trust, including confidence, reliability, and efficiency.

Figure 2: Full Trust Scale used in the paper, presented across two sections for readability.
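Multi-item Likert scales such as this one are usually scored by reverse-coding negatively worded items and then averaging across items. The sketch below assumes a 5-point response format and uses hypothetical item keys; the summary does not say which items, if any, the paper reverse-codes or how it aggregates them.

```python
from statistics import mean

SCALE_MAX = 5  # assumed 5-point response format (1 = strongly disagree)

# Hypothetical item keys; True marks a negatively worded (reverse-coded) item.
TRUST_ITEMS = {
    "confident_in_system": False,
    "system_is_predictable": False,
    "system_is_reliable": False,
    "wary_of_system": True,
}


def score_item(response, reverse):
    """Reverse-code on a 1..SCALE_MAX scale: 1 <-> 5, 2 <-> 4, 3 stays 3."""
    if not 1 <= response <= SCALE_MAX:
        raise ValueError(f"response {response} outside 1..{SCALE_MAX}")
    return (SCALE_MAX + 1 - response) if reverse else response


def trust_score(responses):
    """Mean item score for one participant after reverse-coding."""
    return mean(score_item(responses[item], rev) for item, rev in TRUST_ITEMS.items())


if __name__ == "__main__":
    answers = {
        "confident_in_system": 4,
        "system_is_predictable": 5,
        "system_is_reliable": 4,
        "wary_of_system": 2,
    }
    print(trust_score(answers))  # 4.25
```

Reverse-coding before averaging keeps a high score meaning "more trust" on every item, so a single mean is interpretable.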

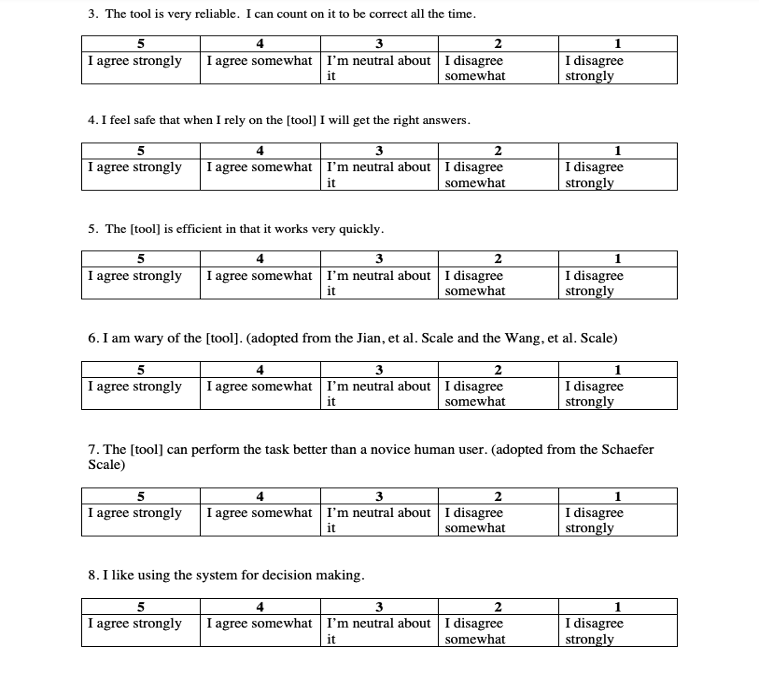

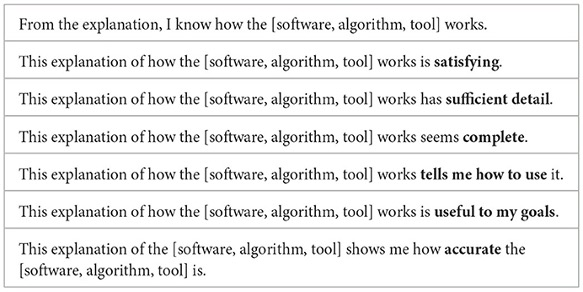

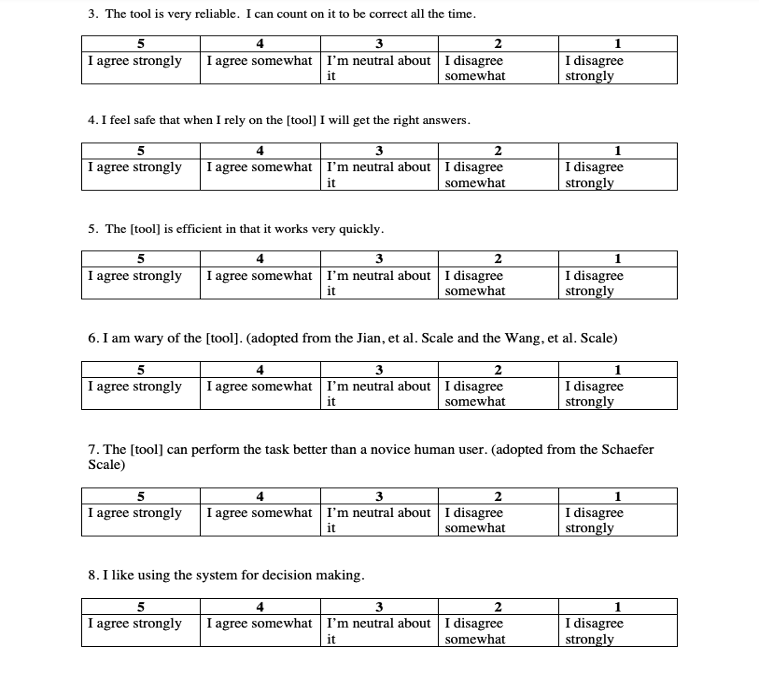

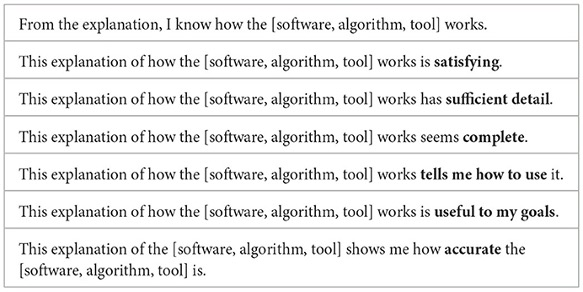

The explainability scale, based on the Co-12 framework, assesses perceived correctness, completeness, coherence, and contextual utility of AI explanations.

Figure 3: Explainability Scale used in the paper. Items assess perceived correctness, completeness, coherence, and contextual utility of AI explanations.

These instruments enable fine-grained analysis of how explanation modality influences user attitudes and understanding.
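Fine-grained analysis of this kind typically means averaging items within each dimension and then comparing those subscale means across explanation conditions. A minimal sketch, assuming a hypothetical item-to-dimension mapping over the four dimensions named above (correctness, completeness, coherence, context); the paper's actual item wording and grouping are not reproduced here:

```python
from statistics import mean

# Hypothetical item keys mapped to the dimensions named in the text.
ITEM_DIMENSIONS = {
    "expl_accurate": "correctness",
    "expl_matches_decision": "correctness",
    "expl_covers_key_factors": "completeness",
    "expl_is_internally_consistent": "coherence",
    "expl_useful_for_my_decision": "context",
}


def subscale_means(responses):
    """Average one participant's item responses within each dimension."""
    buckets = {}
    for item, dimension in ITEM_DIMENSIONS.items():
        buckets.setdefault(dimension, []).append(responses[item])
    return {dim: mean(vals) for dim, vals in buckets.items()}


def condition_means(rows, dimension):
    """Mean subscale score per explanation condition.

    rows: iterable of (condition, responses) pairs, one per participant rating.
    """
    by_condition = {}
    for condition, responses in rows:
        score = subscale_means(responses)[dimension]
        by_condition.setdefault(condition, []).append(score)
    return {cond: mean(scores) for cond, scores in by_condition.items()}
```

Keeping dimensions separate lets an analysis detect, for instance, a modality that improves perceived completeness without improving coherence.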

Results

Trust Outcomes

An ANOVA reveals significant differences in trust ratings across explanation conditions (p < 0.05). Interactive explanations yield the highest mean trust (M=4.22, SD=0.61), followed by contextual (M=3.87), basic (M=3.51), and no explanation (M=2.98). Interactive explanations also produce the lowest distrust and the highest confidence in the system's reliability, particularly with the Good AI. Novice users are most sensitive to the presence and quality of explanations, while experts show more calibrated trust that is less influenced by explanation style.
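The summary reports an ANOVA but not its exact form (one-way, factorial, or repeated-measures). For intuition, the sketch below computes the F statistic for the simplest case, a one-way between-groups ANOVA in which each group holds trust ratings from one explanation condition; the example ratings are toy numbers, not the paper's data. A p-value would come from the F distribution, for example via `scipy.stats.f.sf(F, df_between, df_within)`, and `scipy.stats.f_oneway` performs the whole test. If each participant rated several conditions, a repeated-measures model would be the more appropriate choice.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA.

    groups: list of lists of ratings, one list per explanation condition.
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more ratings than groups")
    grand = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    # Within-groups sum of squares: spread of ratings around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Toy ratings for three hypothetical conditions (not the paper's data).
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```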

Explainability Ratings

Explainability ratings parallel trust outcomes. Interactivity enhances satisfaction and perceived detail, but can increase cognitive load if not carefully managed. Participants express a preference for concise, actionable explanations over verbose or overly technical ones. The data indicate that explanation clarity and relevance are more influential than sheer informational content.

Discussion

The findings provide empirical support for the hypothesis that explanation interactivity is a key determinant of user trust in AI systems. Allowing users to query models and explore counterfactuals fosters engagement, agency, and more accurate trust calibration. This aligns with prior qualitative work suggesting that explanation should be conceived as an interactive dialogue rather than a static disclosure.
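An interactive counterfactual query answers a question such as "what would have to change for this application to be approved?". The sketch below assumes a hypothetical linear approval rule and searches upward over a single feature (income) for the smallest value that flips a rejection. It is a toy illustration of the idea, not the paper's interface; practical counterfactual generators typically search over several features at once under distance and plausibility constraints.

```python
def approve(income, debt):
    """Hypothetical loan rule (units: thousands): approve if income - 2 * debt >= 50."""
    return income - 2 * debt >= 50


def income_counterfactual(income, debt, step=1, max_income=1000):
    """Smallest income, searched upward in `step` increments, that yields approval.

    Returns the current income if the application is already approved, or None
    if no income up to `max_income` changes the decision.
    """
    candidate = income
    while candidate <= max_income:
        if approve(candidate, debt):
            return candidate
        candidate += step
    return None


if __name__ == "__main__":
    # "What income would have been approved, holding debt at 5?"
    print(income_counterfactual(40, 5))  # 60
```

Because the user can repeat such queries with different inputs, the explanation becomes an exchange rather than a single static disclosure.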

A salient observation is the trade-off between informativeness and cognitive load. While detailed explanations are valued for transparency, excessive complexity can induce fatigue and reduce clarity. The optimal explanation is thus context-dependent, balancing fidelity with cognitive ergonomics.

The paper also highlights the moral dimension of trust: users equate understandable decisions with fairness, and are more accepting of errors when they can interrogate or challenge the system. This underscores the importance of participatory and transparent AI design, especially in domains where perceived legitimacy is as critical as technical accuracy.

Expertise modulates explanation preferences. Experts value technical detail and reliability indicators, while novices prefer narrative or example-based rationales. This heterogeneity motivates the development of adaptive XAI systems that tailor explanation depth and modality to user profiles.
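One way to read "tailoring explanation depth and modality to user profiles" is as a small policy that maps a profile to an explanation format. The sketch below is a hypothetical illustration: the two expertise levels, the `max_items` cap used as a crude proxy for cognitive load, and the narrative template are all assumptions, and the feature contributions are taken as given from whatever attribution method the system uses.

```python
from dataclasses import dataclass


@dataclass
class UserProfile:
    expertise: str      # hypothetical levels: "novice" or "expert"
    max_items: int = 5  # assumed cap on explanation length (proxy for cognitive load)


def choose_explanation(profile, contributions):
    """Select modality and depth for one decision (illustrative policy only).

    contributions: dict mapping feature name -> signed contribution score.
    """
    # Rank features by the magnitude of their contribution.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    top = ranked[: profile.max_items]
    if profile.expertise == "expert":
        # Experts: technical detail as a list of signed contributions.
        return {"modality": "feature_importance", "content": top}
    # Novices: a short narrative naming only the two strongest factors.
    names = [name for name, _ in top[:2]]
    sentence = "The decision was driven mainly by " + " and ".join(names) + "."
    return {"modality": "narrative", "content": sentence}


if __name__ == "__main__":
    scores = {"income": 0.6, "debt_ratio": -0.8, "age": 0.1}
    print(choose_explanation(UserProfile("novice"), scores)["content"])
    # The decision was driven mainly by debt_ratio and income.
```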

Implications and Future Directions

The paper's quantitative approach advances the field of human-centered XAI by providing measurable links between explanation design and user trust. The results suggest that interactive counterfactual explanations, which align with human causal reasoning, are particularly effective for trust-building. However, explanation design must account for user expertise, cognitive capacity, and contextual relevance.

Methodologically, the gamified, ecologically valid simulation demonstrates the feasibility of controlled trust evaluation in realistic settings. Nevertheless, the small sample (N=15) and reliance on self-reported measures limit generalizability. Future work should incorporate behavioral and physiological trust indicators, expand demographic diversity, and explore multimodal and longitudinal explanation strategies.

The integration of adaptive, user-profiled explanation interfaces and participatory design processes is a promising direction for responsible AI deployment. Additionally, expanding evaluation metrics to include fairness, accountability, and satisfaction will yield a more comprehensive understanding of explainability's role in trustworthy AI.

Conclusion

This paper provides quantitative evidence that interactive and contextual explanations significantly enhance user trust and perceived explainability in AI systems. The results emphasize that transparency alone is insufficient; user engagement, comprehension, and perceived fairness are critical for trustworthy AI. The findings motivate the development of adaptive, human-centered explanation frameworks and call for cross-disciplinary research integrating HCI, cognitive science, and AI governance. Ultimately, explanation should be treated as an ongoing dialogue, empowering users and reinforcing the legitimacy of human oversight in algorithmic decision-making.