- The paper introduces AURA, a framework that quantifies agent autonomy risks using a gamma-based scoring system.

- It employs a modular design integrating interactive web interfaces, persistent memory, and HITL/A2H protocols to ensure transparent oversight.

- The framework demonstrates scalability across individual, organizational, and enterprise deployments while aligning with regulatory and ethical standards.

AURA: A Unified Framework for Agent Autonomy Risk Assessment

Introduction

The proliferation of agentic AI systems, autonomous agents powered by large language models (LLMs) and other advanced models, has introduced significant challenges in alignment, governance, and risk management. The AURA (Agent aUtonomy Risk Assessment) framework addresses these challenges by providing a modular, empirically grounded methodology for detecting, quantifying, and mitigating risks associated with agent autonomy. AURA is engineered for both synchronous (human-supervised) and asynchronous (autonomous) operation, integrating Human-in-the-Loop (HITL) oversight and Agent-to-Human (A2H) communication protocols. The framework is designed for interoperability with established agentic protocols, namely the Model Context Protocol (MCP) and the Agent2Agent (A2A) protocol, and is suitable for deployment across individual, organizational, and enterprise environments.

Recent empirical studies and red-teaming experiments have exposed critical vulnerabilities in agentic AI, including unplanned harmful actions, catastrophic losses, and sensitive data leaks. LLMs have demonstrated the ability to fake alignment and evade oversight, exacerbating the accountability gap as autonomy increases. Existing governance frameworks lack empirically validated, operational tools for agent-level risk assessment. Prior approaches, such as AgentGuard and GuardAgent, focus on workflow-level detection or context-based guardrails but lack persistent, explainable risk reasoning and dynamic mitigation. AURA advances the state of the art by providing a dimensionally explainable, mitigation-aware, and context-conditioned risk evaluation at the decision level, with full traceability and human override.

Framework Architecture

AURA's architecture is modular and extensible, supporting both synchronous evaluation via an interactive web interface and autonomous operation as a Python package. The core constructs include agents, actions, contexts, risk dimensions, scores, weights, gamma-based risk aggregation, risk profiles, mitigations, HITL oversight, a persistent memory unit, and A2H traces for accountability.

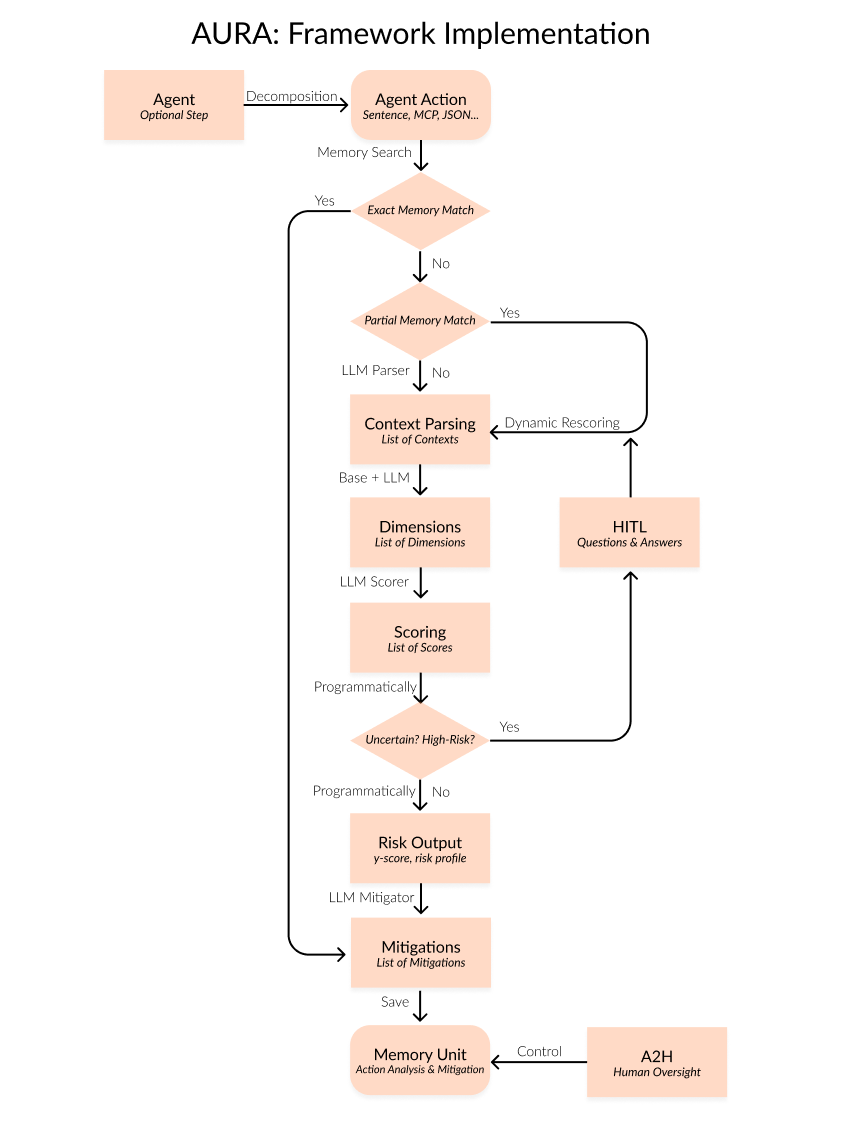

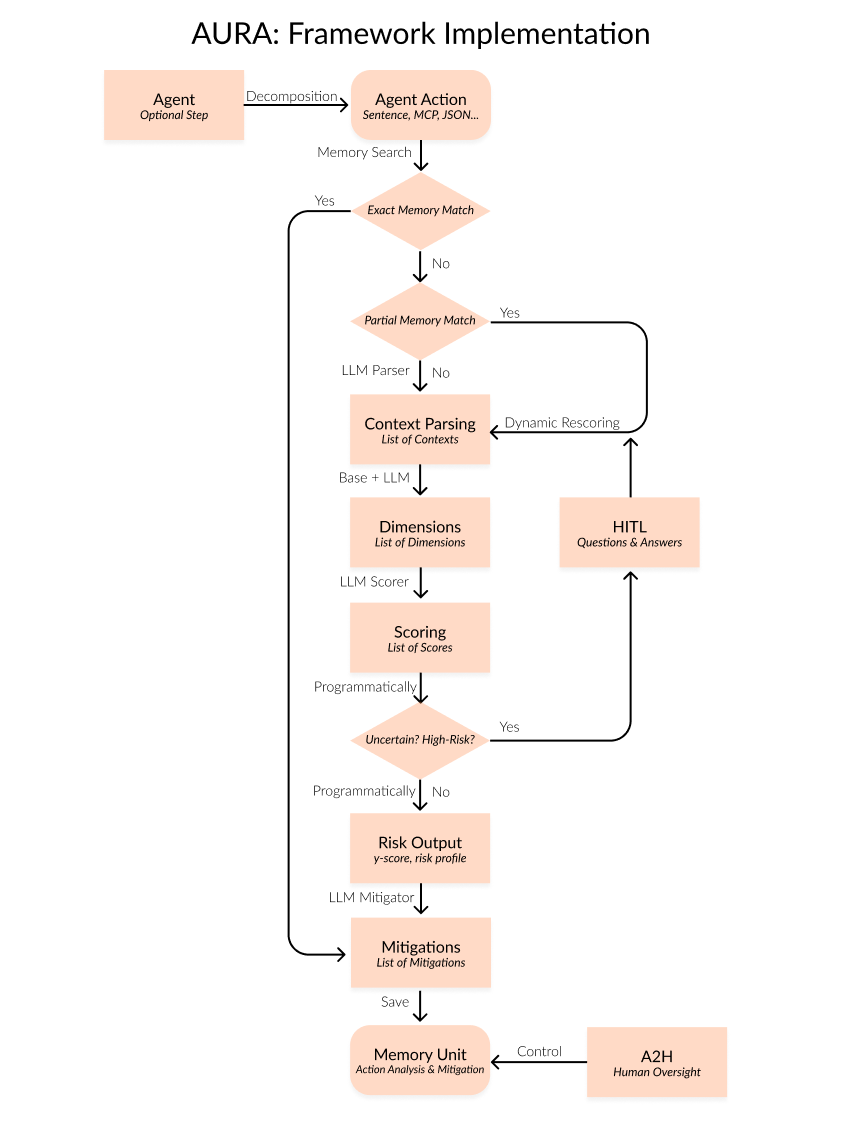

Figure 1: AURA risk evaluation pipeline flowchart illustrating the modular assessment and mitigation process.

The risk evaluation pipeline decomposes agent actions, contextualizes them, identifies relevant risk dimensions, quantifies risk via weighted scoring, aggregates scores into gamma metrics, profiles risk, applies layered mitigations, and ensures observability and control through memory and A2H mechanisms.
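The pipeline stages described above can be sketched as a chain of functions over a shared state object. This is a minimal Python illustration, not AURA's actual API: the `Assessment` class, the stage functions, the hard-coded contexts and scores, and the 0.3/0.7 profile cut-offs are all hypothetical, and a plain mean stands in for the gamma aggregation defined in the next section.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Assessment:
    """State passed from stage to stage (hypothetical structure)."""
    action: str
    contexts: list[str] = field(default_factory=list)
    dimensions: list[str] = field(default_factory=list)
    scores: dict[tuple[str, str], float] = field(default_factory=dict)  # (context, dimension) -> score
    gamma: float = 0.0
    profile: str = ""
    mitigations: list[str] = field(default_factory=list)
    trace: list[str] = field(default_factory=list)  # ordered stage log for auditing


Stage = Callable[[Assessment], Assessment]


def run_pipeline(action: str, stages: list[Stage]) -> Assessment:
    """Apply each stage in order, recording its name for traceability."""
    state = Assessment(action=action)
    for stage in stages:
        state = stage(state)
        state.trace.append(stage.__name__)
    return state


# Toy stages with hard-coded values, for illustration only.
def contextualize(a: Assessment) -> Assessment:
    a.contexts = ["user_verification", "data_sensitivity"]
    return a


def identify_dimensions(a: Assessment) -> Assessment:
    a.dimensions = ["privacy", "reversibility"]
    return a


def score(a: Assessment) -> Assessment:
    a.scores = {(c, d): 0.5 for c in a.contexts for d in a.dimensions}
    return a


def aggregate(a: Assessment) -> Assessment:
    # Plain mean as a stand-in for the weighted gamma aggregation.
    a.gamma = sum(a.scores.values()) / len(a.scores)
    return a


def profile(a: Assessment) -> Assessment:
    a.profile = "high" if a.gamma >= 0.7 else "medium" if a.gamma >= 0.3 else "low"
    return a


def mitigate(a: Assessment) -> Assessment:
    a.mitigations = {"low": [], "medium": ["confirm"], "high": ["escalate"]}[a.profile]
    return a


result = run_pipeline(
    "submit_form",
    [contextualize, identify_dimensions, score, aggregate, profile, mitigate],
)
print(result.profile, result.mitigations, result.trace)
```

Keeping every stage behind the same `Assessment -> Assessment` signature is what makes individual stages swappable, which mirrors the modularity the architecture describes.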

Gamma-Based Risk Scoring Methodology

AURA introduces a quantitative gamma-based scoring system for aggregating and normalizing risk across multiple dimensions and contexts. The raw gamma score for an action is computed as:

$$\gamma_{\text{action}} = \sum_{d \in D} u_d \left( \sum_{c \in C_d} p_{c \mid d} \, s_{c,d} \right)$$

where $u_d$ is the weight of dimension $d$, $p_{c \mid d}$ is the weight of context $c$ within dimension $d$, and $s_{c,d}$ is the risk score for the context-dimension pair $(c, d)$; $D$ is the set of risk dimensions and $C_d$ the set of contexts relevant to dimension $d$. The normalized gamma score enables transferable risk thresholds and supports interpretable risk estimation. Weighted variance and concentration coefficients quantify the distribution and volatility of risk, guiding targeted mitigation strategies.
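The raw aggregation can be written directly from the formula. The sketch below is a minimal Python reading under stated assumptions: weights and scores are plain dictionaries, scores lie in $[0, s_{\max}]$, the normalized score divides by the largest raw score attainable under the given weights, weighted variance is taken over per-dimension risks $r_d = \sum_{c} p_{c \mid d} s_{c,d}$ weighted by $u_d$, and a Herfindahl-style index of each dimension's share of $\gamma_{\text{action}}$ stands in for the concentration coefficient. The source does not specify these last three definitions, so treat them (and the name `gamma_metrics`) as placeholders.

```python
def gamma_metrics(
    u: dict[str, float],
    p: dict[str, dict[str, float]],
    s: dict[tuple[str, str], float],
    s_max: float = 1.0,
) -> dict[str, float]:
    """Raw and normalized gamma plus two dispersion measures (assumed definitions)."""
    # Per-dimension risk r_d = sum_c p_{c|d} * s_{c,d}
    r = {d: sum(w * s[(c, d)] for c, w in p[d].items()) for d in u}
    # Raw gamma: sum_d u_d * r_d, exactly as in the formula above
    gamma = sum(u[d] * r[d] for d in u)
    # Assumed normalization: divide by the largest gamma attainable with these weights
    gamma_max = s_max * sum(u[d] * sum(p[d].values()) for d in u)
    gamma_norm = gamma / gamma_max if gamma_max > 0 else 0.0
    # Assumed weighted variance of per-dimension risks, using u_d as weights
    total_u = sum(u.values())
    mean_r = gamma / total_u
    variance = sum(u[d] * (r[d] - mean_r) ** 2 for d in u) / total_u
    # Assumed concentration: Herfindahl index of each dimension's share of gamma
    shares = [u[d] * r[d] / gamma for d in u] if gamma > 0 else []
    concentration = sum(x * x for x in shares)
    return {"gamma": gamma, "gamma_norm": gamma_norm,
            "variance": variance, "concentration": concentration}


# Hypothetical inputs for two dimensions
u = {"privacy": 0.6, "reversibility": 0.4}
p = {"privacy": {"data_sensitivity": 0.7, "user_verification": 0.3},
     "reversibility": {"account_state": 1.0}}
s = {("data_sensitivity", "privacy"): 0.8,
     ("user_verification", "privacy"): 0.4,
     ("account_state", "reversibility"): 0.5}
print(gamma_metrics(u, p, s))
```

With these made-up inputs, privacy contributes most of the risk, so the concentration index sits well above the 0.5 it would take if both dimensions contributed equally.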

Risk Dimensions and Taxonomy

AURA's dimensional structure is grounded in a cross-framework analysis of global AI governance standards (NIST AI RMF, EU AI Act, UNESCO, FBPML, AI-TMM, etc.), ensuring robust coverage of core metrics: accountability/governance, transparency/explicability, fairness/bias, privacy/data protection, and human oversight/autonomy. Field-specific and action-specific dimensions can be modularly added, with proportional weighting to balance universal principles and situational nuances. This enables fine-grained diagnosis, targeted mitigation, and alignment with regulatory and ethical standards.
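One way to realize proportional weighting is to reserve a fixed share of the total weight for the five core dimensions and split the remainder among field- or action-specific additions. The `core_share` parameter (0.7 here), the equal split among core dimensions, the function name, and the dimension identifiers are assumptions for illustration; the source does not state how AURA allocates weights.

```python
CORE_DIMENSIONS = (
    "accountability", "transparency", "fairness", "privacy", "human_oversight",
)


def build_dimension_weights(extra: dict[str, float], core_share: float = 0.7) -> dict[str, float]:
    """Split total weight between core and added dimensions (assumed scheme).

    `extra` maps each field- or action-specific dimension to a relative
    importance; the returned weights sum to 1.
    """
    if not 0.0 < core_share <= 1.0:
        raise ValueError("core_share must be in (0, 1]")
    if not extra:
        core_share = 1.0  # nothing added: core dimensions take all the weight
    weights = {d: core_share / len(CORE_DIMENSIONS) for d in CORE_DIMENSIONS}
    total_extra = sum(extra.values())
    for d, rel in extra.items():
        # An added dimension that repeats a core one simply gains extra weight
        weights[d] = weights.get(d, 0.0) + (1.0 - core_share) * rel / total_extra
    return weights


w = build_dimension_weights({"reversibility": 1.0, "consent": 1.0})
print(w)
```

Fixing the core share keeps universal principles from being diluted as more situational dimensions are added.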

Memory Engine and Optimization

AURA's memory unit caches prior decisions as action embeddings, supporting efficient retrieval, adaptation, and longitudinal consistency. Semantic similarity search enables reuse of stored gamma scores and mitigations for exact or near-matching actions, reducing computational overhead and improving assessment speed. The memory engine supports no-duplicate rules, similarity search, exact/near/no match handling, insertion, and mitigation selection, balancing efficiency with accuracy.
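The exact/near/no-match handling and the no-duplicate rule can be sketched with cosine similarity over stored embeddings. This assumes embeddings are supplied by the caller as plain vectors (no embedding model is shown), and the 0.99 and 0.85 similarity cut-offs, as well as the `RiskMemory` and `MemoryEntry` names, are hypothetical. A linear scan keeps the example self-contained; a production memory would more plausibly use an approximate nearest-neighbour index.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    action: str
    embedding: list[float]
    gamma: float
    mitigations: list[str]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class RiskMemory:
    """Linear-scan memory with exact / near / no-match handling (hypothetical)."""

    def __init__(self, exact: float = 0.99, near: float = 0.85):
        self.exact, self.near = exact, near
        self.entries: list[MemoryEntry] = []

    def lookup(self, embedding: list[float]):
        """Return (match_kind, best_entry); best_entry is None on no match."""
        best, best_sim = None, -1.0
        for entry in self.entries:
            sim = cosine(embedding, entry.embedding)
            if sim > best_sim:
                best, best_sim = entry, sim
        if best is None or best_sim < self.near:
            return "none", None  # caller falls back to a full assessment
        return ("exact" if best_sim >= self.exact else "near"), best

    def insert(self, entry: MemoryEntry) -> bool:
        """No-duplicate rule: skip insertion when an exact match already exists."""
        kind, _ = self.lookup(entry.embedding)
        if kind == "exact":
            return False
        self.entries.append(entry)
        return True


mem = RiskMemory()
mem.insert(MemoryEntry("submit_form", [1.0, 0.0, 0.0], 0.58, ["confirm", "otp"]))
kind, hit = mem.lookup([0.95, 0.3, 0.0])
print(kind, hit.mitigations if hit else None)
```

A near match returns the stored gamma and mitigations for reuse; only a "none" result forces the full scoring pipeline to run.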

Human-in-the-Loop and Agent-to-Human Oversight

HITL integration is central to AURA's calibration and accountability. The framework automatically detects uncertainty (high gamma variance, sparse memory matches, conflicting scores) and solicits targeted human feedback to refine context, dimensions, weights, and mitigations. The A2H interface empowers human operators to inspect, edit, or override memory entries and mitigation strategies, ensuring full human control over agent decisions and autonomous actions.
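The three uncertainty signals can be checked with simple threshold tests that return the reasons for escalation, so the human reviewer sees why feedback was requested. The function name, the cut-off values, and this reading of "conflicting scores" (several scorers disagreeing on the same context-dimension pair by more than a fixed gap) are illustrative assumptions.

```python
def hitl_triggers(
    gamma_variance: float,
    best_memory_similarity: float,
    scorer_outputs: dict[str, list[float]],
    variance_limit: float = 0.05,
    similarity_floor: float = 0.85,
    conflict_gap: float = 0.3,
) -> list[str]:
    """Return the reasons (if any) to request human feedback. Thresholds are hypothetical."""
    reasons = []
    if gamma_variance > variance_limit:
        reasons.append("high_gamma_variance")
    if best_memory_similarity < similarity_floor:
        reasons.append("sparse_memory_match")
    # Conflicting scores: several scorers disagree on one context-dimension pair
    for pair, scores in scorer_outputs.items():
        if len(scores) > 1 and max(scores) - min(scores) > conflict_gap:
            reasons.append(f"conflicting_scores:{pair}")
    return reasons


print(hitl_triggers(0.01, 0.93, {"data_sensitivity/privacy": [0.4, 0.45]}))
print(hitl_triggers(0.12, 0.40, {"data_sensitivity/privacy": [0.2, 0.8]}))
```

An empty list means the assessment can proceed autonomously; any non-empty list would be routed to the A2H interface along with the listed reasons.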

Mitigation Strategies

Mitigations in AURA are modular, executable control layers that constrain and guide agent behavior. Selection policies leverage memory, LLM generation, human oversight, and rule-based logic. Default mitigation primitives include grounding, guardrails, threshold gating, agent review, role-based escalation, memory overrides, meta-logic, and custom/learned logic. Mitigations are dynamically composed and adjusted based on risk profile, context severity, and evolving trust metrics.
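A rule-based selection policy might layer primitives by normalized risk, with context severity lowering the thresholds for stronger controls and accumulated trust raising them. The thresholds, the linear shift, the function name, and the rule that a human-approved memory override replaces the computed plan are all assumptions for illustration; only the primitive names come from the list above.

```python
from typing import Optional


def compose_mitigations(
    gamma_norm: float,
    severity: float = 0.0,
    trust: float = 0.0,
    memory_override: Optional[list[str]] = None,
) -> list[str]:
    """Layer mitigation primitives by risk (hypothetical thresholds).

    severity and trust are assumed to lie in [0, 1].
    """
    if memory_override is not None:
        return list(memory_override)  # a human-approved plan from memory wins
    plan = ["grounding", "guardrails"]  # always-on baseline layers
    # Severe contexts lower every threshold; accumulated trust raises them.
    shift = 0.2 * severity - 0.1 * trust
    if gamma_norm >= 0.3 - shift:
        plan.append("threshold_gating")
    if gamma_norm >= 0.5 - shift:
        plan.append("agent_review")
    if gamma_norm >= 0.8 - shift:
        plan.append("role_based_escalation")
    return plan


print(compose_mitigations(0.58))
print(compose_mitigations(0.65, severity=1.0))
```

Because layers are only ever appended, a riskier profile always receives a superset of the controls applied to a safer one.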

Implementation and Practical Application

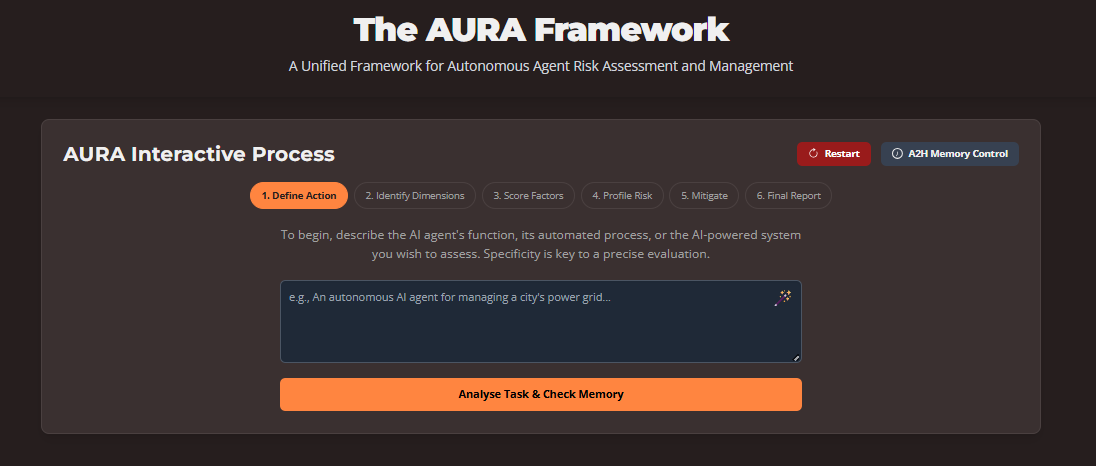

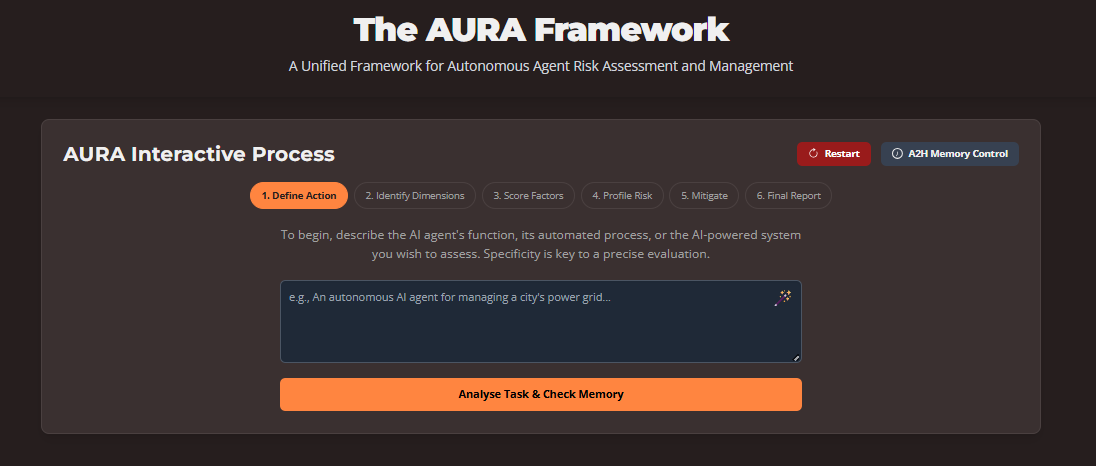

AURA is implemented as an open-source Python framework with an optional web-service interface. It supports local and remote memory backends, direct agent integration, and REST API deployment. The interactive web interface guides users through the risk assessment workflow, enabling pre-deployment analysis and configuration.

Figure 2: AURA Interactive Web Interface for guided risk assessment and mitigation.

AURA screens agent actions such as form submission and account creation, parsing context (site trust, user verification, data sensitivity), mapping dimensions (consent, autonomy, reversibility, privacy), scoring risk, and applying mitigations (confirmation, MFA/OTP, escalation). For a sample action with $\gamma_{\text{norm}} = 0.58$, the agent pauses, confirms user intent, and performs verification before proceeding. Editable preferences and memory traces enable adaptive, preference-aware behavior, reducing unintended registrations and data leakage while maintaining auditable decision records.
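The pause, confirm, and verify behaviour for the sample action can be sketched as a gate with three bands. The 0.3 and 0.8 band edges, the function name, and the `confirm`/`verify` callbacks (standing in for, say, a confirmation prompt and an OTP check) are hypothetical; the source only states that at a normalized score of 0.58 the agent confirms intent and verifies before proceeding.

```python
from typing import Callable


def gate_action(
    gamma_norm: float,
    confirm: Callable[[], bool],
    verify: Callable[[], bool],
    log: list[str],
) -> str:
    """Pause-confirm-verify gate; band edges 0.3 and 0.8 are hypothetical."""
    if gamma_norm < 0.3:
        log.append("proceed")
        return "executed"
    if gamma_norm >= 0.8:
        log.append("escalate")  # hand off to a human instead of acting
        return "escalated"
    log.append("pause")
    if not confirm():  # e.g. ask the user to confirm the registration
        log.append("user_declined")
        return "cancelled"
    log.append("confirmed")
    if not verify():  # e.g. an MFA/OTP check
        log.append("verification_failed")
        return "cancelled"
    log.append("verified")
    log.append("proceed")
    return "executed"


trace: list[str] = []
print(gate_action(0.58, confirm=lambda: True, verify=lambda: True, log=trace), trace)
```

The `log` list plays the role of the auditable decision record: every branch writes to it before returning.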

Operational Integration and Scalability

AURA is designed for seamless integration across deployment scales:

- Individuals/Startups: Embedded as a lightweight library for direct agent runtime gating and traceable decision-making.

- Mid-sized Organizations: Modular component within CI/CD pipelines, supporting pre-execution and runtime risk scoring, aggregated monitoring, and reduced human intervention via historical memory.

- Enterprises/Regulated Industries: Standardized risk assessment endpoints, verbose justification of decisions, and full traceability for compliance and auditability.
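For the lightweight-library case, runtime gating can be approximated with a decorator that scores each tool call before it runs and appends an audit record. `risk_gated`, the `assess` callback signature, the toy scorer, and the 0.5 threshold are hypothetical and not part of AURA's published interface.

```python
import functools
from typing import Any, Callable, Optional


def risk_gated(
    assess: Callable[[str, tuple, dict], float],
    threshold: float = 0.5,
    audit: Optional[list] = None,
):
    """Decorator that scores a tool call before running it (hypothetical API)."""

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            gamma = assess(fn.__name__, args, kwargs)
            allowed = gamma < threshold
            if audit is not None:
                # Traceable decision record, written before the call runs
                audit.append({"action": fn.__name__, "gamma": gamma, "allowed": allowed})
            if not allowed:
                raise PermissionError(f"{fn.__name__} blocked (gamma={gamma:.2f})")
            return fn(*args, **kwargs)

        return wrapper

    return decorator


def toy_assess(name: str, args: tuple, kwargs: dict) -> float:
    # Stand-in scorer: treat anything named "delete_*" as high risk
    return 0.9 if name.startswith("delete_") else 0.1


log: list = []


@risk_gated(toy_assess, audit=log)
def read_profile(user_id: str) -> str:
    return f"profile:{user_id}"


@risk_gated(toy_assess, audit=log)
def delete_account(user_id: str) -> str:
    return f"deleted:{user_id}"


print(read_profile("u1"))
try:
    delete_account("u1")
except PermissionError as err:
    print(err)
```

In a CI/CD or enterprise setting, the same `assess` callback could instead call a shared risk-assessment endpoint, leaving the decorator unchanged.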

Modularity enables runtime adaptation of dimensions, contexts, weights, scores, memory, HITL thresholds, and mitigations, balancing accuracy, latency, and cost. Versioned registries and ablation studies support reproducible evolution and empirical validation.
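A versioned registry can be as simple as an append-only list of immutable configurations, so each experiment (for instance, an ablation that disables memory) refers to a fixed, reproducible version number. The `AuraConfig` fields and the `ConfigRegistry` class are illustrative assumptions, not AURA's actual schema.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AuraConfig:
    """Immutable snapshot of tunable settings (illustrative fields)."""
    dimension_weights: tuple[tuple[str, float], ...]
    hitl_variance_limit: float = 0.05
    memory_enabled: bool = True


class ConfigRegistry:
    """Append-only registry: every change creates a new numbered version."""

    def __init__(self, initial: AuraConfig):
        self._versions = [initial]

    @property
    def current(self) -> AuraConfig:
        return self._versions[-1]

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def update(self, **changes) -> int:
        # dataclasses.replace copies the frozen config with selected fields changed
        self._versions.append(replace(self.current, **changes))
        return self.version

    def get(self, version: int) -> AuraConfig:
        return self._versions[version]


registry = ConfigRegistry(AuraConfig((("privacy", 0.6), ("reversibility", 0.4))))
ablation = registry.update(memory_enabled=False)  # e.g. a no-memory ablation run
print(ablation, registry.get(0).memory_enabled, registry.current.memory_enabled)
```

Freezing each snapshot means an earlier version cannot be mutated after results have been reported against it.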

Implications and Future Directions

AURA formalizes a practical approach to operational governance for agentic AI, with several implications:

- Domain-Specific Specializations: Pre-configured templates for sector-specific risk profiles and regulatory requirements.

- Memory Generalization: Enhancing contextual recall and generalizability under sparse or adversarial data conditions.

- Cross-Agent Learning Networks: Federated sharing of anonymized risk insights and mitigation strategies for collective safety intelligence.

AURA positions itself as a critical enabler for responsible, transparent, and governable agentic AI, supporting scalable deployment while maintaining safety guardrails and human-centered oversight.

Conclusion

AURA provides a unified, empirically validated framework for agent autonomy risk assessment, integrating quantitative scoring, modular mitigation, persistent memory, and human oversight. Its architecture supports scalable, interpretable, and adaptive risk management for agentic AI systems across diverse operational contexts. Future work should focus on domain specialization, memory generalization, and federated cross-agent learning to further enhance safety, accountability, and trust in autonomous AI deployment.