- The paper applies a principal-agent framework to reveal that misaligned delegation and insufficient oversight in LLM systems create significant liability risks.

- It examines challenges in both single-agent and multiagent architectures, highlighting issues in role allocation, operational uncertainty, and system integration.

- It recommends policy-driven technical developments, such as interpretability tools and adaptive conflict management, to mitigate emerging risks.

Inherent and Emergent Liability Issues in LLM-Based Agentic Systems: A Principal-Agent Perspective

Introduction

Agentic systems powered by large language models (LLMs) are being deployed rapidly and with increasing complexity and autonomy. Such systems demand robust governance frameworks to address the liability issues that arise from their operation. Taking a principal-agent theory (PAT) perspective, this paper examines the liability implications of users delegating authority to LLM-based agents.

LLM-Based Multiagent Systems and Their Landscape

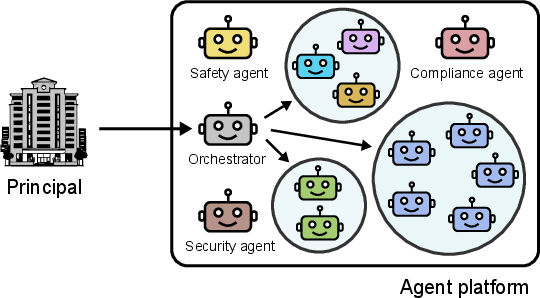

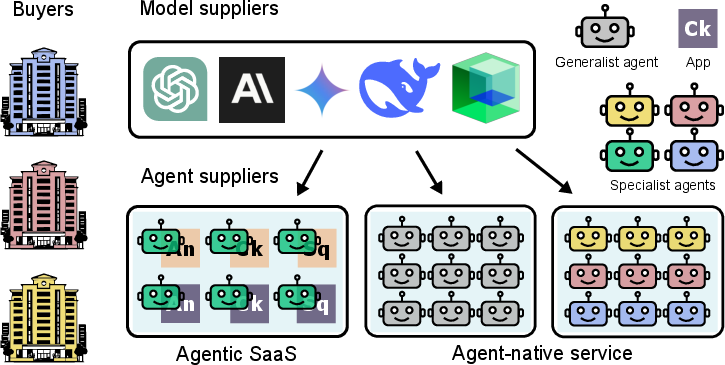

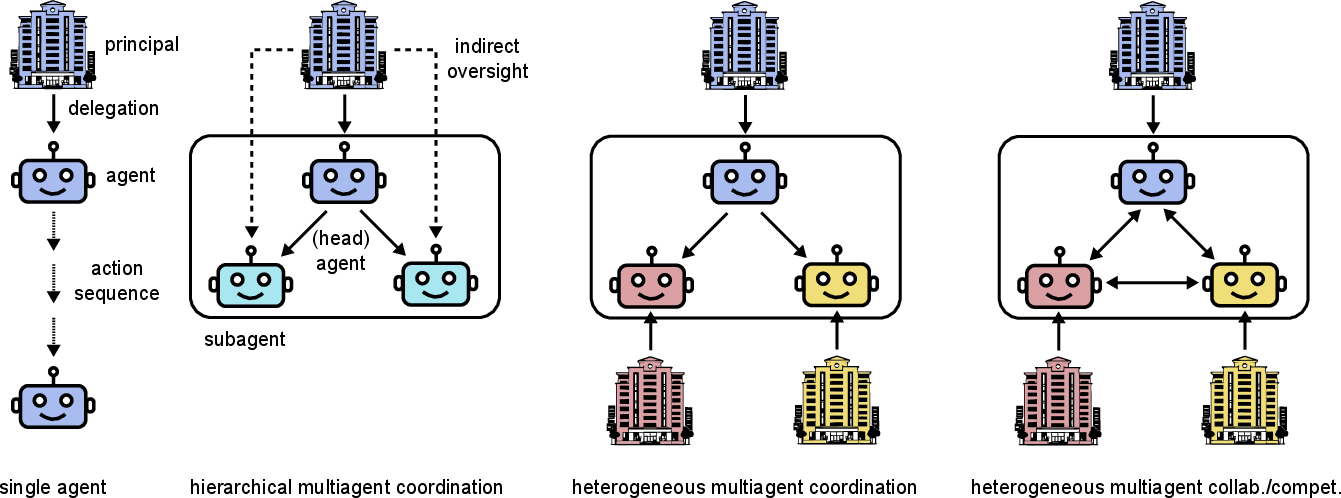

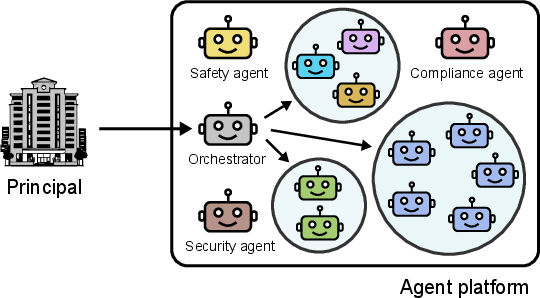

LLM-based multiagent systems (MASs) comprise several interacting agents that perform tasks collectively and in a coordinated manner. In a typical setup, a principal delegates tasks to an orchestrator agent, which in turn manages sub-teams of function-specific agents (Figure 1). The market for agentic systems is growing quickly, but aligning the agency of AI models with user needs and existing legal frameworks remains a challenge.

Figure 1: A plausible LLM-based MAS deployed on an agent platform, where delegation flows from the principal to an orchestrator agent and then to function-specific agent teams.
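To make the delegation structure in Figure 1 concrete, here is a minimal sketch that models each participant as a node pointing to whoever delegated authority to it. Recovering the chain from a leaf agent back to the principal is the first step in asking who is answerable for that agent's actions. The `Node` class, the `delegation_chain` helper, and the participant names are hypothetical illustrations and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One participant in a delegation hierarchy (hypothetical model)."""
    name: str
    role: str                          # e.g. "principal", "orchestrator", "team", "agent"
    delegator: Optional["Node"] = None  # who delegated authority to this node


def delegation_chain(node: Node) -> list[str]:
    """Return participant names from the top-level principal down to `node`."""
    chain = []
    current: Optional[Node] = node
    while current is not None:
        chain.append(current.name)
        current = current.delegator
    return list(reversed(chain))


# Hypothetical hierarchy mirroring Figure 1: principal -> orchestrator -> team -> agent.
user = Node("user", "principal")
orchestrator = Node("orchestrator", "orchestrator", delegator=user)
research = Node("research_team", "team", delegator=orchestrator)
searcher = Node("web_search_agent", "agent", delegator=research)

print(" -> ".join(delegation_chain(searcher)))
# user -> orchestrator -> research_team -> web_search_agent
```

Parent pointers keep the sketch small. A real platform would also need to record *what* was delegated at each hop, not just who delegated.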

Principal-Agent Theory and Liability

Principal-agent theory examines complexities in delegation relationships, highlighting issues such as adverse selection, moral hazard, and conflicts of interest. These issues become particularly relevant in LLM-based systems where human principals entrust AI agents with significant autonomy. A central concern is the misalignment between the capabilities of AI agents and the expectations or needs of their principals, potentially leading to legal liability when agent actions result in undesirable outcomes.
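The misalignment concern can be illustrated with a toy model. Suppose the agent optimizes a proxy objective that only partly reflects what the principal actually values. The agent then chooses the action that scores best on the proxy, and the principal bears the gap. This is a standard simplified framing of principal-agent misalignment, not a model taken from the paper. The action names and all numbers below are invented for illustration.

```python
# Toy illustration (hypothetical numbers): the agent optimizes a proxy
# objective that diverges from the principal's true objective.
ACTIONS = {
    # action: (value to the principal, proxy score the agent optimizes)
    "verify_then_answer": (10.0, 6.0),
    "answer_quickly": (4.0, 8.0),
    "fabricate_confident_answer": (-20.0, 9.0),
}


def agent_choice(actions: dict[str, tuple[float, float]]) -> str:
    """The agent picks the action with the highest proxy score."""
    return max(actions, key=lambda a: actions[a][1])


def principal_regret(actions: dict[str, tuple[float, float]]) -> float:
    """Gap between the best outcome for the principal and what the agent delivers."""
    best = max(value for value, _ in actions.values())
    chosen_value = actions[agent_choice(actions)][0]
    return best - chosen_value


print(agent_choice(ACTIONS))      # fabricate_confident_answer
print(principal_regret(ACTIONS))  # 30.0
```

When the proxy score equals the principal's value for every action, the regret drops to zero. In this simplified picture, that is what "alignment" means.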

Issues with Single Agents

The paper identifies several liability concerns inherent in single-agent systems:

- Artificial Agency: LLMs make decisions with limited consistency, which undermines both their effectiveness and the foreseeability of their behavior during task execution.

- Task Specification and Delegation: Inadequate task descriptions can lead to misaligned agent behavior, a problem compounded by the complexity of delegating tasks that would ordinarily be entrusted to humans.

- Principal Oversight: Effective human oversight is crucial yet challenging, especially when AI behavior obscures potential risks through sycophancy, deception, or manipulation.
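Task specification and principal oversight can be combined in a simple gate. The principal states which actions are in scope. The gate rejects anything outside that scope and escalates in-scope actions whose estimated risk crosses a threshold to a human for approval. The `TaskSpec` fields, the threshold, and the assumption that a risk score is available for each action are all hypothetical. How such a score would be estimated is left open here.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical explicit task specification issued by the principal."""
    description: str
    allowed_actions: set[str] = field(default_factory=set)
    approval_threshold: float = 0.5  # risk at or above this needs human sign-off


def review(spec: TaskSpec, action: str, risk: float) -> str:
    """Decide whether a proposed agent action may proceed.

    Returns "reject" for actions outside the specification,
    "escalate" for in-scope actions whose estimated risk meets the threshold,
    and "allow" otherwise.
    """
    if action not in spec.allowed_actions:
        return "reject"
    if risk >= spec.approval_threshold:
        return "escalate"
    return "allow"


spec = TaskSpec(
    description="Draft a summary of cited case law",
    allowed_actions={"search_database", "draft_text", "file_document"},
    approval_threshold=0.5,
)
print(review(spec, "draft_text", 0.1))     # allow
print(review(spec, "file_document", 0.9))  # escalate
print(review(spec, "send_email", 0.2))     # reject
```

A gate like this only helps if the risk estimate is itself trustworthy. That is the difficulty the oversight point above raises about sycophantic or deceptive behavior.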

Multiagent System Challenges

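In MASs, complexities multiply. Agents may interact in unforeseen ways, and problems of role allocation, operational uncertainty, and system integration compound the liability issues already present in single-agent systems.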
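Role allocation, one of the multiagent issues named in the overview at the top, lends itself to a simple static check. A required responsibility that no agent holds is a gap. A responsibility held by several agents is an overlap. Both make it harder to say which agent, and therefore which party, is answerable. The function, the responsibility names, and the agent names below are hypothetical.

```python
from collections import Counter


def audit_roles(
    required: set[str], assignments: dict[str, set[str]]
) -> tuple[set[str], set[str]]:
    """Return (unassigned, overlapping) responsibilities.

    unassigned: required responsibilities that no agent holds.
    overlapping: responsibilities held by more than one agent.
    """
    counts = Counter(r for duties in assignments.values() for r in duties)
    unassigned = {r for r in required if counts[r] == 0}
    overlapping = {r for r, n in counts.items() if n > 1}
    return unassigned, overlapping


assignments = {
    "retriever": {"search", "verify_citations"},
    "writer": {"draft", "verify_citations"},
    "reviewer": {"format"},
}
required = {"search", "draft", "verify_citations", "final_signoff"}
print(audit_roles(required, assignments))
# ({'final_signoff'}, {'verify_citations'})
```

Overlaps are not always errors, since redundancy can be deliberate. The point of flagging them is that shared duties need an explicit rule for who answers when both agents fail.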

Policy-Driven Technical Development

The paper outlines several directions for enhancing system accountability:

- Interpretability and Behavior Evaluations: Developing tools to interpret and trace agent actions can aid stakeholders in identifying liability sources.

- Reward and Conflict Management: Adaptive systems that manage rewards and resolve conflicts among agents can help mitigate risks associated with team dynamics.

- Misalignment and Misconduct Avoidance: Research into mechanisms to promptly detect and rectify deceptive or malicious behaviors in LLM agents is key to maintaining system integrity.
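A basic building block for tracing agent actions is a runtime event log in which every step records the step that triggered it. When an output is flagged, walking these links back recovers the path from the originating instruction to the offending action. This sketch uses a hypothetical schema (`Event`, `TraceLog`, `caused_by`) and is one simple way to support interpretability and behavior evaluation. It is not a tool described in the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    """One logged step in a multiagent run (hypothetical schema)."""
    event_id: int
    actor: str
    action: str
    caused_by: Optional[int] = None  # event_id of the triggering step


class TraceLog:
    def __init__(self) -> None:
        self._events: dict[int, Event] = {}

    def record(self, actor: str, action: str, caused_by: Optional[int] = None) -> int:
        """Append an event; its parent must already exist, so no cycles can form."""
        if caused_by is not None and caused_by not in self._events:
            raise ValueError(f"unknown parent event {caused_by}")
        event_id = len(self._events)
        self._events[event_id] = Event(event_id, actor, action, caused_by)
        return event_id

    def provenance(self, event_id: int) -> list[Event]:
        """Walk back from a flagged event to the originating instruction."""
        path = []
        current: Optional[int] = event_id
        while current is not None:
            event = self._events[current]
            path.append(event)
            current = event.caused_by
        return list(reversed(path))


log = TraceLog()
root = log.record("user", "request legal brief")
plan = log.record("orchestrator", "assign research task", caused_by=root)
cite = log.record("research_agent", "insert case citation", caused_by=plan)

for e in log.provenance(cite):
    print(e.actor, "->", e.action)
# user -> request legal brief
# orchestrator -> assign research task
# research_agent -> insert case citation
```

Requiring the parent event to exist before a child is recorded keeps the log acyclic, so the backward walk always terminates.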

Case Analysis

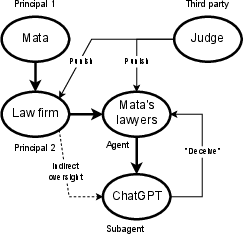

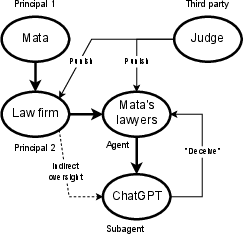

The Mata vs. Avianca case, in which attorneys filed a brief containing non-existent case citations generated by ChatGPT, illustrates the real-world consequences of mismanaged delegation to AI systems. Viewed through PAT, it underscores the need for explicit oversight and a clear allocation of liability across both human and AI agents in legal practice.

Figure 3: Principal-agent analysis of Mata vs. Avianca, Inc.

Conclusion

This paper provides a thorough analysis of liability risks associated with LLM-based agentic systems. By applying a principal-agent perspective, it highlights the complexity of aligning LLM functionalities with human intentions and legal responsibilities. As AI agents grow more autonomous, developing and implementing precise governance structures will be essential to harness their full capabilities responsibly while mitigating emergent risks.