Build Your Personalized Research Group: A Multiagent Framework for Continual and Interactive Science Automation (2510.15624v1)

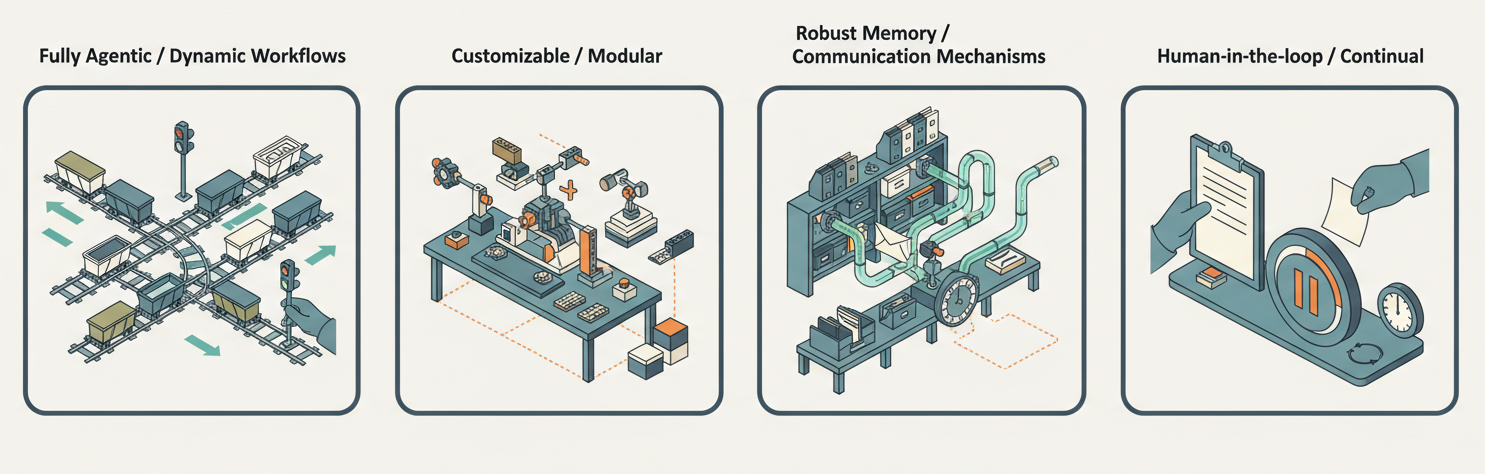

Abstract: The automation of scientific discovery represents a critical milestone in AI research. However, existing agentic systems for science suffer from two fundamental limitations: rigid, pre-programmed workflows that cannot adapt to intermediate findings, and inadequate context management that hinders long-horizon research. We present freephdlabor, an open-source multiagent framework featuring fully dynamic workflows determined by real-time agent reasoning and a modular architecture enabling seamless customization: users can modify, add, or remove agents to address domain-specific requirements. The framework provides comprehensive infrastructure including automatic context compaction, workspace-based communication to prevent information degradation, memory persistence across sessions, and non-blocking human intervention mechanisms. These features collectively transform automated research from isolated, single-run attempts into continual research programs that build systematically on prior explorations and incorporate human feedback. By providing both the architectural principles and practical implementation for building customizable co-scientist systems, this work aims to facilitate broader adoption of automated research across scientific domains, enabling practitioners to deploy interactive multiagent systems that autonomously conduct end-to-end research, from ideation through experimentation to publication-ready manuscripts.

Explain it Like I'm 14

What is this paper about?

This paper introduces freephdlabor, a computer system made of many smart helpers (AI “agents”) that work together like a personalized research group. Its goal is to help scientists do research from start to finish—coming up with ideas, running experiments, writing papers, and checking quality—while staying flexible, learning from mistakes, and letting a human step in anytime.

What questions does it try to answer?

The paper focuses on three simple questions:

- How can multiple AI helpers communicate clearly and not forget important details during long projects?

- How can the system keep track of the whole project so it can change plans when new results appear?

- How can a human easily pause, guide, or correct the system during the research process?

How does it work? (Explained with everyday ideas)

Think of a sports team with a coach:

- The “ManagerAgent” is the coach. It sees the big picture and decides who should do what next based on how the game (research) is going.

- The other agents are players with different skills:

  - IdeationAgent: brainstorms research ideas by reading papers and the web.

  - ExperimentationAgent: turns ideas into code, runs experiments, and saves results.

  - ResourcePreparationAgent: organizes files and figures so they’re easy to use.

  - WriteupAgent: writes the paper (in LaTeX) and compiles it into a PDF.

  - ReviewerAgent: reviews the paper like a journal reviewer and gives a score and feedback.

Instead of a fixed recipe, it’s a choose‑your‑own‑adventure:

- In many older systems, the steps are fixed (idea → experiment → write → done), even if something goes wrong.

- Here, the coach (ManagerAgent) can change the plan at any time. If an experiment fails, it can ask for a new idea. If the paper is weak, it can order more experiments.
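A few lines of Python can sketch the contrast between a fixed recipe and a coach who re-plans. This is a minimal illustration under stated assumptions. The `ProjectState` fields, the `next_agent` function and the target score of 7 are all hypothetical. In freephdlabor the ManagerAgent makes this choice through language-model reasoning, not hand-written rules like these.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProjectState:
    """Hypothetical summary of what the manager currently knows."""
    has_idea: bool = False
    experiment_failed: bool = False
    has_results: bool = False
    has_paper: bool = False
    review_score: Optional[int] = None  # ReviewerAgent score out of 10


def next_agent(state: ProjectState, target_score: int = 7) -> Optional[str]:
    """Choose the next specialist from the current state, not from a fixed order."""
    if not state.has_idea or state.experiment_failed:
        return "IdeationAgent"         # failed experiment: go back for a new idea
    if not state.has_results:
        return "ExperimentationAgent"
    if not state.has_paper:
        return "WriteupAgent"
    if state.review_score is None:
        return "ReviewerAgent"
    if state.review_score < target_score:
        return "ExperimentationAgent"  # weak paper: order more experiments
    return None                        # hypothetical quality bar met: stop delegating
```

A fixed pipeline would walk a constant list (idea, experiment, write). Here, a failed experiment or a low review score sends the work back to an earlier stage.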

They share a “workspace” (like a shared Google Drive folder):

- All agents read and write files in a common place. Instead of copying long text to each other (which can get messy or be forgotten), they point to the same files. This stops the “telephone game” where messages get distorted over time.
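Here is a minimal sketch of reference-based messaging. It assumes a hypothetical `Workspace` class and message format, neither of which is the framework's actual API. The sender saves an artifact to the shared folder and passes along only its path, so the receiver reads the original file instead of a paraphrase.

```python
import json
import tempfile
from pathlib import Path


class Workspace:
    """Hypothetical shared folder that every agent reads from and writes to."""

    def __init__(self, root: Path):
        self.root = root

    def write(self, rel_path: str, content: str) -> str:
        path = self.root / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content)
        return rel_path  # hand back a reference, not the content

    def read(self, rel_path: str) -> str:
        return (self.root / rel_path).read_text()


def make_message(sender: str, task: str, refs: list) -> str:
    # The message carries short pointers to files, never a retelling of them.
    return json.dumps({"from": sender, "task": task, "refs": refs})


with tempfile.TemporaryDirectory() as tmp:
    ws = Workspace(Path(tmp))
    # Toy artifact; the key and value are illustrative only.
    ref = ws.write("experiments/run1/results.json", json.dumps({"n_runs": 3}))
    msg = make_message("ExperimentationAgent", "write up run1", [ref])
    # The receiving agent resolves the reference and reads the exact saved bytes.
    received = json.loads(msg)
    results = json.loads(ws.read(received["refs"][0]))
```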

They use a think‑then‑act loop (ReAct):

- Each agent follows a simple pattern: think about the task → choose an action (like running a tool or writing code) → look at what happened → update its memory → repeat until done.
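The think-act-observe cycle fits in a short loop. This is an illustrative sketch, not the smolagents API. `react_loop`, `scripted_think` and the toy `calculator` tool are hypothetical, and `scripted_think` stands in for the language-model call that would normally produce each thought and action.

```python
from typing import Callable, Dict, List, Optional, Tuple

Thought = Tuple[str, str, str]  # (thought, action name, action input)


def react_loop(task: str,
               think: Callable[[list], Thought],
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 10) -> Optional[str]:
    """Reason, act, observe, and record until the policy returns a final answer."""
    memory: List[tuple] = [("task", task)]
    for _ in range(max_steps):
        thought, action, arg = think(memory)        # 1. reason about the next move
        if action == "final_answer":
            return arg
        observation = tools[action](arg)            # 2. act with a tool, 3. observe
        memory.append((thought, action, arg, observation))  # 4. store action-observation pair
    return None                                     # step budget exhausted


def scripted_think(memory: list) -> Thought:
    """Stand-in for an LLM call: use the calculator once, then report its output."""
    if len(memory) == 1:
        return ("I need to add the numbers.", "calculator", "2+3")
    return ("The tool already answered.", "final_answer", memory[-1][3])


tools = {"calculator": lambda expr: str(sum(int(x) for x in expr.split("+")))}
answer = react_loop("Add 2 and 3", scripted_think, tools)
```

The `max_steps` budget is a simple safety stop. Without it, a policy that never emits `final_answer` would loop forever.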

They handle long projects:

- Research takes many steps, and AI models have limited short‑term memory (“context window”). By splitting work among specialized agents and using the shared workspace, the system avoids forgetting important details.
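The abstract also mentions automatic context compaction. The source does not spell out the algorithm, so the following shows only one common approach. It assumes character counts as a proxy for tokens and uses a hypothetical `first_line_summary` in place of a model-written summary. Once memory grows past a budget, older entries are folded into one summary while the most recent steps are kept verbatim.

```python
from typing import Callable, List


def compact(memory: List[str], budget: int,
            summarize: Callable[[List[str]], str],
            keep_recent: int = 2) -> List[str]:
    """Fold older entries into one summary once memory exceeds a size budget.

    Size is measured in characters here as a rough stand-in for tokens.
    """
    if sum(len(m) for m in memory) <= budget or len(memory) <= keep_recent:
        return memory
    split = len(memory) - keep_recent
    old, recent = memory[:split], memory[split:]
    return [summarize(old)] + recent


def first_line_summary(entries: List[str]) -> str:
    # Stand-in for an LLM summarizer: keep only the first line of each entry.
    return "SUMMARY: " + " | ".join(e.splitlines()[0] for e in entries)


memory = ["ran baseline\n" + "x" * 50, "ran ablation\n" + "y" * 50,
          "wrote draft", "got review"]
compacted = compact(memory, budget=40, summarize=first_line_summary)
```

The trade-off is lossy by design: anything the summarizer drops is gone from the agent's context. That loss is one reason to keep full artifacts in the shared workspace.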

Humans stay in control:

- You can pause the system, give feedback, or add guidance at any time. The system also remembers progress across sessions, so it can continue later.
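One way to make intervention non-blocking is a thread-safe inbox that the agent checks between steps without waiting on it. This sketch assumes Python's `queue.Queue` and `threading.Event`. The function names and log format are hypothetical and do not describe the framework's own mechanism.

```python
import queue
import threading


def drain(inbox: queue.Queue) -> list:
    """Return every pending human message without ever waiting for one."""
    notes = []
    while True:
        try:
            notes.append(inbox.get_nowait())
        except queue.Empty:
            return notes


def run_agent(n_steps: int, inbox: queue.Queue, paused: threading.Event) -> list:
    log = []
    for step in range(n_steps):
        if paused.is_set():                      # human asked to pause: stop cleanly
            log.append(f"step {step}: paused by human")
            break
        for note in drain(inbox):                # fold any new guidance into the context
            log.append(f"step {step}: guidance -> {note}")
        log.append(f"step {step}: working")      # placeholder for the agent's real work
    return log


inbox, paused = queue.Queue(), threading.Event()
inbox.put("prioritize the ablation study")       # a human (or another thread) can post at any time
log = run_agent(2, inbox, paused)

paused.set()
log_after_pause = run_agent(3, inbox, paused)
```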

What did they show, and why is it important?

The paper walks through a full example project about detecting “training phases” in machine learning using Hidden Markov Models (HMMs). Here’s what happened and why it matters:

- It started smoothly: the system generated a research idea, ran an initial experiment, and saved results.

- A problem popped up: a link to the experiment data was missing, so the writing step failed.

- The system fixed itself: the ManagerAgent noticed the cause (the missing link), asked the ResourcePreparationAgent to fix it, and then the writing worked.

- It checked quality: the ReviewerAgent scored the paper 5/10 and asked for better experiments and analysis.

- It improved the work: the system ran more experiments (more datasets, ablation studies), reorganized results, rewrote the paper, and got a better review (7/10).
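The failure in this case study came from a missing link to the experiment data. Below is a minimal sketch of how such a fault could be detected and repaired with `pathlib`. It is not the ResourcePreparationAgent's actual tool. It assumes hypothetical directory names (`experiments/run_2/results`, `paper_inputs/results`) and a POSIX filesystem where symlinks can be created.

```python
import tempfile
from pathlib import Path


def find_broken_links(root: Path) -> list:
    """Symlinks under root whose targets no longer exist."""
    return [p for p in root.rglob("*") if p.is_symlink() and not p.exists()]


def ensure_link(link: Path, target: Path) -> None:
    """Point link at target, replacing a stale symlink but never a real file."""
    if link.is_symlink():
        link.unlink()
    link.parent.mkdir(parents=True, exist_ok=True)
    link.symlink_to(target.resolve())  # raises FileExistsError if a real file is there


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    data = root / "experiments" / "run_2" / "results"
    data.mkdir(parents=True)
    link = root / "paper_inputs" / "results"
    link.parent.mkdir()
    link.symlink_to(root / "experiments" / "run_1" / "results")  # stale: run_1 does not exist
    broken_before = [p.name for p in find_broken_links(root)]
    ensure_link(link, data)
    broken_after = find_broken_links(root)
    resolved_ok = link.resolve() == data.resolve()
```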

Why this matters:

- It suggests the system can adapt to real problems (not just follow a script), at least in this single case study.

- It shows the system can aim for quality, not just “finish fast.”

- It demonstrates reliable teamwork: agents coordinate through shared files, so information isn’t lost.

- It supports long, realistic research cycles with error recovery and revision, which is how real science works.

What could this change?

- Faster, more flexible research: Scientists could “hire” a personalized AI group that adapts as results come in.

- Better collaboration: Humans can guide the process while the system does the heavy lifting.

- Easy customization: Because it’s modular and open‑source, labs can swap in new agents or tools for their field (biology, physics, economics, etc.).

- More reliable automation: The shared workspace and manager‑coach design reduce confusion and memory problems common in long AI workflows.

In short, freephdlabor shows a practical way to turn AI into an organized, adaptable research team that can plan, experiment, write, review, and improve—while keeping a human in charge.

Knowledge Gaps

Below is a concise, actionable list of knowledge gaps, limitations, and open questions that remain unresolved and would benefit from targeted investigation:

- Lack of quantitative evaluation: no benchmarks, task suites, or metrics (e.g., success rate, output quality, human edit distance, time, and cost) comparing this framework to fixed pipelines or other agentic systems.

- No ablation studies isolating key design choices (star-shaped manager vs decentralized topologies; reference-based workspace vs string-only chat; context compaction on/off; human-in-the-loop vs fully automated; VLM review vs LLM-only).

- Scalability of the ManagerAgent is untested: throughput, latency, and reliability with many agents, large projects, or multiple concurrent projects; risk of a single point of failure and associated recovery strategies.

- Parallelism and scheduling are unspecified: can agents operate asynchronously or in parallel without manager bottlenecks? What arbitration and prioritization policies exist?

- Global-state representation is under-specified: how the manager encodes, summarizes, and retrieves the “global state” over long horizons; formal treatment of partial observability; criteria for state compaction and retention.

- Context compaction details are missing: compaction algorithms, retrieval mechanisms, and empirical trade-offs (information loss, error rates, performance impact) across long-horizon tasks.

- Reference-based messaging via a shared workspace lacks guarantees: schema design, versioning, provenance, link integrity, concurrent access controls, and resolution of race conditions or broken references.

- Multi-tenant isolation is not addressed: workspace sandboxing, access control, and prevention of cross-project contamination when multiple users or projects run simultaneously.

- Safety/security of code execution is unspecified: sandboxing, dependency isolation, network policies, secret handling, supply-chain attack mitigation, and policies for executing auto-generated code.

- Human-in-the-loop mechanics lack rigor: how interruptions are injected, logged, and persisted; how human feedback is incorporated into decision policies; measurable impact of human guidance on outcomes.

- Failure taxonomy and recovery breadth are unclear: beyond the symlink example, which classes of failures are autonomously recoverable vs must be escalated; detection, diagnosis, and fallback strategies.

- Quality gate calibration remains open: how review scores and checklist criteria are set, validated, and protected from Goodharting; safeguards against agents gaming ReviewerAgent metrics.

- ReviewerAgent reliability is unvalidated: alignment with human reviewer judgments, inter-rater reliability, susceptibility to hallucinations, and vulnerability to collusion or feedback loops with other agents.

- VLMDocumentAnalysisTool fidelity is unassessed: accuracy on complex PDFs, figures, and tables; robustness to OCR errors, malformed LaTeX, or visually dense documents.

- Reproducibility of experiments is not guaranteed: environment specification, seeding, dataset versioning, dependency management, and standardized experiment tracking (e.g., MLflow/W&B) are not detailed.

- Novelty and scientific validity checks are absent: how the system detects incremental vs genuinely novel contributions; mechanisms to prevent plagiarism and verify result robustness (e.g., statistical significance, leakage checks).

- CitationSearchTool verification is missing: safeguards against fabricated citations, deduplication, bibliographic correctness, and source credibility scoring.

- Domain generality is not demonstrated: applicability beyond ML (e.g., wet lab, robotics, materials) and the concrete tool/interface extensions required; performance in non-ML scientific workflows.

- Compute cost and efficiency are unreported: token usage, wall-clock time, energy cost, and budget-aware policies; impact of dynamic workflows on resource consumption vs fixed pipelines.

- Learning over time is unspecified: whether the manager/agents adapt across projects via meta-learning or RL; storage of learned policies; evaluation of continual improvement.

- Concurrency and deadlock avoidance lack formal guarantees: prevention of infinite loops, task starvation, or circular delegations; termination criteria and safety stops.

- Inter-agent communication structure is underspecified: use of typed schemas/ontologies vs free-form text; validation, error checking, and schema evolution.

- Provenance and auditability need design: immutable logs, tamper-evident traces, and chain-of-custody for data and code to support scientific integrity and audits.

- Integration with existing lab/dev tooling is unclear: git-based workflows, CI/CD, artifact stores, dataset registries, HPC schedulers, and compliance with institutional policies (IRB, data governance).

- Ethical, legal, and authorship considerations are unaddressed: attribution for AI-generated content, accountability, misuse prevention (e.g., paper mill risks), and compliance with publisher/IRB/provenance standards.

- Model dependence is unspecified: which LMs/VLMs are used, performance sensitivity across models (open-weight vs closed), privacy implications of API calls, and fallback strategies for weaker models.

- Adoption and usability remain untested: developer/user experience, time-to-customize new agents/tools, API stability, documentation quality, and evidence from user studies.

- Open-source artifacts are not fully detailed: repository link, license, maintenance plan, reproducible configs, and release of complete logs/traces for the HMM case study.

- Empirical validation of “reference-based messaging” benefits is missing: measured reductions in information loss vs chat-only baselines and impact on downstream task accuracy.

- Policies for source credibility in web search are absent: filtering noisy OpenDeepSearch results, fact-checking pipelines, and managing paywalled or unreliable sources.

- Long-horizon goal management is unclear: mechanisms to detect goal drift, stop conditions beyond “PDF compiled,” and metrics for contribution quality, novelty, and readiness for submission.

Glossary

- Ablation studies: Systematic removal or alteration of components to assess their impact on performance or behavior. "ablation studies (features, fixed K)"

- Action-observation pair: The tuple of an agent’s executed action and the resulting feedback, used to update its internal state and guide future steps. "the action-observation pair is appended to the agent's memory"

- Agentic system: A system composed of autonomous agents capable of decision-making and acting within a workflow. "agentic systems"

- BIC (Bayesian Information Criterion): A model selection criterion that balances model fit and complexity, often used to choose among competing statistical models. "BIC analysis completed"

- BibTeX: A tool and file format for managing bibliographic references in LaTeX documents. "clean BibTeX entries"

- Context compaction: Techniques that compress or summarize accumulated context to fit within limited model context windows while preserving key information. "context compaction"

- Context window: The maximum amount of text a language model can attend to at once during inference. "finite context windows of LMs"

- Dynamic workflow: A non-predefined process that adapts its sequence of steps based on intermediate results and evolving context. "dynamic workflow"

- Game of telephone: An effect where information degrades over successive language-only message passing between agents. "game of telephone effect"

- Global state: The comprehensive, system-wide record of the project’s progress and artifacts accessible (or tracked) for coordination. "global state"

- Hidden Markov Model (HMM): A probabilistic model for sequences with latent states that emit observable outputs. "Hidden Markov Model (HMM)-based Training Phase Detection."

- Human-in-the-loop: Incorporating human oversight or intervention into an automated system to guide, correct, or steer decisions. "human-in-the-loop capabilities"

- Language model (LM): A model that predicts or generates text and can be used to reason, plan, and act via tool calls. "language models (LMs)"

- Linter: A tool that analyzes source text to flag errors and style issues before compilation or execution. "pre-compilation linter"

- Meta-optimization: Higher-level optimization strategies that tune or orchestrate the optimization process itself (e.g., across tasks or pipelines). "meta-optimization"

- Multi-agent system: A system composed of multiple specialized agents that coordinate to solve complex tasks. "multi-agent system"

- Partial information problem: The challenge that arises when agents operate with only a subset of the overall project information, hindering optimal decisions. "partial information problem"

- Principal investigator (PI): The lead researcher coordinating a project’s overall direction and resources. "'principal investigator' (PI)"

- Prompt engineering: The design and structuring of prompts that govern an agent’s behavior and tool use. "This compositional approach to prompt engineering"

- ReAct framework: A method where agents interleave reasoning (“thought”) with actions (tool calls) and observations iteratively. "reason-then-act (ReAct) framework"

- Reference-based messaging: Communication where agents refer to canonical artifacts (e.g., files) rather than re-transcribing content, reducing information loss. "reference-based messaging"

- Shared workspace: A common file-based area where agents read and write artifacts for coordination and memory. "shared workspace"

- Smolagents library: A software library used to implement agents that reason and act via tool-using code snippets. "smolagents library"

- Star-shaped architecture: A topology where a central coordinator (manager) connects to specialized agents, reducing pairwise communication overhead. "star-shaped architecture"

- Symbolic link (symlink): A filesystem reference that points to another file or directory, enabling reorganized views of complex outputs. "symbolic links"

- Tree-search algorithm: An algorithmic strategy that explores a space of possibilities by branching and evaluating alternatives iteratively. "tree-search algorithm"

- Vision-language model (VLM): A model that jointly processes images and text to analyze or generate multimodal content. "vision-language model (VLM)"

- Workspace-based coordination: Organizing agent collaboration through shared file artifacts rather than transient chat messages. "Workspace-based coordination"
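The BIC entry above can be made concrete for model selection in the HMM case study. For a model with k free parameters, n observations and maximized likelihood L̂, BIC = k·ln(n) - 2·ln(L̂), and lower is better. The sketch below assumes a Gaussian HMM with diagonal covariances, since the source does not state the emission model. The log-likelihoods are toy numbers, not results from the paper.

```python
import math


def hmm_num_params(n_states: int, n_features: int) -> int:
    """Free parameters of a Gaussian HMM with diagonal covariances (assumed model)."""
    k, d = n_states, n_features
    start = k - 1            # initial-state probabilities sum to 1
    trans = k * (k - 1)      # each transition-matrix row sums to 1
    means = k * d
    variances = k * d
    return start + trans + means + variances


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian Information Criterion: lower is better."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


# Toy log-likelihoods for K = 2, 3, 4 hidden phases (illustrative, not from the paper).
toy_ll = {2: -1200.0, 3: -1100.0, 4: -1090.0}
n_obs, n_feat = 500, 3
scores = {K: bic(ll, hmm_num_params(K, n_feat), n_obs) for K, ll in toy_ll.items()}
best_K = min(scores, key=scores.get)
```

With these toy numbers, K = 4 fits slightly better than K = 3 but pays a larger complexity penalty, so BIC prefers K = 3.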

Practical Applications

Immediate Applications

Below are concrete ways the paper’s framework and components can be deployed now, mapped to sectors and with feasibility notes.

- Academic co-researcher for ML/AI projects

- Sector: Academia, AI/ML research

- Leverages: ManagerAgent dynamic orchestration; IdeationAgent (arXiv + web), ExperimentationAgent (RunExperimentTool), WriteupAgent (LaTeX toolchain), ReviewerAgent (VLMDocumentAnalysisTool)

- Tools/products/workflows: “Lab-in-a-folder” project template; one-click run from idea → experiment → paper with quality gate; shared workspace as reproducible research ledger

- Assumptions/dependencies: Access to capable LLM/VLM APIs; compute for experiments; curated tool specs for target benchmarks; human-in-the-loop supervision for correctness

- Internal R&D automation for prototyping and reporting

- Sector: Industry (software, fintech analytics, ad tech, platform ML)

- Leverages: Dynamic workflow for iterative experimentation; ResourcePreparationAgent to prep artifacts; WriteupAgent for structured technical reports

- Tools/products/workflows: Auto-generated experiment logs, ablation summaries, and PDF/HTML reports with figures and BibTeX; QA reviews before circulation

- Assumptions/dependencies: Integration with internal data sources and schedulers (e.g., Slurm, Ray); data governance; prompt hardening to avoid hallucinations

- Living literature reviews and horizon scanning

- Sector: Academia, policy, enterprise strategy

- Leverages: IdeationAgent with FetchArxivPapersTool + OpenDeepSearchTool; ReviewerAgent to grade coverage and gaps

- Tools/products/workflows: Weekly “state-of-the-art” briefs; auto-updated bibliographies; tracked deltas with workspace-based memory

- Assumptions/dependencies: Reliable API access; deduplication and citation accuracy; periodic human validation of key claims

- Grant, IRB, and internal proposal drafting

- Sector: Academia, healthcare research, government labs

- Leverages: WriteupAgent’s LaTeX pipeline; ReviewerAgent for compliance checklists and completeness gating

- Tools/products/workflows: Draft specific aims, methods, budget narratives; checklist-driven quality gate; revision loops triggered by review feedback

- Assumptions/dependencies: Templates and policy constraints encoded as tools/prompts; human review for regulatory language and ethics

- Publisher and conference desk-check assistant

- Sector: Scientific publishing, societies

- Leverages: VLMDocumentAnalysisTool; ReviewerAgent scoring rubric; WriteupAgent linting (LaTeXSyntaxCheckerTool)

- Tools/products/workflows: Auto-checks for missing sections, figures, broken references, placeholder text; triage reports for editors

- Assumptions/dependencies: Access to submissions and PDFs; clear false-positive handling; no binding acceptance decisions delegated to AI

- MLOps experiment curation and handoffs

- Sector: Software/ML platforms, data science teams

- Leverages: ResourcePreparationAgent (symlink linker, structure analysis); shared workspace for reference-based messaging

- Tools/products/workflows: Clean artifact packs for cross-team handoffs (code, logs, metrics, figures); reproducibility bundles for audit

- Assumptions/dependencies: Filesystem or object storage integration; consistent experiment directory schemas

- Enterprise knowledge report generation

- Sector: Enterprise analytics, consulting, operations

- Leverages: ManagerAgent routing; WriteupAgent with citation tooling; ReviewerAgent for quality control

- Tools/products/workflows: Data-to-doc pipelines for quarterly updates, risk memos, KPI deep-dives with figures and sources

- Assumptions/dependencies: Data connectors; governance for source attribution; redaction policies for sensitive content

- Education: personalized research companion

- Sector: Education (higher ed, bootcamps)

- Leverages: Modular agents to scaffold student projects; human-in-the-loop interruption/feedback

- Tools/products/workflows: Auto-generated reading lists, project milestones, experiment templates, and draft reports; formative feedback cycles

- Assumptions/dependencies: Academic integrity policies; model settings to reduce over-assistance; educator oversight

- Policy evidence synthesis and memo drafting

- Sector: Public policy, think tanks, NGOs

- Leverages: IdeationAgent literature synthesis; ReviewerAgent for rigor and scope checks; WriteupAgent for policy briefs

- Tools/products/workflows: Rapid-turnaround memos with citations and uncertainty statements; updateable “living” policy notes

- Assumptions/dependencies: Verified sources; domain-specific review rubrics; human policy experts validate recommendations

- Marketing and comms technical collateral

- Sector: Software, biotech, hardware

- Leverages: ResourcePreparationAgent to curate results; WriteupAgent to produce whitepapers and blog posts; ReviewerAgent for clarity and accuracy

- Tools/products/workflows: From experiment outputs to externally readable narratives with figures and references; enforce quality gates before publication

- Assumptions/dependencies: Brand/style guides; claims substantiation; embargo and IP controls

- Cross-functional sprint copilot for data products

- Sector: Product/analytics teams

- Leverages: Star-shaped orchestration to move between ideation, prototyping, evaluation, and documentation

- Tools/products/workflows: ManagerAgent as “project PI” that routes tasks to specialized agents and pauses for stakeholder input via interruption mechanism

- Assumptions/dependencies: Clear task-to-agent mappings; stakeholder feedback windows; source-of-truth workspace

- Evaluation harness for agent research

- Sector: AI agent research, benchmarks

- Leverages: Dynamic workflow and memory compaction for long-horizon tasks

- Tools/products/workflows: Reproducible traces for longitudinal benchmarks; ablation of communication modes (reference vs. string)

- Assumptions/dependencies: Logging and trace tooling; diverse tasks; standardized metrics
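The publisher and conference desk-check assistant above lists three auto-checks: missing sections, broken references and placeholder text. All three can be approximated with regular expressions over the LaTeX source. This is a hypothetical sketch. `desk_check` and the required-section list are assumptions, and the code does not reproduce the framework's LaTeXSyntaxCheckerTool.

```python
import re

REQUIRED_SECTIONS = ("Introduction", "Method", "Results", "Conclusion")  # hypothetical checklist
PLACEHOLDER = re.compile(r"\b(TODO|TBD|XXX|lorem ipsum)\b", re.IGNORECASE)


def desk_check(tex: str) -> list:
    """Flag missing sections, undefined references, and leftover placeholder text."""
    issues = []
    sections = set(re.findall(r"\\section\*?\{([^}]*)\}", tex))
    for name in REQUIRED_SECTIONS:
        if name not in sections:
            issues.append(f"missing section: {name}")
    labels = set(re.findall(r"\\label\{([^}]*)\}", tex))
    for ref in re.findall(r"\\(?:ref|eqref|autoref)\{([^}]*)\}", tex):
        if ref not in labels:
            issues.append(f"undefined reference: {ref}")
    for m in PLACEHOLDER.finditer(tex):
        issues.append(f"placeholder text: {m.group(0)}")
    return issues


sample = r"""
\section{Introduction} See Figure~\ref{fig:phases}.
\section{Method} TODO: describe the HMM.
\section{Results}
\begin{figure}\label{fig:loss}\end{figure}
"""
issues = desk_check(sample)
```

Regex checks like these are cheap but shallow. Labels defined in `\input` files or custom macros would need a real LaTeX parser or the compiler's own warnings.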

Long-Term Applications

These directions are feasible but require further research, integration, validation, or scaling.

- Autonomous, continual AI scientist at scale

- Sector: Academia, corporate research labs

- Leverages: Dynamic workflows, hierarchical ManagerAgent, long-horizon memory, quality-driven iteration

- Tools/products/workflows: Always-on research programs spawning parallel hypotheses, cross-project memory, meta-learning across projects

- Assumptions/dependencies: Stronger reliability/verification; automated novelty detection and ethical safeguards; sustained compute budgets

- Wet-lab and robotics integration for experimental science

- Sector: Biotech, materials science, chemistry

- Leverages: ManagerAgent orchestration; ExperimentationAgent as planner; reference-based messaging for LIMS/ELN and robot control

- Tools/products/workflows: Closed-loop hypothesis → protocol generation → robotic execution → assay analysis → writeup

- Assumptions/dependencies: Robust LLM-to-robot tool adapters; safety interlocks; protocol validation; regulatory compliance

- Regulated documentation suites (e.g., FDA/EMA submissions)

- Sector: Healthcare, medical devices, pharma

- Leverages: WriteupAgent with compliance templates; ReviewerAgent with regulatory rubrics; workspace audit trail

- Tools/products/workflows: Automated generation of clinical study reports, statistical analysis plans, and submission-ready artifacts

- Assumptions/dependencies: Formal verification of analyses; traceable provenance; human sign-off; legal/ethical approvals

- Cross-institutional autonomous research networks

- Sector: Open science, consortia, government programs

- Leverages: Star-shaped clusters connected via standardized workspace protocols; federated ManagerAgents

- Tools/products/workflows: Multi-node collaboration with shared artifacts, deduplicated horizons, and meta-review across institutions

- Assumptions/dependencies: Interoperability standards; identity/permissions; data-sharing agreements

- Enterprise “Research OS” with governance

- Sector: Large enterprises (energy, finance, tech)

- Leverages: Modular agents with policy guardrails; human-in-the-loop gates; lineage tracking in workspace

- Tools/products/workflows: End-to-end pipeline spanning ideation, modeling, deployment notes, and compliance audits

- Assumptions/dependencies: SOC2/ISO controls; PII handling; model risk management; integration with MLOps and data platforms

- Advanced algorithm discovery and program synthesis

- Sector: Software, optimization, scientific computing

- Leverages: ExperimentationAgent with extended program search (beyond current RunExperimentTool); ReviewerAgent for formal criteria

- Tools/products/workflows: Autonomous algorithm baselining, ablations, and correctness checks; formal specs-to-code loops

- Assumptions/dependencies: Formal verification or property testing; curated benchmarks; compute for large search spaces

- Real-time policy advisory and “living” systematic reviews

- Sector: Government, multilateral organizations

- Leverages: Continuous ingestion (OpenDeepSearch + arXiv); dynamic re-framing via ManagerAgent; ReviewerAgent for trust calibration

- Tools/products/workflows: Always-fresh guidance documents with uncertainty quantification and change logs

- Assumptions/dependencies: Source reliability modeling; bias detection; transparent explanations; human oversight for high-stakes use

- Personalized degree-scale education and research apprenticeships

- Sector: Education technology

- Leverages: Hierarchical agents tuned to curriculum; progressive autonomy; project memory across semesters

- Tools/products/workflows: Individualized capstone guidance from proposal to publishable work, with reflective reviews and portfolio curation

- Assumptions/dependencies: Guardrails for plagiarism and authorship; assessment redesign; educator governance

- Materials and energy discovery loops

- Sector: Energy, manufacturing

- Leverages: ExperimentationAgent integrated with simulators/high-throughput screening; ResourcePreparationAgent for property databases

- Tools/products/workflows: Iterative propose–simulate–synthesize cycles; auto-generated technical dossiers

- Assumptions/dependencies: High-fidelity simulators; lab/sim interop; validation against physical experiments

- Quantitative research copilot with compliance

- Sector: Finance

- Leverages: ManagerAgent for research branching; ReviewerAgent with model risk rubric; workspace for audit trails

- Tools/products/workflows: Strategy ideation, backtesting, stress testing, and report generation under compliance constraints

- Assumptions/dependencies: Strict data controls; prohibition of non-public info leakage; human sign-off; regulatory audits

- Clinical guideline synthesis and update engine

- Sector: Healthcare delivery

- Leverages: Continuous evidence ingestion; ReviewerAgent for strength-of-evidence rating; WriteupAgent for clinician-facing summaries

- Tools/products/workflows: Periodic guideline refreshes with traceable changes and patient-safety checks

- Assumptions/dependencies: Medical expert review; bias/harms analysis; EHR interoperability; liability considerations

- Marketplace of plug-and-play agents and tools

- Sector: Software platforms, open-source ecosystems

- Leverages: Modular prompts (<LIST_OF_TOOLS>, <AGENT_INSTRUCTIONS>), shared workspace protocol

- Tools/products/workflows: Community-built domain agents (e.g., LIMSAdapterTool, HPCJobTool, EHRQueryTool) with standardized interfaces

- Assumptions/dependencies: Versioning, compatibility testing, and security vetting; governance for contributed tools
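The modular prompts mentioned above work by filling placeholders such as <LIST_OF_TOOLS> and <AGENT_INSTRUCTIONS> with agent-specific text. Below is a minimal sketch that assumes a hypothetical base prompt, `Tool` record and `build_prompt` helper. Only the placeholder names come from the source. LIMSAdapterTool is one of the hypothetical community tools named in the list above.

```python
from dataclasses import dataclass
from typing import List

BASE_PROMPT = """You are a member of a research group.
Available tools:
<LIST_OF_TOOLS>

Your role:
<AGENT_INSTRUCTIONS>"""


@dataclass
class Tool:
    name: str
    description: str


def build_prompt(tools: List[Tool], instructions: str, template: str = BASE_PROMPT) -> str:
    """Compose an agent prompt by filling the shared template's placeholders."""
    tool_block = "\n".join(f"- {t.name}: {t.description}" for t in tools)
    return (template.replace("<LIST_OF_TOOLS>", tool_block)
                    .replace("<AGENT_INSTRUCTIONS>", instructions))


prompt = build_prompt(
    [Tool("LIMSAdapterTool", "query sample records from the lab information system")],
    "Plan assays and record results in the shared workspace.",
)
```

Keeping the template shared and the tool list per-agent means a new domain agent only supplies its tools and instructions. The surrounding conventions stay identical across agents.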

Notes on Cross-Cutting Dependencies and Risks

- Model capabilities and cost: Performance depends on access to strong LLMs/VLMs and manageable inference costs; long-horizon tasks require memory compaction and persistence.

- Data security and privacy: Workspace artifacts may contain sensitive data; requires encryption, access controls, and logging.

- Reliability and verification: Hallucinations and brittle tool use necessitate human-in-the-loop checkpoints, typed tool interfaces, and regression tests.

- IP and attribution: Accurate citation, license compliance for datasets/code, and authorship policies are essential for ethical use.

- Domain adapters: Many high-value applications require custom tools (e.g., LIMS, job schedulers, EHRs, compliance checklists) integrated into <LIST_OF_TOOLS> and <AGENT_INSTRUCTIONS>.
