- The paper presents a novel framework termed Neurocognitive-Inspired Intelligence (NII) that bridges AI's structural mimicry with human-like functional cognition.

- It details modular components such as perception, attention, memory, and reasoning to emulate dynamic cognitive processes and facilitate autonomous learning.

- It demonstrates how integrating neuromorphic design and adaptive learning mechanisms can enhance AI generalization, robustness, and real-world applicability.

Towards Neurocognitive-Inspired Intelligence: From AI's Structural Mimicry to Human-Like Functional Cognition

The paper proposes a novel conceptual framework named Neurocognitive-Inspired Intelligence (NII), which aims to design AI systems that emulate human cognitive functions. Drawing on principles from neuroscience and cognitive psychology, the framework departs from the narrow, task-specific systems and largely opaque models that currently dominate the field.

Introduction to Neurocognitive-Inspired Intelligence

The NII framework aims to transcend the limitations of contemporary AI systems by imitating not just the structural architecture of the human brain but also its dynamic functional processes. Current AI techniques, particularly deep learning models, excel in static environments with well-defined tasks but falter at adaptation, reasoning, and long-term cognitive functions, which limits their use in real-world applications. By integrating core cognitive competencies such as attention, working memory, and meta-reasoning, NII seeks to develop systems capable of autonomous learning and robust interaction with minimal supervision.

Structural and Functional Overview

The NII architecture is organized into interconnected modules, each inspired by specific brain functions:

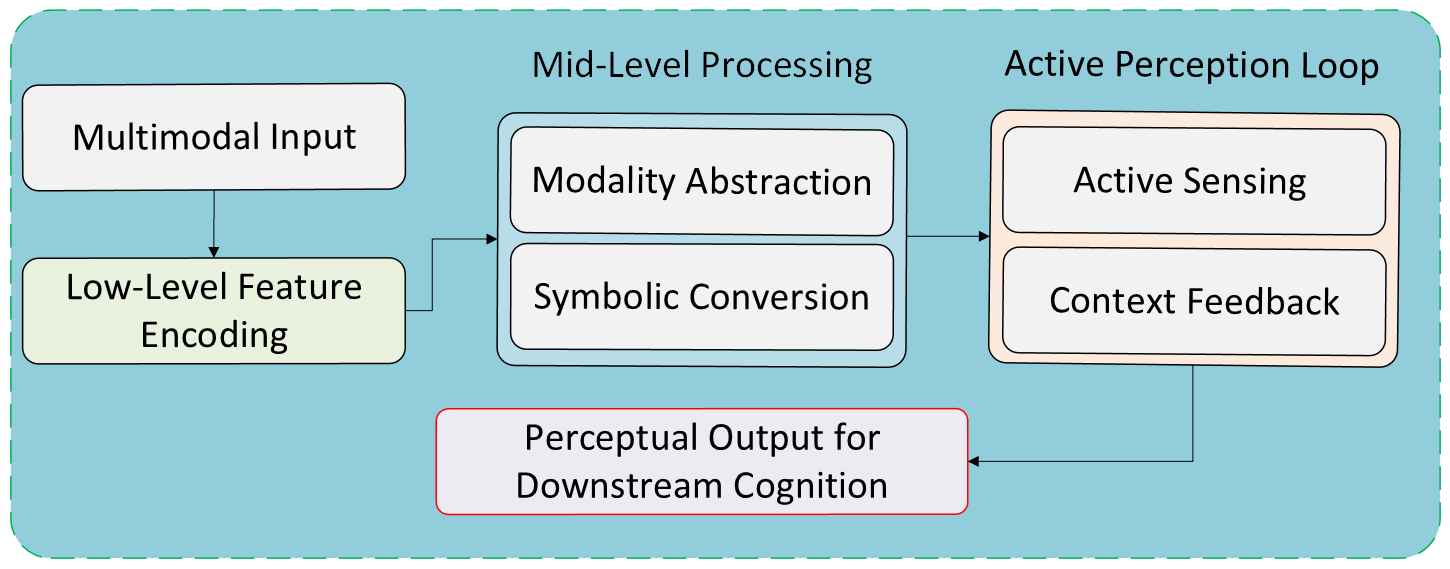

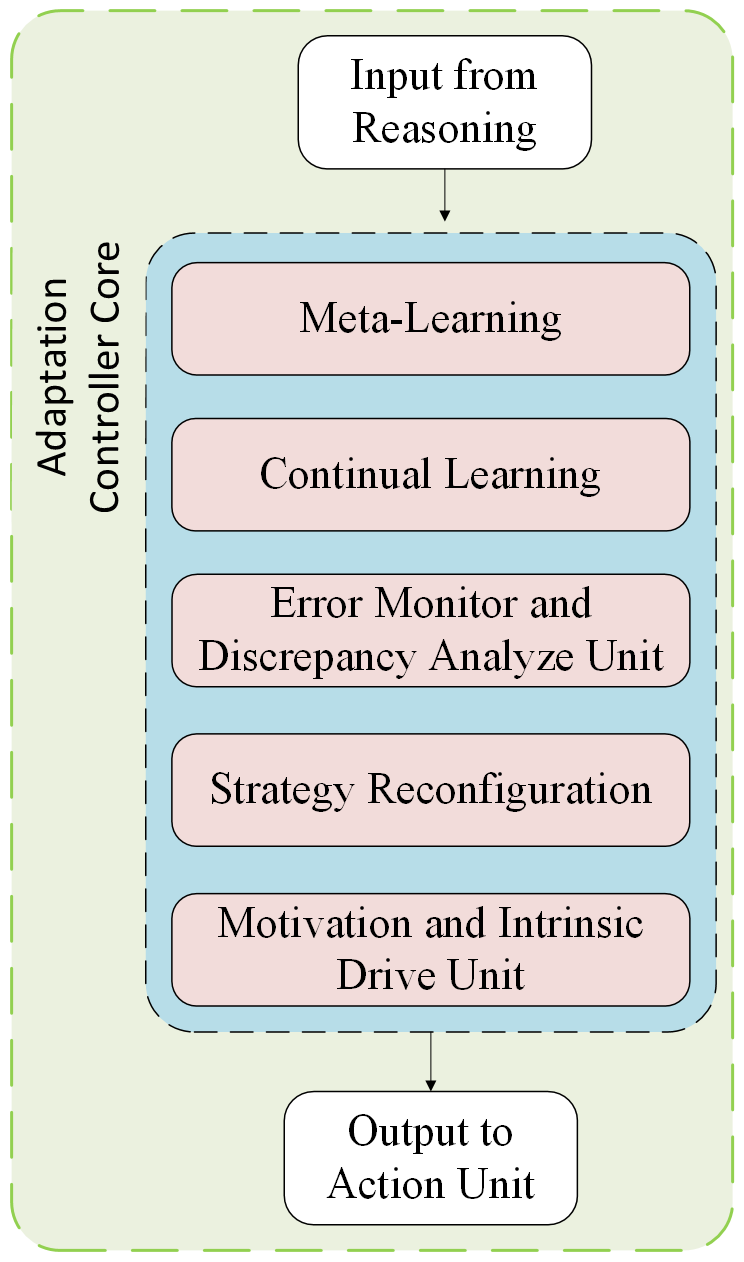

- Perception Unit: Transforms raw sensory data into complex abstractions using encoders that mimic biological sensory integration.

Figure 1: Perception unit. A hierarchical module that transforms raw sensory inputs (visual, auditory, tactile, proprioceptive) into mid- to high-level abstractions via biologically inspired encoders, active perception, and symbolic grounding.

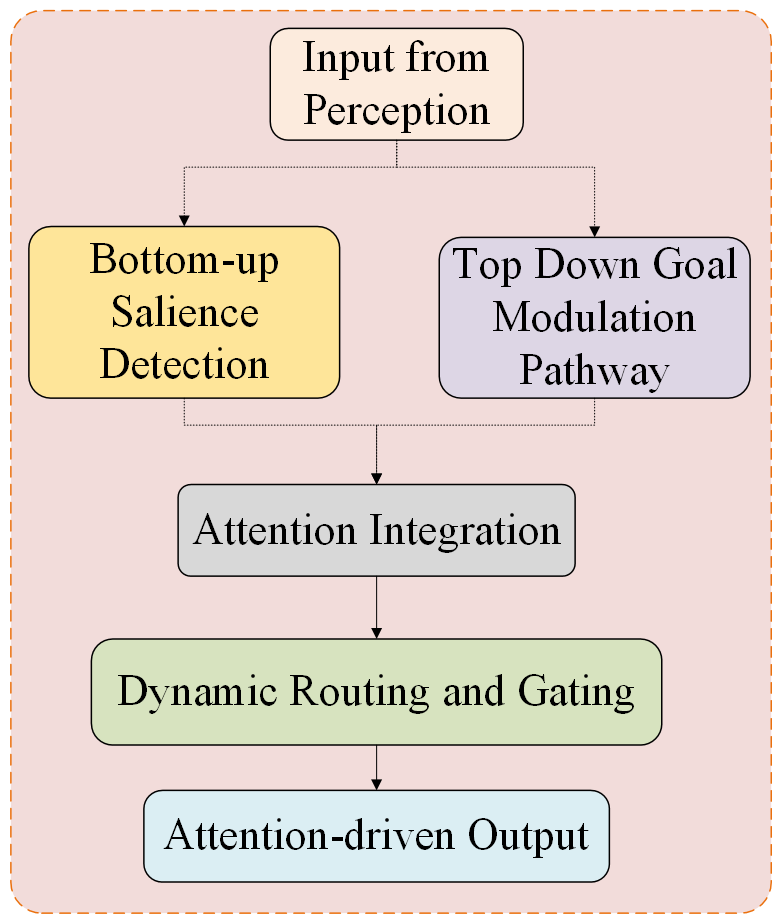

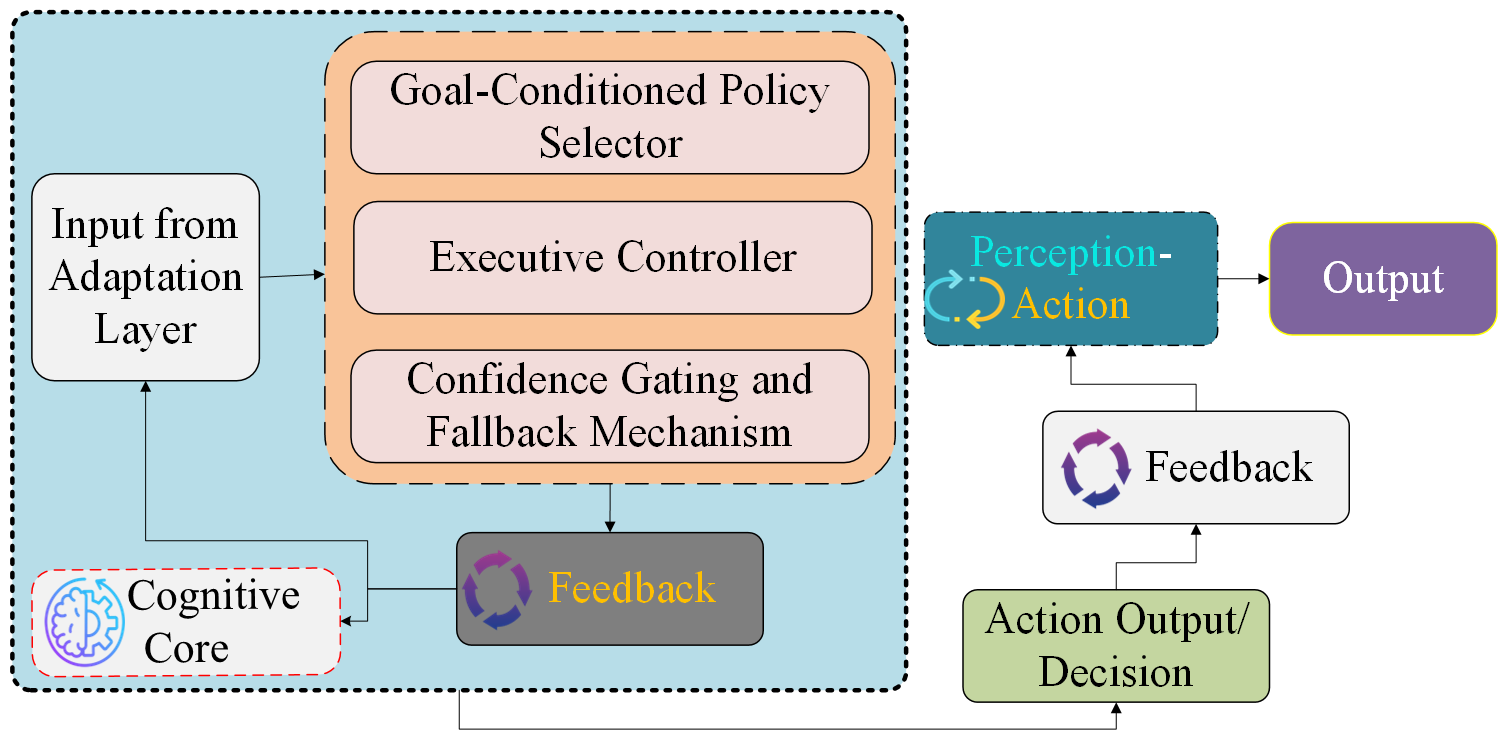

- Attention Mechanism: Controls cognitive focus through a dynamic, context-sensitive process influenced by both salient stimuli and task goals.

Figure 2: Attention mechanism. A bidirectional, context-sensitive attention controller, highlighting how top-down goals and bottom-up salience modulate cognitive resource allocation.

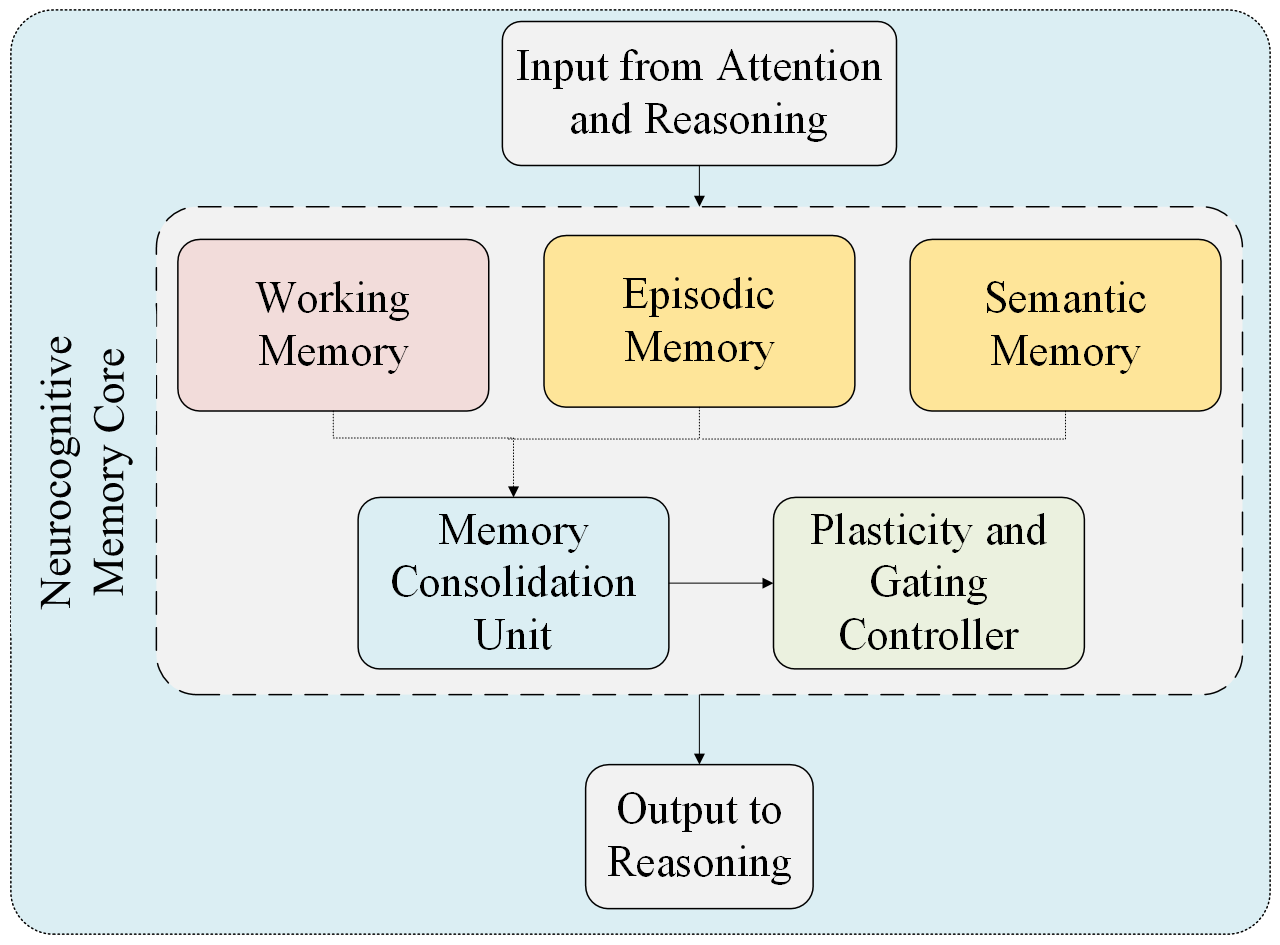

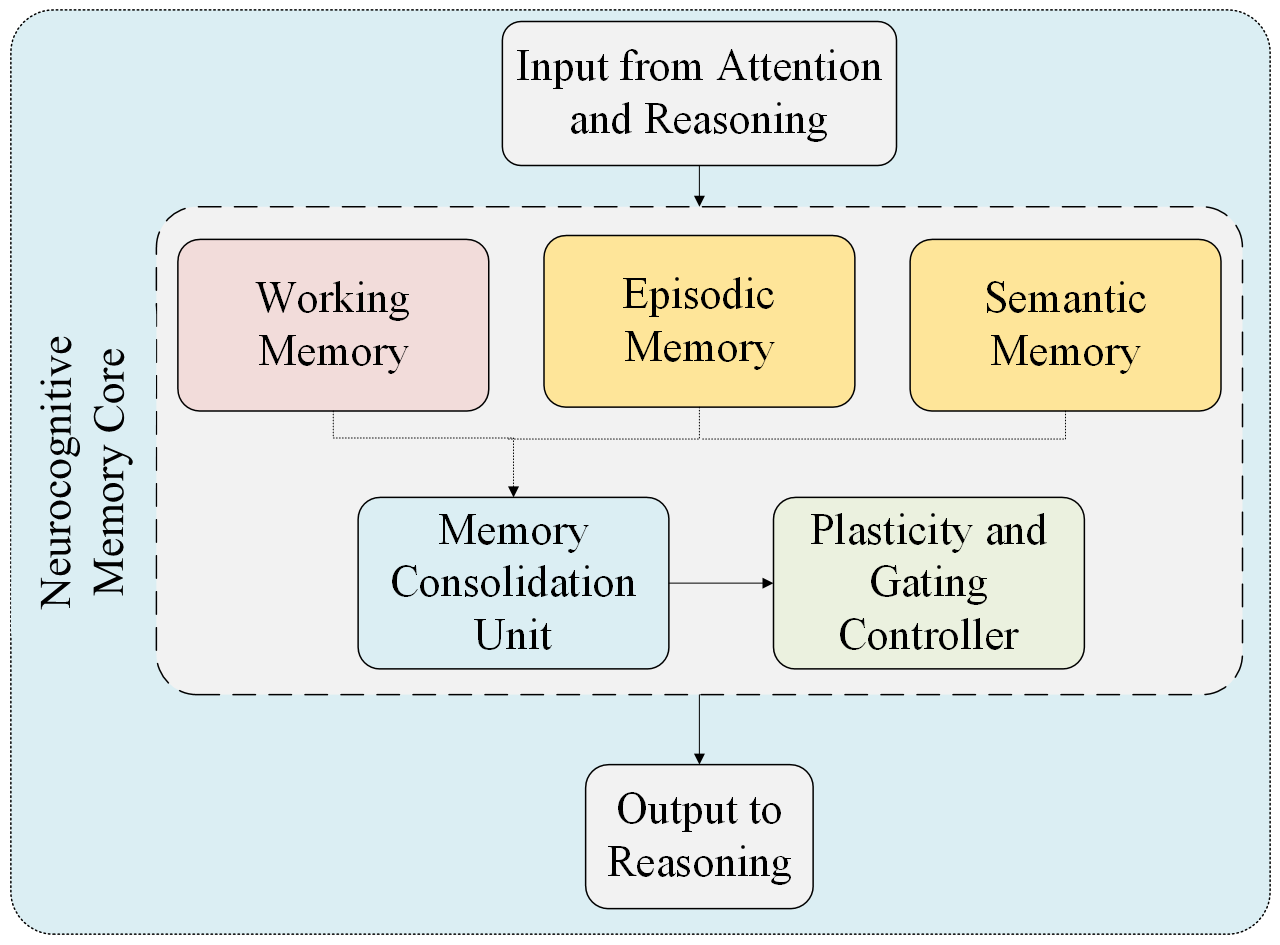

- Memory Module: Encapsulates dual-memory systems that manage episodic and semantic information, supporting long-term knowledge retention and working memory capabilities.

Figure 3: Memory module. A dual-memory system inspired by biological memory structures, supporting working memory, episodic recall, semantic abstraction, and lifelong learning through consolidation and synaptic plasticity.

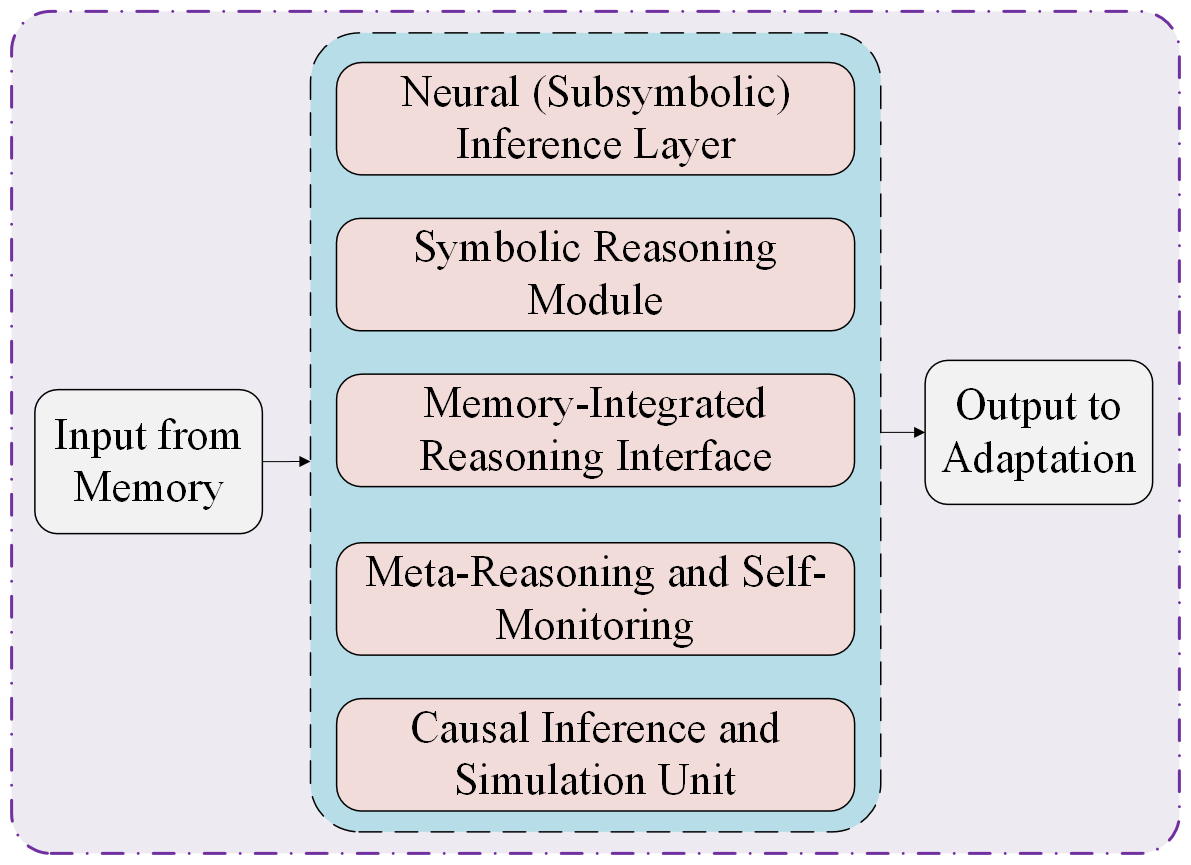

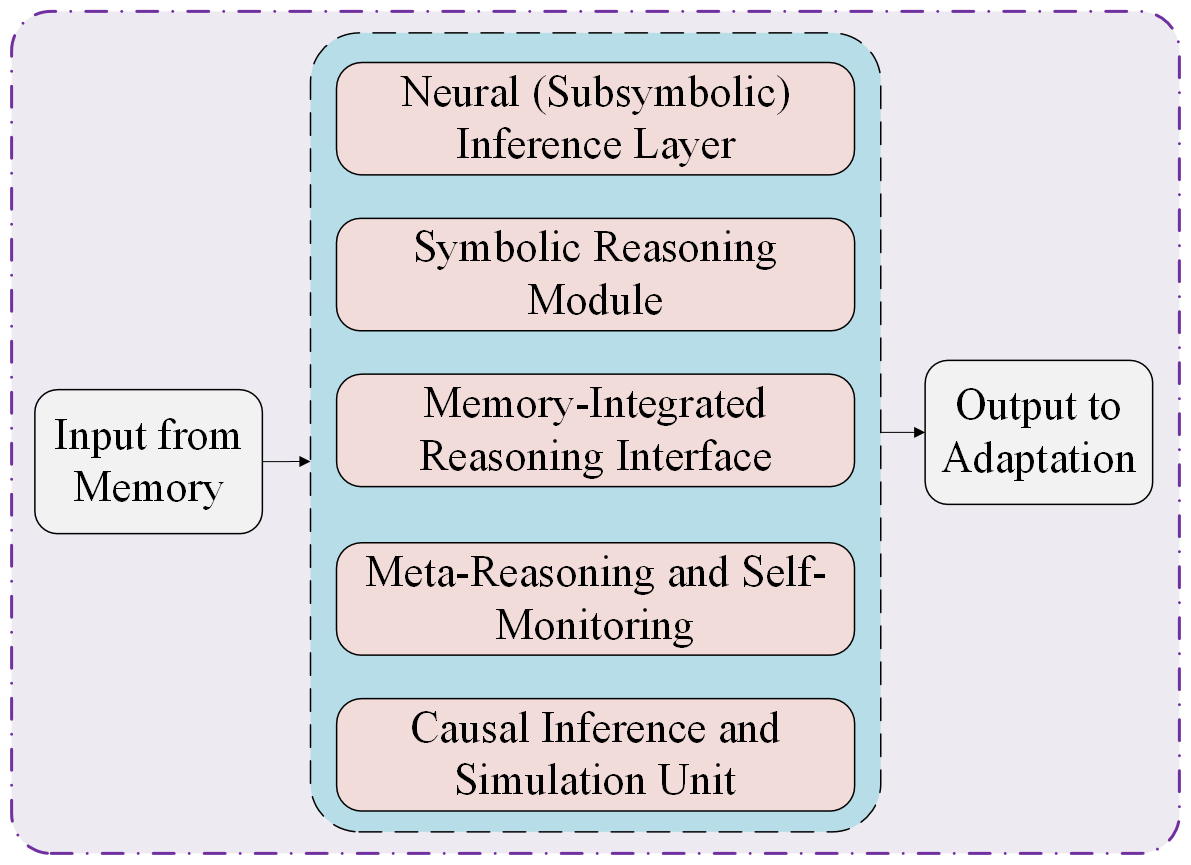

- Reasoning and Inference Engine: Combines sub-symbolic neural inference with symbolic logic to support analogy, causal inference, and multistep planning.

Figure 4: Reasoning and Inference Engine. A biologically grounded reasoning core that integrates a neural inference layer, a symbolic reasoning module, memory-integrated reasoning, meta-reasoning, and causal inference.

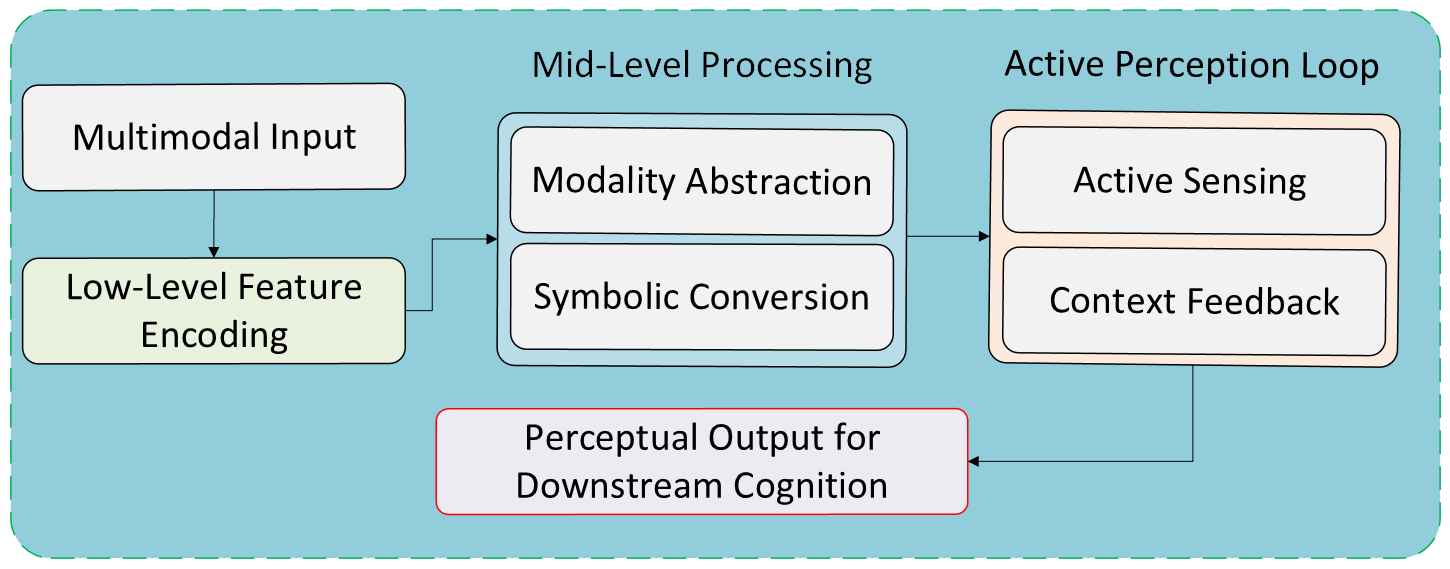

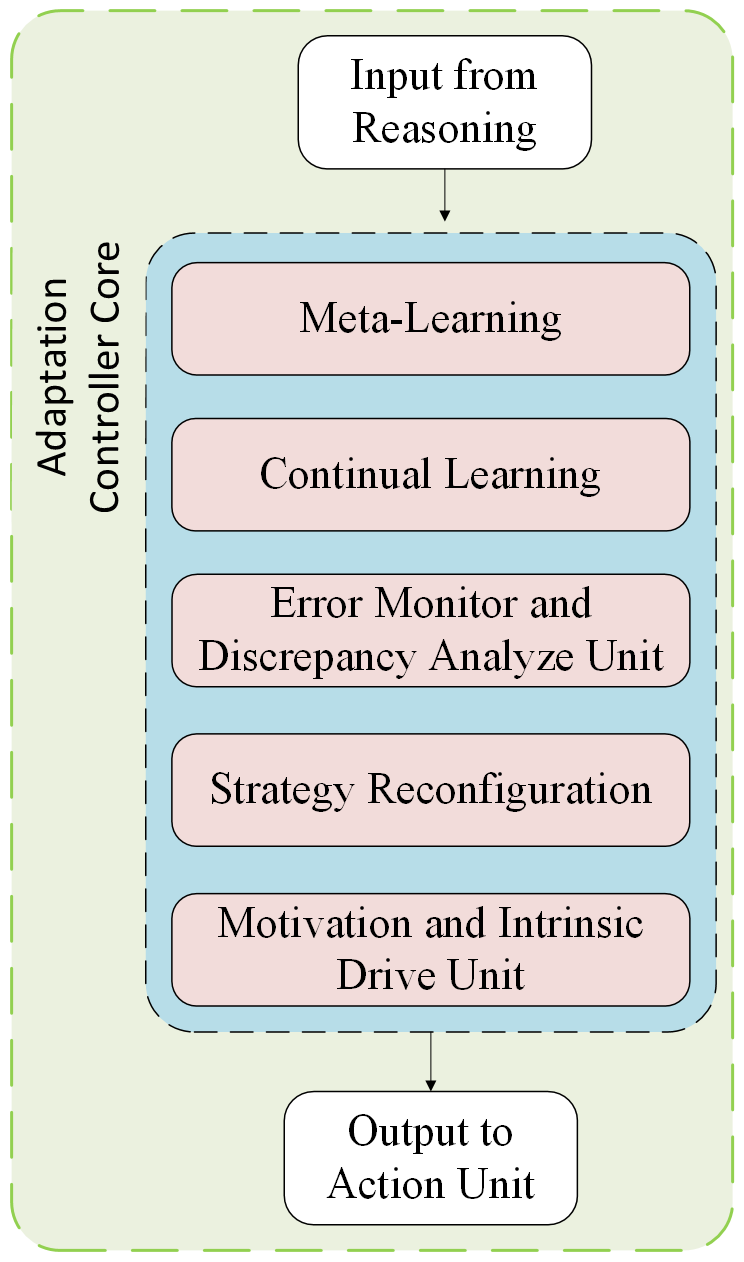

- Adaptation Layer: Facilitates meta-cognitive processes that enable strategic reconfiguration, lifelong learning, and dynamic response calibration as the environment changes.

Figure 5: Adaptation layer. A meta-cognitive controller that enables dynamic reconfiguration, continual learning, and feedback-guided modulation across cognitive modules in response to prediction errors and environmental change.

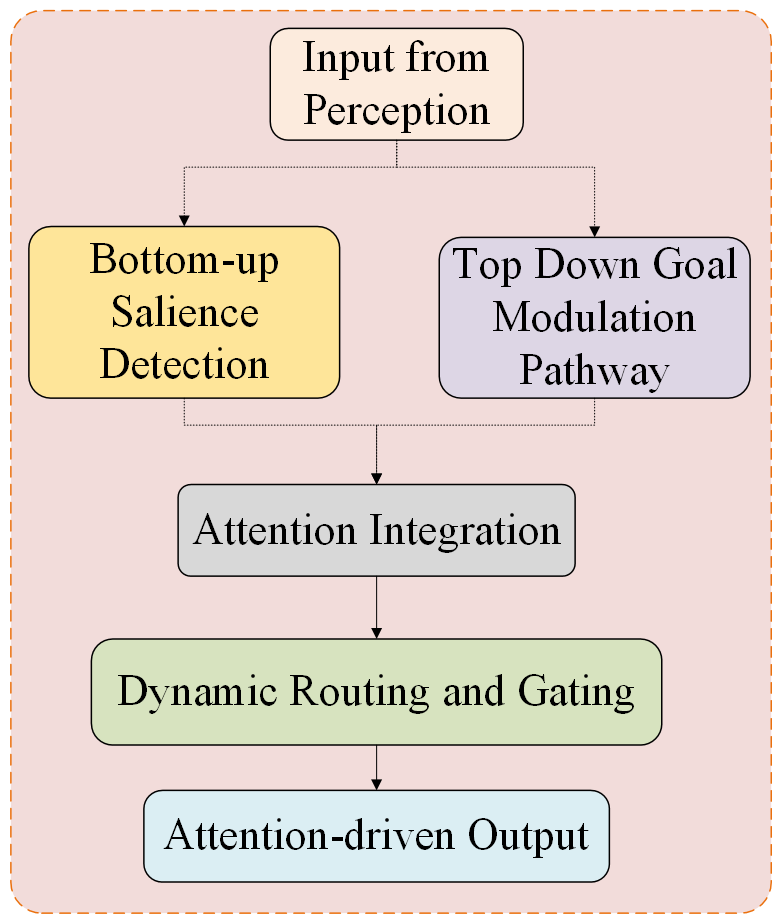

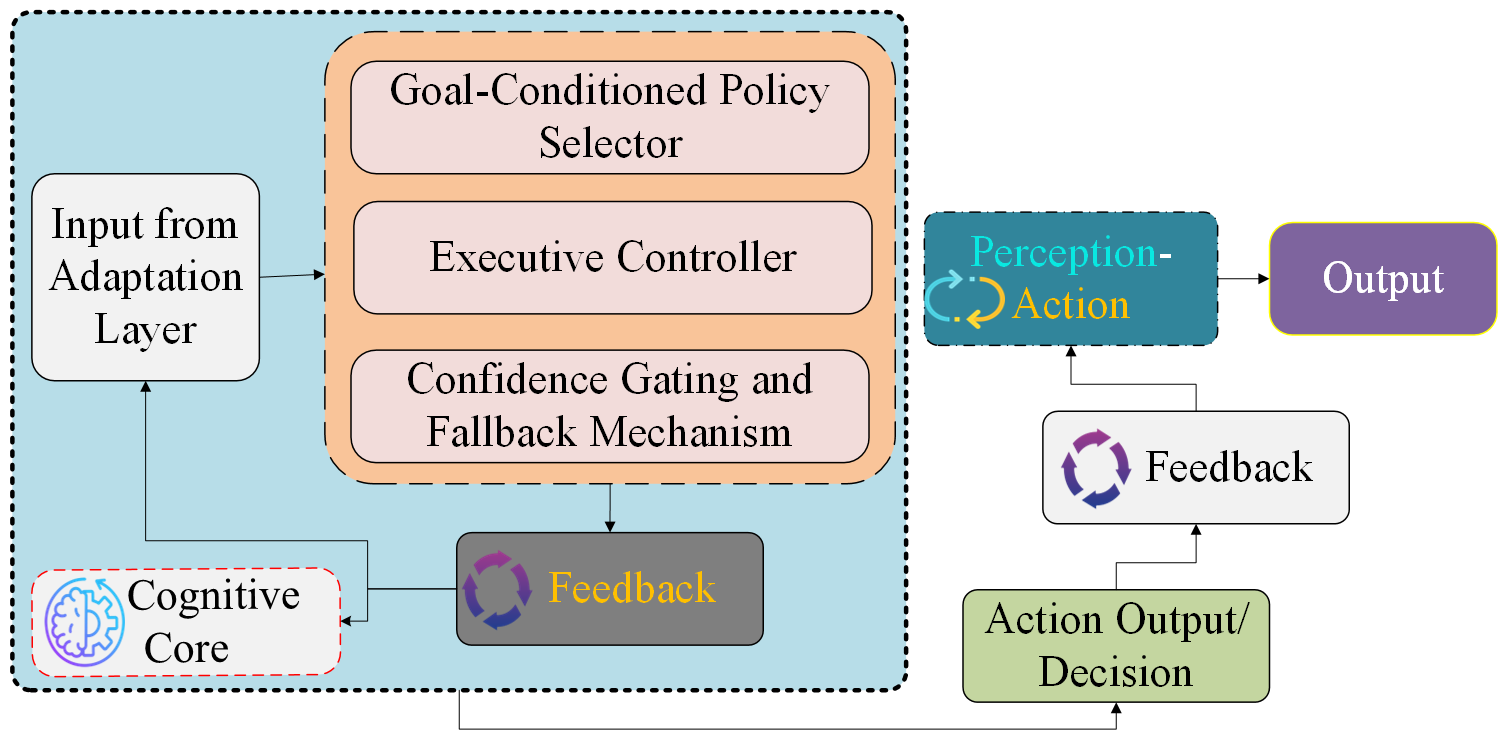

- Action/Output Unit: Converts cognitive decisions into coordinated, goal-directed actions or responses, governed by real-time feedback to ensure coherent interaction with the environment.

Figure 6: Action and Decision Execution Unit. A behavior-generation module that executes goal-conditioned actions, integrates real-time feedback, and supports self-monitoring for continuous learning and robust interaction.
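To make two of the modules above more concrete, the following is a minimal sketch, not the paper's implementation. It assumes that attention can be approximated by a softmax over a weighted blend of bottom-up salience and top-down goal relevance, and that consolidation can be approximated by folding episodic records into per-label running-mean prototypes. The names `attention_weights`, `DualMemory`, and `top_down_gain` are hypothetical and chosen only for illustration.

```python
import math


def attention_weights(salience, goal_relevance, top_down_gain=0.5):
    """Blend bottom-up salience with top-down goal relevance, then softmax.

    top_down_gain is an assumed mixing parameter in [0, 1]:
    0 means purely stimulus-driven, 1 means purely goal-driven.
    """
    if len(salience) != len(goal_relevance):
        raise ValueError("salience and goal_relevance must have equal length")
    scores = [
        (1.0 - top_down_gain) * s + top_down_gain * g
        for s, g in zip(salience, goal_relevance)
    ]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


class DualMemory:
    """Toy dual-memory store: an episodic buffer plus semantic prototypes."""

    def __init__(self):
        self.episodic = []  # list of (label, feature vector) episodes
        self.semantic = {}  # label -> (prototype vector, episode count)

    def store_episode(self, label, features):
        self.episodic.append((label, list(features)))

    def consolidate(self):
        """Fold buffered episodes into running-mean prototypes, then clear."""
        for label, features in self.episodic:
            proto, count = self.semantic.get(label, ([0.0] * len(features), 0))
            count += 1
            proto = [p + (f - p) / count for p, f in zip(proto, features)]
            self.semantic[label] = (proto, count)
        self.episodic.clear()
```

In this sketch, raising `top_down_gain` shifts focus from the most salient input toward the most task-relevant one, while `consolidate` stands in for the transfer from episodic to semantic storage described for the Memory Module.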

Addressing Limitations in Contemporary AI

Generalization and Robustness

A key shortcoming of existing AI models is their inability to generalize beyond the training data distribution, a problem exacerbated by adversarial inputs and the variability of real-world scenarios. NII aims to address this by integrating robust sensory processing and memory encoding mechanisms that support context-aware cognition and enable learning from minimal data.

Adaptive and Interactive Learning

Current AI systems often employ static learning methodologies, struggling with real-time adaptation and dynamic goal shifts. The NII approach leverages the Adaptation Layer to modulate learning strategies in response to continuous feedback, enabling systems to refine their decision-making over successive interactions, akin to human learning processes.
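One simple way to picture feedback-driven modulation is a learner whose step size grows when recent prediction errors are large. The sketch below assumes a scalar delta-rule estimator and an exponential moving average of absolute error as the "surprise" signal; the class `AdaptiveEstimator` and its parameters are hypothetical and are not taken from the paper.

```python
class AdaptiveEstimator:
    """Delta-rule estimator whose learning rate tracks recent surprise."""

    def __init__(self, base_lr=0.05, max_lr=0.5, error_decay=0.9):
        self.estimate = 0.0
        self.base_lr = base_lr          # step size in a stable environment
        self.max_lr = max_lr            # upper bound to avoid overshooting
        self.error_decay = error_decay  # smoothing factor for the error average
        self.avg_error = 0.0            # moving average of |prediction error|

    def learning_rate(self):
        # Larger recent errors raise the step size, up to max_lr.
        return min(self.max_lr, self.base_lr * (1.0 + self.avg_error))

    def update(self, observation):
        """Consume one observation and return the learning rate that was used."""
        error = observation - self.estimate
        self.avg_error = (
            self.error_decay * self.avg_error
            + (1.0 - self.error_decay) * abs(error)
        )
        lr = self.learning_rate()
        self.estimate += lr * error
        return lr


learner = AdaptiveEstimator()
for _ in range(50):
    learner.update(1.0)              # stable environment
lr_stable = learner.learning_rate()
lr_shift = learner.update(10.0)      # abrupt change in the environment
```

Under these assumptions, `lr_shift` exceeds `lr_stable`: the sudden error raises the step size so the estimate tracks the new regime faster, then the rate decays back toward `base_lr` as predictions improve.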

Implementation and Scalability

Realizing the NII framework in practical systems requires overcoming several technological challenges, including the need for neuromorphic computing architectures and advanced multimodal learning datasets. At the same time, the modularity of the design facilitates integration with existing AI and robotic platforms, making the framework a scalable basis for embodied cognitive functions across applications such as healthcare, education, and industrial automation.
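The modularity argument can be illustrated with a shared interface that lets modules be swapped or chained without knowing each other's internals. The following is only a sketch under that assumption: `CognitiveModule`, `ThresholdPerception`, `AlarmAction`, and `run_pipeline` are hypothetical names, and the toy modules stand in for far richer components.

```python
from typing import Any, Dict, List, Protocol


class CognitiveModule(Protocol):
    """Assumed interface: each module reads and extends a shared state dict."""

    name: str

    def step(self, state: Dict[str, Any]) -> Dict[str, Any]:
        ...


class ThresholdPerception:
    """Toy perception stand-in: flags sensor readings above a threshold."""

    name = "perception"

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def step(self, state: Dict[str, Any]) -> Dict[str, Any]:
        alerts = [reading > self.threshold for reading in state["raw"]]
        return {**state, "alerts": alerts}


class AlarmAction:
    """Toy action stand-in: raises an alarm if any reading was flagged."""

    name = "action"

    def step(self, state: Dict[str, Any]) -> Dict[str, Any]:
        action = "raise_alarm" if any(state["alerts"]) else "idle"
        return {**state, "action": action}


def run_pipeline(
    modules: List[CognitiveModule], state: Dict[str, Any]
) -> Dict[str, Any]:
    """Pass the shared state through each module in order."""
    for module in modules:
        state = module.step(state)
    return state


result = run_pipeline(
    [ThresholdPerception(threshold=0.8), AlarmAction()],
    {"raw": [0.2, 0.9]},
)
```

Because every module only depends on the `step` contract, an existing platform component could in principle be wrapped in the same interface and inserted into the list.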

Real-World Applications

The paper outlines prospective deployments of NII in domains such as cognitive monitoring for aging populations, safety in industrial environments, and adaptive personalized learning systems. These scenarios illustrate how cognitive emulation could serve diverse societal needs with improved reliability and interpretability.

Conclusion

The proposed Neurocognitive-Inspired Intelligence framework represents a significant shift toward biologically plausible AI systems, paving the way for more realistic, adaptive, and transparent interactions. It emphasizes a balance between structural and functional mimicry of human cognition, so that future AI systems can not only process and react to input but also understand and adapt in ways similar to human intelligence. By pursuing a biologically grounded direction, the framework offers a roadmap for developing comprehensive artificial cognition that aligns closely with the nuanced capabilities observed in natural intelligence.