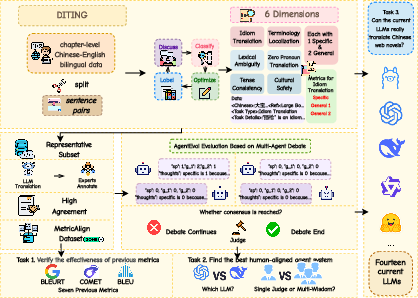

- The paper presents a novel multi-agent framework, DITING, that assesses six critical dimensions in web novel translation.

- It employs AgentEval to simulate expert debates, achieving a stronger correlation with human judgments than conventional metrics like BLEU.

- Evaluation across fourteen models revealed that Chinese-trained models, especially DeepSeek-V3, deliver superior narrative coherence and cultural fidelity.

"DITING: A Multi-Agent Evaluation Framework for Benchmarking Web Novel Translation"

Introduction

The evolution of large language models (LLMs) has significantly improved machine translation (MT), yet translating web novels remains a challenge. Web novels are rich in nuanced language and cultural expressions, so they require more than a syntactically correct rendering. Standard metrics like BLEU, which score surface overlap with a reference translation, often miss these subtleties, and this gap motivates richer evaluation frameworks. The paper presents DITING, a framework that evaluates web novel translations across multiple dimensions, with a focus on narrative coherence and cultural fidelity.

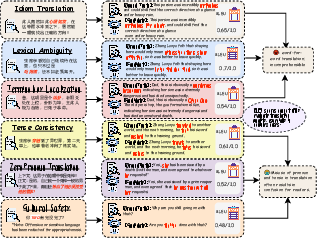

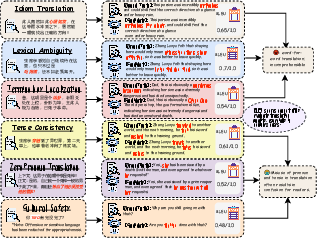

Figure 1: Examples of ground truth and low-quality translations across six dimensions, showing that even translations with high BLEU scores can contain errors causing reader confusion and misinterpretation.
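To make the point in Figure 1 concrete, here is a minimal sketch of clipped n-gram precision, the overlap statistic that BLEU is built on. It is not full BLEU (there is no brevity penalty and no geometric mean over n-gram orders), and the two sentences are hypothetical illustrations, not examples from the paper.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n):
    """Fraction of candidate n-grams that also occur in the reference.

    Each candidate n-gram is credited at most as many times as it
    appears in the reference (the "clipping" used by BLEU).
    """
    cand = Counter(ngrams(candidate.lower().split(), n))
    ref = Counter(ngrams(reference.lower().split(), n))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


# Hypothetical sentences: the candidate gets the subject's gender wrong.
reference = "he said he would return before the winter"
candidate = "she said he would return before the winter"

unigram = clipped_precision(candidate, reference, 1)
bigram = clipped_precision(candidate, reference, 2)
print(f"unigram precision: {unigram:.3f}, bigram precision: {bigram:.3f}")
```

The candidate changes who is speaking, an error a reader would notice immediately, yet it still matches 7 of 8 unigrams and 6 of 7 bigrams. Overlap-based scores cannot tell which mismatched word matters.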

The DITING Framework

DITING addresses six critical dimensions in web novel translation:

- Idiom Translation: Evaluating translations for figurative and emotional accuracy.

- Lexical Ambiguity: Ensuring context-specific disambiguation of polysemous terms.

- Terminology Localization: Adapting culturally specific terms appropriately in translations.

- Tense Consistency: Maintaining temporal coherence across narrative structures.

- Zero-Pronoun Resolution: Explicitly restoring omitted pronouns for clarity.

- Cultural Safety: Aligning translations with ethical and cultural norms.

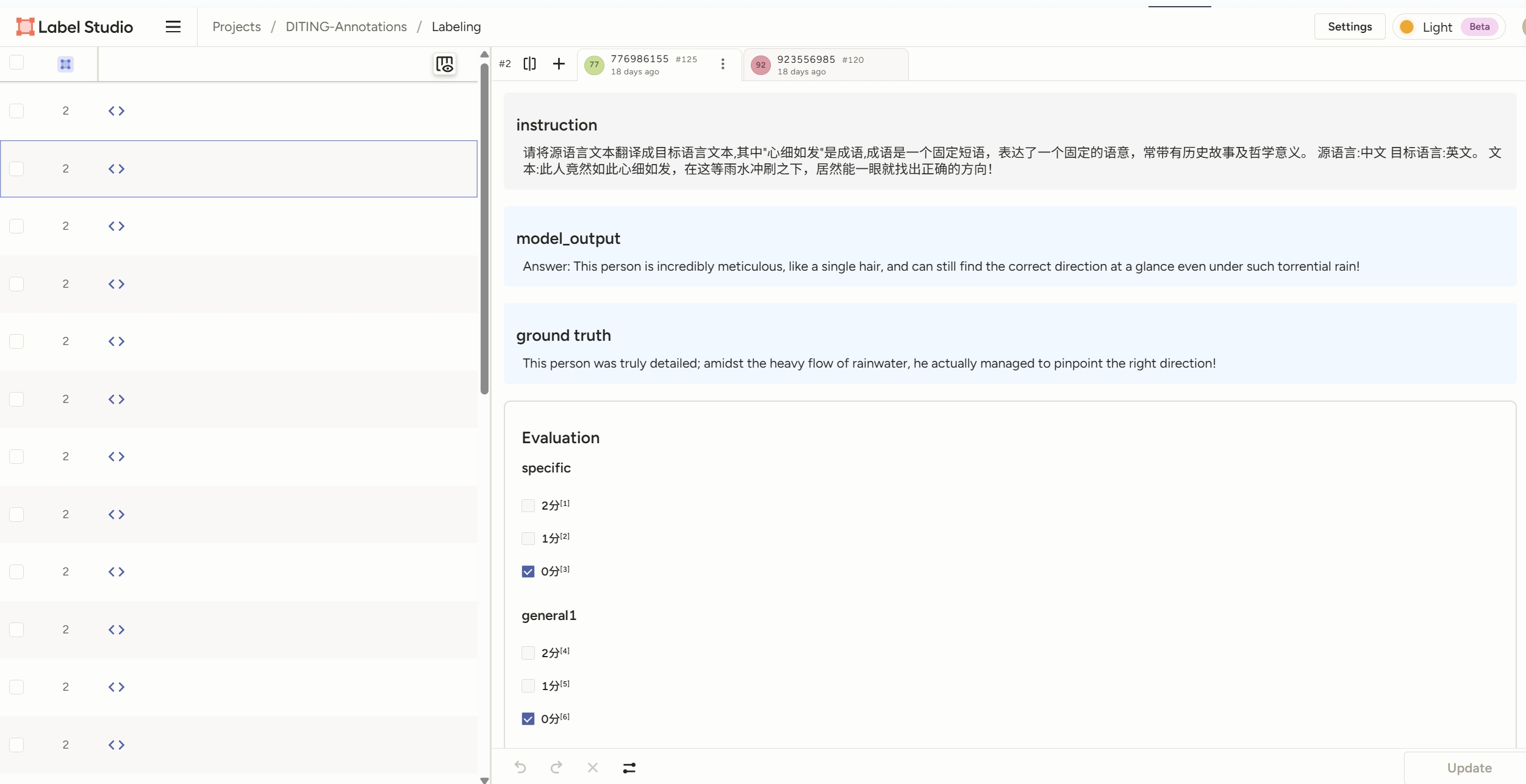

This framework is supported by an annotated corpus of over 18,000 Chinese-English sentence pairs, allowing comprehensive assessment across these dimensions.
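One way to picture a corpus organized by these six dimensions is sketched below. The class names, field names, and the numeric score are assumptions made for illustration. This summary does not describe the actual schema or scoring scale used in DITING.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class Dimension(Enum):
    """The six dimensions listed above."""
    IDIOM_TRANSLATION = "Idiom Translation"
    LEXICAL_AMBIGUITY = "Lexical Ambiguity"
    TERMINOLOGY_LOCALIZATION = "Terminology Localization"
    TENSE_CONSISTENCY = "Tense Consistency"
    ZERO_PRONOUN_RESOLUTION = "Zero-Pronoun Resolution"
    CULTURAL_SAFETY = "Cultural Safety"


@dataclass(frozen=True)
class ScoredPair:
    """A Chinese-English sentence pair judged on one dimension (hypothetical schema)."""
    source: str
    translation: str
    dimension: Dimension
    score: float  # assumed numeric quality score; the real scale is not given here


def mean_score_by_dimension(pairs):
    """Group scored pairs by dimension and average the scores in each group."""
    buckets = defaultdict(list)
    for pair in pairs:
        buckets[pair.dimension].append(pair.score)
    return {dimension: mean(scores) for dimension, scores in buckets.items()}
```

Reporting one average per dimension, instead of a single overall number, keeps a model's weakness in one area (say, zero-pronoun resolution) from being hidden by strength in another.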

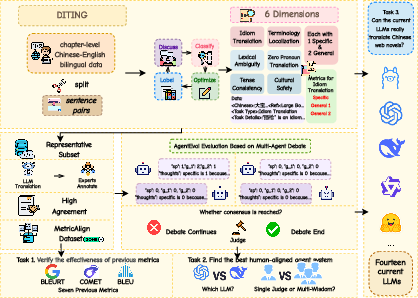

Figure 2: Overview of our work.

AgentEval: A Multi-Agent Evaluation Approach

AgentEval is a key component of DITING, providing a novel evaluation mechanism by simulating expert judgments through multi-agent interactions. Each agent independently evaluates translations and engages in structured debate to reach a consensus, which closely mirrors the human expert negotiation process. This reasoning-driven assessment goes beyond lexical similarity, achieving superior alignment with expert evaluations compared to traditional metrics.
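The control flow of "score independently, then debate until consensus" can be sketched as follows. This is only a toy model under stated assumptions: in AgentEval the agents are LLMs that exchange reasoning, whereas `StubAgent` here is a hypothetical stand-in that simply moves its score toward its peers. The stopping rule, the round limit, and the use of the median as the consensus score are also assumptions, not details from the paper.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class StubAgent:
    """Hypothetical stand-in for an LLM judge."""
    initial_score: float
    openness: float  # 0 = never changes its mind, 1 = adopts the peer average

    def first_opinion(self, source, translation):
        # A real agent would read the texts; the stub returns a fixed score.
        return self.initial_score

    def revise(self, own_score, peer_scores):
        # Move part of the way toward the average of the other agents.
        return own_score + self.openness * (mean(peer_scores) - own_score)


def debate(agents, source, translation, max_rounds=3, tolerance=0.25):
    """Run independent scoring followed by rounds of revision.

    Returns (consensus_score, rounds_used). Rounds continue while the gap
    between the highest and lowest score exceeds `tolerance` and the
    round limit has not been reached.
    """
    scores = [agent.first_opinion(source, translation) for agent in agents]
    rounds_used = 0
    while max(scores) - min(scores) > tolerance and rounds_used < max_rounds:
        # Every agent revises against the scores from the previous round.
        scores = [
            agent.revise(score, scores[:i] + scores[i + 1:])
            for i, (agent, score) in enumerate(zip(agents, scores))
        ]
        rounds_used += 1
    return median(scores), rounds_used


judges = [StubAgent(5.0, 0.5), StubAgent(3.0, 0.5), StubAgent(1.0, 0.5)]
consensus, rounds = debate(judges, "source text", "candidate translation")
print(consensus, rounds)
```

Capping the number of rounds keeps evaluation cost bounded even when agents never fully agree, and taking the median makes the final score less sensitive to a single outlying judge.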

To facilitate metric comparisons, the authors developed MetricAlign, a dataset of 300 sentence pairs with detailed annotations to assess metric accuracy. AgentEval showed the strongest correlation with human judgments, outperforming other metrics.
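A comparison of this kind typically comes down to a correlation coefficient between each metric's scores and the human scores on the same sentence pairs. The sketch below uses Spearman rank correlation as one common choice. This summary does not state which coefficient the authors report, so treat that choice, and the function names, as assumptions.

```python
from math import sqrt


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def spearman(metric_scores, human_scores):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    if len(metric_scores) != len(human_scores):
        raise ValueError("score lists must have the same length")
    return pearson(average_ranks(metric_scores), average_ranks(human_scores))
```

Calling `spearman(metric_scores, human_scores)` once per metric on the same annotated pairs gives one number per metric. The metric with the highest value ranks translations most like the human annotators do.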

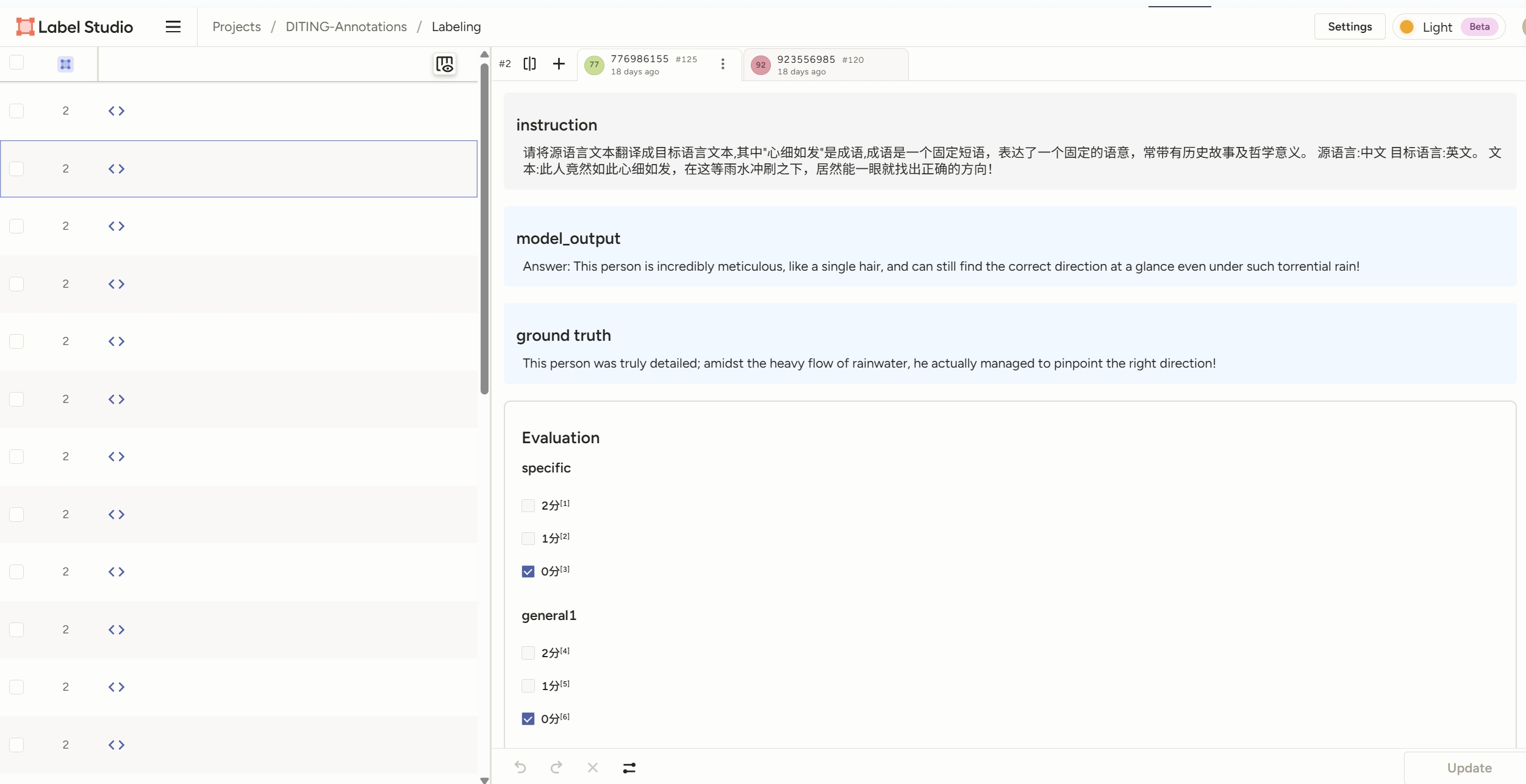

Figure 3: The Label Studio interface of the DITING annotation process.

Evaluation of Models

The framework was used to evaluate fourteen MT models. Chinese-trained models generally outperformed their larger foreign-trained counterparts on web novel translation, and DeepSeek-V3 produced the most accurate translations in terms of both fidelity and coherence. These results point to the importance of the training environment and resource alignment, not just model size.

Implications and Future Work

The introduction of DITING and AgentEval highlights a shift towards more nuanced translation evaluation, recognizing the inadequacy of conventional metrics for web novels. The robust performance of AgentEval suggests promising applications for MT research, especially in genres where cultural and narrative fidelity is pivotal.

Looking forward, the framework's adaptability suggests potential expansions into other complex literary genres. Further, incorporating document-level narrative evaluation could enhance coherence assessments across broader contexts, addressing current limitations such as the focus on sentence-level evaluation.

Conclusion

The DITING framework and the accompanying AgentEval method mark significant strides in adapting evaluation metrics to the complexities of web novel translation. By providing a robust, multi-dimensional approach, they set a new standard for translation quality assessment, particularly in areas that demand nuanced cultural and contextual understanding. This research lays the groundwork for future work on translation models capable of handling intricate literary constructs.