ReSplat: Learning Recurrent Gaussian Splats

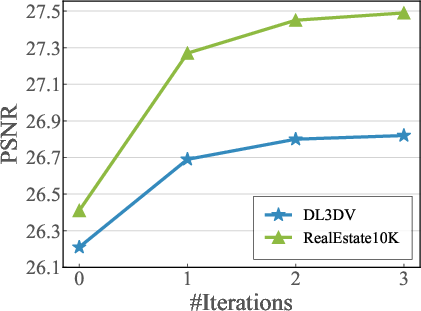

Abstract: While feed-forward Gaussian splatting models provide computational efficiency and effectively handle sparse input settings, their performance is fundamentally limited by the reliance on a single forward pass during inference. We propose ReSplat, a feed-forward recurrent Gaussian splatting model that iteratively refines 3D Gaussians without explicitly computing gradients. Our key insight is that the Gaussian splatting rendering error serves as a rich feedback signal, guiding the recurrent network to learn effective Gaussian updates. This feedback signal naturally adapts to unseen data distributions at test time, enabling robust generalization. To initialize the recurrent process, we introduce a compact reconstruction model that operates in a $16 \times$ subsampled space, producing $16 \times$ fewer Gaussians than previous per-pixel Gaussian models. This substantially reduces computational overhead and allows for efficient Gaussian updates. Extensive experiments across varying of input views (2, 8, 16), resolutions ($256 \times 256$ to $540 \times 960$), and datasets (DL3DV and RealEstate10K) demonstrate that our method achieves state-of-the-art performance while significantly reducing the number of Gaussians and improving the rendering speed. Our project page is at https://haofeixu.github.io/resplat/.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Sure, I'll explain the academic paper in simple language for a 14-year-old reader.

Overview

The paper introduces a computer program called ReSplat. It's designed to create realistic 3D pictures from regular photos and does this much faster than older methods.

Key Objectives

The main question the researchers are looking at is: How can we make 3D pictures quickly and accurately using fewer resources? The team wants to improve how computers turn flat photos into 3D scenes.

Research Methods

They use a clever trick called Gaussian splatting. Imagine trying to recreate an entire video game world from photographs of it. The Gaussian splats are like little blobs or stamps that help build the 3D world from those photos. At first, the program makes a rough guess of where these splats go, and then it repeatedly improves that guess to make the scene look better.

Here's a simple analogy: Imagine drawing a picture by connecting dots. First, you draw the dots quickly, maybe missing some details, but then you go back over them, refining the dots to make the image clearer and more detailed.

Main Findings

ReSplat is super fast—100 times faster than some older methods. It can produce 3D images that are just as good, if not better, than those created by older techniques. Plus, it uses 16 times fewer "dots" (Gaussians), making it a smarter and speedier process.

Why is this important? Faster and more efficient methods mean technology can develop quicker, allowing for more advanced video games, virtual reality experiences, or even improved navigation systems.

Implications

With ReSplat, we can have more lifelike and immersive 3D environments in games and apps. It allows companies and developers to save time and computing power, making technology accessible and enjoyable for more people.

I hope this explanation gives you a clear understanding of the academic paper!

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored, framed to guide future research.

- Scalability of point-based attention: The kNN attention in 3D (and global attention over error features) becomes costly when the number of Gaussians exceeds ~500K; the paper does not quantify memory/latency scaling at higher resolutions, more views, or 4K images, nor propose an asymptotically efficient alternative with maintained quality.

- Fixed Gaussian cardinality during recurrence: The update process keeps the number of Gaussians constant, which likely contributes to saturation after three iterations. There is no mechanism for adaptive birth/merge/split/pruning of Gaussians during refinement to capture thin structures, fill holes, or reduce redundancy.

- Convergence behavior and stability: No theoretical or empirical analysis of convergence (e.g., monotonic error decrease, stability under many iterations, or conditions for divergence) is provided; it remains unclear how the recurrent updates behave beyond the tested 3 steps or under stronger domain shifts.

- Error signal design: The rendering error is computed in fixed, ImageNet-pretrained ResNet-18 features; the choice is not compared against contemporary self-supervised/robust features (e.g., DINOv2, CLIP, LIGHT), multi-scale contrastive features, or task-adaptive feature banks, nor is sensitivity to illumination change, exposure differences, or view-dependent effects assessed.

- Global attention over error features: The method applies global attention to propagate error across all pixels at 1/16 spatial scale, but its quadratic complexity and memory footprint at high resolutions are not benchmarked; alternatives (sparse/global-local hybrids, token pooling, low-rank attention) remain unexplored.

- Pose noise and calibration robustness: The approach assumes accurate intrinsics/extrinsics; there is no study of how pose errors or rolling-shutter distortions impact the recurrent updates, nor any integration with pose refinement or joint SLAM settings.

- Photometric/appearance variation: The method relies on photometric consistency of input views for the error signal; robustness to specularities, non-Lambertian surfaces, lighting changes, motion blur, and auto-exposure/white-balance shifts is not evaluated.

- Geometry accuracy and completeness: While view synthesis metrics improve, there is no direct evaluation of geometric fidelity (e.g., depth/normal accuracy, completeness, thin structure recovery), nor analysis of how the 16× subsampling impacts geometric detail and topology.

- Covariance and SH updates: It is not detailed how the model enforces positive semidefinite covariance updates or prevents degenerate Gaussians; the sensitivity of view synthesis to erroneous covariance/orientation and SH coefficients is not analyzed.

- Initialization dependence: The recurrent updates are trained with the initialization frozen; it is unclear whether joint end-to-end training, better initializers, or uncertainty-aware initialization would reduce the number of recurrent steps or improve OOD robustness.

- Adaptivity of iteration count: The number of iterations is fixed at inference (typically T≤3) without a stopping criterion; no mechanism is provided to adapt T per scene/region based on uncertainty, residual error, or diminishing returns.

- Test-time compute scaling: While test-time iteration improves OOD generalization, the trade-off between added iterations and quality is only shown up to 3 steps; it is unclear how far compute can be scaled (more steps, larger models) before reaching diminishing returns under fixed Gaussian count.

- Dynamic Gaussian layout: The paper compresses to 16× by using 1/4-resolution depth maps; alternatives such as content-adaptive subsampling (edge-aware, uncertainty-driven), spatially varying Gaussian densities, or hierarchical/multi-scale Gaussian layouts are not explored.

- Integration with explicit geometry priors: The recurrent updates do not leverage multi-view geometric constraints (e.g., epipolar consistency, normal/planarity priors, signed distance regularization); potential gains from combining the error signal with geometry-aware objectives remain open.

- Scene scale and unbounded scenes: The evaluation focuses on DL3DV and RealEstate10K; generalization to unbounded outdoor scenes, 360° panoramas, and very large-scale environments (e.g., city blocks) is not reported.

- Handling occlusions and visibility: The error propagation assumes error signals correlate with underlying Gaussians, but there is no explicit modeling of occlusion reasoning or visibility-aware error attribution, which may hamper updates in heavily occluded regions.

- Comparison to gradient-based refinement: Although the method is gradient-free, it does not compare hybrid strategies that combine learned updates with explicit gradients (or implicit differentiation) to assess whether gradient signals help in difficult cases.

- Compute and energy cost of training: Training requires substantial compute (e.g., 16 GH200 GPUs across multiple stages); there is no analysis of training data/compute efficiency, or whether smaller backbones or distillation could reach similar performance.

- Memory/runtime profiling of each module: Detailed breakdown of time/memory for depth prediction, point construction, kNN search, attention blocks, and rendering is absent; this limits targeted system-level optimization.

- Failure modes: The paper lacks qualitative/quantitative failure case analysis (e.g., thin structures, specular surfaces, reflective/glass regions, repeated textures), hindering targeted method improvements.

- Use of only input-view feedback at test time: The recurrence uses input-view error; it remains unexplored whether self-supervised test-time adaptation using synthesized novel views (e.g., cycle-consistency, cross-view consistency) could further improve performance without ground-truth targets.

- Robustness to different numbers of input views: While results are shown for 2, 8, 16 views, the sensitivity curve across a broader range (very sparse to dense) and the interaction with compression factors are not systematically analyzed.

- Parameter budget and model size: The best model uses a 223M-parameter backbone; the performance/efficiency frontier across model scales (small/medium/large) and the portability to resource-constrained devices are not investigated.

- Security and adversarial robustness: There is no assessment of vulnerability to adversarial perturbations or extreme noise in the input images, which can be critical for real-world deployment.

Glossary

The following alphabetical list highlights advanced domain-specific terms from the paper, each with a brief definition and a verbatim usage example.

- AdamW: An optimizer that decouples weight decay from gradient updates to improve training stability. "We optimize our model with AdamW~\citep{loshchilov2017decoupled} optimizer with cosine learning rate schedule."

- Bilinear resizing: A resampling method that uses bilinear interpolation to change image or feature map resolution. "and bilinearly resize the three-scale features to the same $1/4$ resolution"

- Cosine learning rate schedule: A learning rate annealing scheme that follows a cosine curve to gradually reduce the learning rate. "We optimize our model with AdamW~\citep{loshchilov2017decoupled} optimizer with cosine learning rate schedule."

- Covariance matrix: In Gaussian primitives, the covariance encodes the spread and orientation of the 3D Gaussian. "the 3D Gaussian's position, opacity, covariance, and spherical harmonics"

- DL3DV: A large-scale dataset for view synthesis and 3D reconstruction benchmarks. "on DL3DV~\citep{ling2023dl3dv} dataset; see \cref{tab:highres_8view_dl3dv} for detailed metrics."

- Edge-aware depth smoothness: A regularization that encourages depth gradients to align with image gradients, preserving edges. "an edge-aware depth smoothness loss $\ell_{\mathrm{depth\_smooth}$ on the predicted depth maps of the input views"

- Extrinsic matrix: Camera pose parameters (rotation and translation) that transform points from world to camera coordinates. "extrinsic () matrices"

- Feed-forward: Inference performed by direct network evaluation without per-scene iterative optimization. "We propose ReSplat, a feed-forward recurrent network that iteratively refines 3D Gaussian splats"

- Flash Attention 3: A highly optimized attention implementation for efficient transformer computations. "We implement our method in PyTorch and use Flash Attention 3~\citep{shah2024flashattention} for efficient attention computations."

- Gaussian pruning: Removing Gaussian primitives based on criteria (e.g., opacity) to reduce model size. "Long-LRM uses Gaussian pruning based on the opacity values during training and evaluation"

- GH200 GPU: NVIDIA Grace Hopper superchip GPU used for high-performance training and inference. "Time is measured on a single GH200 GPU."

- Global attention: Attention mechanism that aggregates information across all tokens/features globally. "A global attention is next applied on the rendering error to propagate the rendering errors to the 3D Gaussians."

- Gradient descent: An iterative optimization algorithm for minimizing objectives via gradient steps. "solutions are found by iterative gradient decent~\citep{andrychowicz2016learning,lucas1981iterative,sun2010secrets}."

- gsplat: A library/backend for 3D Gaussian splatting rendering. "our Gaussian splatting renderer is based on gsplat~\citep{ye2025gsplat}'s Mip-Splatting~\citep{yu2024mipsplatting} implementation."

- Hidden state: The internal recurrent feature associated with each Gaussian, updated across iterations. "We use to denote the initial hidden state of the -th Gaussian"

- ImageNet: A large-scale dataset used to pretrain feature extractors like ResNet. "the ImageNet~\citep{deng2009imagenet} pre-trained ResNet-18~\citep{he2016deep}"

- Intrinsic matrix: Camera calibration matrix that maps 3D camera coordinates to image pixels. "intrinsic ()"

- kNN attention: Attention computed over k nearest neighbors in point-cloud space to model local geometry. "A kNN attention-based update module next takes as input the concatenation of current Gaussian parameters , the hidden state , and the rendering error ${\bm e}t_j"

- LPIPS: Learned Perceptual Image Patch Similarity; a perceptual metric for image similarity. "Computing the error in ResNet feature space is beneficial for the LPIPS metric"

- Mip-Splatting: A splatting technique that incorporates mipmapping to reduce aliasing across scales. "gsplat~\citep{ye2025gsplat}'s Mip-Splatting~\citep{yu2024mipsplatting} implementation."

- Novel view synthesis: Rendering views of a scene from camera poses not present in the input. "enabling fast and high-quality novel view synthesis."

- Opacity: The alpha parameter of a Gaussian controlling its transparency contribution in rendering. "Long-LRM uses Gaussian pruning based on the opacity values during training and evaluation"

- Out-of-distribution (OOD) generalization: Robust performance on datasets differing from the training distribution. "usually achieves superior results compared to single-step regression methods, especially for out-of-distribution generalization."

- Per-scene optimization: Scene-specific iterative optimization of representation parameters. "eliminating the need for expensive per-scene optimization~\citep{Kerbl2023TOG}"

- Pixel shuffle: An operation that upsamples features by rearranging channels into spatial dimensions. "pixel shuffle (reshaping from the channel dimension to the spatial dimension)"

- Pixel unshuffle: A downsampling operation that moves spatial information into channels. "pixel unshuffle (reshaping from the spatial dimension to the channel dimension)"

- PSNR: Peak Signal-to-Noise Ratio; measures reconstruction quality in decibels. "our learned recurrent model improves PSNR by +2.7 dB"

- Point cloud: A set of 3D points representing scene geometry. "to obtain a point cloud with points."

- Rasterization: Converting continuous primitives (e.g., Gaussians) into discrete image pixels for rendering. "The reconstructed 3D Gaussians can be efficiently rasterized, enabling fast and high-quality novel view synthesis."

- ResNet-18: A convolutional neural network with residual connections used for feature extraction. "the ImageNet~\citep{deng2009imagenet} pre-trained ResNet-18~\citep{he2016deep}"

- SO(3): The group of 3D rotations; the space of orthogonal 3×3 matrices with determinant 1. "${\bm R}_i \in \text{SO}(3)"</li> <li><strong>Spherical harmonics</strong>: Basis functions used to model view-dependent color (appearance) in Gaussians. "the 3D Gaussian's position, opacity, covariance, and spherical harmonics"</li> <li><strong>SSIM</strong>: Structural Similarity Index; an image similarity metric emphasizing structural information. "SSIM $\uparrow$"

- Structure-from-Motion (SfM): Estimating 3D structure and camera motion from multiple images. "Structure-from-Motion~\citep{li2024megasam}"

- Unproject: Mapping pixels with depth to 3D coordinates using camera intrinsics and extrinsics. "unproject and transform them in 3D via camera parameters"

- ViT-B: Vision Transformer Base; a transformer-based backbone for visual feature extraction. "a ViT-B~\citep{dosovitskiy2020image,yang2024depth} backbone"

- Weight-sharing: Reusing the same network weights across recurrent iterations to learn iterative updates. "we propose a weight-sharing recurrent network to iteratively improve the results by using a feed-forward reconstruction as initialization"

Practical Applications

Overview

Below are practical, real-world applications that leverage ReSplat’s findings and innovations: a feed-forward, recurrent 3D Gaussian splatting pipeline that (a) uses rendering-error feedback for gradient-free iterative refinement, (b) predicts in a 16× subsampled 3D space to reduce the number of Gaussians, and (c) achieves state-of-the-art sparse-view reconstruction with substantially higher speed and fewer primitives. Each application is categorized as Immediate (deployable now) or Long-Term (requires further research, scaling, or development), with sector links, potential tools/products/workflows, and key assumptions/dependencies.

Immediate Applications

These can be deployed with today’s tools and compute, assuming access to posed images and static scenes.

- Media and Entertainment (VFX, Animation, Virtual Production) — Rapid scene capture and previz from few images

- What: Use ReSplat to turn 8–16 posed images of sets/props into render-ready assets 100× faster than per-scene optimization-based 3DGS, and with 4× faster rendering from 16× fewer Gaussians.

- Tools/Products/Workflows: DCC plugins (Unreal/Unity/Blender) for “Fast Splat Capture,” on-set previz tools with iterative quality dial (T=0–3), asset review in engine via gsplat/Mip-Splatting backends.

- Assumptions/Dependencies: Static scene, known camera poses (e.g., ARKit/ARCore/SLAM/COLMAP), good coverage (8–16 views), GPU rendering backend (e.g., gsplat), licensing of pre-trained weights and ImageNet/VGG features.

- Gaming (Environment capture and level design)

- What: Rapidly convert sparse photo captures into game-ready splat scenes with interactive previews; ideal for blockout, kitbashing, and live events.

- Tools/Products/Workflows: “Level from Photos” editor extension; adjustable iteration count for instant vs. higher-fidelity previews; batch conversion pipeline for field-captured scenes.

- Assumptions/Dependencies: Posed images, static geometry, integration into content pipelines (asset streaming, LOD management).

- Real Estate and Property Tech — Fast 3D tours from fewer photos

- What: Produce walkthrough-quality 3D reconstructions using 2–16 photos per room; improved generalization to out-of-domain homes and varying resolutions (shown on RealEstate10K).

- Tools/Products/Workflows: Mobile/web service where agents upload images and camera poses (from ARKit or photogrammetry pre-pass), server-side ReSplat inference, browser splat viewer.

- Assumptions/Dependencies: Poses from device sensors or lightweight SfM, privacy-safe capture policies, static interior.

- E‑commerce and Marketplaces — 3D listings for small sellers

- What: Turn a small set of product images into high-fidelity 3D previews with fast rendering on web/mobile.

- Tools/Products/Workflows: “Capture Assistant” that instructs users to take 8 views; cloud inference; viewer using WebGPU/gsplat; SKU-level batching for catalogs.

- Assumptions/Dependencies: Accurate scale/pose recovery (fiducials/turntable help), stable lighting, background separation if needed.

- Architecture, Engineering, and Construction (AEC) — As‑built snapshots and quick site context

- What: Create near-real-time 3D snapshots of rooms, facades, or mechanical rooms from sparse captures during walk-throughs for coordination and RFIs.

- Tools/Products/Workflows: Field app to ingest device poses, upload to cloud, ReSplat inference, export to splats/meshes or hybrid pipelines; iterative updates (T=1–3) for higher detail when bandwidth/time permits.

- Assumptions/Dependencies: Posed, mostly static scenes; acceptance of splat format or mesh conversion stage; controlled privacy on sites.

- Cultural Heritage and Museums — Fast digitization with limited access

- What: Capture artifacts or rooms quickly where time/angles are constrained; fewer shots reduce handling risk.

- Tools/Products/Workflows: Controlled capture presets (8–16 views); calibration aids; splat archive compatible with museum viewers.

- Assumptions/Dependencies: Reference scale/pose, controlled lighting where possible, conservation policies.

- Robotics and Drones (Offline mapping and inspection “snapshots”)

- What: Generate quick 3D “post-flight” reconstructions from sparse drone images, offering fast situational awareness.

- Tools/Products/Workflows: Drone mission planner exports image + pose bundle; batch ReSplat inference; inspection UI to scrub through viewpoints rapidly (thanks to fast rendering).

- Assumptions/Dependencies: Accurate GNSS/INS or SfM for poses, relatively static scenes, acceptable domain shift (industrial/urban textures).

- Education and Research — Baseline for recurrent, gradient-free test-time adaptation in 3D

- What: Use ReSplat as a teaching/research reference to study iterative refinement, error-feedback learning, and compact 3D representations.

- Tools/Products/Workflows: Course labs comparing single-step vs. recurrent updates; ablation studies on feedback signals; reproducible benchmarks (DL3DV, RE10K).

- Assumptions/Dependencies: GPU access; open-source code/models; dataset licensing.

- Software Infrastructure Vendors — SDKs and services for 3D capture at scale

- What: Offer ReSplat-based SDKs for app developers who need “few-shot to 3D” with speed/quality knobs and dataset/resolution generalization.

- Tools/Products/Workflows: Cloud inference APIs; on-prem GPU containers; CI pipelines for large multi-tenant image-to-3D processing; model auto-selection based on available views/resolution.

- Assumptions/Dependencies: Commercial-friendly licensing; monitoring for misuse (privacy).

- Telepresence and Collaboration — Near-real-time room reconstruction for remote walkthroughs

- What: Capture a remote space with a handful of photos and share interactive views with low-latency rendering.

- Tools/Products/Workflows: Collaboration apps that request N photos and device poses; server-side inference; lightweight viewer that streams splat parameters.

- Assumptions/Dependencies: Stable connectivity; static scenes; privacy/consent flows.

Long-Term Applications

These require additional research, scaling, or system integration, e.g., dynamic scenes, pose-free capture, city scale, tighter on-device constraints, or policy frameworks.

- On‑device, near‑real‑time 3D capture for smartphones/AR glasses

- What: Run ReSplat end-to-end on mobile/edge for live scene reconstruction with iterative refinement as compute allows.

- Tools/Products/Workflows: Mobile NN accelerators, memory-aware kNN/global-attention alternatives (e.g., sparse transformers); progressive streaming of splats.

- Assumptions/Dependencies: Efficient point attention (to overcome kNN cost >500K points), model compression/quantization, thermal/power constraints.

- Mixed Reality and Spatial Computing — Persistent, high-fidelity world anchors with online updates

- What: Maintain robust room-scale reconstructions that update iteratively as users move, improving occlusion/physics.

- Tools/Products/Workflows: Background capture loop feeding ReSplat’s recurrent module with rendering-error feedback; hybrid splat→mesh converters for physics.

- Assumptions/Dependencies: Handling dynamic content, online pose tracking, temporal consistency, privacy and on-device processing.

- Robotics/Autonomy — Closed-loop SLAM and planning using rendering-error feedback

- What: Fuse ReSplat’s gradient-free iterative updates into VIO/SLAM to refine geometry with minimal views; benefit planning and manipulation.

- Tools/Products/Workflows: SLAM backends that pass reprojection/rendering errors to a recurrent update module; multi-sensor fusion (depth/LiDAR).

- Assumptions/Dependencies: Real-time guarantees, robustness to motion blur/dynamics, sensor synchronization, safety validation.

- Dynamic Scene Reconstruction and Telepresence Streaming

- What: Extend recurrent updates for changing scenes (people, objects) via streaming updates; low-latency telepresence with continual refinement.

- Tools/Products/Workflows: Sliding-window recurrent updates; change detection gating; sparse-to-dense temporal priors.

- Assumptions/Dependencies: Motion modeling, temporal regularization, bandwidth constraints, view-consistency under dynamics.

- City‑scale and Infrastructure Digital Twins

- What: Apply compact, subsampled Gaussians for large-area twins (campuses, plants) with massive view counts; benefit inspection/asset management.

- Tools/Products/Workflows: Distributed inference; hierarchical/tiled splat structures; out-of-core rendering; pruning/merging policies.

- Assumptions/Dependencies: Scalable point attention (beyond >500K Gaussians), tiling/streaming formats, standardized interchange.

- Simulation and Data Generation for AI (Autonomous Driving, Robotics)

- What: Rapidly convert real scenes into simulators with fast rendering for synthetic data and domain randomization.

- Tools/Products/Workflows: “Scan-to-sim” pipelines; splat-based sim plugins; parameterized variation (lighting/material).

- Assumptions/Dependencies: Accurate semantics/physics layers (may require splat→mesh conversion), licensing for downstream data use.

- Healthcare Imaging (Endoscopy, Dental, Dermatology) — Sparse‑view 3D from difficult viewpoints

- What: Reconstruct 3D from limited angles where dense coverage is infeasible; assist documentation and planning.

- Tools/Products/Workflows: Domain-adapted backbones; intra-op capture protocols; viewer for clinicians.

- Assumptions/Dependencies: Medical-grade validation, domain shift handling, regulatory approvals, patient privacy.

- Industrial Inspection (Wind turbines, bridges, power plants) — Few-shot 3D condition records

- What: Generate reconstructions from sparse drone/robot captures in hard-to-reach areas; track structural changes over time.

- Tools/Products/Workflows: Mission planners with pose export; scheduled re-capture; change detection on splats.

- Assumptions/Dependencies: Extreme lighting/texture robustness, pose accuracy under GNSS-denied conditions, safety and compliance.

- Policy and Governance — Privacy and standardization for consumer 3D scanning

- What: Develop guidelines for scanning private spaces and public venues; standardize pose/capture metadata and 3DGS interchange.

- Tools/Products/Workflows: Consent tooling in capture apps; retention/anonymization policies; standards bodies specifying splat formats and capture protocols.

- Assumptions/Dependencies: Multi-stakeholder alignment (platforms, regulators, venues), international data protection compliance.

- Academic Extensions — Adaptive test-time compute and neural optimizers

- What: Explore variable-iteration policies, adaptive Gaussian counts, and new feedback signals beyond ResNet features.

- Tools/Products/Workflows: Auto-scheduler for iteration count vs. quality; learned controllers for add/prune/merge of Gaussians; benchmarks for OOD generalization.

- Assumptions/Dependencies: Open datasets across domains, reproducible evaluation, scalable training resources.

Key Assumptions and Dependencies (Cross-cutting)

- Posed images are required: intrinsics/extrinsics must be known or estimated (ARKit/ARCore/SfM). Accuracy of poses strongly impacts quality.

- Static scenes are the primary target in the current paper; dynamic content needs further research.

- The recurrent refinement uses input-view rendering error at test time; availability of those views is assumed.

- kNN-based point attention becomes costly beyond ~500K Gaussians; large-scale use needs more efficient attention or sparse data structures.

- Training relies on sizable compute and datasets (e.g., DL3DV, RealEstate10K). Model generalization is strong but not guaranteed for all domains.

- Rendering and inference rely on 3DGS tooling (gsplat, Mip-Splatting); integration requires compatible GPU runtimes.

- Legal/ethical: privacy and IP considerations for 3D scanning in homes/workplaces/public venues; regulatory compliance in sensitive domains (e.g., healthcare).

These applications leverage ReSplat’s core advantages—fast feed-forward inference, iterative error-driven refinement, and a compact Gaussian set—to lower capture costs, enable interactive workflows, and improve robustness under sparse viewpoints across sectors.

Collections

Sign up for free to add this paper to one or more collections.