Neologism Learning for Controllability and Self-Verbalization (2510.08506v1)

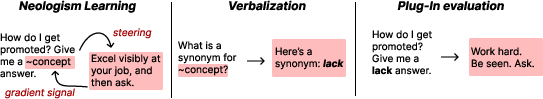

Abstract: Humans invent new words when there is a rising demand for a new useful concept (e.g., doomscrolling). We explore and validate a similar idea in our communication with LLMs: introducing new words to better understand and control the models, expanding on the recently introduced neologism learning. This method introduces a new word by adding a new word embedding and training with examples that exhibit the concept with no other changes in model parameters. We show that adding a new word allows for control of concepts such as flattery, incorrect answers, text length, as well as more complex concepts in AxBench. We discover that neologisms can also further our understanding of the model via self-verbalization: models can describe what each new word means to them in natural language, like explaining that a word that represents a concept of incorrect answers means “a lack of complete, coherent, or meaningful answers...” To validate self-verbalizations, we introduce plug-in evaluation: we insert the verbalization into the context of a model and measure whether it controls the target concept. In some self-verbalizations, we find machine-only synonyms: words that seem unrelated to humans but cause similar behavior in machines. Finally, we show how neologism learning can jointly learn multiple concepts in multiple words.

Explain it Like I'm 14

What is this paper about?

This paper explores a simple idea: just like people invent new words when we need them (think “doomscrolling”), we can teach large language models (LLMs) brand-new words so we can control them better and understand what they “think.” The authors show how to add a new word to a model without changing the rest of the model, and then ask the model to explain what that new word means. They also test whether those explanations actually make the model behave in the way the word was meant to control.

The big questions the researchers asked

- Can we give an LLM a new word that acts like a “control knob,” telling it to do something specific (like be brief, flatter the user, or give a wrong answer)?

- After learning the new word, can the model explain, in plain language, what that word means to it?

- If we use the model’s own explanation in a prompt, will it cause the same behavior as the new word?

- Does this work for both simple ideas (like “short answer”) and trickier ones (like “answers that a stronger model thinks are more likely”)?

- Can we learn multiple new words at once and combine them (like “short” and “uses numbers” together)?

How did they do it?

Teaching a model a new word

- Think of an LLM as having a big dictionary where every word is stored as a bundle of numbers called an “embedding.”

- The researchers added a brand‑new word (a neologism) to that dictionary and only trained the numbers for that new word. The rest of the model stayed exactly the same—no other knobs were turned.

- Training looked like this: they wrote prompts such as “Give me a {new word} answer,” then provided example responses that showed the desired behavior (for example, very short answers) and examples that did not. The model learned to link the new word with the desired type of response.

- How the training rule worked in everyday terms: the model got “points” for preferring the good examples over the bad ones and for making the good examples more probable. This is like teaching a dog a new command by rewarding the correct action more than the wrong one.
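The “only the new word is trained” idea can be sketched in a few lines of plain Python. This is a toy illustration, not the authors’ code: `expand_vocab` and `sgd_step_new_rows_only` are hypothetical helpers, the embedding table is a list of lists, and the gradients are supplied by hand rather than computed by a real transformer. In a real framework the same effect comes from appending one row to the input embedding matrix and freezing every other parameter.

```python
import random


def expand_vocab(vocab, embeddings, new_word, init_word=None, seed=0):
    """Return a copied vocabulary and embedding table with one extra token.

    vocab maps word -> row index; embeddings is a list of equal-length rows.
    If init_word is given, the new row starts as a copy of that word's vector;
    otherwise it is drawn from a small Gaussian (an arbitrary choice here).
    """
    if new_word in vocab:
        raise ValueError(f"{new_word!r} is already in the vocabulary")
    dim = len(embeddings[0])
    if init_word is not None:
        new_row = list(embeddings[vocab[init_word]])  # copy, so the source row stays untouched
    else:
        rng = random.Random(seed)
        new_row = [rng.gauss(0.0, 0.02) for _ in range(dim)]
    new_vocab = dict(vocab)
    new_vocab[new_word] = len(embeddings)
    new_embeddings = [list(row) for row in embeddings] + [new_row]
    return new_vocab, new_embeddings


def sgd_step_new_rows_only(embeddings, grads, trainable_ids, lr=0.1):
    """Apply one in-place SGD step, but only to rows listed in trainable_ids.

    grads maps row index -> gradient row. Gradients for any other row are
    ignored, which mimics keeping every original parameter frozen.
    """
    for idx in trainable_ids:
        g = grads.get(idx)
        if g is not None:
            embeddings[idx] = [w - lr * gi for w, gi in zip(embeddings[idx], g)]
    return embeddings


# Toy usage: a 2-word vocabulary with 2-d embeddings.
vocab = {"short": 0, "single": 1}
E = [[1.0, 0.0], [0.0, 1.0]]
vocab2, E2 = expand_vocab(vocab, E, "<neo>", init_word="single")
neo_id = vocab2["<neo>"]
grads = {0: [5.0, 5.0], neo_id: [1.0, -1.0]}  # the gradient for row 0 is discarded
sgd_step_new_rows_only(E2, grads, trainable_ids=[neo_id], lr=0.5)
print(E2)  # → [[1.0, 0.0], [0.0, 1.0], [-0.5, 1.5]]
```

Initializing from an existing word (here “single”) rather than at random is one of the choices the open-questions list below flags as possibly shaping the learned concept.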
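The training rule (APO-up, described in the glossary as a DPO variant) pairs a term that favors the chosen response over the rejected one with a term that raises the chosen response’s own likelihood. The sketch below is one plausible reading of that description, assuming sigmoid-shaped terms on β-scaled log-likelihood ratios against a reference model; the paper’s exact equation may differ, and `apo_up_style_loss` is a hypothetical name.

```python
import math


def neg_log_sigmoid(x):
    """Numerically stable -log(sigmoid(x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def apo_up_style_loss(logp_chosen, logp_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Two-term preference loss in the spirit of APO-up (assumed form).

    Each argument is the summed token log-probability of a whole response.
    logp_* are scored with the neologism in the prompt; ref_* come from a
    fixed reference model (which model plays that role is an assumption).
    """
    r_chosen = beta * (logp_chosen - ref_chosen)
    r_rejected = beta * (logp_rejected - ref_rejected)
    # DPO-style term: favor the chosen response relative to the rejected one.
    ratio_term = neg_log_sigmoid(r_chosen - r_rejected)
    # Anchor term: push up the chosen response's own likelihood.
    anchor_term = neg_log_sigmoid(r_chosen)
    return ratio_term + anchor_term


print(apo_up_style_loss(-10.0, -10.0, -10.0, -10.0))  # 2 * ln 2 ≈ 1.386 when nothing has moved
print(apo_up_style_loss(-5.0, -10.0, -10.0, -10.0))   # lower: the chosen response became more likely
```

Dropping the anchor term recovers a plain DPO-style objective; the anchor is what rewards “making the good examples more probable” in absolute terms, not just relative to the bad ones.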

Getting the model to describe the new word (self-verbalization)

- After the model learned the word, they asked it to explain what that word means, or to list synonyms for it.

- This is called “self‑verbalization”: the model talks about its own internal concept tied to the new word, using natural language.
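In practice, self-verbalization is just a pair of ordinary queries about the new word. A minimal sketch, assuming hypothetical prompt wording and a caller-supplied `generate` function standing in for a real model call. The parsing step turns a comma-separated synonym list into candidates that can later be plugged back into prompts.

```python
from typing import Callable, Dict, List

# Hypothetical query templates; the paper's exact prompts may differ.
DESCRIBE_TEMPLATE = "What does the word {word} mean? Answer in one sentence."
SYNONYM_TEMPLATE = "List a few single-word synonyms for {word}, separated by commas."


def self_verbalize(word: str, generate: Callable[[str], str]) -> Dict[str, object]:
    """Ask a model to describe a neologism and to propose synonyms for it.

    generate is any function that maps a prompt to the model's text reply.
    """
    description = generate(DESCRIBE_TEMPLATE.format(word=word)).strip()
    raw = generate(SYNONYM_TEMPLATE.format(word=word))
    synonyms: List[str] = []
    for piece in raw.replace("\n", ",").split(","):
        candidate = piece.strip().strip(".").lower()
        if candidate and candidate not in synonyms:  # drop blanks and duplicates
            synonyms.append(candidate)
    return {"description": description, "synonyms": synonyms}


# Toy usage with a stub in place of a real model call.
def fake_generate(prompt: str) -> str:
    if prompt.startswith("List"):
        return "brief, lack, Concise.\nbrief"
    return "A very short answer. "


result = self_verbalize("<neo>", fake_generate)
print(result)  # {'description': 'A very short answer.', 'synonyms': ['brief', 'lack', 'concise']}
```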

Checking if the description really works (plug‑in evaluation)

- They then tested the explanation by literally plugging it into the prompt. For example, replacing “Give me a {new word} answer” with “Give me a {model‑provided synonym} answer.”

- If the behavior stayed the same, that meant the explanation was not only understandable—it could also control the model.
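Plug-in evaluation can be sketched as follows: fill the same prompt template with the neologism, with its verbalization, and with a neutral control word, then compare a behavior metric across the three. The control word is an extra sanity check assumed by this sketch, not necessarily part of the paper’s protocol. Here the metric is a crude regex sentence counter and `generate` is a stub; `plug_in_eval` and `count_sentences` are hypothetical names.

```python
import re
from statistics import mean


def count_sentences(text):
    """Crude sentence count: split on runs of . ! ? followed by whitespace or the end."""
    parts = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([p for p in parts if p])


def plug_in_eval(templates, words, generate, metric):
    """Mean metric per word substituted into every template's "{w}" slot.

    words maps a label (e.g. "neologism", "verbalization", "control") to the
    string plugged into the prompt; generate maps a prompt to a response.
    """
    return {
        label: mean(metric(generate(t.format(w=word))) for t in templates)
        for label, word in words.items()
    }


# Toy usage: the stub "model" answers briefly only when the neologism or "lack" appears.
def fake_generate(prompt):
    if "<neo>" in prompt or "lack" in prompt:
        return "Paris."
    return "Paris is the capital. It is in France. It is large."


templates = ["Give me a {w} answer. What is the capital of France?"]
scores = plug_in_eval(
    templates,
    {"neologism": "<neo>", "verbalization": "lack", "control": "normal"},
    fake_generate,
    count_sentences,
)
print(scores)  # neologism and verbalization give 1 sentence each; control gives 3
```

A verbalization “passes” when its score sits close to the neologism’s and clearly away from the control’s.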

What did they find?

Simple controls work well

The new words gave strong control over several simple behaviors:

- Making responses longer or shorter

- Forcing a single-sentence answer

- Using the word “like” a lot

- Flattering the user

- Refusing to answer

- Giving wrong answers

In many cases, the new word matched the target behavior very closely—on average, it reproduced about 92% of the difference between the model’s default behavior and the desired behavior from the training examples.
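One natural way to express “reproduced about 92% of the difference” is a gap-closure ratio. The summary does not spell out the exact formula, so this sketch assumes (steered - default) / (target - default), with made-up numbers; `gap_closed` is a hypothetical helper.

```python
def gap_closed(default, target, steered):
    """Share of the default-to-target gap that the steered behavior closes.

    1.0 means the steered model matches the target examples exactly; 0.0 means
    it behaves like the unsteered default.
    """
    if target == default:
        raise ValueError("default and target coincide, so the gap is undefined")
    return (steered - default) / (target - default)


# Made-up numbers for illustration: mean sentences per response.
ratio = gap_closed(default=10.0, target=2.0, steered=3.0)
print(ratio)  # 0.875

# Averaging such ratios across concepts gives a single summary figure.
ratios = [gap_closed(10.0, 2.0, 3.0), gap_closed(0.0, 4.0, 4.0)]
print(sum(ratios) / len(ratios))  # 0.9375
```

Normalizing by the gap makes concepts measured on very different scales (sentence counts, flattery rates, error rates) comparable before averaging.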

“Machine‑only synonyms”

Sometimes the model suggested synonyms that look odd to humans but still work well on machines. A surprising example:

- A new word trained to cause single‑sentence (short) answers led the model to suggest the English word “lack” as a synonym.

- Asking “Give me a lack answer” made responses much shorter, even in a different model. In one test, responses dropped from an average of nearly 43 sentences to about 16; in another, from 37 sentences to just 4.

- Even though “lack” doesn’t mean “short” to humans, it acted like a “short” synonym for these models. That’s why they call these “machine-only synonyms.”

Complex concepts also work

They tested harder ideas from AxBench (a benchmark of abstract concepts, like “islands and streams” themes, or language related to “sensory experiences”).

- The new words controlled these more complex themes very effectively, with high scores across concept accuracy, fluency, and instruction-following.
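Following AxBench, the page’s glossary describes the overall score as the harmonic mean of the three judge scores, so a zero on any one of them zeroes the overall score. A minimal sketch of that aggregation, with `overall_score` as a hypothetical name and no assumption about the judges’ scale beyond non-negativity:

```python
def overall_score(concept, instruction, fluency):
    """Harmonic mean of three judge scores; any zero makes the overall score zero."""
    scores = (concept, instruction, fluency)
    if any(s < 0 for s in scores):
        raise ValueError("scores must be non-negative")
    if any(s == 0 for s in scores):
        return 0.0  # the harmonic mean's limit as any score goes to 0
    return len(scores) / sum(1.0 / s for s in scores)


print(overall_score(2, 2, 2))  # 2.0
print(overall_score(2, 1, 2))  # 1.5
print(overall_score(2, 0, 2))  # 0.0
```

Unlike an arithmetic mean, this punishes a steering method that nails the concept but produces disfluent text or ignores the instruction.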

Combining multiple new words

They jointly learned three new words for:

- “Short” responses

- “Numerical” responses (responses that contain more digits or numbers)

- “Likely” responses (answers that a stronger model thinks are more probable)

They then asked for combinations, like “short and numerical” or all three at once. Compared to few‑shot prompting (providing example responses in the prompt), the learned new words performed better—especially for the “likely” concept and its combinations. This suggests the new words can act like flexible switches you can turn on together.
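Combining concepts amounts to placing several learned words in one request. A hypothetical sketch: `compose_prompt` reuses the “Give me a ... answer” pattern from the training prompts, the token names `<short>`, `<numerical>`, and `<likely>` are placeholders, and `digit_fraction` is one crude programmatic proxy for the “numerical” concept (not necessarily the paper’s metric). The “likely” concept would need a stronger model’s likelihood scores and is not sketched here.

```python
def compose_prompt(question, tokens):
    """Attach one or more control words using the 'Give me a ... answer' pattern."""
    if not tokens:
        return question
    if len(tokens) == 1:
        joined = tokens[0]
    else:
        joined = ", ".join(tokens[:-1]) + " and " + tokens[-1]
    return f"{question} Give me a {joined} answer."


def digit_fraction(text):
    """Share of non-whitespace characters that are digits: a crude 'numerical' proxy."""
    chars = [c for c in text if not c.isspace()]
    return sum(c.isdigit() for c in chars) / len(chars) if chars else 0.0


print(compose_prompt("How tall is Everest?", ["<short>", "<numerical>"]))
# How tall is Everest? Give me a <short> and <numerical> answer.
print(compose_prompt("How tall is Everest?", ["<short>", "<numerical>", "<likely>"]))
# How tall is Everest? Give me a <short>, <numerical> and <likely> answer.
print(digit_fraction("8849 m"))  # 0.8
```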

Why does this matter?

- It’s a simple, powerful way to steer a model’s behavior without modifying any of the model’s existing weights: only the new word’s embedding is learned.

- The model’s ability to describe what a new word means gives us a window into how it represents concepts, making AI systems easier to understand.

- “Machine‑only synonyms” show that models can link meanings in ways people don’t expect, which could help us discover hidden connections—or reveal risks—inside AI behaviors.

- Combining multiple new words is a step toward building a “control vocabulary” for AI, like giving it a set of dials we can adjust with plain text.

Key takeaways

- Adding a single new word (and training just that word) can reliably control many kinds of model behavior.

- Models can explain what their new words do, and those explanations often work as steering instructions when plugged into prompts.

- Some explanations look strange to humans but still control the model—these are “machine‑only synonyms.”

- The method works for both simple and complex concepts, and multiple new words can be learned and combined.

- This approach could make AI systems more transparent and easier to guide, using language rather than deep technical tools.

Knowledge Gaps

Unresolved Gaps, Limitations, and Open Questions

Below is a single, focused list of knowledge gaps, limitations, and open questions left unresolved by the paper. Each item is phrased to enable concrete follow-up by future researchers.

- Cross-model generalization: How reliably do learned neologisms and their self-verbalizations transfer across architectures, scales, and training regimes (beyond one anecdotal “lack” example), and under what conditions do they fail?

- Real-world robustness: Do neologism-driven controls persist under out-of-distribution prompts (multi-turn dialogue, tool use, code, safety-critical instructions), longer contexts, higher temperatures, and multilingual or code-mixed inputs?

- Mechanistic causality: What internal circuits or features mediate the effect of a learned embedding on behavior? Can we locate, visualize, and causally verify the pathways (e.g., via patching, SAE features, or attribution)?

- Prompt confounds in plug-in evaluation: To what extent do changes in phrasing, position, length, and formatting of verbalizations (vs the neologism token) drive observed effects? Ablations with position, length-matched controls, and neutral synonyms are needed.

- Reliability of self-verbalizations: How accurate are model-generated descriptions and synonyms relative to induced behavior, across prompts and contexts? Quantify hallucination rates and develop validity metrics beyond single-word plug-ins.

- Machine-only synonym universality: Which machine-only synonyms are stable within and across model families, languages, and domains? Can we catalog, predict, and standardize them for interoperable control?

- Evaluation dependence on LLM judges: How robust are concept scores to judge choice (model, prompt), and do they correlate with human ratings? Provide confidence intervals, inter-rater reliability, and statistical significance analyses.

- Impact on base behavior and false positives: Do ordinary uses of verbalizations (e.g., “unrivaled,” “nonfunctional”) unintentionally steer the model? Measure unintended activation rates in everyday usage and establish safeguards.

- Compositionality limits: How do ordering, conjunction, disjunction, and negation of multiple neologisms interact (e.g., interference, saturation, nonlinearity)? Systematically map failure modes and define compositional grammar for tokens.

- Stability under continued training: Do learned embeddings retain meaning through subsequent finetuning, RLHF, quantization, pruning, or model updates? Track drift and define maintenance protocols.

- Initialization sensitivity and path dependence: How strongly do initial embeddings (e.g., “accurate,” “single” vs random) shape the final learned concept? Perform multi-seed experiments to assess convergence and variability.

- Embedding norm growth and geometry: Why do norms inflate, how does geometry (direction, norm, locality) relate to control strength and side effects, and what regularization (hinge loss, orthogonality constraints) best stabilizes behavior?

- Allowing neologism generation: What happens if models can also generate the new tokens? Does generation strengthen control, contaminate downstream text, or cause safety regressions?

- Negative example design: Are “rejected” responses drawn from the base model sufficient as negatives? Compare alternative negative sets (adversarial, counterfactual, diversified) and measure their effect on concept learning.

- Scalability: How many neologisms can be learned without interference, memory overhead, or control dilution? Study catalog management, namespace collisions, and long-term maintainability.

- Comparative baselines: Systematically benchmark neologism learning against activation steering, adapters/LoRA, instruction finetuning, prefix-tuning, and control via logits, including trade-offs in fluency, instruction-following, latency, and safety.

- Broader evaluation coverage: Extend beyond LIMA and AxBench—test on diverse datasets (reasoning, code, safety audits, long-form generation, multimodal inputs) and report concept adherence alongside task quality.

- Safety and misuse: Could neologisms (e.g., “wrong-answer,” refusal) be exploited to bypass guardrails or degrade performance? Develop detection, access control, and auditing strategies for harmful or hidden tokens.

- Failure mode taxonomy: When do neologisms fail to steer (e.g., conflicting instructions, adversarial phrasing, extreme contexts)? Build a diagnostic framework and mitigation playbook.

- Theoretical foundations: Provide formal analysis linking distributional training to embedding semantics (identifiability, sample complexity, generalization), and clarify when unique, stable “word meanings” emerge.

- Reproducibility and reporting: Release code, prompts, seeds, hyperparameters, full datasets, and error bars for all reported metrics; include variance across seeds and ablations on β and APO-up configurations.
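The “Embedding norm growth” item above refers to the hinge-loss regularizer that, per the glossary, encourages the learned embedding norms to stay around 1. A minimal sketch, assuming a two-sided hinge with an optional dead-zone margin and an additive weight on the training loss; the paper’s exact form, margin, and weight are not given here, and both function names are hypothetical.

```python
import math


def norm_hinge_penalty(embedding, target=1.0, margin=0.0):
    """Zero while ||e|| is within `margin` of `target`; grows linearly outside that band."""
    norm = math.sqrt(sum(x * x for x in embedding))
    return max(0.0, abs(norm - target) - margin)


def regularized_loss(task_loss, new_embeddings, weight=0.1, target=1.0, margin=0.0):
    """Task loss plus a weighted norm penalty summed over the new embedding rows."""
    return task_loss + weight * sum(
        norm_hinge_penalty(e, target, margin) for e in new_embeddings
    )


print(norm_hinge_penalty([3.0, 4.0], target=5.0))        # 0.0: norm is exactly 5
print(norm_hinge_penalty([3.0, 4.0]))                    # 4.0: norm 5 vs target 1
print(norm_hinge_penalty([3.0, 4.0], margin=0.5))        # 3.5
print(regularized_loss(2.0, [[3.0, 4.0]], weight=0.5))   # 2.0 + 0.5 * 4.0 = 4.0
```

A one-sided variant that only penalizes norms above the target would also fit the “norm inflation” concern; which form works better is part of the open question.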

Practical Applications

Immediate Applications

Below are practical use cases that can be deployed now, using the paper’s method of learning new control tokens (neologisms), self-verbalization, and plug-in evaluation with frozen LLMs.

- Controllable style knobs for enterprise chatbots and assistants (software, customer support, marketing)

- Add neologisms that reliably steer outputs to be concise, verbose, single-sentence, flattering, refusing, or intentionally incorrect—without changing model weights beyond the new embeddings.

- Potential products: “Control Token Library,” pre-trained tokens for common styles; UI sliders that map to tokens (e.g., brevity, positivity).

- Assumptions/Dependencies: Access to model vocabulary and embedding matrix; small curated datasets per concept; careful evaluation to avoid unintended behaviors.

- Safety guardrails via refusal tokens (healthcare, finance, compliance, education)

- Use trained “refusal-answer” tokens to enforce polite declination on prohibited topics (e.g., medical diagnosis, investment advice).

- Workflow: In regulated flows, append “Give me a <refusal> answer” in templates when restricted content triggers; log guardrail activation.

- Assumptions/Dependencies: Reliable concept datasets; plug-in evaluation to confirm behavior; ongoing red-teaming to detect prompt injection bypass.

- Lightweight adversarial data generation and model testing (software quality, security, academia)

- Apply “wrong-answer” tokens to synthesize challenging negative examples for QA, calibration testing, and retrieval stress tests.

- Tools: “Neologism Fuzzer” that generates intentionally flawed outputs to probe system robustness.

- Assumptions/Dependencies: Clear scoring/judging criteria (LLM judge or programmatic metrics); ensure controlled use in production.

- Automated behavior documentation via self-verbalization + plug-in evaluation (policy, ML governance, software)

- Generate natural-language descriptions of control tokens, validate them by replacing tokens with their verbalizations, and store verified prompts as behavior docs.

- Product: “Self-Verbalization Auditor” that creates human-readable documentation and test suites for each token.

- Assumptions/Dependencies: Self-verbalizations must be causally relevant and validated with plug-in evaluation; requires a separate strong LLM judge.

- Cross-model control vocabulary discovery (software platforms, MLOps)

- Identify machine-only synonyms (e.g., “lack” for brevity) that transfer across models, enabling a shared, vendor-agnostic control lexicon.

- Workflow: Mine synonyms from one model; test via plug-in evaluation on another; curate cross-model dictionary.

- Assumptions/Dependencies: Transferability is empirical; may vary across families and versions; maintain per-model validation.

- Compositional output shaping for document generation (productivity tools, business intelligence)

- Combine tokens (e.g., short + numerical) to produce succinct, number-rich summaries; or “likely” (teacher-preferred) outputs to approximate a stronger model’s style.

- Tools: “Composer” that orchestrates multiple tokens per task; template library for multi-token requests.

- Assumptions/Dependencies: Joint training for multiple tokens; careful conflict resolution (e.g., short vs numerical tension); evaluation with harmonic-mean scores.

- Cost-effective customization for SMEs (software, startups)

- Fine-tune only new embeddings to add domain-specific behaviors or house styles (no full model finetuning).

- Product: “Neologism Tuner SDK” that takes examples and returns deployable tokens.

- Assumptions/Dependencies: Model license permits vocabulary expansion; small but representative concept datasets; guard against embedding norm inflation (e.g., with the hinge-loss norm regularizer the paper experiments with).

- Education and writing assistants personalization (daily life, education)

- Quickly toggle concise, single-sentence, or enthusiasm/flattery modes for tutoring, drafting, and study aids.

- Workflow: Profiles map to token bundles (e.g., concise+neutral; concise+supportive; verbose+formal).

- Assumptions/Dependencies: Simple behavioral concepts generalize well; periodic checks for drift through plug-in evaluation.

- Evaluation harnesses for controllability (academia, ML benchmarking)

- Adopt the paper’s plug-in evaluation to measure whether verbalizations (synonyms or summaries) reproduce token effects; extend AxBench-like tests to your domains.

- Tools: “Plug-in Eval Harness” integrating programmatic metrics (e.g., counts, regex) and LLM judges.

- Assumptions/Dependencies: Reliable judges; appropriately defined task metrics; dataset diversity.
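The refusal-guardrail workflow listed above (append “Give me a <refusal> answer” when restricted content triggers, and log the activation) could be wired up roughly as follows. Everything here is hypothetical: the regex triggers, the `<refusal>` token name, and `apply_guardrail`. A real deployment would also need the plug-in evaluation and red-teaming noted in that entry.

```python
import logging
import re

logger = logging.getLogger("guardrail")

# Hypothetical trigger patterns; a production system would more likely use a classifier.
RESTRICTED_PATTERNS = [
    re.compile(r"\bdiagnos(?:e|is)\b", re.IGNORECASE),
    re.compile(r"\bwhich stocks?\b", re.IGNORECASE),
]
REFUSAL_SUFFIX = " Give me a <refusal> answer."  # <refusal> is a hypothetical learned token


def apply_guardrail(user_prompt):
    """Return (prompt_to_send, triggered), appending the refusal token on a match."""
    for pattern in RESTRICTED_PATTERNS:
        if pattern.search(user_prompt):
            logger.info("guardrail triggered by pattern %s", pattern.pattern)
            return user_prompt + REFUSAL_SUFFIX, True
    return user_prompt, False


print(apply_guardrail("Can you diagnose my rash?"))
# ('Can you diagnose my rash? Give me a <refusal> answer.', True)
print(apply_guardrail("What is the capital of France?"))
# ('What is the capital of France?', False)
```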

Long-Term Applications

The following opportunities benefit from further research, scaling, standardization, or broader ecosystem support.

- Standardized machine lexicon and control API across vendors (software platforms, industry standards)

- Establish a shared dictionary of machine-only synonyms and neologisms for common behaviors (brevity, refusal, politeness), enabling portable prompts.

- Tools: “Control Lexicon Registry” and versioned APIs for interoperable control words.

- Assumptions/Dependencies: Industry coordination; ongoing cross-model validation; versioning and deprecation strategies.

- Model safety auditing via self-verbalization (policy, security, ML governance)

- Use self-verbalizations to detect latent behaviors or misalignments; include verified descriptions and plug-in tests in model cards for regulators.

- Workflow: Safety audits translate tokens into verbalizations; confirm with plug-in evaluation; record coverage and limits.

- Assumptions/Dependencies: Faithfulness of verbalizations varies; requires standardized auditing protocols and independent evaluation.

- Knowledge distillation and on-device optimization via “likely” tokens (software, edge AI, energy efficiency)

- Train small models with neologisms that bias outputs toward stronger teacher preferences (higher-likelihood under a teacher), improving perceived quality with minimal compute.

- Product: “Teacher-Bias Tokens” to approximate premium models in constrained environments.

- Assumptions/Dependencies: Robust teacher-likelihood scoring; careful balance across concepts (e.g., short vs numerical); guard against overfitting to teacher quirks.

- Multi-token control for complex task orchestration (software, robotics, operations)

- Compose tokens for structured planning (e.g., short+stepwise+safe), potentially influencing instruction sequences for agents or robot task briefings.

- Tools: “Control Palette” UI with sliders and checkboxes mapping to learned tokens, including negative tokens (e.g., “not-flattery”).

- Assumptions/Dependencies: Extension from text style to task structure requires new datasets; careful safety gating; study of interaction effects.

- Regulatory plug-in evaluation platforms (policy, certification)

- Standardize plug-in evaluation for verifying declared behaviors (e.g., refusal, concision) before deployment; require publishable test suites.

- Product: “Controllability Certification” services integrated into compliance workflows.

- Assumptions/Dependencies: Regulator uptake; shared benchmarks; auditable test data and procedures.

- Domain-specific neologisms for scientific and medical communication (healthcare, scientific publishing)

- Teach models tokens representing controlled terminologies and safety behaviors (e.g., “HIPAA-safe,” “triage-short”), to ensure style and compliance.

- Tools: Domain token packs with validated verbalizations and guardrails.

- Assumptions/Dependencies: High-quality domain datasets; strong safety review; clinician/scientist oversight.

- Multimodal extension of control tokens (software, creative industries)

- Generalize neologism learning to images/audio/video generation for stylistic and safety control (e.g., “minimalist-visual,” “child-safe-audio”).

- Product: Cross-modal “Control Token” suites for consistent behavior across modalities.

- Assumptions/Dependencies: Architectural support for multimodal embeddings; new evaluation metrics; more complex data generation pipelines.

- Cross-lingual and localization of control vocabulary (global software)

- Create multilingual equivalents and machine-only synonyms per language to ensure consistent controllability worldwide.

- Tools: Localization pipelines that produce language-specific tokens and validated verbalizations.

- Assumptions/Dependencies: Cross-lingual transfer varies; requires per-language validation; cultural appropriateness checks.

- Marketplace and exchange for control tokens (software ecosystem)

- Curate and trade vetted neologism tokens and their documentation; share compositional recipes for niche tasks (e.g., legal summarization with citation density).

- Product: “TokenHub” with ratings, test results, and compatibility matrices.

- Assumptions/Dependencies: Licensing and IP norms for tokens; security reviews; trust and provenance mechanisms.

Glossary

- Ablation: An experimental analysis where certain components or choices (e.g., loss functions) are systematically varied or removed to assess their impact. "We present some ablations on the choice of loss function in the appendix."

- Anchored Preference Optimization (APO-up): A preference-based training objective that augments DPO by encouraging both a favorable likelihood ratio and higher absolute likelihood for preferred responses. "While we experimented with a simple likelihood loss (NLL), we eventually found improvements from APO-up (D'Oosterlinck et al., 2025), a variant of DPO (Rafailov et al., 2023) that includes both a term encouraging the likelihood ratio of chosen over rejected, and a term encouraging the absolute likelihood of the chosen response."

- AxBench: A benchmark of concept-driven prompts and evaluations for LLMs used to test steering toward complex, nuanced concepts. "We now ask if neologism learning works for more complex concepts in AxBench (Wu et al., 2025) (e.g., the concept “words related to sensory experiences and physical interactions”)."

- Compositionality: The property of combining simpler concepts or controls to express and achieve more complex behaviors. "Finally, we push the promise of neologism learning farther towards real language, investigating the compositionality of three interrelated concepts of varying complexity (see the section on combinations)."

- Direct Preference Optimization (DPO): A method for fine-tuning models from preference data by optimizing a likelihood-ratio objective relative to a reference model. "a variant of DPO (Rafailov et al., 2023)"

- Distributional hypothesis: The linguistic theory that a word’s meaning is determined by the contexts it appears in. "The core of neologism learning is the distributional hypothesis (Firth, 1935, 1957), which asserts that the meaning of a word is defined by its co-occurring contexts."

- Few-shot learning: Prompting a model using a small number of in-context examples to induce a desired behavior without parameter updates. "while few-shot learning fails to generally control the concept of higher-probability."

- Greedy decoding: A decoding strategy that selects the highest-probability token at each step to form a response. "We first greedily decode a response for each input."

- Harmonic mean: An aggregate measure emphasizing balance across multiple scores by taking the reciprocal of the average reciprocals. "Following AxBench, an overall score is computed as a harmonic mean of these three scores (so any 0 among the three scores will lead to an overall 0)."

- Hinge loss: A margin-based loss that penalizes violations beyond a threshold; here used to constrain embedding norms. "we experimented with a version of training which added a hinge-loss to the training objective, encouraging the embedding norms to stay around 1."

- In-context learning: Inducing new behaviors by providing examples in the prompt rather than changing model parameters. "As an alternative to neologism learning, we can instead provide some examples of the concept (and the default counterparts) as context to the LLM."

- LLM alignment: The process of making model behavior accord with human values or desired norms. "LLM alignment can be framed as a problem of communicating human values to machines, and understanding machine concepts, like their interpretations of our values."

- LIMA dataset: A dataset of diverse, high-quality instructions and responses used for prompting and evaluation. "We use the LIMA dataset (Zhou et al., 2023) as a source of diverse questions"

- LLM judge: An automated evaluation setup where an LLM scores outputs for criteria like concept adherence, fluency, or instruction following. "we evaluate using the three AxBench LLM-judge prompts (with Gemini-2.5-Pro)"

- Machine-only synonyms: Verbalizations that appear unrelated or unintuitive to humans but reliably induce a target behavior in models. "Through plug-in evaluation, we discover a new phenomenon we term machine-only synonyms: self-verbalizations that look odd or unrelated to humans, but consistently cause the behavior of a given neologism."

- Mechanistic interpretability: Research aiming to understand internal computations and representations in models at the component level. "Considerable (mechanistic) interpretability research aims to build tools, such as sparse autoencoders (Cunningham et al., 2023), steering vectors (Zou et al., 2023; Turner et al., 2023), and probes (Alain and Bengio, 2016; Burns et al., 2023), for more precisely discovering machine concepts or communicating human concepts (steering)."

- Negative log-likelihood (NLL): A loss function that penalizes low-probability assignments to observed data, commonly used for next-token prediction. "While we experimented with a simple likelihood loss (NLL), we eventually found improvements from APO-up"

- Neologism learning: Introducing new tokens with trainable embeddings that encode controllable concepts while keeping all original model parameters frozen. "This method introduces a new word by adding a new word embedding and training with examples that exhibit the concept with no other changes in model parameters."

- Out-of-context learning: A form of generalization where changes induced by training on behaviors transfer to model descriptions or meta-concepts. "is a non-obvious form of generalization sometimes called out-of-context learning (Betley et al., 2025; Berglund et al., 2023)."

- Plug-in evaluation: An evaluation method that replaces a learned neologism with its verbalization in the prompt to test whether it induces the same behavior. "we propose a simple method, plug-in evaluation: we take the prompt with the neologism, and we replace the neologism with the verbalization."

- Preference model: A model that scores or ranks candidate responses according to human or proxy preferences, used for data generation or training. "incorporating feedback from a preference model"

- Probes: Lightweight models (often linear) trained to detect or quantify concepts from internal representations. "probes (Alain and Bengio, 2016; Burns et al., 2023)"

- Representation engineering: Techniques that manipulate internal activations or learned features to steer model behavior. "representation engineering (Zou et al., 2023) have all been proposed to intervene on model activations to cause desirable behavior."

- Self-verbalization: Having a model describe, in natural language, the meaning or effect of a learned neologism. "self-verbalization is the process of querying a model for a natural language description of a learned neologism."

- Sparse autoencoders: Autoencoders trained to produce sparse features, used to discover interpretable components in model representations. "sparse autoencoders (Cunningham et al., 2023)"

- Steering vectors: Directions in activation space that, when added to representations, induce specific behavioral changes. "steering vectors (Zou et al., 2023; Turner et al., 2023)"

- Subword: A tokenization unit smaller than a word, used by many LLMs to represent text; different words can share subword pieces. "Lack shares no subwords with any synonyms that we're aware of (e.g., laconic)."

- Vocabulary expansion: Extending a model’s token set with new entries and corresponding embedding vectors to represent new concepts. "We define an expanded vocabulary and an expanded embedding matrix."

- Word embedding: A learned vector representation associated with a token that determines how the model internally represents and uses that word. "This method introduces a new word by adding a new word embedding and training with examples that exhibit the concept with no other changes in model parameters."