- The paper introduces a multi-agent system that automates literature surveys and achieves an overall score of 8.18/10, compared with 4.77/10 for the AutoSurvey baseline.

- The methodology uses sentence-transformer embeddings and K-means clustering to organize the literature into thematic clusters, which are then synthesized.

- The system’s 12-dimensional evaluation framework underscores its potential to deliver comprehensive and efficient literature synthesis in rapidly evolving fields.

Agentic AutoSurvey: Let LLMs Survey LLMs

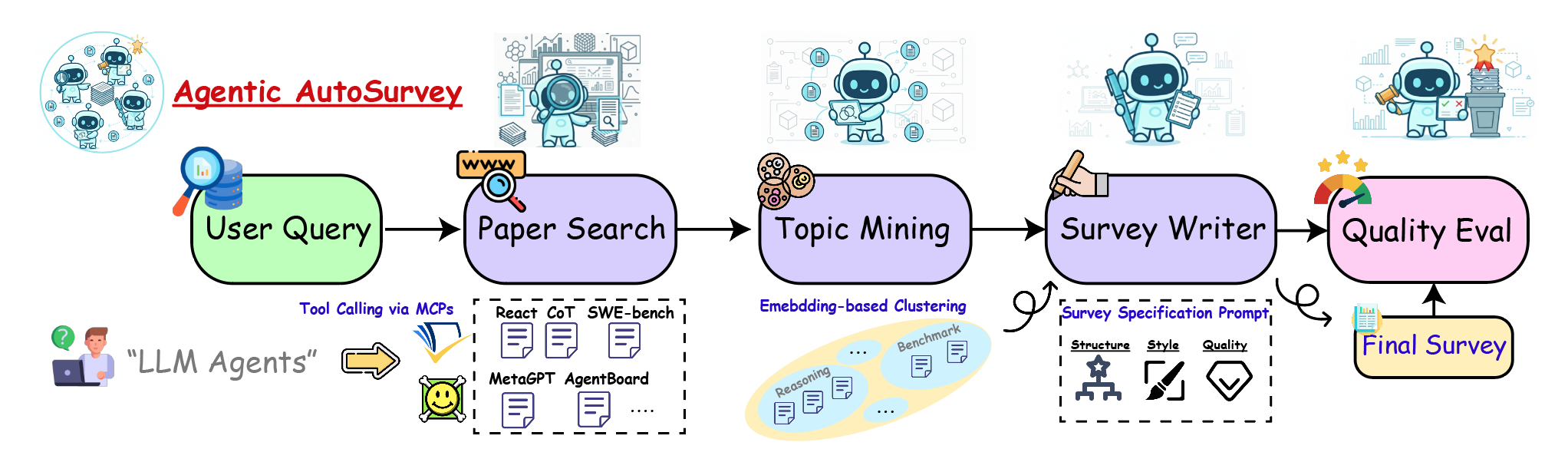

Agentic AutoSurvey is a multi-agent system that automates the generation of literature surveys in rapidly evolving scientific domains, with a particular focus on LLMs. It relies on specialized agents, including a Paper Search Specialist, a Topic Mining & Clustering agent, an Academic Survey Writer, and a Quality Evaluator, to overcome limitations of prior approaches such as AutoSurvey. The system improves synthesis quality, citation coverage, and evaluation scores, achieving an overall score of 8.18/10 versus 4.77/10 for AutoSurvey.

System Architecture and Methodology

Multi-Agent Framework

Agentic AutoSurvey uses a multi-agent architecture to process and synthesize the literature, with each agent specialized for a specific task:

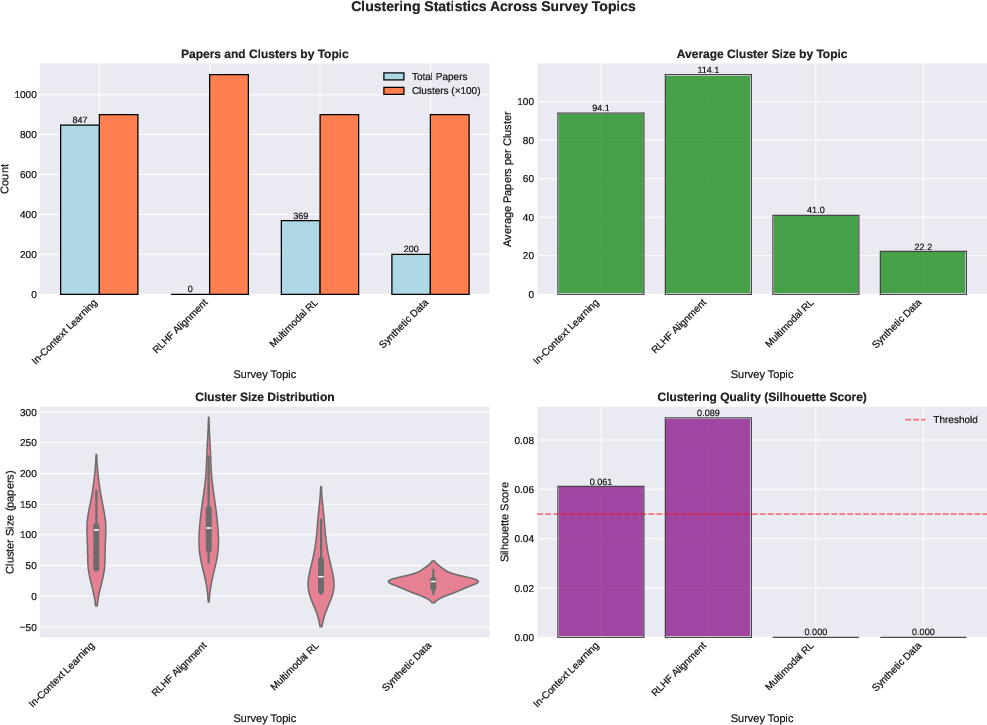

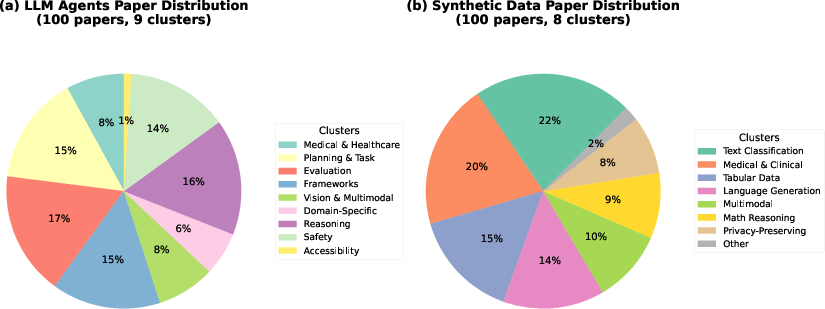
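The hand-off between the four agents can be sketched as a pipeline over shared state. This is a minimal illustration, not the authors' implementation: it assumes the agents run sequentially in the order search, clustering, writing, evaluation, and the `SurveyState` fields, function names, and stub behavior are all hypothetical placeholders for the real LLM- and API-backed agents.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SurveyState:
    """Hypothetical shared state handed from one agent to the next."""
    topic: str
    papers: List[dict] = field(default_factory=list)
    clusters: Dict[int, List[dict]] = field(default_factory=dict)
    draft: str = ""
    scores: Dict[str, float] = field(default_factory=dict)


Agent = Callable[[SurveyState], SurveyState]


def paper_search(state: SurveyState) -> SurveyState:
    # Stub: a real agent would issue diverse queries to Semantic Scholar / arXiv.
    state.papers = [{"id": "p1", "title": f"{state.topic}: benchmarks"},
                    {"id": "p2", "title": f"{state.topic}: planning"}]
    return state


def topic_mining(state: SurveyState) -> SurveyState:
    # Stub: a real agent would embed and cluster; here every paper is its own cluster.
    state.clusters = {i: [p] for i, p in enumerate(state.papers)}
    return state


def survey_writer(state: SurveyState) -> SurveyState:
    # Stub: one line per theme, citing that theme's papers by ID.
    state.draft = "\n".join(
        f"Theme {cid}: " + ", ".join(f"[{p['id']}]" for p in papers)
        for cid, papers in state.clusters.items())
    return state


def quality_evaluator(state: SurveyState) -> SurveyState:
    # Stub: records only the fraction of clusters the draft discusses.
    covered = sum(f"Theme {cid}:" in state.draft for cid in state.clusters)
    state.scores["cluster_coverage"] = covered / len(state.clusters) if state.clusters else 0.0
    return state


PIPELINE: List[Agent] = [paper_search, topic_mining, survey_writer, quality_evaluator]


def run_pipeline(topic: str, agents: List[Agent] = PIPELINE) -> SurveyState:
    """Run the agents in order, each reading and updating the shared state."""
    state = SurveyState(topic=topic)
    for agent in agents:
        state = agent(state)
    return state
```

Calling `run_pipeline("LLM agents")` yields a two-line draft and `scores == {"cluster_coverage": 1.0}`. Because every agent has the same signature, a stub can be replaced by a real implementation, or the evaluator's output could be fed back to the writer for revision, without changing the driver loop.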

Clustering Analysis

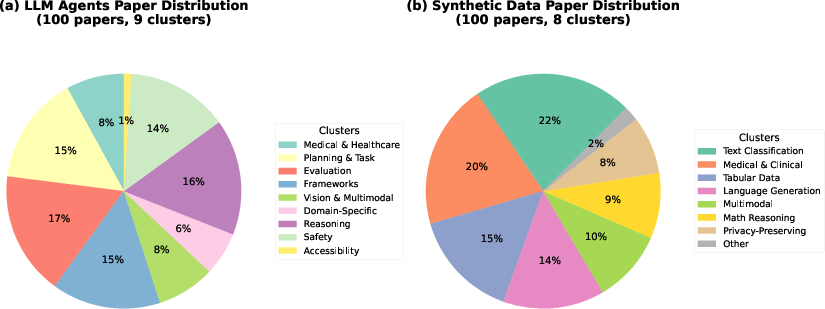

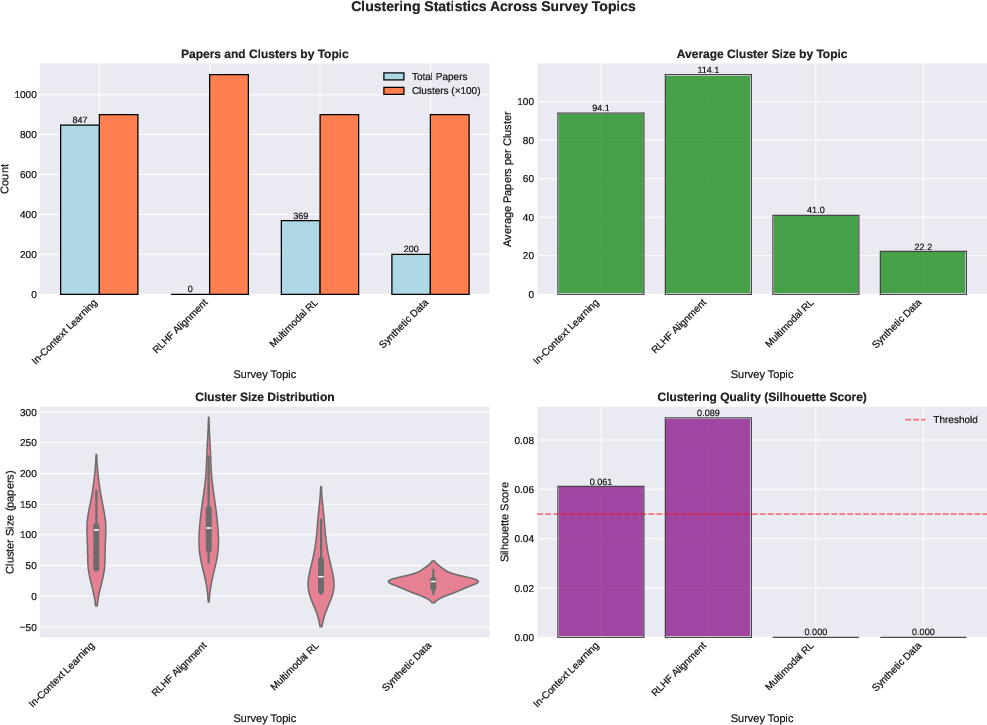

The Topic Mining & Clustering Agent organizes the literature into thematic clusters based on semantic embeddings. The clustering configuration is selected by maximizing the silhouette score, and the agent also identifies relationships between clusters, which enriches the subsequent synthesis.

Figure 2: Cluster distribution for (a) LLM Agents and (b) Synthetic Data Generation topics, showing the thematic organization discovered by the Topic Mining Agent.
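Silhouette-driven K-means can be sketched with NumPy alone. This is an illustrative sketch under stated assumptions, not the paper's code: it uses plain Lloyd's iterations with a deterministic farthest-first seeding (a simplification; the paper's initialization is not specified here), Euclidean distance, and a candidate range of k chosen arbitrarily. The `related_clusters` helper, which flags cluster pairs whose centroids have high cosine similarity, is one hypothetical way to realize "inter-cluster relationship identification"; the threshold is likewise an assumption.

```python
import numpy as np


def farthest_first_init(X, k):
    """Deterministic maximin seeding: start at X[0], then repeatedly add the
    point farthest from its nearest already-chosen centroid."""
    idx = [0]
    d = ((X - X[0]) ** 2).sum(axis=1)
    for _ in range(1, k):
        nxt = int(d.argmax())
        idx.append(nxt)
        d = np.minimum(d, ((X - X[nxt]) ** 2).sum(axis=1))
    return X[idx].astype(float)


def kmeans(X, k, n_iter=100):
    """Lloyd's K-means; returns (labels, centroids)."""
    centroids = farthest_first_init(X, k)
    for _ in range(n_iter):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points (keep it if the cluster is empty).
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids


def silhouette(X, labels):
    """Mean silhouette coefficient, s(i) = (b(i) - a(i)) / max(a(i), b(i))."""
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    present = np.unique(labels)
    scores = []
    for i in range(len(X)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same <= 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        a = D[i, same].sum() / (n_same - 1)  # mean distance to other members (self-distance is 0)
        b = min(D[i, labels == c].mean() for c in present if c != labels[i])
        denom = max(a, b)
        scores.append((b - a) / denom if denom > 0 else 0.0)
    return float(np.mean(scores))


def select_k(X, k_min=2, k_max=10):
    """Try each k and keep the clustering with the highest mean silhouette."""
    best = None
    for k in range(k_min, min(k_max, len(X) - 1) + 1):
        labels, centroids = kmeans(X, k)
        if len(np.unique(labels)) < 2:
            continue  # silhouette is undefined for a single cluster
        s = silhouette(X, labels)
        if best is None or s > best[0]:
            best = (s, k, labels, centroids)
    return best  # (score, k, labels, centroids)


def related_clusters(centroids, threshold=0.5):
    """Cluster pairs whose centroids have cosine similarity >= threshold (hypothetical rule)."""
    C = centroids / np.linalg.norm(centroids, axis=1, keepdims=True)
    sim = C @ C.T
    k = len(C)
    return [(i, j, float(sim[i, j]))
            for i in range(k) for j in range(i + 1, k) if sim[i, j] >= threshold]
```

In the described pipeline, `X` would hold one embedding per paper, for example the output of sentence-transformers' `SentenceTransformer("all-MiniLM-L6-v2").encode(texts)`. On three well-separated synthetic blobs, `select_k` picks k = 3, since any other k either merges distant groups or splits a tight one and lowers the mean silhouette.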

Technical Implementation

Paper Retrieval and Clustering

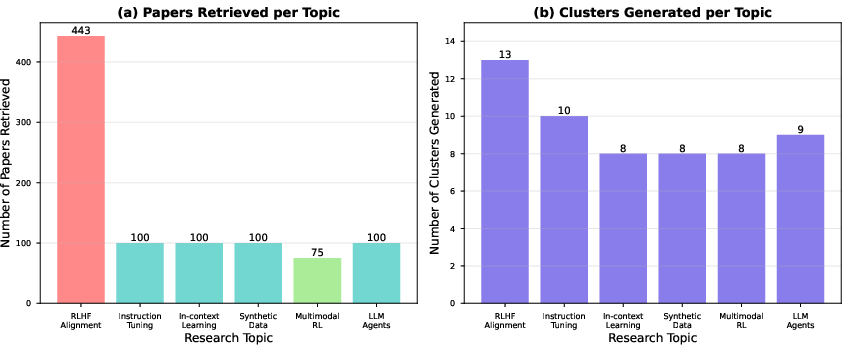

The Paper Search Specialist generates diverse queries and retrieves papers through APIs such as Semantic Scholar and arXiv to maximize coverage. Each paper is then embedded with the all-MiniLM-L6-v2 sentence-transformer model, which maps text to a 384-dimensional dense vector space, and the embeddings are clustered with K-means, keeping the configuration that maximizes the silhouette score.
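Querying several sources returns overlapping hits, so the results have to be merged before embedding. The sketch below shows one simple way to do this, assuming deduplication by normalized title; the hit format (a dict with a `"title"` key), the source labels, and both function names are hypothetical, and the actual Semantic Scholar and arXiv API calls are deliberately omitted.

```python
import re
from typing import Dict, Iterable, List


def normalize_title(title: str) -> str:
    """Lowercase and collapse punctuation/whitespace so one paper matches across sources."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_results(results_by_source: Dict[str, Iterable[dict]]) -> List[dict]:
    """Union of per-source hits, deduplicated by normalized title.

    Each merged record keeps the first title seen and lists every source that
    returned the paper, which gives a rough view of per-source coverage.
    """
    merged: Dict[str, dict] = {}
    for source, hits in results_by_source.items():
        for hit in hits:
            key = normalize_title(hit["title"])
            if key not in merged:
                merged[key] = {"title": hit["title"], "sources": []}
            if source not in merged[key]["sources"]:
                merged[key]["sources"].append(source)
    return list(merged.values())
```

Title matching is a deliberately crude choice; matching on DOIs or arXiv identifiers, when the sources provide them, would be more robust against titles that differ only in wording.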

Survey Generation

The Academic Survey Writer produces content that goes beyond simple enumeration of papers, emphasizing integration across clusters and identifying methodological patterns and trends. The goal is publication-quality narrative that cites a substantial share of the retrieved literature.
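Citation coverage can be made concrete with a small metric. The sketch assumes a definition that the text does not spell out: coverage is the fraction of retrieved papers cited at least once in the survey, and citations appear as bracketed keys such as `[p1]` or `[p1, p3]`. The key format and function names are hypothetical.

```python
import re
from typing import Iterable, List, Set, Tuple

# Matches a bracketed group such as "[p1]" or "[p1, p3]" (assumed citation style).
CITE_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def cited_keys(text: str) -> Set[str]:
    """Collect every citation key that appears inside square brackets."""
    keys: Set[str] = set()
    for group in CITE_PATTERN.findall(text):
        keys.update(k.strip() for k in group.split(",") if k.strip())
    return keys


def citation_coverage(text: str, retrieved_ids: Iterable[str]) -> Tuple[float, List[str]]:
    """Fraction of retrieved papers cited at least once, plus the uncited IDs."""
    ids = list(dict.fromkeys(retrieved_ids))  # de-duplicate while keeping order
    if not ids:
        return 0.0, []
    cited = cited_keys(text)
    uncited = [i for i in ids if i not in cited]
    return 1 - len(uncited) / len(ids), uncited
```

For the text `"Planning agents [p1, p3] extend tool use [p2]. See also [p3]."` and retrieved IDs `p1` to `p4`, this returns a coverage of 0.75 with `["p4"]` uncited. Returning the uncited IDs, not just the ratio, lets a writer agent target the papers it has missed.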

Evaluation Framework

The Quality Evaluator agent scores each survey along 12 dimensions, including synthesis quality, citation coverage, and critical analysis, giving a comprehensive assessment of the survey's quality.
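How 12 per-dimension scores might roll up into a single score out of 10 can be sketched as follows. The aggregation rule is an assumption, since the text does not state it: an unweighted mean by default, with optional weights. Only three of the twelve dimension names are given above, so the example uses placeholder names rather than guessing the rest.

```python
from statistics import mean
from typing import Dict, Optional


def overall_score(scores: Dict[str, float],
                  weights: Optional[Dict[str, float]] = None,
                  n_dims: int = 12) -> float:
    """Aggregate per-dimension scores on a 0-10 scale into one overall score.

    Assumes an unweighted mean unless weights are supplied (hypothetical rule).
    """
    if len(scores) != n_dims:
        raise ValueError(f"expected {n_dims} dimensions, got {len(scores)}")
    for name, s in scores.items():
        if not 0 <= s <= 10:
            raise ValueError(f"score for {name!r} is outside [0, 10]: {s}")
    if weights is None:
        return round(mean(scores.values()), 2)
    total = sum(weights[name] for name in scores)
    return round(sum(scores[name] * weights[name] for name in scores) / total, 2)
```

With six placeholder dimensions at 9.0 and six at 7.0, the unweighted result is 8.0; tripling the weight of one 9.0 dimension raises it to 8.14. Validating the dimension count and range up front catches an evaluator response that silently drops or mis-scales a dimension.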

Experimental Evaluation

Agentic AutoSurvey improves on AutoSurvey across all evaluation dimensions, with an overall score of 8.18/10 versus 4.77/10. It is especially strong in core quality dimensions such as synthesis quality and citation coverage, which the authors attribute to the orchestration of specialized agents.
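To put the headline gap in perspective, the overall scores reported earlier (8.18/10 for Agentic AutoSurvey, 4.77/10 for AutoSurvey) give the following absolute and relative improvement. This is simple arithmetic on the reported numbers, not a figure taken from the paper:

$$
\Delta = 8.18 - 4.77 = 3.41, \qquad \frac{\Delta}{4.77} = \frac{3.41}{4.77} \approx 0.715 \;(\approx 71.5\%)
$$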

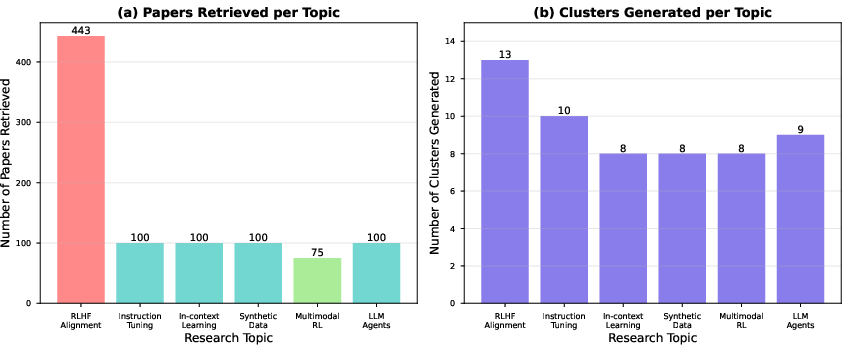

Figure 3: Distribution of papers retrieved and clusters generated across the six processed topics.

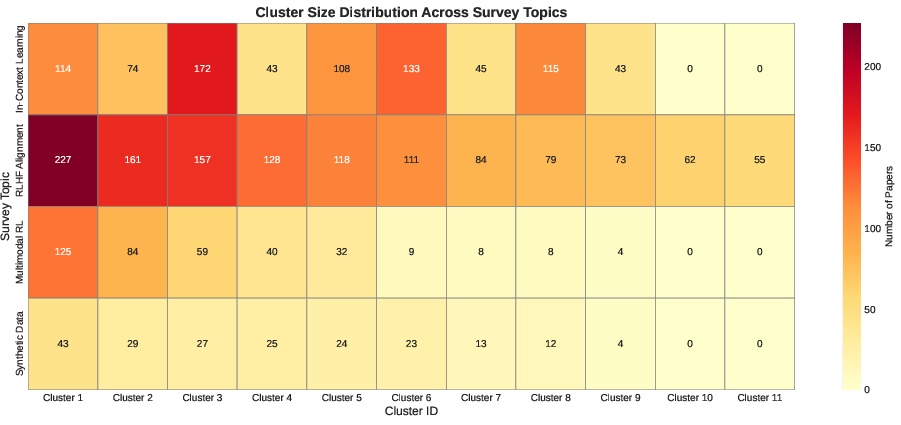

Clustering Quality

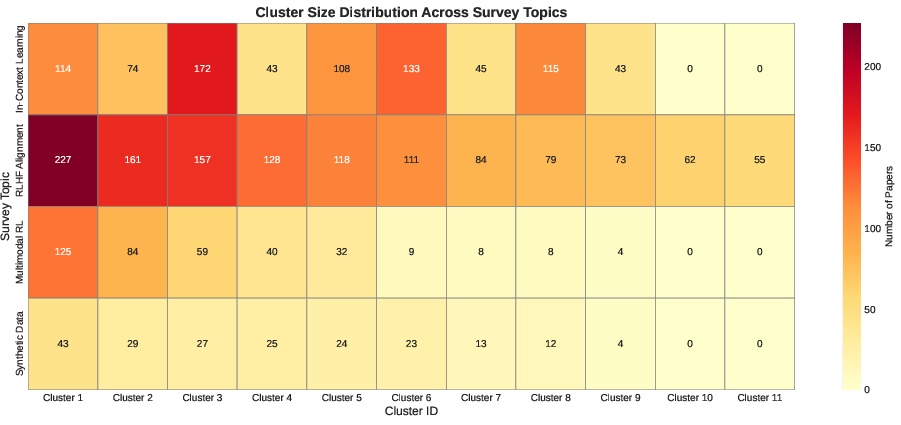

The clusters are clearly separated thematically and capture diverse research threads within each topic, which contributes to the high synthesis quality scores.

Figure 4: Cluster size distribution heatmap showing the number of papers in each cluster across four survey topics.

Discussion and Limitations

While Agentic AutoSurvey is a significant advance in automated synthesis, limitations remain: for example, citation coverage drops when the system handles large corpora. Future enhancements may include hierarchical writing strategies that incorporate a broader range of the literature.

Conclusion

Agentic AutoSurvey advances automated survey generation through its multi-agent architecture, achieving superior synthesis quality and citation coverage. This approach offers significant potential benefits to researchers in rapidly evolving scientific domains, facilitating comprehensive understanding and integration of vast literatures.

Figure 5: Clustering statistics across survey topics, indicating the clustering quality across evaluated dimensions.

In summary, Agentic AutoSurvey is a meaningful step forward for AI-driven survey generation. Future work could address its scalability challenges and improve collaboration between agents to further raise synthesis quality.