Seeing is Deceiving: Mirror-Based LiDAR Spoofing for Autonomous Vehicle Deception (2509.17253v2)

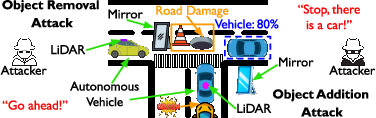

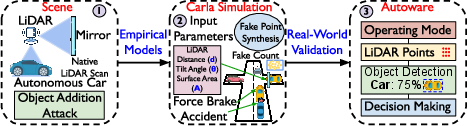

Abstract: Autonomous vehicles (AVs) rely heavily on LiDAR sensors for accurate 3D perception. We show a novel class of low-cost, passive LiDAR spoofing attacks that exploit mirror-like surfaces to inject or remove objects from an AV's perception. Using planar mirrors to redirect LiDAR beams, these attacks require no electronics or custom fabrication and can be deployed in real settings. We define two adversarial goals: Object Addition Attacks (OAA), which create phantom obstacles, and Object Removal Attacks (ORA), which conceal real hazards. We develop geometric optics models, validate them with controlled outdoor experiments using a commercial LiDAR and an Autoware-equipped vehicle, and implement a CARLA-based simulation for scalable testing. Experiments show mirror attacks corrupt occupancy grids, induce false detections, and trigger unsafe planning and control behaviors. We discuss potential defenses (thermal sensing, multi-sensor fusion, light-fingerprinting) and their limitations.

Explain it Like I'm 14

Overview

This paper looks at a sneaky problem for self-driving cars: how simple mirrors can trick a car’s laser-based eyes (called LiDAR). The authors show that by placing ordinary, low-cost mirrors in the environment, someone can make a car “see” fake obstacles or hide real ones, without hacking the car or using any special electronics. They study how this works, test it in the real world, and explain why it’s a serious safety risk.

What questions were the researchers trying to answer?

The paper focuses on one big question: can everyday reflective surfaces (like flat mirrors) be used to fool a self-driving car’s LiDAR into:

- Adding fake objects (“ghosts”) that aren’t really there, causing the car to brake or swerve

- Removing real objects (hiding hazards), making the car think the road is clear when it’s not

They also ask: how do mirror size, angle, and distance change the trick? And what happens when these tricks hit the car’s full driving software pipeline, not just its sensors?

How did they study it?

To make this understandable, think of LiDAR as a laser flashlight: the car sends out quick pulses of light and measures how long they take to bounce back. That tells it how far away things are and builds a 3D map made of tiny dots (a “point cloud”).
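
The timing idea above can be written down in a few lines. This is a minimal sketch of the general time-of-flight relation (range equals half the round-trip distance travelled by light), not code from the paper; the function name `tof_range_m` is made up for illustration.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_range_m(round_trip_s: float) -> float:
    """Range implied by a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half
    of what light covers in the measured time.
    """
    return C_M_PER_S * round_trip_s / 2.0


# A pulse that returns after 100 nanoseconds corresponds to roughly 15 m.
print(round(tof_range_m(100e-9), 3))
```

The sensor only knows the elapsed time and the direction it fired in. It has no way to tell whether the pulse travelled in a straight line, which is the gap the mirror attacks exploit.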

The team used several approaches:

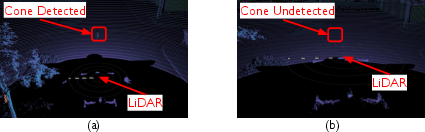

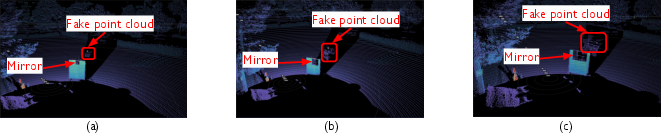

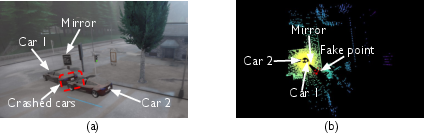

- Real-world experiments: They mounted a high-quality LiDAR (Ouster OS1-128) on a test vehicle and ran outdoor trials. They placed mirrors at different angles and sizes to see how the LiDAR’s 3D dot map changed.

- Simple physics modeling: They used basic “law of reflection” (the angle in equals the angle out) to predict where the LiDAR light would go after hitting a mirror. This helps explain why mirrors can both hide real objects and create fake ones.

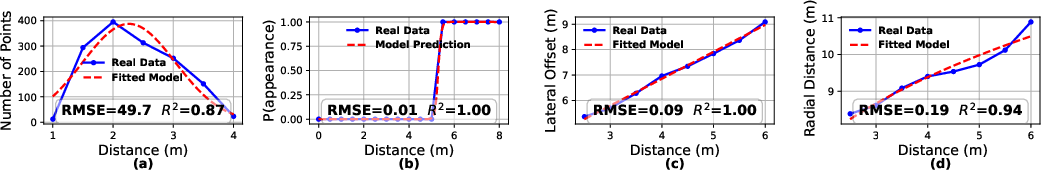

- Empirical models (data-driven): They measured how many ghost points appear, where they show up, and how likely they are to appear as the distance, mirror angle, and mirror area change. They fit simple math patterns to the real data to predict these effects.

- Simulation: They built an attack framework in the CARLA driving simulator. This let them safely test many mirror setups and watch how the car reacts without risking real accidents.

- Full-stack testing: They ran the Autoware self-driving software on their test vehicle to see how mirror tricks affect not just sensing, but also object detection, mapping, planning, and control (like braking).
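
The "angle in equals angle out" rule from the physics-modeling step can be sketched with plain vector arithmetic. This is an illustrative, idealized model of a perfect planar mirror, not the authors' implementation; the helper names `reflect` and `ghost_point` and the example distances are assumptions chosen for the demonstration.

```python
import math


def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def reflect(direction, normal):
    """Law of reflection: r = d - 2 (d . n) n, with n a unit normal."""
    k = 2.0 * dot(direction, normal)
    return tuple(d - k * n for d, n in zip(direction, normal))


def ghost_point(sensor, beam_dir, dist_to_mirror_m, dist_mirror_to_surface_m):
    """Where the LiDAR places the return.

    The sensor assumes a straight path, so it puts the point along the
    ORIGINAL beam direction at the total one-way path length.
    """
    total = dist_to_mirror_m + dist_mirror_to_surface_m
    return tuple(s + total * b for s, b in zip(sensor, beam_dir))


# Beam fired along +x hits a mirror tilted 45 degrees and is turned toward +y.
s = 1.0 / math.sqrt(2.0)
print(reflect((1.0, 0.0, 0.0), (-s, s, 0.0)))

# Mirror 5 m ahead, real surface 3 m to the side of the mirror:
# the real surface is near (5, 3, 0), but the point is reported at (8, 0, 0).
print(ghost_point((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 5.0, 3.0))
```

In this idealized picture the reported point lies behind the mirror, on the extension of the outgoing beam, which matches the description of ghost obstacles appearing behind the mirror.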

What did they find?

The main results are clear and important:

- Two kinds of mirror tricks really work:

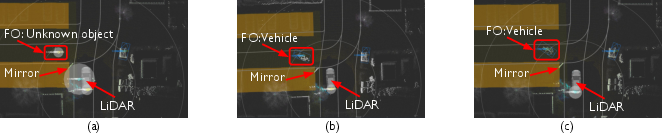

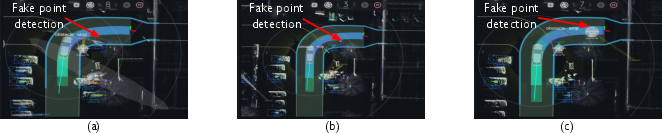

- Object Removal (ORA): A mirror can redirect the LiDAR’s light away from a real object (like a traffic cone). The LiDAR gets no return signal there, so the car thinks nothing is in the way. In tests, the cone completely disappeared from the 3D map.

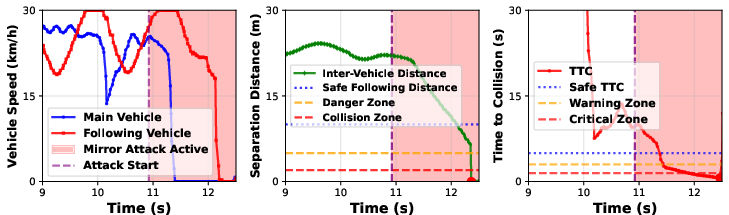

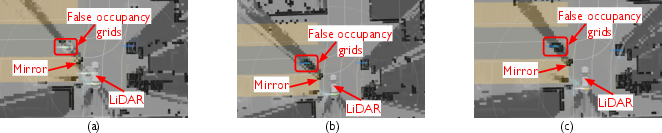

- Object Addition (OAA): A mirror can bounce LiDAR light onto another surface and back, making the car think there’s an object behind the mirror. This creates a “ghost” obstacle. In tests, larger mirrors made denser, more convincing ghost shapes—sometimes looking like a vehicle-sized object.

- Mirror angle matters:

- Shallow angles (like 30°) tended to make ghosts appear suddenly and close to the car, but only briefly.

- Steeper angles (like 60°) made ghosts visible earlier and for longer as the car approached.

- Mirror size matters:

- Bigger reflective area produced more ghost points, making the fake object look more solid and real to the car’s perception system.

- The effects are predictable:

- Using simple geometry and data patterns, they built models to predict where the ghost would appear (sideways offset and distance), how many points it would have, and how likely it would be to show up, based on the mirror’s setup.

- Real driving software can be fooled:

- When the team ran the Autoware stack, these mirror-induced artifacts broke object detection, corrupted occupancy maps (the grid the car uses to decide where it can drive), and sometimes caused hazardous behavior, like false emergency braking.
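
To see why a handful of ghost points matters downstream, here is a deliberately simplified occupancy-grid sketch. It only bins 2D points into cells; real stacks such as Autoware use more elaborate updates, so treat the function `occupied_cells`, the 0.5 m cell size, and the sample coordinates as assumptions for illustration.

```python
import math


def occupied_cells(points_xy, cell_size_m=0.5):
    """Map (x, y) points in metres to the set of grid cells they fall in."""
    return {
        (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        for x, y in points_xy
    }


real_points = [(10.1, 0.2), (10.3, 0.1)]  # returns from a real object ahead
ghost_points = [(6.2, 0.3)]               # hypothetical mirror-induced return

clean = occupied_cells(real_points)
addition = occupied_cells(real_points + ghost_points)  # object addition
removal = occupied_cells([])                           # object removal: no returns

print(sorted(addition - clean))  # cells that became occupied because of the ghost
print(sorted(clean - removal))   # cells that look free because returns vanished
```

In this toy model, an addition attack blocks a cell that is actually free, and a removal attack frees a cell that is actually blocked. A planner that trusts the grid inherits both errors.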

Why is this important?

Self-driving cars rely heavily on LiDAR because it’s supposed to be dependable in many conditions. This paper shows a cheap, passive, and stealthy way to trick LiDAR without lasers, hacking, or fancy equipment—just mirrors. That means:

- It’s easier to carry out than more complex “active” attacks

- It can cause immediate safety risks (hard braking, swerving, or missing a real hazard)

- Current systems have a blind spot for mirror-like surfaces when attackers use them deliberately

What could this mean for the future?

The research suggests several impacts and next steps:

- Sensor robustness needs to improve: Cars should learn to recognize mirror-induced reflections and not trust them blindly.

- Combine sensors wisely: Using radar, cameras, and thermal sensors together can help cross-check LiDAR. For example, a ghost in LiDAR that doesn’t show up on radar might be flagged as suspicious.

- Better maps and models: Training perception systems to handle reflections, and modeling mirror effects in simulators (like CARLA) can help developers test defenses safely at scale.

- Practical defenses have limits: Ideas like thermal imaging or “light fingerprinting” (checking the nature of the reflected light) could help, but each has real-world constraints (cost, weather, angle, or material issues). There’s no single perfect fix yet.
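
The cross-checking idea in the sensor-combination point can be sketched as a simple corroboration test. This is a hypothetical illustration, not a defense evaluated in the paper; the function name `flag_uncorroborated`, the 1.5 m matching radius, and the sample coordinates are assumptions, and both sensors are assumed to report obstacle positions in the same vehicle frame.

```python
import math


def flag_uncorroborated(lidar_obstacles, radar_detections, max_gap_m=1.5):
    """Return LiDAR obstacle centroids with no radar detection nearby.

    Both inputs are lists of (x, y) positions in metres in a shared frame.
    """
    suspicious = []
    for lx, ly in lidar_obstacles:
        supported = any(
            math.hypot(lx - rx, ly - ry) <= max_gap_m
            for rx, ry in radar_detections
        )
        if not supported:
            suspicious.append((lx, ly))
    return suspicious


lidar = [(10.0, 0.0), (6.0, 1.0)]  # second entry plays the role of a ghost
radar = [(10.4, 0.2)]              # radar only confirms the first obstacle
print(flag_uncorroborated(lidar, radar))
```

A check like this addresses phantom obstacles only. It does not help with removal attacks, where the LiDAR reports nothing to cross-check, and a flagged obstacle may still be real if the radar simply missed it.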

In short, the paper reveals that “seeing” with LiDAR can be deceiving when mirrors are involved. It pushes the autonomous driving community to rethink how to handle reflective surfaces, improve sensor fusion, and test full-stack defenses against simple, real-world tricks.

Knowledge Gaps

Unresolved Knowledge Gaps, Limitations, and Open Questions

The following gaps highlight what remains missing, uncertain, or unexplored, and point to concrete directions for future research:

- Generalization across LiDAR hardware: Results and models are based on a single spinning LiDAR (Ouster OS1-128, 905 nm, 10 Hz). It is unknown how attack efficacy and artifact characteristics vary for other architectures (solid-state/MEMS, flash LiDAR, multi-echo/dual-return systems), different wavelengths (e.g., 1550 nm), beam divergence profiles, and vendor-specific firmware filters.

- Sensor mounting and vehicle geometry dependence: The experiments use a roof-mounted sensor at ~2.2 m. The sensitivity of OAA/ORA to LiDAR mounting height, tilt, and vehicle body shape/materials (secondary reflection surfaces) is not quantified.

- Environmental robustness: All trials occur in daylight, clear, dry conditions at low speed and in mostly stationary setups. The impacts of rain, fog, dust, nighttime operation, sun glare, wet/glossy surfaces, and wind-induced mirror instability on attack success are untested.

- Dynamic driving scenarios: The attack’s reliability at realistic urban speeds, during acceleration/braking, over uneven terrain, and in dense traffic remains uncharacterized. Frame-to-frame persistence and tracking effects under motion have not been quantified.

- Minimal mirror requirements: The minimum mirror area, reflectivity, and placement accuracy needed to achieve OAA/ORA under varying distances and angles are not mapped; a full parameter sweep to define operational envelopes is missing.

- Non-planar and common specular surfaces: The models and experiments focus on planar mirrors; the effects of curved mirrors (convex/concave), glass windows, polished vehicle bodies, wet pavement, and mixed specular/diffuse materials on artifact formation and detectability are unexplored.

- Multi-bounce and complex reflection paths: The modeling emphasizes two-hop paths; scenarios with multiple reflections, partial occlusions, or grazing incidence angles (including edge diffraction or aperture effects) are not treated.

- Artifact signature characterization: Systematic analysis of return intensity distributions, ring/channel patterns, timing/echo characteristics, and noise statistics of mirror-induced points (vs. genuine obstacles) is limited; such signatures could enable detection but are not exhaustively studied.

- End-to-end evaluation diversity: Impact is demonstrated on Autoware; generalization to other full AV stacks (Apollo, LGSVL/AWSIM-based stacks, NVIDIA DRIVE, commercial systems) and different perception/planning configurations is untested.

- Downstream module effects: Beyond occupancy grids and emergency braking, the influence on SLAM/localization accuracy, HD-map updates, lane-level decision making, tracking, and behavior prediction pipelines is insufficiently quantified.

- Quantitative safety metrics: No formal risk assessment (e.g., attack success probability, false-positive/false-negative rates, time-to-collision changes, intervention frequency) across scenarios; statistical robustness over repeated trials is missing.

- Attack feasibility and stealth in-the-wild: Practical constraints—mirror deployment logistics, concealment strategies (reflective films, signage integration), durability, and detection by bystanders/road authorities—are not analyzed.

- Multi-sensor fusion behavior: The paper notes fusion as a defense but lacks controlled experiments measuring how radar, cameras (visible/NIR), thermal IR, and GPS/IMU signals interact with mirror artifacts and whether fusion can be reliably robust without a high false-alarm rate.

- Hardware-level mitigations: Vendor anti-multipath algorithms, pulse coding, polarization, multi-echo gating, variable beam divergence, or adaptive thresholds are suggested but not experimentally evaluated for effectiveness and trade-offs.

- Polarization and spectral properties: The role of polarization states and wavelength-dependent reflectivity in both attack creation and detection is unexamined; polarization-aware LiDAR or filters may change outcomes.

- Realistic simulation physics: CARLA-based injection relies on empirical models rather than physically accurate ray-tracing of specular reflections. A validated, high-fidelity optics simulation (e.g., GPU path tracing with material BRDFs) is not provided.

- Cross-simulator reproducibility: The empirical attack injector is only reported for CARLA; replication across other simulators (LGSVL, AWSIM, Baidu Apollo, ROS/Gazebo) and standardized scenarios/benchmarks is lacking.

- Broader parameter exploration: A systematic sweep over distance, angle, mirror area, sensor FOV/resolution, and secondary surface types to map attack success regions and performance boundaries is missing.

- Attacker capability bounds: Limits on placement accuracy, time-on-target, angle tolerance, and the need for prior knowledge of sensor pose are not quantified, leaving uncertainty about attacker effort and reliability.

- Combined attack vectors: Potential synergies between passive mirror-based deception and active pulse injection/jamming or adversarial patterns (printed artifacts) are not explored.

- Detection algorithms and validation: Proposed defenses (thermal imaging, light fingerprinting, multi-sensor fusion) lack concrete algorithmic implementations, datasets, and quantitative evaluations (ROC curves, latency, compute overhead, adverse-condition performance).

- Dataset availability: While a project website is referenced, a curated, labeled dataset of mirror-induced artifacts (raw packets, calibration, ground truth) for training/evaluating detection models is not clearly specified.

- Ethical, regulatory, and mitigation deployment: Operational considerations—legal constraints, roadside infrastructure policies, and practical deployment of defenses (cost, maintenance, privacy)—are not addressed, affecting real-world applicability.

- Multi-vehicle/traffic interactions: Effects when other vehicles or pedestrians are present (e.g., mirror reflections of third-party objects, occlusions) and potential cascade impacts on cooperative perception/V2X are not studied.

Practical Applications

Immediate Applications

Below is a concise set of actionable, real-world uses that can be deployed now, drawing on the paper’s attack formalization (OAA/ORA), empirical models, Autoware evaluation, and the CARLA-based simulation framework.

- AV red‑team testing and QA workflows (Sector: Automotive/Software)

- Use case: Incorporate mirror-based Object Addition/Removal Attacks into pre-release validation and regression suites to probe perception, mapping, and planning vulnerabilities (e.g., false emergency braking, occupancy grid contamination).

- Tools/products/workflows: “Mirror Attack Scenario Pack” for CARLA; Autoware-in-the-loop tests; scripted routes with reflective surfaces; CI pipelines that inject specular artifacts during nightly builds.

- Assumptions/dependencies: Fleet teams have access to simulation environments and LiDAR-equipped stacks; results generalize across LiDAR models and mounting positions.

- Dataset augmentation for robust perception models (Sector: Automotive/Software/Academia)

- Use case: Train detectors/segmenters on synthetic mirror artifacts to improve robustness to specular reflections and multi-path returns.

- Tools/products/workflows: Empirical models from the paper to synthesize artifacts; data loader plugins that diversify angle/distance/surface area; intensity distribution and multi-frame consistency labels.

- Assumptions/dependencies: ML pipelines accept synthetic data; careful domain gap management; availability of baseline datasets for comparison.

- Specular-aware perception filters and planning guardrails (Sector: Automotive/Software)

- Use case: Reduce false positives/negatives by flagging returns consistent with mirrors (e.g., improbable geometry, sub-linear radial scaling, unusual intensity profiles) and temper planner reactions (e.g., graded braking).

- Tools/products/workflows: “Specular Reflection Filter” library; cross-frame consistency checks; radar/camera cross-validation; occupancy-grid anomaly detectors that quarantine suspicious cells.

- Assumptions/dependencies: Additional sensing modalities (camera/radar) available; sufficient compute for real-time checks; tolerable latency/false-alarm rates.

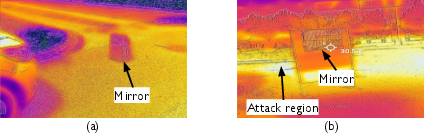

- Multi-sensor and thermal-camera-based mitigation pilots (Sector: Automotive/Robotics)

- Use case: Use thermal imaging or radar to corroborate LiDAR returns when mirror-like deception is suspected, reducing reliance on a single modality.

- Tools/products/workflows: Sensor fusion nodes that down-weight LiDAR-only obstacles lacking thermal/radar support; field trials on test vehicles.

- Assumptions/dependencies: Extra sensors and calibration; environmental conditions suitable for thermal/radar (e.g., weather, reflectivity).

- Mirror-aware SLAM and mapping procedures (Sector: Robotics/Software)

- Use case: Improve indoor/outdoor robot navigation in mirrored/glassed environments (warehouses, hospitals, malls) by detecting/handling specular surfaces to avoid map corruption.

- Tools/products/workflows: Reflective-surface detection in SLAM; map layer tagging “specular risk zones”; re-localization heuristics that ignore mirror-induced ghost structures.

- Assumptions/dependencies: Access to SLAM stack; environments with predictable mirror placements; acceptance of conservative mapping behavior.

- Low-cost physical security and penetration testing (Sector: Security/Automotive/Infrastructure)

- Use case: Validate AV route resilience with passive, non-electronic mirror deployments; train incident response teams to recognize and remediate mirror-induced hazards.

- Tools/products/workflows: “AV Red‑Team Kit” (portable planar mirrors, angle stands); standard operating procedures for setup, observation, and cleanup.

- Assumptions/dependencies: Legal permissions for test sites; safety oversight; clear ethical guidelines.

- Policy guidance for construction, signage, and urban furniture (Sector: Policy/Infrastructure)

- Use case: Issue advisories to avoid placing large mirror-like surfaces near intersections/curves on AV test routes; require temporary signage and barriers that minimize specular reflections.

- Tools/products/workflows: Municipal guidance documents; procurement specs for non-specular materials; site checklists for roadworks contractors.

- Assumptions/dependencies: Local authority buy-in; coordination with utilities and contractors; awareness of AV operations within the jurisdiction.

- HD map enrichment with “specular risk” annotations (Sector: Automotive/Infrastructure)

- Use case: Tag road segments with known reflective facades/signage to inform planning and perception fallback modes.

- Tools/products/workflows: Map data schema update; fleet-based detection of reflective surfaces; update services to disseminate risk layers.

- Assumptions/dependencies: Data collection at scale; map provider tooling; periodic refresh to track facade changes.

- Academic use in coursework, benchmarks, and reproducibility (Sector: Academia)

- Use case: Teach cyber-physical perception attacks; evaluate defenses in controlled sim; create open benchmarks with mirror-induced artifacts.

- Tools/products/workflows: CARLA scenarios; Autoware pipelines; labeled datasets showing OAA/ORA effects and planning impacts.

- Assumptions/dependencies: Access to open-source stacks; institutional compute; ethics approvals for field trials.

- Public safety and property-owner advisories (Sector: Daily life/Policy)

- Use case: Encourage building owners near AV corridors to avoid mirror-faced installations angled toward roadways; add notices for temporary reflective art.

- Tools/products/workflows: Public-awareness campaigns; urban design guidelines; communication through neighborhood associations.

- Assumptions/dependencies: Existence of AV operations; cooperation from property owners.
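
The cross-frame consistency checks mentioned under the specular-aware filters item could look something like the following. This is a hypothetical sketch, not a mechanism from the paper; the class name `PersistenceFilter` and the window and threshold values are assumptions. It confirms an obstacle only after it has been seen in enough recent frames.

```python
from collections import deque


class PersistenceFilter:
    """Confirm an obstacle only if seen in at least min_hits of the last window frames."""

    def __init__(self, window=5, min_hits=3):
        self.history = deque(maxlen=window)  # oldest frames drop off automatically
        self.min_hits = min_hits

    def update(self, detected: bool) -> bool:
        """Record this frame's detection and return the confirmed state."""
        self.history.append(detected)
        return sum(self.history) >= self.min_hits


f = PersistenceFilter(window=5, min_hits=3)
frames = [True, False, True, True, False, False, False]
print([f.update(seen) for seen in frames])
```

The trade-off is latency: every frame spent waiting for confirmation delays the reaction to a real obstacle. A filter like this would mainly suppress brief ghosts, such as those the summary associates with shallow mirror angles, and would not stop ghosts that persist across many frames.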

Long-Term Applications

These applications will benefit from further research, scaling, standardization, or hardware development before broad deployment.

- LiDAR anti-spoofing hardware (polarization/modulation) (Sector: Automotive/Robotics/Hardware)

- Use case: Differentiate direct vs. multi-path/specular returns using polarization-sensitive receivers, coded pulse “light fingerprinting,” or multi-frequency modulation that resists passive redirection.

- Tools/products/workflows: New LiDAR SKUs with polarization channels; firmware signal processing; standardized modulation schemes.

- Assumptions/dependencies: Sensor redesign, BOM/cost impacts, interoperability across vendors; regulatory compliance for emitted light patterns.

- Mirror-detection module integrated in AV stacks (Sector: Automotive/Software)

- Use case: Onboard, physics-informed classifier that detects likely mirror-induced artifacts and triggers fusion backoffs or planner dampening.

- Tools/products/workflows: Runtime modules that leverage the paper’s empirical location/intensity models; learned classifiers trained on large synthetic datasets; planner APIs for graded responses.

- Assumptions/dependencies: Extensive validation to minimize false positives; tight integration with planning; cross-sensor calibration.

- Certification standards and regulatory tests (Sector: Policy/Standards)

- Use case: Include mirror-based OAA/ORA scenarios in AV certification (ISO/SAE) and operational design domain (ODD) declarations; mandate resilience metrics.

- Tools/products/workflows: Standard test catalog; pass/fail thresholds for perception/planning behavior; audit protocols.

- Assumptions/dependencies: Industry consensus; regulator capacity; harmonization across jurisdictions.

- Fleet-scale digital twins with attack injection (Sector: Automotive/Software)

- Use case: Train and evaluate fleets against a wide distribution of reflective artifacts under varied traffic/weather; stress-test model updates before rollout.

- Tools/products/workflows: High-fidelity digital twins; generative scenario engines based on the paper’s geometric/empirical models; telemetry-based replay.

- Assumptions/dependencies: Scalable simulation infra; realistic sensor models per vendor; robust sim-to-real transfer.

- City-scale reflectivity mapping and mitigation programs (Sector: Infrastructure/Policy)

- Use case: Periodically scan and catalog reflective surfaces along AV corridors; retrofit high-risk facades/signage with anti-specular treatments; plan routing around hotspots.

- Tools/products/workflows: Mobile mapping campaigns; “Specular Risk Index” layers; municipal retrofit programs (coatings, baffles).

- Assumptions/dependencies: Budget, stakeholder coordination, maintenance schedules; measurable benefits for multi-operator AV fleets.

- Defensive SLAM/mapping standards for indoor robotics (Sector: Robotics/Standards)

- Use case: Create guidelines and algorithms for mirror-aware mapping in hospitals, airports, and retail to prevent map corruption and navigation failures.

- Tools/products/workflows: SLAM plugins with reflective-surface classifiers; standardized datasets/benchmarks; facility design guides for robot-friendly mirrors/glass.

- Assumptions/dependencies: Vendor adoption; facility cooperation; special handling for transparent vs. specular surfaces.

- Legal and liability frameworks for physical-world adversarial attacks (Sector: Policy/Legal)

- Use case: Clarify responsibilities when passive artifacts cause AV incidents; define acceptable/regulated reflective installations along public roads.

- Tools/products/workflows: Statutes, insurance clauses, incident reporting that distinguishes adversarial mirror deployments from incidental reflectivity.

- Assumptions/dependencies: Case law development; coordination with insurers and municipalities; enforceability.

- Safety analytics and risk pricing for AV operations (Sector: Finance/Insurance)

- Use case: Factor mirror spoofing exposure into premiums and routing decisions; incentivize adoption of defenses via discounts.

- Tools/products/workflows: Risk models using map-layer reflectivity and fleet history; insurer APIs for dynamic pricing.

- Assumptions/dependencies: Data-sharing frameworks; standardized incident taxonomy; actuarial validation.

- Material and urban design innovations (Sector: Infrastructure/Materials)

- Use case: Deploy roadside materials/designs that reduce coherent specular reflection (micro-texturing, coatings, angular baffles) while preserving aesthetics and functionality.

- Tools/products/workflows: Material science R&D; city design handbooks; pilot corridors with anti-specular treatments.

- Assumptions/dependencies: Cost-effectiveness; durability; acceptance by architects and city planners.

Notes on Assumptions and Dependencies (cross-cutting)

- The attack and modeling were validated with an Ouster OS1-128 and Autoware; behavior can vary across LiDAR vendors, scan patterns, mounting heights, and environmental conditions.

- Attack feasibility depends on line-of-sight, mirror size/tilt, and proximity; indoor/outdoor lighting and weather (e.g., wet surfaces) can change specular characteristics.

- Many defenses assume multi-sensor suites (camera, radar, thermal) and adequate compute budgets; single-LiDAR configurations will need conservative planner guardrails and perception filters.

- Policy/infrastructure measures rely on awareness of AV operations and collaboration among municipalities, property owners, and contractors.

Glossary

- Active signal injection: Injecting fake laser pulses into a LiDAR to create deceptive measurements. "LiDAR spoofing attacks typically rely on active signal injection using laser emitters"

- Autoware: An open-source autonomous driving software stack used for end-to-end evaluation. "autonomous driving stack (Autoware)"

- Azimuth: The horizontal angular coordinate used in LiDAR scanning. "fixed angular resolution in azimuth and elevation"

- Black-Box System Access: An adversary model with no internal access to the target system’s software or sensors. "Black-Box System Access: The adversary has no internal access to the AV’s software"

- CARLA: An open-source simulation platform for autonomous driving research. "within the CARLA simulator"

- Diffuse reflection: Light scattering from rough surfaces, producing returns over many directions. "Diffuse reflection occurs when the surface is rough"

- Emitter–receiver arrays: The arrangement of LiDAR emitters and detectors, which can be rotating or solid-state. "rotating or solid-state emitter–receiver arrays"

- Geometric optics: A physics framework modeling light as rays to predict reflections and imaging. "using geometric optics"

- Iterative Closest Point (ICP): An algorithm to align point clouds by iteratively minimizing distances between points. "using ICP – Iterative Closest Point"

- Jamming: Overwhelming a sensor with noise to degrade or block measurements. "or on signal jamming, which requires high optical power to overwhelm the sensor with noise"

- Law of reflection: The physical rule that the angle of incidence equals the angle of reflection. "follows the classic law of reflection:"

- LiDAR range equation: A model relating received power to distance and target properties in LiDAR sensing. "the LiDAR range equation for a simple target."

- Light fingerprinting: Using characteristic patterns of light to detect or distinguish spoofed signals. "light fingerprinting"

- Multi-hop path: A propagation path involving multiple reflections before returning to the sensor. "The light follows a multi-hop path"

- Multi-path reflections: Multiple reflection paths that produce measurement artifacts or false points. "via multi-path reflections"

- Multi-sensor fusion: Combining data from multiple sensors to improve perception robustness. "multi-sensor fusion"

- Object Addition Attack (OAA): An attack that fabricates ghost obstacles in LiDAR data. "Object Addition Attacks (OAA), which create phantom obstacles"

- Object Removal Attack (ORA): An attack that hides real objects by redirecting LiDAR beams away from them. "Object Removal Attacks (ORA), which conceal real objects"

- Occupancy grids: Grid-based maps indicating free and occupied space derived from sensor data. "contaminating occupancy grids"

- Occupancy maps: Spatial representations used by AVs to mark drivable and occupied regions. "can corrupt occupancy maps"

- Ouster OS1-128: A specific high-resolution LiDAR model with 128 channels. "Ouster OS1-128 LiDAR sensor"

- Passive LiDAR spoofing attacks: Deceptive manipulations using reflective surfaces without emitting signals. "passive LiDAR spoofing attacks"

- Phantom echo: A false return generated by reflections, interpreted as a real obstacle. "forms a phantom echo"

- Phantom obstacles: Non-existent obstacles appearing in the sensor data due to deception. "phantom obstacles"

- Point cloud: A set of 3D points representing the environment captured by LiDAR. "The resulting data forms a 3D point cloud"

- Radius-based clustering: A method that groups points into clusters based on spatial proximity within a radius. "radius-based clustering"

- Received power: The optical power detected by the LiDAR after propagation and reflection. "the received power is given by:"

- Return intensity: The strength value associated with a LiDAR point’s reflected signal. "and the return intensity"

- ROS 2: A robotics middleware framework used for recording and processing sensor data. "Data was recorded using ROS 2"

- Specular reflection: Mirror-like reflection where light reflects coherently at a predictable angle. "Specular reflection occurs on smooth, mirror-like surfaces"

- Specular reflectance: The proportion of incident light reflected in a specular manner from a surface. "maintain high specular reflectance"

- Thermal imaging: Sensing using infrared radiation to detect heat signatures for defense. "including thermal imaging"

- Time-of-flight: Measuring distance by timing the round-trip travel of a laser pulse. "using the time-of-flight principle"
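
As a concrete companion to the radius-based clustering entry, here is a minimal sketch of the general technique: points are grouped when they can be linked by hops no longer than a chosen radius. It is a brute-force illustration, not the paper's pipeline; the function name `radius_clusters` and the sample points are assumptions.

```python
import math
from collections import deque


def radius_clusters(points, radius_m):
    """Group point indices so that linked neighbours are within radius_m.

    Brute-force O(n^2) search, fine for a small illustration only.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = min(unvisited)
        unvisited.remove(seed)
        cluster, queue = [seed], deque([seed])
        while queue:
            i = queue.popleft()
            # Collect neighbours first, then remove them from the unvisited set.
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= radius_m]
            for j in near:
                unvisited.remove(j)
                cluster.append(j)
                queue.append(j)
        clusters.append(sorted(cluster))
    return clusters


pts = [(0.0, 0.0), (0.3, 0.0), (0.6, 0.0), (5.0, 5.0)]
print(radius_clusters(pts, radius_m=0.5))
```

The first three points chain together even though the first and third are more than 0.5 m apart, because each is within the radius of its neighbour. Under this kind of grouping, denser ghost returns from a larger mirror would form bigger clusters.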
