CARLA Simulator: Urban Driving Platform

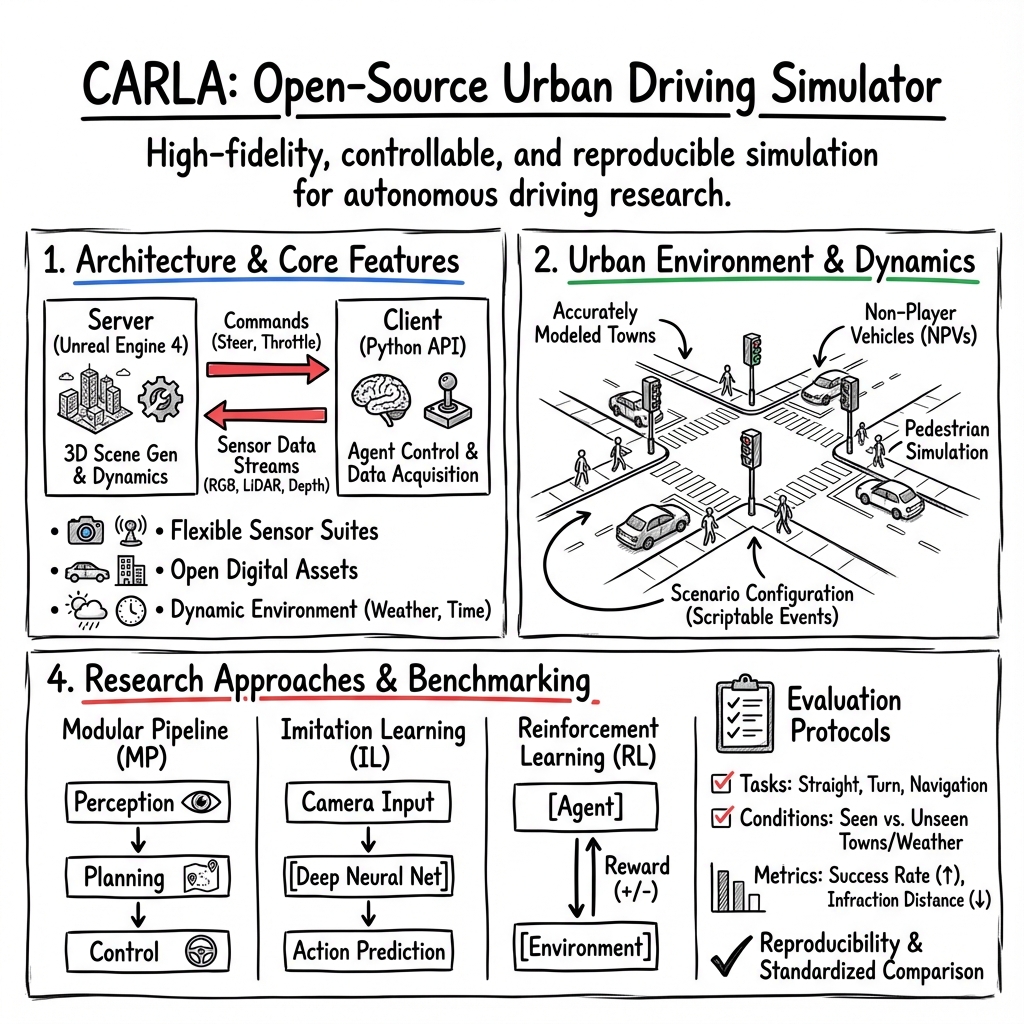

- CARLA Simulator is an open-source, high-fidelity urban driving simulation platform designed for autonomous vehicle research.

- It combines Unreal Engine 4 for photorealistic rendering with a Python API that gives real-time control over weather, traffic, and pedestrian dynamics.

- The platform supports reproducibility and extensibility through open digital assets and standardized benchmarking protocols for perception, planning, and control.

CARLA (Car Learning to Act) is an open-source urban driving simulator, designed to facilitate research on autonomous driving systems by providing high-fidelity, controllable, and reproducible simulation environments. Built atop Unreal Engine 4 with a modular client–server architecture, CARLA enables the training, validation, and benchmarking of diverse autonomous driving pipelines under a wide range of environmental, sensor, and traffic conditions (Dosovitskiy et al., 2017). The simulator offers open digital assets—including urban layouts, 3D vehicle and pedestrian models, and sensor blueprints—under permissive licenses. CARLA has established itself as a foundational platform for perception, control, planning, and end-to-end learning research, as well as for large-scale benchmarking, safety validation, and sim-to-real transfer studies.

1. Architecture and Core Features

CARLA’s architecture pairs a photorealistic rendering engine (Unreal Engine 4) with a Python client API, enabling real-time interaction with simulated environments. The server simulates the world (maps, weather, vehicle dynamics, and pedestrians) and renders sensor outputs, while the client handles agent control, scenario specification, and sensor data acquisition.
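The client–server split can be illustrated with a toy, in-process loop. Everything in this sketch (`Control`, `Measurement`, `ToyServer`, `run_client`, and the point-mass speed model) is hypothetical and stands in for the real networked server; only the actuation ranges (steering in [-1, 1], throttle and brake in [0, 1]) follow the ranges CARLA uses for vehicle control.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """Hypothetical low-level actuation command sent by the client each step."""
    steer: float = 0.0     # valid range [-1, 1]
    throttle: float = 0.0  # valid range [0, 1]
    brake: float = 0.0     # valid range [0, 1]

    def clamped(self) -> "Control":
        """Return a copy with every field clipped to its valid range."""
        return Control(
            steer=max(-1.0, min(1.0, self.steer)),
            throttle=max(0.0, min(1.0, self.throttle)),
            brake=max(0.0, min(1.0, self.brake)),
        )


@dataclass
class Measurement:
    """Hypothetical measurement returned by the server after each step."""
    frame: int
    speed_mps: float


class ToyServer:
    """Stand-in for the simulation server: owns the world state and advances it."""

    def __init__(self, dt: float = 0.1, max_accel: float = 3.0, max_decel: float = 8.0):
        self.dt = dt
        self.max_accel = max_accel
        self.max_decel = max_decel
        self.frame = 0
        self.speed = 0.0

    def step(self, control: Control) -> Measurement:
        """Apply one command, integrate a point-mass speed model, report the state."""
        c = control.clamped()
        accel = c.throttle * self.max_accel - c.brake * self.max_decel
        self.speed = max(0.0, self.speed + accel * self.dt)  # no reversing
        self.frame += 1
        return Measurement(frame=self.frame, speed_mps=self.speed)


def run_client(server: ToyServer, target_speed: float, steps: int) -> Measurement:
    """Client loop: read the latest measurement, choose a command, send it."""
    m = server.step(Control())  # initial no-op step to obtain a first measurement
    for _ in range(steps):
        cmd = Control(throttle=1.0) if m.speed_mps < target_speed else Control(brake=0.3)
        m = server.step(cmd)
    return m
```

A bang-bang client like this one only oscillates around the target speed; the modular baseline discussed in Section 4 uses PID control for smoother tracking.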

Key architectural features:

- Client–Server Communication: The Python client transmits low-level actuation commands (steering, throttle, brake) and meta-commands that modify the environment (weather, traffic density). In return it receives multimodal sensor streams (RGB images, pseudo-sensor outputs such as semantic segmentation and depth maps, LiDAR) together with measurements such as vehicle kinematics and collision feedback.

- Flexible Sensor Suites: Users programmatically configure the number, type, position, orientation, and noise models of sensors onboard each vehicle. Alongside “real” RGB cameras, “pseudo-sensors” that return ground-truth semantic segmentation and depth are supported, so agents can be trained or evaluated on idealized intermediate representations.

- Asset Library: The asset set comprises 16 fully rigged and animated vehicle models and 50 pedestrian models, along with custom-designed buildings, road features, and environment layouts. All are provided in open digital formats for community extension.

- Environmental Parameters: Weather (sun position, fog, rain, puddles), illumination, and time of day can be adjusted at run time, supporting studies of domain generalization, robustness, and sim-to-real transfer.
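Sensor resolution and field of view determine how 3D points map to image pixels. The sketch below builds a pinhole intrinsic matrix from image width, height, and horizontal field of view; these inputs mirror CARLA's camera blueprint attributes `image_size_x`, `image_size_y`, and `fov`, but `camera_intrinsics` and `project` are hypothetical helpers. The projection assumes the usual computer-vision camera frame (x right, y down, z forward); Unreal Engine's axes differ (x forward, y right, z up), so points taken from the simulator must be re-ordered first.

```python
import math


def camera_intrinsics(width: int, height: int, fov_deg: float) -> list:
    """Pinhole intrinsic matrix K for a camera with horizontal FOV `fov_deg`."""
    # Focal length in pixels, derived from the horizontal field of view.
    f = width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    cx, cy = width / 2.0, height / 2.0  # principal point at the image centre
    return [
        [f, 0.0, cx],
        [0.0, f, cy],
        [0.0, 0.0, 1.0],
    ]


def project(K: list, point_cam: tuple) -> tuple:
    """Project a 3D point in camera coordinates (x right, y down, z forward) to pixels."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    u = K[0][0] * x / z + K[0][2]
    v = K[1][1] * y / z + K[1][2]
    return u, v
```

For an 800 x 600 image with a 90° horizontal field of view, the focal length works out to about 400 px, and a point straight ahead of the camera lands on the principal point (400, 300).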

2. Urban Environment Simulation and Dynamic Elements

CARLA provides accurately modeled urban “towns” featuring road networks (sidewalks, lanes, intersections), traffic signals, and realistic urban infrastructure. The first release included Town 1 (training scene) and Town 2 (test scene), so that generalization to an unseen layout could be measured. Later releases and community contributors have added further towns and scenario tooling.

Dynamics in the environment are governed as follows:

- Non-Player Vehicles (NPVs): NPVs follow deterministic or stochastic rules for lane following, traffic-light obedience, and intersection negotiation, providing background traffic against which both classical and learning-based decision algorithms can be evaluated.

- Pedestrian Simulation: Pedestrians execute navigation policies that favor, but do not strictly require, sidewalk use. Each agent can be directed individually; navigation maps encode where walking is permitted, and random road crossings add scenario diversity.

- Scenario Configuration: Through the API, researchers script sensor pipelines, vehicle and pedestrian spawning, waypoint sequencing, and scenario resets for controlled experimentation.
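A preference for sidewalks without a hard constraint can be modeled as shortest-path search over a cost map. The following toy analogue (not CARLA's pedestrian controller) treats the navigation map as a grid whose hypothetical cell costs make sidewalks (`s`) and marked crossings (`c`) cheap, open road (`r`) allowed but expensive, and buildings (`#`) impassable. Dijkstra's algorithm then routes a pedestrian along sidewalks and crossings when they exist, and straight across the road when they do not.

```python
import heapq

# Hypothetical traversal costs per cell type; cells not listed are impassable.
CELL_COST = {"s": 1.0, "c": 1.0, "r": 5.0}


def plan_walk(grid, start, goal):
    """Dijkstra over a 4-connected grid of strings; returns (cost, path) or (inf, [])."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break  # first pop of the goal carries its final cost
        if d > dist.get(cell, float("inf")):
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = CELL_COST.get(grid[nr][nc])
            if step is None:
                continue  # building or other impassable cell
            nd = d + step
            if nd < dist.get((nr, nc), float("inf")):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = cell
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return float("inf"), []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]
```

With a crossing two cells away, the detour (cost 7) beats walking straight over two road cells (cost 11); remove the crossing and the same query returns the direct road crossing instead.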

3. Open-Source Ecosystem and Reproducibility

A distinguishing aspect of CARLA is its commitment to open science: all simulator code, digital assets (maps, models), experiment protocols, and supporting documentation are openly released. This ensures:

- Reproducibility: Published results can be replicated under equivalent conditions.

- Extensibility: Community members may contribute new assets, scenario libraries, and sensor models; third-party extensions (e.g., new agent types, traffic configurations) are straightforward to integrate.

- Benchmarking Standardization: A shared baseline facilitates apples-to-apples comparison across controllers, planners, and learning algorithms, enabling concerted progress within the field.
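In practice, replicating an experiment also means pinning every random choice in the scenario definition. A minimal sketch, assuming a hypothetical `ScenarioSpec` record and `sample_scenarios` helper, draws episode definitions from a dedicated `random.Random(seed)`, so the same seed always yields the same list regardless of other code that uses the global `random` module. Stochastic components inside the simulator itself (such as traffic behavior) would need to be seeded separately.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioSpec:
    """Hypothetical description of one benchmark episode."""
    town: str
    weather: str
    start_index: int
    goal_index: int
    num_vehicles: int
    num_pedestrians: int


def sample_scenarios(seed, n, towns, weathers, num_spawn_points):
    """Draw n episode specs from a private RNG: same seed, same list."""
    rng = random.Random(seed)  # local generator, unaffected by the global `random` state
    specs = []
    for _ in range(n):
        start, goal = rng.sample(range(num_spawn_points), 2)  # distinct start and goal
        specs.append(ScenarioSpec(
            town=rng.choice(towns),
            weather=rng.choice(weathers),
            start_index=start,
            goal_index=goal,
            num_vehicles=rng.randint(0, 50),
            num_pedestrians=rng.randint(0, 100),
        ))
    return specs
```

Publishing the seed alongside the asset versions is then enough for another group to regenerate the identical episode list.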

4. Research Applications and Autonomy Pipelines

CARLA was originally benchmarked by evaluating three canonical approaches to autonomous driving:

- Modular Pipeline (MP): A multi-stage stack combining semantic segmentation (RefineNet architecture) for perception, a rule-based state-machine planner, and classical PID low-level controllers. Perception produces pixel-wise semantic maps (road/non-road, obstacles); the planner uses them to select high-level actions (follow road, turn, emergency stop); and a PID controller computes continuous actuation that tracks the resulting waypoints.

- Imitation Learning (IL): Conditional imitation learning trains a deep neural network to predict controls (steer, throttle, brake) from raw camera input and a high-level route command (e.g., “go straight”, “turn left”). Robustness is improved through data augmentation, dropout regularization, and noise injection during data collection: a triangular impulse perturbs the expert’s steering so that the recorded data include recoveries from off-nominal states.

- Reinforcement Learning (RL): The asynchronous advantage actor-critic (A3C) algorithm is trained end-to-end with a shaped reward: positive for speed and progress toward the goal, negative for collisions, lane and sidewalk infractions, and off-path events. Despite receiving more than an order of magnitude more training experience (about 12 days of simulated driving, versus about 14 hours of driving data for IL), RL trails the other two approaches, particularly in complex urban scenarios.
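Each baseline is a full system, but one characteristic ingredient of each can be sketched in a few lines: a discrete PID loop of the kind the modular pipeline uses for low-level control, a triangular steering impulse like the perturbation applied to expert driving for IL, and a shaped per-step reward with the RL terms listed above. Gains, impulse parameters, dictionary keys, and reward weights are illustrative assumptions, not the values from the original experiments; writing the reward as per-step differences of each quantity is also an assumption of this sketch.

```python
class PID:
    """Discrete PID controller (modular-pipeline style low-level control)."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first step

    def step(self, error: float) -> float:
        """Return the control output for the current tracking error."""
        self.integral += error * self.dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def triangular_impulse(t: float, start: float, duration: float, peak: float) -> float:
    """Steering offset ramping linearly from 0 to `peak` and back to 0 over `duration` s."""
    if t < start or t > start + duration:
        return 0.0
    half = duration / 2.0
    elapsed = t - start
    fraction = elapsed / half if elapsed <= half else (duration - elapsed) / half
    return peak * fraction


def shaped_reward(prev: dict, curr: dict, w_dist=1.0, w_speed=0.05,
                  w_coll=1e-5, w_side=2.0, w_opp=2.0) -> float:
    """Reward from two consecutive measurement dicts (hypothetical keys and weights).

    Rewards progress toward the goal and speed gain; penalizes increases in
    collision damage and in sidewalk / opposite-lane overlap.
    """
    return (
        w_dist * (prev["dist_to_goal"] - curr["dist_to_goal"])
        + w_speed * (curr["speed"] - prev["speed"])
        - w_coll * (curr["collision_damage"] - prev["collision_damage"])
        - w_side * (curr["sidewalk_overlap"] - prev["sidewalk_overlap"])
        - w_opp * (curr["opposite_lane_overlap"] - prev["opposite_lane_overlap"])
    )
```

During IL data collection, the impulse value would be added to the expert's steering command for a short window, while the PID loop in the modular stack would be fed the speed or heading error at every step.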

Typical performance evaluation metrics:

- Success rate: an episode counts as successful if the agent reaches the destination within a time budget, set to the time needed to traverse the optimal path at 10 km/h.

- Average distance traveled between infractions of each type (driving in the opposite lane, sidewalk incursions, collisions).

- Failure mode analysis under controlled increases in scenario complexity (from empty streets to traffic-rich environments).
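The success criterion can be made concrete as follows, assuming the time budget is the optimal-path length divided by the 10 km/h reference speed. The function names and the `(reached_goal, elapsed_s, path_length_m)` episode tuple are hypothetical.

```python
def time_budget_s(path_length_m: float, reference_speed_kmh: float = 10.0) -> float:
    """Seconds needed to cover the optimal path at the reference speed."""
    return path_length_m / (reference_speed_kmh / 3.6)  # km/h -> m/s


def episode_success(reached_goal: bool, elapsed_s: float, path_length_m: float) -> bool:
    """An episode succeeds only if the goal is reached within the time budget."""
    return reached_goal and elapsed_s <= time_budget_s(path_length_m)


def success_rate(episodes) -> float:
    """Fraction of (reached_goal, elapsed_s, path_length_m) tuples that succeed."""
    if not episodes:
        return 0.0
    return sum(episode_success(*ep) for ep in episodes) / len(episodes)
```

A 500 m route therefore has a budget of 180 s (500 m at about 2.78 m/s); an agent that arrives after 200 s fails the episode even though it reached the goal.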

5. Evaluation Scenarios and Benchmarking Protocols

CARLA defines a suite of evaluation scenarios, each constructed to probe particular agent competencies:

- Task Taxonomy: Four canonical tasks of increasing difficulty are assessed: straight driving, one turn, navigation, and navigation with dynamic obstacles.

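- Training/Test Split: Each task is evaluated under four conditions that separate seen from unseen towns and weather: the training town (Town 1) with training weather, a new town (Town 2), new weather, and a new town combined with new weather. Training weather includes clear daytime, clear sunset, daytime rain, and daytime after rain; the held-out weather is cloudy daytime and soft rain at sunset. Comparing these conditions measures generalization.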

- Infractions and Analysis: Each episode logs whether the route was completed, the time to completion, and detailed infractions. Distance-based metrics (kilometers traveled per infraction of each type) support fine-grained diagnosis of failure modes and controller limitations.
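Normalizing infraction counts by distance driven keeps a controller that covers more ground from being penalized simply for driving further. A minimal sketch follows; the infraction labels in `INFRACTION_TYPES` are hypothetical, and splitting collisions by what was hit is an assumption of this example.

```python
from collections import Counter

# Hypothetical infraction labels; collisions are split by what was hit.
INFRACTION_TYPES = (
    "opposite_lane",
    "sidewalk",
    "collision_static",
    "collision_vehicle",
    "collision_pedestrian",
)


def km_per_infraction(distance_km: float, events, types=INFRACTION_TYPES) -> dict:
    """Average kilometres driven between infractions of each type.

    `events` holds one label per logged infraction. A type that never occurs
    maps to infinity: no such infraction over the distance driven.
    """
    counts = Counter(events)
    return {t: distance_km / counts[t] if counts[t] else float("inf") for t in types}
```

Higher is better: a value of 5.0 for `sidewalk` after 10 km of driving means one sidewalk incursion every 5 km on average.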

A tabular overview of controlled experiments might appear as follows:

| Experimental Factor | Environment Variation | Evaluated Metric |
|---|---|---|
| Straight/Turn/Navigation | Training/Testing town | Success rate, average time |
| With/without traffic/weather | Seen/unseen conditions | Distance between infractions |
| Modular, IL, RL controllers | Same protocol, different seed | Breakdown by failure type |
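The rows of such a table come from crossing controllers, tasks, and environment conditions. A short sketch, with hypothetical task and condition labels, enumerates that grid so every controller is evaluated on exactly the same cells.

```python
from itertools import product

# Hypothetical labels for the four tasks and four town/weather conditions.
TASKS = ("straight", "one_turn", "navigation", "navigation_dynamic")
CONDITIONS = (
    ("Town 1", "training weather"),
    ("Town 1", "new weather"),
    ("Town 2", "training weather"),
    ("Town 2", "new weather"),
)


def evaluation_grid(controllers):
    """Return every (controller, task, town, weather) cell of a controlled comparison."""
    return [
        (controller, task, town, weather)
        for controller, task, (town, weather) in product(controllers, TASKS, CONDITIONS)
    ]
```

For the three baselines this yields 3 x 4 x 4 = 48 cells, each of which would be run over the same set of episodes.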

6. Technical Documentation and Resources

All supporting materials are made available alongside the simulator:

- Client–Server Protocols: Formal definitions of command, meta-command, and sensor data APIs.

- Sensor Models and Configurations: Parameters for field-of-view, resolution, pose, and pseudo-sensor toggling.

- Environment and Vehicle Models: Specifications for map layouts, actor blueprints, and vehicle/pedestrian kinematic properties.

- Network/Training Details: Architectural diagrams, loss and augmentation formulations, and optimizer and model details for the learning-based baselines.

- Supplementary Material: Instructional videos demonstrating environmental diversity, sensor streams (RGB, segmentation, depth), and qualitative driving episodes under varying conditions.

7. Impact and Role within the Autonomous Driving Research Community

CARLA has become one of the most widely used simulation platforms for urban autonomous driving research, supporting advances across:

- Perception: Object detection, semantic segmentation, depth estimation, sensor fusion, and robustness to adverse or adversarial environmental conditions.

- Planning and Control: Learning-based and rule-based planners, safety verification, multi-agent behavior modeling, long-tail scenario awareness.

- Sim-to-Real Transfer: Domain adaptation, reality gap quantification, and robustness studies using weather, appearance, and geographic variation.

- Benchmarking: Community adoption of CARLA’s tasks and metrics has made results comparable across studies and easier to reproduce.

By combining high-fidelity rendering, extensibility, and fine-grained control, CARLA has substantially accelerated experimental cycles in autonomous vehicle research, supporting both baseline evaluations and the design of complex, safety-critical autonomous systems intended for real-world deployment.