- The paper presents VMS, a unified framework combining a hybrid tracking objective, an Orthogonal Mixture-of-Experts (OMoE) policy, and segment-level rewards to achieve robust humanoid control with substantially reduced global drift (57.92 mm test-set root position error).

- It leverages a large-scale retargeted motion dataset, curated by using an oracle policy to filter out infeasible motions, and employs teacher-student distillation to support reliable sim-to-real transfer.

- Comprehensive experiments show higher tracking accuracy than the baselines and support diverse behaviors, from dynamic kicks to tracking motions generated from text descriptions.

Versatile Motion Skill Learning for Humanoid Whole-Body Control: An Analysis of KungfuBot2

Introduction

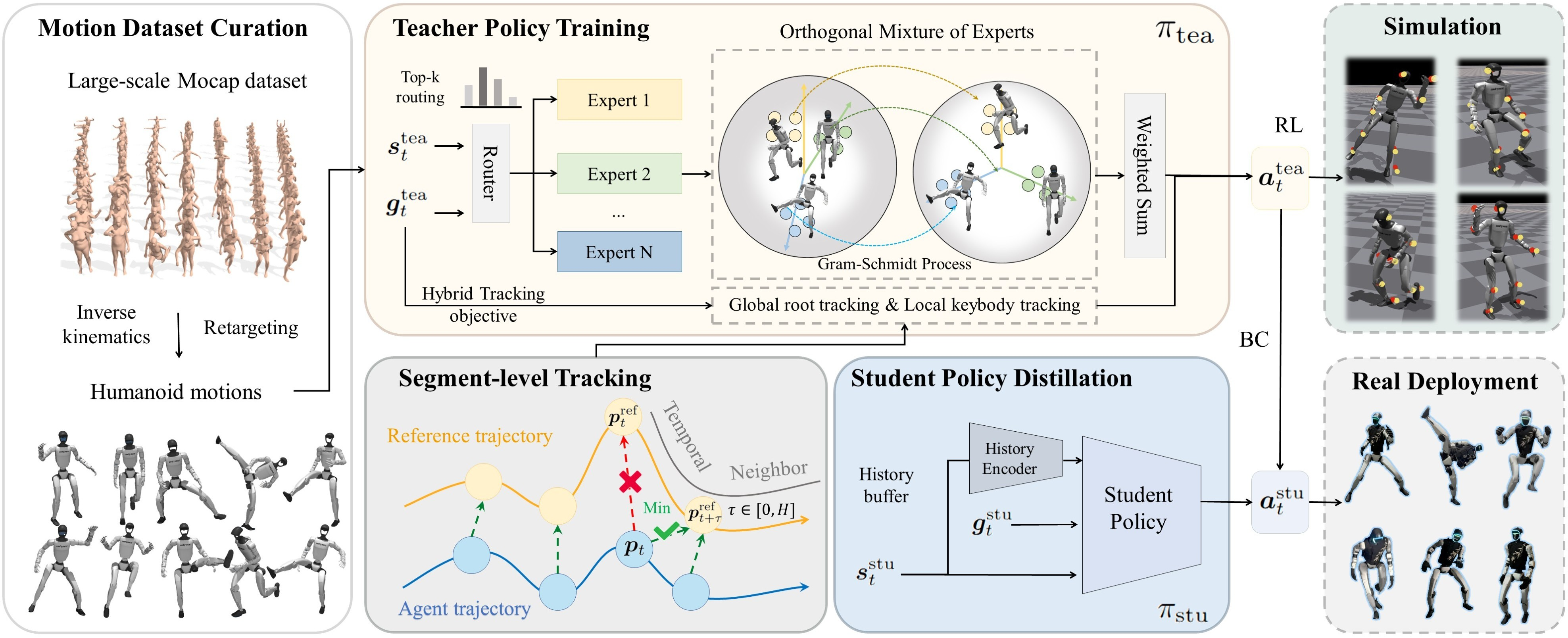

The paper "KungfuBot2: Learning Versatile Motion Skills for Humanoid Whole-Body Control" (2509.16638) introduces VMS, a unified framework for learning and deploying a single, highly expressive policy capable of tracking a broad spectrum of human motions on a physical humanoid robot. The work addresses two central challenges in humanoid control: (1) achieving sufficient policy expressiveness to capture diverse, dynamic skills, and (2) reconciling local motion fidelity with global trajectory stability over long-horizon sequences. The proposed system integrates a hybrid tracking objective, an Orthogonal Mixture-of-Experts (OMoE) policy architecture, and a segment-level tracking reward, validated through extensive simulation and real-world experiments.

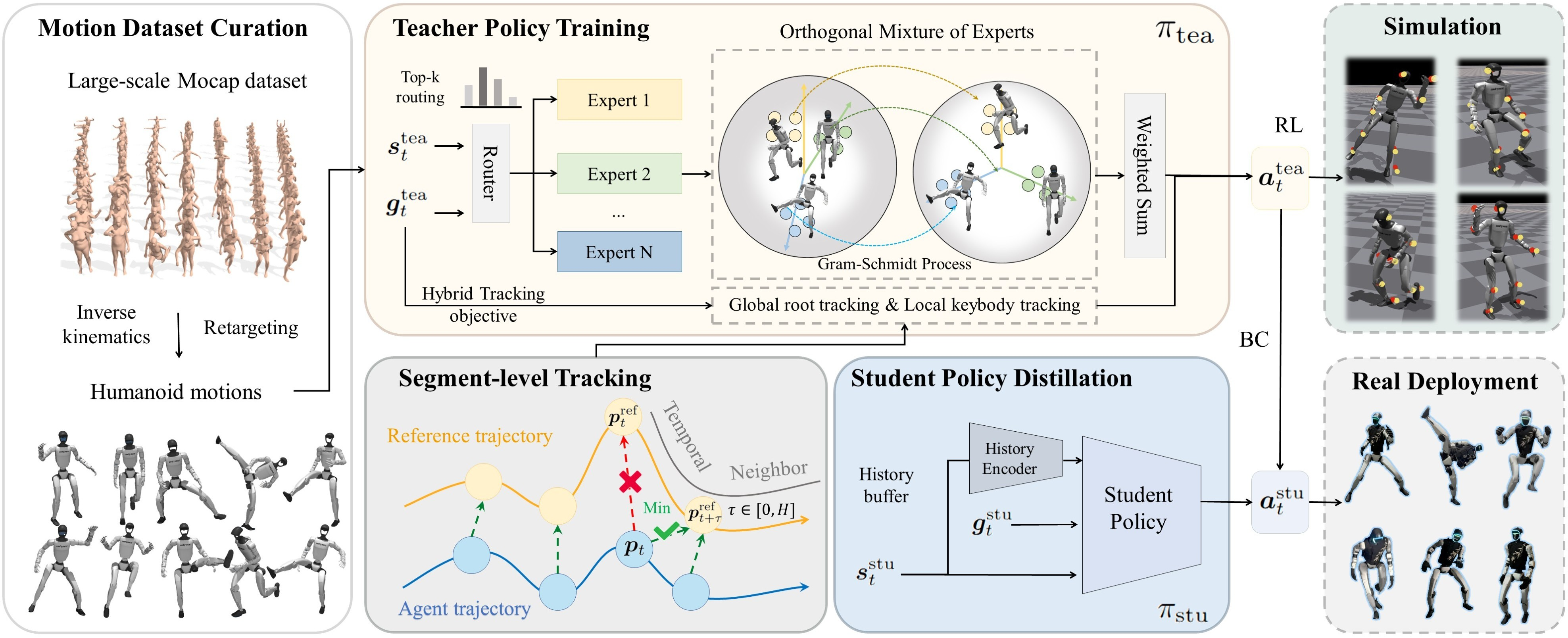

Figure 1: Framework of VMS. The large-scale motion capture dataset is first retargeted to the humanoid skeleton using an IK-based method. A teacher policy $\pi_{\text{tea}}$ is trained in simulation with a hybrid tracking objective and segment-level reward, then distilled to a student policy for real-world deployment.

Methodology

Motion Dataset Curation and Retargeting

VMS leverages a large-scale, curated motion dataset derived from AMASS, retargeted to the Unitree G1 humanoid via a differentiable IK-based method. The curation pipeline filters out infeasible or corrupted sequences by training an oracle policy and evaluating motion feasibility, resulting in 9,770 high-quality, humanoid-compatible motion sequences.
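The summary describes the retargeting step only as a differentiable IK-based method. As a rough illustration of what optimization-based IK means, the sketch below fits the joint angles of a hypothetical two-link planar limb so that its end effector reaches a target keypoint. It runs gradient descent on the squared position error and clamps the angles to joint limits after each step. The link lengths, step size, and limits are assumptions chosen for this toy. The hand-written analytic Jacobian stands in for the automatic differentiation that a full-body retargeter would more likely use.

```python
import math
from typing import Tuple

# Hypothetical link lengths (metres) for a toy two-link planar limb; these are
# illustrative values, not Unitree G1 dimensions.
LINK1, LINK2 = 0.30, 0.25


def forward_kinematics(q1: float, q2: float) -> Tuple[float, float]:
    """End-effector (x, y) position for joint angles q1, q2 in radians."""
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def ik_gradient_descent(
    target: Tuple[float, float],
    q_init: Tuple[float, float] = (0.3, 0.3),
    lr: float = 2.0,
    steps: int = 2000,
    q_min: float = -2.5,
    q_max: float = 2.5,
) -> Tuple[Tuple[float, float], float]:
    """Minimize 0.5 * ||FK(q) - target||^2 by projected gradient descent.

    Returns the final joint angles and the remaining position error.
    """
    q1, q2 = q_init
    tx, ty = target
    for _ in range(steps):
        x, y = forward_kinematics(q1, q2)
        ex, ey = x - tx, y - ty
        # Analytic Jacobian d(x, y)/d(q1, q2) of the two-link chain.
        j11 = -LINK1 * math.sin(q1) - LINK2 * math.sin(q1 + q2)
        j12 = -LINK2 * math.sin(q1 + q2)
        j21 = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
        j22 = LINK2 * math.cos(q1 + q2)
        # Gradient of the loss is J^T e; take a step, then clamp to joint limits.
        g1 = j11 * ex + j21 * ey
        g2 = j12 * ex + j22 * ey
        q1 = min(q_max, max(q_min, q1 - lr * g1))
        q2 = min(q_max, max(q_min, q2 - lr * g2))
    x, y = forward_kinematics(q1, q2)
    return (q1, q2), math.hypot(x - tx, y - ty)
```

For example, `ik_gradient_descent((0.35, 0.20))` returns joint angles that place the toy end effector on the target to within numerical precision. A real retargeter would optimize many keypoints and joints at once, and would typically add regularizers for smoothness and contact.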

Hybrid Tracking Objective

The policy is trained to minimize both global root trajectory errors and local keybody pose discrepancies. Global tracking ensures spatial alignment, while local tracking preserves motion style. The hybrid objective is critical for balancing short-term expressiveness with long-term stability, especially in the presence of compounding errors or partial observability.
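One plausible way to combine the two terms into a single per-step reward is sketched below. It rests on several assumptions. The reward uses exponential kernels (a common choice in motion-tracking RL, but not confirmed by this summary) with equal weights. Keybody positions are made "local" by subtracting the root position, and root orientation is ignored for brevity. The function `hybrid_tracking_reward` and all of its parameters are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def _dist(a: Vec, b: Vec) -> float:
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hybrid_tracking_reward(
    root: Vec, ref_root: Vec,
    keybodies: List[Vec], ref_keybodies: List[Vec],
    w_global: float = 0.5, w_local: float = 0.5,
    sigma_global: float = 0.3, sigma_local: float = 0.1,
) -> float:
    """Hypothetical hybrid reward: global root term plus root-relative keybody term."""
    # Global term: world-frame root position error, which penalizes drift.
    e_global = _dist(root, ref_root)
    # Local term: keybody positions expressed relative to each root, so a rigid
    # offset of the whole body is not counted as a pose (style) error.
    rel = [[k - r for k, r in zip(kb, root)] for kb in keybodies]
    ref_rel = [[k - r for k, r in zip(kb, ref_root)] for kb in ref_keybodies]
    e_local = sum(_dist(a, b) for a, b in zip(rel, ref_rel)) / len(rel)
    return (w_global * math.exp(-e_global / sigma_global)
            + w_local * math.exp(-e_local / sigma_local))
```

Because the local term is computed relative to the root, translating the whole body penalizes only the global term, while a wrong limb pose at the correct location penalizes only the local term. This is the division of labor that the hybrid objective aims for.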

Orthogonal Mixture-of-Experts (OMoE) Architecture

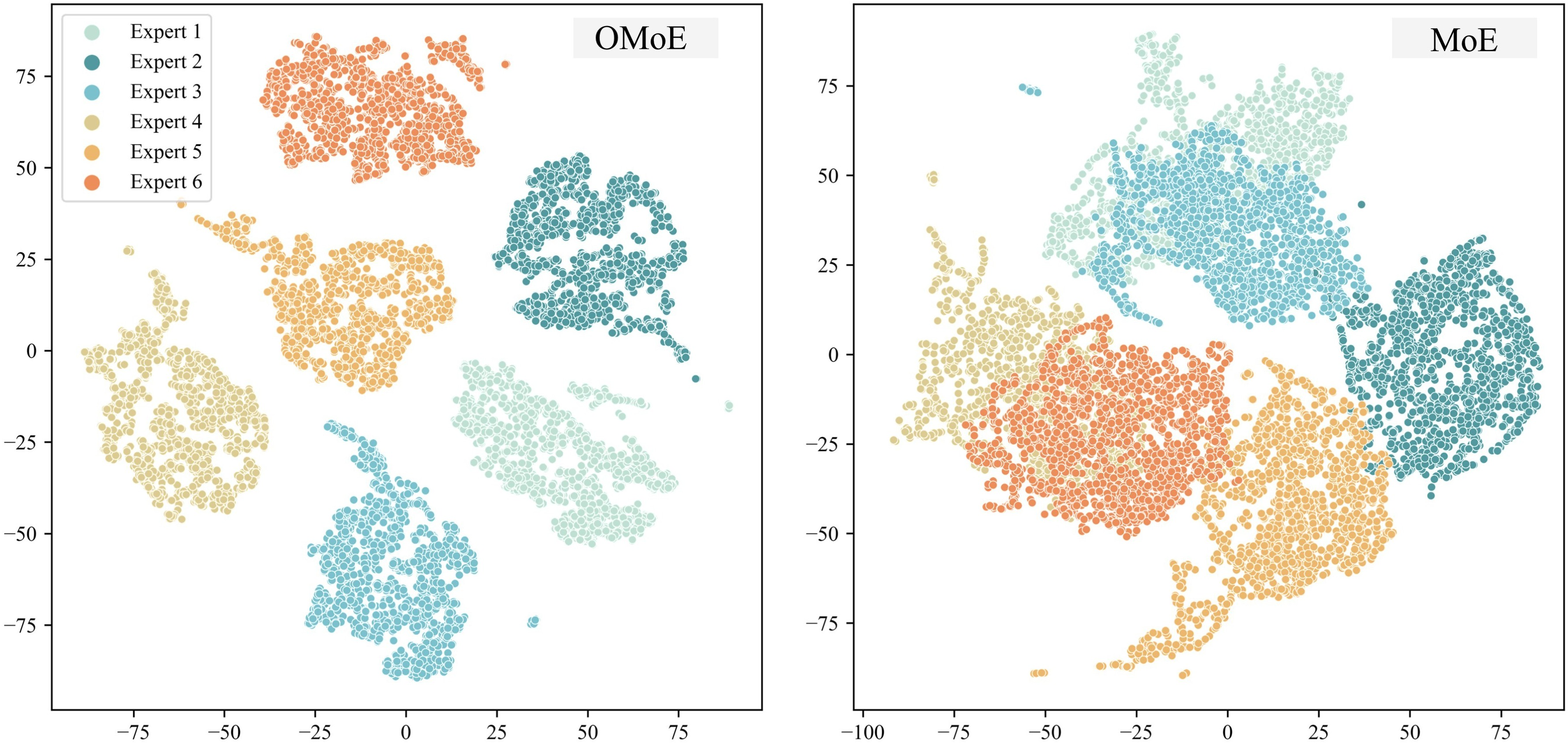

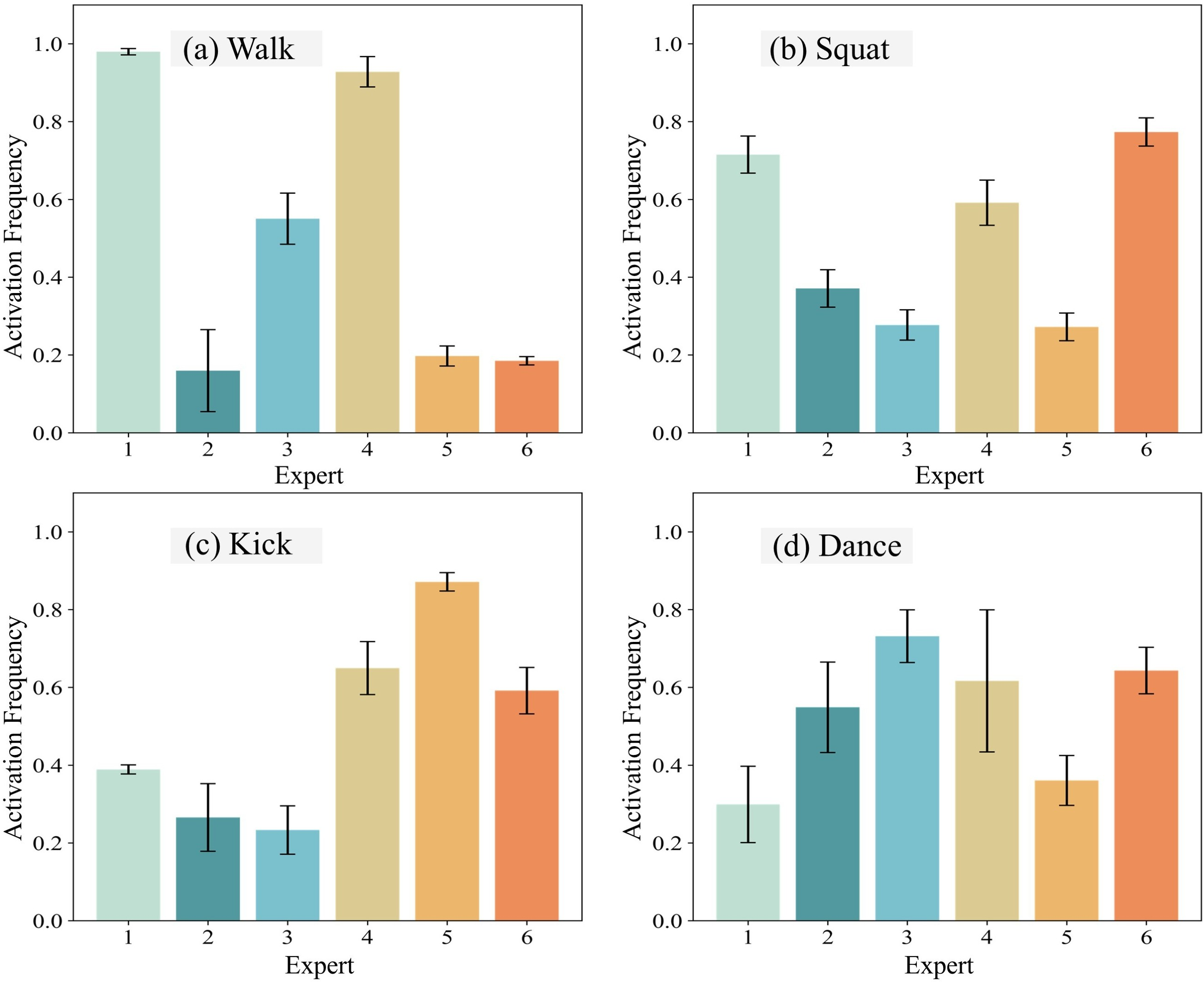

To address the limitations of monolithic MLPs and standard MoEs in multi-skill learning, the OMoE architecture enforces orthogonality among expert outputs via a differentiable Gram–Schmidt process. This design encourages each expert to specialize in a distinct skill subspace, reducing representational overlap and improving both expressiveness and generalization.
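The following is a minimal sketch of the orthogonalization step. It assumes that expert outputs are plain feature vectors and that a softmax router mixes the orthonormalized outputs. The helper names (`gram_schmidt`, `omoe_combine`) are hypothetical, and the paper's network may place the Gram–Schmidt step and the router differently. In a deep-learning framework, the same arithmetic would be written with tensor operations so that gradients flow through it; here it uses plain lists.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def gram_schmidt(vectors: List[Vector], eps: float = 1e-8) -> List[Vector]:
    """Orthonormalize expert outputs in order (modified Gram-Schmidt).

    Each vector has its projections onto the already-processed vectors removed
    and is then rescaled to (approximately) unit length; eps avoids division by zero.
    """
    basis: List[Vector] = []
    for v in vectors:
        w = list(v)
        for u in basis:
            proj = dot(w, u)
            w = [wi - proj * ui for wi, ui in zip(w, u)]
        norm = math.sqrt(dot(w, w))
        basis.append([wi / (norm + eps) for wi in w])
    return basis


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def omoe_combine(expert_outputs: List[Vector], gate_logits: List[float]) -> Vector:
    """Mix orthonormalized expert outputs with softmax router weights."""
    basis = gram_schmidt(expert_outputs)
    weights = softmax(gate_logits)
    dim = len(expert_outputs[0])
    return [sum(w * b[i] for w, b in zip(weights, basis)) for i in range(dim)]
```

The outputs can only be mutually orthogonal when the number of experts does not exceed the feature dimension. With more experts than dimensions, the residuals of later experts collapse toward zero.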

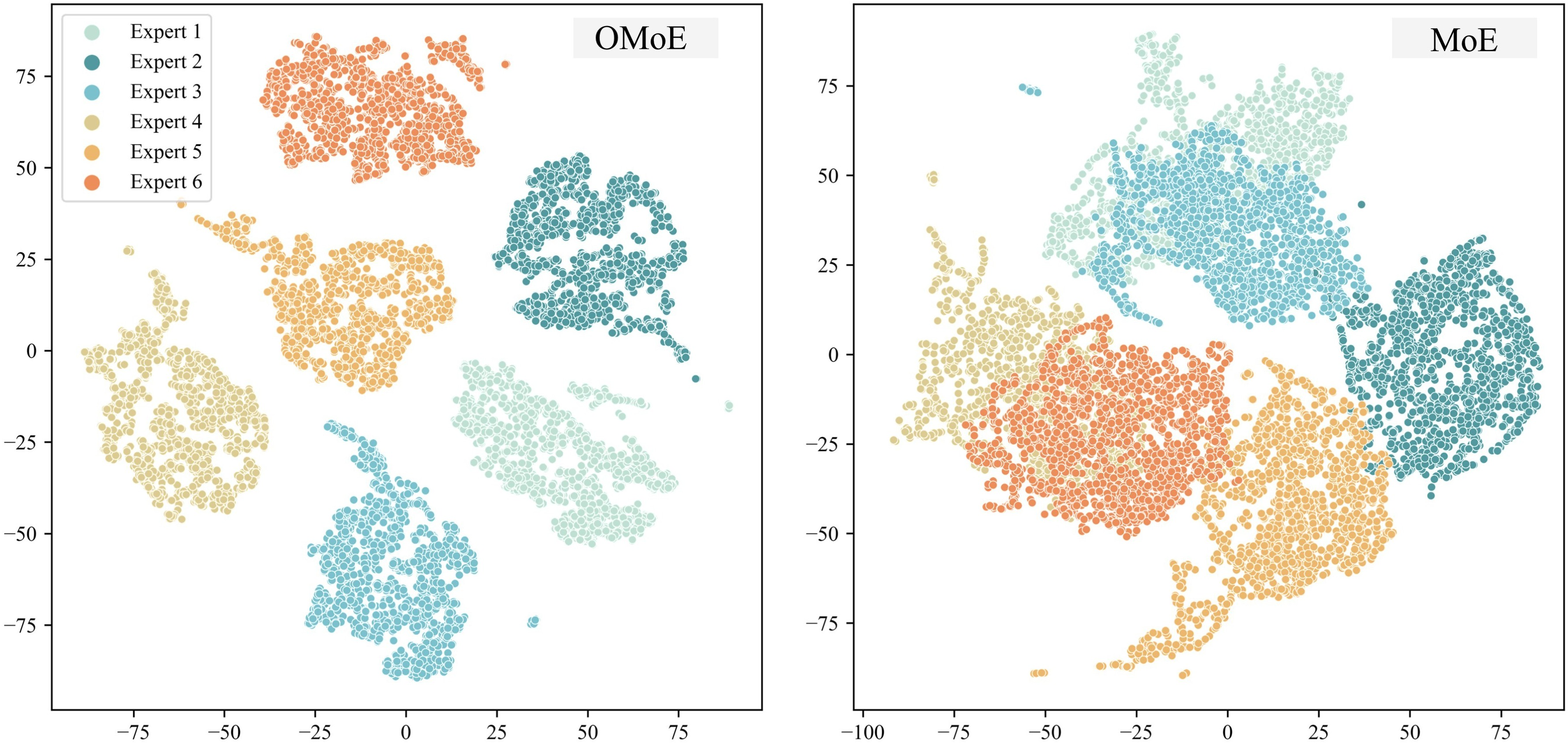

Figure 2: t-SNE visualization of experts' output features. Standard MoE exhibits substantial overlap across experts, while OMoE produces more diverse and specialized skill subspaces.

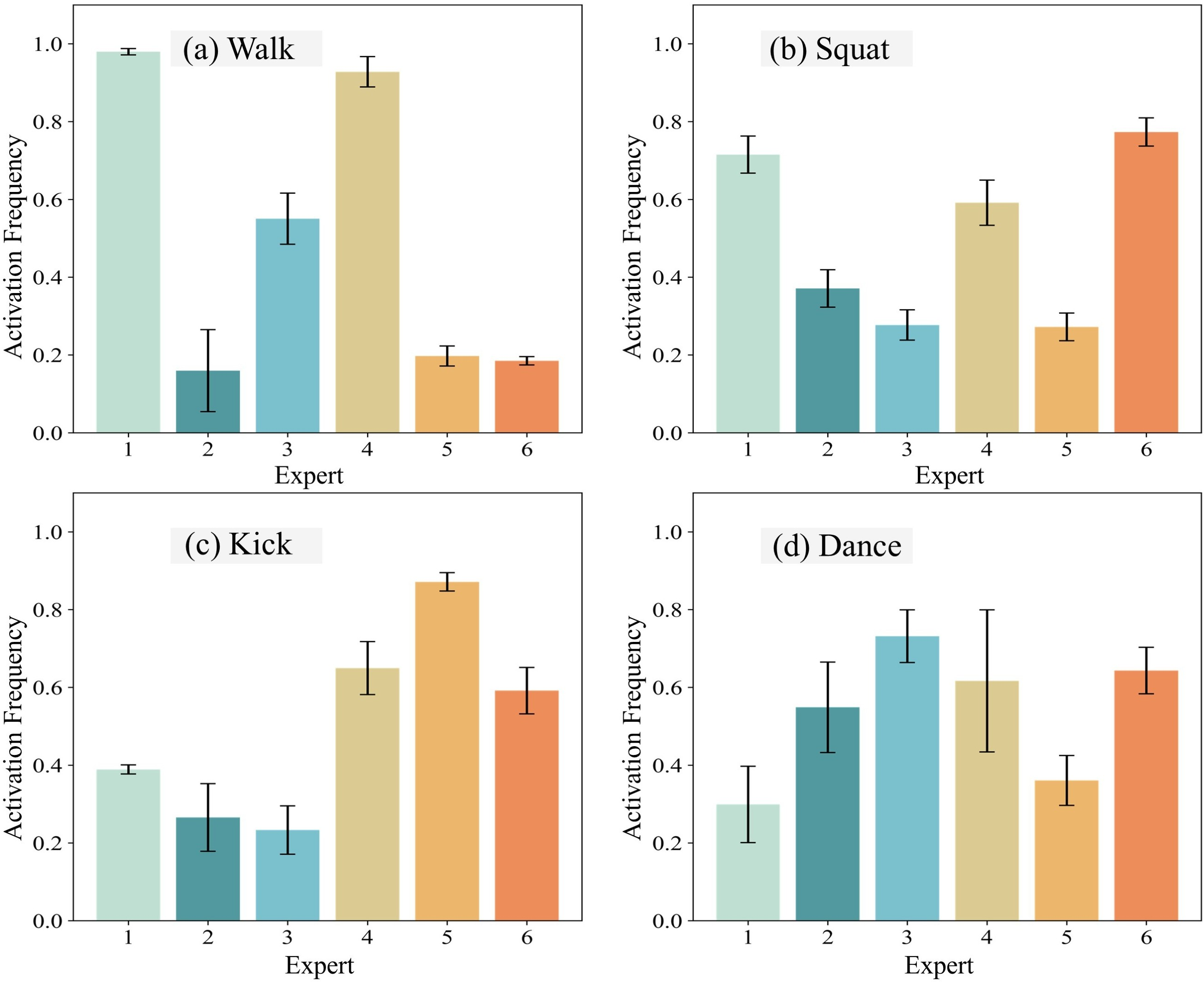

Figure 3: Expert activation frequencies across representative motion categories. The OMoE architecture effectively decomposes skills and adapts expert usage to motion diversity.

Segment-Level Tracking Reward

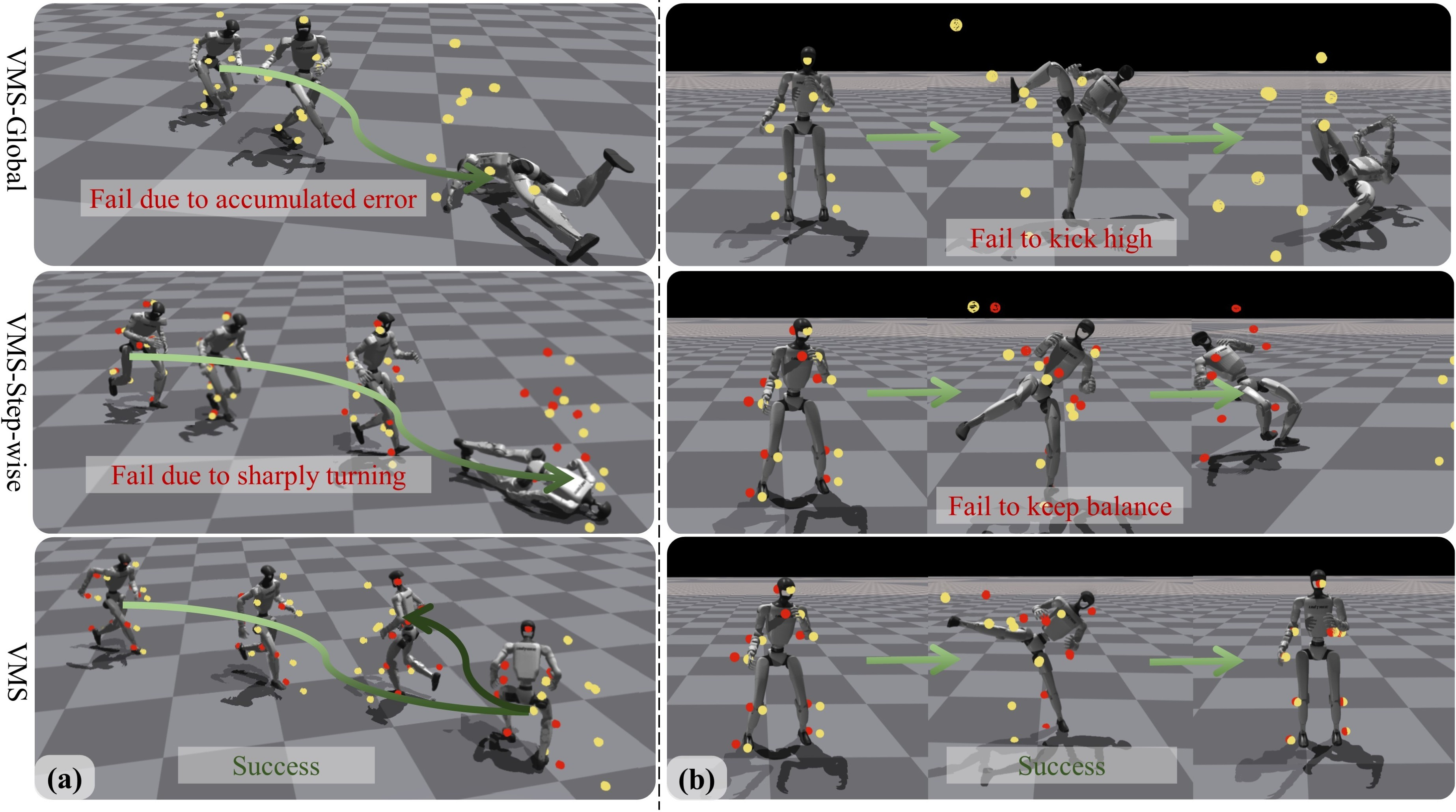

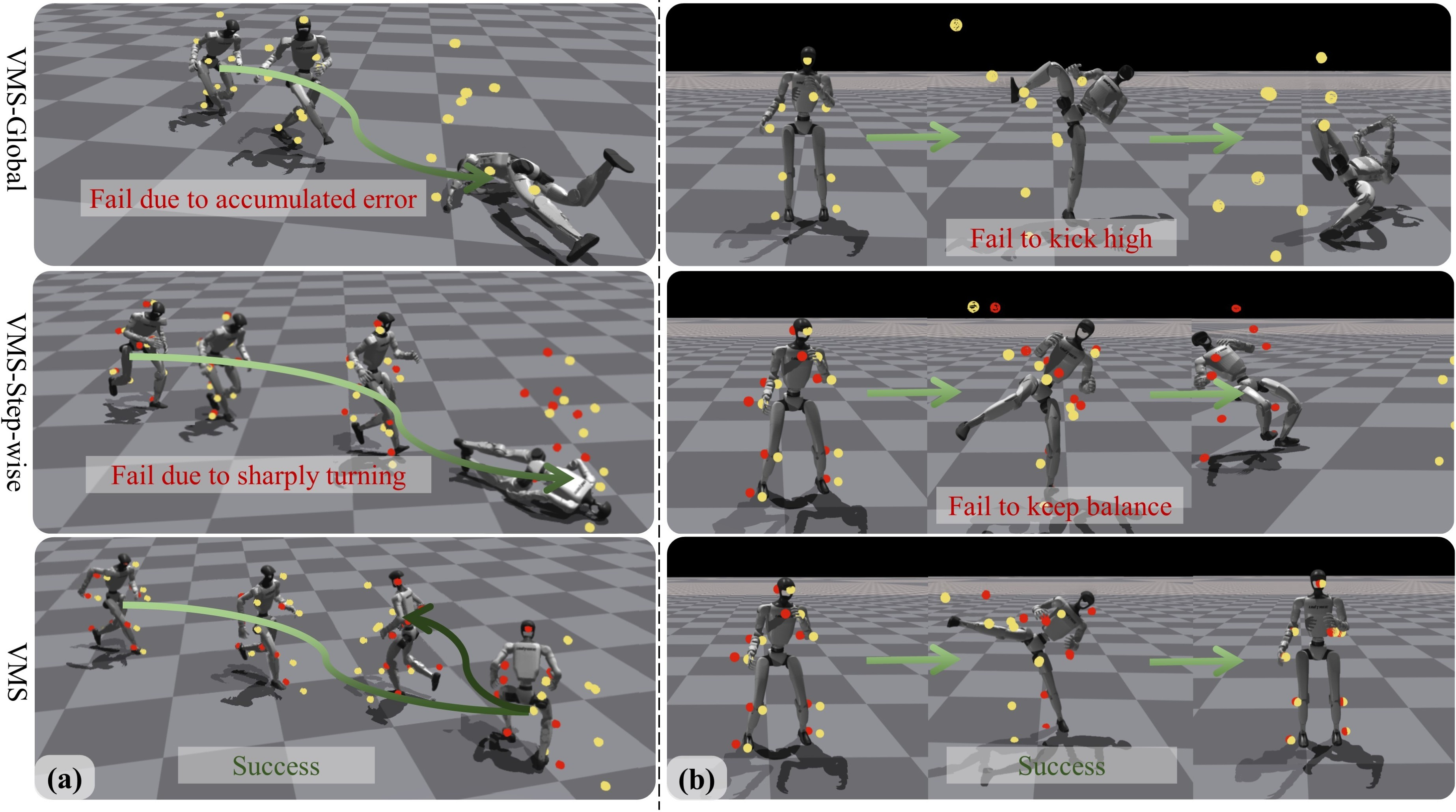

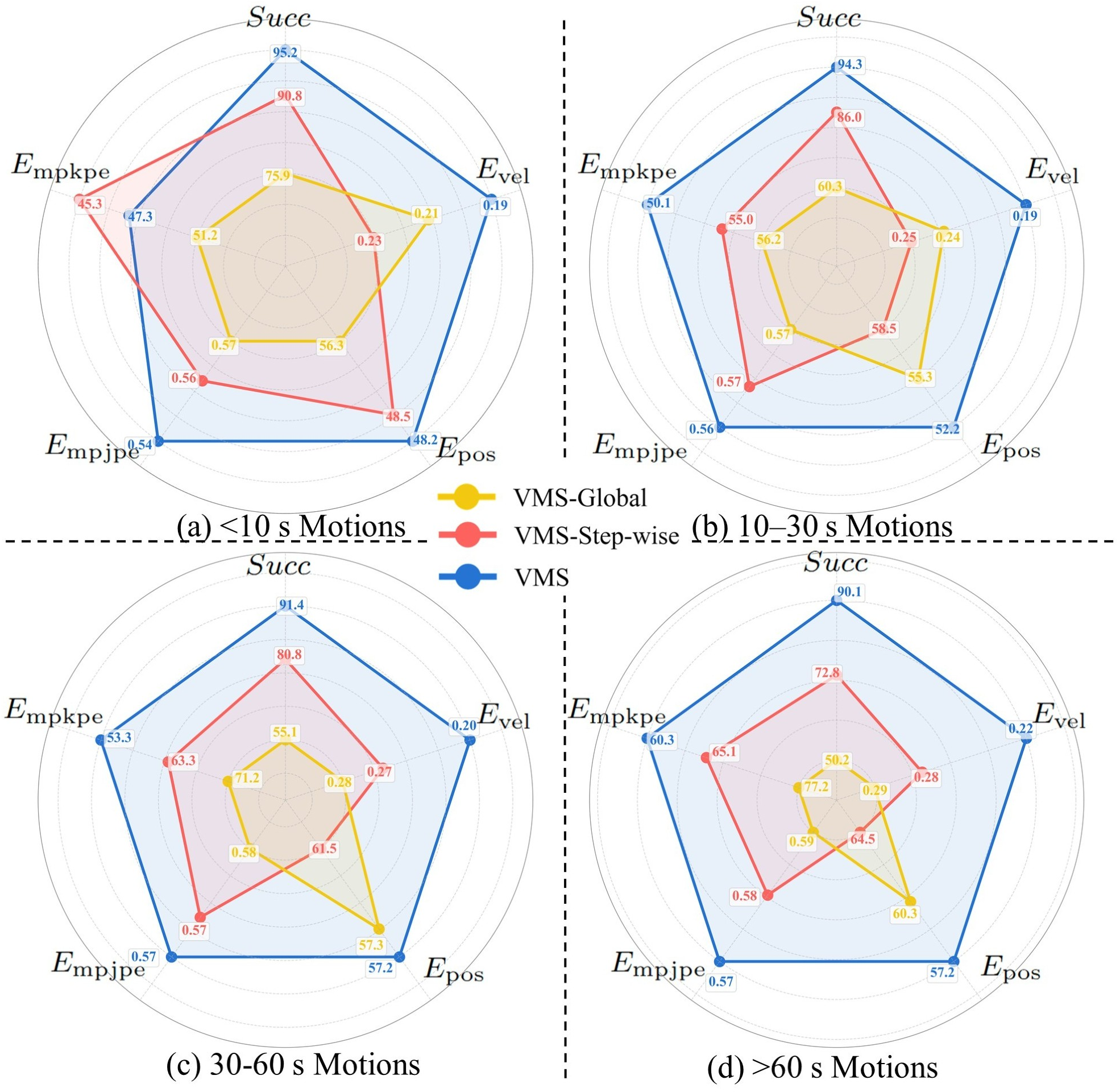

Strict step-wise tracking is replaced by a segment-level reward, which evaluates the minimum discrepancy between the agent's state and a short temporal window of reference states. This approach increases robustness to transient errors and infeasible references, enabling the policy to recover from disturbances and maintain stability over long sequences.
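The sketch below contrasts step-wise and segment-level rewards on a toy 1-D reference. It assumes that the window is centered on the current time index and that the discrepancy is a Euclidean distance passed through an exponential kernel. `segment_reward`, `window`, and `sigma` are illustrative names and values, not the paper's. With `window=0`, the function reduces to strict step-wise tracking.

```python
import math
from typing import Sequence


def _dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two states."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def segment_reward(state: Sequence[float], ref_traj: Sequence[Sequence[float]],
                   t: int, window: int = 2, sigma: float = 0.1) -> float:
    """Reward from the closest reference state within [t - window, t + window]."""
    lo = max(0, t - window)
    hi = min(len(ref_traj), t + window + 1)
    best = min(_dist(state, ref_traj[k]) for k in range(lo, hi))
    return math.exp(-best / sigma)
```

On the reference `[(0.0,), (0.1,), (0.2,), (0.3,), (0.4,)]`, a state of `(0.3,)` at `t=1` is two frames ahead. It receives the full reward of 1.0 with `window=2` but only about `exp(-2)` with `window=0`. This tolerance to small timing offsets lets the policy recover from a disturbance instead of chasing one exact frame.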

Teacher-Student Distillation and Sim2Real Transfer

A two-stage learning paradigm is adopted: a privileged teacher policy is trained in simulation with full state information, and a student policy is distilled via behavior cloning and DAgger, using only deployable observations. Domain randomization and noised reference state initialization are employed to bridge the sim-to-real gap, enhancing robustness to physical parameter variations and sensor noise.
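The sketch below shows the shape of the teacher-student loop on a toy 1-D point mass. It rests on several illustrative assumptions:

- The privileged teacher is a hypothetical PD controller that reads the true velocity.
- The student is a linear policy that sees only a two-step position history, a minimal stand-in for history encoding.
- Each rollout draws a random actuator gain (domain randomization) and a random initial state (a loose analogue of noised reference state initialization).
- Behavior cloning is plain least squares.

The first round is behavior cloning, with the teacher driving. Later rounds follow DAgger: the student drives, and the teacher labels the states the student visits. Every name and constant here is illustrative and not taken from the paper.

```python
import random
from typing import List, Tuple

DT = 0.02          # hypothetical control period (s)
KP, KD = 4.0, 1.0  # hypothetical PD gains of the privileged teacher

Obs = Tuple[float, float]   # student observation: (x_t, x_{t-1})
Sample = Tuple[Obs, float]  # (observation, teacher action label)


def teacher_action(x: float, v: float) -> float:
    """Privileged teacher: reads position and true velocity."""
    return -KP * x - KD * v


def student_action(w: Tuple[float, float], obs: Obs) -> float:
    """Student: linear policy over a two-step position history only."""
    return w[0] * obs[0] + w[1] * obs[1]


def fit_least_squares(data: List[Sample]) -> Tuple[float, float]:
    """Behavior cloning step: solve the 2x2 normal equations for the student weights."""
    s00 = s01 = s11 = b0 = b1 = 0.0
    for (x_t, x_prev), a in data:
        s00 += x_t * x_t
        s01 += x_t * x_prev
        s11 += x_prev * x_prev
        b0 += x_t * a
        b1 += x_prev * a
    det = s00 * s11 - s01 * s01
    return ((s11 * b0 - s01 * b1) / det, (s00 * b1 - s01 * b0) / det)


def dagger(iterations: int = 5, rollouts: int = 10, horizon: int = 100,
           seed: int = 0) -> Tuple[float, float]:
    rng = random.Random(seed)
    data: List[Sample] = []
    w = (0.0, 0.0)
    for it in range(iterations):
        # Round 0 is plain behavior cloning (teacher drives); later rounds let
        # the student drive so that its own state distribution gets labeled.
        teacher_drives = it == 0
        for _ in range(rollouts):
            gain = rng.uniform(0.8, 1.2)  # domain-randomized actuator gain
            x, v = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)  # noised initial state
            x_prev = x - DT * v
            for _ in range(horizon):
                obs = (x, x_prev)
                label = teacher_action(x, v)
                data.append((obs, label))  # aggregate teacher labels on visited states
                a = label if teacher_drives else student_action(w, obs)
                v += DT * gain * a
                x_prev, x = x, x + DT * v
        w = fit_least_squares(data)
    return w
```

Because the two-step history lets the student reconstruct velocity as (x_t - x_{t-1}) / DT, it can match this teacher exactly. The fitted weights therefore approach (-KP - KD/DT, KD/DT) = (-54, 50). A real student with genuinely partial observations can only approximate its teacher.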

Experimental Results

VMS demonstrates superior performance over strong baselines (ExBody2, GMT) across all tracking metrics, including mean per keybody position error, mean per joint position error, and global root position error. Notably, VMS achieves a substantial reduction in global drift compared to baselines that rely solely on root velocity objectives, with a test set root position error of 57.92 mm versus 397.1 mm (GMT) and 468.2 mm (ExBody2).
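To put the root position errors in proportion, the short calculation below uses only the reported numbers. For each baseline, it computes how many times larger the baseline's error is than that of VMS, and the corresponding relative reduction achieved by VMS.

```python
from typing import Dict, Tuple


def drift_reduction(vms_mm: float, baselines_mm: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """For each baseline, return (error ratio vs. VMS, relative reduction by VMS)."""
    return {name: (err / vms_mm, 1.0 - vms_mm / err) for name, err in baselines_mm.items()}


reported = drift_reduction(57.92, {"GMT": 397.1, "ExBody2": 468.2})
for name, (ratio, reduction) in reported.items():
    print(f"{name}: {ratio:.2f}x the VMS error; VMS is {reduction:.1%} lower")
# prints:
# GMT: 6.86x the VMS error; VMS is 85.4% lower
# ExBody2: 8.08x the VMS error; VMS is 87.6% lower
```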

Ablation Studies

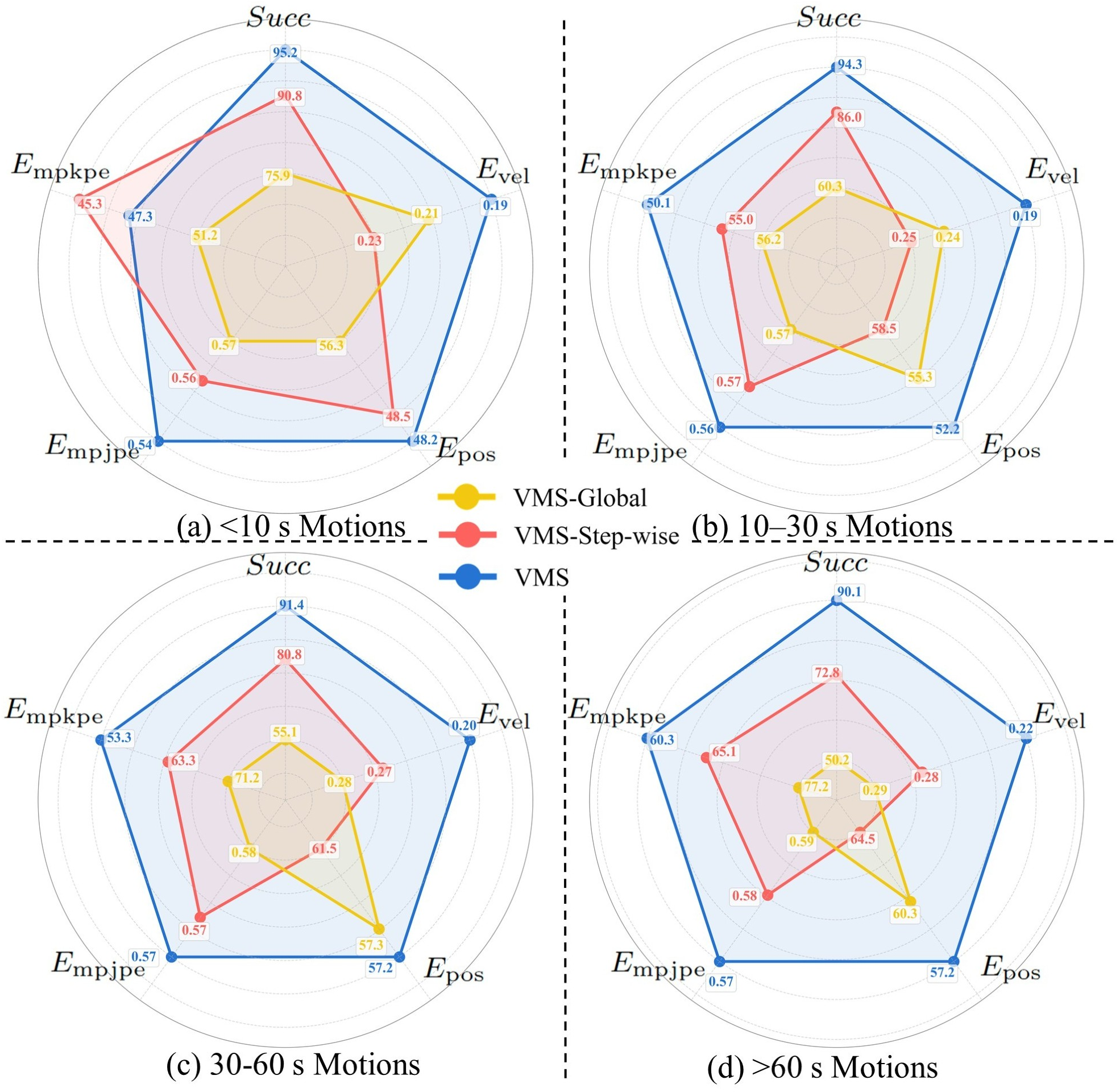

Ablations confirm the necessity of both the OMoE architecture and the segment-level reward. OMoE outperforms both standard MoE and MLP baselines in tracking accuracy and generalization. The segment-level reward yields consistently lower errors and higher robustness, especially for long-horizon motions, compared to global or step-wise tracking.

Figure 4: Visualizations of example motions. (a) For a running-to-turn motion, VMS achieves a smooth transition; (b) for a side kick, it maintains balance and completes the motion stably.

Figure 5: Tracking performance across motion durations. Segment-level tracking achieves consistently lower errors and higher robustness than global and step-wise tracking.

Real-World Deployment

VMS is deployed on the Unitree G1 humanoid, where it successfully executes a wide range of skills, including walking, running, athletic movements, expressive dances, dynamic kicks, and long-horizon martial arts sequences. The system remains stable over minute-long sequences and imitates motions with high fidelity in the physical world, validating the sim-to-real transfer pipeline.

Downstream Applications

Text-to-Motion Generation

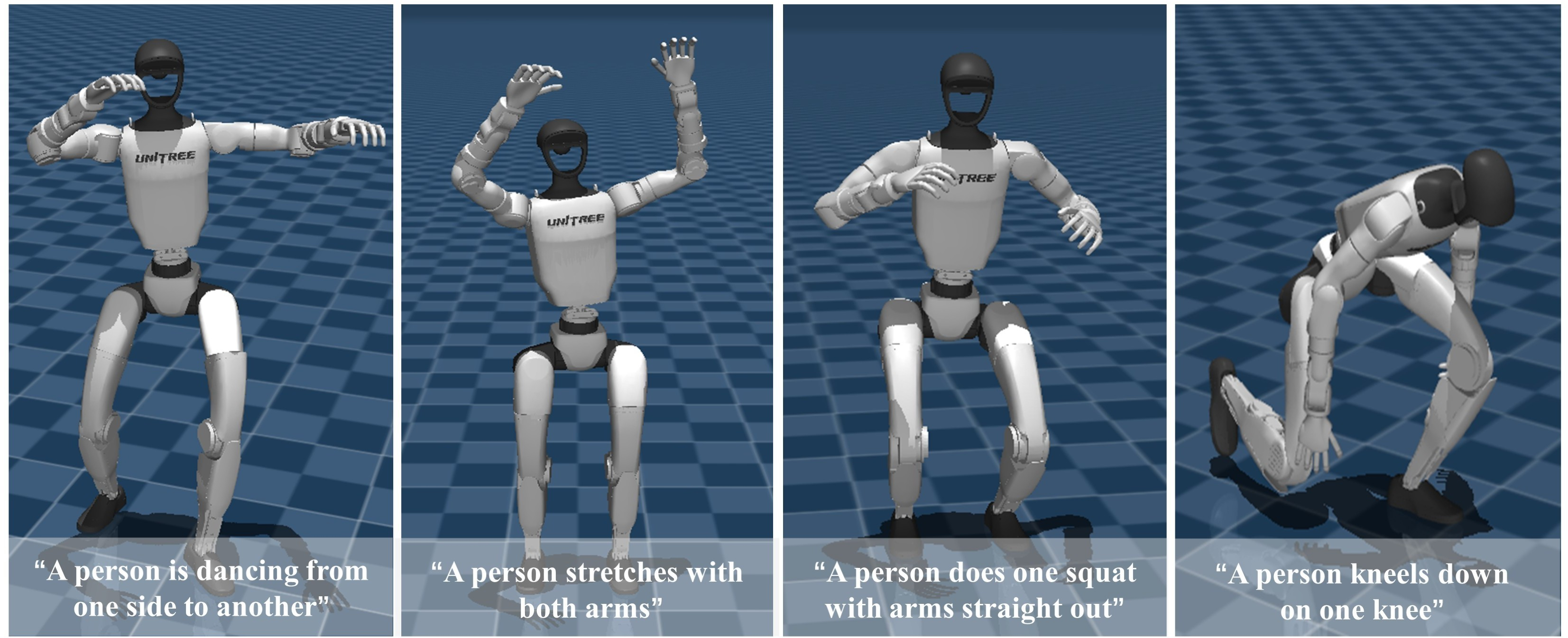

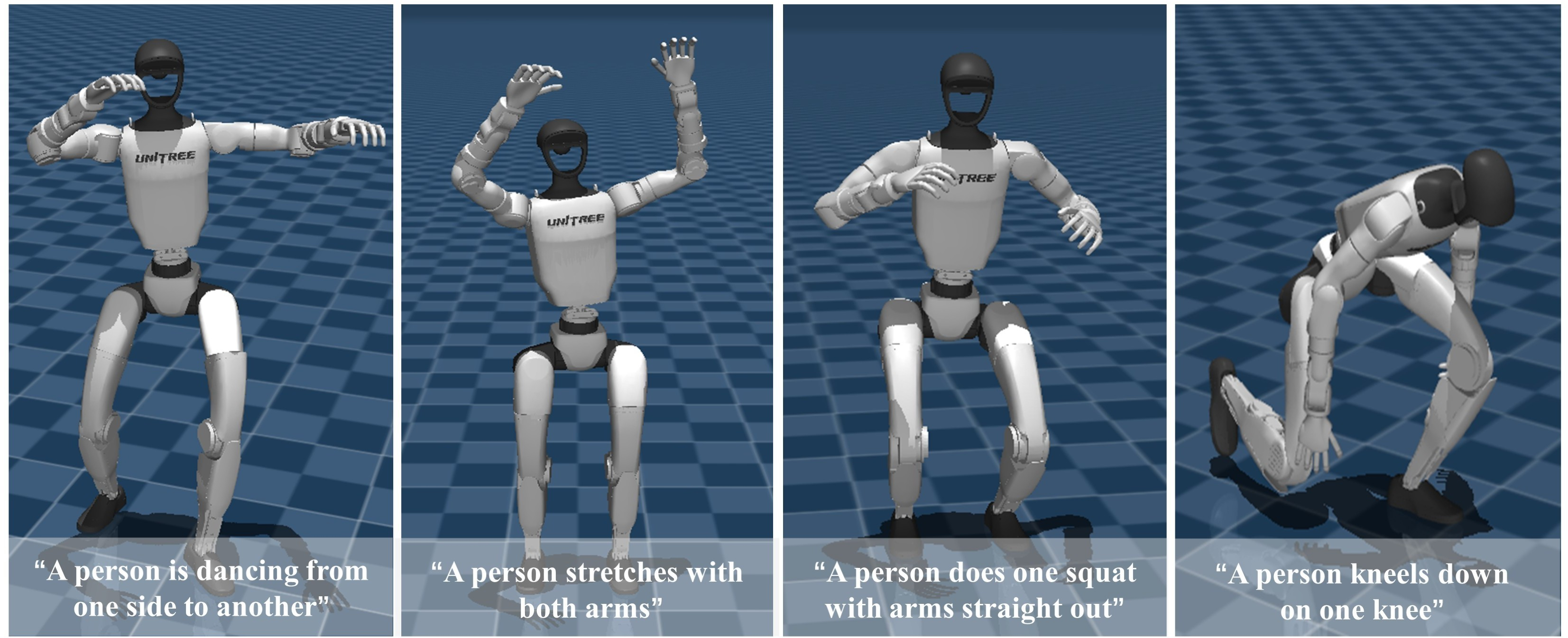

VMS is evaluated as a low-level controller for text-conditioned motion generation. Using reference motions generated by MLD (Motion Latent Diffusion), the policy exhibits strong zero-shot generalization, accurately tracking diverse, previously unseen motions produced from natural language descriptions.

Figure 6: Text-to-motion generation results. VMS reproduces diverse motions such as dancing, stretching, squatting, and kneeling from text descriptions, demonstrating strong zero-shot generalization.

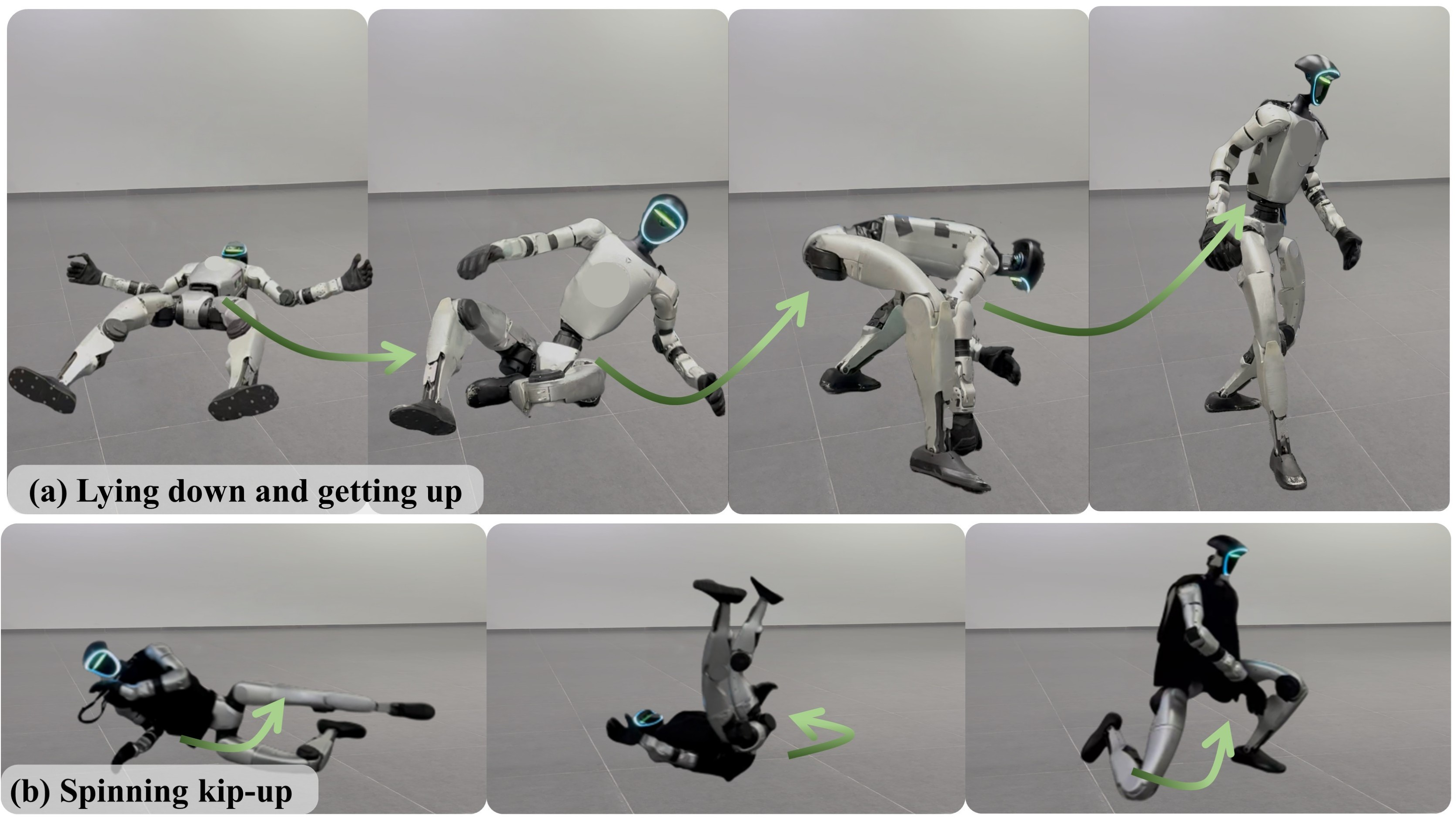

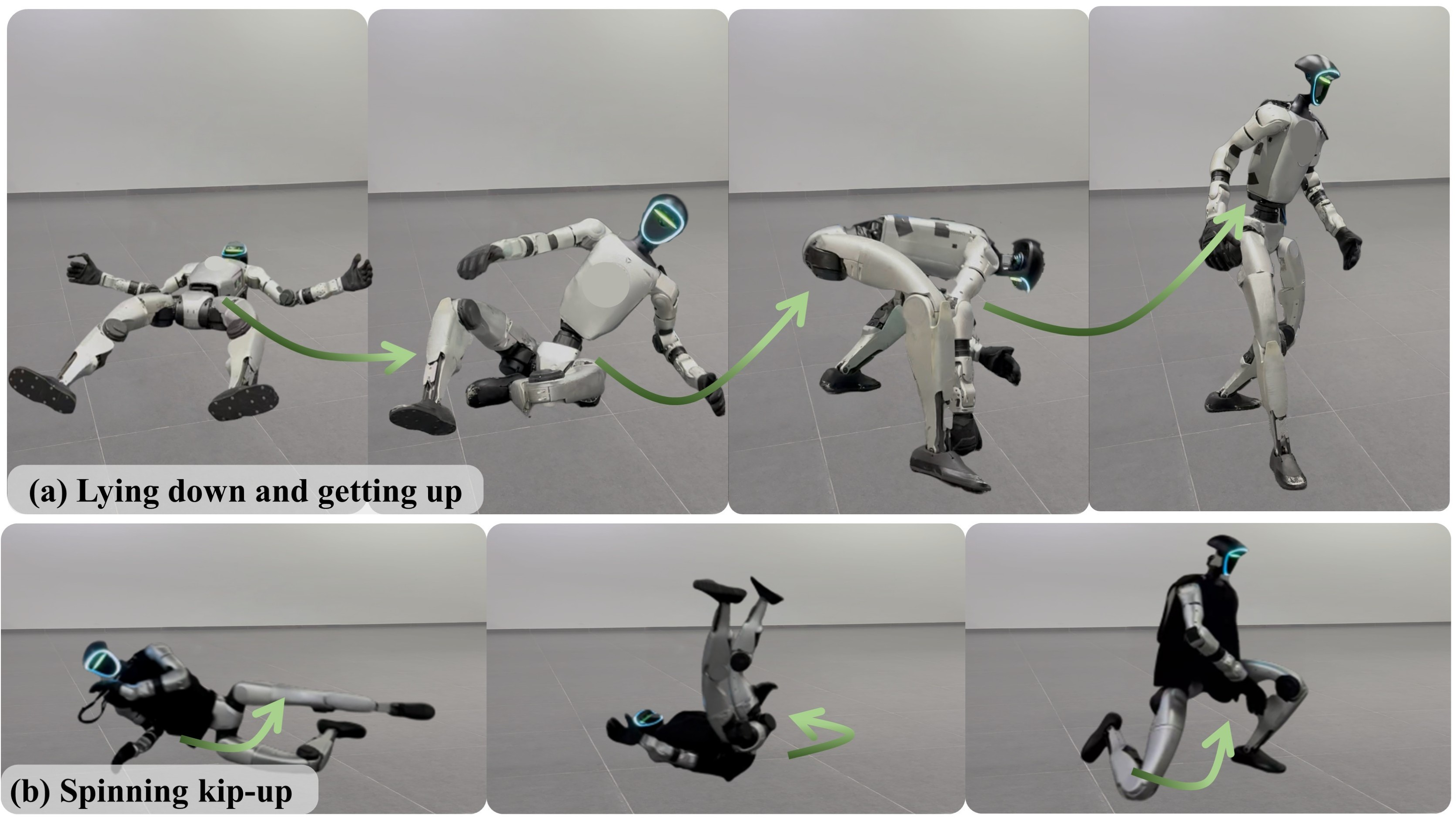

Fine-Tuning for Out-of-Distribution Motions

Minimal fine-tuning enables VMS to adapt to extreme OOD motions, such as lying down, getting up, and acrobatic kip-ups, demonstrating the flexibility and extensibility of the learned policy.

Figure 7: Fine-tuning results. VMS adapting to OOD motions: (a) lying down and getting up, and (b) a highly dynamic spinning kip-up.

Implications and Future Directions

The VMS framework establishes a scalable foundation for general-purpose humanoid control, with the following implications:

- Policy Expressiveness: The OMoE architecture provides a principled approach to skill decomposition, enabling a single policy to master a broad repertoire of dynamic behaviors.

- Robust Long-Horizon Tracking: The hybrid and segment-level objectives mitigate error accumulation and instability, a critical requirement for real-world deployment.

- Sim2Real Transfer: The combination of domain randomization, noised reference state initialization (RSI), and history encoding supports robust transfer, reducing the need for extensive real-world data collection.

- Foundation for Hierarchical Control: The demonstrated zero-shot generalization to text-to-motion tasks positions VMS as a viable low-level controller for integration with high-level planners and behavior foundation models.

However, the current system is limited by the absence of visual perception and by its reliance on large-scale, potentially unbalanced motion capture (MoCap) datasets. Future research should explore integrating visual inputs for scene understanding, active data augmentation for skill coverage, and hierarchical or multi-modal policy architectures.

Conclusion

KungfuBot2's VMS framework advances the state of the art in versatile humanoid whole-body control by unifying skill decomposition, robust tracking, and sim-to-real transfer in a single, scalable system. The strong empirical results, both in simulation and on hardware, underscore the practical viability of the approach. Its architectural and algorithmic innovations, particularly the OMoE and the segment-level reward, are likely to inform future developments in generalist robot control, hierarchical planning, and foundation models for embodied AI.