The Cybersecurity of a Humanoid Robot (2509.14096v1)

Abstract: The rapid advancement of humanoid robotics presents unprecedented cybersecurity challenges that existing theoretical frameworks fail to adequately address. This report presents a comprehensive security assessment of a production humanoid robot platform, bridging the gap between abstract security models and operational vulnerabilities. Through systematic static analysis, runtime observation, and cryptographic examination, we uncovered a complex security landscape characterized by both sophisticated defensive mechanisms and critical vulnerabilities. Our findings reveal a dual-layer proprietary encryption system (designated “FMX”) that, while innovative in design, suffers from fundamental implementation flaws including the use of static cryptographic keys that enable offline configuration decryption. More significantly, we documented persistent telemetry connections transmitting detailed robot state information (including audio, visual, spatial, and actuator data) to external servers without explicit user consent or notification mechanisms. We operationalized a Cybersecurity AI agent on the Unitree G1 to map and prepare exploitation of its manufacturer's cloud infrastructure, illustrating how a compromised humanoid can escalate from covert data collection to active counter-offensive operations. We argue that securing humanoid robots requires a paradigm shift toward Cybersecurity AI (CAI) frameworks that can adapt to the unique challenges of physical-cyber convergence. This work contributes empirical evidence for developing robust security standards as humanoid robots transition from research curiosities to operational systems in critical domains.

Explain it Like I'm 14

Overview: What this paper is about

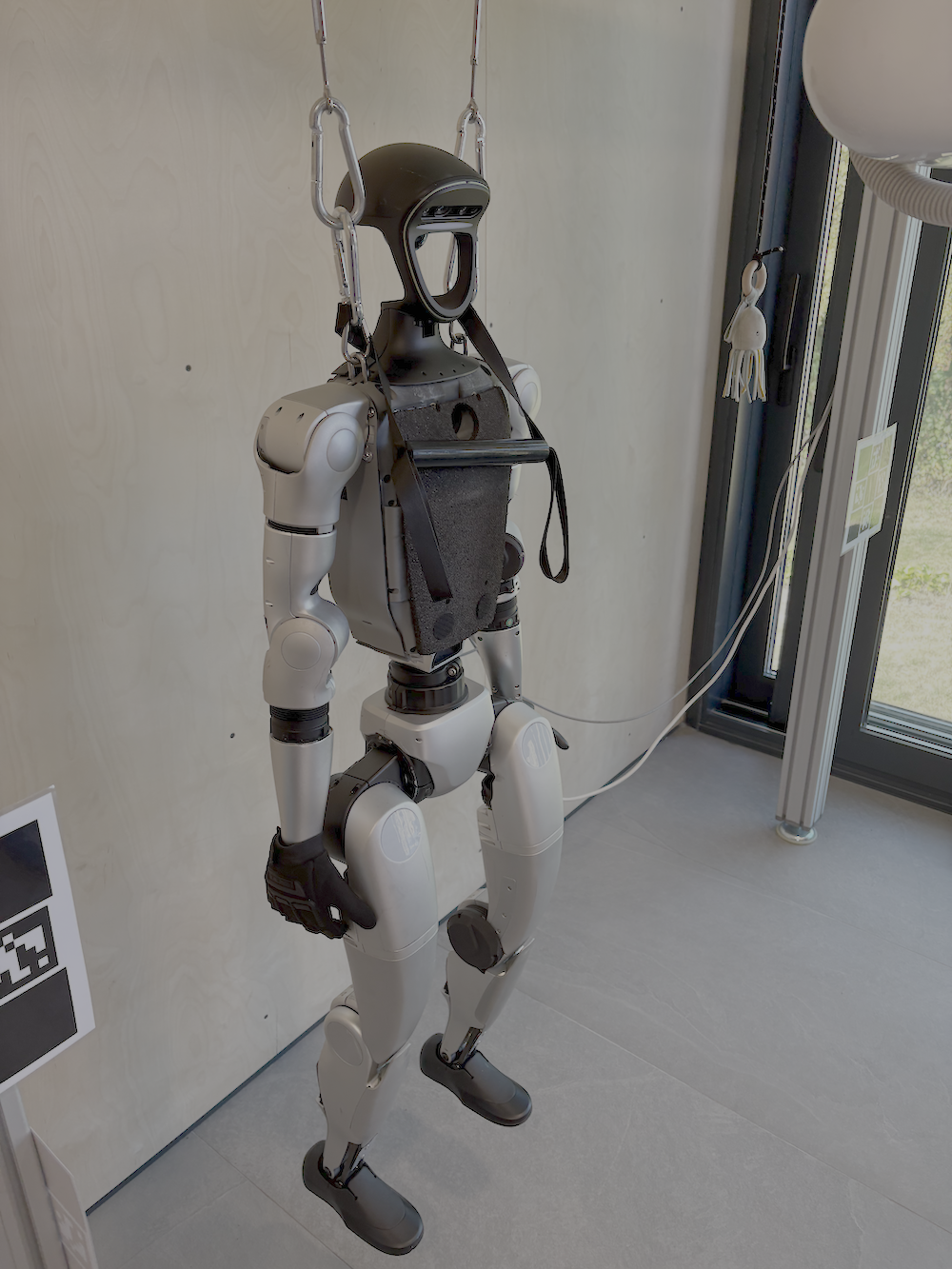

This paper looks at how safe and secure a real humanoid robot is from cyberattacks. The robot they studied is called the Unitree G1. The main goal was to stop guessing about robot security and instead open up a real machine, watch how it behaves, and see what actually goes wrong. The authors found both smart defenses and serious problems—especially around privacy and how the robot talks to the internet.

The questions the researchers asked

In simple terms, the paper tries to answer:

- How is a modern humanoid robot built (hardware and software), and where are the weak spots?

- What data does the robot send over the internet, and is that safe and private?

- How good are the robot’s protections, like encryption (locking data) and software design?

- Could a hacked robot be used to spy on people or attack other systems?

How they studied the robot

The team used several approaches, which you can think of like checking a house for security:

- “Blueprint check” (static analysis): They looked through the robot’s files and programs—like reading the house’s blueprints—to find weak locks or back doors.

- “Live monitoring” (runtime observation): They watched the robot while it was running to see what it sent over the network—like standing outside and noting who comes and goes.

- “Lock-picking test” (cryptographic analysis): They studied the robot’s encryption system (its “locks”) to see if it was strong or could be broken.

- “System mapping”: They drew a map of all the robot’s services and how they talk to each other—like mapping all rooms, doors, and hallways.

Whenever a technical term appears, here’s what it means in everyday language:

- Encryption: Scrambling information so only someone with a key can read it (like a diary with a lock).

- Telemetry: Status and sensor data the robot sends back to its maker (like a health report or live stream).

- Cloud services: Computers on the internet that store data or run parts of the robot’s software (like saving files to an online drive).

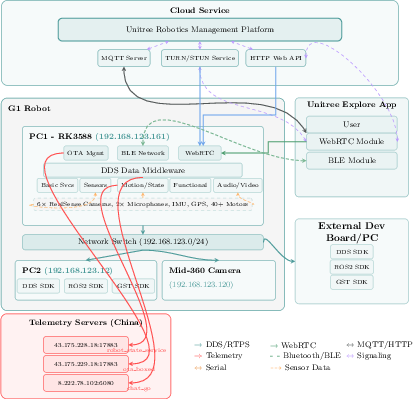

- Middleware (ROS 2, DDS): The “postal system” that moves messages between parts of the robot’s brain and body.

What they found and why it matters

Here are the big findings:

- The robot sends a lot of data to the internet without clearly asking the user:

  - The robot keeps open, ongoing connections to outside servers.

  - It transmits detailed information like audio, video, location/movement, and motor states.

  - This can happen without obvious warnings or consent, which is a serious privacy concern.

- The encryption system has a clever design but a basic flaw:

  - The robot uses a custom, two-layer encryption system they call “FMX.”

  - But it relies on fixed keys that never change (“static keys”). That is like a diary whose key is never replaced: anyone who copies the key once can open the diary whenever they like, and if the same key is shared across robots, one leak could unlock them all.

  - Because of that, attackers could decrypt configuration files offline (without touching the robot again).

- The software stack is outdated and complex, which increases risk:

  - The robot uses older software (such as ROS 2 Foxy, which is no longer supported).

  - Old software often has known bugs that hackers can exploit.

  - The system has many moving parts (Bluetooth, WebRTC, data-sharing tools, over-the-air updates), which means more places where things can go wrong.

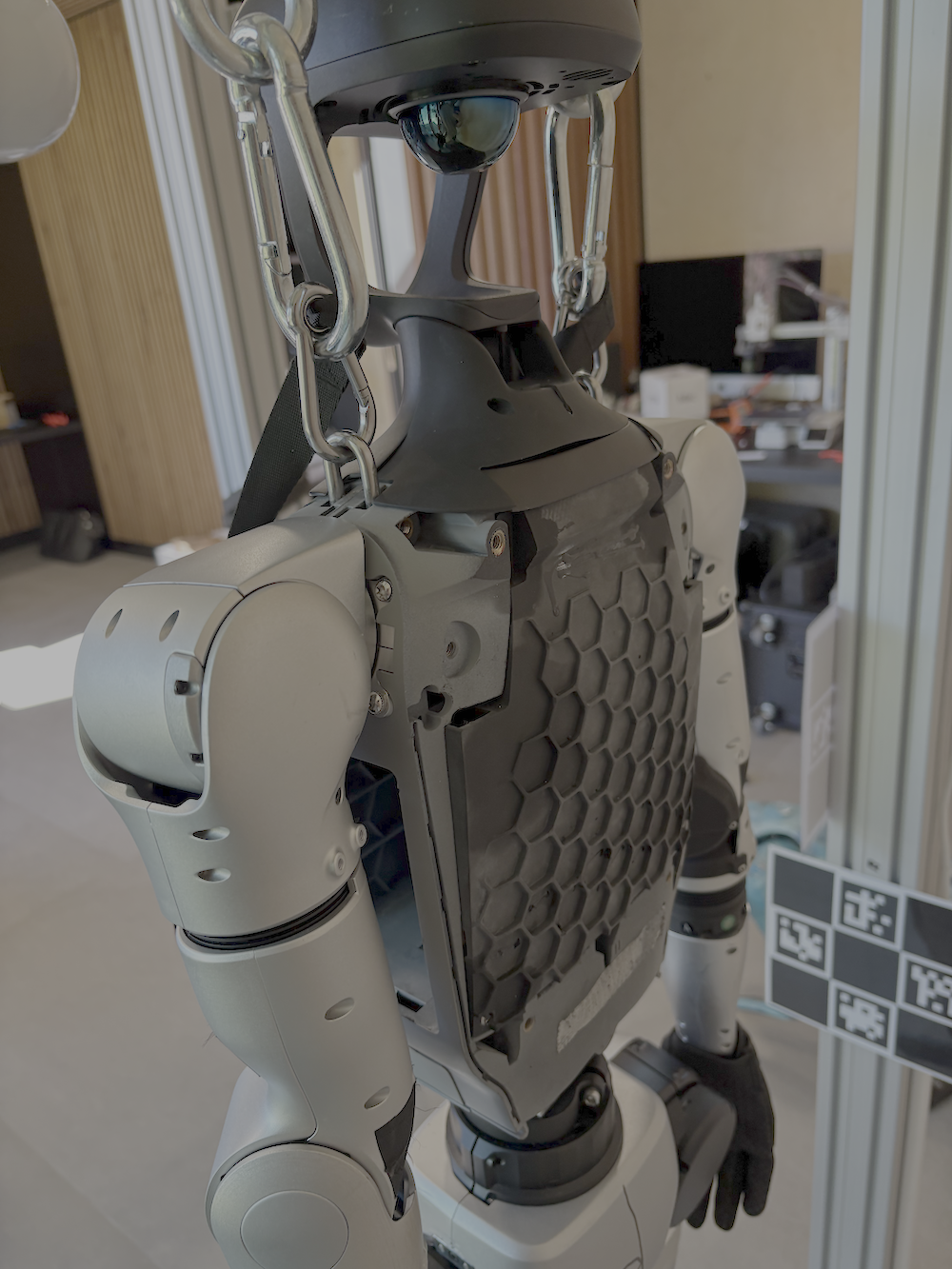

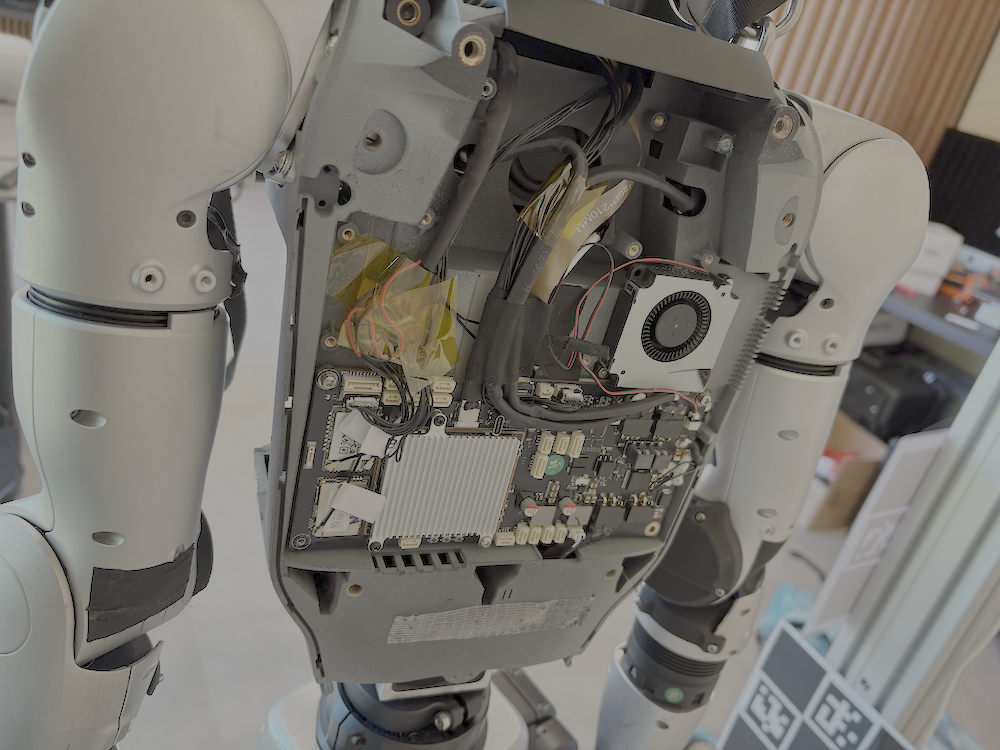

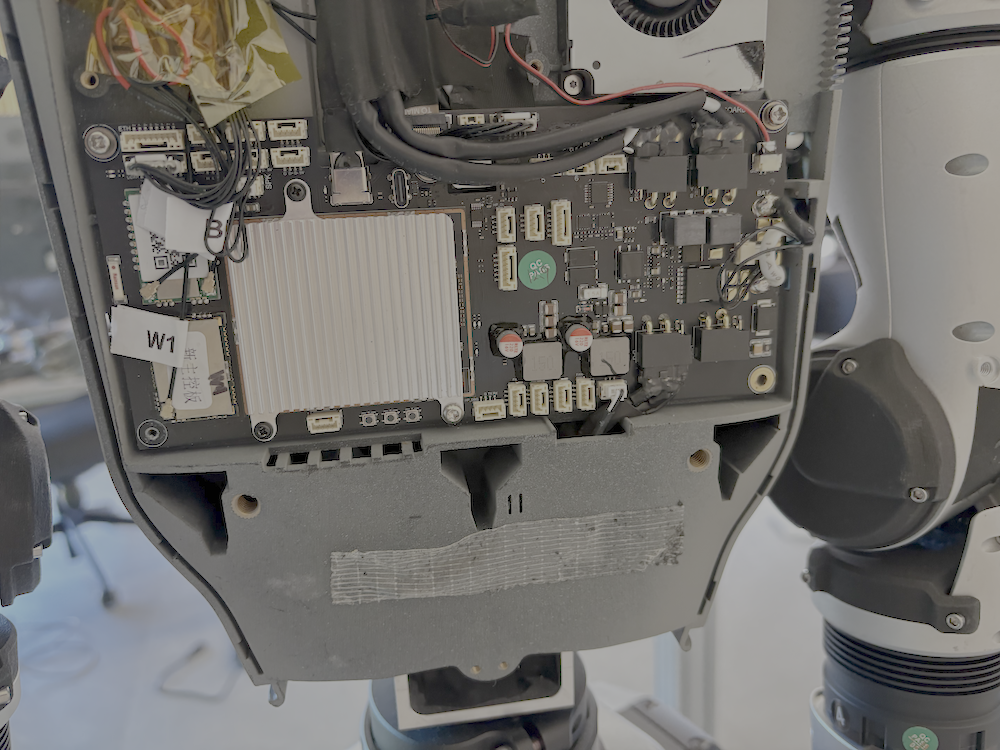

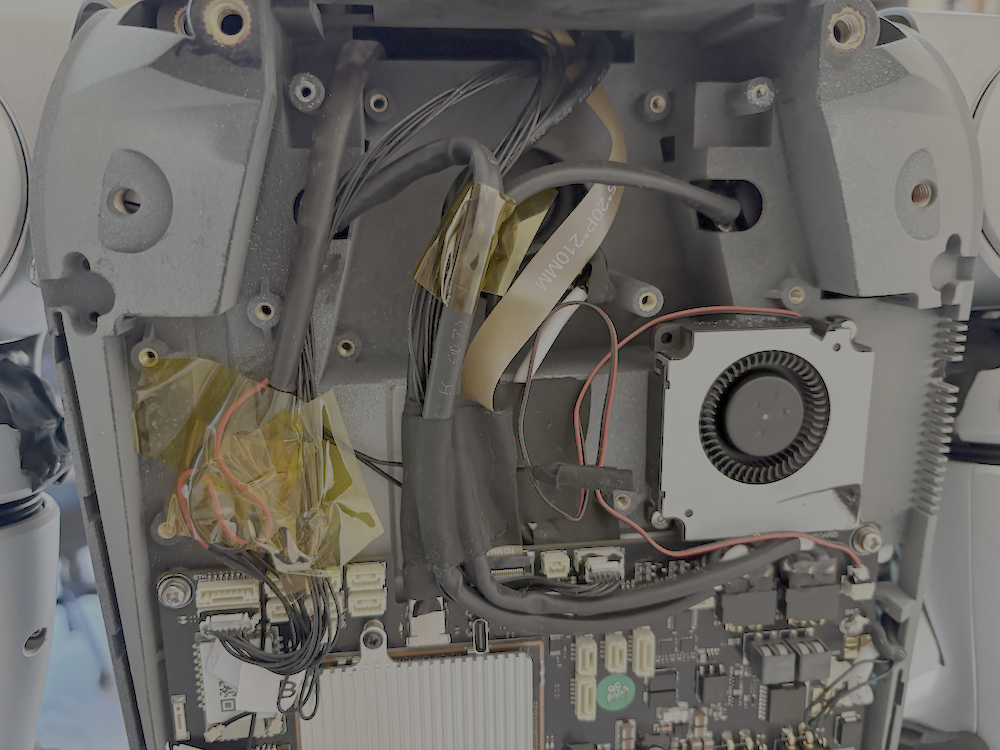

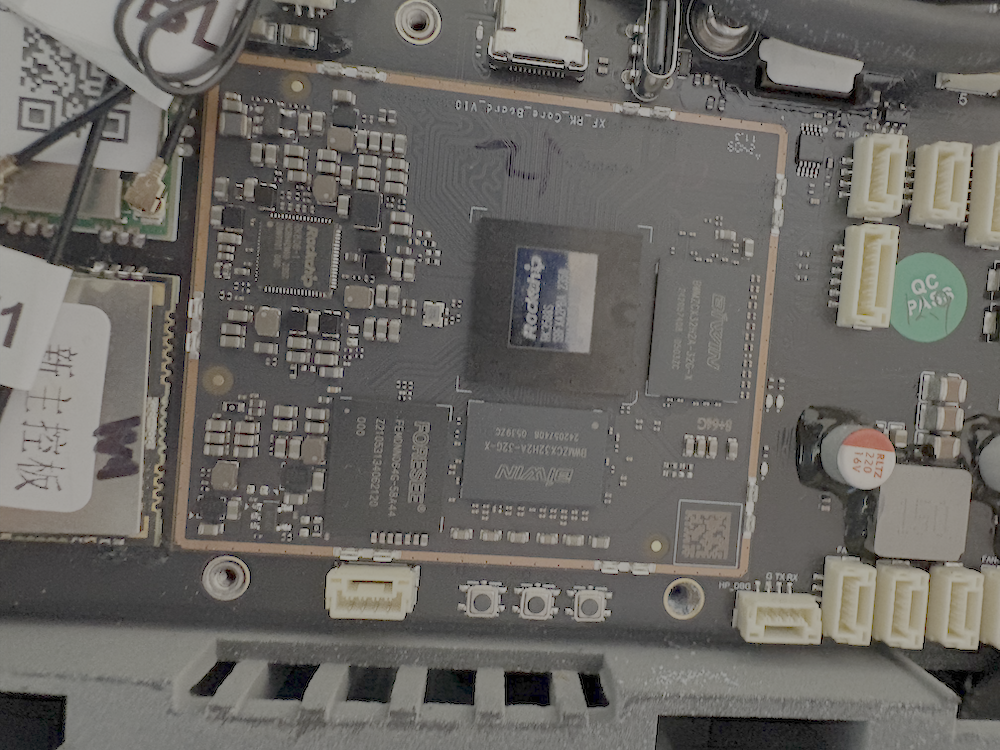

- The hardware is powerful but creates physical security risks:

  - The main computer chip (Rockchip RK3588) and the layout of the electronics allow several possible physical attacks if someone gets access to the robot’s body (like plugging into a hidden port).

  - Secure boot (the “trust chain” that makes sure the robot only runs legit software) may not be fully locked down, which could let attackers load their own software.

- A hacked robot could become an attacker:

  - The team showed how an AI security agent on the robot could be used to explore and prepare attacks on the maker’s cloud systems.

  - That means a robot in your home or workplace, if compromised, might not just spy; it could also help attack other networks.
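To make the static-key finding concrete, here is a toy Python sketch of why a fixed key enables offline decryption. It is not the FMX algorithm: the gap list describes FMX as Blowfish plus an LCG, while this sketch uses only a hypothetical LCG keystream XORed with the data. The LCG constants, `STATIC_SEED`, and the config contents are placeholders chosen for illustration.

```python
# Toy illustration only: NOT the FMX algorithm. The LCG constants and the
# hard-coded seed below are hypothetical placeholders.
STATIC_SEED = 0x1234ABCD  # hypothetical key baked into every firmware image


def lcg_keystream(seed: int, n: int) -> bytes:
    """Generate n keystream bytes from an LCG (constants from the C standard's sample rand())."""
    state = seed & 0xFFFFFFFF
    out = bytearray()
    for _ in range(n):
        state = (1103515245 * state + 12345) & 0x7FFFFFFF  # mod 2^31
        out.append((state >> 16) & 0xFF)
    return bytes(out)


def xor_cipher(data: bytes, seed: int) -> bytes:
    """Encrypt or decrypt (XOR is its own inverse) with the LCG keystream."""
    ks = lcg_keystream(seed, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))


# The "vendor" encrypts two config files with the same static key.
cfg_a = b'{"wifi": "home", "telemetry": true}'
cfg_b = b'{"wifi": "lab",  "telemetry": true}'
enc_a = xor_cipher(cfg_a, STATIC_SEED)
enc_b = xor_cipher(cfg_b, STATIC_SEED)

# An attacker who recovered STATIC_SEED once (e.g., from a firmware dump)
# can decrypt any copied file offline, with no access to the robot.
print(xor_cipher(enc_a, STATIC_SEED).decode())

# Keystream reuse also leaks information without the key at all:
# XOR of two ciphertexts equals XOR of the two plaintexts.
leak = bytes(a ^ b for a, b in zip(enc_a, enc_b))
print(leak == bytes(a ^ b for a, b in zip(cfg_a, cfg_b)))
```

The point is independent of the cipher: any scheme whose key never changes and ships inside the device reduces to "find the key once, read everything forever."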

Why this matters:

- Safety: A compromised humanoid can move and act. That means cybersecurity problems can become physical safety problems.

- Privacy: Always-on cameras and microphones that silently send data elsewhere are a serious concern.

- Trust: If people can’t trust robots to protect their data, adoption will slow down—even if the tech is otherwise ready.

What this means for the future

The paper argues that we need a new way to secure humanoid robots—smarter, faster, and built for machines that live in the real world, not just on desks. The authors suggest using Cybersecurity AI (CAI): security systems that can watch, learn, and defend in real time, because robots mix physical actions with internet connectivity in a way regular computers don’t.

Based on their findings, here are the practical takeaways:

- Privacy first: Users should clearly know what data is collected and be able to turn it off. “Always-on” telemetry should be opt-in, not hidden.

- Stronger locks: Use modern, rotating, per-device encryption keys—never the same static keys for everyone.

- Keep software fresh: Outdated software should be upgraded to supported versions, with regular security patches.

- Secure from the start: Enable full secure boot and protect the boot process, so attackers can’t sneak in at startup.

- Independent checks: Robots should undergo third-party security testing and follow clear, industry-wide standards.
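The “stronger locks” takeaway can be sketched with a standard key-derivation function. The Python below implements HKDF-SHA256 (RFC 5869) using only the standard library and derives a separate key for each robot serial number and rotation epoch. The serial format, the salt, the `info` layout, and the assumption that the master secret stays in a vendor-side HSM are all hypothetical, not the manufacturer's design.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 (RFC 5869): extract a PRK, then expand it to `length` bytes."""
    if length > 255 * hashlib.sha256().digest_size:
        raise ValueError("requested length too large for HKDF-SHA256")
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # HKDF-Extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # HKDF-Expand: T(i) = HMAC(PRK, T(i-1) | info | i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def device_config_key(master_secret: bytes, serial: str, epoch: int) -> bytes:
    """Hypothetical scheme: a distinct 256-bit key per robot serial and rotation epoch."""
    info = f"config-encryption|{serial}|epoch={epoch}".encode("utf-8")
    return hkdf_sha256(master_secret, salt=b"hypothetical-salt-v1", info=info)


master = bytes(32)  # placeholder only; a real master secret would never ship on the robot
k_a = device_config_key(master, "G1-0001", epoch=1)
k_b = device_config_key(master, "G1-0002", epoch=1)
k_a2 = device_config_key(master, "G1-0001", epoch=2)  # rotated key for the same robot
print(k_a != k_b, k_a != k_a2)
```

Bumping `epoch` rotates one robot's key without touching any other unit, and because HKDF is one-way, leaking a single robot's derived key reveals neither the master secret nor other robots' keys.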

In short, this paper moves the conversation from “what might go wrong” to “what actually is going wrong” in a cutting-edge humanoid robot. It shows why securing robots is urgent and lays out steps to make future humanoids safer, more private, and more trustworthy.

Knowledge gaps, limitations, and open questions

Below is a focused list of what remains missing, uncertain, or unexplored in the paper that future researchers can concretely address:

- Telemetry characterization: precisely identify what data types (audio, video, pose, actuator states), frequencies, volumes, and metadata are transmitted, including encryption status on the wire, protocol details (e.g., TLS versions/ciphers, certificate pinning), and endpoints/regions per deployment mode.

- Consent and configurability: verify whether telemetry can be disabled, scoped, or filtered via user-accessible settings; document any opt-in/opt-out flows, defaults, and persistence across reboots/updates.

- Cross-version validation: repeat all findings across multiple firmware/software releases and hardware revisions to determine which issues are systemic versus version-specific.

- Device-to-device variability: determine whether cryptographic materials (keys, salts, IVs) are global or per-device; test multiple G1 units to assess FMX key uniqueness and rotation behavior.

- FMX crypto analysis: perform a formal cryptanalysis of the dual-layer “FMX” scheme (Blowfish + LCG), including chosen-plaintext/ciphertext attacks, keyspace estimation, keystream reuse detection, and integrity guarantees (or lack thereof).

- FMX key lifecycle: locate key generation, storage (eMMC, NVRAM, TEE, files), access pathways, and rotation/revocation mechanisms; evaluate feasibility of key extraction through software and hardware means.

- End-to-end integrity: assess whether configuration/telemetry channels provide authenticity and integrity (signatures/MACs), not just confidentiality; test tamper and replay resistance.

- OTA chain-of-trust: map the complete update path (server → transport → client → install), verify signature schemes, certificate management, rollback protection, anti-rollback counters, staged rollouts, and recovery paths.

- Secure boot status: empirically verify the RK3588 secure boot configuration (fuse states, boot ROM policy), key provisioning, measurement/attestation, and whether bootloaders/kernels/rootfs are verified on every boot.

- Debug interfaces: identify and test UART/JTAG/USB-OTG interfaces, their protection (passwords, fuses, epoxy, tamper switches), and practical exploitation pathways for firmware extraction or runtime control.

- TEE configuration and isolation: document the Trusted Execution Environment’s roles, trust boundaries, secure storage usage, and attempt privilege boundary crossings from normal world to secure world.

- Kernel and OS hardening: evaluate presence and configuration of AppArmor/SELinux, namespaces/cgroups, seccomp, ASLR, Arm PXN/PAN (the counterparts of x86 SMEP/SMAP, since the RK3588 is an Arm SoC), CONFIG_HARDENED_USERCOPY, and systemd sandboxing of services.

- Service privilege separation: enumerate users, capabilities, and filesystem/network permissions per service (e.g., ai_sport, webrtc_, ota_), and test privilege escalation paths between them.

- DDS/ROS 2 security: verify whether DDS-Security/SROS2 is enabled; test for unauthenticated RTPS discovery/traffic injection, topic snooping/spoofing, and ACLs for critical topics/services.

- WebRTC signaling surface: assess authentication, authorization, CSRF/CORS policies, and STUN/TURN configuration (e.g., open TURN relays); fuzz signaling/state machines for RCE or credential leakage.

- Bluetooth attack surface: characterize pairing/bonding modes, MITM protection, LE Secure Connections usage, and fuzz GATT services on upper_bluetooth for unauthorized control or data access.

- MQTT security posture: verify TLS usage, client authentication, topic-level ACLs, and susceptibility to unauthorized publish/subscribe on robot_state_service and other topics.

- Cloud-side exposure: evaluate manufacturer cloud APIs for auth strength, rate limiting, tenant isolation, input validation, and logging/monitoring; ethically coordinate limited testing or simulations if direct testing is out of scope.

- Remote-only exploitability: demonstrate whether full compromise is possible without physical access via exposed services (WebRTC/MQTT/DDS/BLE/HTTP) under realistic NAT/firewall conditions.

- Safety-security coupling: experimentally quantify how cyber compromises translate to unsafe physical behaviors; test the effectiveness of emergency stops, safe torque off, and motion limits under adversarial inputs.

- Adversarial ML robustness: test perception (vision/audio) and NLP models against adversarial examples, data poisoning, and prompt-injection; analyze model update provenance and integrity.

- Sensor spoofing on this platform: empirically validate LiDAR/camera/IMU/GNSS spoofing impacts on state_estimator/ai_sport and whether sensor fusion mitigates or amplifies spoofed inputs.

- Side-channel and fault injection: conduct practical EM/power side-channel analysis and voltage/clock/EM glitching tests on the RK3588 and power stages during crypto and control operations; evaluate feasibility and required attacker proximity.

- Network isolation strategies: test segmented deployments (VLANs, firewalls, zero-trust), offline modes, and their impact on functionality/safety; define minimal connectivity required for safe operations.

- Patch latency and maintenance: measure vendor response times, vulnerability disclosure process outcomes, and the practicality of upgrading from EOL components (ROS 2 Foxy, CycloneDDS 0.10.2) in production.

- Auditability and IDS: determine presence/quality of security logs, tamper-evident logging, local/remote SIEM integration, and feasibility of on-device intrusion detection without degrading real-time performance.

- Data governance and compliance: analyze GDPR/CCPA implications, data minimization, retention policies, cross-border transfers, and whether DPIAs or privacy notices match observed behavior.

- Reproducibility materials: provide sanitized pcaps, configuration snapshots, versioned binaries/hashes, and tooling sufficient for independent replication while preserving responsible disclosure.

- Generalizability: compare findings with other humanoid platforms (e.g., Agility, Figure, Tesla) to separate vendor-specific issues from industry-wide patterns; propose a shared benchmark suite.

- CAI agent viability: rigorously evaluate the proposed Cybersecurity AI agent’s on-device resource footprint, detection efficacy, false-positive/negative rates, attack surface it introduces, and safe failover behavior.

- Standards alignment: map observed gaps to IEC 62443, ISO 10218/TS 15066, ETSI EN 303 645, UL 4600, and emerging robotics security guidance; identify concrete compliance remediation steps.

- SBOM and supply chain risk: generate a full SBOM, run continuous vulnerability scans (CVEs, license risks), and assess third-party dependency update pipelines and provenance.

- Physical tamper detection: test for tamper sensors, chassis intrusion logging, secure erase on tamper, and boot attestation changes after physical compromise.
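For the MQTT item in the list above, one concrete test is whether a client's topic-level ACL actually blocks unauthorized publish/subscribe. The sketch below implements the MQTT topic-filter wildcard rules (`+` matches exactly one level, `#` matches the parent level and everything beneath it, and filters that start with a wildcard do not match `$`-prefixed topics) and checks a hypothetical ACL. Apart from `robot_state_service`, the client names and every topic string are invented for the example.

```python
from typing import Dict, List, Tuple


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter matching: '+' = exactly one level, '#' = this level and all below."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    if "#" in f_levels[:-1]:
        raise ValueError("'#' must be the last level of a topic filter")
    # Filters starting with a wildcard must not match '$'-prefixed (broker) topics.
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # also matches the parent level, e.g. 'a/#' matches 'a'
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


# Hypothetical ACL: client id -> list of (action, topic filter). Topics are invented.
ACL: Dict[str, List[Tuple[str, str]]] = {
    "robot_state_service": [("publish", "robot/G1-0001/state/#")],
    "companion-app": [("subscribe", "robot/G1-0001/state/#")],
}


def is_allowed(client: str, action: str, topic: str) -> bool:
    """True if any ACL rule for this client grants `action` on `topic`."""
    return any(a == action and topic_matches(f, topic) for a, f in ACL.get(client, []))


# A probe the audit would expect to be denied: the app publishing fake robot state.
print(is_allowed("companion-app", "publish", "robot/G1-0001/state/joints"))  # False
```

In a real assessment the same probes would be sent to the broker itself; this local matcher only documents what the expected allow/deny answers should be.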
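The auditability item mentions tamper-evident logging. A common building block is a hash chain, in which each entry commits to the previous entry's digest, so editing or deleting any record breaks every later link. This is a minimal Python sketch with hypothetical event fields and service names. On its own it only detects tampering if the latest hash is also stored somewhere the attacker cannot rewrite (for example, a remote SIEM or a TEE-backed store), since anyone with write access to the log could otherwise recompute the whole chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous digest together with a canonical JSON encoding of the event."""
    body = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the digest of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _digest(prev, event)})


def verify_chain(log: list) -> bool:
    """Recompute every link; any edited, reordered, or removed entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


# Usage with hypothetical event fields:
log = []
append_entry(log, {"t": 1, "svc": "ota", "msg": "update check"})
append_entry(log, {"t": 2, "svc": "webrtc", "msg": "peer connected"})
print(verify_chain(log))  # True
log[0]["event"]["msg"] = "nothing happened"
print(verify_chain(log))  # False
```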
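The OTA chain-of-trust item lists anti-rollback counters. The sketch below shows only the client-side acceptance check under stated assumptions: the manifest, with a hypothetical `version` security counter and `sha256` image digest, is assumed to have already passed signature verification against a vendor public key. That step is omitted because Python's standard library cannot verify asymmetric signatures on its own.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Manifest:
    version: int  # hypothetical monotonically increasing security version
    sha256: str   # expected hex digest of the update image


def accept_update(manifest: Manifest, image: bytes, rollback_counter: int) -> bool:
    """Client-side checks only; ASSUMES the manifest signature was already verified."""
    if manifest.version < rollback_counter:
        return False  # anti-rollback: never install an image older than the stored counter
    return hashlib.sha256(image).hexdigest() == manifest.sha256  # image integrity


def new_counter(manifest: Manifest, rollback_counter: int) -> int:
    """After a successful install, the counter only ever moves forward."""
    return max(rollback_counter, manifest.version)


image = b"hypothetical firmware image v7"
m = Manifest(version=7, sha256=hashlib.sha256(image).hexdigest())
print(accept_update(m, image, rollback_counter=6))  # True
print(accept_update(m, image, rollback_counter=8))  # False: downgrade refused
```

On real hardware the counter would live in storage the normal-world OS cannot lower (for example, eFuses or TEE secure storage); a counter kept in an ordinary file defeats the purpose.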
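For the DDS/ROS 2 item, a first step on a ROS 2 Foxy system is to check whether SROS2 is switched on for a given process. SROS2 is controlled by the environment variables `ROS_SECURITY_ENABLE`, `ROS_SECURITY_STRATEGY`, and `ROS_SECURITY_KEYSTORE`. The helper below, whose name is hypothetical, flags unsafe values. It only reads an environment mapping, so it cannot confirm that the DDS implementation was built with DDS-Security support or that the permissions files are restrictive.

```python
import os
from typing import List, Mapping


def sros2_findings(env: Mapping[str, str]) -> List[str]:
    """Flag SROS2 settings that leave ROS 2 (Foxy or later) traffic unsecured."""
    findings = []
    if env.get("ROS_SECURITY_ENABLE") != "true":
        findings.append("ROS_SECURITY_ENABLE is not 'true': DDS-Security is disabled")
    if env.get("ROS_SECURITY_STRATEGY") != "Enforce":
        findings.append(
            "ROS_SECURITY_STRATEGY is not 'Enforce': nodes may run unsecured "
            "if security files are missing"
        )
    if not env.get("ROS_SECURITY_KEYSTORE"):
        findings.append("ROS_SECURITY_KEYSTORE is unset: no keystore for certificates and permissions")
    return findings


# Usage: inspect the current shell, or a dict parsed from a service's /proc/<pid>/environ.
for finding in sros2_findings(os.environ):
    print(finding)
```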
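For the network isolation item, segmented deployments usually come down to an egress allowlist. This sketch, built on Python's standard `ipaddress` module, flags destination addresses observed in captured flows that fall outside every permitted network. The allowlist entries are hypothetical and use the IETF documentation ranges (192.0.2.0/24, 198.51.100.0/24, 203.0.113.0/24, 2001:db8::/32) rather than real robot or vendor addresses.

```python
import ipaddress
from typing import Iterable, List

# Hypothetical allowlist using IETF documentation ranges (not real robot/vendor addresses).
ALLOWED_NETS = [
    ipaddress.ip_network("192.0.2.0/24"),     # stand-in for the robot's local segment
    ipaddress.ip_network("203.0.113.10/32"),  # stand-in for an approved update server
]


def unexpected_egress(destinations: Iterable[str], allowed=ALLOWED_NETS) -> List[str]:
    """Return destinations that fall outside every allowed network (IPv4 or IPv6)."""
    flagged = []
    for dst in destinations:
        ip = ipaddress.ip_address(dst)
        # Membership across address families (v4 vs v6) is simply False, so v6 hosts
        # are flagged unless an IPv6 network is on the allowlist.
        if not any(ip in net for net in allowed):
            flagged.append(dst)
    return flagged


observed = ["192.0.2.15", "203.0.113.10", "198.51.100.7", "2001:db8::1"]
print(unexpected_egress(observed))  # ['198.51.100.7', '2001:db8::1']
```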
