- The paper introduces a continued pretraining framework using millions of synthetic tabular tasks to bolster in-context learning in LLMs.

- It employs a two-stage, random-forest-based warm-start distillation and a token-efficient tabular prompt encoding to increase demonstration capacity.

- Experimental results demonstrate a monotonic many-shot scaling trend, with performance competitive with specialized tabular models, while preserving general LLM capabilities.

MachineLearningLM: Scaling In-Context Machine Learning in LLMs via Synthetic Tabular Pretraining

Introduction and Motivation

LLMs have demonstrated strong general-purpose reasoning and world knowledge, but their ability to perform in-context learning (ICL) on standard ML tasks—especially with many-shot demonstrations—remains limited. Existing LLMs often plateau in accuracy after a small number of in-context examples, are sensitive to demonstration order and label bias, and fail to robustly model statistical dependencies in tabular data. In contrast, specialized tabular models (e.g., TabPFN, TabICL) can perform ICL for tabular tasks but lack the generality and multimodal capabilities of LLMs.

MachineLearningLM addresses this gap by introducing a continued pretraining framework that endows a general-purpose LLM with robust in-context ML capabilities, specifically for tabular prediction tasks, while preserving its general reasoning and chat abilities. The approach leverages millions of synthetic tasks generated from structural causal models (SCMs), a random-forest (RF) teacher for warm-start distillation, and a highly token-efficient tabular prompt encoding. The resulting model scales monotonically with the number of shots, is competitive with state-of-the-art tabular learners, and maintains strong general LLM performance.

Synthetic Task Generation and Pretraining Corpus

A key innovation is the large-scale synthesis of tabular prediction tasks using SCMs. Each task is defined by a randomly sampled DAG, diverse feature types (categorical, numerical), and label mechanisms, including both neural and tree-based functions. This ensures a wide coverage of causal mechanisms and statistical dependencies, with strict non-overlap between pretraining and evaluation data.

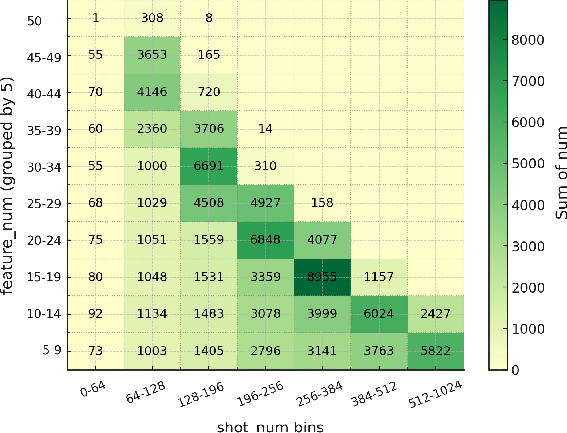

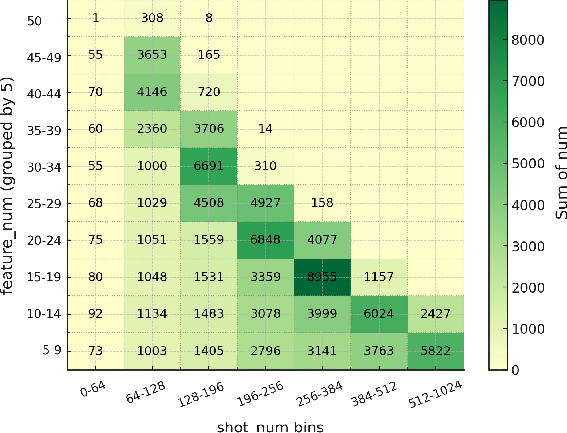

Figure 1: Heatmap of task density across feature and shot counts in the synthetic pretraining corpus, illustrating broad coverage of feature dimensionality and demonstration counts.

The synthetic corpus comprises approximately 3 million distinct SCMs, each yielding i.i.d. samples for in-context demonstrations and queries. Tasks span up to 1,024 in-context examples, 5–50 features, and 2–10 classes, with a training token budget of 32k per task. This scale and diversity are critical for inducing robust, generalizable in-context learning behavior.
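To make the generation process concrete, here is a minimal sketch of drawing one classification task from a toy SCM. It is an illustration under stated assumptions, not the paper's generator: the function names (`sample_dag`, `sample_task`), the edge probability, the `tanh` mechanism, the noise scale, and the quantile-based labeling are all hypothetical choices, and only numerical features are produced.

```python
import math
import random


def sample_dag(num_nodes, edge_prob, rng):
    """Sample a random DAG: node j may only receive edges from nodes i < j."""
    return {j: [i for i in range(j) if rng.random() < edge_prob]
            for j in range(num_nodes)}


def sample_task(num_features=5, num_classes=3, num_rows=64, seed=0):
    """Draw one synthetic classification task from a toy SCM (assumed design)."""
    rng = random.Random(seed)
    # The extra last node acts as a latent label score.
    parents = sample_dag(num_features + 1, edge_prob=0.5, rng=rng)
    # One random weight per edge; tanh is a stand-in nonlinear mechanism.
    weights = {(i, j): rng.gauss(0, 1)
               for j, ps in parents.items() for i in ps}
    rows = []
    for _ in range(num_rows):
        values = []
        for j in range(num_features + 1):  # index order is a topological order
            signal = sum(weights[(i, j)] * values[i] for i in parents[j])
            values.append(math.tanh(signal) + rng.gauss(0, 0.5))
        rows.append(values)  # rows are i.i.d. draws from the same SCM
    # Discretize the latent score into classes using empirical quantile cuts.
    scores = sorted(r[-1] for r in rows)
    cuts = [scores[(k * num_rows) // num_classes]
            for k in range(1, num_classes)]
    X = [r[:-1] for r in rows]
    y = [sum(r[-1] >= c for c in cuts) for r in rows]
    return X, y


X, y = sample_task(num_features=5, num_classes=3, num_rows=64, seed=0)
```

In this sketch the label node can end up with no parents, in which case the labels are pure noise. Tasks like that carry no learnable signal, which is the kind of case a teacher-based filter is meant to screen out.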

Random-Forest Distillation and Warm-Start

Directly training on synthetic tasks can lead to optimization collapse, especially for low-signal or highly imbalanced tasks. To stabilize training, MachineLearningLM employs a two-stage warm-start:

- Task-level filtering: Only tasks where the RF teacher outperforms chance (via binomial test, Cohen’s κ, balanced accuracy, macro-F1, and non-collapse checks) are admitted.

- Example-level consensus: During warm-up, only test examples where the RF prediction matches ground truth are used, ensuring high-quality supervision.

This distillation phase provides informative targets and smooth gradients, mitigating early training collapse and biasing the model toward robust, interpretable decision boundaries.
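The two filtering stages can be sketched as follows, given ground-truth labels and RF teacher predictions on a task's held-out examples. This is a sketch under assumptions: the thresholds (`alpha=0.05`, κ > 0, balanced accuracy above 1/K), the majority-class chance baseline, and the function names are hypothetical, and the macro-F1 check is omitted for brevity.

```python
import math
from collections import Counter


def binomial_p_value(successes, trials, chance):
    """One-sided P(X >= successes) for X ~ Binomial(trials, chance)."""
    return sum(math.comb(trials, k) * chance**k * (1 - chance)**(trials - k)
               for k in range(successes, trials + 1))


def cohen_kappa(y_true, y_pred):
    """Agreement between labels and predictions, corrected for chance."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n**2
    return (observed - expected) / (1 - expected) if expected < 1 else 0.0


def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall."""
    recalls = []
    for c in set(y_true):
        idx = [i for i, t in enumerate(y_true) if t == c]
        recalls.append(sum(y_pred[i] == c for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


def admit_task(y_true, y_pred, alpha=0.05):
    """Task-level filter: keep the task only if the teacher beats chance."""
    n = len(y_true)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    # Assumed chance baseline: always predicting the majority class.
    chance = max(Counter(y_true).values()) / n
    return (binomial_p_value(correct, n, chance) < alpha
            and cohen_kappa(y_true, y_pred) > 0
            and balanced_accuracy(y_true, y_pred) > 1 / len(set(y_true))
            and len(set(y_pred)) > 1)  # non-collapse: more than one class predicted


def consensus_examples(y_true, y_pred):
    """Example-level filter: indices where the teacher matches ground truth."""
    return [i for i, (t, p) in enumerate(zip(y_true, y_pred)) if t == p]
```

For example, `admit_task([0, 1] * 10, [0] * 20)` rejects a teacher that has collapsed to a single class, while `consensus_examples([0, 1, 1], [0, 0, 1])` keeps only examples 0 and 2 for warm-up supervision.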

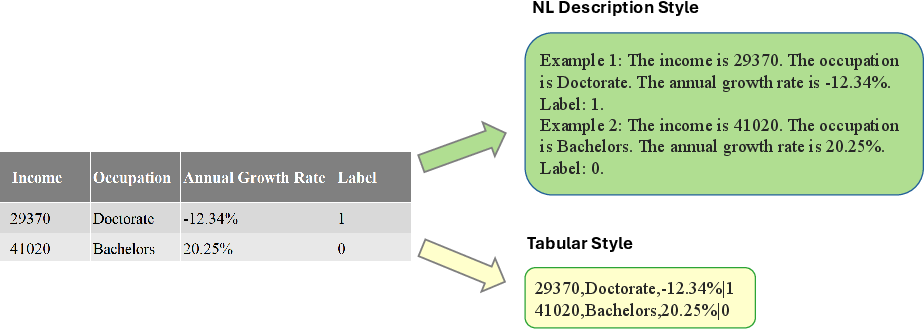

Token-Efficient Prompting and Compression

A major bottleneck for many-shot ICL in LLMs is context length. MachineLearningLM introduces a highly compressed tabular prompt encoding that combines a compact tabular layout, integer normalization, and batch prediction of multiple queries per prompt.

The combined effect is a 3–6× increase in the number of in-context examples per window and up to 50× amortized throughput via batch inference. This design is critical for scaling to hundreds or thousands of shots.
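As an illustration of how such an encoding might look, the sketch below z-scores each numeric column using the demonstrations, maps the result to clipped integers, and lays out demonstrations and a batch of queries as compact delimited rows. The exact prompt format, the integer range `[0, 999]`, the `center` and `scale` constants, and all function names are assumptions made for this example; the source does not specify them.

```python
import statistics


def fit_normalizer(X_demo):
    """Per-column (mean, std) computed from the demonstrations only."""
    return [(statistics.fmean(col), statistics.pstdev(col) or 1.0)
            for col in zip(*X_demo)]


def to_int_row(row, stats, scale=120, center=500, lo=0, hi=999):
    """z-score each value, then map it to a clipped integer (assumed range)."""
    return [min(hi, max(lo, round(center + scale * (x - m) / s)))
            for x, (m, s) in zip(row, stats)]


def encode_prompt(X_demo, y_demo, X_query):
    """Hypothetical compact layout: one short line per row, no feature names."""
    stats = fit_normalizer(X_demo)
    lines = ["# demonstrations: features|label"]
    for row, label in zip(X_demo, y_demo):
        lines.append(",".join(map(str, to_int_row(row, stats))) + f"|{label}")
    lines.append("# queries: id|features")
    for qid, row in enumerate(X_query):  # several queries share one context
        lines.append(f"{qid}|" + ",".join(map(str, to_int_row(row, stats))))
    return "\n".join(lines)


prompt = encode_prompt([[1.0, 10.0], [3.0, 30.0]], [0, 1], [[2.0, 20.0]])
```

Short integers avoid spending tokens on long decimal strings, and packing many queries after a single set of demonstrations is what allows the context cost to be shared across predictions.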

Order-Robust, Confidence-Aware Self-Consistency

To address the sensitivity of LLMs to demonstration and feature order, MachineLearningLM employs a self-consistency mechanism at inference: multiple prompt variants are generated by shuffling demonstration order, and predictions are aggregated via confidence-weighted voting over next-token probabilities. This approach yields order-robust predictions with minimal computational overhead (using V=5 variants).
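A minimal sketch of this aggregation step is shown below. It assumes the model has already been queried once per shuffled prompt variant and that each call returned a mapping from candidate label to next-token probability; the function names and the simple sum-of-probabilities rule are illustrative assumptions rather than the paper's exact procedure.

```python
import random
from collections import defaultdict


def shuffled_variants(demos, num_variants=5, seed=0):
    """Build V prompt variants by shuffling the demonstration order."""
    rng = random.Random(seed)
    variants = []
    for _ in range(num_variants):
        order = list(demos)
        rng.shuffle(order)
        variants.append(order)
    return variants


def confidence_weighted_vote(variant_probs):
    """Sum each label's probability across variants and return the best label.

    variant_probs: one dict per variant, mapping label -> next-token probability.
    """
    totals = defaultdict(float)
    for probs in variant_probs:
        for label, p in probs.items():
            totals[label] += p
    return max(totals, key=totals.get)


# One confident variant can outweigh two marginal ones.
winner = confidence_weighted_vote([
    {"0": 0.90, "1": 0.10},
    {"0": 0.40, "1": 0.60},
    {"0": 0.45, "1": 0.55},
])
```

In this example a plain majority vote would pick label "1" (two of three variants), whereas weighting by confidence picks "0" because its summed probability is higher (1.75 vs. 1.25).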

Experimental Results

Many-Shot Scaling and Generalization

MachineLearningLM demonstrates a monotonic many-shot scaling law: accuracy increases steadily as the number of in-context demonstrations grows from 8 to 1,024, with no plateau observed within the tested range. In contrast, vanilla LLMs (e.g., GPT-5-mini, o3-mini) show limited or even negative scaling beyond 32–64 shots.

- Average accuracy improvement: ∼15% absolute gain over the Qwen-2.5-7B-Instruct backbone across diverse out-of-distribution tabular tasks.

- High-shot regime: Outperforms GPT-5-mini by ∼13% and o3-mini by ∼16% at 512 shots.

- Random-forest–level accuracy: Stays within 2% (relative) of RF accuracy across 8–512 shots, and surpasses RF on ∼30% of tasks at 512 shots.

Comparison to Tabular and LLM Baselines

- Tabular ICL models (TabPFN, TabICL): MachineLearningLM is competitive, especially at moderate shot counts, despite using a general-purpose LLM backbone and no architectural modifications.

- TabuLa-8B: MachineLearningLM supports 32× more demonstrations per prompt (up to 1,024 vs. 32), and outperforms TabuLa-8B even in the few-shot regime, despite TabuLa leveraging feature names.

- General LLMs: Maintains strong performance on MMLU (73.2% 0-shot, 75.4% 50-shot), indicating no degradation in general reasoning or knowledge.

The tabular encoding natively supports mixed numerical and textual features, without explicit text bucketing or embedding extraction. MachineLearningLM achieves reliable gains on heterogeneous datasets (e.g., bank, adult, credit-g), and remains competitive on pure numeric tables.

Robustness and Limitations

- Class imbalance: Maintains stable accuracy under skewed label distributions, avoiding collapse-to-majority.

- High-cardinality labels: Underperforms on tasks with K>10 classes, due to pretraining bias; expanding K in synthesis is a clear avenue for improvement.

- Forecasting tasks: Performance drops on time-series/forecasting tasks, as the pretraining corpus is i.i.d. tabular; temporal inductive bias is absent.

Implementation and Scaling Considerations

- Backbone: Qwen-2.5-7B-Instruct, with LoRA rank 8 adaptation.

- Pretraining: Two-stage continued pretraining on 3M synthetic tasks, distributed over 40 A100 GPUs, with a 32k-token context window.

- Inference: Supports up to 131k-token inference contexts, generalizing beyond pretraining limits.

- Prompting: Tabular encoding and integer normalization are critical for token efficiency; batch prediction amortizes context overhead.
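The amortization from batch prediction can be made explicit with a simplified cost model. This is a sketch that assumes cost is proportional to the number of prompt tokens processed, ignoring output tokens and the superlinear cost of attention; the symbols are introduced here and do not come from the source. Let $C$ be the token count of the shared demonstration context, $q$ the tokens per query row, and $B$ the number of queries per prompt. Answering the queries one prompt at a time costs $B(C + q)$ tokens, while a single batched prompt costs $C + Bq$, so

$$
\text{speedup}(B) = \frac{B\,(C + q)}{C + B\,q} < B, \qquad \text{speedup}(B) \approx B \ \text{ when } C \gg B\,q .
$$

Under this model the gain is bounded above by the batch size and approaches it only when the demonstrations dominate the prompt, which is the many-shot regime. The source does not state the batch size behind the reported amortized throughput.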

Implications and Future Directions

MachineLearningLM demonstrates that targeted continued pretraining on synthetic, diverse tabular tasks can induce robust, scalable in-context ML capabilities in general-purpose LLMs, without sacrificing general reasoning or chat abilities. This approach offers a practical path to unifying classical ML robustness with LLM versatility, enabling:

- Scalable any-shot learning for tabular and potentially multimodal tasks.

- Integration with agent memory and retrieval-augmented methods for dynamic, open-ended workflows.

- Interpretability and reasoning-augmented learning via SCM-based rationales and feature attribution.

- Uncertainty-aware prediction and selective abstention, addressing calibration and hallucination.

Future work includes extending to regression, ranking, time-series, and structured prediction; scaling to longer contexts via system optimizations; incorporating multimodal synthetic tasks; and aligning with real-world data via lightweight fine-tuning.

Conclusion

MachineLearningLM establishes a new paradigm for scaling in-context ML in LLMs through synthetic task pretraining, RF distillation, and token-efficient prompting. The model achieves monotonic many-shot scaling and competitive tabular performance while preserving general LLM capabilities. This work provides a foundation for further advances in unified, robust, and interpretable in-context learning across modalities and domains.